This exercise was prompted by a forum question (https://community.dataquest.io/t/how-to-download-an-excel-online-file/494093) about how to download a read-only file (http://www.presupuesto.pr.gov/PRESUPUESTOPROPUESTO2020-2021/_layouts/15/WopiFrame.aspx?sourcedoc=%7B566feecf-1e0d-46b8-a505-7cd762665268%7D&action=edit&source=http%3A%2F%2Fwww%2Epresupuesto%2Epr%2Egov%2FPRESUPUESTOPROPUESTO2020%2D2021%2FFOMB%2520Budget%2520Requirements%2520FY%25202021%2FForms%2FAllItems%2Easpx%3FRootFolder%3D%252FPRESUPUESTOPROPUESTO2020%252D2021%252FFOMB%2520Budget%2520Requirements%2520FY%25202021) from Excel Online, where the Download option requires authentication. Copy-pasting a few cells works fine, but selecting everything with Ctrl+A and copy-pasting only pastes the text “Retrieving data. Wait a few seconds and try to cut or copy again.” with no data, which makes analysis of the full file difficult. The following sections go through how to move around the document, get all the data, clean it, and put it together. The full notebook is at https://gist.github.com/gitgithan/28f63f707bdbdd5dd9f51f553c6322dc

Installing and Connecting to the WebDriver

from selenium import webdriver

driver = webdriver.Chrome()
driver.get('http://www.presupuesto.pr.gov/PRESUPUESTOPROPUESTO2020-2021/_layouts/15/WopiFrame.aspx?sourcedoc=%7B566feecf-1e0d-46b8-a505-7cd762665268%7D&action=edit&source=http%3A%2F%2Fwww%2Epresupuesto%2Epr%2Egov%2FPRESUPUESTOPROPUESTO2020%2D2021%2FFOMB%2520Budget%2520Requirements%2520FY%25202021%2FForms%2FAllItems%2Easpx%3FRootFolder%3D%252FPRESUPUESTOPROPUESTO2020%252D2021%252FFOMB%2520Budget%2520Requirements%2520FY%25202021')
# Move into the first iframe, where the spreadsheet is rendered
driver.switch_to.frame(0)

To use Selenium to execute commands in a browser, we need to install a driver on our system. I usually use Chrome on Windows, so I installed a matching ChromeDriver version from https://chromedriver.chromium.org/downloads. After that, webdriver.Chrome() creates the driver without errors and opens a new Chrome window (unless you use the headless option), which is useful for observing how Selenium scrolls, and we can call methods on the driver to execute the desired interactions with the browser. The data is about budgeting, but its content matters less than how to parse it, because the skills here are transferable to other data sources.

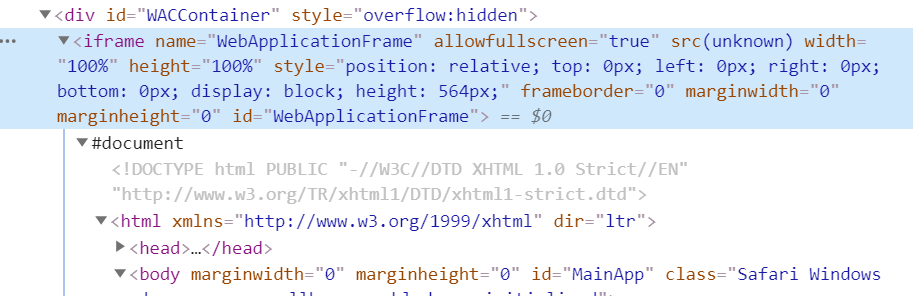

Normally, the fastest way to get information is to find the direct link, then fetch it with an HTTP library such as requests (requests.get(url)). However, if we view the page source here (Ctrl+U in Chrome), we can see that the data is hidden in an iframe. Notice how the <div> tag contains nothing but an <iframe> with an empty src and no data inside.

<div id="WACContainer" style="overflow:hidden"> <iframe name="WebApplicationFrame" allowfullscreen="true" src="" width="100%" height="100%" style="position: relative; top: 0; left: 0; right: 0; bottom: 0; display: block" frameborder="0" marginwidth="0" marginheight="0"></iframe> </div>

This is different from what we can see with:

1. F12 to open developer tools
2. Inspector tool to mouse over any data element
3. Click up the interactive DOM hierarchy to see where the static HTML and JavaScript-loaded elements match

There is a whole #document hidden inside the iframe that is not available from the static HTML!
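To make the point concrete, here is a small sketch that parses the static markup shown above with Python's standard html.parser. The class name IframeAudit is made up for illustration, and the iframe's style attribute is abridged; the sketch only shows that the static HTML carries an empty src and no text at all.

```python
from html.parser import HTMLParser

# Static HTML as seen with "view source" (style attribute of the iframe abridged).
STATIC_HTML = (
    '<div id="WACContainer" style="overflow:hidden"> '
    '<iframe name="WebApplicationFrame" allowfullscreen="true" src="" '
    'width="100%" height="100%" frameborder="0"></iframe> </div>'
)


class IframeAudit(HTMLParser):
    """Hypothetical helper: collect iframe src values and any visible text."""

    def __init__(self):
        super().__init__()
        self.iframe_srcs = []
        self.text_chunks = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs
        if tag == "iframe":
            self.iframe_srcs.append(dict(attrs).get("src"))

    def handle_data(self, data):
        # keep only non-whitespace text found between tags
        if data.strip():
            self.text_chunks.append(data.strip())


audit = IframeAudit()
audit.feed(STATIC_HTML)
print(audit.iframe_srcs)  # src values of every iframe in the static HTML
print(audit.text_chunks)  # any text a plain HTTP fetch would have given us
```

Both lists show that a plain fetch of the page has nothing to parse: the spreadsheet only exists after JavaScript fills the frame.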

driver.switch_to.frame(0) moves the Selenium driver into the frame, so that all subsequent interactions happen in the context of the frame. Without this step, find_element_by_* will not find anything, and scrolling is not possible.

Looking for the WebElements (Columns + Data)

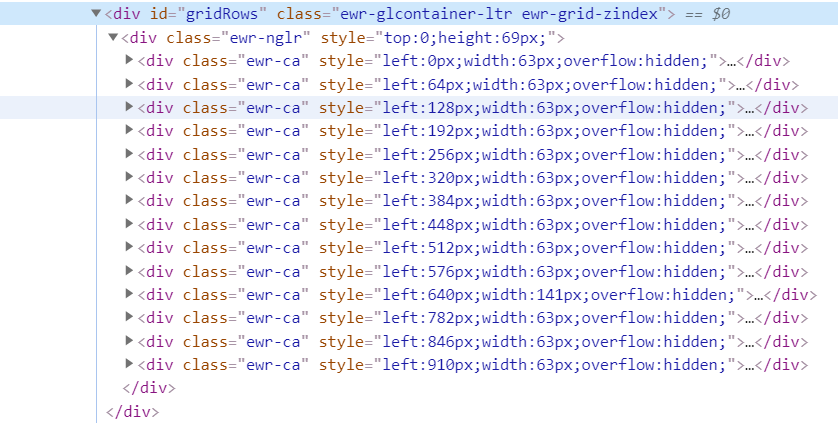

XPath is a convenient selector, since we can just right-click any DOM element → Copy → Copy XPath. There are, however, many other selector options (https://selenium-python.readthedocs.io/locating-elements.html), and you can choose based on which HTML attributes are available. find_element_by_id is fastest since an id must be unique across the whole document, while XPath contains() lets us specify an XPath partially, matching multiple elements when only a small part of the XPath changes (e.g. …Flow_0, …Flow_1). By scrolling down the Excel sheet, we can see new …Flow_x blocks being added while old blocks disappear from the DOM, such that at any moment (other than the 2 blocks at initialization) only 3 blocks of data are available for parsing. We can find such WebElements using e = driver.find_element_by_xpath (or by ID, as done later in the notebook after the experimental phase) and use the resulting element for scrolling and text parsing.
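As a rough sketch of the two selector styles, the helpers below build the exact id of one block and a contains() pattern that matches every block. The id prefix is the one used later in the scrolling loop; the function names flow_id and flow_xpath_pattern are hypothetical.

```python
# Id prefix shared by all data blocks in the sheet
FLOW_ID_PREFIX = "m_excelWebRenderer_ewaCtl_sheetContentDiv_Flow_"


def flow_id(i):
    """Exact id of block i, for an id lookup."""
    return f"{FLOW_ID_PREFIX}{i}"


def flow_xpath_pattern():
    """XPath matching every element whose id contains the shared prefix."""
    return f"//*[contains(@id, '{FLOW_ID_PREFIX}')]"


print(flow_id(3))
print(flow_xpath_pattern())

# Usage with the article's driver (not executed here):
#   driver.find_element_by_id(flow_id(3))
#   driver.find_elements_by_xpath(flow_xpath_pattern())
# Newer Selenium 4 releases drop find_element_by_* in favour of:
#   from selenium.webdriver.common.by import By
#   driver.find_element(By.ID, flow_id(3))
#   driver.find_elements(By.XPATH, flow_xpath_pattern())
```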

The element containing the column names can be found the same way: inspect any of the 14 column names, move back up the DOM to find the XPath of the closest parent containing all 14 column names, then use .text on that WebElement to extract the names (.text is much slower than the JavaScript method used to access the data later).

3 Ways of Parsing a Block of Data

- Whole block of 28 rows and 14 columns (392 elements in each newline-separated string)

- 28 rows x 14 elements (14 elements in each newline-separated string)

- Cell by cell (1 element in each string)

These are listed in order of increasing time required: 500ms, 900ms, and 5000ms. The 1st method is usually the most convenient, but we will see later that the 2nd method becomes necessary when the data gets dirty.

def parse_block(row_block):
    """Parse a WebElement's text into tuples of 14 cells (one tuple per row) for EDA purposes"""
    # .text returns every cell of the block on its own line
    iterable = row_block.text.split('\n')
    # zip over 14 references to the same iterator, so each tuple consumes 14 cells
    return zip(*[iter(iterable)]*14)

The grouper recipe from itertools (https://docs.python.org/3.8/library/itertools.html#itertools-recipes) allows very fast grouping of a 392-element list into an iterator of 28 tuples of 14 elements each, convenient for feeding into a pandas DataFrame for debugging.
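The grouping idiom can be tried on a tiny list to see what it does. This sketch uses a width of 3 instead of 14, and the name group_rows is made up for illustration; note that zip silently drops a trailing group that is not full.

```python
def group_rows(cells, width):
    """Group a flat list of cells into tuples of `width` cells each."""
    # The same iterator object is repeated `width` times, so zip pulls
    # `width` consecutive cells for every tuple it builds.
    return zip(*[iter(cells)] * width)


flat = ["a1", "b1", "c1", "a2", "b2", "c2", "a3"]
rows = list(group_rows(flat, 3))
print(rows)  # [('a1', 'b1', 'c1'), ('a2', 'b2', 'c2')]
```

The leftover "a3" does not appear in the output, which is why a block with missing cells ends up with fewer parsed elements than expected.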

Moving Down the File (Scrolling, Waits, outerText)

# Excel Count shows 15056, but it is not the last row, Ctrl+Down shows last row is 15254 (15253 rows)
number_rows = 15253
block_size = 28
last_block = number_rows//block_size
element_text_list = {}  # parsed rows of each block, keyed by block index

# 5+1 blocks as shown here; a full run would presumably loop over range(last_block+1)
for i in range(5+1):
    element_to_wait = "m_excelWebRenderer_ewaCtl_sheetContentDiv_Flow_{}".format(i)
    last_element = driver.find_element_by_id(element_to_wait)
    driver.execute_script("arguments[0].scrollIntoView();", last_element)

    # wait for text to be loaded into element (during switching into frame and scrolling)
    text = driver.execute_script(f"return document.getElementById('{element_to_wait}').outerText")
    while len(text) == 0:
        text = driver.execute_script(f"return document.getElementById('{element_to_wait}').outerText")

    iterable = text.split('\n')
    element_text_list[i] = zip(*[iter(iterable)]*14)

After exploration, we see that the DOM holds 3 blocks of 28 rows at any moment. Initially I implemented find_elements_by_xpath(xpath_pattern) (now commented out in the notebook) to get all 3 elements and then keep the last (newest, unparsed) one, but I later realized find_element_by_id is faster and more robust. The issue with the former method is that when scrolling too fast, the new WebElement sometimes has not loaded yet when elements are searched, so the same old element is parsed again. The latter method errors out in this situation, because every id is unique and it gets exactly what it searches for rather than depending on “the last element”.

We call scrollIntoView() early in the loop to move down the elements. When Flow_1 is scrolled into view, the DOM adds Flow_2 to Flow_0 and Flow_1. This lets the Flow_2 WebElement load (preventing the not-yet-loaded issue from the previous paragraph) and also gives the text in Flow_2 time to load (it is an empty string until then) while the text of Flow_1 is being processed. Similarly, on the next iteration, scrolling Flow_2 into view adds Flow_3 to the DOM and kicks out Flow_0 while the Flow_2 text is processed, leaving Flow_1, Flow_2, Flow_3. Being aware of this helps in understanding “NoSuchElementException: Message: no such element: Unable to locate element”, which appears when you manually scroll the Selenium window away from where it should be (a reversible action), making elements unsearchable.
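The sliding window described above can be written down as a toy model. This is only a sketch of the observed behaviour (the block being scrolled to plus one neighbour on each side), not something read from the page's JavaScript, and blocks_in_dom is a hypothetical name.

```python
def blocks_in_dom(scrolled_to, last_block):
    """Indices of the Flow_x blocks assumed present after scrolling Flow_<scrolled_to> into view."""
    first = max(0, scrolled_to - 1)           # previous block is kept, if there is one
    last = min(last_block, scrolled_to + 1)   # next block is preloaded, if there is one
    return list(range(first, last + 1))


for i in range(4):
    print(i, blocks_in_dom(i, last_block=544))
# 0 [0, 1]
# 1 [0, 1, 2]
# 2 [1, 2, 3]
# 3 [2, 3, 4]
```

Under this model, looking up a block outside the returned list is what raises NoSuchElementException.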

Before parsing the text, we have to wait for it to be available (it is not always available at such looping speeds). Selenium offers many waits under its Expected Conditions (https://www.selenium.dev/selenium/docs/api/py/webdriver_support/selenium.webdriver.support.expected_conditions.html), but the text-based ones rely on .text, which is extremely slow (450ms) compared to reading outerText through JavaScript (10ms). (textContent and innerText would be missing the \n separators in the string, making it impossible to parse.) Repeatedly calling a wait that uses .text makes things worse, so a simple custom while loop gets the job done faster.
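One possible refinement of the custom while loop is to give it a timeout, so that a block that never loads cannot spin forever. The sketch below is not from the notebook: wait_for_text, its parameters and the stand-in responses are all assumptions, and the browser call is replaced by a plain callable so the example runs without Selenium.

```python
import time


def wait_for_text(fetch_text, timeout=10.0, poll_interval=0.05):
    """Call fetch_text() until it returns a non-empty string or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        text = fetch_text()
        if text:
            return text
        if time.monotonic() >= deadline:
            raise TimeoutError(f"no text after {timeout} seconds")
        time.sleep(poll_interval)


# Stand-in for the browser: empty twice, then the block's text arrives.
responses = iter(["", "", "row1\nrow2"])
result = wait_for_text(lambda: next(responses), timeout=1.0, poll_interval=0.01)
print(result.split("\n"))  # ['row1', 'row2']

# With the article's driver, fetch_text could be (not executed here):
#   lambda: driver.execute_script(
#       "return document.getElementById(arguments[0]).outerText", element_to_wait)
```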

Debugging Unexpected Data Row Counts

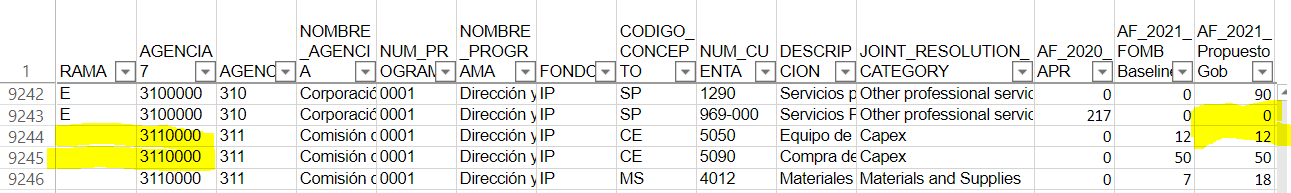

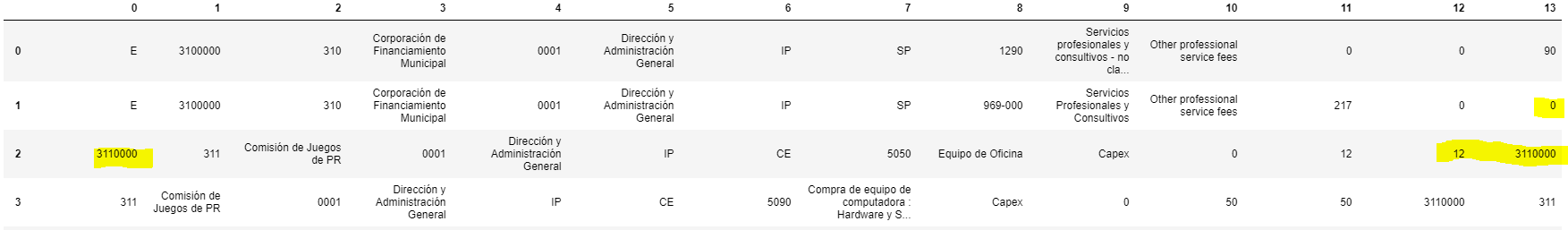

After a full run, I strangely see only 15238 rows when 15253 (544 blocks of 28 rows + a last block of 21 rows) are expected. Printing some statistics shows that not all blocks have 28 rows; some are incomplete at 26 or 27 rows.
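The expected counts can be checked with a few lines of arithmetic. The short_blocks helper and the sample row counts fed to it are made up for illustration; only 15253, 28 and 15238 come from the run described above.

```python
number_rows = 15253
block_size = 28
parsed_rows = 15238

# 544 full blocks plus a last block of 21 rows
full_blocks, last_block_rows = divmod(number_rows, block_size)
print(full_blocks, last_block_rows)  # 544 21
print(number_rows - parsed_rows)     # 15 rows lost to incomplete blocks


def short_blocks(row_counts, block_size, last_block, last_block_rows):
    """Return {block index: row count} for blocks that do not have the expected size."""
    return {
        i: n for i, n in row_counts.items()
        if n != (last_block_rows if i == last_block else block_size)
    }


# Hypothetical statistics: blocks 1 and 2 came back short.
sample_counts = {0: 28, 1: 27, 2: 26, 544: 21}
print(short_blocks(sample_counts, block_size, full_blocks, last_block_rows))  # {1: 27, 2: 26}
```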

When missing data is not represented in the string, string.split('\n') followed by the itertools-style zipping into groups of 14, regardless of content, causes the misalignment in Figure 5 (every missing cell shifts subsequent data 1 column to the left) and creates blocks with fewer than 392 elements, which we can use as a condition for cleaning later.
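A scaled-down sketch shows the shift. It uses 3 columns instead of 14, and the cell values are invented: the second row has lost its first cell, so everything after it slides one column to the left and the block comes up short.

```python
WIDTH = 3  # the real sheet has 14 columns

# Three rows of text as outerText would deliver them; row 2 is missing its first cell.
block_text = "x1\ny1\n1\ny2\n2\nx3\ny3\n3"
cells = block_text.split("\n")

rows = list(zip(*[iter(cells)] * WIDTH))
print(rows)                    # [('x1', 'y1', '1'), ('y2', '2', 'x3')]
print(len(cells) < 3 * WIDTH)  # True: fewer cells than a full block, a cue for cleaning
```

The second tuple mixes two rows, and the leftover cells of the third row are dropped entirely.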

Cleaning the Data

Because there can be missing data in rows, we must now turn to the 2nd method of reading data introduced earlier.

def clean_block(e):
    """Read a block row by row, padding rows that are missing their 1st cell"""
    data = []
    # each child div of gridRows is one spreadsheet row
    e_rows = e.find_elements_by_xpath('.//*[@id="gridRows"]/div[*]')
    for row in e_rows:
        columns = row.text.split('\n')
        # assumes only the 1st column can be missing
        if len(columns) < 14:
            columns.insert(0,'')
        data.append(columns)
    return data

My assumption is that only the 1st column can have missing data. This may be wrong (which would make cleaning much harder), but we will check it later. Assuming the guess is right, this code simply inserts an empty string at the 1st position of each row that has fewer than 14 elements.
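The padding step can be exercised without a browser by feeding it row strings directly. This is a sketch of the same logic as clean_block with the Selenium lookup removed; pad_rows is a hypothetical name and the sample rows use 3 columns for brevity.

```python
def pad_rows(row_texts, width=14):
    """Split each row's text into cells and pad short rows at the 1st position."""
    data = []
    for row_text in row_texts:
        columns = row_text.split("\n")
        # Same assumption as the article: only the 1st column can be missing,
        # so a short row gets a single empty string in front.
        if len(columns) < width:
            columns.insert(0, "")
        data.append(columns)
    return data


sample_rows = ["x1\ny1\n1", "y2\n2"]
padded = pad_rows(sample_rows, width=3)
print(padded)  # [['x1', 'y1', '1'], ['', 'y2', '2']]
```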

Verifying Correctness of Missing Data Position Guess and Parsing

Finally, we convert the last 3 columns, which are expected to hold integers, to int type. If this fails, we know there is still some misalignment causing strings to occupy the int columns, and the previous assumption about the position and number of missing values is wrong. If it succeeds, we know the assumption is correct and the data is clean, because any shift of data between columns would push strings into one of these 3 int columns. No rotational symmetry is possible (as there would be if the columns alternated str, int, str, int…) unless an entire row of 14 is missing, which is not the case since the row counts are correct.
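A minimal version of this check, assuming the cleaned data is a list of rows of strings: try int() on the last 3 cells of every row and report the rows where it fails. The function name misaligned_rows and the sample rows are made up for illustration.

```python
def misaligned_rows(rows, n_int_columns=3):
    """Return the indices of rows whose last n_int_columns cells are not all integers."""
    bad = []
    for index, row in enumerate(rows):
        try:
            for cell in row[-n_int_columns:]:
                int(cell)
        except ValueError:
            bad.append(index)
    return bad


sample = [
    ["", "dept A", "10", "20", "30"],   # padded correctly: ints at the end
    ["", "10", "20", "30", "dept B"],   # still shifted: a string sits in an int column
]
print(misaligned_rows(sample))  # [1]
```

An empty result supports the "only the 1st column is missing" guess; any index in the list points at a row to inspect by hand.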

If anyone knows of faster ways to access and parse such data, please share in the comments section. Also, how could data cleaning be handled if the missing pattern was not so easy?

Edit: I answered the above question in a new article, improving runtime from 90 to 5 seconds https://medium.com/@hanqi_47643/scraping-excel-online-read-only-file-with-requests-142b70e7b40d
