wx: Stored Data Too Large

When we work on any data science project, one of the essential steps is to download some data from an API into memory so we can process it.

When doing that, we can run into some problems; one of them is having too much data to process. If our data is larger than our available memory (RAM), we might have trouble getting the project done.

So, what to do then?

There are different options for solving the problem of big data and small memory. These solutions cost either time or money.

Possible solutions

Money-costing solution: One possible solution is to buy a new computer with a more powerful CPU and more RAM, capable of handling the entire dataset. Alternatively, you can rent cloud resources or virtual machines and set up a cluster to handle the workload.

Time-costing solution: Your RAM might be too small to handle your data, but your hard drive is often much larger than your RAM. So why not just use it? The catch is that using the hard drive to deal with your data will make processing much slower, because even an SSD is slower than RAM.

Both of those solutions are valid, provided you have the resources. If you have a big budget for your project or time is not a constraint, then using one of those techniques is the simplest and most straightforward answer.

But what if you can't? What if you're working on a budget? What if your data is so big that loading it from the drive will increase your processing time 5X, 6X, or even more? Is there a way to handle big data that doesn't cost money or time?

I am glad you asked (or did I?).

There are some techniques you can use to handle big data that don't require spending any money or dealing with long loading times. This article covers 3 techniques you can implement with pandas to deal with large datasets.

Technique №1: Compression

The first technique we will cover is compressing the data. Compression here doesn’t mean putting the data in a ZIP file; it instead means storing the data in the memory in a compressed format.

In other words, compressing the data means finding a different way to represent it that uses less memory. There are two types of data compression: lossless and lossy. Both types only affect how your data is loaded and won't require any changes to the processing part of your code.

Lossless compression

Lossless compression doesn’t cause any losses in the data. That is, the original data and the compressed ones are semantically identical. You can perform lossless compression on your data frames in 3 ways:

For the remainder of this article, I will use this dataset, which contains COVID-19 cases in the United States broken down by county.

Load specific columns

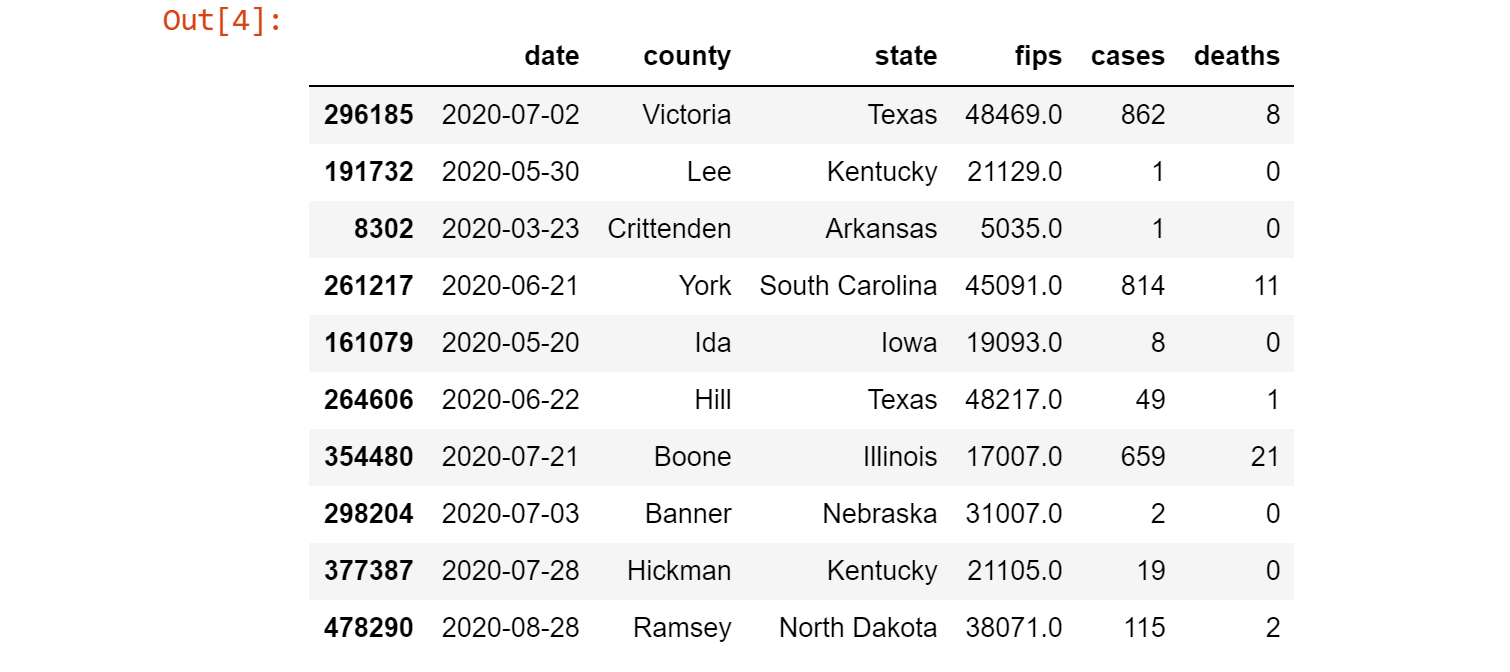

The dataset I am using has the following structure:

```python
import pandas as pd

data = pd.read_csv("https://raw.githubusercontent.com/nytimes/covid-19-data/master/us-counties.csv")
data.sample(10)
```

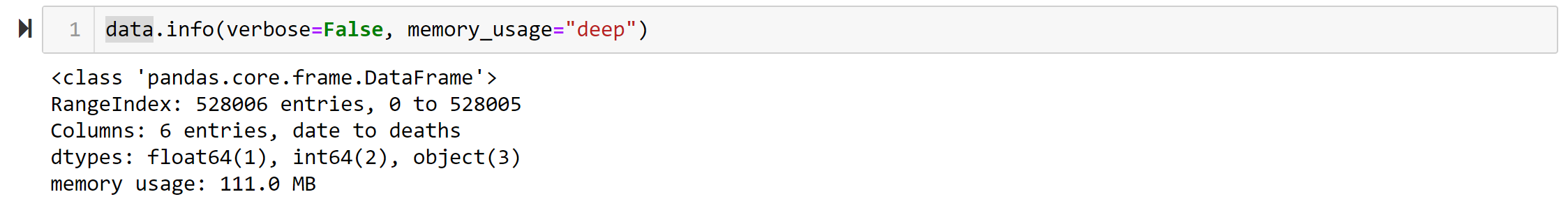

Loading the entire dataset takes 111 MB of memory!

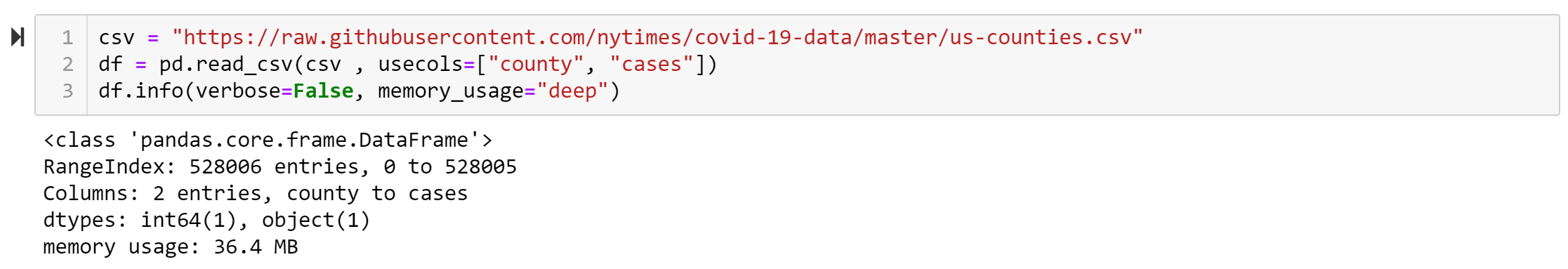

However, I really only need two columns of this dataset, county and cases, so why would I load the entire dataset? Loading only the two columns I need requires 36 MB, about 32% of the original footprint, which is a decrease of roughly 68% in memory usage.

I can use pandas to load only the columns I need, like this:

Code snippet for this section

```python
# Import needed library
import pandas as pd

# Dataset
csv = "https://raw.githubusercontent.com/nytimes/covid-19-data/master/us-counties.csv"

# Load entire dataset and report its true memory footprint
data = pd.read_csv(csv)
data.info(verbose=False, memory_usage="deep")

# Create sub dataset (all columns were still loaded first)
df = data[["county", "cases"]]
df.info(verbose=False, memory_usage="deep")

# Load only two columns straight from the CSV
df_2col = pd.read_csv(csv, usecols=["county", "cases"])
df_2col.info(verbose=False, memory_usage="deep")
```

Manipulate datatypes

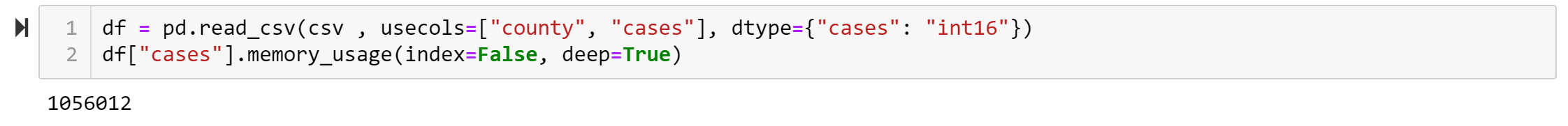

Another way to decrease the memory usage of our data is to truncate the numerical columns to smaller types. For example, whenever we load a CSV into a data frame, pandas stores a column of whole numbers as int64, which takes 64 bits (8 bytes) per value. However, we can truncate that and use a smaller integer format to save some memory.

int8 can store integers from -128 to 127.

int16 can store integers from -32768 to 32767.

int32 can store integers from -2147483648 to 2147483647.

int64 can store integers from -9223372036854775808 to 9223372036854775807.

If you know that the numbers in a particular column will never be higher than 32767, you can use int16 and reduce the memory usage of that column by 75% (int32 would reduce it by 50%).
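
NumPy, which pandas relies on for these integer dtypes, can report each type's range and per-value size, which makes the savings above easy to verify: int16 takes 2 bytes per value versus 8 for int64 (a 75% saving), and int32 takes 4 (a 50% saving). A small sketch; the helper name int_type_summary is just for illustration:

```python
import numpy as np

def int_type_summary():
    """Return (name, min, max, bytes per value) for NumPy's signed integer types."""
    rows = []
    for dtype in (np.int8, np.int16, np.int32, np.int64):
        info = np.iinfo(dtype)
        rows.append((info.dtype.name, int(info.min), int(info.max), np.dtype(dtype).itemsize))
    return rows

for name, lo, hi, size in int_type_summary():
    print(f"{name}: {lo} to {hi} ({size} bytes per value)")
```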

So, assume that the number of cases in each county can't exceed 32767 (which is not true in real life); then we can truncate that column to int16 instead of int64.

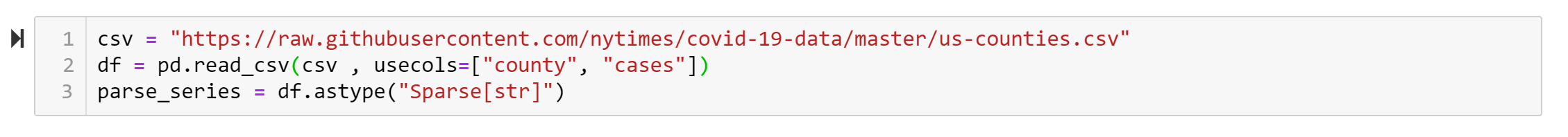
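
The conversion can be sketched as follows. The small DataFrame is a made-up stand-in for the COVID-19 data, and downcast_cases is a hypothetical helper; it checks the int16 range first because a value outside that range cannot be represented as int16, so the conversion would no longer be lossless.

```python
import pandas as pd

def downcast_cases(df: pd.DataFrame) -> pd.DataFrame:
    """Return a copy of df with the 'cases' column stored as int16."""
    cases = df["cases"]
    # Only lossless if every value fits in int16 (-32768 to 32767)
    if not cases.between(-32768, 32767).all():
        raise ValueError("'cases' has values outside the int16 range")
    out = df.copy()
    out["cases"] = cases.astype("int16")
    return out

# Made-up rows; int64 mirrors what read_csv would infer for this column
df = pd.DataFrame({
    "county": ["Snohomish", "Cook", "Orange", "Maricopa"],
    "cases": pd.Series([1, 2, 5, 30000], dtype="int64"),
})
small = downcast_cases(df)

# Bytes used by the column's values, before and after
print(df["cases"].memory_usage(index=False), small["cases"].memory_usage(index=False))  # 32 8
```

If you would rather let pandas choose the smallest integer type that fits, pd.to_numeric(df["cases"], downcast="integer") does that.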

Sparse columns

If the data has one or more columns with lots of empty values stored as NaN, you can save memory by using a sparse column representation, so you don't waste memory storing all those empty values.

Assume the county column has many NaN values and I don't want to spend memory on the rows containing NaN; I can do that easily using a sparse series.
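
A minimal sketch of a sparse column, using a made-up numeric column in which almost every value is NaN. Converting it to pd.SparseDtype keeps only the non-NaN values plus their positions. Note that the NaN rows are not dropped; they are still there when you read the column, they just aren't stored one by one (use dropna() if you actually want to remove them).

```python
import numpy as np
import pandas as pd

# Made-up column: 9,990 missing values and only 10 real ones
dense = pd.Series([np.nan] * 9_990 + [1.0] * 10)

# Sparse storage keeps only the non-NaN values and their positions
sparse = dense.astype(pd.SparseDtype("float64", np.nan))

print(dense.memory_usage(index=False))   # 80000 bytes (10,000 float64 values)
print(sparse.memory_usage(index=False))  # far smaller
print(sparse.sparse.density)             # share of values actually stored: 0.001
```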

Lossy compression

What if lossless compression isn't enough? What if I need to compress my data even more? In this case, you can use lossy compression: you give up perfect accuracy in your data in exchange for lower memory usage.

You can perform lossy compression in two ways: modifying numeric values and sampling.

Modifying numeric values: Sometimes you don't need full accuracy in your numeric data, so you can truncate it from int64 to int32 or int16.

Sampling: Maybe you want to show that some states have more COVID cases than others, so you take a sample of counties to see which states have more cases. This counts as lossy compression because you're not considering all the rows.
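
The sampling idea can be sketched with DataFrame.sample. The county-level rows below are invented so the example runs offline; frac=0.1 keeps a random 10% of the rows and random_state makes the sample reproducible. Since the sample contains only some of the counties, the sketch compares average cases per state rather than totals.

```python
import pandas as pd

# Invented county-level rows standing in for the real dataset
df = pd.DataFrame({
    "state": ["A"] * 500 + ["B"] * 500,
    "county": [f"c{i}" for i in range(1000)],
    "cases": [100] * 500 + [10] * 500,
})

# Keep a random 10% of the rows; random_state makes the sample reproducible
sample = df.sample(frac=0.1, random_state=0)

# Compare states using only the sampled counties
means = sample.groupby("state")["cases"].mean()
print(means)
```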

Technique №2: Chunking

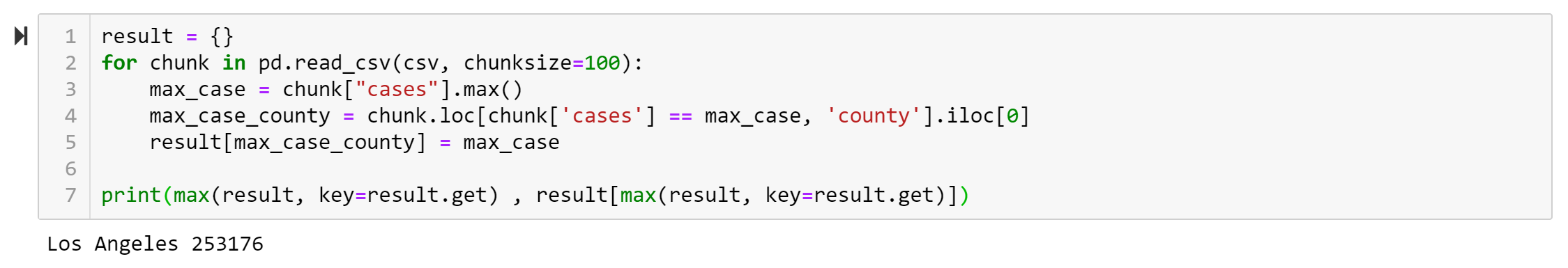

Another way to handle large datasets is by chunking them: cutting a large dataset into smaller chunks and then processing those chunks individually. After all the chunks have been processed, you can combine the results to calculate the final findings.

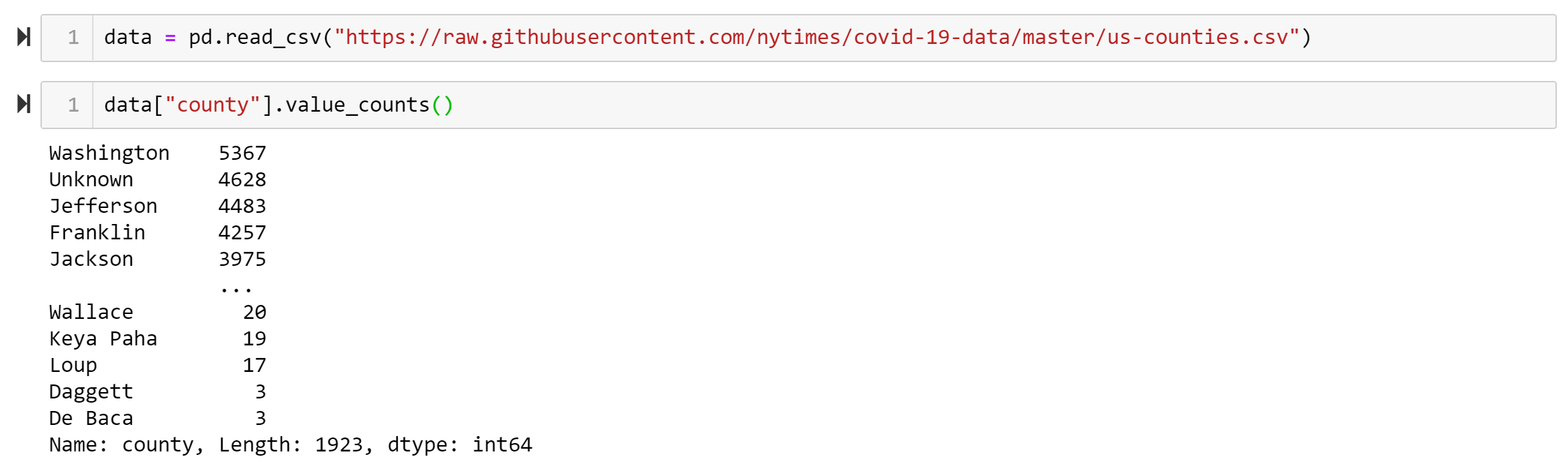

This dataset contains 1923 rows.

Let's assume I want to find the county with the most cases. I can divide my dataset into chunks of 100 rows, process each of them individually, and then take the maximum of the per-chunk results.

Code snippet for this section

```python
# Import needed libraries
import pandas as pd

# Dataset
csv = "https://raw.githubusercontent.com/nytimes/covid-19-data/master/us-counties.csv"

# Loop over the chunks and get the max of each one
result = {}
for chunk in pd.read_csv(csv, chunksize=100):
    max_case = chunk["cases"].max()
    max_case_county = chunk.loc[chunk["cases"] == max_case, "county"].iloc[0]
    # Keep the larger value if this county name already appeared in an earlier chunk
    result[max_case_county] = max(result.get(max_case_county, 0), max_case)

# Display results
top_county = max(result, key=result.get)
print(top_county, result[top_county])
```

Technique №3: Indexing

Chunking is excellent if you need to load your dataset only once, but if you need to load data from it many times, then indexing is the way to go.

Think of indexing as the index of a book: you can find the information you need about a topic without reading the entire book.

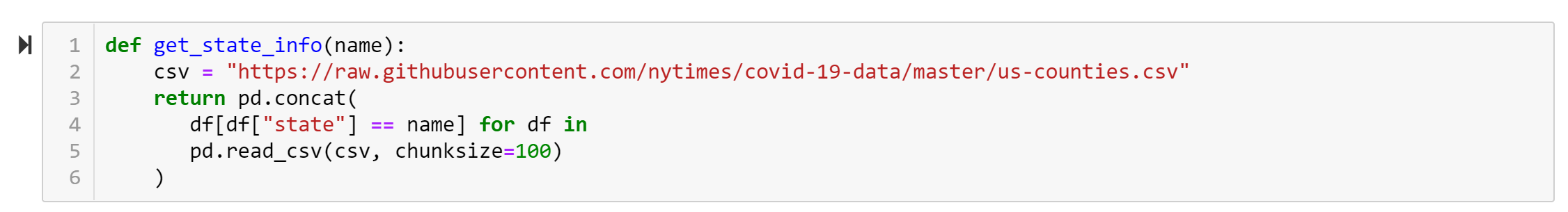

For example, let’s say I want to get the cases for a specific state. In this case, chunking would make sense; I could write a simple function that accomplishes that.
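
The function itself isn't shown in the text, so here is a minimal sketch of what a chunk-based get_state_info could look like, assuming it should return every row for one state. The (csv_path, state, chunksize) signature is an assumption; the state column name matches the NYT dataset used above.

```python
import pandas as pd

def get_state_info(csv_path, state, chunksize=100):
    """Return all rows for `state` by scanning the CSV chunk by chunk.

    Hypothetical reconstruction: every chunk is still read and parsed,
    and only the matching rows are kept.
    """
    matches = []
    for chunk in pd.read_csv(csv_path, chunksize=chunksize):
        # Keep only this chunk's rows for the requested state
        matches.append(chunk[chunk["state"] == state])
    return pd.concat(matches, ignore_index=True)

# Example call against the article's dataset (downloads the full CSV):
# get_state_info("https://raw.githubusercontent.com/nytimes/covid-19-data/master/us-counties.csv", "Washington")
```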

Indexing vs. chunking

In chunking, you need to read all data, while in indexing, you just need a part of the data.

So, my small function loads all the rows in each chunk but only cares about the ones for the state I want, which leads to significant overhead. I can avoid this by using a database alongside pandas. The simplest one I can use is SQLite.

To do that, I first need to load my data frame into an SQLite database.

import sqlite3

csv = "https://raw.githubusercontent.com/nytimes/covid-19-data/master/us-counties.csv"

# Create a new database file:

db = sqlite3.connect("cases.sqlite")

# Load the CSV in chunks:

for c in pd.read_csv(csv, chunksize=100):

# Append all rows to a new database table

c.to_sql("cases", db, if_exists="append")

# Add an index on the 'state' column:

db.execute("CREATE INDEX state ON cases(state)")

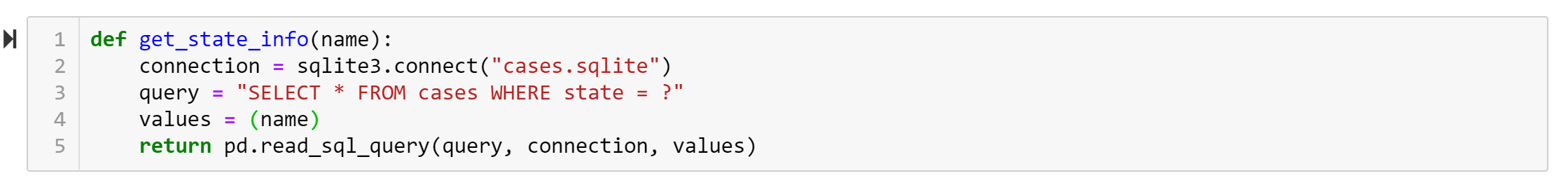

db.close()Then I need to re-write my get_state_info function and use the database in it.
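
The rewritten function isn't shown in the text either. A minimal sketch, assuming the cases table and state index created above and a hypothetical (db_path, state) signature, lets SQLite do the filtering so pandas only receives the matching rows:

```python
import sqlite3

import pandas as pd

def get_state_info(db_path, state):
    """Return all rows for `state` from the 'cases' table.

    Hypothetical rewrite: the index on 'state' lets SQLite look up only
    the matching rows instead of pandas parsing the whole CSV.
    """
    db = sqlite3.connect(db_path)
    try:
        # Parameterized query; SQLite can use the index on 'state'
        return pd.read_sql_query(
            "SELECT * FROM cases WHERE state = ?", db, params=(state,)
        )
    finally:
        db.close()

# Example call against the database built above:
# get_state_info("cases.sqlite", "Washington")
```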

By doing that, I can decrease the memory usage by 50%.

Conclusion

Handling big datasets can be a hassle, especially if they don't fit in your memory. Some solutions cost either time or money; if you have the resources, they can be the simplest, most straightforward approach.

However, if you don't have the resources, you can use some techniques in pandas to decrease the memory usage of loading your data, such as compression, chunking, and indexing.

Translated from: https://towardsdatascience.com/what-to-do-when-your-data-is-too-big-for-your-memory-65c84c600585
