Knative

If you’re already using Kubernetes, you’ve probably heard about serverless. While both platforms are scalable, serverless goes a step further: developers simply run their code without worrying about infrastructure, and infrastructure costs drop because application instances can scale down to zero and back up on demand.

Kubernetes, on the other hand, gives you full control without the constraints of a serverless platform. It follows a traditional hosting model and supports advanced traffic-management techniques that help you do things like blue-green deployments and A/B testing.

Knative is an attempt to combine the best of both worlds. As an open-source, cloud-native platform, it lets you run serverless workloads on Kubernetes, giving you all of Kubernetes’ capabilities plus the simplicity and flexibility of serverless.

So while your developers focus on writing code and deploying the container to Kubernetes with a single command, Knative manages the application by taking care of the networking details, autoscaling (including scaling to zero), and revision tracking.

It also lets developers write loosely coupled code through its eventing framework, which provides universal subscription, delivery, and management of events. That means you can declare event connectivity, and your apps can subscribe to specific data streams.

Spearheaded by Google, this open-source platform has been adopted by the Cloud Native Computing Foundation (CNCF). Because it is open source and runs on any Kubernetes cluster, you avoid vendor lock-in, a considerable limitation of the current cloud-based serverless FaaS solutions.

Knative Audience

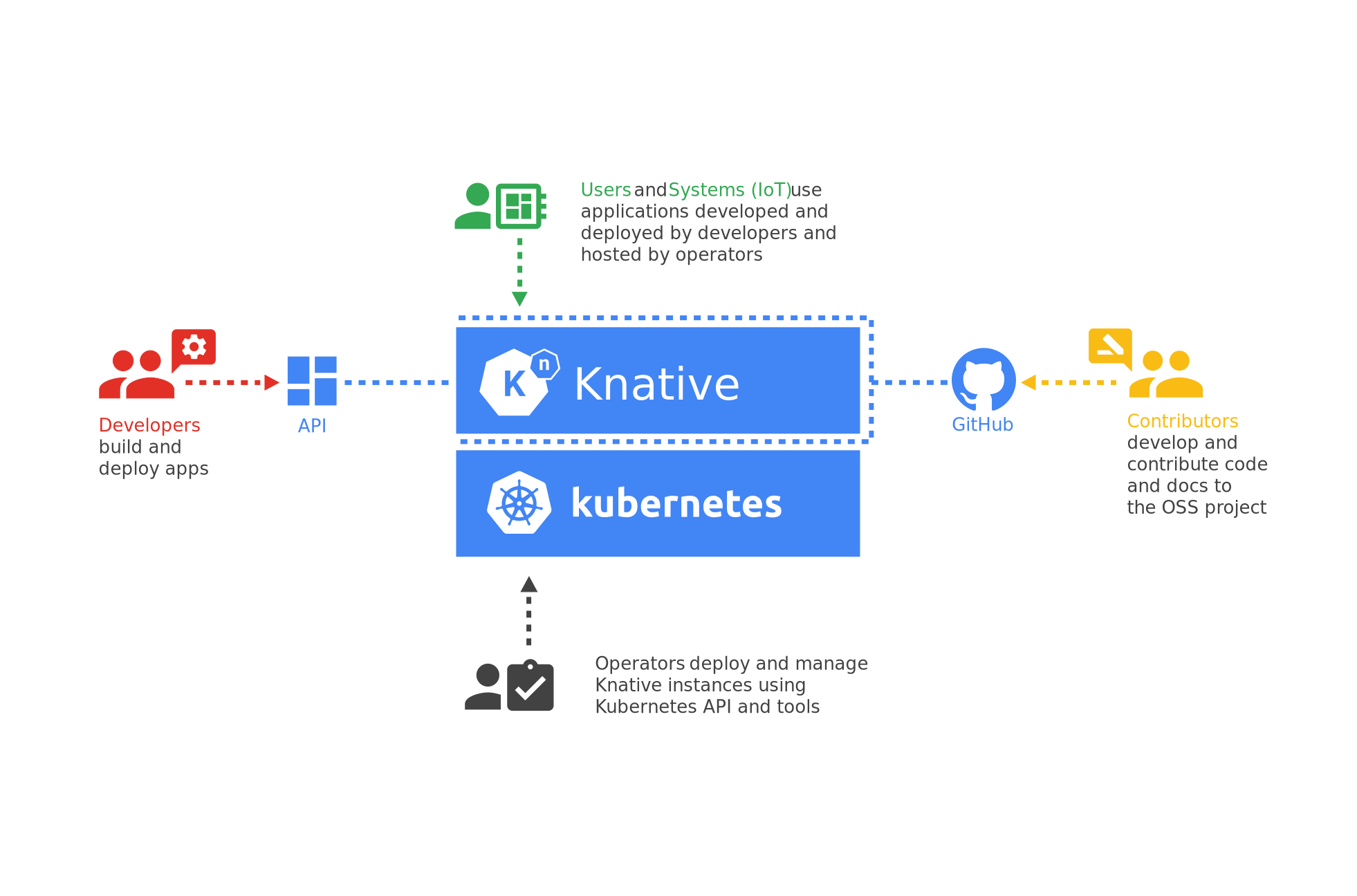

Knative helps a variety of audiences, each having a particular area of expertise and responsibility.

So while your operators focus on managing the Kubernetes cluster and on installing and maintaining Knative using kubectl, your developers can focus on building and deploying applications through the Knative API.

That gives any organization immense power, as different teams can now focus on their own area of expertise without stepping on each other’s toes.

Why You Should Use Knative

Most organizations that run Kubernetes have a complex ops process for managing deployments and running workloads. As a result, developers end up dealing with details they shouldn’t have to worry about. Developers should focus on writing code rather than on builds and deployments.

Knative simplifies the way developers run their code on Kubernetes, without requiring them to learn the internal nitty-gritty of Kubernetes.

On plain Kubernetes, every application keeps at least one container running, so even idle applications occupy infrastructure resources. Knative handles this for you by automatically scaling your containers within the cluster down to zero and back up from zero when requests arrive. That allows Kubernetes administrators to pack many more applications into a single cluster.

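If you run multiple applications with different peak times, or if your cluster has worker-node autoscaling, you can benefit a lot from this during idle periods, since capacity freed by pods scaled to zero can be used by other applications or reclaimed by the node autoscaler.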

It’s a goldmine for microservice applications in which not every microservice needs to be running at any given time. It improves resource utilization, so you can do more with limited resources.

Apart from that, Knative integrates well with its Eventing engine, which gives you a seamless experience when designing decoupled systems. Your application code can remain completely free of endpoint configuration, and you can publish and subscribe to events by declaring configuration in the Kubernetes layer. That’s a considerable advantage for complex microservice applications.
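
To make the declarative part concrete, here is a sketch of a Knative Eventing Trigger that routes one type of CloudEvent from a Broker to a Knative Service. It assumes Knative Eventing is installed and a Broker named `default` exists; the trigger name and event type (`order-created`, `dev.example.order.created`) are hypothetical, and `trigger_manifest` is an illustrative helper, not part of Knative.

```shell
# Hypothetical helper: print a Knative Eventing Trigger that subscribes a
# Knative Service to a single CloudEvents "type" arriving at a Broker.
# Usage: trigger_manifest <trigger-name> <broker> <event-type> <service-name>
trigger_manifest() {
  local name="$1" broker="$2" type="$3" service="$4"
  cat <<EOF
apiVersion: eventing.knative.dev/v1
kind: Trigger
metadata:
  name: ${name}
spec:
  broker: ${broker}
  filter:
    attributes:
      type: ${type}
  subscriber:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: ${service}
EOF
}
```

For example, `trigger_manifest order-created default dev.example.order.created helloworld-go | kubectl apply -f -` would subscribe the service to that event type. The application itself only receives HTTP requests carrying CloudEvents; it never needs to know where the Broker lives.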

How Knative Works

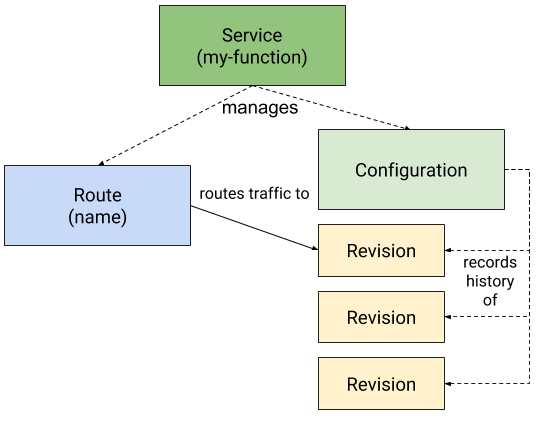

Knative extends the Kubernetes API with custom resource definitions (CRDs) and controllers, and the kn CLI works against that API. Using it, you can deploy your applications from the command line. In the background, Knative creates all of the Kubernetes resources (such as deployments, services, and ingress) required to run your applications, so you don’t have to manage them yourself.
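
For illustration, what you actually create is a single Knative Service resource (`serving.knative.dev/v1`). A minimal sketch of one for the “Hello, World!” app used later in this article might look like this; it is wrapped in a hypothetical `ksvc_manifest` shell function so the values can be varied. Knative’s controllers derive a Configuration, a Revision, a Route, and the underlying Deployment from this one object.

```shell
# Hypothetical helper: print a minimal Knative Service manifest.
# Usage: ksvc_manifest <name> <image> <TARGET value> <concurrency target>
ksvc_manifest() {
  local name="$1" image="$2" target="$3" concurrency="$4"
  cat <<EOF
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: ${name}
spec:
  template:
    metadata:
      annotations:
        # Soft per-pod concurrency target used by the autoscaler.
        autoscaling.knative.dev/target: "${concurrency}"
    spec:
      containers:
        - image: ${image}
          env:
            - name: TARGET
              value: "${target}"
EOF
}
```

Applying it with `ksvc_manifest helloworld-go gcr.io/knative-samples/helloworld-go World 10 | kubectl apply -f -` should be roughly equivalent to the `kn service create` command used in the demo, and `kubectl get ksvc helloworld-go` shows the resulting service and its URL.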

Bear in mind that Knative doesn’t keep pods running while an application has no traffic. Instead, it keeps a virtual endpoint for your application and listens on it. When a request hits that endpoint, Knative spins up the pods required to serve it. That is how Knative scales your applications from zero to the required number of instances.

Knative provides application endpoints on a custom domain in the format [app-name].[namespace].[custom-domain], which identifies each application uniquely. It’s similar to how Kubernetes names services, but you need to create DNS A records for the custom domain that point to the Istio ingress gateway. In this setup, Istio manages all of the traffic that flows through your cluster in the background.
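
As a small sketch of that naming scheme, a hypothetical `knative_url` helper just joins the three parts. With the values from the demo later in this article, it reproduces the URL that kn prints.

```shell
# Hypothetical helper: build the URL Knative Serving assigns to a Service
# under the [app-name].[namespace].[custom-domain] scheme.
# Usage: knative_url <app-name> <namespace> <custom-domain>
knative_url() {
  local app="$1" namespace="$2" domain="$3"
  printf 'http://%s.%s.%s\n' "$app" "$namespace" "$domain"
}
```

For example, `knative_url helloworld-go default 34.71.125.175.xip.io` prints `http://helloworld-go.default.34.71.125.175.xip.io`.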

Knative builds on and integrates with many CNCF and open-source projects, such as Kubernetes, Istio, Prometheus, and Grafana, as well as event-streaming engines such as Kafka and Google Cloud Pub/Sub.

Installing Knative

Knative is reasonably modular, so you can install only the components you need. Knative offers eventing, serving, and monitoring components, and you install each one by applying its release manifests, which contain the component’s CRDs and core resources.
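
Since every component ships as release manifests at a predictable URL, a minimal sketch of a helper that installs a chosen subset at one pinned version could look like this. Both function names are hypothetical. The URL pattern is the one used by the v0.17.0 commands in this article; later releases changed their tag naming, so check the release page for the version you install.

```shell
# Hypothetical helper: build a Knative release-manifest URL following the
# github.com/knative/<repo>/releases/download/v<version>/<file> pattern.
knative_manifest_url() {
  local repo="$1" version="$2" file="$3"
  printf 'https://github.com/knative/%s/releases/download/v%s/%s\n' "$repo" "$version" "$file"
}

# Hypothetical helper: apply only the listed manifests from one repo, all at
# the same pinned version, stopping at the first failure.
# Usage: install_knative_component <repo> <version> <file>...
install_knative_component() {
  local repo="$1" version="$2" file
  shift 2
  for file in "$@"; do
    kubectl apply -f "$(knative_manifest_url "$repo" "$version" "$file")" || return 1
  done
}
```

For example, `install_knative_component serving 0.17.0 serving-crds.yaml serving-core.yaml` applies just the Serving CRDs and core components. Pinning the version in one place keeps the CRDs and core resources from drifting apart.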

Each Knative component has its own external dependencies and requirements. For example, if you’re installing the serving component, you also need to install a networking layer such as Istio, plus a DNS add-on.

As installing Knative is relatively complex, I’ll cover the setup in another article, but for the demo, let’s start with installing the serving component.

You require a running Kubernetes cluster for this activity.

Install the Serving CRDs and the Serving core components:

```shell
kubectl apply -f https://github.com/knative/serving/releases/download/v0.17.0/serving-crds.yaml
kubectl apply -f https://github.com/knative/serving/releases/download/v0.17.0/serving-core.yaml
```

Install Istio for Knative:

```shell
curl -L https://istio.io/downloadIstio | ISTIO_VERSION=1.7.0 sh - && cd istio-1.7.0 && export PATH=$PWD/bin:$PATH
istioctl install --set profile=demo
kubectl label namespace knative-serving istio-injection=enabled
```

Wait for Istio to be ready by checking whether Kubernetes has allocated an external IP to the Istio ingress gateway:
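
If you’re scripting the installation, you could poll the same Service instead of re-running kubectl by hand. A minimal sketch, assuming your load balancer reports an IP address (some providers, such as AWS, report a hostname instead, which this jsonpath won’t pick up); `wait_for_ingress_ip` is a hypothetical helper:

```shell
# Hypothetical helper: poll the istio-ingressgateway Service until it has an
# external IP, print the IP, and give up after a fixed number of attempts.
# Usage: wait_for_ingress_ip [attempts, default 60] [delay seconds, default 5]
wait_for_ingress_ip() {
  local attempts="${1:-60}" delay="${2:-5}" i=1 ip=""
  while [ "$i" -le "$attempts" ]; do
    ip="$(kubectl -n istio-system get service istio-ingressgateway \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)"
    if [ -n "$ip" ]; then
      printf '%s\n' "$ip"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "istio-ingressgateway has no external IP after ${attempts} attempts" >&2
  return 1
}
```

For example, `INGRESS_IP="$(wait_for_ingress_ip)"` blocks for up to five minutes with the defaults and leaves the address in a variable for the DNS step.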

```shell
kubectl -n istio-system get service istio-ingressgateway
```

Define a custom domain, and configure DNS to point to the Istio ingress gateway IP:

```shell
kubectl patch configmap/config-domain --namespace knative-serving --type merge -p "{\"data\":{\"$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.status.loadBalancer.ingress[0].ip}').xip.io\":\"\"}}"
```
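
The nested quoting in the patch command above is easy to get wrong. A hypothetical `domain_patch_json` helper that builds the same merge patch may read more clearly. In `config-domain`, a key with an empty value is treated as the default domain for all services. The suffix defaults to `xip.io` to match this article; xip.io has since gone offline, so you may need an equivalent wildcard DNS service such as sslip.io.

```shell
# Hypothetical helper: build the JSON merge patch for Knative's config-domain
# ConfigMap. The key is "<ip>.<suffix>"; the empty value makes it the default
# domain for every Knative Service.
# Usage: domain_patch_json <ingress-ip> [suffix, default xip.io]
domain_patch_json() {
  local ip="$1" suffix="${2:-xip.io}"
  printf '{"data":{"%s.%s":""}}\n' "$ip" "$suffix"
}

# Example (assumes INGRESS_IP already holds the gateway's external IP):
#   kubectl patch configmap/config-domain --namespace knative-serving \
#     --type merge -p "$(domain_patch_json "$INGRESS_IP" sslip.io)"
```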

```shell
kubectl apply -f https://github.com/knative/net-istio/releases/download/v0.17.0/release.yaml
kubectl apply -f https://github.com/knative/serving/releases/download/v0.17.0/serving-default-domain.yaml
```

Install the Knative Serving HPA (Horizontal Pod Autoscaler) extension:

```shell
kubectl apply -f https://github.com/knative/serving/releases/download/v0.17.0/serving-hpa.yaml
```

Installing the Knative CLI

Installing the Knative CLI is simple: download the latest kn binary into a directory on your PATH, such as /usr/local/bin, and make it executable.

```shell
sudo wget https://storage.googleapis.com/knative-nightly/client/latest/kn-linux-amd64 -O /usr/local/bin/kn
sudo chmod +x /usr/local/bin/kn
kn version
```

Running a “Hello, World!” Application

Let’s now run our first “Hello, World!” application on Knative to understand how simple it is to deploy once things are ready.

Let’s use a sample “Hello, World!” Go application for this demo. It’s a simple HTTP service that returns Hello $TARGET, where TARGET is an environment variable you can set on the container.

Run the following command to get started:

```shell
$ kn service create helloworld-go --image gcr.io/knative-samples/helloworld-go --env TARGET="World" --annotation autoscaling.knative.dev/target=10
Creating service 'helloworld-go' in namespace 'default':

0.171s Configuration "helloworld-go" is waiting for a Revision to become ready.
6.260s ...
6.324s Ingress has not yet been reconciled.
6.496s Waiting for load balancer to be ready
6.637s Ready to serve.

Service 'helloworld-go' created to latest revision 'helloworld-go-zglmv-1' is available at URL:
http://helloworld-go.default.34.71.125.175.xip.io
```

Now let’s check the pods:

```shell
$ kubectl get pod
No resources found in default namespace.
```

Let’s trigger the helloworld service.

```shell
$ curl http://helloworld-go.default.34.71.125.175.xip.io
Hello World!
```

After a short delay, we get a response. Let’s look at the pods.

```shell
$ kubectl get pod
NAME                                               READY   STATUS    RESTARTS   AGE
helloworld-go-zglmv-1-deployment-6d4b7fb4f-ctz86   2/2     Running   0          50s
```

As you can see, Knative spun up a pod in the background when the first request arrived, so the application literally scaled from zero.

If we give it a while, we’ll see the pod start terminating. Let’s watch the pod.

```shell
$ kubectl get pod -w
NAME                                               READY   STATUS        RESTARTS   AGE
helloworld-go-zglmv-1-deployment-6d4b7fb4f-d9ks6   2/2     Running       0          7s
helloworld-go-zglmv-1-deployment-6d4b7fb4f-d9ks6   2/2     Terminating   0          67s
helloworld-go-zglmv-1-deployment-6d4b7fb4f-d9ks6   1/2     Terminating   0          87s
```

That shows Knative managing the pods according to demand. The first request is slow because Knative has to create the workload to process it, but subsequent requests are faster. You can fine-tune how long Knative waits before spinning pods down, based on your requirements or if you have a tighter SLA.

Let’s take this to the next level. Through the autoscaling.knative.dev/target=10 annotation, we set a target of 10 concurrent requests per pod. This is a soft target for the autoscaler rather than a hard limit. So what happens if we push more load than that? Let’s find out.

We’ll use the hey load generator. The following command keeps 50 concurrent workers sending requests to the endpoint for 30 seconds.

```shell
$ hey -z 30s -c 50 http://helloworld-go.default.34.121.106.103.xip.io

  Average:      0.1222 secs
  Requests/sec: 408.3187
  Total data:   159822 bytes
  Size/request: 13 bytes

Response time histogram:
  0.103 [1]     |
  0.444 [12243] |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■
  0.785 [0]     |
  1.126 [0]     |
  1.467 [0]     |
  1.807 [0]     |
  2.148 [0]     |
  2.489 [0]     |
  2.830 [0]     |
  3.171 [0]     |
  3.512 [50]    |

Latency distribution:
  10% in 0.1042 secs
  25% in 0.1048 secs
  50% in 0.1057 secs
  75% in 0.1077 secs
  90% in 0.1121 secs
  95% in 0.1192 secs
  99% in 0.1826 secs

Details (average, fastest, slowest):
  DNS+dialup: 0.0010 secs, 0.1034 secs, 3.5115 secs
  DNS-lookup: 0.0006 secs, 0.0000 secs, 0.1365 secs
  req write:  0.0000 secs, 0.0000 secs, 0.0062 secs
  resp wait:  0.1211 secs, 0.1033 secs, 3.2698 secs
  resp read:  0.0001 secs, 0.0000 secs, 0.0032 secs

Status code distribution:
  [200] 12294 responses
```

Let’s look at the pods now.

```shell
$ kubectl get pod
NAME                                                READY   STATUS    RESTARTS   AGE
helloworld-go-thmmb-1-deployment-77976785f5-6cthr   2/2     Running   0          59s
helloworld-go-thmmb-1-deployment-77976785f5-7dckg   2/2     Running   0          59s
helloworld-go-thmmb-1-deployment-77976785f5-fdvjn   0/2     Pending   0          57s
helloworld-go-thmmb-1-deployment-77976785f5-gt55v   0/2     Pending   0          58s
helloworld-go-thmmb-1-deployment-77976785f5-rwwcv   2/2     Running   0          59s
helloworld-go-thmmb-1-deployment-77976785f5-tbrr7   2/2     Running   0          58s
helloworld-go-thmmb-1-deployment-77976785f5-vtnz4   0/2     Pending   0          58s
helloworld-go-thmmb-1-deployment-77976785f5-w8pn6   2/2     Running   0          59s
```

As we can see, Knative scales the pods out as the load on the function increases, and it will scale them back down once the load goes away. Three of the eight pods are still Pending, which means Kubernetes hasn’t scheduled them yet, most likely because the cluster ran out of capacity.
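
The demo ended up with eight pods for 50 concurrent connections and a target of 10. As a simplified sanity check: Knative’s default autoscaler aims for roughly ceil(observed concurrency / (target × target utilization)) pods, and the target utilization (the `container-concurrency-target-percentage` setting in `config-autoscaler`) defaults to 70% in recent releases. With about 50 concurrent requests, that gives ceil(50 / 7) = 8, which lines up with the pod list. The `desired_pods` helper is hypothetical and ignores panic mode, min/max scale bounds, and scheduling limits.

```shell
# Hypothetical helper: approximate the replica count the Knative autoscaler
# aims for, using integer-only math so it runs in any POSIX-style shell:
#   desired = ceil(concurrency / (target * percent / 100))
# Usage: desired_pods <observed-concurrency> <target> [utilization %, default 70]
desired_pods() {
  local concurrency="$1" target="$2" percent="${3:-70}"
  local per_pod_x100=$((target * percent))   # effective per-pod capacity x 100
  echo $(( (concurrency * 100 + per_pod_x100 - 1) / per_pod_x100 ))
}
```

For example, `desired_pods 50 10` prints 8, `desired_pods 50 10 100` prints 5 (what you would get with no utilization headroom), and `desired_pods 0 10` prints 0, which is the scale-to-zero case.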

Conclusion

Knative combines the best aspects of serverless and Kubernetes and is slowly becoming a standard way of implementing FaaS. As the CNCF adopts it and it continues to attract significant interest among engineers, we’ll likely see cloud providers build it into their serverless offerings.

Thanks for reading — I hope you enjoyed the article!

Original article: https://medium.com/better-programming/go-serverless-on-kubernetes-with-knative-b3aff3dbdffa
