Scenario

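A front-end web application uses a framework that records access logs and periodically archives them into a dedicated directory with the following layout:

/onlinelogs/<application name>/<environment name>/<year>/<month>/<day>/<hour>/, for example /onlinelogs//Prod/2018/08/07/01.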
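Because the date and hour are encoded in the path, they can be recovered from the directory name alone. A minimal sketch, assuming the last four path components are always year, month, day and hour; `parse_log_dir` is a hypothetical helper (the script below slices the string with `root[-13:-3]` instead):

```python
import os


def parse_log_dir(path):
    """Split .../<year>/<month>/<day>/<hour> into a tuple of ints.

    Assumes the last four path components are year, month, day and hour,
    as in the directory layout described above.
    """
    # normpath collapses the doubled slash and drops any trailing slash
    parts = os.path.normpath(path).split(os.sep)
    year, month, day, hour = (int(p) for p in parts[-4:])
    return year, month, day, hour


print(parse_log_dir('/onlinelogs//Prod/2018/08/07/01'))  # (2018, 8, 7, 1)
```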

In that directory:

访问日志文件可能有多个,文件名以 access-log 开头

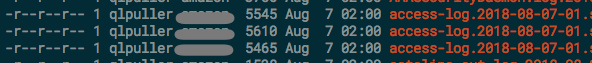

已压缩为 .gz 文件,并且只读,例如:

Access log files

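Some of the lines in an access log record HTTP requests, in the following format:

Each such line shows the requested resource, the response code, and the client information: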

222.67.225.134 - - [04/Aug/2018:01:16:44 +0000] "GET /?ref=as_cn_ags_resource_tb&ck-tparam-anchor=123067 HTTP/1.1" 200 7798 "https://gs.amazon.cn/resources.html/ref=as_cn_ags_hnav1_re_class" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_6) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/11.1.2 Safari/605.1.15"

222.67.225.134 - - [04/Aug/2018:01:16:54 +0000] "GET /tndetails?tnid=3be3f34dee8a4bf08baa072a478fc882 HTTP/1.1" 200 9152 "https://gs.amazon.cn/sba/?ref=as_cn_ags_resource_tb&ck-tparam-anchor=123067&tnm=Offline" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_6) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/11.1.2 Safari/605.1.15"

222.67.225.134 - - [04/Aug/2018:01:17:37 +0000] "GET /paymentinfo?oid=10763561&rtxref=164f9f8e2b5e4d7790d02d1220eae435 HTTP/1.1" 200 7138 "https://gs.amazon.cn/sba/paymentinfo?oid=10763561&rtxref=164f9f8e2b5e4d7790d02d1220eae435" "Mozilla/5.0 (Linux; Android 8.1.0; DE106 Build/OPM1.171019.026; wv) AppleWebKit/537.36 (KHTML, like Gecko) Version/4.0 Chrome/62.0.3202.84 Mobile Safari/537.36 AliApp(DingTalk/4.5.3) com.alibaba.android.rimet/0 Channel/10006872 language/zh-CN"

222.67.225.134 - - [04/Aug/2018:01:17:39 +0000] "GET /paymentinfo?oid=10763561&rtxref=164f9f8e2b5e4d7790d02d1220eae435 HTTP/1.1" 200 7138 "https://gs.amazon.cn/sba/paymentinfo?oid=10763561&rtxref=164f9f8e2b5e4d7790d02d1220eae435" "Mozilla/5.0 (Linux; Android 8.1.0; DE106 Build/OPM1.171019.026; wv) AppleWebKit/537.36 (KHTML, like Gecko) Version/4.0 Chrome/62.0.3202.84 Mobile Safari/537.36 AliApp(DingTalk/4.5.3) com.alibaba.android.rimet/0 Channel/10006872 language/zh-CN"

222.67.225.134 - - [04/Aug/2018:01:17:40 +0000] "GET /paymentinfo?oid=10763561&rtxref=164f9f8e2b5e4d7790d02d1220eae435 HTTP/1.1" 200 7138 "https://gs.amazon.cn/sba/paymentinfo?oid=10763561&rtxref=164f9f8e2b5e4d7790d02d1220eae435" "Mozilla/5.0 (Linux; Android 8.1.0; DE106 Build/OPM1.171019.026; wv) AppleWebKit/537.36 (KHTML, like Gecko) Version/4.0 Chrome/62.0.3202.84 Mobile Safari/537.36 AliApp(DingTalk/4.5.3) com.alibaba.android.rimet/0 Channel/10006872 language/zh-CN"

140.243.121.197 - - [04/Aug/2018:01:17:41 +0000] "GET /?ref=as_cn_ags_resource_tb HTTP/1.1" 302 - "https://gs.amazon.cn/resources.html/ref=as_cn_ags_hnav1_re_class" "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.99 Safari/537.36"
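To see which fields each request line carries, here is a minimal sketch that splits one line into named fields. It assumes the lines follow the Apache/Nginx "combined" log format, which the samples above appear to match; `COMBINED_LOG_RE` and `parse_line` are hypothetical names, not part of the original script.

```python
import re

# Assumed layout: the "combined" log format
# ip ident user [time] "method path protocol" status size "referrer" "user agent"
COMBINED_LOG_RE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def parse_line(line):
    """Return a dict of named fields for a request line, or None if it does not match."""
    match = COMBINED_LOG_RE.match(line)
    return match.groupdict() if match else None
```

Note that the size field can be `-` (as in the 302 response above), so it is kept as a string rather than converted to an integer.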

Analysis goals

Count the visits in different time periods

Count the visits from PC and Mobile clients

Count the visits to each page
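The script below buckets requests only by day, taking the day from the directory path. If finer time periods are wanted, one option is to bucket by the timestamp inside each line. A minimal sketch, assuming the `04/Aug/2018:01:16:44 +0000` timestamp format seen in the samples and the default C locale (so that `%b` parses `Aug`); `hour_bucket` is a hypothetical helper:

```python
from collections import Counter
from datetime import datetime


def hour_bucket(timestamp):
    """Turn '04/Aug/2018:01:16:44 +0000' into an hourly key like '2018-08-04 01:00'."""
    # %b relies on English month abbreviations (default C locale assumed)
    dt = datetime.strptime(timestamp, '%d/%b/%Y:%H:%M:%S %z')
    return dt.strftime('%Y-%m-%d %H:00')


# Timestamps taken from the sample log lines above
timestamps = ['04/Aug/2018:01:16:44 +0000', '04/Aug/2018:01:17:41 +0000']
hourly = Counter(hour_bucket(t) for t in timestamps)
print(hourly)  # Counter({'2018-08-04 01:00': 2})
```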

Basic approach:

Because the original log files are compressed and read-only, create a temporary directory /tmp/logs/unziped_logs in which to decompress them.
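One way to do the decompression step is to stream each `.gz` file into a plain-text copy inside the temporary directory. A minimal sketch; `gunzip_to_dir` is a hypothetical helper, and the source archive is only opened for reading, so its read-only permission is not a problem:

```python
import gzip
import os
import shutil


def gunzip_to_dir(gz_path, dest_dir):
    """Decompress gz_path into dest_dir and return the path of the plain-text copy."""
    os.makedirs(dest_dir, exist_ok=True)
    base = os.path.basename(gz_path)
    # 'access-log.1.gz' -> 'access-log.1'
    out_name = base[:-3] if base.endswith('.gz') else base + '.out'
    out_path = os.path.join(dest_dir, out_name)
    # Source opened read-only; output written in chunks without loading it all in memory
    with gzip.open(gz_path, 'rb') as src, open(out_path, 'wb') as dst:
        shutil.copyfileobj(src, dst)
    return out_path
```

Since `gzip.open` only needs read access, the archives could also be read in place; the temporary directory mainly keeps working files away from the archive.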

Use the regular expression ^access-log.*\.gz$ to select the log files
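The pattern `^access-log.*\.gz$` escapes the dot, so it only accepts names ending in the literal `.gz` extension; a looser pattern such as `^access\-log.*gz+$` would also accept a name like `access-log.gzz`. A small sketch of the filter; the file names are made up for illustration:

```python
import re

# Literal "access-log" prefix and literal ".gz" suffix
LOG_FILE_NAME_RE = re.compile(r'^access-log.*\.gz$')

# Hypothetical file names, for illustration only
names = ['access-log.2018-08-07-01.gz', 'access-log.txt', 'error-log.gz', 'access-log.gzz']
matched = [n for n in names if LOG_FILE_NAME_RE.search(n)]
print(matched)  # ['access-log.2018-08-07-01.gz']
```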

Use the regular expression ^.*GET (\S+) HTTP.*$ to extract the requested page from each HTTP request line
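A minimal sketch of the page extraction: `\S+` makes the capture stop at the first space, and the query string is dropped by cutting at the first `?`, as the script does. `extract_page` is a hypothetical helper name:

```python
import re

REQUEST_PAGE_RE = re.compile(r'GET (\S+) HTTP')


def extract_page(line):
    """Return the requested path without its query string, or None for non-GET lines."""
    match = REQUEST_PAGE_RE.search(line)
    if match is None:
        return None
    # '/tndetails?tnid=...' -> '/tndetails'
    return match.group(1).partition('?')[0]
```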

Classify the client by whether the log line contains Mobile: lines that contain it count as Mobile, all others as PC.
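The Mobile test is a plain substring heuristic. A minimal sketch of the same check applied to the User-Agent field only (the original script checks the whole line, which would also count a referrer that happens to contain "Mobile"); `device_type` is a hypothetical helper:

```python
from collections import Counter


def device_type(user_agent):
    """Heuristic: 'Mobile' if the User-Agent mentions Mobile, otherwise 'PC'."""
    return 'Mobile' if 'Mobile' in user_agent else 'PC'


# User-Agent strings taken (shortened) from the sample log lines above
agents = [
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_6) AppleWebKit/605.1.15 '
    '(KHTML, like Gecko) Version/11.1.2 Safari/605.1.15',
    'Mozilla/5.0 (Linux; Android 8.1.0; DE106 Build/OPM1.171019.026; wv) '
    'AppleWebKit/537.36 (KHTML, like Gecko) Version/4.0 Chrome/62.0.3202.84 '
    'Mobile Safari/537.36',
]
device_counts = Counter(device_type(a) for a in agents)
```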

The code is as follows (some details are redacted):

```python
#!/usr/bin/python3

import os
import os.path
import re
import shutil
import gzip
from collections import defaultdict

# define the zipped and unzipped log file directories
source_logs_dir = '/onlinelogs//Prod'
unziped_logs_dir = '/tmp/logs/unziped_logs'

# clear the unzipped log file directory if it exists
if os.path.exists(unziped_logs_dir):
    shutil.rmtree(unziped_logs_dir)

# create the unzipped log file directory (and missing parents such as /tmp/logs)
os.makedirs(unziped_logs_dir)

# regex used to match target log file names: access-log*.gz
log_file_name_regex = re.compile(r'^access-log.*\.gz$')

# regex used to match HTTP requests (a GET line that mentions gs.amazon.cn)
http_request_regex = re.compile(r'^.*GET.*gs\.amazon\.cn')

# regex used to extract the request page (the token between "GET " and " HTTP")
request_page_regex = re.compile(r'^.*GET (\S+) HTTP.*$')

# HTTP request count of each day
day_count = defaultdict(int)

# count of each device (PC or Mobile); both keys exist even if a count stays 0
device_count = defaultdict(int)
device_count['PC'] = 0
device_count['Mobile'] = 0

# count of each request page
request_page_count = defaultdict(int)

for root, dirs, files in os.walk(source_logs_dir):
    for name in files:
        # only process the target log files
        if not log_file_name_regex.search(name):
            continue

        # parse the day from the path .../YYYY/MM/DD/HH -> 'YYYY/MM/DD'
        day = root[-13:-3]

        # copy the read-only log file into the temporary directory
        copied_file = os.path.join(unziped_logs_dir, name)
        shutil.copyfile(os.path.join(root, name), copied_file)

        http_request_count = 0
        pc_count = 0
        mobile_count = 0

        # open the gzip file in text mode so each line is a str, not bytes
        with gzip.open(copied_file, 'rt', encoding='utf-8', errors='replace') as unziped_log_file:
            for line in unziped_log_file:
                if not http_request_regex.search(line):
                    continue

                # parse the request page; skip lines that do not match
                regex_obj = request_page_regex.search(line)
                if regex_obj is None:
                    continue
                request_page = regex_obj.group(1)

                # remove the query parameters of the request page
                request_page = request_page.split('?', 1)[0]

                http_request_count += 1
                if 'Mobile' in line:
                    mobile_count += 1
                else:
                    pc_count += 1

                # update the count of each request page
                request_page_count[request_page] += 1

        # update the HTTP request count of each day
        day_count[day] += http_request_count

        # update the count of each device (PC or Mobile)
        device_count['PC'] += pc_count
        device_count['Mobile'] += mobile_count

        # remove the copied gzip log file
        os.remove(copied_file)

# print the HTTP request count of each day
total = 0
print('HTTP request count of each day')
for day, count in sorted(day_count.items()):
    print(day, ':', count)
    total += count
print('Total = ', total)
print('###############################')

# print the count of each device (PC or Mobile)
total = 0
print('count of each device (PC or Mobile)')
for device, count in sorted(device_count.items()):
    print(device, ':', count)
    total += count
print('Total = ', total)
print('###############################')

# print the count of each request page
total = 0
print('count of each request page')
for request_page, count in sorted(request_page_count.items()):
    print(request_page, ':', count)
    total += count
print('Total = ', total)
```
