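I have recently been taking Professor Andrew Ng's course "Neural Networks and Deep Learning" on NetEase Cloud Classroom. The week 1 quiz comes without an answer key, so I am sharing my own explanations for reference and discussion (the Coursera Honor Code does not allow publishing the answers themselves).

1. What does the analogy “AI is the new electricity” refer to?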

A. Similar to electricity starting about 100 years ago, AI is transforming multiple industries.

B. Through the “smart grid”, AI is delivering a new wave of electricity.

C. AI runs on computers and is thus powered by electricity, but it is letting computers do things not possible before.

D. AI is powering personal devices in our homes and offices, similar to electricity.

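My explanation: AI is a new productive force. Just as electricity did when it arrived about 100 years ago, it is driving change across many industries.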

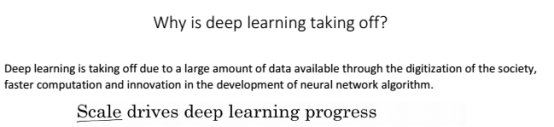

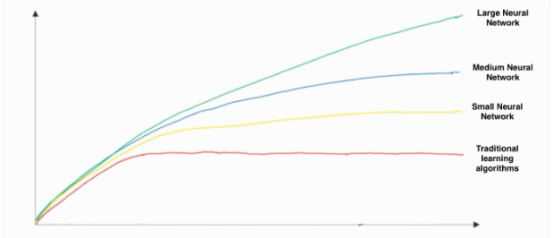

2. Which of these are reasons for Deep Learning recently taking off? (Check the three options that apply.)

A. Deep learning has resulted in significant improvements in important applications such as online advertising, speech recognition, and image recognition.

B. We have access to a lot more data.

C. We have access to a lot more computational power.

D. Neural Networks are a brand new field.

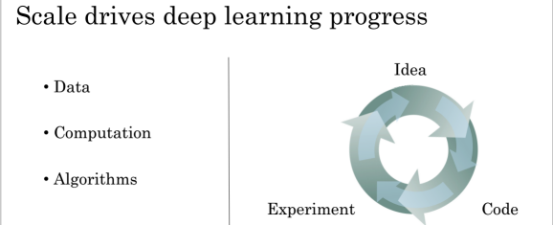

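My explanation: As the instructor explains in the video, and as the figure below from the third lecture's slides shows, data, computation speed, and algorithms are all factors behind the rise of deep learning.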

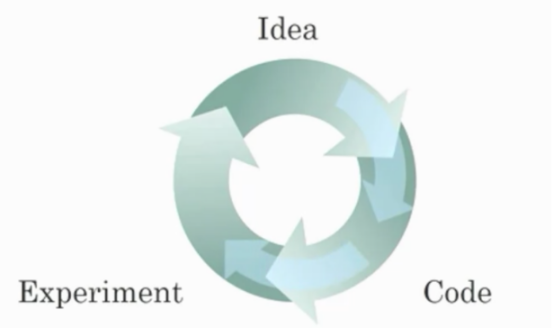

3. Recall this diagram of iterating over different ML ideas. Which of the statements below are true? (Check all that apply.)

A. Being able to try out ideas quickly allows deep learning engineers to iterate more quickly.

B. Faster computation can help speed up how long a team takes to iterate to a good idea.

C. It is faster to train on a big dataset than a small dataset.

D. Recent progress in deep learning algorithms has allowed us to train good models faster (even without changing the CPU/GPU hardware).

My explanation: the three factors at play are data, computation, and algorithms.

4. When an experienced deep learning engineer works on a new problem, they can usually use insight from previous problems to train a good model on the first try, without needing to iterate multiple times through different models. True/False?

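My explanation: An experienced deep learning engineer can draw on previous problems to choose a model that suits the new one, which cuts down the number of iterations over models. For example, CNNs are better suited to image recognition than other models, so when a new problem such as autonomous driving comes up, a CNN is a natural choice for processing the images. Even so, as the iteration diagram in Question 3 suggests, a good model is rarely reached on the very first try, and some rounds of experimentation are usually still needed.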

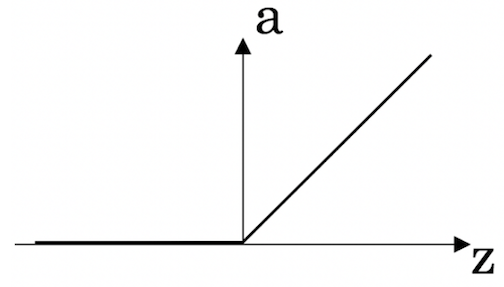

5. Which one of these plots represents a ReLU activation function?

My explanation:
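As a reference for reading the plots, here is a minimal sketch of ReLU, assuming the usual definition max(0, x): the output is flat at zero for negative inputs and rises as a straight line for positive ones. The function name `relu` and the sample inputs are my own choices for illustration.

```python
def relu(x: float) -> float:
    """Rectified Linear Unit: 0 for negative inputs, the input itself otherwise."""
    return max(0.0, x)


# A few sample points on either side of zero (arbitrary illustrative values).
samples = [-2.0, -0.5, 0.0, 0.5, 2.0]
outputs = [relu(x) for x in samples]

print(outputs)  # [0.0, 0.0, 0.0, 0.5, 2.0]
```

Plotted, these points trace a line lying on the x-axis for x < 0 and a line of slope 1 for x > 0.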

6. Images for cat recognition is an example of “structured” data, because it is represented as a structured array in a computer. True/False?

My explanation: audio, images, and text are all unstructured data.

7. A demographic dataset with statistics on different cities' population, GDP per capita, economic growth is an example of “unstructured” data because it contains data coming from different sources. True/False?

My explanation: data with well-defined fields, such as statistics, is structured data.
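The distinction in Questions 6 and 7 can be sketched in a few lines. This is only an illustration: the city names and all the numbers are made-up placeholders, not real statistics, and the 2x2 "image" is a toy stand-in for a real photo.

```python
# Structured data: every record has the same named, well-defined fields.
# The names and numbers below are made-up placeholders, not real statistics.
cities = [
    {"city": "City A", "population": 1_200_000, "gdp_per_capita": 30_000.0},
    {"city": "City B", "population": 450_000, "gdp_per_capita": 22_500.0},
]

# Because each field has a clear meaning, a query is a direct column lookup.
total_population = sum(row["population"] for row in cities)

# Unstructured data: a tiny 2x2 grayscale "image" is just raw pixel intensities.
# No single value means anything like "population"; the meaning (cat or not)
# only emerges from the pattern of all the pixels together.
image = [
    [0, 255],
    [128, 64],
]
num_pixels = sum(len(row) for row in image)

print(total_population)  # 1650000
print(num_pixels)        # 4
```

Note that the image is stored as an array too, so being stored in an array is not what makes data structured. What matters is whether each value has a defined meaning of its own.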

8. Why is an RNN (Recurrent Neural Network) used for machine translation, say translating English to French? (Check all that apply.)

A. It can be trained as a supervised learning problem.

B. It is strictly more powerful than a Convolutional Neural Network (CNN).

C. It is applicable when the input/output is a sequence (e.g., a sequence of words).

D. RNNs represent the recurrent process of Idea->Code->Experiment->Idea->....

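My explanation: RNNs suit machine translation because translation is handled as a supervised learning problem, and RNNs are well suited to sequential data.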
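To make "suited to sequences" concrete, here is a toy sketch of a recurrent cell, assuming a scalar hidden state and a tanh nonlinearity. The weights `w_x`, `w_h`, `b` and the numeric inputs are arbitrary illustrative values, not trained parameters or real word encodings, and a real translation model is far larger than this.

```python
import math


def rnn_forward(inputs, w_x=0.5, w_h=0.8, b=0.0):
    """Run a scalar recurrent cell over a sequence and return every hidden state.

    The default weights are arbitrary illustrative values, not trained ones.
    """
    h = 0.0  # initial hidden state
    states = []
    for x in inputs:
        # The same weights are reused at every step, and h carries information
        # from earlier elements of the sequence forward to later ones.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Hypothetical numeric stand-ins for two sequences with the same items in reverse order.
seq_a = [1.0, 0.0, -1.0]
seq_b = [-1.0, 0.0, 1.0]

print(len(rnn_forward(seq_a)))                           # 3, one state per input
print(rnn_forward(seq_a)[-1] != rnn_forward(seq_b)[-1])  # True: order matters
```

The loop accepts a sequence of any length, and the final state depends on the order of the inputs, which is the property that matters for a sentence of words.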

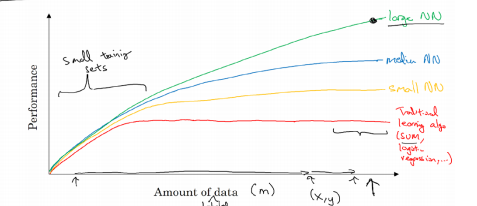

9. In this diagram which we hand-drew in lecture, what do the horizontal axis (x-axis) and vertical axis (y-axis) represent?

A. x-axis is the performance of the algorithm; y-axis (vertical axis) is the amount of data.

B. x-axis is the input to the algorithm; y-axis is outputs.

C. x-axis is the amount of data; y-axis is the size of the model you train.

D. x-axis is the amount of data; y-axis (vertical axis) is the performance of the algorithm.

My explanation:

10. Assuming the trends described in the previous question's figure are accurate (and hoping you got the axis labels right), which of the following are true? (Check all that apply.)

A. Decreasing the training set size generally does not hurt an algorithm’s performance, and it may help significantly.

B. Decreasing the size of a neural network generally does not hurt an algorithm’s performance, and it may help significantly.

C. Increasing the training set size generally does not hurt an algorithm’s performance, and it may help significantly.

D. Increasing the size of a neural network generally does not hurt an algorithm’s performance, and it may help significantly.

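My explanation: "Scale drives deep learning progress." The figure in Question 9 leads to the same conclusion: increasing either the amount of data or the size of the neural network generally does not hurt an algorithm's performance, and sometimes it helps significantly.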
