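I: Environment preparation

1. Four servers, sized according to your actual needs

A: master (192.168.0.115), 2 cores / 4 GB, the k8s master node

B: node1 (192.168.0.126), 2 cores / 8 GB

C: node2 (192.168.0.128), 2 cores / 8 GB. NFS can be installed on any node; here I use node2

D: data (192.168.0.129), infrastructure server, 2 cores / 8 GB, hosts Harbor, GitLab, MySQL, etc.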

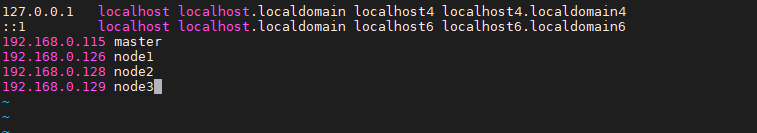

2: Change the hostname and host mappings

vi /etc/hostname    # set the hostname

vi /etc/hosts       # add the host mappings

reboot              # restart the server
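The host mappings can be written down once and appended to /etc/hosts on every machine. The sketch below is one way to do that without creating duplicates. The host names master, node1, node2 and data come from the server list in step 1; the function names are made up for illustration.

```shell
#!/bin/sh
# Print the /etc/hosts entries for the four machines listed in step 1.
hosts_entries() {
  cat <<'EOF'
192.168.0.115 master
192.168.0.126 node1
192.168.0.128 node2
192.168.0.129 data
EOF
}

# Append each entry to the target file only if that exact line is not there yet,
# so running the function twice does not create duplicates.
add_hosts_entries() {
  target="$1"
  hosts_entries | while IFS= read -r line; do
    grep -qxF "$line" "$target" 2>/dev/null || printf '%s\n' "$line" >> "$target"
  done
}

# Usage (as root, on each machine):
#   add_hosts_entries /etc/hosts
```

On systemd hosts, `hostnamectl set-hostname master` is an alternative to editing /etc/hostname by hand.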

3: Deploy the Docker and k8s environment

3.1. Install script for Docker and k8s

#!/bin/bash
# Stop and disable the firewall
systemctl stop firewalld.service
#systemctl status firewalld.service
systemctl disable firewalld
# Disable swap, now and after reboot
swapoff -a
sed -ri 's/.*swap.*/#&/' /etc/fstab
echo "vm.swappiness = 0" >> /etc/sysctl.conf
sysctl -p
# Set kernel parameters so that bridged traffic passes through iptables
# (the net.bridge.* keys only exist once the br_netfilter module is loaded)
modprobe br_netfilter
cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system
# Configure the Docker yum repository
yum -y install yum-utils device-mapper-persistent-data lvm2
yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
# Install Docker
yum -y install docker-ce-20.10.6 docker-ce-cli-20.10.6 containerd.io
# Set Docker's cgroup driver to systemd
mkdir -p /etc/docker
cat <<EOF > /etc/docker/daemon.json
{
  "registry-mirrors": [
    "https://xxx.mirror.aliyuncs.com",
    "https://docker.mirrors.ustc.edu.cn",
    "http://f1361db2.m.daocloud.io",
    "https://registry.docker-cn.com"
  ],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF
# Start Docker
systemctl daemon-reload
systemctl restart docker
systemctl enable docker
# Configure the Kubernetes yum repository (Aliyun mirror)
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
# Install kubectl, kubelet and kubeadm
yum -y makecache
yum -y install kubelet-1.21.0 kubeadm-1.21.0 kubectl-1.21.0
rpm -aq kubelet kubectl kubeadm
systemctl enable kubelet
# Install pip and docker-compose
wget https://bootstrap.pypa.io/get-pip.py && python3 get-pip.py
pip install docker-compose

Verify the installation:

docker --version

kubeadm version

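3.2. Install docker-compose

The script in 3.1 already runs steps 1 and 2; they are listed here for installing by hand.

1. Install pip: wget https://bootstrap.pypa.io/get-pip.py && python3 get-pip.py

2. Install docker-compose: pip install docker-compose

3. Check that it installed: docker-compose version

3.3. Initialize the k8s master (192.168.0.115)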

kubeadm init --apiserver-advertise-address=192.168.0.115 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.21.0 --service-cidr=10.1.0.0/16 --pod-network-cidr=10.244.0.0/16 --ignore-preflight-errors=Swap

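Parameter notes:

kubernetes-version: the version to install

pod-network-cidr: the subnet from which Pod IPs are allocated

image-repository: the image registry to pull from (pulling from Aliyun works around k8s.gcr.io being unreachable)

apiserver-advertise-address: the IP the API server binds to on this node (use it to pick an IP when the host has several network cards)

v=6: log verbosity. Adding --v=6 prints detailed output during initialization, which often reveals the cause when the deployment fails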
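The same flags can also be kept in a kubeadm configuration file, which is easier to review than a long command line. The sketch below prints such a file. It assumes the kubeadm.k8s.io/v1beta2 config API that kubeadm v1.21 uses; the function name and the output file name are illustrative. The comments show which flag each field corresponds to, including service-cidr, the virtual IP range for Services.

```shell
#!/bin/sh
# Print a kubeadm config file equivalent to the flags of the init command above.
render_kubeadm_config() {
  cat <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.0.115      # --apiserver-advertise-address
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.21.0             # --kubernetes-version
imageRepository: registry.aliyuncs.com/google_containers   # --image-repository
networking:
  serviceSubnet: 10.1.0.0/16           # --service-cidr
  podSubnet: 10.244.0.0/16             # --pod-network-cidr
EOF
}

# Usage on the master:
#   render_kubeadm_config > kubeadm-config.yaml
#   kubeadm init --config kubeadm-config.yaml --ignore-preflight-errors=Swap --v=6
```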

Common initialization errors

1: If image pulls time out on a mainland China network, pull the image with docker first and then retag it

![image](https://img-blog.csdnimg.cn/6cd92d105b234e0c9a828c9444fd89a5.png)

Fix:

docker pull coredns/coredns:1.8.0
docker tag coredns/coredns:1.8.0 registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0
# Then run init again

kubeadm init --apiserver-advertise-address=192.168.0.115 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.21.0 --service-cidr=10.1.0.0/16 --pod-network-cidr=10.244.0.0/16 --ignore-preflight-errors=Swap
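To find out ahead of time which images will fail, compare the list kubeadm needs with what is already on the machine. The sketch below does the comparison on two plain text files, so it can be tried without a cluster. The function and file names are hypothetical; `kubeadm config images list` and `docker images --format` are the commands that would produce the two inputs.

```shell
#!/bin/sh
# Print every image named in $1 (required) that is not listed in $2 (local).
# Both files hold one "repository:tag" per line.
missing_images() {
  required="$1"
  present="$2"
  while IFS= read -r image; do
    grep -qxF "$image" "$present" || printf '%s\n' "$image"
  done < "$required"
}

# Producing the two input files on the master:
#   kubeadm config images list \
#     --image-repository registry.aliyuncs.com/google_containers \
#     --kubernetes-version v1.21.0 > required.txt
#   docker images --format '{{.Repository}}:{{.Tag}}' > local.txt
#   missing_images required.txt local.txt
```

Any image the function prints has to be pulled and retagged as shown above before init will succeed.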

2: If the error below appears, run sysctl -w net.ipv4.ip_forward=1 and then run init again

![image](https://img-blog.csdnimg.cn/bbdf5003052b49d4b5a03e8d295b7ab1.png)

Initialization has succeeded when you see the output below

![image](https://img-blog.csdnimg.cn/877dcc0cf26740d2808b8469332c1a16.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl8zOTAzNDAxMg==,size_16,color_FFFFFF,t_70)

Joining the nodes (run on node1 and node2; use the command printed by your own init output)

kubeadm join 192.168.0.115:6443 --token dz7vqa.naacs77qxuidb4s3 \
    --discovery-token-ca-cert-hash sha256:b93f05d09e5336609f71a5a658b4f46f17e48c31d59409c62dde571dab47c7a4
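The token in the join command is specific to this cluster, and bootstrap tokens expire (after 24 hours by default), so a node added later needs a fresh command. A minimal sketch, assuming it runs on the master. The `run` wrapper and the `DRY_RUN` variable are illustrative helpers: they only print the command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, execute it otherwise.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Create a new bootstrap token and print the matching "kubeadm join ..." line.
print_join_command() {
  run kubeadm token create --print-join-command
}

# List the tokens that currently exist, with their expiry times.
list_tokens() {
  run kubeadm token list
}

print_join_command
```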

3.4. Configure the environment so the current user can run kubectl

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

# Run kubectl to list the nodes

kubectl get node

3.5. Configure the network

Run one of the following commands on the master; install only one network plugin.

# Calico (not recommended: it kept failing for me and I never found the cause)
kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml
# flannel (recommended)
kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

![image](https://img-blog.csdnimg.cn/51d8334e041f4be3a390f12087380763.png)
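flannel only works if the network in its manifest matches the --pod-network-cidr passed to kubeadm init (10.244.0.0/16 here). The sketch below checks a downloaded copy of the manifest before applying it. The function name and the local file name kube-flannel.yml are assumptions.

```shell
#!/bin/sh
# Succeed if the manifest's net-conf.json declares the given Pod network.
manifest_has_network() {
  manifest="$1"
  cidr="$2"
  grep -qF "\"Network\": \"$cidr\"" "$manifest"
}

# Usage on the master:
#   curl -fsSLo kube-flannel.yml https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
#   manifest_has_network kube-flannel.yml 10.244.0.0/16 && kubectl apply -f kube-flannel.yml
```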

3.6. Install ingress

Apply the following YAML file

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
# rbac.authorization.k8s.io/v1 replaces the deprecated v1beta1 (removed in k8s 1.22)
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses/status
    verbs:
      - update
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      hostNetwork: true
      # wait up to five minutes for the drain of connections
      terminationGracePeriodSeconds: 300
      serviceAccountName: nginx-ingress-serviceaccount
      nodeSelector:
        kubernetes.io/os: linux
      containers:
        - name: nginx-ingress-controller
          image: lizhenliang/nginx-ingress-controller:0.30.0
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            allowPrivilegeEscalation: true
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            # www-data -> 101
            runAsUser: 101
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
---
apiVersion: v1
kind: LimitRange
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  limits:
    - min:
        memory: 90Mi
        cpu: 100m
      type: Container

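3.7. Install the k8s dashboard (optional; look up a guide yourself)

4: Deploy the supporting services

All of the following steps are performed on one server, the data server (D)

4.1. Install GitLab

# Create the GitLab install directory; data is persisted here
mkdir -p /usr/local/gitlab
# Enter the directory
cd /usr/local/gitlab
# Install with docker
docker run -d \
  --name gitlab \
  -p 8443:443 \
  -p 9999:80 \
  -p 9998:22 \
  -v $PWD/config:/etc/gitlab \
  -v $PWD/logs:/var/log/gitlab \
  -v $PWD/data:/var/opt/gitlab \
  -v /etc/localtime:/etc/localtime \
  gitlab/gitlab-ce:latest

The first start is slow, roughly 2-3 minutes

URL: http://IP:9999

On the first visit you set the administrator password and then log in. The default administrator user name is root and the password is the one you just set.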

4.2. Install Harbor with the chart repository enabled

1: Download the offline installer. If the download times out, obtain the package another way

# Download
wget https://github.com/goharbor/harbor/releases/download/v2.2.3/harbor-offline-installer-v2.2.3.tgz
# Unpack
tar -zxvf harbor-offline-installer-v2.2.3.tgz
cd harbor
# Rename the template
mv harbor.yml.tmpl harbor.yml
# Set the host IP: edit harbor.yml so that it contains the hostname line below
vi harbor.yml
#   hostname: 192.168.0.129
# Generate the configuration
./prepare
# Install
./install.sh --with-chartmuseum
# Check the containers
docker-compose ps

* Note: if you have a certificate, configure it in harbor.yml. If you are not using one, comment out the https block shown below

![image](https://img-blog.csdnimg.cn/a62944a9ca8a4bd497fcf45ba4e43830.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl8zOTAzNDAxMg==,size_16,color_FFFFFF,t_70)

After a successful install, visit http://ip. The default account is admin and the password is Harbor12345
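The hostname edit can also be scripted instead of done in vi. A small sketch, assuming harbor.yml contains a top-level `hostname:` line as the template does; the function name is illustrative, and the value is expected to be an IP or host name without slashes.

```shell
#!/bin/sh
# Rewrite the top-level "hostname:" line of a Harbor config file.
set_harbor_hostname() {
  file="$1"
  host="$2"
  sed "s/^hostname:.*/hostname: $host/" "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}

# Usage in the harbor directory, before running ./prepare:
#   set_harbor_hostname harbor.yml 192.168.0.129
```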

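4.2.1. Configure Docker to trust the registry

After Harbor is installed, Docker must be told about the private registry. Add "insecure-registries": ["192.168.0.129"] to daemon.json on every machine that pulls from it. This is required; without it the pods cannot pull their images

# vi /etc/docker/daemon.json

# systemctl daemon-reload

# systemctl restart docker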
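Put together with the settings from section 3.1, the resulting daemon.json could look like the output of the sketch below. The function name is illustrative, and the registry address passed in is the Harbor host from this setup.

```shell
#!/bin/sh
# Print a daemon.json that keeps the settings from section 3.1 and
# additionally trusts the given registry over plain HTTP.
render_daemon_json() {
  registry="$1"
  cat <<EOF
{
  "registry-mirrors": [
    "https://xxx.mirror.aliyuncs.com",
    "https://docker.mirrors.ustc.edu.cn",
    "http://f1361db2.m.daocloud.io",
    "https://registry.docker-cn.com"
  ],
  "insecure-registries": ["$registry"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF
}

# Usage (as root, on every machine that pulls from Harbor):
#   render_daemon_json 192.168.0.129 > /etc/docker/daemon.json
#   systemctl daemon-reload && systemctl restart docker
```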

4.3 Install MySQL

docker run -d --name db -p 3306:3306 -v /usr/local/mysql:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=root mysql --character-set-server=utf8

4.4. Install Helm v3 (on the master node)

4.4.1. Install the Helm tool

# wget https://get.helm.sh/helm-v3.0.0-linux-amd64.tar.gz

# tar zxvf helm-v3.0.0-linux-amd64.tar.gz

# mv linux-amd64/helm /usr/bin/

4.4.2 Configure chart repositories hosted in China (optional)

# helm repo add stable http://mirror.azure.cn/kubernetes/charts

# helm repo add aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

# helm repo list

4.4.3 Install the push plugin

# helm plugin install https://github.com/chartmuseum/helm-push

If the download fails, you can also unpack the package from the course materials directly:

# tar zxvf helm-push_0.7.1_linux_amd64.tar.gz

# mkdir -p /root/.local/share/helm/plugins/helm-push

# chmod +x bin/*

# mv bin plugin.yaml /root/.local/share/helm/plugins/helm-push

4.4.4 Add the repo

# helm repo add --username admin --password Harbor12345 myrepo http://192.168.0.129/chartrepo/library

4.4.5 Push and install a chart

# helm push mysql-1.4.0.tgz --username=admin --password=Harbor12345 http://192.168.0.129/chartrepo/library

# helm repo update

# helm install web --version 1.4.0 myrepo/mysql

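5: Automatic PV provisioning in k8s: there are plenty of reference articles online

6: Continuous integration with Jenkins

The default plugin source is hosted overseas and most networks here cannot download from it reliably, so switch to a mirror in China:

cd jenkins_home/updates
sed -i 's/http:\/\/updates.jenkins-ci.org\/download/https:\/\/mirrors.tuna.tsinghua.edu.cn\/jenkins/g' default.json && \
sed -i 's/http:\/\/www.google.com/https:\/\/www.baidu.com/g' default.json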
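The two substitutions are easier to read with a different sed delimiter, and they can be tried on a copy of the file first. The sketch below applies the same replacements as the commands above; the function name is made up, and it writes through a temporary file instead of relying on `sed -i`.

```shell
#!/bin/sh
# Point the plugin download URLs and the connectivity check URL
# in an update-center JSON file at mirrors reachable from China.
patch_update_center() {
  file="$1"
  sed -e 's|http://updates\.jenkins-ci\.org/download|https://mirrors.tuna.tsinghua.edu.cn/jenkins|g' \
      -e 's|http://www\.google\.com|https://www.baidu.com|g' \
      "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}

# Usage:
#   cd jenkins_home/updates && patch_update_center default.json
```

If your default.json uses a different base URL than the one in the pattern, the substitution leaves the file unchanged, so check a few entries afterwards.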

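6.1 Install the plugins: Pipeline and Kubernetes (dynamic slaves)

Configure the Kubernetes plugin: Plugin Manager, then System Configuration, at the very bottom of the page

6.2 Deploy the Jenkins master and slaves

Configure the Kubernetes URL. Because Jenkins is deployed inside the k8s cluster, the API server can be reached by its service name: https://kubernetes.default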


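Configure the Jenkins URL. For the same reason, Jenkins can be reached by its service name: http://jenkins.default

6.3 Build the slave image

Note that the image should be built to match your own build logic

Environment: Maven, JDK, Docker, Helm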

FROM centos:8
LABEL maintainer="lizhenliang"
# Note: centos:8 is end-of-life, so its default yum repositories may no longer resolve
RUN yum install -y java-11-openjdk* maven curl git libtool-ltdl-devel && \
    yum clean all && \
    rm -rf /var/cache/yum/* && \
    mkdir -p /usr/share/jenkins
COPY slave.jar /usr/share/jenkins/slave.jar
COPY jenkins-slave /usr/bin/jenkins-slave
COPY settings.xml /etc/maven/settings.xml
RUN chmod +x /usr/bin/jenkins-slave
COPY helm kubectl /usr/bin/
ENTRYPOINT ["jenkins-slave"]
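The Dockerfile copies five files from the build context, and the build fails if any of them is missing. The sketch below lists the ones that are absent before you run docker build; the function name is illustrative, and the file names are taken from the COPY lines above.

```shell
#!/bin/sh
# Print the files that the Dockerfile COPYs but that are missing from the build context.
missing_context_files() {
  context="${1:-.}"
  for f in slave.jar jenkins-slave settings.xml helm kubectl; do
    [ -e "$context/$f" ] || printf '%s\n' "$f"
  done
}

# Usage before building:
#   missing_context_files .    # no output means every COPY source is present
```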


7: Pipeline script

7.1 Required plugins

Git Parameter: fetches the Git branches dynamically

Git

Pipeline

Config File Provider

Kubernetes

Extended Choice Parameter: multi-select parameters
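If Jenkins runs from an official jenkins/jenkins image, the plugins can also be installed from a list instead of through the UI. The sketch below prints the plugin IDs that, to my knowledge, correspond to the display names above (for example, `workflow-aggregator` is the ID of the Pipeline plugin); verify them against the plugin site before relying on the list. The function and file names are illustrative.

```shell
#!/bin/sh
# Print one plugin ID per line for the plugins named in 7.1.
required_plugins() {
  cat <<'EOF'
git-parameter
git
workflow-aggregator
config-file-provider
kubernetes
extended-choice-parameter
EOF
}

# Usage inside an official jenkins/jenkins image:
#   required_plugins > plugins.txt
#   jenkins-plugin-cli --plugin-file plugins.txt
```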
