This example is based on Elasticsearch 6.7 with the aliyun-knn plugin installed. The image feature vectors are designed to be 512-dimensional.

The aliyun-knn plugin is not available on a self-hosted ES cluster. If you self-host, I recommend ES 7.x with the fast-elasticsearch-vector-scoring plugin installed (https://github.com/lior-k/fast-elasticsearch-vector-scoring/).

Since my Python skills are limited, the image feature extraction in this article uses the VGGNet library from yongyuan.name. Many thanks to the author!

1. ES Design

1.1 Index Structure

```
# Create an image index
PUT images_v2
{
  "aliases": {
    "images": {}
  },
  "settings": {
    "index.codec": "proxima",
    "index.vector.algorithm": "hnsw",
    "index.number_of_replicas": 1,
    "index.number_of_shards": 3
  },
  "mappings": {
    "_doc": {
      "properties": {
        "feature": {
          "type": "proxima_vector",
          "dim": 512
        },
        "relation_id": {
          "type": "keyword"
        },
        "image_path": {
          "type": "keyword"
        }
      }
    }
  }
}
```

1.2 DSL Query
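The search script in section 4 sends a query of this shape from Python. It can help to build the body in one place so the field names and paging stay consistent.

Here is a minimal sketch. `build_hnsw_query` is a hypothetical helper, and the field names `feature`, `relation_id` and `image_path` are taken from the mapping above. As I read the query below, the inner `hnsw.size` is the number of neighbours the plugin recalls and `ef` is its HNSW search-breadth parameter, while the outer `from`/`size` page over the final hits. I have not verified the plugin's exact semantics, so treat those descriptions as assumptions.

```python
from typing import List, Optional


def build_hnsw_query(vector: List[float], k: int = 3, ef: int = 10,
                     page_size: int = 20,
                     collapse_field: Optional[str] = "relation_id") -> dict:
    """Hypothetical helper: build an aliyun-knn `hnsw` search body like the DSL in this section."""
    if k < 1:
        raise ValueError("k must be >= 1")
    body = {
        "query": {
            "hnsw": {
                "feature": {              # vector field name from the mapping
                    "vector": list(vector),
                    "size": k,            # neighbours requested from the plugin (assumed meaning)
                    "ef": ef,             # HNSW search breadth (assumed meaning)
                }
            }
        },
        "from": 0,
        "size": page_size,                # page size of the final result list
        "sort": [{"_score": {"order": "desc"}}],
        "_source": {"includes": ["relation_id", "image_path"]},
    }
    if collapse_field:
        # Keep only one hit per related record
        body["collapse"] = {"field": collapse_field}
    return body
```

For example, `build_hnsw_query(feature, k=5, ef=10, page_size=40, collapse_field=None)` yields the same parameters as the body used in the search script.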

```
GET images/_search
{
  "query": {
    "hnsw": {
      "feature": {
        "vector": [255,....255],
        "size": 3,
        "ef": 1
      }
    }
  },
  "from": 0,
  "size": 20,
  "sort": [
    {
      "_score": {
        "order": "desc"
      }
    }
  ],
  "collapse": {
    "field": "relation_id"
  },
  "_source": {
    "includes": [
      "relation_id",
      "image_path"
    ]
  }
}
```

2. Image Features
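For a 224×224 input, VGG16 loaded with `include_top=False` ends in a 7×7×512 convolutional feature map. With `pooling='max'`, Keras takes the maximum over the 7×7 grid, which is where the 512 in the mapping's `dim` comes from. The extractor then divides that vector by its L2 norm.

Below is a small NumPy sketch of those two steps. It runs on a random stand-in array, not real VGG16 output, and the helper names are illustrative. It also checks a useful identity: for unit vectors, squared Euclidean distance equals 2 − 2 × cosine similarity. So on normalized features, ranking by either measure gives the same order.

```python
import numpy as np


def global_max_pool(feature_map: np.ndarray) -> np.ndarray:
    """Collapse an (H, W, C) feature map to a C-dim vector by taking the max over H and W."""
    return feature_map.max(axis=(0, 1))


def l2_normalize(vec: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Scale vec to unit length; eps avoids division by zero for an all-zero vector."""
    norm = np.linalg.norm(vec)
    return vec / max(norm, eps)


# Random stand-in for VGG16's final 7x7x512 feature map (illustrative data only)
rng = np.random.default_rng(0)
fmap = rng.random((7, 7, 512)).astype(np.float32)
feat = l2_normalize(global_max_pool(fmap))
print(feat.shape)  # (512,)

# For unit vectors: ||a - b||^2 == 2 - 2 * (a . b)
a = l2_normalize(rng.random(512))
b = l2_normalize(rng.random(512))
print(np.isclose(np.sum((a - b) ** 2), 2 - 2 * np.dot(a, b)))  # True
```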

extract_cnn_vgg16_keras.py

```python
# -*- coding: utf-8 -*-
# Author: yongyuan.name
import numpy as np
from numpy import linalg as LA
from keras.applications.vgg16 import VGG16, preprocess_input
from keras.preprocessing import image
from PIL import ImageFile

# Allow PIL to open truncated (partially downloaded) image files
ImageFile.LOAD_TRUNCATED_IMAGES = True


class VGGNet:
    def __init__(self):
        # weights: 'imagenet'
        # pooling: 'max' or 'avg'
        # input_shape: (height, width, 3), height and width should be >= 48
        self.input_shape = (224, 224, 3)
        self.weight = 'imagenet'
        self.pooling = 'max'
        # include_top=False drops the fully connected classifier;
        # with global max pooling the output is a 512-dimensional vector
        self.model = VGG16(weights=self.weight,
                           input_shape=self.input_shape,
                           pooling=self.pooling,
                           include_top=False)
        # One dummy prediction so the model is fully built before real requests arrive
        self.model.predict(np.zeros((1, 224, 224, 3)))

    def extract_feat(self, img_path):
        """
        Use the VGG16 model to extract features.
        Output: an L2-normalized feature vector.
        """
        img = image.load_img(img_path, target_size=(self.input_shape[0], self.input_shape[1]))
        img = image.img_to_array(img)
        img = np.expand_dims(img, axis=0)
        img = preprocess_input(img)
        feat = self.model.predict(img)
        norm_feat = feat[0] / LA.norm(feat[0])
        return norm_feat
```

```python
# Get the features of an image
from extract_cnn_vgg16_keras import VGGNet

model = VGGNet()
file_path = "./demo.jpg"
queryVec = model.extract_feat(file_path)
feature = queryVec.tolist()
```

3. Writing Image Features into ES
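The `train.py` script in this section collects a whole page of bulk actions in a list before calling `helpers.streaming_bulk`. Because `streaming_bulk` accepts any iterable of actions, a generator is a possible variant. It builds actions lazily and drops vectors whose length does not match the mapping's `dim` (a mismatched vector would otherwise only fail at index time).

This is a minimal sketch under stated assumptions. `iter_actions`, `FEATURE_DIM` and the `extract` callable (for example, a wrapper that downloads the photo and calls `model.extract_feat`) are hypothetical names, and rows are assumed to be `(id, relation_id, photo)` tuples as returned by `get_data`.

```python
import math
from typing import Callable, Iterable, Iterator, List, Sequence, Tuple

FEATURE_DIM = 512  # hypothetical constant; must match "dim" in the index mapping


def iter_actions(rows: Iterable[Tuple[int, str, str]],
                 extract: Callable[[str], Sequence[float]],
                 index_name: str = "images") -> Iterator[dict]:
    """Yield bulk 'index' actions one at a time, skipping rows with unusable features."""
    for doc_id, relation_id, photo in rows:
        try:
            feature: List[float] = [float(v) for v in extract(photo)]
        except Exception as exc:  # e.g. failed download or unreadable image inside extract()
            print("id:{0} feature extraction failed: {1}".format(doc_id, exc))
            continue
        if len(feature) != FEATURE_DIM or not all(math.isfinite(v) for v in feature):
            print("id:{0} has an invalid feature vector, skipped".format(doc_id))
            continue
        yield {
            "_op_type": "index",
            "_index": index_name,
            "_type": "_doc",
            "_id": str(doc_id),
            "_source": {"relation_id": relation_id,
                        "feature": feature,
                        "image_path": photo},
        }
```

Usage would look like `helpers.streaming_bulk(es, iter_actions(rows, extract), raise_on_error=False)`. Only the chunk currently being sent is held in memory.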

helper.py

```python
import re
import urllib.request


def strip(path):
    """
    Clean a file or folder name by removing characters
    that are illegal in Windows file names.
    :param path:
    :return:
    """
    path = re.sub(r'[?\\*|"<>:/]', '', str(path))
    return path


def getfilename(url):
    """
    Get the file name (last path segment) from a URL.
    :param url:
    :return:
    """
    filename = url.split('/')[-1]
    filename = strip(filename)
    return filename


def urllib_download(url, filename):
    """
    Download url and save it to filename.
    :param url:
    :param filename:
    :return:
    """
    return urllib.request.urlretrieve(url, filename)
```

train.py

```python
# coding=utf-8
import os

import mysql.connector
from elasticsearch5 import Elasticsearch, helpers

from helper import urllib_download, getfilename
from extract_cnn_vgg16_keras import VGGNet

model = VGGNet()

http_auth = ("elastic", "123455")
es = Elasticsearch("http://127.0.0.1:9200", http_auth=http_auth)

mydb = mysql.connector.connect(
    host="127.0.0.1",   # database host
    user="root",        # database user name
    passwd="123456",    # database password
    database="images"
)
mycursor = mydb.cursor()

image_path = "./images/"            # local folder for downloaded images
os.makedirs(image_path, exist_ok=True)


def get_data(page=1):
    page_size = 20
    offset = (page - 1) * page_size
    sql = "SELECT id, relation_id, photo FROM images LIMIT %s, %s"
    mycursor.execute(sql, (offset, page_size))
    return mycursor.fetchall()


def train_image_feature(myresult):
    indexName = "images"
    photo_path = "http://your-domain/{0}"   # replace with your image host
    actions = []
    for x in myresult:
        doc_id = str(x[0])
        relation_id = x[1]
        # photo = x[2].decode(encoding="utf-8")
        photo = x[2]
        full_photo = photo_path.format(photo)
        filename = image_path + getfilename(full_photo)
        if not os.path.exists(filename):
            try:
                urllib_download(full_photo, filename)
            except Exception:
                print("Image {1} for id {0} could not be downloaded".format(doc_id, full_photo))
                continue

        if not os.path.exists(filename):
            continue

        try:
            feature = model.extract_feat(filename).tolist()
            action = {
                "_op_type": "index",
                "_index": indexName,
                "_type": "_doc",
                "_id": doc_id,
                "_source": {
                    "relation_id": relation_id,
                    "feature": feature,
                    "image_path": photo
                }
            }
            actions.append(action)
        except Exception:
            print("Could not extract features from image {1} for id {0}".format(doc_id, full_photo))
            continue

    succeed_num = 0
    # raise_on_error=False: failed documents are reported here instead of raising
    for ok, response in helpers.streaming_bulk(es, actions, raise_on_error=False):
        if not ok:
            print(response)
        else:
            succeed_num += 1
    print("Indexed {0} documents in this batch".format(succeed_num))
    es.indices.refresh(index=indexName)


page = 1
while True:
    print("Processing page {0}".format(page))
    myresult = get_data(page=page)
    if not myresult:
        print("No more data, exiting")
        break
    train_image_feature(myresult)
    page += 1
```

4. Searching Images
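The search code in this section leaves `collapse` commented out. It also notes that low-scoring hits should be filtered out: in the author's tests, scores of 0.65 and above matched well. Both steps can be done client-side.

Here is a minimal sketch, assuming the standard search response shape (`hits.hits[]`, each with `_score` and `_source`). `filter_hits` and `MIN_SCORE` are hypothetical names. The 0.65 cutoff is specific to the author's setup (VGG16 features with this plugin's scoring) and would need re-tuning for other models.

```python
from typing import Any, Dict, List

MIN_SCORE = 0.65  # empirical cutoff from the author's tests; tune for your own data


def filter_hits(res: Dict[str, Any], min_score: float = MIN_SCORE,
                one_per_relation: bool = True) -> List[Dict[str, Any]]:
    """Keep hits scoring >= min_score, optionally only the best hit per relation_id."""
    kept: List[Dict[str, Any]] = []
    seen = set()
    # Sort by score, highest first, so the first hit seen per relation_id is its best one
    hits = sorted(res.get("hits", {}).get("hits", []),
                  key=lambda h: h.get("_score") or 0.0, reverse=True)
    for hit in hits:
        score = hit.get("_score") or 0.0
        if score < min_score:
            break  # list is sorted, so no later hit can pass the threshold
        source = hit.get("_source", {})
        rel = source.get("relation_id")
        if one_per_relation:
            if rel in seen:
                continue
            seen.add(rel)
        kept.append({"relation_id": rel,
                     "image_path": source.get("image_path"),
                     "score": score})
    return kept
```

Deduplicating on the client rather than with `collapse` lets the threshold apply before grouping. Server-side `collapse` remains the cheaper option when result sets are large.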

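```python
import os
import time

from flask import Flask, request, jsonify
from elasticsearch5 import Elasticsearch

from extract_cnn_vgg16_keras import VGGNet

app = Flask(__name__)
model = VGGNet()

http_auth = ("elastic", "123455")
es = Elasticsearch("http://127.0.0.1:9200", http_auth=http_auth)


# Assumption: this code runs inside a Flask upload handler (it uses Flask's request.files);
# the route path "/search" is hypothetical
@app.route("/search", methods=["POST"])
def search_image():
    # Save the uploaded image
    upload_image_path = "./runtime/"
    os.makedirs(upload_image_path, exist_ok=True)
    upload_image = request.files.get("image")
    upload_image_type = upload_image.content_type.split('/')[-1]
    file_name = str(int(time.time())) + '.' + upload_image_type
    file_path = upload_image_path + file_name
    upload_image.save(file_path)

    # Compute the image's feature vector
    queryVec = model.extract_feat(file_path)
    feature = queryVec.tolist()

    # Delete the uploaded image
    os.remove(file_path)

    # Search ES by feature vector
    body = {
        "query": {
            "hnsw": {
                "feature": {
                    "vector": feature,
                    "size": 5,
                    "ef": 10
                }
            }
        },
        # "collapse": {
        #     "field": "relation_id"
        # },
        "_source": {"includes": ["relation_id", "image_path"]},
        "from": 0,
        "size": 40
    }
    indexName = "images"
    res = es.search(index=indexName, body=body)
    # Filter out low-scoring hits according to your own needs.
    # In my tests, hits scoring 0.65 or higher matched well.
    return jsonify(res["hits"]["hits"])
```

5. Dependencies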

- mysql_connector_repackaged
- elasticsearch
- Pillow
- tensorflow
- requests
- pandas
- Keras
- numpy

End of the main text.

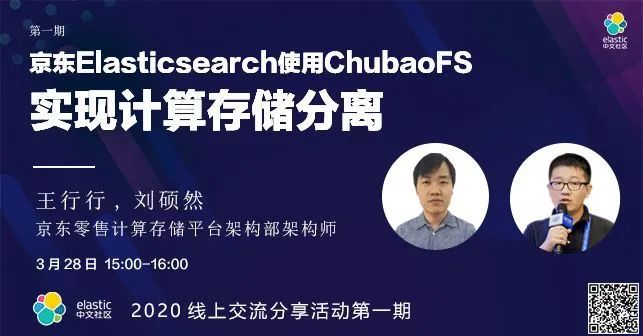

Upcoming Events

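The Elastic Chinese Community's 2020 technical sharing sessions have started! This time they are held online. In the first session, two architects from JD Retail's computing and storage platform will share how they separated storage from compute on top of Elasticsearch. Storage-compute separation is one of the hottest topics in the industry right now, so why not come and learn more?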

The guest hosts for this session are Medcl, founder of the Elastic Chinese Community, and Wu Bin, currently an engineer at Google. Go register now!

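Starting next week, there will be a live online session every week, so stay tuned! Preview of the second session: the architecture of FastIndex, Didi's system for fast offline index building.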

Let's interact!

Did you like this article?

Leave us a comment; every comment gets featured!

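The Elastic community WeChat account accepts submissions on an ongoing basis. If you have written about Elastic technology, you are welcome to submit it here so we can all improve together! To submit, add WeChat: medcl123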

Recruitment

Job board

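The community job board is a new experiment to help community members find jobs they love and help companies find the talent they need, bringing employers and candidates together. Companies that are hiring and community members who are job hunting can contact WeChat medcl123 to post job openings or share their résumés.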

Follow us

Elastic Chinese Community WeChat official account (elastic-cn)

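Bringing you the latest news from the Elastic community, curated technical articles, highlighted discussions, documentation, translations, and release announcements.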
