Background: data exported from MySQL and saved as CSV files.

Two files were exported: record1.csv, which has a CSV header row, and record0.csv, which has no header row.

Try 1

Create the table with a plain CREATE TABLE statement (no ROW FORMAT clause) and load the file directly:

```python
from pyspark.sql import SparkSession

# Hive support is required for Hive DDL and LOAD DATA statements
spark = SparkSession.builder.enableHiveSupport().getOrCreate()

sql = '''
CREATE TABLE IF NOT EXISTS default.records
(
exer_recore_id BIGINT,
user_id int,
channel tinyint,
item_id int,
question_id int,
`type` tinyint,
question_num int,
orgin tinyint,
orgin_id int,
cate_id int,
is_hf tinyint,
user_answer string,
correct_answer string,
is_correct tinyint comment "correct or not",
dutration int,
record_id int,
subject_1 int,
subject_2 int,
subject_3 int,
status tinyint,
is_signed tinyint,
create_time int,
practice_level_1 string,
practice_level_2 string,
practice_level_3 string,
update_time int
)
comment "exercise records"
'''
spark.sql(sql)  # create the table

# Load the headerless file into the table
spark.sql("LOAD DATA LOCAL INPATH '*/Desktop/record0.csv' OVERWRITE INTO TABLE records")

df = spark.sql("select exer_recore_id, user_id, is_correct from records")
df.show()
df.printSchema()
```

Output:

```
+--------------+-------+----------+
|exer_recore_id|user_id|is_correct|
+--------------+-------+----------+
|          null|   null|      null|
|          null|   null|      null|
|          null|   null|      null|
|          null|   null|      null|
|          null|   null|      null|
```

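Import failed: every value is null. Without a ROW FORMAT clause, a Hive text table splits fields on its default delimiter `\001` (Ctrl-A), not on commas, so each whole line ends up in the first column, cannot be parsed as a BIGINT, and the remaining columns have nothing to read.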
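A minimal pure-Python sketch of that failure mode, assuming (as the output after Try 3 suggests) that each line of the file wraps its fields in double quotes. The helper `parse_like_default_text_table` is hypothetical; it only mimics the split-then-cast behaviour and is not Hive's actual SerDe code.

```python
# Hive's default field delimiter for text tables is '\x01' (Ctrl-A), not ','.
DEFAULT_FIELD_DELIM = "\x01"


def parse_like_default_text_table(line, column_types, delim=DEFAULT_FIELD_DELIM):
    """Hypothetical helper: split `line` on `delim`, then cast each field.

    Missing fields and values that fail to cast become None (a NULL).
    """
    fields = line.split(delim)
    row = []
    for i, cast in enumerate(column_types):
        if i >= len(fields):
            row.append(None)  # nothing left to read for this column
            continue
        try:
            row.append(cast(fields[i]))
        except ValueError:
            row.append(None)  # e.g. the whole CSV line is not a valid integer
    return row


# Illustrative line, built from values that appear later in this post
line = '"1001110000021295","11","0"'
print(line.split(DEFAULT_FIELD_DELIM))  # one field: the entire line
print(parse_like_default_text_table(line, [int, int, int]))  # [None, None, None]
```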

Try 2

Add a field delimiter to the CREATE TABLE statement, right after the table comment:

```sql
comment "exercise records"
ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
```

Result: still failed. Even with the CSV delimiter ',' declared, the import does not work.

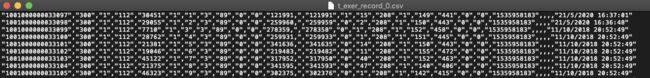

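Viewing the file as plain text reveals the cause (see the figure): every field is wrapped in double quotes, and a plain comma split does not remove them.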
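The same idea for Try 2, as a hypothetical sketch: `ROW FORMAT DELIMITED FIELDS TERMINATED BY ','` is a plain split with no notion of quoting, so the double quotes stay attached to every value and the int columns still cannot be parsed. The helper `parse_delimited` and the sample line are illustrative only.

```python
def parse_delimited(line, column_types, delim=","):
    """Hypothetical helper mimicking a plain delimited text table:
    split on `delim` (no quote handling), cast each field, NULL (None) on failure."""
    row = []
    for field, cast in zip(line.split(delim), column_types):
        try:
            row.append(cast(field))
        except ValueError:
            row.append(None)  # '"11"' is not a valid int, so the value becomes NULL
    return row


line = '"1001110000021295","11","0","109"'
print(line.split(","))  # ['"1001110000021295"', '"11"', '"0"', '"109"']
print(parse_delimited(line, [int, int, int, int]))  # [None, None, None, None]
```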

Try 3

Switch to `OpenCSVSerde`:

```sql
comment "exercise records"
ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde'
WITH SERDEPROPERTIES (
"separatorChar" = ",",
"quoteChar" = "'"
)
```

Success: the data now loads.

Next problem: the header row should not be imported.

Add the table property `tblproperties("skip.header.line.count"="1")`; the value "1" means the first line is skipped.

```sql
comment "exercise records"
ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde'
WITH SERDEPROPERTIES (
"separatorChar" = ",",
"quoteChar" = "'"
)
tblproperties("skip.header.line.count"="1")
```

Solved.
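Python's `csv` module offers a rough analogue of `skip.header.line.count`, shown here as a hedged sketch with made-up samples and a hypothetical helper `read_skipping_header`. The property skips lines unconditionally, so it belongs on a table loaded from record1.csv (which has a header); on a table loaded from the headerless record0.csv it would silently drop the first data row. Note that `csv.reader` skips *records*, which equal lines only when the header contains no embedded newlines.

```python
import csv
import io


def read_skipping_header(text, skip_lines=1):
    """Hypothetical helper: discard the first `skip_lines` records, parse the rest."""
    reader = csv.reader(io.StringIO(text))
    for _ in range(skip_lines):
        next(reader, None)  # drop header record(s); None avoids StopIteration on empty input
    return list(reader)


with_header = "exer_recore_id,user_id,is_correct\n1001110000021295,11,0\n1001110000021296,11,0\n"
without_header = "1001110000021295,11,0\n1001110000021296,11,0\n"

print(read_skipping_header(with_header))     # header dropped, both data rows kept
print(read_skipping_header(without_header))  # first data row is lost
```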

Problem again.

Carelessness loses Jingzhou, as the saying goes. Here is the output from just now:

```
+------------------+-------+----------+---------+
|    exer_recore_id|user_id|is_correct|record_id|
+------------------+-------+----------+---------+
|"1001110000021295"|   "11"|       "0"|    "109"|
|"1001110000021296"|   "11"|       "0"|    "109"|
|"1001110000021297"|   "11"|       "1"|    "109"|

root
 |-- exer_recore_id: string (nullable = true)
 |-- user_id: string (nullable = true)
 |-- is_correct: string (nullable = true)
 |-- record_id: string (nullable = true)
```

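Every column is a string, and the values still carry the double quotes. Two things are going on: the quoteChar was set to a single quote while the file quotes its fields with double quotes, so the double quotes are kept as ordinary characters; and OpenCSVSerde treats all columns as strings regardless of the types declared in the DDL.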
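A small reproduction with Python's `csv` module (not Hive itself, so only an analogy; the sample line is illustrative): with the quote character set to a single quote, the double quotes in the data are just ordinary characters, and every parsed value is a string, much like the OpenCSVSerde output above.

```python
import csv
import io

raw = '"1001110000021295","11","0","109"\n'

# Same setting as Try 3: quotechar is a single quote, but the file uses double quotes
row = next(csv.reader(io.StringIO(raw), delimiter=",", quotechar="'"))
print(row)                                      # ['"1001110000021295"', '"11"', '"0"', '"109"']
print({type(value).__name__ for value in row})  # {'str'}
```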

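The fix: use the double quote as the quote character and the backslash as the escape character (the backslashes are doubled because this text sits inside the Python `'''` string passed to `spark.sql`):

```sql
ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde'
WITH SERDEPROPERTIES (
"separatorChar" = ",",
"quoteChar" = "\\"",
"escapeChar" = "\\\\"
)
tblproperties("skip.header.line.count"="1")
```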

In other words, the quote character in this file is `"`, not `'`.
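The same `csv` analogy with the corrected settings: `quotechar='"'` strips the surrounding quotes, and `escapechar='\\'` lets a backslash protect a literal quote inside a field. The second sample line is made up purely to exercise the escape character; this is Python's parser, used only to illustrate what the SerDe properties mean.

```python
import csv
import io

raw = (
    '"1001110000021295","11","0","109"\n'
    '"say \\"hi\\"","1"\n'  # hypothetical field containing escaped quotes
)

# Mirrors separatorChar=",", quoteChar='"', escapeChar="\\"
rows = list(csv.reader(io.StringIO(raw), delimiter=",", quotechar='"', escapechar="\\"))
print(rows[0])  # ['1001110000021295', '11', '0', '109']
print(rows[1])  # ['say "hi"', '1']
```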

Output:

```
+----------------+-------+----------+---------+
|  exer_recore_id|user_id|is_correct|record_id|
+----------------+-------+----------+---------+
|1001110000021295|     11|         0|      109|
|1001110000021296|     11|         0|      109|
```
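Even with the quotes gone, OpenCSVSerde still exposes every column as a string, so it is usually worth casting explicitly, for example `CAST(user_id AS INT)` in the query. As a language-neutral illustration, here is a pure-Python sketch that applies the DDL's types to one row; `COLUMN_TYPES` and `cast_row` are hypothetical names, and treating an empty string as NULL is an assumption.

```python
# Hypothetical column -> Python type map, following the types in the CREATE TABLE statement
COLUMN_TYPES = {
    "exer_recore_id": int,  # BIGINT
    "user_id": int,         # int
    "is_correct": int,      # tinyint
    "record_id": int,       # int
}


def cast_row(row):
    """Convert the string values of one row to the declared types.

    Unknown columns stay strings; empty strings become None (assumed to mean NULL).
    """
    typed = {}
    for name, value in row.items():
        cast = COLUMN_TYPES.get(name, str)
        typed[name] = None if value == "" else cast(value)
    return typed


row = {"exer_recore_id": "1001110000021295", "user_id": "11", "is_correct": "0", "record_id": "109"}
print(cast_row(row))
# {'exer_recore_id': 1001110000021295, 'user_id': 11, 'is_correct': 0, 'record_id': 109}
```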
