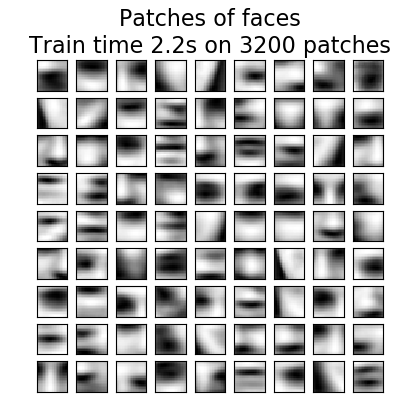

本示例使用大量的人脸数据集来学习一组构成人脸的20 x 20图像块。

从编程的角度上来看,这是很有趣,因为它展示了如何使用scikit-learn的在线学习API来按块(chunks)处理非常大的数据集。学习的方式是一次加载一张图像,并从该图像中随机抽取50个色块,一旦我们累积了500个这样的色块(使用10张图像),就可以运行KMeans在线学习对象MiniBatchKMeans的partial_fit

方法。

MiniBatchKMeans上的详细设置使我们能够看到,在连续调用部分训练(partial-fit)过程中重新分配了一些聚类,这是因为它们代表的块数量太少了,最好随机选择一个的新聚类。

Learning the dictionary...Partial fit of 100 out of 2400Partial fit of 200 out of 2400[MiniBatchKMeans] Reassigning 16 cluster centers.Partial fit of 300 out of 2400Partial fit of 400 out of 2400Partial fit of 500 out of 2400Partial fit of 600 out of 2400Partial fit of 700 out of 2400Partial fit of 800 out of 2400Partial fit of 900 out of 2400Partial fit of 1000 out of 2400Partial fit of 1100 out of 2400Partial fit of 1200 out of 2400Partial fit of 1300 out of 2400Partial fit of 1400 out of 2400Partial fit of 1500 out of 2400Partial fit of 1600 out of 2400Partial fit of 1700 out of 2400Partial fit of 1800 out of 2400Partial fit of 1900 out of 2400Partial fit of 2000 out of 2400Partial fit of 2100 out of 2400Partial fit of 2200 out of 2400Partial fit of 2300 out of 2400Partial fit of 2400 out of 2400done in 2.16s.print(__doc__)import timeimport matplotlib.pyplot as pltimport numpy as npfrom sklearn import datasetsfrom sklearn.cluster import MiniBatchKMeansfrom sklearn.feature_extraction.image import extract_patches_2dfaces = datasets.fetch_olivetti_faces()# ############################################################################## 学习图像字典print('Learning the dictionary... ')rng = np.random.RandomState(0)kmeans = MiniBatchKMeans(n_clusters=81, random_state=rng, verbose=True)patch_size = (20, 20)buffer = []t0 = time.time()# 在线学习部分:在整个数据集上循环6次index = 0for _ in range(6): for img in faces.images: data = extract_patches_2d(img, patch_size, max_patches=50, random_state=rng) data = np.reshape(data, (len(data), -1)) buffer.append(data) index += 1 if index % 10 == 0: data = np.concatenate(buffer, axis=0) data -= np.mean(data, axis=0) data /= np.std(data, axis=0) kmeans.partial_fit(data) buffer = [] if index % 100 == 0: print('Partial fit of %4i out of %i' % (index, 6 * len(faces.images)))dt = time.time() - t0print('done in %.2fs.' % dt)# ############################################################################## 绘制结果plt.figure(figsize=(4.2, 4))for i, patch in enumerate(kmeans.cluster_centers_): plt.subplot(9, 9, i + 1) plt.imshow(patch.reshape(patch_size), cmap=plt.cm.gray, interpolation='nearest') plt.xticks(()) plt.yticks(())plt.suptitle('Patches of faces\nTrain time %.1fs on %d patches' % (dt, 8 * len(faces.images)), fontsize=16)plt.subplots_adjust(0.08, 0.02, 0.92, 0.85, 0.08, 0.23)plt.show()

下载Python源代码: plot_dict_face_patches.py

下载Jupyter notebook源代码: plot_dict_face_patches.ipynb

由Sphinx-Gallery生成的画廊

文壹由“伴编辑器”提供技术支持

☆☆☆为方便大家查阅,小编已将scikit-learn学习路线专栏 文章统一整理到公众号底部菜单栏,同步更新中,关注公众号,点击左下方“系列文章”,如图:

欢迎大家和我一起沿着scikit-learn文档这条路线,一起巩固机器学习算法基础。(添加微信:mthler,备注:sklearn学习,一起进【sklearn机器学习进步群】开启打怪升级的学习之旅。)

490

490

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?