ISLR 8.1.1 - Tree-Based Methods

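Key points:

0. Introduction to tree-based methods

1. Regression trees

-- Motivation

-- Tree splitting

-- Tree pruning

2. Classification trees

-- Gini index

-- Cross-entropy

3. Trees vs. linear models

4. Pros and cons of trees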

0. Tree-based methods

Tree-based methods involve stratifying (segmenting) the predictor space into a number of simple regions.

- To predict a test observation, use the mean (regression) or the mode (classification) of the training responses in the region it falls into

1. Regression Decision Tree

1.1 Motivation

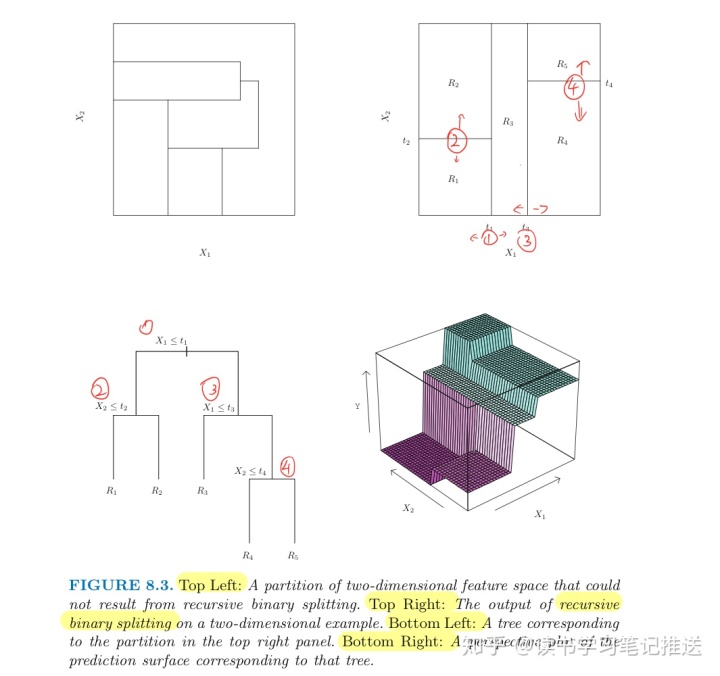

Making Predictions via Stratification of the Feature Space:

- Divide the predictor space -- that is, the set of possible values for $X_1, X_2, \dots, X_p$ -- into $J$ distinct and non-overlapping regions $R_1, R_2, \dots, R_J$.

- For every test observation that falls into the region $R_j$, we make the same prediction, which is simply the mean of the response values for the training observations in $R_j$.
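A minimal Python sketch of this prediction rule, assuming a single feature ($p = 1$) and regions given by hand-picked cutpoints. The data, the cutpoints, and the helper names (`region_of`, `fit_region_means`, `predict`) are hypothetical; in a real tree the regions are learned from the data.

```python
def region_of(x, cutpoints):
    """Index j of the region containing x, where sorted cutpoints s_1 < s_2 < ...
    split the real line into half-open intervals (-inf, s_1), [s_1, s_2), ..."""
    j = 0
    for s in cutpoints:
        if x >= s:
            j += 1
    return j

def fit_region_means(xs, ys, cutpoints):
    """Mean training response in each region (None if a region has no training data)."""
    buckets = [[] for _ in range(len(cutpoints) + 1)]
    for x, y in zip(xs, ys):
        buckets[region_of(x, cutpoints)].append(y)
    return [sum(b) / len(b) if b else None for b in buckets]

def predict(x, cutpoints, region_means):
    """Every test point in region R_j gets the same prediction: R_j's training mean."""
    return region_means[region_of(x, cutpoints)]

# Hypothetical training data; regions are (-inf, 2), [2, 5), [5, inf)
xs = [0.5, 1.0, 2.5, 3.0, 4.0, 6.0, 7.0]
ys = [1.0, 3.0, 10.0, 12.0, 14.0, 20.0, 22.0]
cuts = [2.0, 5.0]
means = fit_region_means(xs, ys, cuts)
print(means)                      # [2.0, 12.0, 21.0]
print(predict(4.5, cuts, means))  # 12.0
```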

1.2 Tree Splitting

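The goal is to find leaves (boxes) $R_1, \dots, R_J$ that minimize the RSS

$$\sum_{j=1}^{J} \sum_{i \in R_j} \left(y_i - \hat{y}_{R_j}\right)^2$$

- where $\hat{y}_{R_j}$ is the mean response for the training observations within the $j$th leaf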
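To make the objective concrete, here is a small sketch that evaluates this RSS for a given assignment of training observations to leaves. The leaf labels and responses are hypothetical illustration values.

```python
from collections import defaultdict

def partition_rss(leaf_ids, ys):
    """RSS = sum over leaves j of sum_{i in R_j} (y_i - mean_j)^2,
    where mean_j is the mean training response in leaf j."""
    groups = defaultdict(list)
    for leaf, y in zip(leaf_ids, ys):
        groups[leaf].append(y)
    total = 0.0
    for members in groups.values():
        m = sum(members) / len(members)  # leaf prediction = mean response
        total += sum((y - m) ** 2 for y in members)
    return total

ys = [1.0, 3.0, 10.0, 12.0, 14.0]
print(partition_rss([0, 0, 1, 1, 1], ys))  # two leaves: 2 + 8 = 10.0
print(partition_rss([0, 0, 0, 0, 0], ys))  # one leaf (no split): 130.0
```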

「Problem」: it is computationally infeasible to consider every possible partition of the feature space into $J$ boxes.

「Solution」: Recursive Binary Splitting is a top-down, greedy approach:

- top-down because it begins at the top of the tree (all in one region) and then successively splits the predictor space;

- greedy because the best split is made at that particular step, rather than looking ahead and picking a split that will lead to a better tree in some future step (which would be computationally infeasible)

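First, for any feature $X_j$ and cutpoint $s$, define the pair of half-planes $R_1(j, s) = \{X \mid X_j < s\}$ and $R_2(j, s) = \{X \mid X_j \ge s\}$, and seek the values of $j$ and $s$ that minimize

$$\sum_{i:\, x_i \in R_1(j,s)} \left(y_i - \hat{y}_{R_1}\right)^2 + \sum_{i:\, x_i \in R_2(j,s)} \left(y_i - \hat{y}_{R_2}\right)^2$$

to get the best split.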

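Next, repeat the process, looking for the best feature and cutpoint to split one of the previously identified regions so as to minimize the RSS within each of the resulting regions,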

- until a stopping criterion is reached:

- e.g. no region contains more than 5 observations.

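If the number of features $p$ is not too large, the best $(j, s)$ can be found quite quickly.

Once the regions $R_1, \dots, R_J$ have been created:

- predict the response of a test observation using the mean of the training data in the region to which the test observation belongs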
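Putting the pieces together, a compact sketch of recursive binary splitting with the "at most 5 observations per region" stopping rule, plus prediction by leaf mean. The nested-dict tree layout and all names are hypothetical; as an extra assumption, regions whose RSS is already zero are also left unsplit.

```python
def rss(values):
    """Residual sum of squares of values around their mean."""
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values)

def best_split(X, y):
    """Best (feature j, cutpoint s, RSS) over all splits X_j < s vs X_j >= s."""
    best = None
    for j in range(len(X[0])):
        for s in sorted({row[j] for row in X}):
            left = [yi for row, yi in zip(X, y) if row[j] < s]
            right = [yi for row, yi in zip(X, y) if row[j] >= s]
            if left and right:
                total = rss(left) + rss(right)
                if best is None or total < best[2]:
                    best = (j, s, total)
    return best

def grow(X, y, max_leaf_size=5):
    """Top-down greedy growth: split a region again while it holds more than
    max_leaf_size observations and is not already pure; leaves store the mean."""
    split = None
    if len(y) > max_leaf_size and rss(y) > 0:
        split = best_split(X, y)
    if split is None:
        return {"leaf": sum(y) / len(y)}
    j, s, _ = split
    go_left = [row[j] < s for row in X]
    return {
        "feature": j,
        "cut": s,
        "left": grow([r for r, g in zip(X, go_left) if g],
                     [v for v, g in zip(y, go_left) if g], max_leaf_size),
        "right": grow([r for r, g in zip(X, go_left) if not g],
                      [v for v, g in zip(y, go_left) if not g], max_leaf_size),
    }

def predict(tree, x):
    """Follow the splits down to the leaf containing x; return that leaf's training mean."""
    while "leaf" not in tree:
        tree = tree["left"] if x[tree["feature"]] < tree["cut"] else tree["right"]
    return tree["leaf"]

# Hypothetical data: one feature, response steps from 1 to 10 at x = 6
X = [[i] for i in range(12)]
y = [1.0 if i < 6 else 10.0 for i in range(12)]
tree = grow(X, y)
print(tree["feature"], tree["cut"])            # 0 6
print(predict(tree, [3]), predict(tree, [8]))  # 1.0 10.0
```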

1.3 Tree Pruning

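「Problem」: A complex tree is likely to overfit the training data (in the extreme, each leaf holds a single training observation)

「Solution」: A smaller tree with fewer splits (fewer regions $R_1, \dots, R_J$) might lead to lower variance and better interpretation at the cost of a little bias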

「Method 1 - Threshold」

Split only if the decrease in the RSS due to the split exceeds some (high) threshold

- Problem: too short-sighted, since a seemingly worthless split early on may be followed by a very good split, i.e. one that leads to a large reduction in RSS later on
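A tiny illustration of why the threshold rule is short-sighted, using an XOR-like pattern that is an assumption chosen for illustration (not an example from ISLR): no single first split reduces the RSS at all, yet one more level of splits fits the data perfectly.

```python
def rss(values):
    """Residual sum of squares around the mean (0 for an empty list)."""
    if not values:
        return 0.0
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values)

def split_rss(X, y, j, s):
    """RSS after splitting into X_j < s and X_j >= s."""
    left = [yi for row, yi in zip(X, y) if row[j] < s]
    right = [yi for row, yi in zip(X, y) if row[j] >= s]
    return rss(left) + rss(right)

# Hypothetical XOR-like data: response is 1 only when exactly one feature is 1
X = [[0, 0], [0, 1], [1, 0], [1, 1]] * 2
y = [0.0, 1.0, 1.0, 0.0] * 2

root = rss(y)
first = split_rss(X, y, 0, 1)  # first split on X_0 at s = 1
print(root, first, split_rss(X, y, 1, 1))  # 2.0 2.0 2.0: no first split helps

# A threshold rule would stop here, but splitting each half on X_1 is perfect
halves = ([(r, v) for r, v in zip(X, y) if r[0] < 1],
          [(r, v) for r, v in zip(X, y) if r[0] >= 1])
second = sum(split_rss([r for r, _ in h], [v for _, v in h], 1, 1) for h in halves)
print(second)  # 0.0
```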

「Method 2 - Pruning」

Grow a very large tree $T_0$, then prune it back to obtain a subtree

- Goal: select a subtree that leads to the lowest test error rate

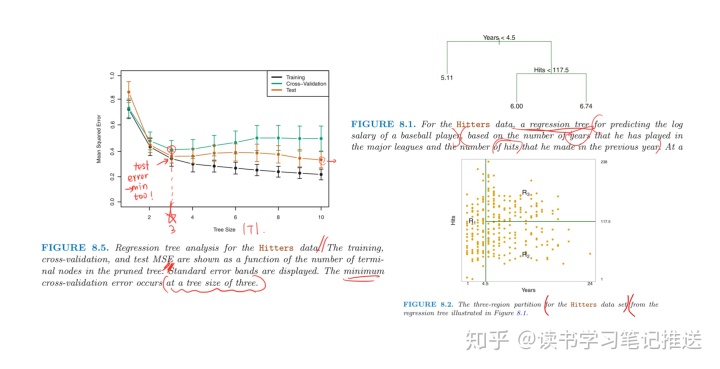

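Rather than cross-validating every possible subtree, we consider a sequence of trees indexed by a non-negative tuning parameter $\alpha$

- Cost Complexity / Weakest Link Pruning

For each value of $\alpha$ there corresponds a subtree $T \subset T_0$ such that

$$\sum_{m=1}^{|T|} \sum_{i:\, x_i \in R_m} \left(y_i - \hat{y}_{R_m}\right)^2 + \alpha |T|$$

is as small as possible, where

- $|T|$: number of leaves of the tree $T$

- $R_m$: the region corresponding to the $m$th leaf, with mean training response $\hat{y}_{R_m}$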
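A minimal sketch of the cost complexity criterion: each candidate subtree of a large tree $T_0$ is summarized by its per-leaf RSS values (hypothetical numbers), and for each $\alpha$ we keep the candidate with the smallest penalized RSS.

```python
def cost_complexity(leaf_rss, alpha):
    """Penalized criterion: sum of the leaves' RSS + alpha * |T| (|T| = number of leaves)."""
    return sum(leaf_rss) + alpha * len(leaf_rss)

# Hypothetical candidate subtrees of T_0, each given by its per-leaf RSS
subtrees = {
    "T0": [1.0, 1.5, 0.5, 1.0],  # 4 leaves, training RSS 4.0
    "T1": [1.0, 1.5, 3.0],       # 3 leaves, training RSS 5.5
    "T2": [6.0, 3.0],            # 2 leaves, training RSS 9.0
    "root": [20.0],              # 1 leaf,   training RSS 20.0
}

def best_subtree(alpha):
    """Candidate subtree minimizing the penalized criterion for this alpha."""
    return min(subtrees, key=lambda name: cost_complexity(subtrees[name], alpha))

for alpha in (0.0, 1.0, 3.0, 5.0, 12.0):
    print(alpha, best_subtree(alpha))
# 0.0 T0 / 1.0 T0 / 3.0 T1 / 5.0 T2 / 12.0 root
```

With $\alpha = 0$ the full tree wins because only training error counts; larger $\alpha$ favors smaller trees.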

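The tuning parameter $\alpha$ controls a trade-off between the subtree's complexity and its fit to the training data:

- When $\alpha = 0$, the subtree $T$ simply equals $T_0$, because the criterion then just measures the training error

- As $\alpha$ increases, there is a growing penalty for subtrees with many leaves,

- so branches get pruned from the tree in a nested and predictable fashion,

- and obtaining the whole sequence of subtrees (as a function of $\alpha$) is easy

- $\alpha$ can also be selected via CV, and we then obtain the subtree corresponding to the chosen $\hat{\alpha}$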
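The nested sequence can be computed with the standard CART weakest-link rule, which ISLR names but does not spell out: repeatedly collapse the internal node $t$ with the smallest $g(t) = (\text{RSS}(t) - \text{RSS}(T_t)) / (|T_t| - 1)$, where $\text{RSS}(t)$ is the node's RSS if it were turned into a leaf and $T_t$ is the branch below it. The sketch assumes a hypothetical tree stored as nested dicts that record each node's collapsed RSS; it returns the $\alpha$ values at which the tree shrinks, paired with the resulting number of leaves.

```python
import copy

def subtree_stats(node):
    """(sum of leaf RSS, number of leaves) for the branch rooted at node."""
    if "children" not in node:
        return node["rss"], 1
    total, count = 0.0, 0
    for child in node["children"]:
        r, n = subtree_stats(child)
        total += r
        count += n
    return total, count

def internal_nodes(node):
    """Yield every non-leaf node, parent before children."""
    if "children" in node:
        yield node
        for child in node["children"]:
            yield from internal_nodes(child)

def link_strength(node):
    """g(t): increase in RSS per leaf removed if the branch at node is collapsed."""
    r_sub, n_sub = subtree_stats(node)
    return (node["rss"] - r_sub) / (n_sub - 1)

def weakest_link_sequence(tree):
    """Collapse the weakest link until only the root is left; record (alpha, |T|)."""
    tree = copy.deepcopy(tree)  # leave the caller's tree untouched
    seq = [(0.0, subtree_stats(tree)[1])]
    while "children" in tree:
        weakest = min(internal_nodes(tree), key=link_strength)
        alpha = link_strength(weakest)
        del weakest["children"]  # prune: the node becomes a leaf
        seq.append((alpha, subtree_stats(tree)[1]))
    return seq

# Hypothetical T_0 with 4 leaves; "rss" is each node's RSS if it were a leaf
tree = {"rss": 20.0, "children": [
    {"rss": 6.0, "children": [{"rss": 1.0}, {"rss": 1.5}]},
    {"rss": 3.0, "children": [{"rss": 0.5}, {"rss": 1.0}]},
]}
print(weakest_link_sequence(tree))  # [(0.0, 4), (1.5, 3), (3.5, 2), (11.0, 1)]
```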

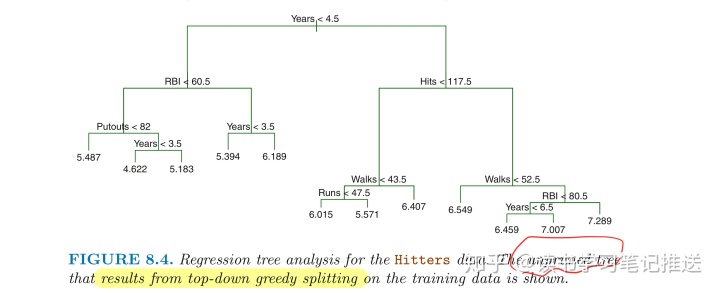

- 「Example on Baseball Hitters Data」

Perform 6-fold CV to estimate the CV MSE of the trees as a function of $\alpha$

- CV error is a minimum at a tree size of three, so the pruned tree with three leaves is selected based on the best $\alpha$

2. Classification Trees

For a classification tree, we predict that each test observation belongs to the most commonly occurring class of training observations in the region to which it belongs

- RSS cannot be used as a criterion for classification trees

- instead, two criteria are commonly used to evaluate the quality of a particular split:

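「Gini Index」:

$$G = \sum_{k=1}^{K} \hat{p}_{mk} \left(1 - \hat{p}_{mk}\right)$$

A measure of total variance across the $K$ classes

- $\hat{p}_{mk}$ represents the proportion of training data in the $m$th region that are from the $k$th class

- $G$ is small if all $\hat{p}_{mk}$ are close to 0 or 1

- Node purity: $G$ is small if a node contains predominantly observations from a single class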
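A direct translation of the Gini formula for one node, taking hypothetical class counts and converting them to the proportions $\hat{p}_{mk}$:

```python
def gini(counts):
    """G = sum_k p_k * (1 - p_k), with p_k = class-k proportion in the node."""
    n = sum(counts)
    return sum((c / n) * (1 - c / n) for c in counts)

print(gini([50, 50]))   # 0.5: maximally impure two-class node
print(gini([90, 10]))   # about 0.18
print(gini([100, 0]))   # 0.0: pure node
```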

「Cross-Entropy」:

$$D = -\sum_{k=1}^{K} \hat{p}_{mk} \log \hat{p}_{mk}$$

- Like the Gini index, the entropy is small if the $m$th node is pure

- both are sensitive to node purity
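A side-by-side sketch of the two criteria on hypothetical two-class nodes, assuming the natural logarithm and the usual convention $0 \log 0 = 0$. Both shrink toward 0 as the node becomes purer.

```python
import math

def gini(counts):
    """G = sum_k p_k * (1 - p_k)."""
    n = sum(counts)
    return sum((c / n) * (1 - c / n) for c in counts)

def cross_entropy(counts):
    """D = -sum_k p_k log p_k, written as sum_k p_k log(1 / p_k); empty classes contribute 0."""
    n = sum(counts)
    return sum((c / n) * math.log(n / c) for c in counts if c > 0)

for counts in ([50, 50], [70, 30], [90, 10], [100, 0]):
    print(counts, round(gini(counts), 3), round(cross_entropy(counts), 3))
# [50, 50] 0.5 0.693
# [70, 30] 0.42 0.611
# [90, 10] 0.18 0.325
# [100, 0] 0.0 0.0
```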

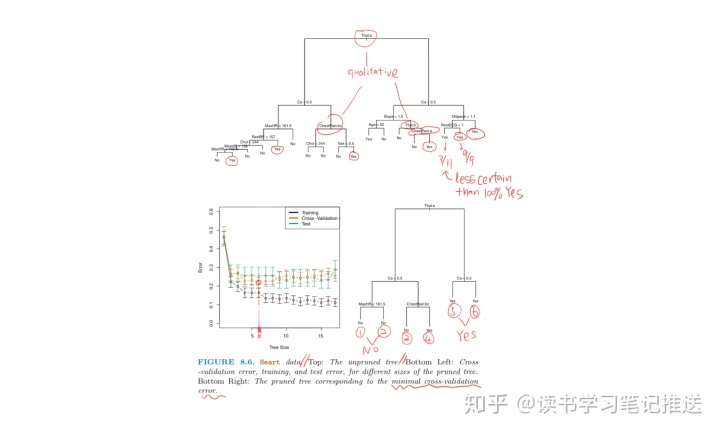

「Heart Disease Example」:

Some splits yield two leaves with the same predicted value; there are reasons to keep them:

- they lead to increased node purity

- which improves the Gini index and the entropy
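A numeric sketch of this effect with hypothetical class counts (not the actual Heart data): both leaves still predict "Yes", yet the size-weighted Gini index and entropy of the split are lower than those of the parent node.

```python
import math

def gini(counts):
    n = sum(counts)
    return sum((c / n) * (1 - c / n) for c in counts)

def entropy(counts):
    n = sum(counts)
    return sum((c / n) * math.log(n / c) for c in counts if c > 0)

def weighted(impurity, children):
    """Impurity of a split: each child's impurity weighted by its share of observations."""
    total = sum(sum(c) for c in children)
    return sum(sum(c) / total * impurity(c) for c in children)

def majority(counts, labels=("Yes", "No")):
    """Predicted class of a node: the most common class."""
    return labels[counts.index(max(counts))]

parent = [16, 4]             # hypothetical node: 16 "Yes", 4 "No"
children = [[9, 0], [7, 4]]  # hypothetical split: a pure leaf and a mixed leaf

print([majority(c) for c in children])                                   # ['Yes', 'Yes']
print(round(gini(parent), 2), round(weighted(gini, children), 2))        # 0.32 0.25
print(round(entropy(parent), 2), round(weighted(entropy, children), 2))  # 0.5 0.36
```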

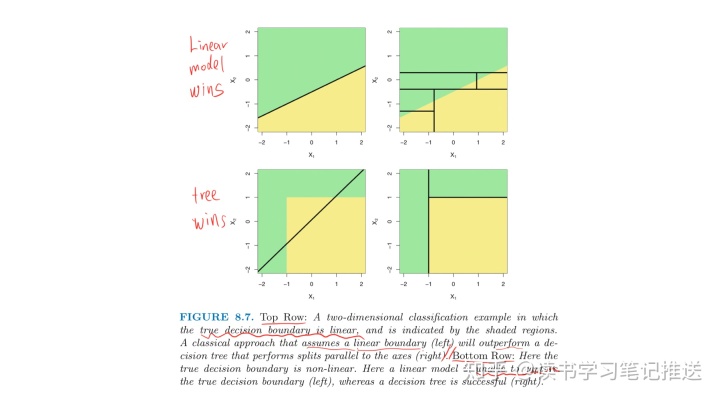

3. Trees vs Linear Models

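If there is a highly non-linear and complex relationship between the features and the response, decision trees (CART) may outperform classical approaches

- However, if the relationship is well approximated by a linear model, linear regression will likely outperform a regression tree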

4. Pros & Cons of Trees

「Advantages:」

- Easier to interpret than Linear Regression

- More closely mirror human decision making

- Trees can be displayed graphically

- Easily handle qualitative predictors without the need to create dummy variables

「Limitations」:

- Trees can be very non-robust:

- a small change in the data can cause a large change in the final estimated tree

- Trees generally do not have the same level of predictive accuracy as some other regression and classification approaches

- Next: by aggregating many decision trees, bagging, random forests and boosting can substantially improve accuracy, at the expense of some loss in interpretability

5. Reference

James, G., Witten, D., Hastie, T., & Tibshirani, R. An Introduction to Statistical Learning, with Applications in R. Springer, 2013.
