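Content source: 六星教育 (Six Star Education). These are study notes only.

Cluster Scaling

Reference: https://www.talkwithtrend.com/Article/246605

Redis Cluster provides flexible ways to scale nodes out and in. You can add nodes to expand the cluster, or take some nodes offline to shrink it, without affecting the service the cluster provides.

Redis Cluster can bring nodes online and offline flexibly. At its core, this works by moving slots, together with the data in them, between nodes.

Each master maintains the slots it is responsible for, along with their data. To scale out by adding one node, you use the relevant commands to migrate part of those slots and their data to the new node.

In the figure above, each node migrates part of its slots and data to the new node 6385. Every node is then responsible for fewer slots and less data than before, which is the goal of scaling out.

Cluster scaling = moving slots and data between nodes.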

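Scaling Out the Cluster

Scaling out is the most common requirement of distributed storage. Scaling out a Redis cluster takes the following steps:

1) Prepare the new nodes.

2) Add them to the cluster.

3) Migrate slots and data.

Preparing the New Nodes

Check the current state of the cluster: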

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

54e6deffbcea redis5asm "/usr/local/bin/redi…" 15 hours ago Up 15 hours 0.0.0.0:6324->6379/tcp, 0.0.0.0:16324->16379/tcp redis5_cluster_204

18eb52e6e80e redis5asm "/usr/local/bin/redi…" 15 hours ago Up 15 hours 0.0.0.0:6325->6379/tcp, 0.0.0.0:16325->16379/tcp redis5_cluster_205

fa53f8484acb redis5asm "/usr/local/bin/redi…" 15 hours ago Up 15 hours 0.0.0.0:6323->6379/tcp, 0.0.0.0:16323->16379/tcp redis5_cluster_203

1116cc9fcea8 redis5asm "/usr/local/bin/redi…" 15 hours ago Up 15 hours 0.0.0.0:6321->6379/tcp, 0.0.0.0:16321->16379/tcp redis5_cluster_201

9bf708a37237 redis5asm "/usr/local/bin/redi…" 15 hours ago Up 15 hours 0.0.0.0:6320->6379/tcp, 0.0.0.0:16320->16379/tcp redis5_cluster_200

21b1fdbede7a redis5asm "/usr/local/bin/redi…" 15 hours ago Up 15 hours 0.0.0.0:6322->6379/tcp, 0.0.0.0:16322->16379/tcp redis5_cluster_202

[root@localhost ~]# redis-cli -h 192.160.1.200 cluster nodes

8480eccf92eb45d66d9344982460678444618ea9 192.160.1.202:6379@16379 slave a68508720542496d71cc675ebedf2ebaed956726 0 1590124787442 7 connected

a163aee6c9a5695f8d98828a850ca851afd73ea6 192.160.1.200:6379@16379 myself,master - 0 1590124788000 1 connected 0-5460

3e992bd376701b9f58dcb77082606d4796e4ba0a 192.160.1.201:6379@16379 master - 0 1590124787000 2 connected 5461-10922

a68508720542496d71cc675ebedf2ebaed956726 192.160.1.203:6379@16379 master - 0 1590124788986 7 connected 10923-16383

8057c014c56c1f0bbf7db66bb5bca81744dce85a 192.160.1.205:6379@16379 slave 3e992bd376701b9f58dcb77082606d4796e4ba0a 0 1590124788000 6 connected

bc8175b99a6030e3029a8fddf3acb0ac550ed07d 192.160.1.204:6379@16379 slave a163aee6c9a5695f8d98828a850ca851afd73ea6 0 1590124788474 5 connected

[root@localhost ~]#

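Prepare the new nodes in advance and run them in cluster mode. Their configuration should match the existing nodes, which makes uniform management easier.

| Container name | IP | Ports (host:container) | Role |
|---|---|---|---|
| redis5_cluster_100 | 192.160.1.100 | 6310:6379, 16310:16379 | master |
| redis5_cluster_101 | 192.160.1.101 | 6311:6379, 16311:16379 | slave |

The docker-compose file is as follows: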

version: "3.6" # version of the docker-compose file format
services: # a group of services, i.e. a group of containers
  redis_100: # service name (customizable); this is not the container name
    image: redis5asm # image the container runs
    networks: # attach to the externally predefined network
      redis5sm:
        ipv4_address: 192.160.1.100 # fixed IP address
    container_name: redis5_cluster_100 # container name
    ports: # host:container port mappings
      - "6310:6379"
      - "16310:16379"
    volumes: # data mounts
      - /redis_2004/cluster/new/100:/redis
    command: /usr/local/bin/redis-server /redis/conf/redis.conf
  redis_101: # service name (customizable); this is not the container name
    image: redis5asm # image the container runs
    networks: # attach to the externally predefined network
      redis5sm:
        ipv4_address: 192.160.1.101 # fixed IP address
    container_name: redis5_cluster_101 # container name
    ports: # host:container port mappings
      - "6311:6379"
      - "16311:16379"
    volumes: # data mounts
      - /redis_2004/cluster/new/101:/redis
    command: /usr/local/bin/redis-server /redis/conf/redis.conf
# network settings
networks:
  # reference the network defined beforehand outside this file
  redis5sm:
    external:
      name: redis5sm

Once the containers are up, the new nodes run as orphan nodes: no other node communicates with them yet.

[root@localhost new]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

4c68e4412c63 redis5asm "/usr/local/bin/redi…" 7 seconds ago Up 5 seconds 0.0.0.0:6311->6379/tcp, 0.0.0.0:16311->16379/tcp redis5_cluster_101

b626c8e7ff16 redis5asm "/usr/local/bin/redi…" 7 seconds ago Up 5 seconds 0.0.0.0:6310->6379/tcp, 0.0.0.0:16310->16379/tcp redis5_cluster_100

54e6deffbcea redis5asm "/usr/local/bin/redi…" 16 hours ago Up 16 hours 0.0.0.0:6324->6379/tcp, 0.0.0.0:16324->16379/tcp redis5_cluster_204

18eb52e6e80e redis5asm "/usr/local/bin/redi…" 16 hours ago Up 16 hours 0.0.0.0:6325->6379/tcp, 0.0.0.0:16325->16379/tcp redis5_cluster_205

fa53f8484acb redis5asm "/usr/local/bin/redi…" 16 hours ago Up 16 hours 0.0.0.0:6323->6379/tcp, 0.0.0.0:16323->16379/tcp redis5_cluster_203

1116cc9fcea8 redis5asm "/usr/local/bin/redi…" 16 hours ago Up 16 hours 0.0.0.0:6321->6379/tcp, 0.0.0.0:16321->16379/tcp redis5_cluster_201

9bf708a37237 redis5asm "/usr/local/bin/redi…" 16 hours ago Up 16 hours 0.0.0.0:6320->6379/tcp, 0.0.0.0:16320->16379/tcp redis5_cluster_200

21b1fdbede7a redis5asm "/usr/local/bin/redi…" 16 hours ago Up 15 hours 0.0.0.0:6322->6379/tcp, 0.0.0.0:16322->16379/tcp redis5_cluster_202

[root@localhost new]# redis-cli -h 192.160.1.200 cluster nodes

8480eccf92eb45d66d9344982460678444618ea9 192.160.1.202:6379@16379 slave a68508720542496d71cc675ebedf2ebaed956726 0 1590125757455 7 connected

a163aee6c9a5695f8d98828a850ca851afd73ea6 192.160.1.200:6379@16379 myself,master - 0 1590125756000 1 connected 0-5460

3e992bd376701b9f58dcb77082606d4796e4ba0a 192.160.1.201:6379@16379 master - 0 1590125758478 2 connected 5461-10922

a68508720542496d71cc675ebedf2ebaed956726 192.160.1.203:6379@16379 master - 0 1590125758000 7 connected 10923-16383

8057c014c56c1f0bbf7db66bb5bca81744dce85a 192.160.1.205:6379@16379 slave 3e992bd376701b9f58dcb77082606d4796e4ba0a 0 1590125757000 6 connected

bc8175b99a6030e3029a8fddf3acb0ac550ed07d 192.160.1.204:6379@16379 slave a163aee6c9a5695f8d98828a850ca851afd73ea6 0 1590125757662 5 connected

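Adding the Nodes to the Cluster

First, look at the cluster commands that Redis 5.0 provides, listed by redis-cli --cluster help. In Redis 5 you no longer need redis-trib.rb to manage the cluster, as you did in Redis 4 and earlier.

You can also use cluster meet ip port to make the nodes aware of each other. This has the same effect for a master. A replica additionally needs cluster replicate {masterNodeId}.

redis-cli -h 192.160.1.200 --cluster add-node 192.160.1.100:6379 192.160.1.200:6379

redis-cli -h 192.160.1.200 cluster nodes

redis-cli -h 192.160.1.200 --cluster add-node 192.160.1.101:6379 192.160.1.100:6379 --cluster-slave

The output is as follows: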

[root@localhost new]# redis-cli -h 192.160.1.200 cluster nodes

8480eccf92eb45d66d9344982460678444618ea9 192.160.1.202:6379@16379 slave a68508720542496d71cc675ebedf2ebaed956726 0 1590127254061 7 connected

a163aee6c9a5695f8d98828a850ca851afd73ea6 192.160.1.200:6379@16379 myself,master - 0 1590127253000 1 connected 0-5460

3e992bd376701b9f58dcb77082606d4796e4ba0a 192.160.1.201:6379@16379 master - 0 1590127255083 2 connected 5461-10922

a68508720542496d71cc675ebedf2ebaed956726 192.160.1.203:6379@16379 master - 0 1590127255591 7 connected 10923-16383

8057c014c56c1f0bbf7db66bb5bca81744dce85a 192.160.1.205:6379@16379 slave 3e992bd376701b9f58dcb77082606d4796e4ba0a 0 1590127255591 6 connected

bc8175b99a6030e3029a8fddf3acb0ac550ed07d 192.160.1.204:6379@16379 slave a163aee6c9a5695f8d98828a850ca851afd73ea6 0 1590127254572 5 connected

[root@localhost new]# redis-cli -h 192.160.1.200 --cluster add-node 192.160.1.100:6379 192.160.1.200:6379

>>> Adding node 192.160.1.100:6379 to cluster 192.160.1.200:6379

>>> Performing Cluster Check (using node 192.160.1.200:6379)

M: a163aee6c9a5695f8d98828a850ca851afd73ea6 192.160.1.200:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 8480eccf92eb45d66d9344982460678444618ea9 192.160.1.202:6379

slots: (0 slots) slave

replicates a68508720542496d71cc675ebedf2ebaed956726

M: 3e992bd376701b9f58dcb77082606d4796e4ba0a 192.160.1.201:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

M: a68508720542496d71cc675ebedf2ebaed956726 192.160.1.203:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 8057c014c56c1f0bbf7db66bb5bca81744dce85a 192.160.1.205:6379

slots: (0 slots) slave

replicates 3e992bd376701b9f58dcb77082606d4796e4ba0a

S: bc8175b99a6030e3029a8fddf3acb0ac550ed07d 192.160.1.204:6379

slots: (0 slots) slave

replicates a163aee6c9a5695f8d98828a850ca851afd73ea6

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 192.160.1.100:6379 to make it join the cluster.

[OK] New node added correctly.

[root@localhost new]# redis-cli -h 192.160.1.200 cluster nodes

8480eccf92eb45d66d9344982460678444618ea9 192.160.1.202:6379@16379 slave a68508720542496d71cc675ebedf2ebaed956726 0 1590127277000 7 connected

a163aee6c9a5695f8d98828a850ca851afd73ea6 192.160.1.200:6379@16379 myself,master - 0 1590127275000 1 connected 0-5460

3e992bd376701b9f58dcb77082606d4796e4ba0a 192.160.1.201:6379@16379 master - 0 1590127276535 2 connected 5461-10922

a68508720542496d71cc675ebedf2ebaed956726 192.160.1.203:6379@16379 master - 0 1590127276535 7 connected 10923-16383

8057c014c56c1f0bbf7db66bb5bca81744dce85a 192.160.1.205:6379@16379 slave 3e992bd376701b9f58dcb77082606d4796e4ba0a 0 1590127275522 6 connected

bc8175b99a6030e3029a8fddf3acb0ac550ed07d 192.160.1.204:6379@16379 slave a163aee6c9a5695f8d98828a850ca851afd73ea6 0 1590127277550 5 connected

8b3cb12997b92b1de19a3d6bfdaf0a3ff8f13e47 192.160.1.100:6379@16379 master - 0 1590127275522 0 connected

[root@localhost new]# redis-cli -h 192.160.1.200 --cluster add-node 192.160.1.101:6379 192.160.1.100:6379 --cluster-slave

>>> Adding node 192.160.1.101:6379 to cluster 192.160.1.100:6379

>>> Performing Cluster Check (using node 192.160.1.100:6379)

M: 8b3cb12997b92b1de19a3d6bfdaf0a3ff8f13e47 192.160.1.100:6379

slots: (0 slots) master

S: 8480eccf92eb45d66d9344982460678444618ea9 192.160.1.202:6379

slots: (0 slots) slave

replicates a68508720542496d71cc675ebedf2ebaed956726

M: a68508720542496d71cc675ebedf2ebaed956726 192.160.1.203:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: 3e992bd376701b9f58dcb77082606d4796e4ba0a 192.160.1.201:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: bc8175b99a6030e3029a8fddf3acb0ac550ed07d 192.160.1.204:6379

slots: (0 slots) slave

replicates a163aee6c9a5695f8d98828a850ca851afd73ea6

S: 8057c014c56c1f0bbf7db66bb5bca81744dce85a 192.160.1.205:6379

slots: (0 slots) slave

replicates 3e992bd376701b9f58dcb77082606d4796e4ba0a

M: a163aee6c9a5695f8d98828a850ca851afd73ea6 192.160.1.200:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

Automatically selected master 192.160.1.100:6379

>>> Send CLUSTER MEET to node 192.160.1.101:6379 to make it join the cluster.

Waiting for the cluster to join

>>> Configure node as replica of 192.160.1.100:6379.

[OK] New node added correctly.

[root@localhost new]# redis-cli -h 192.160.1.200 cluster nodes

8480eccf92eb45d66d9344982460678444618ea9 192.160.1.202:6379@16379 slave a68508720542496d71cc675ebedf2ebaed956726 0 1590127308000 7 connected

a163aee6c9a5695f8d98828a850ca851afd73ea6 192.160.1.200:6379@16379 myself,master - 0 1590127308000 1 connected 0-5460

3e992bd376701b9f58dcb77082606d4796e4ba0a 192.160.1.201:6379@16379 master - 0 1590127307520 2 connected 5461-10922

a68508720542496d71cc675ebedf2ebaed956726 192.160.1.203:6379@16379 master - 0 1590127308000 7 connected 10923-16383

8057c014c56c1f0bbf7db66bb5bca81744dce85a 192.160.1.205:6379@16379 slave 3e992bd376701b9f58dcb77082606d4796e4ba0a 0 1590127308538 6 connected

bc8175b99a6030e3029a8fddf3acb0ac550ed07d 192.160.1.204:6379@16379 slave a163aee6c9a5695f8d98828a850ca851afd73ea6 0 1590127308128 5 connected

8b3cb12997b92b1de19a3d6bfdaf0a3ff8f13e47 192.160.1.100:6379@16379 master - 0 1590127307520 0 connected

0e41a25c96818d43a8e8576e28788a26f2ed27b4 192.160.1.101:6379@16379 slave 8b3cb12997b92b1de19a3d6bfdaf0a3ff8f13e47 0 1590127308640 0 connected

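In Redis 5 the improved redis-cli commands make this process remarkably simple. Here is a brief summary of what happens.

When a node joins, the new and existing nodes exchange ping/pong messages for a while. After that, every node has discovered the new node and saved its state locally. A newly joined master has no slots assigned yet, however, so it cannot serve any reads or writes.

Migrating Slots and Data

After joining, the new node needs slots and the related data migrated to it. The cluster keeps serving reads and writes normally while slots are being migrated. Migration is the core step of scaling out and is explained in detail below.

Slot Migration Plan

The slot is the basic unit by which Redis Cluster manages data. The first step is therefore a slot migration plan for the new node that decides which slots of the existing nodes move to it. The plan should leave every node responsible for a similar number of slots so that data is spread evenly. For example, going from three masters to four means spreading the slots over all four.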
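As a rough illustration of such a plan, the PHP sketch below computes how many slots each existing master would hand to one new master. `scaleOutPlan` is a hypothetical helper, not part of redis-cli. It assumes the 16384 slots should end up split evenly (rounded down), and it uses the slot counts from the cluster nodes output above. redis-cli's own planner may round differently.

```php
<?php
// Hypothetical helper: slots each existing master hands to ONE new master,
// assuming the 16384 slots should end up split evenly (rounded down).
function scaleOutPlan(array $slotsByNode): array
{
    $masters = count($slotsByNode) + 1;           // existing masters + the new one
    $target = intdiv(16384, $masters);            // slots per master after scaling out
    $plan = [];
    foreach ($slotsByNode as $node => $slots) {
        $plan[$node] = max(0, $slots - $target);  // hand over only the surplus
    }
    return $plan;
}

// slot counts from the cluster nodes output (0-5460, 5461-10922, 10923-16383)
$plan = scaleOutPlan([
    '192.160.1.200:6379' => 5461,
    '192.160.1.201:6379' => 5462,
    '192.160.1.203:6379' => 5461,
]);
print_r($plan);
printf("the new node receives %d slots\n", array_sum($plan));
```

With four masters the target is 4096 slots each, so the three old masters give up 1365, 1366 and 1365 slots.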

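Once the plan is settled, the data is migrated slot by slot from the source node to the target node.

Migrating the Data

Data is migrated one slot at a time.

Procedure: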

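1) Send the import command cluster setslot {slot} importing {sourceNodeId} to the target node so that it gets ready to import the slot's data.

2) Send the export command cluster setslot {slot} migrating {targetNodeId} to the source node so that it gets ready to move the slot's data out.

3) On the source node, run cluster getkeysinslot {slot} {count} in a loop to fetch up to count keys that belong to slot {slot}.

4) On the source node, run migrate {targetIp} {targetPort} "" 0 {timeout} keys {keys...} to move the fetched keys to the target node in one batch (pipeline-style).

5) Repeat steps 3 and 4 until all key-value data in the slot has been migrated to the target node.

6) Send cluster setslot {slot} node {targetNodeId} to every master in the cluster to announce that the slot is now assigned to the target node. Sending it to all masters lets the new slot-to-node mapping propagate promptly, because each master then updates the migrated slot to point at the new node.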
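The steps above can be written out as the ordered list of commands they send. The PHP helper below only builds that list and does not talk to any server. `slotMigrationCommands` is a hypothetical name. `$keysInSlot` stands in for the replies of cluster getkeysinslot, and the node IDs, address and 5000 ms timeout are placeholders.

```php
<?php
// Hypothetical helper: the commands of the manual migration procedure for ONE slot,
// in order, as [node the command is sent to, command words]. Nothing is sent anywhere.
function slotMigrationCommands(
    int $slot,
    string $srcId,
    string $dstId,
    string $dstHost,
    int $dstPort,
    array $keysInSlot,   // stands in for what CLUSTER GETKEYSINSLOT would return
    int $batch,
    array $masterIds,
    int $timeoutMs = 5000
): array {
    $cmds = [];
    // 1) target: get ready to import the slot from the source
    $cmds[] = [$dstId, ['CLUSTER', 'SETSLOT', $slot, 'IMPORTING', $srcId]];
    // 2) source: get ready to migrate the slot to the target
    $cmds[] = [$srcId, ['CLUSTER', 'SETSLOT', $slot, 'MIGRATING', $dstId]];
    // 3) + 4) fetch up to $batch keys, then move them with a single MIGRATE ... KEYS
    foreach (array_chunk($keysInSlot, $batch) as $keys) {
        $cmds[] = [$srcId, ['CLUSTER', 'GETKEYSINSLOT', $slot, $batch]];
        $cmds[] = [$srcId, array_merge(['MIGRATE', $dstHost, $dstPort, '', 0, $timeoutMs, 'KEYS'], $keys)];
    }
    // 5) the last GETKEYSINSLOT comes back empty, which ends the loop
    $cmds[] = [$srcId, ['CLUSTER', 'GETKEYSINSLOT', $slot, $batch]];
    // 6) every master learns that the slot now belongs to the target
    foreach ($masterIds as $id) {
        $cmds[] = [$id, ['CLUSTER', 'SETSLOT', $slot, 'NODE', $dstId]];
    }
    return $cmds;
}

$cmds = slotMigrationCommands(
    6301, 'SRC_ID', 'DST_ID', '192.160.1.100', 6379,
    ['k1', 'k2', 'k3', 'k4', 'k5'], 2,
    ['M1_ID', 'M2_ID', 'M3_ID', 'DST_ID']
);
foreach ($cmds as [$node, $words]) {
    // show the empty key argument of MIGRATE as ""
    $shown = array_map(fn($w) => $w === '' ? '""' : (string) $w, $words);
    echo $node, ': ', implode(' ', $shown), PHP_EOL;
}
```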

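That is the procedure in earlier Redis versions. From Redis 5 on it is remarkably simple: a single command, redis-cli --cluster reshard {ip:port}, does it all.

The old way is tedious, so it is not covered here. If you are interested, Peter's Redis course walks through the process.

Assigning Slots in Redis 5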

-

重新分配哈希槽

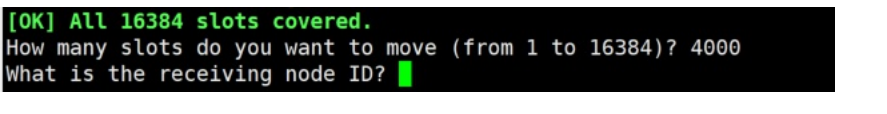

redis-cli -h 192.160.1.100 --cluster reshard 192.160.1.200:6379

这个

192.160.1.200:6379是可以随意一个节点都可以(有效主节点); -

输入要分配多少个哈希槽(数量)?比如我要分配4000个哈希槽

-

输入指定要分配哈希槽的节点ID,如100的master节点哈希槽的数量为0(选择任意一个节点作为目标节点进行分配哈希槽)

-

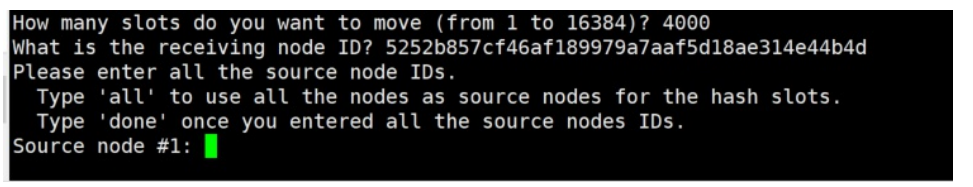

分配哈希槽有两种方式

- all : 将所有节点用作哈希槽的源节点

- done : 在指定的节点拿出指定数量的哈希槽分配到目标节点
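With the all option, the requested slots have to be divided among several source nodes. One plausible approach is to take from each source in proportion to the slots it owns, rounding the largest source up and the others down. The PHP sketch below implements that. This rounding rule is an assumption modelled loosely on redis-cli, whose exact rounding may differ. `proportionalTake` is a hypothetical name, and the slot counts are those of the three original masters.

```php
<?php
// Hypothetical sketch: split $numSlots across sources in proportion to the slots
// each owns. Assumption: largest source rounded up, the others rounded down.
function proportionalTake(array $sources, int $numSlots): array
{
    arsort($sources);                    // largest owners first
    $total = array_sum($sources);
    $take = [];
    $first = true;
    foreach ($sources as $node => $owned) {
        $exact = $numSlots * $owned;     // numerator of numSlots * owned / total
        $take[$node] = $first
            ? intdiv($exact + $total - 1, $total)   // ceil for the first source
            : intdiv($exact, $total);               // floor for the others
        $first = false;
    }
    return $take;
}

$take = proportionalTake([
    '192.160.1.200:6379' => 5461,
    '192.160.1.201:6379' => 5462,
    '192.160.1.203:6379' => 5461,
], 4000);
print_r($take);
```

Integer arithmetic avoids float rounding surprises. Here 192.160.1.201 gives 1334 slots and the other two give 1333 each, 4000 in total.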

all方式

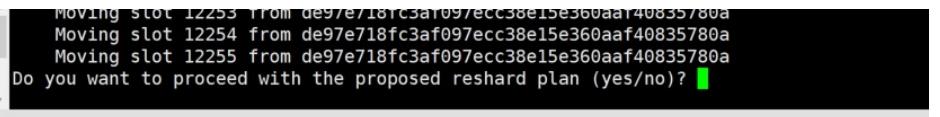

是否继续执行建议的reshard计划

输入yes后,就会完成分配哈希槽:

done方式

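Scaling In the Cluster

Scaling in means reducing the cluster's size by safely taking some of its nodes offline. A node is taken offline as follows:

1) First determine whether the node to be removed owns any slots. If it does, migrate them to other nodes so that the cluster's slot-to-node mapping stays complete after the node goes offline.

2) Once the node owns no slots, or if it is a replica to begin with, tell the other nodes in the cluster to forget it. After all nodes have forgotten it, it can be shut down normally.

Migrating Slots Off the Node

The node being removed must migrate its slots to other nodes. The principle is the same as slot migration during scale-out, but the direction is reversed: the departing node becomes the source and the other masters become the targets. The source must spread the 4096 slots it owns evenly across the other masters.

Use the redis-trib.rb reshard command to migrate the slots. Each reshard run can have only one target node, so reshard must be run three times.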
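With Redis 5's redis-cli, the three runs can also be made non-interactive. The sketch below splits the departing node's 4096 slots as evenly as possible and prints one redis-cli --cluster reshard command per target. It uses the --cluster-from, --cluster-to, --cluster-slots and --cluster-yes options listed by redis-cli --cluster help. `scaleInCommands` and all node IDs are hypothetical placeholders.

```php
<?php
// Hypothetical helper: spread $slots evenly over the remaining masters and build
// one non-interactive reshard command per target. IDs below are placeholders.
function scaleInCommands(string $entry, string $fromId, int $slots, array $targetIds): array
{
    $targets = array_values($targetIds);
    $base = intdiv($slots, count($targets));
    $extra = $slots % count($targets);        // the first $extra targets take one more
    $cmds = [];
    foreach ($targets as $i => $toId) {
        $count = $base + ($i < $extra ? 1 : 0);
        $cmds[] = sprintf(
            'redis-cli --cluster reshard %s --cluster-from %s --cluster-to %s --cluster-slots %d --cluster-yes',
            $entry, $fromId, $toId, $count
        );
    }
    return $cmds;
}

$cmds = scaleInCommands('192.160.1.200:6379', 'DOWN_NODE_ID', 4096, ['MASTER1_ID', 'MASTER2_ID', 'MASTER3_ID']);
foreach ($cmds as $cmd) {
    echo $cmd, PHP_EOL;
}
```

4096 = 3 × 1365 + 1, so the first target receives 1366 slots and the other two receive 1365 each.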

准备导出

指定目标节点

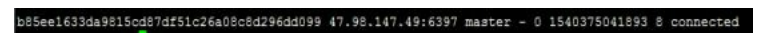

直到6397迁移干净,不放在任何节点

忘记节点

由于集群内的节点不停地通过 Gossip 消息彼此交换节点状态,因此需要通过一种健壮的机制让集群内所有节点忘记下线的节点。也就是说让其他节点不再与要下线节点进行 Gossip 消息交换。 Redis 提供了 cluster forget{downNodeId} 命令实现该功能,会把这个节点信息放入黑名单,但是60s之后会恢复。
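A toy model of the ban list shows why every node must forget the removed node within the window. Once an entry expires, gossip from a node that still knows the removed node can bring it back. The class below is a hypothetical illustration with a 60-second TTL, not Redis's implementation. Times are plain Unix seconds passed in by the caller.

```php
<?php
// Hypothetical model of the CLUSTER FORGET ban list: a forgotten node ID is
// ignored until its 60-second ban expires; after that gossip may re-add it.
final class ForgetBanList
{
    private const TTL = 60;          // seconds
    private array $expires = [];     // nodeId => time at which the ban ends

    public function forget(string $nodeId, int $now): void
    {
        $this->expires[$nodeId] = $now + self::TTL;
    }

    public function isBanned(string $nodeId, int $now): bool
    {
        if (!isset($this->expires[$nodeId])) {
            return false;            // never forgotten
        }
        if ($now > $this->expires[$nodeId]) {
            unset($this->expires[$nodeId]);   // ban over: gossip may bring it back
            return false;
        }
        return true;
    }
}

$bans = new ForgetBanList();
$bans->forget('5252b857cf46af189979a7aaf5d18ae314e44b4d', 1000);
var_dump($bans->isBanned('5252b857cf46af189979a7aaf5d18ae314e44b4d', 1030)); // bool(true)
var_dump($bans->isBanned('5252b857cf46af189979a7aaf5d18ae314e44b4d', 1061)); // bool(false)
```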

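In production, use the redis-trib.rb del-node {host:port} {downNodeId} command instead.

Note: when to use redis-trib rebalance

It is meant for clusters whose slot-owning nodes hold uneven numbers of slots.

- A newly added node has no slots by default, so first migrate slots to it.

- A node being removed must first migrate out all of its slots and be deleted from the cluster. Only then should you run rebalance.

Operations in Redis 5

Removing a slave node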

[root@localhost new]# redis-cli -h 192.160.1.100 --cluster del-node 192.160.1.101:6379 a3107383b2f749381ea1aece64f4ba0e0e260097

>>> Removing node a3107383b2f749381ea1aece64f4ba0e0e260097 from cluster 192.160.1.101:6379

>>> Sending CLUSTER FORGET messages to the cluster...

>>> SHUTDOWN the node.

Removing a node that owns slots

[root@localhost new]# redis-cli -h 192.160.1.100 --cluster reshard 192.160.1.100:6379

>>> Performing Cluster Check (using node 192.160.1.100:6379)

...

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 999

What is the receiving node ID? de97e718fc3af097ecc38e15e360aaf40835780a

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: 5252b857cf46af189979a7aaf5d18ae314e44b4d

Source node #2: done

[root@localhost new]# redis-cli -h 192.160.1.100 cluster slots

[root@localhost new]# redis-cli check -h 192.160.1.100 --cluster help

Could not connect to Redis at 127.0.0.1:6379: Connection refused

[root@localhost new]# redis-cli -h 192.160.1.100 --cluster del-node 192.160.1.100:6379 5252b857cf46af189979a7aaf5d18ae314e44b4d

>>> Removing node 5252b857cf46af189979a7aaf5d18ae314e44b4d from cluster 192.160.1.100:6379

>>> Sending CLUSTER FORGET messages to the cluster...

>>> SHUTDOWN the node.

[root@localhost new]# redis-cli -h 192.160.1.200 --cluster check 192.160.1.200:6379

192.160.1.200:6379 (982285a0...) -> 0 keys | 3877 slots | 1 slaves.

192.160.1.202:6379 (de97e718...) -> 0 keys | 4876 slots | 1 slaves.

192.160.1.201:6379 (6aef721d...) -> 0 keys | 7631 slots | 1 slaves.

[OK] 0 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 192.160.1.200:6379)

....

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

[root@localhost new]# redis-cli -h 192.160.1.200 --cluster rebalance 192.160.1.200:6379

>>> Performing Cluster Check (using node 192.160.1.200:6379)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Rebalancing across 3 nodes. Total weight = 3.00

Moving 1585 slots from 192.160.1.201:6379 to 192.160.1.200:6379

...

Moving 585 slots from 192.160.1.201:6379 to 192.160.1.202:6379

...

[root@localhost new]# redis-cli -h 192.160.1.200 --cluster check 192.160.1.200:6379

192.160.1.200:6379 (982285a0...) -> 0 keys | 5462 slots | 1 slaves.

192.160.1.202:6379 (de97e718...) -> 0 keys | 5461 slots | 1 slaves.

192.160.1.201:6379 (6aef721d...) -> 0 keys | 5461 slots | 1 slaves.

[OK] 0 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 192.160.1.200:6379)

...

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.
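The numbers in the rebalance log can be reproduced with a simplified model. Each of the 3 masters should own intdiv(16384, 3) = 5461 slots. The leftover slot goes to the first master that is short, and slots then flow from the largest surplus to the largest deficit. The PHP sketch below approximates --cluster rebalance with equal weights. It ignores weights, thresholds and empty-master handling, and it is not redis-cli's code.

```php
<?php
// Simplified model of rebalancing with equal weights (assumption: no weights,
// no threshold, no empty-master handling). Returns [from, to, slots] moves.
function rebalancePlan(array $slotsByNode): array
{
    $expected = intdiv(16384, count($slotsByNode));   // 5461 for three masters
    $balance = [];
    foreach ($slotsByNode as $node => $slots) {
        $balance[$node] = $slots - $expected;           // > 0 surplus, < 0 deficit
    }
    // 16384 is not divisible by 3: give the leftover slot(s) to nodes that are short
    $left = array_sum($balance);
    while ($left > 0) {
        foreach (array_keys($balance) as $node) {
            if ($left > 0 && $balance[$node] <= 0) {
                $balance[$node]--;
                $left--;
            }
        }
    }
    asort($balance);                                    // biggest deficit first
    $nodes = array_keys($balance);
    $dst = 0;
    $src = count($nodes) - 1;                           // biggest surplus last
    $moves = [];
    while ($dst < $src) {
        $to = $nodes[$dst];
        $from = $nodes[$src];
        $n = min(-$balance[$to], $balance[$from]);
        if ($n > 0) {
            $moves[] = [$from, $to, $n];
        }
        $balance[$to] += $n;
        $balance[$from] -= $n;
        if ($balance[$to] === 0) {
            $dst++;
        }
        if ($balance[$from] === 0) {
            $src--;
        }
    }
    return $moves;
}

// slot counts from the --cluster check output before rebalancing
$moves = rebalancePlan([
    '192.160.1.200:6379' => 3877,
    '192.160.1.202:6379' => 4876,
    '192.160.1.201:6379' => 7631,
]);
foreach ($moves as [$from, $to, $n]) {
    printf("Moving %d slots from %s to %s\n", $n, $from, $to);
}
```

This prints the same two moves as the log (1585 slots to 192.160.1.200 and 585 to 192.160.1.202), leaving 5462, 5461 and 5461 slots.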

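Command reference:

-- check the cluster

redis-cli --cluster check ip:port

-- show cluster information

redis-cli --cluster info ip:port

-- fix the cluster

redis-cli --cluster fix ip:port

-- rebalance slots:

redis-cli -a {password} --cluster rebalance ip:port

Request Redirection

So far we have built a Redis cluster and covered the details of communication and scaling, but we have not yet operated on the cluster from a client. Redis Cluster changes the client communication protocol considerably. To maximize performance, it does not go through a proxy; clients connect to the nodes directly.

In cluster mode, when Redis receives any key-related command, it first computes the key's slot and then looks up the node that owns that slot. If the owner is itself, it executes the command. Otherwise it replies with a MOVED redirection error that tells the client which node to contact. This is called MOVED redirection, and we have already run into it earlier.

Connecting without cluster mode: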

[root@localhost ~]# redis-cli -h 192.160.1.200

192.160.1.200:6379> set name h

OK

192.160.1.200:6379> set nayi h

(error) MOVED 6301 192.160.1.202:6379

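The first set succeeds because the key's slot happens to live on this node. The second fails because its slot is on another node. The cluster keyslot {key} command returns the slot a key maps to: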

192.160.1.200:6379> cluster keyslot nayi

(integer) 6301

192.160.1.200:6379> cluster nodes

3234c63811c6c9c238c16a958d8433069d21d08f 192.160.1.204:6379@16379 slave 982285a0f4e1d1b6c861fdce379905bebc6402c5 0 1590152389000 11 connected

f433f9940fa8ce7492e3ead56112562d355dd24a 192.160.1.205:6379@16379 slave 6aef721d84d66c2b9a4f0d40ad5c8c3c7fa1c05b 0 1590152389598 8 connected

982285a0f4e1d1b6c861fdce379905bebc6402c5 192.160.1.200:6379@16379 myself,master - 0 1590152389000 11 connected 497-1332 1584-6209

de97e718fc3af097ecc38e15e360aaf40835780a 192.160.1.202:6379@16379 master - 0 1590152389000 12 connected 0-496 1333-1583 6210-6794 12256-16383

4f57a888737be4291571948799af0afe3c4c0dbd 192.160.1.203:6379@16379 slave de97e718fc3af097ecc38e15e360aaf40835780a 0 1590152389000 12 connected

6aef721d84d66c2b9a4f0d40ad5c8c3c7fa1c05b 192.160.1.201:6379@16379 master - 0 1590152390012 8 connected 6795-12255

Without -c, you can read the node address from the MOVED {slot} {ip:port} reply and connect to that node yourself.

With -c, redis-cli follows the redirect automatically:

[root@localhost ~]# redis-cli -h 192.160.1.200 -c

192.160.1.200:6379> set nayi h

-> Redirected to slot [6301] located at 192.160.1.202:6379

OK

192.160.1.202:6379>

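redis-cli automatically connected to the correct node and executed the command for us. This logic lives inside redis-cli: when it receives MOVED, it simply sends the request again to the node named in the reply. The Redis node does not forward the request. For keys it does not own, a node only replies with a redirect and never forwards the command itself.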
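The client-side behaviour just described can be sketched in PHP: read MOVED, remember the new owner of the slot, and resend. Nodes are simulated with closures, so no server is needed. `parseMoved`, `sendWithRedirects` and the five-redirect limit are hypothetical choices, and the caller is assumed to have computed the slot already.

```php
<?php
// Parse "MOVED <slot> <ip>:<port>"; returns null for any other reply.
function parseMoved(string $reply): ?array
{
    if (!preg_match('/^MOVED (\d+) (\S+):(\d+)$/', $reply, $m)) {
        return null;
    }
    return ['slot' => (int) $m[1], 'host' => $m[2], 'port' => (int) $m[3]];
}

// $nodes maps "ip:port" to a callable that plays the role of a Redis node.
// $slotCache is the client's local slot -> "ip:port" map, updated on MOVED.
function sendWithRedirects(array $nodes, array &$slotCache, string $start, int $slot, array $command, int $maxRedirects = 5)
{
    $addr = $slotCache[$slot] ?? $start;            // prefer the cached owner
    for ($i = 0; $i <= $maxRedirects; $i++) {
        $reply = $nodes[$addr]($command);
        $moved = is_string($reply) ? parseMoved($reply) : null;
        if ($moved === null) {
            return $reply;                          // the node executed the command
        }
        $addr = $moved['host'] . ':' . $moved['port'];
        $slotCache[$moved['slot']] = $addr;         // remember the new owner
    }
    throw new RuntimeException('too many redirects');
}

$nodes = [
    '192.160.1.200:6379' => fn(array $cmd) => 'MOVED 6301 192.160.1.202:6379',
    '192.160.1.202:6379' => fn(array $cmd) => 'OK',
];
$cache = [];
echo sendWithRedirects($nodes, $cache, '192.160.1.200:6379', 6301, ['SET', 'nayi', 'h']), PHP_EOL;
```

After the first call, the cache sends later commands for slot 6301 straight to 192.160.1.202:6379 without another redirect.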

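Executing a key command therefore takes two steps: computing the slot, then finding the node that owns the slot.

Computing the Slot

Redis first computes the slot for the key. It applies the CRC16 function to the effective part of the key and takes the result modulo 16384, which is the same as a bitwise AND with 16383 (0x3FFF). This maps every key into the slot range 0 to 16383.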

<?php

class cluster
{
    /**
     * collection of nodes
     * @var clusterNode[]
     */
    public $nodes = [];
    public $size;
    public $failover_auth_time;
    public $failover_auth_rank;
    public $currentEpoch;

    public function __construct() {}

    public function keyHashSlot($key)
    {
        // crc16($buf, $len) stands for the CRC16 routine (CRC16::redisCRC16 in the code below)
        $keylen = strlen($key);

        // position of the first '{'
        for ($s = 0; $s < $keylen; $s++)
            if ($key[$s] == '{') break;

        // no '{': hash the whole key
        if ($s == $keylen) return crc16($key, $keylen) & 0x3FFF;

        // position of the first '}' after it
        for ($e = $s + 1; $e < $keylen; $e++)
            if ($key[$e] == '}') break;

        // no '}' or nothing between the braces ("{}"): hash the whole key
        if ($e == $keylen || $e == $s + 1) return crc16($key, $keylen) & 0x3FFF;

        // hash only the characters between { and }
        return crc16(substr($key, $s + 1, $e - $s - 1), $e - $s - 1) & 0x3FFF;
    }
}

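As the pseudocode shows, if the key contains { and } with at least one character between them, only the content inside the braces is used to compute the slot. Otherwise the whole key is used.

The cluster keyslot command is implemented with the keyHashSlot function: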

[root@localhost ~]# redis-cli -h 192.160.1.200

192.160.1.200:6379> cluster keyslot ytl

(integer) 12078

192.160.1.200:6379> cluster keyslot y{t}l

(integer) 15891

192.160.1.200:6379> cluster keyslot t

(integer) 15891

192.160.1.200:6379>
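The numbers above can be checked without a cluster using a self-contained PHP sketch of the key-to-slot mapping. The sketch computes CRC16 bit by bit instead of with a lookup table. It uses the XMODEM variant (polynomial 0x1021, initial value 0), which is the variant the Redis Cluster specification uses, then keeps the low 14 bits. The function names are illustrative.

```php
<?php
// CRC16/XMODEM computed bit by bit: polynomial 0x1021, initial value 0.
function crc16(string $data): int
{
    $crc = 0;
    $len = strlen($data);
    for ($i = 0; $i < $len; $i++) {
        $crc ^= ord($data[$i]) << 8;               // feed the next byte into the high bits
        for ($bit = 0; $bit < 8; $bit++) {
            $crc = ($crc & 0x8000)
                ? (($crc << 1) ^ 0x1021) & 0xFFFF  // top bit set: shift and apply polynomial
                : ($crc << 1) & 0xFFFF;            // otherwise just shift
        }
    }
    return $crc;
}

// Slot for a key, honouring a non-empty {hash_tag}.
function keyHashSlot(string $key): int
{
    $s = strpos($key, '{');
    if ($s !== false) {
        $e = strpos($key, '}', $s + 1);
        if ($e !== false && $e > $s + 1) {
            $key = substr($key, $s + 1, $e - $s - 1);
        }
    }
    return crc16($key) & 0x3FFF;                   // same as % 16384
}

foreach (['t', 'y{t}l', '123456789'] as $k) {
    printf("%s -> %d\n", $k, keyHashSlot($k));
}
```

t and y{t}l both come out as 15891, matching the redis-cli output above. 123456789 gives 12739 (CRC16 value 0x31C3).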

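In cluster keyslot y{t}l, which has the form key{hash_tag}, the content inside the braces is called the hash_tag. It lets different keys share the same slot and is commonly used for batch operations.

For example, when mset/mget are used in cluster mode to batch calls, all keys in the list must map to the same slot, otherwise the command fails. A hash_tag makes different keys map to the same slot: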

[root@localhost ~]# redis-cli -h 192.160.1.200 -c

192.160.1.200:6379> mset name{1000} u ok{1000} o

-> Redirected to slot [11326] located at 192.160.1.201:6379

OK

192.160.1.201:6379> mset name u ok o

(error) CROSSSLOT Keys in request don't hash to the same slot

192.160.1.201:6379> mget name{1000} ok{1000}

1) "u"

2) "o"

192.160.1.201:6379> mget name ok

(error) CROSSSLOT Keys in request don't hash to the same slot

192.160.1.201:6379>
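A client can catch CROSSSLOT problems before it sends mset/mget. One cheap check, sketched below with hypothetical function names, compares the part of each key that actually gets hashed. Identical parts guarantee the same slot. Different parts only usually mean different slots, so a real client would still compute the slots to be sure.

```php
<?php
// The substring of a key that is fed to CRC16 (the {hash_tag} if non-empty).
function hashedPart(string $key): string
{
    $s = strpos($key, '{');
    if ($s === false) {
        return $key;
    }
    $e = strpos($key, '}', $s + 1);
    return ($e !== false && $e > $s + 1) ? substr($key, $s + 1, $e - $s - 1) : $key;
}

// true only when every key hashes the same substring, i.e. same slot for sure
function guaranteedSameSlot(array $keys): bool
{
    return count(array_unique(array_map('hashedPart', $keys))) <= 1;
}

var_dump(guaranteedSameSlot(['name{1000}', 'ok{1000}']));  // bool(true)
var_dump(guaranteedSameSlot(['name', 'ok']));              // bool(false)
```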

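Finding the Node for a Slot

After computing the slot for a key, Redis must find the node that owns that slot. Through message exchange, every node in the cluster knows the slot assignment of all nodes. It keeps this information internally in the clusterState structure:

typedef struct clusterState {

clusterNode *myself; /* This node */

clusterNode *slots[CLUSTER_SLOTS]; /* slot -> node mapping array */

...

} clusterState;

Illustrated with pseudocode: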

<?php

class cluster
{
    /**
     * collection of nodes
     * @var clusterNode[]
     */
    public $nodes = [];
    public $myself;
    // ...

    /**
     * [
     *   slot number => node that owns the slot
     * ]
     * @var array
     */
    public $slots = [];

    public function __construct() {}

    public function keyHashSlot($key)
    {
        // ....
    }

    // request redirection: run the command here or answer with MOVED
    public function clusterRedirectBlockedClientIfNeeded($c)
    {
        // get the key of the command and compute its slot
        $slot = $this->keyHashSlot($this->dictGetKey());
        // look up the node that owns the slot
        $node = $this->slots[$slot];
        if ($node == $this->myself) {
            // our own slot: execute the command locally
            return $this->dictReleaseIterator();
        }
        // another node owns it: tell the client where to go
        return "MOVED {$slot} {$node->ip}:{$node->port}";
    }

    public function dictGetKey()
    {
        return "key";
    }

    public function dictReleaseIterator() {}
}

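As the code shows, both the decision to execute a key command locally and the MOVED redirection rely on the slots[CLUSTER_SLOTS] array. Thanks to MOVED redirection, a client can connect to any Redis node in the cluster and still find the node that holds a key.

Client Connections

Approach:

The client uses the plain Redis class, not RedisCluster (RedisCluster is left as an exercise after class).

Key point: key => slot (a group of data identified via crc16) => node (ip:port)

1) Initialization: open the connections and build the slot mapping (which slots live on which nodes).

2) Command execution:

2.1 compute the slot from the key

2.2 look up the node by slot and return its connection

2.3 execute the command

3) Maintenance: periodically monitor the slot mapping and maintain the list of cluster nodes.

- With Swoole: keep the mapping in object properties.

- With FPM: keep the mapping in a configuration file.

Directory structure

Code: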

Cluster/RedisMS.php

<?php

namespace Shineyork\Redis\Cluster;

use Shineyork\Redis\Cluster\Tratis\Sentinel;

use Shineyork\Redis\Cluster\Tratis\MS;

use Shineyork\Redis\Cluster\Tratis\Cluster;

class RedisMS

{

use Sentinel;

use Cluster;

use MS;

protected $config;

/**

* Redis connections:

* [

* "master" => \Redis,

* "slaves" => [

* 'slaveIP1:port' => \Redis

* 'slaveIP2:port' => \Redis

* 'slaveIP3:port' => \Redis

* ],

* 'sentinel' => [

*

* ],

* ]

*/

protected $connections;

protected $connIndexs;

public function __construct($config)

{

$this->config = $config;

$this->{$this->config['initType'] . "Init"}();

}

// --- initialization ---

protected function isNormalInit()

{

$this->connections['master'] = $this->getRedis($this->config['master']['host'], $this->config['master']['port']);

}

/**

* Periodically maintain the replica list

*/

protected function maintain()

{

// every 2000 ms: refresh sentinel info when running in sentinel mode, then call delay()

swoole_timer_tick(2000, function ($timer_id) {

if ($this->config['initType'] == 'isSentinel') {

Input::info("sentinel check");

$this->sentinelInit();

}

$this->delay();

});

}

protected function stringToArr($str, $flag1 = ',', $flag2 = '=')

{

$arr = explode($flag1, $str);

$ret = [];

foreach ($arr as $key => $value) {

$arr2 = explode($flag2, $value);

$ret[$arr2[0]] = $arr2[1];

}

return $ret;

}

protected function createConn($conns, $flag = 'slaves')

{

foreach ($conns as $key => $conn) {

if ($redis = $this->getRedis($conn['host'], $conn['port'])) {

$this->connections[$flag][$this->redisFlag($conn['host'], $conn['port'])] = $redis;

}

}

$this->connIndexs[$flag] = array_keys($this->connections[$flag] ?? []);

}

protected function redisFlag($host, $port)

{

return $host . ":" . $port;

}

public function getRedis($host, $port)

{

try {

$redis = new \Redis();

// pconnect() may return false instead of throwing when the connection fails

if (!$redis->pconnect($host, $port)) {

return null;

}

return $redis;

} catch (\Exception $e) {

Input::info($this->redisFlag($host, $port), "connection failed");

return null;

}

}

public function getconnIndexs()

{

return $this->connIndexs;

}

public function getMaster()

{

return $this->connections['master'];

}

public function getSlaves()

{

return $this->connections['slaves'];

}

public function oneConn($flag = 'slaves', $redisFlag = null)

{

if (!empty($redisFlag)) {

return $this->connections[$flag][$redisFlag];

}

$indexs = $this->connIndexs[$flag];

$i = mt_rand(0, count($indexs) - 1);

Input::info($indexs[$i], "selected connection");

return $this->connections[$flag][$indexs[$i]];

}

public function runCall($command, $params = [])

{

try {

$redis = $this->{$this->config['runCall'][$this->config['initType']]}($command, $params);

return $redis->{$command}(...$params);

} catch (\Exception $e) {

// do not swallow the error silently

Input::info($e->getMessage(), "command failed");

return null;

}

}

}

Cluster/CRC16.php

<?php

namespace Shineyork\Redis\Cluster;

class CRC16

{

protected static $crc_table=array(

0x0000,0x1021,0x2042,0x3063,0x4084,0x50a5,0x60c6,0x70e7,

0x8108,0x9129,0xa14a,0xb16b,0xc18c,0xd1ad,0xe1ce,0xf1ef,

0x1231,0x0210,0x3273,0x2252,0x52b5,0x4294,0x72f7,0x62d6,

0x9339,0x8318,0xb37b,0xa35a,0xd3bd,0xc39c,0xf3ff,0xe3de,

0x2462,0x3443,0x0420,0x1401,0x64e6,0x74c7,0x44a4,0x5485,

0xa56a,0xb54b,0x8528,0x9509,0xe5ee,0xf5cf,0xc5ac,0xd58d,

0x3653,0x2672,0x1611,0x0630,0x76d7,0x66f6,0x5695,0x46b4,

0xb75b,0xa77a,0x9719,0x8738,0xf7df,0xe7fe,0xd79d,0xc7bc,

0x48c4,0x58e5,0x6886,0x78a7,0x0840,0x1861,0x2802,0x3823,

0xc9cc,0xd9ed,0xe98e,0xf9af,0x8948,0x9969,0xa90a,0xb92b,

0x5af5,0x4ad4,0x7ab7,0x6a96,0x1a71,0x0a50,0x3a33,0x2a12,

0xdbfd,0xcbdc,0xfbbf,0xeb9e,0x9b79,0x8b58,0xbb3b,0xab1a,

0x6ca6,0x7c87,0x4ce4,0x5cc5,0x2c22,0x3c03,0x0c60,0x1c41,

0xedae,0xfd8f,0xcdec,0xddcd,0xad2a,0xbd0b,0x8d68,0x9d49,

0x7e97,0x6eb6,0x5ed5,0x4ef4,0x3e13,0x2e32,0x1e51,0x0e70,

0xff9f,0xefbe,0xdfdd,0xcffc,0xbf1b,0xaf3a,0x9f59,0x8f78,

0x9188,0x81a9,0xb1ca,0xa1eb,0xd10c,0xc12d,0xf14e,0xe16f,

0x1080,0x00a1,0x30c2,0x20e3,0x5004,0x4025,0x7046,0x6067,

0x83b9,0x9398,0xa3fb,0xb3da,0xc33d,0xd31c,0xe37f,0xf35e,

0x02b1,0x1290,0x22f3,0x32d2,0x4235,0x5214,0x6277,0x7256,

0xb5ea,0xa5cb,0x95a8,0x8589,0xf56e,0xe54f,0xd52c,0xc50d,

0x34e2,0x24c3,0x14a0,0x0481,0x7466,0x6447,0x5424,0x4405,

0xa7db,0xb7fa,0x8799,0x97b8,0xe75f,0xf77e,0xc71d,0xd73c,

0x26d3,0x36f2,0x0691,0x16b0,0x6657,0x7676,0x4615,0x5634,

0xd94c,0xc96d,0xf90e,0xe92f,0x99c8,0x89e9,0xb98a,0xa9ab,

0x5844,0x4865,0x7806,0x6827,0x18c0,0x08e1,0x3882,0x28a3,

0xcb7d,0xdb5c,0xeb3f,0xfb1e,0x8bf9,0x9bd8,0xabbb,0xbb9a,

0x4a75,0x5a54,0x6a37,0x7a16,0x0af1,0x1ad0,0x2ab3,0x3a92,

0xfd2e,0xed0f,0xdd6c,0xcd4d,0xbdaa,0xad8b,0x9de8,0x8dc9,

0x7c26,0x6c07,0x5c64,0x4c45,0x3ca2,0x2c83,0x1ce0,0x0cc1,

0xef1f,0xff3e,0xcf5d,0xdf7c,0xaf9b,0xbfba,0x8fd9,0x9ff8,

0x6e17,0x7e36,0x4e55,0x5e74,0x2e93,0x3eb2,0x0ed1,0x1ef0

);

public static function redisCRC16 ($ptr, $len){

$crc = 0;

for ($i = 0; $i < $len; $i++)

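// mask to 16 bits: PHP integers are 64-bit, unlike the uint16_t used by Redis's C code

$crc = (($crc << 8) & 0xFFFF) ^ self::$crc_table[(($crc >> 8) ^ ord($ptr[$i])) & 0x00FF];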

return $crc;

}

}
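To confirm that the hard-coded table above is a CRC16 table for polynomial 0x1021, it can be regenerated: entry i is the CRC of the single byte i. The small PHP sketch below (the function name is illustrative) prints a few entries to compare against the table.

```php
<?php
// Rebuild the 256-entry CRC16 lookup table for polynomial 0x1021.
function crc16Table(): array
{
    $table = [];
    for ($i = 0; $i < 256; $i++) {
        $crc = $i << 8;                            // the byte sits in the high 8 bits
        for ($bit = 0; $bit < 8; $bit++) {
            $crc = ($crc & 0x8000) ? (($crc << 1) ^ 0x1021) & 0xFFFF : ($crc << 1) & 0xFFFF;
        }
        $table[] = $crc;
    }
    return $table;
}

$t = crc16Table();
printf("0x%04x 0x%04x 0x%04x\n", $t[1], $t[0x74], $t[255]);   // 0x1021 0x3e13 0x1ef0
```

Entry 0x74 (the character t) is 0x3e13 = 15891, which is why cluster keyslot t returns 15891.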

Cluster/Tratis/Cluster.php

<?php

namespace Shineyork\Redis\Cluster\Tratis;

use Shineyork\Redis\Cluster\Input;

use Shineyork\Redis\Cluster\CRC16;

trait Cluster

{

protected $clusterlFlag = 'cluster';

/**

* Map from slot ranges to nodes:

* [

* 'slots-start:slots-end' => [

* 'm' => [

* 0 => 'ip:port',

* 1 => redisObject

* ],

* 's' => [

* [

* 'ip:port' => redisObject

* ]

* ]

* ]

* ]

* @var array

*/

protected $slots;

public function isClusterInit()

{

// 1. open connections to the configured nodes

$this->initClusterNodeConns($this->config['cluster']['nodes']);

// 2. build the slot-to-node map

$this->reshardSlotsNode();

}

// open connections

public function initClusterNodeConns($clusters)

{

$this->createConn($clusters, $this->clusterlFlag);

}

// build the slot-to-node map from CLUSTER SLOTS

public function reshardSlotsNode()

{

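// pick any node connection

$cluster = $this->oneConn($this->clusterlFlag);

// fetch the slot mapping; each entry is [start, end, [masterIp, masterPort, ...], replicas...]

$slotsInfo = $cluster->rawCommand('cluster', 'slots');

// Input::info($slotsInfo, 'slot mapping from CLUSTER SLOTS');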

foreach ($slotsInfo as $key => $slots) {

$masterFlag = $this->redisFlag($slots[2][0], $slots[2][1]);

// $slaveFlag = $this->redisFlag($slots[3][0], $slots[3][1]);

// key for this slot range, e.g. '0-5460'

$slotRang = $slots[0]."-".$slots[1];

$this->slots[$slotRang] = [

'm'=>[

$masterFlag,

$this->oneConn($this->clusterlFlag, $masterFlag)

]

// 's'

];

}

Input::info($this->slots, 'current slot-to-node map');

}

// compute the slot for the given key

public function keyHashSlot($key)

{

$s = $e =0;

$keylen = strlen($key);

for ($s = 0; $s < $keylen; $s++)

if ($key[$s] == '{') break;

// no '{': hash the whole key

if ($s == $keylen) return CRC16::redisCRC16($key,$keylen) % 16384;

for ($e = $s+1; $e < $keylen; $e++)

if ($key[$e] == '}') break;

// no '}' or nothing between the braces: hash the whole key

if ($e == $keylen || $e == $s+1) return CRC16::redisCRC16($key, $keylen) % 16384;

// hash only the content between { and }

return CRC16::redisCRC16(substr($key, $s + 1, $e - $s - 1), $e - $s - 1) % 16384;

}

// find the node that owns a slot

public function getNodeBySlot($slot)

{

// scan the slot ranges; null means the slot is not in the map (refresh it)

foreach ($this->slots as $slotRang => $slotInfo) {

$rang = \explode('-', $slotRang);

if ($slot >= $rang[0] && $slot <= $rang[1]) {

return $slotInfo;

}

}

return null;

}

public function clusterCommand($command, $params)

{

$slot = $this->keyHashSlot($params[0]);

Input::info($slot, $params[0].' maps to slot');

$nodes = $this->getNodeBySlot($slot);

Input::info($nodes, 'node for this slot');

return $nodes['m'][1];

}

}
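getNodeBySlot() scans every range on each lookup. A possible alternative, sketched below with hypothetical helper names, sorts the ranges from a CLUSTER SLOTS reply by start slot and binary-searches them. The sample reply is hand-written from the cluster nodes output in the request redirection section, with replica entries left out. A null result is treated as a signal that the map is stale and should be refreshed.

```php
<?php
// Turn a CLUSTER SLOTS style reply ([start, end, [ip, port, ...], ...]) into
// [start, end, "ip:port"] ranges sorted by start slot.
function buildRanges(array $slotsInfo): array
{
    $ranges = [];
    foreach ($slotsInfo as $entry) {
        $ranges[] = [(int) $entry[0], (int) $entry[1], $entry[2][0] . ':' . $entry[2][1]];
    }
    usort($ranges, fn($a, $b) => $a[0] <=> $b[0]);
    return $ranges;
}

// Binary search for the range containing $slot; null if it is not covered.
function ownerOf(array $ranges, int $slot): ?string
{
    $lo = 0;
    $hi = count($ranges) - 1;
    while ($lo <= $hi) {
        $mid = intdiv($lo + $hi, 2);
        [$start, $end, $addr] = $ranges[$mid];
        if ($slot < $start) {
            $hi = $mid - 1;
        } elseif ($slot > $end) {
            $lo = $mid + 1;
        } else {
            return $addr;
        }
    }
    return null;   // stale map: refresh it with CLUSTER SLOTS
}

$reply = [
    [497, 1332, ['192.160.1.200', 6379]],
    [1584, 6209, ['192.160.1.200', 6379]],
    [0, 496, ['192.160.1.202', 6379]],
    [1333, 1583, ['192.160.1.202', 6379]],
    [6210, 6794, ['192.160.1.202', 6379]],
    [12256, 16383, ['192.160.1.202', 6379]],
    [6795, 12255, ['192.160.1.201', 6379]],
];
$ranges = buildRanges($reply);
echo ownerOf($ranges, 6301), PHP_EOL;    // 192.160.1.202:6379
echo ownerOf($ranges, 11326), PHP_EOL;   // 192.160.1.201:6379
```

Both results agree with the redirects seen earlier: slot 6301 goes to 192.160.1.202 and slot 11326 to 192.160.1.201.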
