Recommended visualization tool: TensorBoard

Note: this post uses two networks, AlexNet and ResNet34. For details on each network, see my two other blog posts:

- ResNet: Classic CNN Network Models Explained (PyTorch implementation)

- AlexNet: Classic CNN Network Models Explained (PyTorch implementation)

Viewing intermediate-layer feature maps

AlexNet

alexnet_model.py

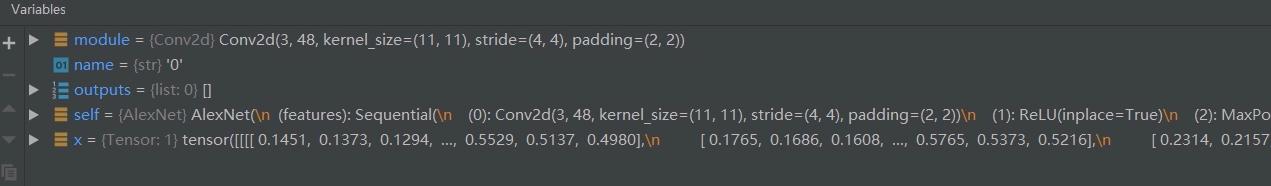

```python
import torch
import torch.nn as nn


class AlexNet(nn.Module):
    def __init__(self, num_classes=1000, init_weights=False):
        super(AlexNet, self).__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 48, kernel_size=11, stride=4, padding=2),  # input[3, 224, 224]  output[48, 55, 55]
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),                  # output[48, 27, 27]
            nn.Conv2d(48, 128, kernel_size=5, padding=2),           # output[128, 27, 27]
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),                  # output[128, 13, 13]
            nn.Conv2d(128, 192, kernel_size=3, padding=1),          # output[192, 13, 13]
            nn.ReLU(inplace=True),
            nn.Conv2d(192, 192, kernel_size=3, padding=1),          # output[192, 13, 13]
            nn.ReLU(inplace=True),
            nn.Conv2d(192, 128, kernel_size=3, padding=1),          # output[128, 13, 13]
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),                  # output[128, 6, 6]
        )
        self.classifier = nn.Sequential(
            nn.Dropout(p=0.5),
            nn.Linear(128 * 6 * 6, 2048),
            nn.ReLU(inplace=True),
            nn.Dropout(p=0.5),
            nn.Linear(2048, 2048),
            nn.ReLU(inplace=True),
            nn.Linear(2048, num_classes),
        )
        if init_weights:
            self._initialize_weights()

    def forward(self, x):
        # Modified for visualization: run only self.features and collect the
        # outputs of the first three Conv2d layers (children "0", "3", "6").
        # The classifier is not used here.
        outputs = []
        for name, module in self.features.named_children():
            x = module(x)
            if name in ["0", "3", "6"]:
                # The next child is ReLU(inplace=True), which modifies this same
                # tensor in place, so the stored map ends up post-ReLU.
                outputs.append(x)
        return outputs

    def _initialize_weights(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
                if m.bias is not None:
                    nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):
                nn.init.normal_(m.weight, 0, 0.01)
                nn.init.constant_(m.bias, 0)
```

Set a breakpoint at `for name, module in self.features.named_children():` to confirm that the names collected in `outputs` ("0", "3", "6") belong to Conv2d layers.
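The conv indices can also be checked by hand instead of with a breakpoint. `nn.Sequential` names its positional children "0", "1", "2", ... in order, so the Conv2d layers are children 0, 3, 6, 8 and 10. The `# output[...]` comments follow from the usual output-size formula floor((in + 2*padding - kernel) / stride) + 1. The sketch below is plain Python and does not need PyTorch. `FEATURES` is a hand-written mirror of `self.features`, and `out_size` and `trace_shapes` are hypothetical helpers, not part of the original files.

```python
# Hand-written mirror of AlexNet.features: (child name, layer type, settings).
# Hypothetical helper data, not part of the original alexnet_model.py.
FEATURES = [
    ("0", "conv", {"out_ch": 48, "kernel": 11, "stride": 4, "padding": 2}),
    ("1", "relu", {}),
    ("2", "pool", {"kernel": 3, "stride": 2, "padding": 0}),
    ("3", "conv", {"out_ch": 128, "kernel": 5, "stride": 1, "padding": 2}),
    ("4", "relu", {}),
    ("5", "pool", {"kernel": 3, "stride": 2, "padding": 0}),
    ("6", "conv", {"out_ch": 192, "kernel": 3, "stride": 1, "padding": 1}),
    ("7", "relu", {}),
    ("8", "conv", {"out_ch": 192, "kernel": 3, "stride": 1, "padding": 1}),
    ("9", "relu", {}),
    ("10", "conv", {"out_ch": 128, "kernel": 3, "stride": 1, "padding": 1}),
    ("11", "relu", {}),
    ("12", "pool", {"kernel": 3, "stride": 2, "padding": 0}),
]


def out_size(size, kernel, stride, padding):
    """Output height/width of Conv2d or MaxPool2d (dilation=1, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_shapes(size=224, channels=3):
    """Return {child name: (C, H, W)} for a square input image."""
    shapes = {}
    for name, kind, cfg in FEATURES:
        if kind in ("conv", "pool"):
            size = out_size(size, cfg["kernel"], cfg["stride"], cfg["padding"])
        if kind == "conv":
            channels = cfg["out_ch"]
        shapes[name] = (channels, size, size)  # ReLU keeps the shape
    return shapes


conv_names = [name for name, kind, _ in FEATURES if kind == "conv"]
shapes = trace_shapes()
print(conv_names)                            # ['0', '3', '6', '8', '10']
print([shapes[n] for n in ["0", "3", "6"]])  # [(48, 55, 55), (128, 27, 27), (192, 13, 13)]
print(shapes["12"])                          # (128, 6, 6)
```

The three shapes for children "0", "3" and "6" should match the feature maps returned by the modified `forward`, plus the batch dimension. The final 128 * 6 * 6 = 4608 is the input size of the first `nn.Linear`.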

analyze_feature_map.py

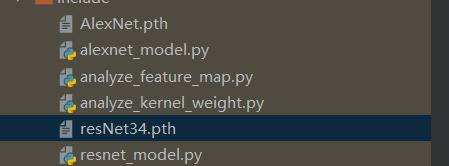

```python
import torch
from alexnet_model import AlexNet
from resnet_model import resnet34
import matplotlib.pyplot as plt
import numpy as np
from PIL import Image
from torchvision import transforms

# Image preprocessing: must match the preprocessing used during the training
# run that produced AlexNet.pth
data_transform = transforms.Compose(
    [transforms.Resize((224, 224)),
     transforms.ToTensor(),
     transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

# data_transform = transforms.Compose(
#     [transforms.Resize(256),
#      transforms.CenterCrop(224),
#      transforms.ToTensor(),
#      transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])

# create model
model = AlexNet(num_classes=5)
# model = resnet34(num_classes=5)
# load model weights
model_weight_path = "./AlexNet.pth"  # "./resNet34.pth"
# map_location="cpu" lets GPU-trained weights load on a CPU-only machine
model.load_state_dict(torch.load(model_weight_path, map_location="cpu"))
model.eval()  # inference mode (matters for Dropout/BatchNorm layers)
print(model)

# load image (convert guards against RGBA or grayscale files)
img = Image.open("roses.jpg").convert("RGB")
# [C, H, W]
img = data_transform(img)
# expand batch dimension: [C, H, W] -> [N, C, H, W]
img = torch.unsqueeze(img, dim=0)

# forward
with torch.no_grad():
    out_put = model(img)
for feature_map in out_put:
    # [N, C, H, W] -> [C, H, W]
    im = np.squeeze(feature_map.detach().numpy())
    # [C, H, W] -> [H, W, C]
    im = np.transpose(im, [1, 2, 0])

    # show the first 12 feature maps (channels)
    plt.figure()
    for i in range(12):
        ax = plt.subplot(3, 4, i + 1)  # rows, columns, index
        # [H, W, C]
        plt.imshow(im[:, :, i], cmap='gray')  # without cmap, the default colormap (viridis) is used
    plt.show()
```

The two `.pth` files used above are produced by training the models, so the directory should look like this:

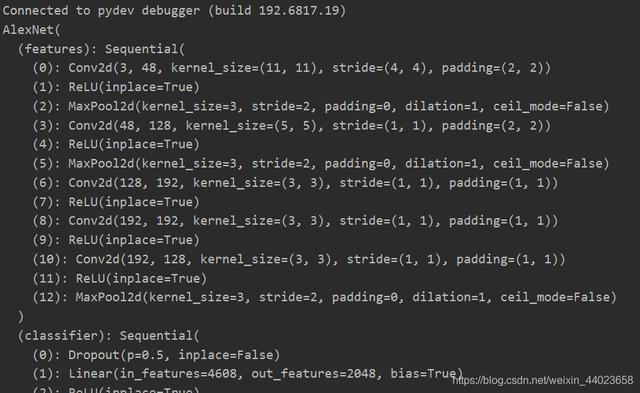
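The preprocessing has to match training because `ToTensor()` first scales 8-bit pixel values from [0, 255] to [0, 1]. `Normalize(mean, std)` then computes (x - mean) / std per channel. With mean = std = 0.5 (the AlexNet transform), inputs land in [-1, 1]. With the ImageNet statistics used for ResNet34, the range is different, so giving a model the other transform shifts its inputs. Below is a plain-Python sketch of that arithmetic for a single channel value. `normalize_pixel` is a hypothetical helper, not a torchvision function.

```python
def normalize_pixel(value_0_255, mean, std):
    """Mimic ToTensor() followed by Normalize() for a single 8-bit channel value."""
    x = value_0_255 / 255.0   # ToTensor(): [0, 255] -> [0, 1]
    return (x - mean) / std   # Normalize(): (x - mean) / std


# AlexNet transform: mean = std = 0.5 on every channel
alex_black = normalize_pixel(0, 0.5, 0.5)
alex_white = normalize_pixel(255, 0.5, 0.5)

# ResNet34 transform: ImageNet statistics, red channel
res_black = normalize_pixel(0, 0.485, 0.229)
res_white = normalize_pixel(255, 0.485, 0.229)

print(alex_black, alex_white)                    # -1.0 1.0
print(round(res_black, 3), round(res_white, 3))  # -2.118 2.249
```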

Set a breakpoint at `out_put = model(img)` to inspect the output of `print(model)`:

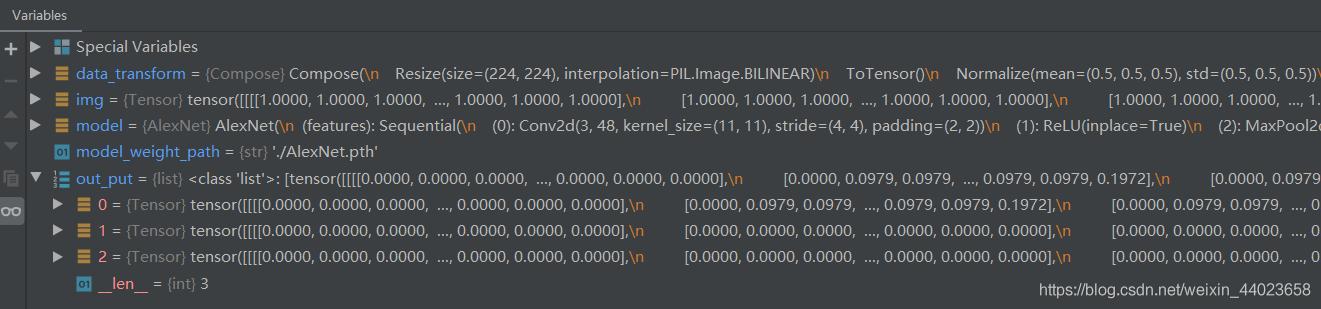

Set a breakpoint at `for feature_map in out_put:` to inspect `out_put`, the list returned by `model(img)`:

Original image:

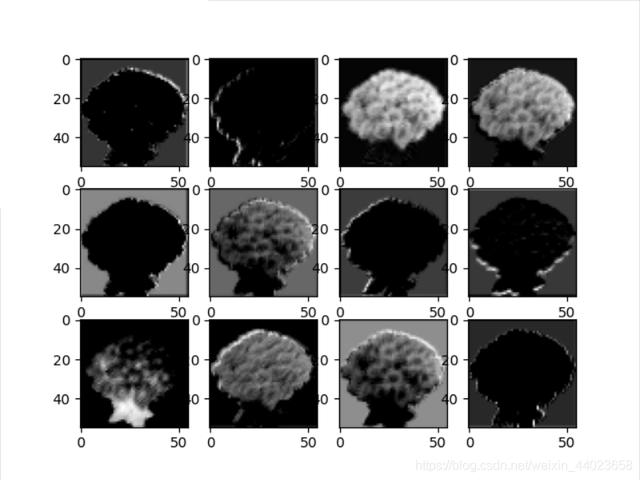

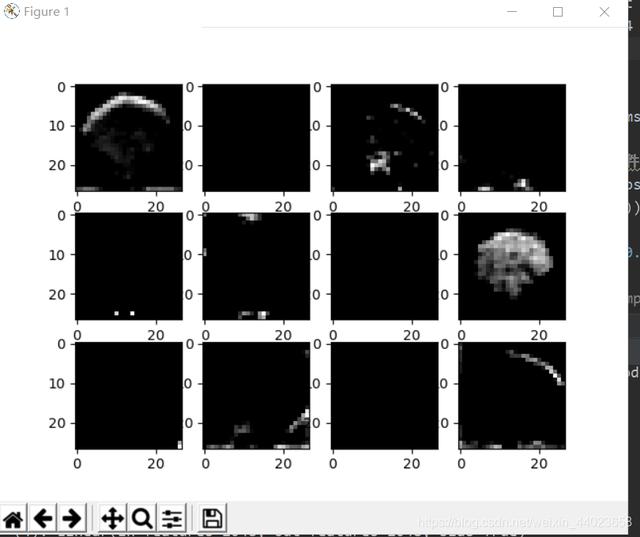

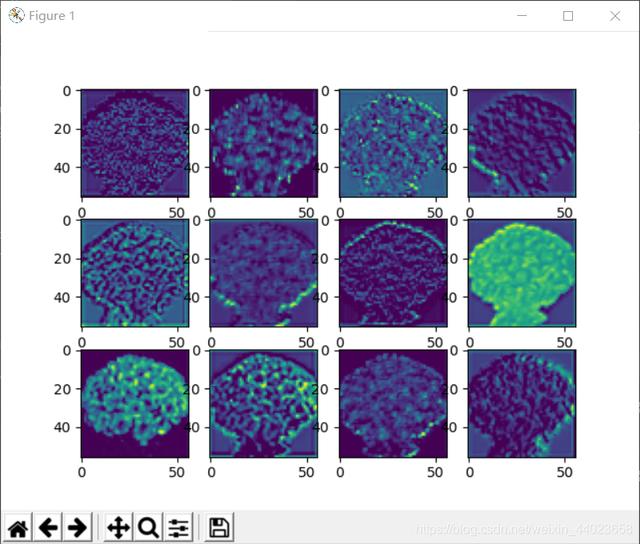

The outputs are: conv1:

conv2:

conv3:

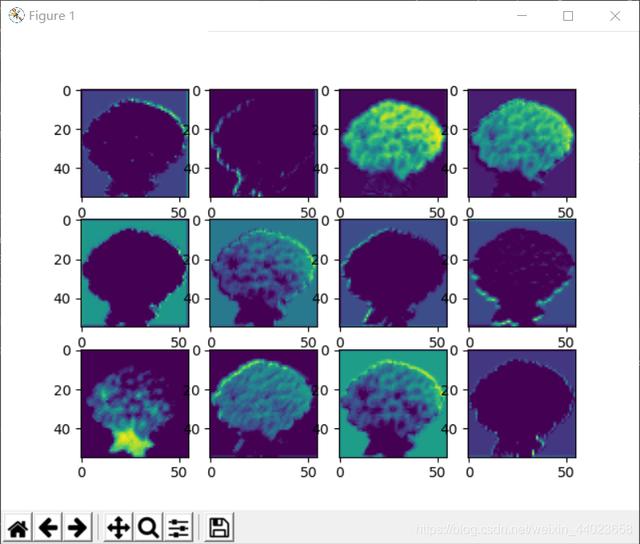

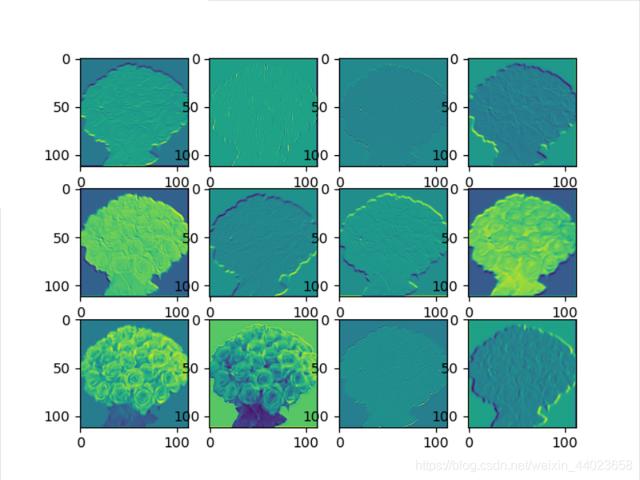

Result with `cmap='gray'` removed from `plt.imshow(im[:, :, i], cmap='gray')`. Matplotlib then falls back to its default colormap, viridis.
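With its default linear normalization, `plt.imshow` maps the smallest value in each 2-D feature map to the darkest colour and the largest to the brightest. Each subplot is therefore scaled independently. `cmap` only chooses which colours are used (gray or viridis). Below is a plain-Python sketch of that min-max scaling to 8-bit gray levels. `to_gray_levels` is a hypothetical helper, and matplotlib's internal quantization may differ in detail.

```python
def to_gray_levels(feature_map):
    """Linearly rescale a 2-D list of floats to integer gray levels 0..255.

    Mirrors the idea behind imshow's default normalization (vmin = data min,
    vmax = data max). A constant map is returned as all zeros in this sketch.
    """
    flat = [v for row in feature_map for v in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0:
        return [[0 for _ in row] for row in feature_map]
    return [[round(255 * (v - lo) / span) for v in row] for row in feature_map]


fmap = [[-2.0, 0.0],
        [1.0, 6.0]]
print(to_gray_levels(fmap))  # [[0, 64], [96, 255]]
```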

ResNet34

resnet_model.py

```python
import torch
import torch.nn as nn


class BasicBlock(nn.Module):
    expansion = 1

    def __init__(self, in_channel, out_channel, stride=1, downsample=None):
        super(BasicBlock, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,
                               kernel_size=3, stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channel)
        self.relu = nn.ReLU()
        self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,
                               kernel_size=3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channel)
        self.downsample = downsample

    def forward(self, x):
        identity = x
        if self.downsample is not None:
            identity = self.downsample(x)

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)

        out += identity
        out = self.relu(out)

        return out


class Bottleneck(nn.Module):
    expansion = 4

    def __init__(self, in_channel, out_channel, stride=1, downsample=None):
        super(Bottleneck, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,
                               kernel_size=1, stride=1, bias=False)  # squeeze channels
        self.bn1 = nn.BatchNorm2d(out_channel)
        # -----------------------------------------
        self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,
                               kernel_size=3, stride=stride, bias=False, padding=1)
        self.bn2 = nn.BatchNorm2d(out_channel)
        # -----------------------------------------
        self.conv3 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel * self.expansion,
                               kernel_size=1, stride=1, bias=False)  # unsqueeze channels
        self.bn3 = nn.BatchNorm2d(out_channel * self.expansion)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample

    def forward(self, x):
        identity = x
        if self.downsample is not None:
            identity = self.downsample(x)

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        out = self.conv3(out)
        out = self.bn3(out)

        out += identity
        out = self.relu(out)

        return out


class ResNet(nn.Module):

    def __init__(self, block, blocks_num, num_classes=1000, include_top=True):
        super(ResNet, self).__init__()
        self.include_top = include_top
        self.in_channel = 64

        self.conv1 = nn.Conv2d(3, self.in_channel, kernel_size=7, stride=2,
                               padding=3, bias=False)
        self.bn1 = nn.BatchNorm2d(self.in_channel)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        self.layer1 = self._make_layer(block, 64, blocks_num[0])
        self.layer2 = self._make_layer(block, 128, blocks_num[1], stride=2)
        self.layer3 = self._make_layer(block, 256, blocks_num[2], stride=2)
        self.layer4 = self._make_layer(block, 512, blocks_num[3], stride=2)
        if self.include_top:
            self.avgpool = nn.AdaptiveAvgPool2d((1, 1))  # output size = (1, 1)
            self.fc = nn.Linear(512 * block.expansion, num_classes)

        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

    def _make_layer(self, block, channel, block_num, stride=1):
        downsample = None
        if stride != 1 or self.in_channel != channel * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2d(self.in_channel, channel * block.expansion,
                          kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(channel * block.expansion))

        layers = []
        layers.append(block(self.in_channel, channel, downsample=downsample, stride=stride))
        self.in_channel = channel * block.expansion

        for _ in range(1, block_num):
            layers.append(block(self.in_channel, channel))

        return nn.Sequential(*layers)

    def forward(self, x):
        # Modified for visualization: collect the conv1 output and the layer1 output
        outputs = []
        x = self.conv1(x)
        outputs.append(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)

        x = self.layer1(x)  # only inspect layer1
        outputs.append(x)
        # x = self.layer2(x)
        # x = self.layer3(x)
        # x = self.layer4(x)
        #
        # if self.include_top:
        #     x = self.avgpool(x)
        #     x = torch.flatten(x, 1)
        #     x = self.fc(x)

        return outputs


def resnet34(num_classes=1000, include_top=True):
    return ResNet(BasicBlock, [3, 4, 6, 3], num_classes=num_classes, include_top=include_top)


def resnet101(num_classes=1000, include_top=True):
    return ResNet(Bottleneck, [3, 4, 23, 3], num_classes=num_classes, include_top=include_top)
```

analyze_feature_map.py

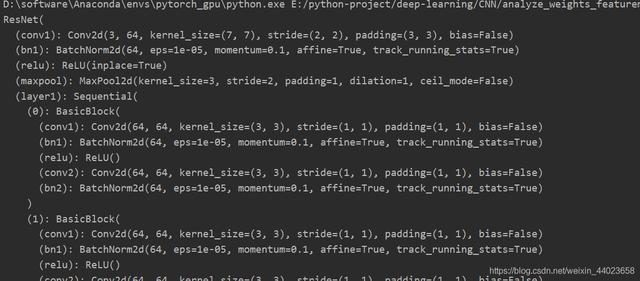

```python
import torch
from alexnet_model import AlexNet
from resnet_model import resnet34
import matplotlib.pyplot as plt
import numpy as np
from PIL import Image
from torchvision import transforms

# Image preprocessing: must match the preprocessing used when training the
# model whose weights are loaded below
# data_transform = transforms.Compose(
#     [transforms.Resize((224, 224)),
#      transforms.ToTensor(),
#      transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

data_transform = transforms.Compose(
    [transforms.Resize(256),
     transforms.CenterCrop(224),
     transforms.ToTensor(),
     transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])

# create model
# model = AlexNet(num_classes=5)
model = resnet34(num_classes=5)
# load model weights
model_weight_path = "./resNet34.pth"  # or "./AlexNet.pth"
# map_location="cpu" lets GPU-trained weights load on a CPU-only machine
model.load_state_dict(torch.load(model_weight_path, map_location="cpu"))
model.eval()  # BatchNorm must use its running statistics, not batch statistics
print(model)

# load image (convert guards against RGBA or grayscale files)
img = Image.open("roses.jpg").convert("RGB")
# [C, H, W]
img = data_transform(img)
# expand batch dimension: [C, H, W] -> [N, C, H, W]
img = torch.unsqueeze(img, dim=0)

# forward
with torch.no_grad():
    out_put = model(img)
for feature_map in out_put:
    # [N, C, H, W] -> [C, H, W]
    im = np.squeeze(feature_map.detach().numpy())
    # [C, H, W] -> [H, W, C]
    im = np.transpose(im, [1, 2, 0])

    # show the first 12 feature maps (channels)
    plt.figure()
    for i in range(12):
        ax = plt.subplot(3, 4, i + 1)  # rows, columns, index
        # [H, W, C]
        plt.imshow(im[:, :, i])  # no cmap given, so the default colormap (viridis) is used
    plt.show()
```

Setting a breakpoint at `img = Image.open("roses.jpg")` shows the printed model structure:

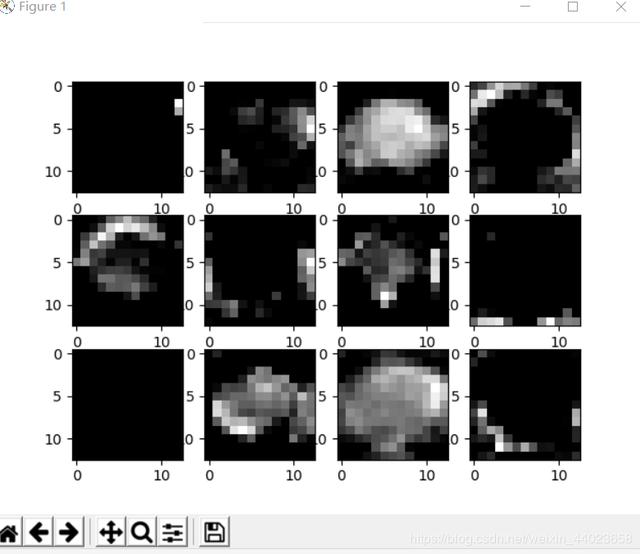
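In the printed structure, only some layers have a `downsample` branch. That follows from the condition in `_make_layer`: the first block of a stage gets a 1x1 conv shortcut when `stride != 1` or when `self.in_channel != channel * block.expansion`. The plain-Python sketch below replays that condition for resnet34 (BasicBlock, expansion 1) and resnet101 (Bottleneck, expansion 4). It also counts the weighted layers that give the networks their names: the stem conv, the convs inside the residual blocks, and the final fc. `downsample_plan` and `depth` are hypothetical helpers, not part of resnet_model.py.

```python
STAGES = [(64, 1), (128, 2), (256, 2), (512, 2)]  # (channel, stride) for layer1..layer4


def downsample_plan(expansion, in_channel=64):
    """Replay _make_layer's shortcut condition for the first block of each stage."""
    plan = []
    for channel, stride in STAGES:
        plan.append(stride != 1 or in_channel != channel * expansion)
        in_channel = channel * expansion  # updated the same way as in _make_layer
    return plan


def depth(convs_per_block, blocks_num):
    """conv1 + convs inside residual blocks + final fc (1x1 shortcut convs not counted)."""
    return 1 + convs_per_block * sum(blocks_num) + 1


print(downsample_plan(expansion=1))  # resnet34:  [False, True, True, True]
print(downsample_plan(expansion=4))  # resnet101: [True, True, True, True]
print(depth(2, [3, 4, 6, 3]))        # 34
print(depth(3, [3, 4, 23, 3]))       # 101
```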

The final output is:

Comparing the two sets of feature maps, the ResNet ones look better than the AlexNet ones.
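One concrete difference between the two sets of maps is spatial resolution. A 224x224 input gives 55x55 maps after AlexNet's first conv (11x11, stride 4, padding 2). ResNet34's conv1 (7x7, stride 2, padding 3) gives 112x112, and its max-pool gives 56x56. layer1 keeps that size because its 3x3 convs use stride 1 and padding 1. The plain-Python check below uses the same output-size formula for conv and pool layers. `out_size` is a hypothetical helper.

```python
def out_size(size, kernel, stride, padding):
    """Output height/width of Conv2d or MaxPool2d (dilation=1, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


alex_conv1 = out_size(224, kernel=11, stride=4, padding=2)
res_conv1 = out_size(224, kernel=7, stride=2, padding=3)
res_pool = out_size(res_conv1, kernel=3, stride=2, padding=1)
res_layer1 = out_size(res_pool, kernel=3, stride=1, padding=1)  # BasicBlock 3x3 conv keeps the size

print(alex_conv1, res_conv1, res_pool, res_layer1)  # 55 112 56 56
```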

Viewing convolution kernel parameters

AlexNet

analyze_kernel_weight.py

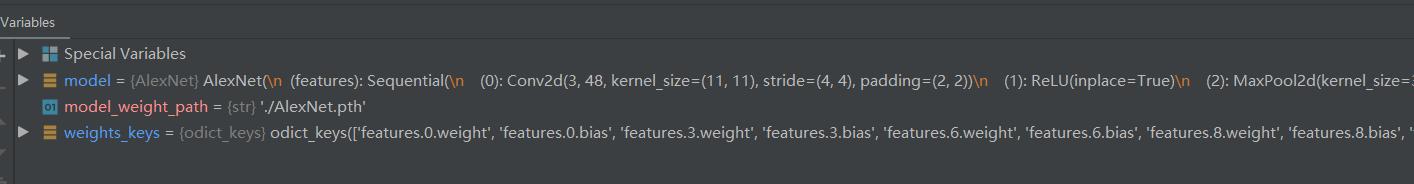

```python
import torch
from alexnet_model import AlexNet
from resnet_model import resnet34
import matplotlib.pyplot as plt
import numpy as np

# create model
model = AlexNet(num_classes=5)
# model = resnet34(num_classes=5)
# load model weights
model_weight_path = "./AlexNet.pth"  # "resNet34.pth"
# map_location="cpu" lets GPU-trained weights load on a CPU-only machine
model.load_state_dict(torch.load(model_weight_path, map_location="cpu"))
print(model)

weights_keys = model.state_dict().keys()
for key in weights_keys:
    # skip num_batches_tracked (a step counter stored by BN layers, not a weight)
    if "num_batches_tracked" in key:
        continue
    # conv weight layout: [kernel_number, kernel_channel, kernel_height, kernel_width]
    weight_t = model.state_dict()[key].numpy()

    # read a single kernel
    # k = weight_t[0, :, :, :]

    # calculate mean, std, min, max
    weight_mean = weight_t.mean()
    weight_std = weight_t.std(ddof=1)
    weight_min = weight_t.min()
    weight_max = weight_t.max()
    print("mean is {}, std is {}, min is {}, max is {}".format(weight_mean,
                                                               weight_std,
                                                               weight_min,
                                                               weight_max))

    # plot histogram
    plt.close()
    weight_vec = np.reshape(weight_t, [-1])
    plt.hist(weight_vec, bins=50)
    plt.title(key)
    plt.show()
```

Set a breakpoint at `weights_keys = model.state_dict().keys()` and step through to inspect `weights_keys`:

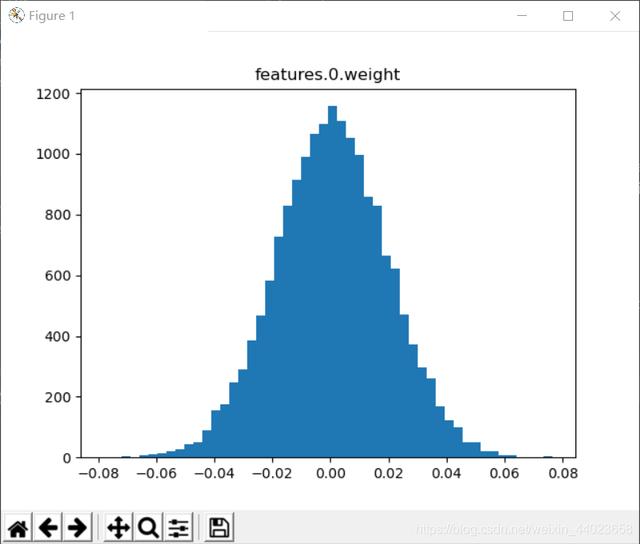

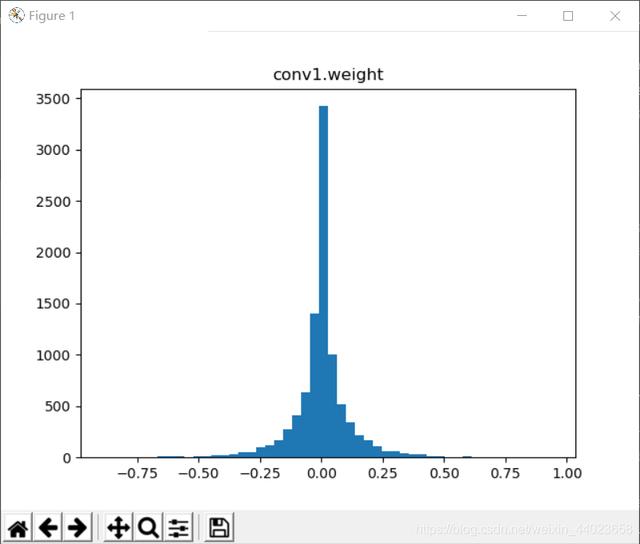

Value distribution of the first conv layer's kernel weights

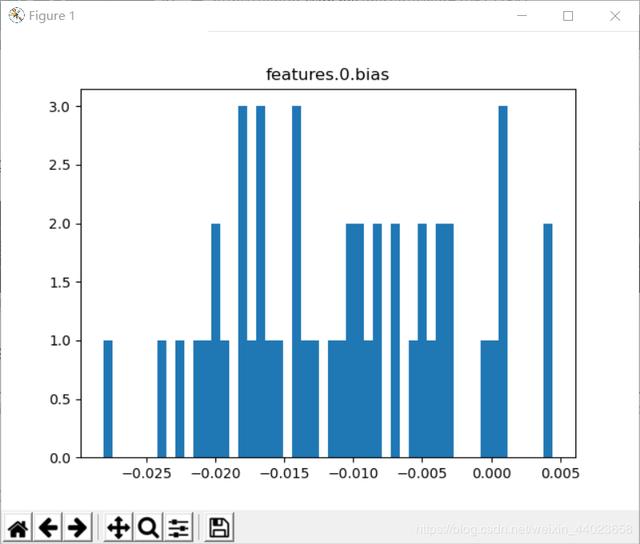

Value distribution of the first conv layer's biases

The remaining layers are visualized the same way.
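Each layer prints four numbers: the mean, the sample standard deviation (`ddof=1` divides by n - 1), the minimum and the maximum. `plt.hist(..., bins=50)` splits [min, max] into 50 equal-width bins. The sketch below computes the same statistics and bin counts with the standard library only. It assumes NumPy's convention that the last bin also includes the right edge. Counts may differ from NumPy exactly at bin edges because of floating-point rounding. `weight_summary` and `histogram_counts` are hypothetical helpers.

```python
import statistics


def weight_summary(values):
    """mean, sample std (ddof=1), min, max of a flat list of weights."""
    return (statistics.mean(values), statistics.stdev(values),
            min(values), max(values))


def histogram_counts(values, bins=50):
    """Equal-width bin counts over [min, max]; the last bin includes max."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins
    counts = [0] * bins
    for v in values:
        idx = int((v - lo) / width) if width > 0 else 0
        counts[min(idx, bins - 1)] += 1  # clamp v == max into the last bin
    return counts


weights = [-0.2, -0.1, 0.0, 0.0, 0.1, 0.3]
mean, std, wmin, wmax = weight_summary(weights)
print("mean is {}, std is {}, min is {}, max is {}".format(mean, std, wmin, wmax))
print(histogram_counts(weights, bins=5))
```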

ResNet34

analyze_kernel_weight.py

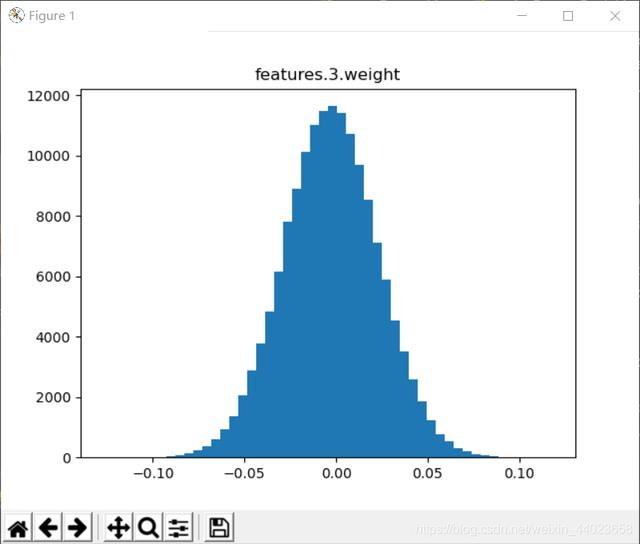

```python
import torch
from alexnet_model import AlexNet
from resnet_model import resnet34
import matplotlib.pyplot as plt
import numpy as np

# create model
# model = AlexNet(num_classes=5)
model = resnet34(num_classes=5)
# load model weights
model_weight_path = "./resNet34.pth"  # "resNet34.pth"
# map_location="cpu" lets GPU-trained weights load on a CPU-only machine
model.load_state_dict(torch.load(model_weight_path, map_location="cpu"))
print(model)

weights_keys = model.state_dict().keys()
for key in weights_keys:
    # skip num_batches_tracked (a step counter stored by BN layers, not a weight)
    if "num_batches_tracked" in key:
        continue
    # conv weight layout: [kernel_number, kernel_channel, kernel_height, kernel_width]
    weight_t = model.state_dict()[key].numpy()

    # read a single kernel
    # k = weight_t[0, :, :, :]

    # calculate mean, std, min, max
    weight_mean = weight_t.mean()
    weight_std = weight_t.std(ddof=1)
    weight_min = weight_t.min()
    weight_max = weight_t.max()
    print("mean is {}, std is {}, min is {}, max is {}".format(weight_mean,
                                                               weight_std,
                                                               weight_min,
                                                               weight_max))

    # plot histogram
    plt.close()
    weight_vec = np.reshape(weight_t, [-1])
    plt.hist(weight_vec, bins=50)
    plt.title(key)
    plt.show()
```

Convolutional layers:

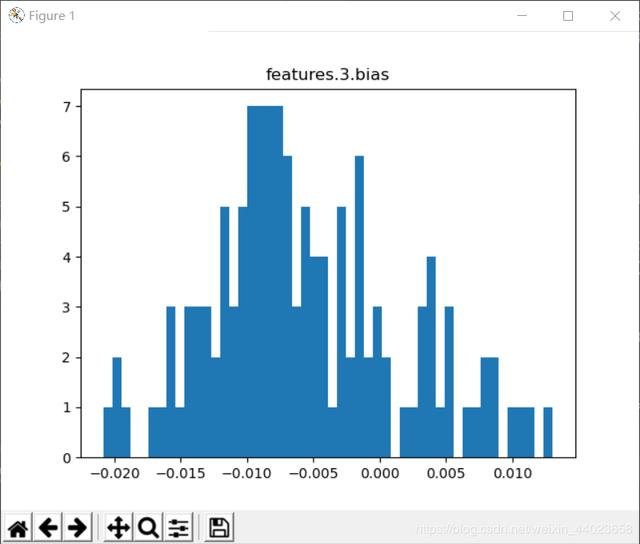

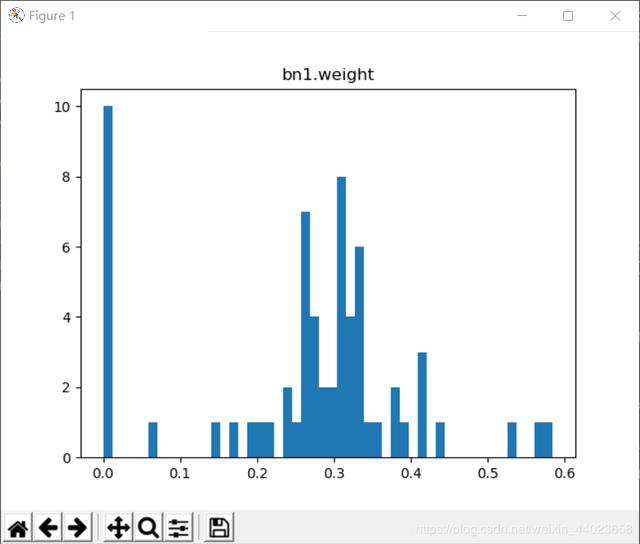

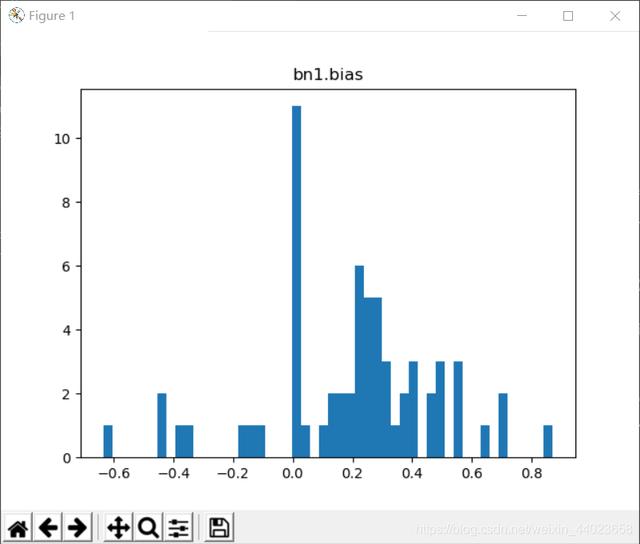

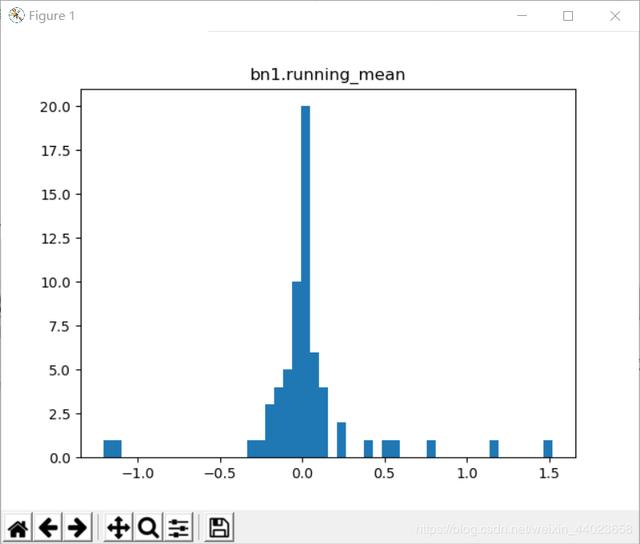
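The loop walks over keys that come from the attribute names in resnet_model.py. `self.conv1` yields `conv1.weight`, with no bias entry because `bias=False`. `self.bn1` yields `bn1.weight`, `bn1.bias`, `bn1.running_mean`, `bn1.running_var` and `bn1.num_batches_tracked`. The last is a 0-d step counter rather than a learned value, which is why the loop skips it. The sketch below uses a hand-written dict of those first keys and their shapes, derived from the architecture (hypothetical data, not read from the `.pth` file). It shows the filtering and how many values go into each histogram. `histogram_inputs` is a hypothetical helper.

```python
from math import prod

# Shapes of the first few ResNet34 state_dict entries, written out by hand.
FIRST_KEYS = {
    "conv1.weight": (64, 3, 7, 7),  # [kernel_number, kernel_channel, kernel_height, kernel_width]
    "bn1.weight": (64,),
    "bn1.bias": (64,),
    "bn1.running_mean": (64,),
    "bn1.running_var": (64,),
    "bn1.num_batches_tracked": (),  # 0-d counter, skipped by the loop
}


def histogram_inputs(shapes):
    """Keys the loop would plot, mapped to the number of values in each histogram."""
    return {key: prod(shape) for key, shape in shapes.items()
            if "num_batches_tracked" not in key}


print(histogram_inputs(FIRST_KEYS))
# {'conv1.weight': 9408, 'bn1.weight': 64, 'bn1.bias': 64, 'bn1.running_mean': 64, 'bn1.running_var': 64}
```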

BN layers: if you need a refresher on BN, see the post "Batch Normalization (BN) Explained in Detail".

`weight` is the parameter γ

`bias` is the parameter β

`running_mean` is the running estimate of the mean μ

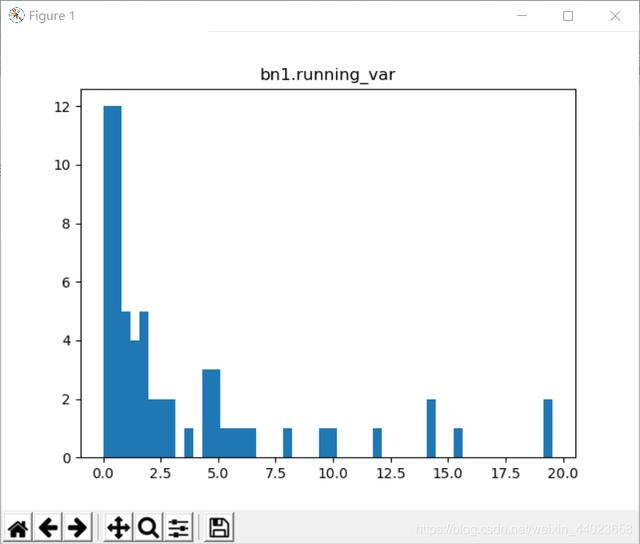

`running_var` is the running estimate of the variance σ²
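At inference time (`model.eval()`), `BatchNorm2d` combines these four stored tensors per channel as y = γ * (x - running_mean) / sqrt(running_var + eps) + β. PyTorch's default `eps` is 1e-5. Below is a plain-Python sketch for one value in one channel. `bn_eval` is a hypothetical helper, not a PyTorch function.

```python
import math


def bn_eval(x, gamma, beta, running_mean, running_var, eps=1e-5):
    """BatchNorm output in eval mode for a single value x of one channel."""
    return gamma * (x - running_mean) / math.sqrt(running_var + eps) + beta


# Example channel: gamma=2, beta=0.5, running_mean=1, running_var=4
y = bn_eval(3.0, gamma=2.0, beta=0.5, running_mean=1.0, running_var=4.0)
print(round(y, 4))  # 2.5
```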
