1、spark thriftserver报以下错误,其他诸如hive/sparksql等方式均正常

ERROR ActorSystemImpl: Uncaught fatal error from thread [sparkDriverActorSystem-akka.actor.default-dispatcher-379] shutting down ActorSystem [sparkDriverActorSystem]

java.lang.OutOfMemoryError: Java heap space

原因:thriftserver的堆内存不足

解决办法: 重启thriftserver,并调大executor-memory内存(不能超过spark总剩余内存,如超过,可调大spark-env.sh中的SPARK_WORKER_MEMORY参数,并重启spark集群。

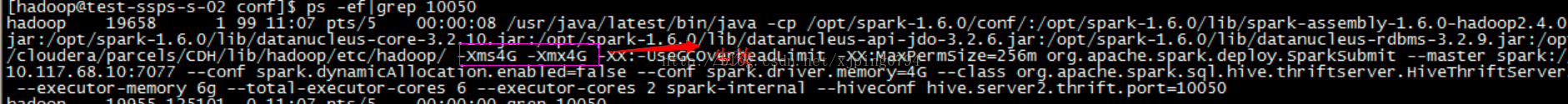

start-thriftserver.sh --master spark://masterip:7077 --executor-memory 2g --total-executor-cores 4 --executor-cores 1 --hiveconf hive.server2.thrift.port=10050 --conf spark.dynamicAllocation.enabled=false如果调大了executor的内存,依旧报此错误,仔细分析发现应该不是executor内存不足,而是driver内存不足,在standalone模式下默认给driver 1G内存,当我们提交应用启动driver时,如果读取数据太大,driver就可能报内存不足。

在spark-defaults.conf中调大driver参数

spark.driver.memory 2g同时在spark-env.sh中同样设置

spark.driver.memory 2g2、Caused by: java.lang.OutOfMemoryError: GC overhead limit exceeded

JDK6新增错误类型。当GC为释放很小空间占用大量时间时抛出。

在JVM中增加该选项 -XX:-UseGCOverheadLimit 关闭限制GC的运行时间(默认启用 )

在spark-defaults.conf中增加以下参数

spark.executor.extraJavaOptions -XX:-UseGCOverheadLimit

spark.driver.extraJavaOptions -XX:-UseGCOverheadLimit 3、spark 错误描述:

org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.ipc.StandbyException): Operation category READ is not supported in state standby原因:

NN主从切换时,spark报上述错误,经分析spark-defaults.conf配置参数spark.eventLog.dir写死了其中一个NN的IP,导致主从切换时,无法读日志。

另外,跟应用程序使用的checkpoint也有关系,首次启动应用程序时,创建了一个新的sparkcontext,而该sparkcontext绑定了具体的NN ip,

往后每次程序重启,由于应用代码【StreamingContext.getOrCreate(checkpointDirectory, functionToCreateContext _)】将从已有checkpoint目录导入checkpoint 数据来重新创建 StreamingContext 实例。

如果 checkpointDirectory 存在,那么 context 将导入 checkpoint 数据。如果目录不存在,函数 functionToCreateContext 将被调用并创建新的 context。

故出现上述异常。

解决:

针对测试系统:

1、将某个NN固定IP改成nameservice对应的值

2、清空应用程序的checkpoint日志

3、重启应用后,切换NN,spark应用正常

4、获取每次内存GC信息

spark-defaults.conf中增加:

spark.executor.extraJavaOptions -XX:+PrintGC -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+PrintGCDateStamps -XX:+PrintGCApplicationStoppedTime -XX:+PrintHeapAtGC -XX:+PrintGCApplicationConcurrentTime -Xloggc:gc.log4、练习HDFS文件的读取输出,写入,追加写入时,读取输出,写入都没问题,在追加写入时出现了问题。报错如下:

2017-07-14 10:50:00,046 WARN [org.apache.hadoop.hdfs.DFSClient] - DataStreamer Exception

java.io.IOException: Failed to replace a bad datanode on the existing pipeline due to no more good datanodes being available to try. (Nodes: current=[DatanodeInfoWithStorage[192.168.153.138:50010,DS-1757abd0-ffc8-4320-a277-3dfb1022533e,DISK], DatanodeInfoWithStorage[192.168.153.137:50010,DS-ddd9a357-58d6-4c2a-921e-c16087974edb,DISK]], original=[DatanodeInfoWithStorage[192.168.153.138:50010,DS-1757abd0-ffc8-4320-a277-3dfb1022533e,DISK], DatanodeInfoWithStorage[192.168.153.137:50010,DS-ddd9a357-58d6-4c2a-921e-c16087974edb,DISK]]). The current failed datanode replacement policy is DEFAULT, and a client may configure this via 'dfs.client.block.write.replace-datanode-on-failure.policy' in its configuration.

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.findNewDatanode(DFSOutputStream.java:918)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.addDatanode2ExistingPipeline(DFSOutputStream.java:992)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.setupPipelineForAppendOrRecovery(DFSOutputStream.java:1160)

解决办法:

System.setProperty("dfs.client.block.write.replace-datanode-on-failure.policy", "NEVER")另一个错:

org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.hdfs.protocol.AlreadyBeingCreatedException): Failed to APPEND_FILE /weather/output/abc.txt for DFSClient_NONMAPREDUCE_1964095166_1 on 192.168.153.1 because this file lease is currently owned by DFSClient_NONMAPREDUCE_-590151867_1 on 192.168.153.1 System.setProperty("dfs.client.block.write.replace-datanode-on-failure.enable", "TRUE")5、timeout,报错:

17/10/18 17:33:46 WARN TaskSetManager: Lost task 1393.0 in stage 382.0 (TID 223626, test-ssps-s-04): ExecutorLostFailure (executor 0 exited caused by one of the running tasks)

Reason:Executor heartbeat timed out after 173568 ms

17/10/18 17:34:02 WARN NettyRpcEndpointRef: Error sending message [message = KillExecutors(app-20171017115441-0012,List(8))] in 2 attempts

org.apache.spark.rpc.RpcTimeoutException: Futures timed out after [120 seconds]. This timeout is controlled by spark.rpc.askTimeout

at org.apache.spark.rpc.RpcTimeout.org$apache$spark$rpc$RpcTimeout$$createRpcTimeoutException(RpcTimeout.scala:48)

网络或者gc引起,worker或executor没有接收到executor或task的心跳反馈。

提高 spark.network.timeout 的值,根据情况改成300(5min)或更高。

默认为 120(120s),配置所有网络传输的延时

spark.network.timeout 300 6、通过sparkthriftserver读取lzo文件报错:

ClassNotFoundException: Class com.hadoop.compression.lzo.LzoCodec not found 在spark-env.sh中增加如下配置:

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/home/hadoop/hadoop-2.2.0/lib/native

export SPARK_LIBRARY_PATH=$SPARK_LIBRARY_PATH:/home/hadoop/hadoop-2.2.0/lib/native

export SPARK_CLASSPATH=$SPARK_CLASSPATH:/home/hadoop/hadoop-2.2.0/share/hadoop/yarn/*:/home/hadoop/hadoop-2.2.0/share/hadoop/yarn/lib/*:/home/hadoop/hadoop-2.2.0/share/hadoop/common/*:/home/hadoop/hadoop-2.2.0/share/hadoop/common/lib/*:/home/hadoop/hadoop-2.2.0/share/hadoop/hdfs/*:/home/hadoop/hadoop-2.2.0/share/hadoop/hdfs/lib/*:/home/hadoop/hadoop-2.2.0/share/hadoop/mapreduce/*:/home/hadoop/hadoop-2.2.0/share/hadoop/mapreduce/lib/*:/home/hadoop/hadoop-2.2.0/share/hadoop/tools/lib/*:/home/hadoop/spark-1.6.1-bin-2.2.0/lib/*并分发到各节点

重启spark thrift server执行正常

7、spark worker中发布executor频繁报错,陷入死循环(新建->失败->新建->失败.....)

work日志:

Asked to launch executor app-20171024225907-0018/77347 for org.apache.spark.sql.hive.thriftserver.HiveThriftServer2

worker.ExecutorRunner (Logging.scala:logInfo(58)) - Launch command: "/home/hadoop/jdk1.7.0_09/bin/java" ......

Executor app-20171024225907-0018/77345 finished with state EXITED message Command exited with code 53 exitStatus 53executor日志:

ERROR [main] storage.DiskBlockManager (Logging.scala:logError(95)) - Failed to create local dir in . Ignoring this directory.

java.io.IOException: Failed to create a temp directory (under ) after 10 attempts!再看配置文件spark-env.sh:

export SPARK_LOCAL_DIRS=/data/spark/data设置了spark本地目录,但机器上并没有创建该目录,所以引发错误。

./drun "mkdir -p /data/spark/data"

./drun "chown -R hadoop:hadoop /data/spark"创建后,重启worker未再出现错误。

8、spark worker异常退出:

worker中日志:

17/10/25 11:59:58 INFO worker.Worker: Master with url spark://10.10.10.82:7077 requested this worker to reconnect.

17/10/25 11:59:58 INFO worker.Worker: Not spawning another attempt to register with the master, since there is an attempt scheduled already.

17/10/25 11:59:59 INFO worker.Worker: Successfully registered with master spark://10.10.10.82:7077

17/10/25 11:59:59 INFO worker.Worker: Worker cleanup enabled; old application directories will be deleted in: /home/hadoop/spark-1.6.1-bin-2.2.0/work

17/10/25 12:00:00 INFO worker.Worker: Retrying connection to master (attempt # 1)

17/10/25 12:00:00 INFO worker.Worker: Master with url spark://10.10.10.82:7077 requested this worker to reconnect.

17/10/25 12:00:00 INFO worker.Worker: Master with url spark://10.10.10.82:7077 requested this worker to reconnect.

17/10/25 12:00:00 INFO worker.Worker: Not spawning another attempt to register with the master, since there is an attempt scheduled already.

17/10/25 12:00:00 INFO worker.Worker: Asked to launch executor app-20171024225907-0018/119773 for org.apache.spark.sql.hive.thriftserver.HiveThriftServer2

17/10/25 12:00:00 INFO worker.Worker: Connecting to master 10.10.10.82:7077...

17/10/25 12:00:00 INFO spark.SecurityManager: Changing view acls to: hadoop

17/10/25 12:00:00 INFO worker.Worker: Connecting to master 10.10.10.82:7077...

17/10/25 12:00:00 INFO spark.SecurityManager: Changing modify acls to: hadoop

17/10/25 12:00:00 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); users with modify permissions: Set(hadoop)

17/10/25 12:00:01 ERROR worker.Worker: Worker registration failed: Duplicate worker ID

17/10/25 12:00:01 INFO worker.ExecutorRunner: Launch command: "/home/hadoop/jdk1.7.0_09/bin/java" "-cp" "/home/hadoop/spark-1.6.1-bin-2.2.0/lib/*:/home/hadoop/hadoop-2.2.0/share/hadoop/yarn/*:/home/hadoop/hadoop-2.2.0/share/hadoop/yarn/lib/*:/home/hadoop/hadoop-2.2.0/share/hadoop/common/*:/home/hadoop/hadoop-2.2.0/share/hadoop/common/lib/*:/home/hadoop/hadoop-2.2.0/share/hadoop/hdfs/*:/home/hadoop/hadoop-2.2.0/share/hadoop/hdfs/lib/*:/home/hadoop/hadoop-2.2.0/share/hadoop/mapreduce/*:/home/hadoop/hadoop-2.2.0/share/hadoop/mapreduce/lib/*:/home/hadoop/hadoop-2.2.0/share/hadoop/tools/lib/*:/home/hadoop/spark-1.6.1-bin-2.2.0/lib/*:/home/hadoop/spark-1.6.1-bin-2.2.0/conf/:/home/hadoop/spark-1.6.1-bin-2.2.0/lib/spark-assembly-1.6.1-hadoop2.2.0.jar:/home/hadoop/spark-1.6.1-bin-2.2.0/lib/datanucleus-api-jdo-3.2.6.jar:/home/hadoop/spark-1.6.1-bin-2.2.0/lib/datanucleus-rdbms-3.2.9.jar:/home/hadoop/spark-1.6.1-bin-2.2.0/lib/datanucleus-core-3.2.10.jar:/home/hadoop/hadoop-2.2.0/etc/hadoop/" "-Xms1024M" "-Xmx1024M" "-Dspark.driver.port=43546" "-XX:MaxPermSize=256m" "org.apache.spark.executor.CoarseGrainedExecutorBackend" "--driver-url" "spark://CoarseGrainedScheduler@10.10.10.80:43546" "--executor-id" "119773" "--hostname" "10.10.10.190" "--cores" "1" "--app-id" "app-20171024225907-0018" "--worker-url" "spark://Worker@10.10.10.190:55335"

17/10/25 12:00:02 INFO worker.ExecutorRunner: Killing process!

17/10/25 12:00:02 INFO worker.ExecutorRunner: Killing process!

17/10/25 12:00:02 INFO worker.ExecutorRunner: Killing process!

17/10/25 12:00:03 INFO worker.ExecutorRunner: Killing process!

17/10/25 12:00:04 INFO worker.ExecutorRunner: Killing process!

17/10/25 12:00:04 INFO worker.ExecutorRunner: Killing process!

17/10/25 12:00:04 INFO worker.ExecutorRunner: Killing process!

17/10/25 12:00:04 INFO worker.ExecutorRunner: Killing process!

17/10/25 12:00:04 INFO worker.ExecutorRunner: Killing process!

17/10/25 12:00:04 INFO worker.ExecutorRunner: Killing process!

17/10/25 12:00:04 INFO worker.ExecutorRunner: Killing process!

17/10/25 12:00:04 INFO worker.ExecutorRunner: Killing process!

17/10/25 12:00:04 INFO worker.ExecutorRunner: Killing process!

17/10/25 12:00:04 INFO worker.ExecutorRunner: Killing process!

17/10/25 12:00:04 INFO worker.ExecutorRunner: Killing process!

17/10/25 12:00:04 INFO worker.ExecutorRunner: Killing process!

17/10/25 12:00:05 INFO worker.ExecutorRunner: Killing process!

17/10/25 12:00:05 INFO worker.ExecutorRunner: Killing process!

17/10/25 12:00:05 INFO util.ShutdownHookManager: Shutdown hook called

17/10/25 12:00:06 INFO util.ShutdownHookManager: Deleting directory /data/spark/data/spark-ab442bb5-62e6-4567-bc7c-8d00534ba1a3近期,频繁出现worker异常退出情况,从worker日志看到,master要求重新连接并注册,注册后,worker继续连接master,并反馈了一个错误信息:

ERROR worker.Worker: Worker registration failed: Duplicate worker ID之后就突然杀掉所有Executor然后退出worker。

出现该问题的机器内存都是16g(其他32g节点,没有出现worker退出问题)。

再查看该类节点yarn的 配置,发现分配yarn的资源是15g,怀疑问题节点yarn和spark同处高峰期,导致spark分配不到资源退出。

为验证猜想,查看NM日志,其时间发生点部分容器有自杀情况,但这个不能说明什么,因为其他时间点也存有该问题。

为查看问题时间点,节点中yarn资源使用情况,只能到active rm中查看rm日志,最后看到节点中在11:46:22到11:59:18,

问题节点yarn占用资源一直在。

如此,确实是该问题所致,将yarn资源调整至12g/10c,后续再继续观察。

8、spark (4个dd,11个nm)没有利用非datanode节点上的executor问题

问题描述:跑大job时,总任务数上万个,但是只利用了其中4个dd上的executor,另外7台没有跑或者跑很少。

分析:spark driver进行任务分配时,会希望每个任务正好分配到要计算的数据节点上,避免网络传输。但当task因为其所在数据节点资源正好被分配完而没机会再分配时,spark会等一段时间(由spark.locality.wait参数控制,默认3s),超过该时间,就会选择一个本地化差的级别进行计算。

解决办法: 将spark.locality.wait设置为0,不等待,任务直接分配,需重启服务生效

9、spark自带参数不生效问题

spark thrift测试系统经常出问题,调整了driver内存参数,依旧报问题。

常见问题状态:连接spark thrift无响应,一会提示OutOfMemoryError: Java heap space

后来发现设置的driver内存参数没有生效,环境配置文件spark-env.sh设置了SPARK_DAEMON_MEMORY=1024m,覆盖了启动参数设置的

--driver-memory 4G

,导致参数设置没生效

1319

1319

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?