
Problem: PySpark cannot launch Spark when the code runs from PyCharm

2019-10-16 20:39:09,343 | Dummy-1:22492 | django.db.backends:90 | utils:execute | DEBUG | (0.000) SELECT @@SQL_AUTO_IS_NULL; args=None

2019-10-16 20:39:09,344 | Dummy-1:22492 | django.db.backends:90 | utils:execute | DEBUG | (0.000) SELECT VERSION(); args=None

System check identified no issues (0 silenced).

2019-10-16 20:39:09,923 | Dummy-1:22492 | django.db.backends:90 | utils:execute | DEBUG | (0.003) SHOW FULL TABLES; args=None

2019-10-16 20:39:09,927 | Dummy-1:22492 | django.db.backends:90 | utils:execute | DEBUG | (0.002) SELECT django_migrations.app, django_migrations.name FROM django_migrations; args=()

October 16, 2019 - 20:39:09

Django version 1.10.1, using settings 'mysite.settings'

Starting development server at http://0.0.0.0:8001/

Quit the server with CTRL-BREAK.

2019-10-16 20:39:22,058 | Thread-4:15288 | django.db.backends:90 | utils:execute | DEBUG | (0.000) SELECT @@SQL_AUTO_IS_NULL; args=None

2019-10-16 20:39:22,058 | Thread-4:15288 | django.db.backends:90 | utils:execute | DEBUG | (0.000) SELECT VERSION(); args=None

2019-10-16 20:39:22,070 | Thread-4:15288 | django.db.backends:90 | utils:execute | DEBUG | (0.000) SELECT django_session.session_key, django_session.session_data, django_session.expire_date FROM django_session WHERE (django_session.session_key = 'k4micifggqpl2xjc4wapt12wwq0j12b3' AND django_session.expire_date > '2019-10-16 20:39:22'); args=('k4micifggqpl2xjc4wapt12wwq0j12b3', '2019-10-16 20:39:22')

2019-10-16 20:39:22,073 | Thread-4:15288 | django.db.backends:90 | utils:execute | DEBUG | (0.001) SELECT auth_user.id, auth_user.password, auth_user.last_login, auth_user.is_superuser, auth_user.username, auth_user.first_name, auth_user.last_name, auth_user.email, auth_user.is_staff, auth_user.is_active, auth_user.date_joined FROM auth_user WHERE auth_user.id = 1; args=(1,)

Using Spark’s default log4j profile: org/apache/spark/log4j-defaults.properties

19/10/16 20:39:22 ERROR SparkUncaughtExceptionHandler: Uncaught exception in thread Thread[main,5,main]

java.util.NoSuchElementException: key not found: _PYSPARK_DRIVER_CALLBACK_HOST

at scala.collection.MapLike$class.default(MapLike.scala:228)

at scala.collection.AbstractMap.default(Map.scala:58)

at scala.collection.MapLike$class.apply(MapLike.scala:141)

at scala.collection.AbstractMap.apply(Map.scala:58)

at org.apache.spark.api.python.PythonGatewayServer$$anonfun$main$1.apply$mc…runMain(SparkSubmit.scala:731)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:181)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:206)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:121)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

19/10/16 20:39:22 INFO ShutdownHookManager: Shutdown hook called

2019-10-16 20:39:23,355 | Thread-4:15288 | django.server:131 | basehttp:log_message | ERROR | "GET /cif/cifmas_echart_0002/ HTTP/1.1" 500 102233 -
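The `java.util.NoSuchElementException: key not found: _PYSPARK_DRIVER_CALLBACK_HOST` above is commonly reported when the `pyspark` Python package and the Spark installation that actually gets launched are different versions. Before changing any settings, it can help to print what the process started by PyCharm (here the Django dev server) really sees. This is a minimal sketch, not part of the original post: it assumes Python 3.8+ (`importlib.metadata`) and the jar layout of the official Spark binary downloads (`<SPARK_HOME>/jars/spark-core_<scala>-<version>.jar`); the helper names are hypothetical.

```python
import glob
import os
import re
from importlib import metadata  # Python 3.8+


def pyspark_package_version():
    """Version of the pyspark package importable by this interpreter, or None."""
    try:
        return metadata.version("pyspark")
    except metadata.PackageNotFoundError:
        return None


def spark_home_version(spark_home):
    """Guess a Spark installation's version from its spark-core jar name.

    Assumes the official binary layout, e.g.
    <SPARK_HOME>/jars/spark-core_2.11-2.4.4.jar. Returns None if no jar matches.
    """
    for path in glob.glob(os.path.join(spark_home, "jars", "spark-core_*.jar")):
        match = re.match(r"spark-core_[\d.]+-(\d+\.\d+\.\d+)", os.path.basename(path))
        if match:
            return match.group(1)
    return None


def report_spark_versions():
    """Print and return (SPARK_HOME, pyspark version, Spark jar version)."""
    spark_home = os.environ.get("SPARK_HOME")
    py_ver = pyspark_package_version()
    jvm_ver = spark_home_version(spark_home) if spark_home else None
    print("SPARK_HOME      :", spark_home)
    print("pyspark package :", py_ver)
    print("Spark jars      :", jvm_ver)
    # Compare only major.minor.patch; pyspark may carry suffixes like ".dev0".
    if py_ver and jvm_ver and py_ver.split(".")[:3] != jvm_ver.split("."):
        print("Version mismatch between pyspark and SPARK_HOME")
    return spark_home, py_ver, jvm_ver
```

Calling `report_spark_versions()` from the failing view (or a PyCharm Python console using the same run configuration) shows whether `SPARK_HOME` is set at all and whether the two versions line up.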

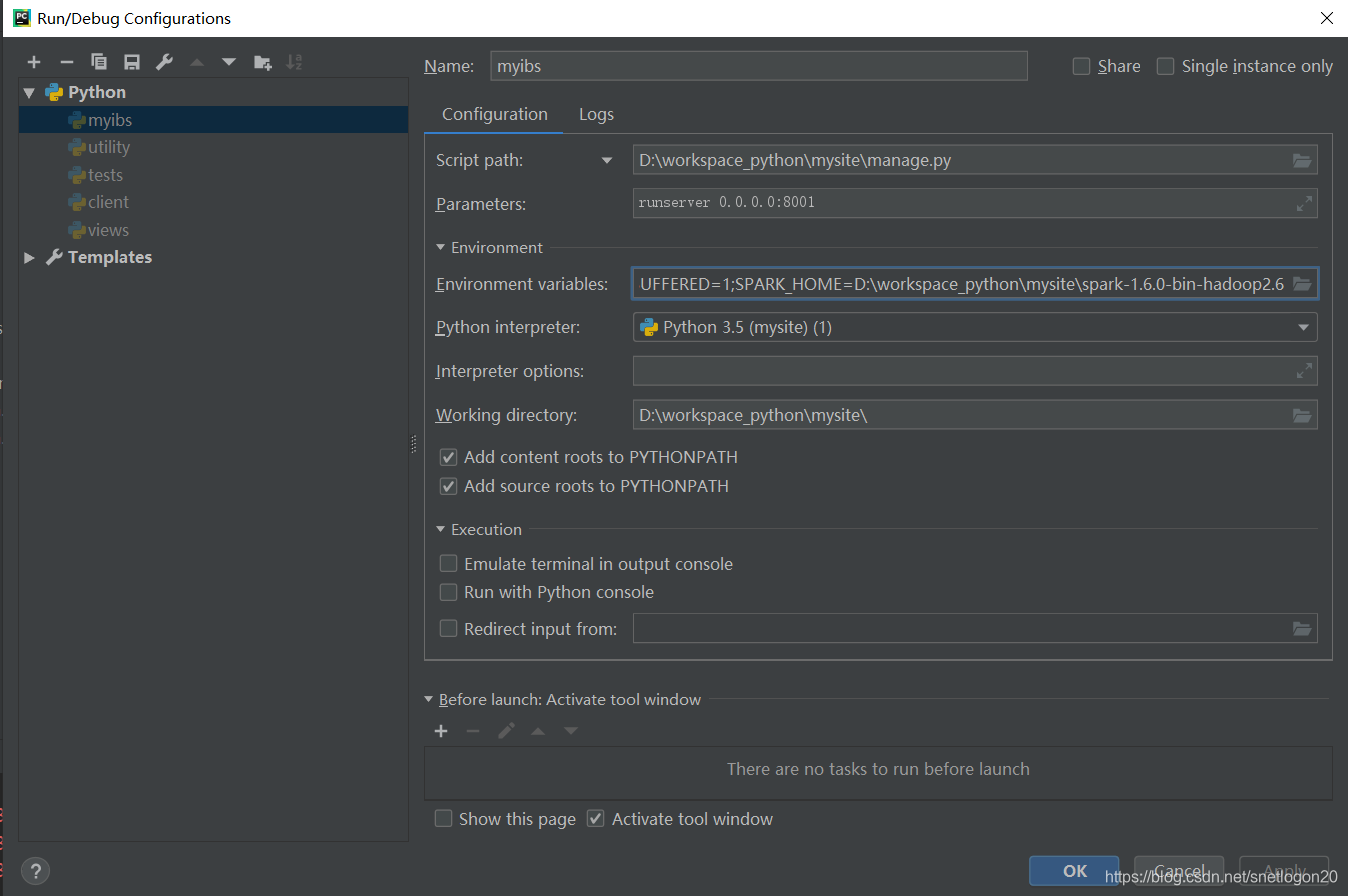

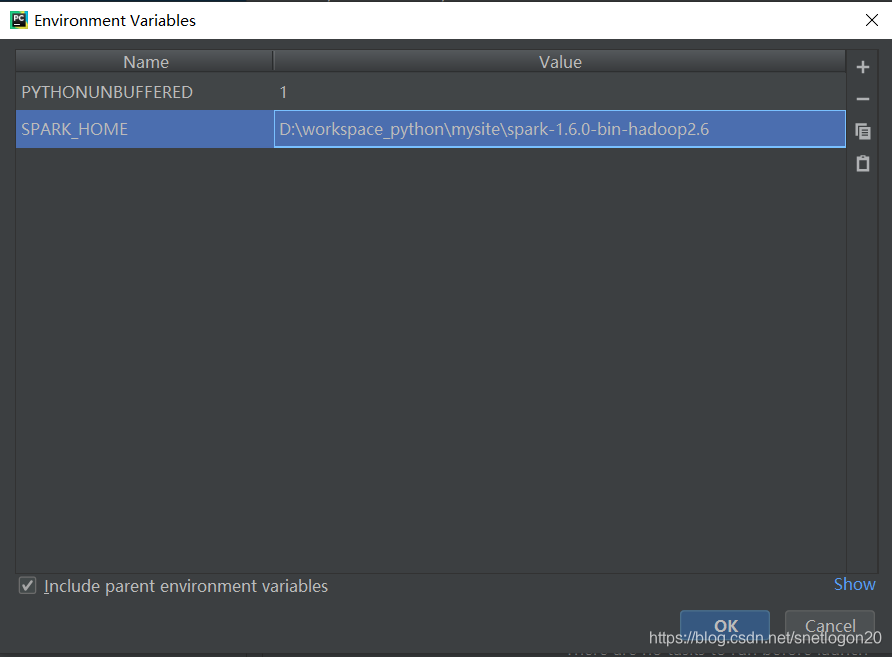

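Solution: in PyCharm, add an environment variable (for example `SPARK_HOME`) to the run configuration that points to the Spark installation directory.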
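Besides setting it in PyCharm, the same variable can be set from Python before PySpark starts its JVM gateway. The sketch below assumes the variable in question is `SPARK_HOME`; setting `PYSPARK_PYTHON` is an optional extra that the original post does not mention, and `configure_spark_env` plus the example path are hypothetical.

```python
import os
import sys


def configure_spark_env(spark_home, python_exe=None):
    """Point PySpark at one specific Spark installation.

    Call this before the first SparkContext/SparkSession is created: PySpark
    reads SPARK_HOME when it launches the JVM gateway, so changing it later
    does not affect a gateway that is already running.
    """
    spark_home = os.path.abspath(spark_home)
    if not os.path.isdir(spark_home):
        raise FileNotFoundError("SPARK_HOME does not exist: " + spark_home)
    os.environ["SPARK_HOME"] = spark_home
    # Optional: have Python workers use a given interpreter; otherwise keep any
    # existing PYSPARK_PYTHON and fall back to the current interpreter.
    if python_exe:
        os.environ["PYSPARK_PYTHON"] = python_exe
    else:
        os.environ.setdefault("PYSPARK_PYTHON", sys.executable)
    return spark_home


# Hypothetical usage (example path, not from the original post):
# configure_spark_env(r"C:\spark\spark-2.4.4-bin-hadoop2.7")
# from pyspark import SparkContext
# sc = SparkContext("local[*]", "demo")
```

Setting the variable in the PyCharm run configuration keeps machine-specific paths out of the code; the in-code version is mainly useful when the same script is also started outside PyCharm.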

Fixing the error when calling Spark through PySpark in PyCharm
