全局状态和会话状态,对于程序员来说都是很熟悉的了,开发中会经常遇到,这里看下在Gradio中是怎么使用的,以及对GPT2的一点介绍

一、Global State全局状态

如果定义的函数想要访问外部的数据,可以将变量写在外面成为一个全局变量,这样就可以在函数内部访问它。例如,您可以在函数外部加载一个大型模型,并在函数内部使用它,这样每次函数调用都不需要重新加载模型。

看一个示例,显示排名前三的分数:

import gradio as gr

scores = [] #全局变量

def track_score(score):

scores.append(score)

top_scores = sorted(scores, reverse=True)[:3]

return top_scores

demo = gr.Interface(

track_score,

gr.Number(label="分数"),

gr.JSON(label="排名前三的分数")

)

demo.launch()其中的scores = [] 分数数组会在所有用户之间共享。如果多个用户访问此演示,他们的分数将被添加到同一列表中,并且返回的前三名分数将在这个共享参考中收集。

二、Session State会话状态

数据持久化的另一种类型是会话状态,其中数据在页面会话中的多个提交中持久化。但是,数据不会在模型的不同用户之间共享。要在会话状态中存储数据,需要做三件事:

1、向函数传递一个额外的参数,该参数表示接口的状态。

2、在函数结束时,将状态的更新值作为额外的返回值返回。

3、在创建接口时添加'state'输入和'state'输出组件

聊天机器人就是一个需要会话状态的例子——您希望访问用户以前提交的内容,但是您不能将聊天历史存储在全局变量中,因为这样聊天历史会在不同的用户之间混淆。这个大家也是很熟悉的,做登录的时候就属于这种,不同用户需要区别开来。

来看个简单的机器人聊天的示例(来自微软的DialoGPT),对官方例子做了点修改:

import gradio as gr

from transformers import AutoModelForCausalLM, AutoTokenizer

import torch

tokenizer = AutoTokenizer.from_pretrained("microsoft/DialoGPT-medium")

model = AutoModelForCausalLM.from_pretrained("microsoft/DialoGPT-medium")

def predict(input, history=[]):

new_user_input_ids = tokenizer.encode(input + tokenizer.eos_token, return_tensors='pt')

# 添加到聊天历史记录

bot_input_ids = torch.cat([torch.LongTensor(history), new_user_input_ids], dim=-1)

# 生成回答

history = model.generate(bot_input_ids, max_length=1000, pad_token_id=tokenizer.eos_token_id).tolist()

# 将标记转换为文本,然后将响应分成几行

response = tokenizer.decode(history[0]).split("<|endoftext|>")

response = [(response[i], response[i+1]) for i in range(0, len(response)-1, 2)]

return response, history

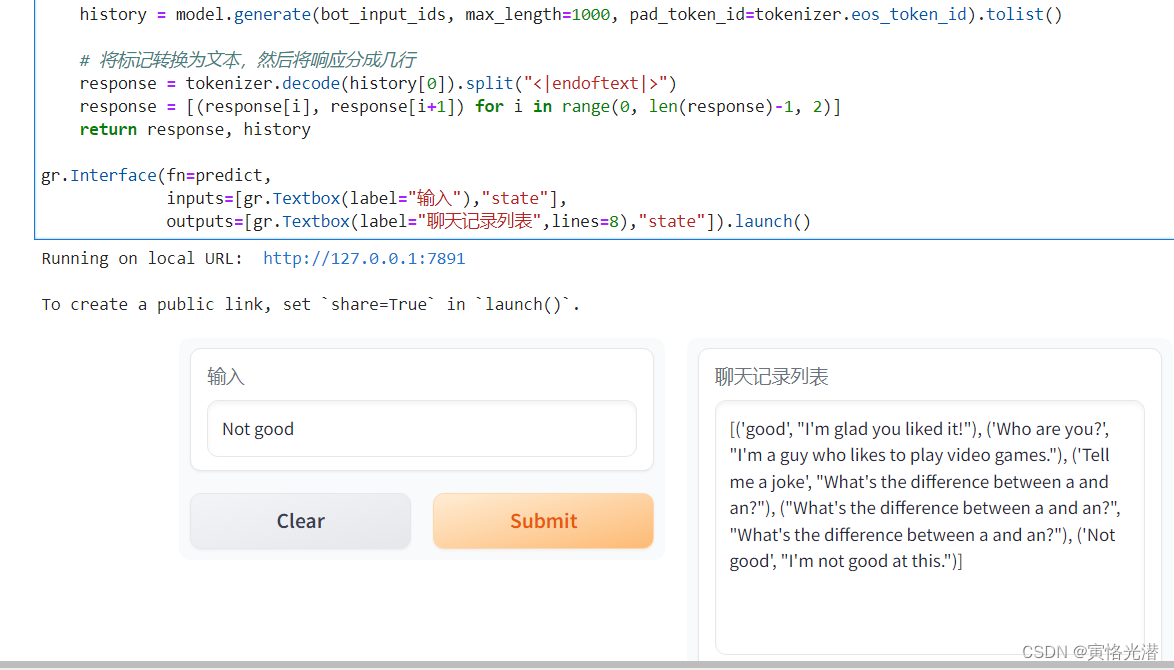

gr.Interface(fn=predict,

inputs=[gr.Textbox(label="输入"),"state"],

outputs=[gr.Textbox(label="聊天记录列表",lines=8),"state"]).launch()如果没有安装transformers模块将会找不到模块的错误,本人依然推荐大家带镜像的安装

ModuleNotFoundError: No module named 'transformers'

pip install transformers -i http://pypi.douban.com/simple/ --trusted-host pypi.douban.com 整体的调用还是比较简单的,就是先将输入做词嵌入(编码),通过GPT2模型的处理生成回答,然后将词嵌入做解码输出。

然后我们输入信息,就能和机器人进行聊天了,界面如下所示:

这个DialoGPT是基于GPT2的一个初级聊天,首先会将相关的配置和模型给下载下来。然后就是标准的输入和输出,左边输入想要聊天的内容,右边就是跟机器人聊天的历史聊天记录。

这个DialoGPT是基于GPT2的一个初级聊天,首先会将相关的配置和模型给下载下来。然后就是标准的输入和输出,左边输入想要聊天的内容,右边就是跟机器人聊天的历史聊天记录。

三、GPT2的相关

这里我们来了解下这个DialoGPT-medium模型的分词的相关配置等信息,属于transformers.models.gpt2.tokenization_gpt2_fast.GPT2TokenizerFast

打印看下它的信息print(tokenizer):

GPT2TokenizerFast(name_or_path='microsoft/DialoGPT-medium', vocab_size=50257, model_max_length=1024, is_fast=True, padding_side='right', truncation_side='right', special_tokens={'bos_token': AddedToken("<|endoftext|>", rstrip=False, lstrip=False, single_word=False, normalized=True), 'eos_token': AddedToken("<|endoftext|>", rstrip=False, lstrip=False, single_word=False, normalized=True), 'unk_token': AddedToken("<|endoftext|>", rstrip=False, lstrip=False, single_word=False, normalized=True)}, clean_up_tokenization_spaces=True)看下这个模型的结构print(model):

GPT2LMHeadModel(

(transformer): GPT2Model(

(wte): Embedding(50257, 1024)

(wpe): Embedding(1024, 1024)

(drop): Dropout(p=0.1, inplace=False)

(h): ModuleList(

(0): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(1): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(2): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(3): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(4): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(5): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(6): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(7): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(8): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(9): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(10): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(11): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(12): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(13): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(14): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(15): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(16): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(17): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(18): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(19): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(20): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(21): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(22): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

(23): GPT2Block(

(ln_1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(attn): GPT2Attention(

(c_attn): Conv1D()

(c_proj): Conv1D()

(attn_dropout): Dropout(p=0.1, inplace=False)

(resid_dropout): Dropout(p=0.1, inplace=False)

)

(ln_2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

(mlp): GPT2MLP(

(c_fc): Conv1D()

(c_proj): Conv1D()

(act): NewGELUActivation()

(dropout): Dropout(p=0.1, inplace=False)

)

)

)

(ln_f): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)

)

(lm_head): Linear(in_features=1024, out_features=50257, bias=False)

)有兴趣的可以查阅其余章节:

Gradio的web界面演示与交互机器学习模型,安装和使用《1》

Gradio的web界面演示与交互机器学习模型,主要特征《2》

4270

4270

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?