Contents

CameraMetadata header: struct camera_metadata

Relationship between the camera metadata header, entry region, and data region

allocate_camera_metadata (allocate metadata)

find_camera_metadata_entry (look up a value in metadata by tag)

add_camera_metadata_entry (add a tag and its value to metadata)

add_camera_metadata_entry_raw implementation

delete_camera_metadata_entry (delete a tag)

update_camera_metadata_entry (update a tag's value)

update_camera_metadata_entry implementation

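Background

Let us start with a few questions about meta:

- What is meta?

- What are tagName, tagID, location, and data, and how are they related?

- What data structure manages meta, and how are create, add, delete, find, and update implemented?

- Which kinds of meta exist for different needs, and how do they differ?

- How do framework meta and HAL meta interact? Is there a memory copy, and how is it implemented (for both request and result)?

- Who owns the meta? Where is the buffer allocated? What is its lifetime? How is its size determined?

- How is meta passed between pipelines and between nodes?

- How is meta handled in a multi-frame fusion pipeline?

- How do input meta and output meta relate? Is input merged into output?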

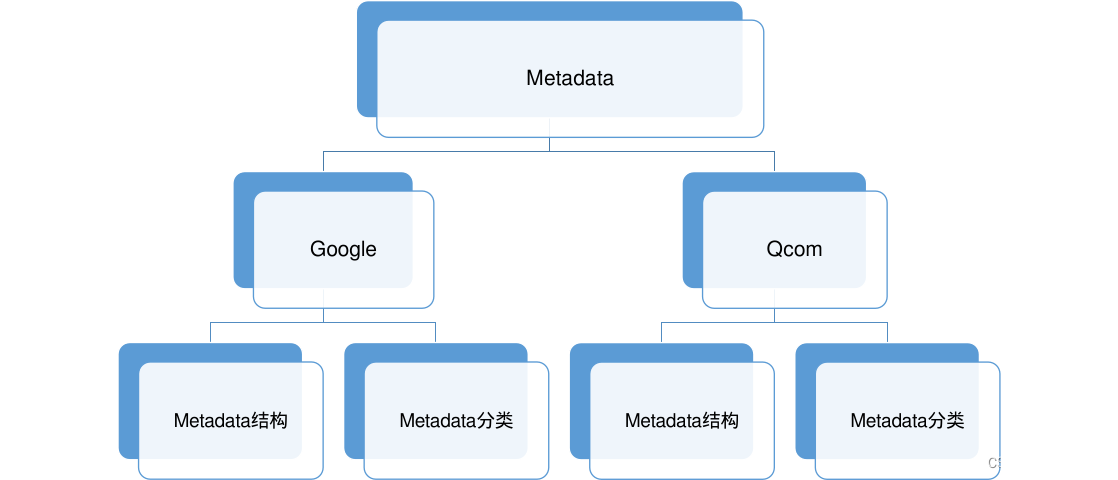

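CameraMetadata basics

- Metadata in the Android camera stack is like the plumbing of a high-rise building: it runs through the whole camera framework and the vendor HAL. It carries all controls, parameters, and return values.

- Metadata is divided into native Android metadata and metadata defined by the chip vendor or the user, that is, Android tags and vendor tags. Vendor tags are defined in the HAL and used in the camx and chi directories.

- On a Qualcomm platform there are usually at least three kinds of metadata: Android metadata, Qcom metadata, and metadata defined by the phone manufacturer.

Put simply, camera meta is the set of parameters that describe an image, such as exposure and ISO, so it is natural to expect its data structure to be a collection of N key/value pairs.

A camera can easily have more than a hundred parameters. What data structure should wrap them, what operations should it expose, and when are those operations used?

If you had to implement meta management yourself, how would you do it?

Let us first look at how Google does it: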

Google Metadata

Location of the Google metadata code:

/system/media/camera/include/system/camera_metadata_tags.h

/system/media/camera/src/camera_metadata.c

/system/media/camera/src/camera_metadata_tag_info.c

Google metadata structure

Metadata: An Array of Entries

It has three main parts: 1. the cameraMetadata header, 2. the camera_metadata_buffer_entry region, 3. the data region.

Official comment


Android API

CameraMetadata header: struct camera_metadata

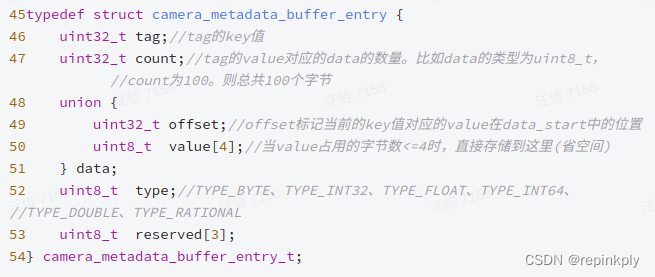

camera_metadata_buffer_entry

struct camera_metadata_entry

typedef struct camera_metadata_entry {

size_t index; // index of this entry within the metadata (0 to entry_count-1)

uint32_t tag; // key of the tag

uint8_t type; // TYPE_BYTE, TYPE_INT32, TYPE_FLOAT, TYPE_INT64, TYPE_DOUBLE, TYPE_RATIONAL

size_t count; // number of data elements in the tag's value; e.g. with type uint8_t and count 100 the data occupies 100 bytes

union {

uint8_t *u8;

int32_t *i32;

float *f;

int64_t *i64;

double *d;

camera_metadata_rational_t *r;

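} data; // data of the tag's value

} camera_metadata_entry_t;

Why is the data field a union?

The most important member is data. In camera_metadata_entry_t the union is a set of typed pointers to the same payload, so the caller reads it through the member that matches type. The space saving happens in the stored form, camera_metadata_buffer_entry_t, whose data field is a union of a 4-byte inline value and an offset into the data region: a payload of at most 4 bytes, such as an enum or a single int32, is stored directly in value, and only larger payloads take space in the data region. Content in MetaBuffer works on the same principle. In MetaBuffer, a payload of 8 bytes or less is stored directly in BYTE m_data[MaxInplaceTagSize];, and a payload larger than 8 bytes is stored in a MemoryRegion. MetaBuffer is Qualcomm's own metadata framework; compared with Google metadata it is more flexible.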
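The inline-versus-offset idea can be sketched in a few lines of C. This is a simplified stand-in, not the real camera_metadata_buffer_entry_t definition; the names sketch_buffer_entry_t and sketch_fits_inline are made up for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Simplified stand-in for the stored entry (hypothetical, not the AOSP struct). */
typedef struct sketch_buffer_entry {
    uint32_t tag;
    uint32_t count;
    union {
        uint32_t offset;   /* payload > 4 bytes: offset into the data region */
        uint8_t  value[4]; /* payload <= 4 bytes: stored inline */
    } data;
    uint8_t type;
} sketch_buffer_entry_t;

/* Hypothetical helper: 1 if the payload fits inline, 0 if it needs the data region. */
static int sketch_fits_inline(size_t elem_size, size_t count) {
    return elem_size * count <= sizeof(((sketch_buffer_entry_t *)0)->data.value);
}
```

With this model, a single int32 or up to four bytes stay inside the entry, while an int64 or two int32 values go to the data region.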

Relationship between the camera metadata header, entry region, and data region

CameraMetadata: create, add, delete, find, update

allocate_camera_metadata (allocate metadata)

camera_metadata_t *allocate_camera_metadata(size_t entry_capacity, size_t data_capacity) // allocate a block of memory for the metadata and initialize it

step1: compute the number of bytes the metadata needs: memory_needed = sizeof(camera_metadata_t) + sizeof(camera_metadata_buffer_entry_t[entry_capacity]) + sizeof(uint8_t[data_capacity])

step2: allocate the memory with calloc: void *buffer = calloc(1, memory_needed);

step3: call place_camera_metadata() to fill in the fields of the header struct camera_metadata_t: camera_metadata_t *metadata = place_camera_metadata(buffer, memory_needed, entry_capacity, data_capacity);
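The three steps can be sketched on a simplified model. The types and functions below (sketch_metadata_t, sketch_allocate, and so on) are hypothetical and ignore the alignment padding that a real implementation would need; they only show how one calloc block is carved into header, entry region, and data region.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical simplified entry and header, not the AOSP definitions. */
typedef struct sketch_entry { uint32_t tag; uint32_t count; uint32_t data; uint8_t type; } sketch_entry_t;

typedef struct sketch_metadata {
    size_t size;
    size_t entry_count, entry_capacity, entries_start;
    size_t data_count, data_capacity, data_start;
} sketch_metadata_t;

/* step 1: header + entry array + data bytes (alignment ignored in this sketch) */
static size_t sketch_metadata_size(size_t entry_capacity, size_t data_capacity) {
    return sizeof(sketch_metadata_t) + entry_capacity * sizeof(sketch_entry_t) + data_capacity;
}

static sketch_metadata_t *sketch_allocate(size_t entry_capacity, size_t data_capacity) {
    size_t needed = sketch_metadata_size(entry_capacity, data_capacity);
    sketch_metadata_t *m = calloc(1, needed);   /* step 2: zeroed block */
    if (m == NULL) return NULL;
    /* step 3: fill in the header; the counts stay 0 thanks to calloc */
    m->size = needed;
    m->entry_capacity = entry_capacity;
    m->entries_start = sizeof(sketch_metadata_t);
    m->data_capacity = data_capacity;
    m->data_start = m->entries_start + entry_capacity * sizeof(sketch_entry_t);
    return m;
}
```

Storing entries_start and data_start as offsets rather than pointers keeps the block position-independent, so it can be copied or shared as raw bytes.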

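Question 1: How is entry_capacity computed? How do we know how many entries there are in total? How is data_capacity computed?

entry_capacity is the number of entries to reserve, usually somewhat more than the number of entries expected to be used.

data_capacity is the size of the data region to reserve, usually somewhat more than the total size of all data.

Question 2: What are the typical uses, and when is it called?

When existing meta has to be rearranged, or when you create your own pipeline; a pipeline above the HAL layer in particular needs its own newly created meta.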


find_camera_metadata_entry (look up a value in metadata by tag)

int find_camera_metadata_entry(camera_metadata_t *src, uint32_t tag, camera_metadata_entry_t *entry)

step1: find the index of the entry for tag in src

step2: fetch the entry at that index

int find_camera_metadata_entry(camera_metadata_t *src, uint32_t tag, camera_metadata_entry_t *entry)

{

uint32_t index;

if (src->flags & FLAG_SORTED) // sorted: binary search

{

camera_metadata_buffer_entry_t *search_entry = NULL;

camera_metadata_buffer_entry_t key;

key.tag = tag;

search_entry = bsearch(&key, get_entries(src), src->entry_count, sizeof(camera_metadata_buffer_entry_t), compare_entry_tags);

if (search_entry == NULL) return NOT_FOUND;

index = search_entry - get_entries(src);

}

else

{ // linear search

camera_metadata_buffer_entry_t *search_entry = get_entries(src);

for (index = 0; index < src->entry_count; index++, search_entry++) {

if (search_entry->tag == tag) {

break;

}

}

if (index == src->entry_count) return NOT_FOUND;

}

return get_camera_metadata_entry(src, index, entry);

}
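The sorted branch relies on a comparator passed to bsearch, which the listing above does not show. A minimal sketch of such a comparator follows, using a hypothetical two-field entry (sketch_entry_t) instead of the real buffer entry.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical reduced entry; only the tag matters for the search. */
typedef struct sketch_entry { uint32_t tag; uint32_t value; } sketch_entry_t;

/* Comparator for bsearch: orders entries by tag. */
static int sketch_compare_tags(const void *a, const void *b) {
    uint32_t ta = ((const sketch_entry_t *)a)->tag;
    uint32_t tb = ((const sketch_entry_t *)b)->tag;
    return (ta > tb) - (ta < tb);
}

/* Returns the index of tag in a tag-sorted array, or -1 if it is absent. */
static long sketch_find_sorted(const sketch_entry_t *entries, size_t n, uint32_t tag) {
    sketch_entry_t key = { tag, 0 };
    const sketch_entry_t *hit = bsearch(&key, entries, n, sizeof(*entries), sketch_compare_tags);
    return hit ? (long)(hit - entries) : -1;
}
```

The comparator compares instead of subtracting because tags are unsigned 32-bit values; a vendor tag such as 0x80010000 minus a small Android tag does not fit in an int.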

// fetch the entry at the given index

int get_camera_metadata_entry(camera_metadata_t *src, size_t index, camera_metadata_entry_t *entry)

{

camera_metadata_buffer_entry_t *buffer_entry = get_entries(src) + index;

entry->index = index;

entry->tag = buffer_entry->tag;

entry->type = buffer_entry->type;

entry->count = buffer_entry->count;

if (buffer_entry->count * camera_metadata_type_size[buffer_entry->type] > 4) // more than 4 bytes: the data lives in the data region

{

entry->data.u8 = get_data(src) + buffer_entry->data.offset;

}

else

{

entry->data.u8 = buffer_entry->data.value; // 4 bytes or fewer: the data is stored inline

}

return OK;

}

add_camera_metadata_entry (add a tag and its value to metadata)

int add_camera_metadata_entry(camera_metadata_t *dst, uint32_t tag, const void *data, size_t data_count)

This calls add_camera_metadata_entry_raw(camera_metadata_t *dst, uint32_t tag, uint8_t type, const void *data, size_t data_count), described below.

step1: take the next free entry at the end of dst's entry array: entry = get_entries(dst) + dst->entry_count

step2: compute the total number of data bytes; if it is at most 4, memcpy the data directly into entry->data.value

step3: otherwise set entry->data.offset to dst->data_count, then memcpy the data to get_data(dst) + entry->data.offset

add_camera_metadata_entry_raw implementation
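The following is a minimal sketch of the three steps on a simplified, fixed-size model, not the real camera_metadata.c source. All names (sk_meta_t, sk_add, SK_MAX_ENTRIES, SK_MAX_DATA) are hypothetical, count is kept in bytes, and data alignment is ignored.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define SK_MAX_ENTRIES 8
#define SK_MAX_DATA    64

typedef struct sk_entry {
    uint32_t tag;
    uint32_t count;   /* payload size in bytes in this sketch */
    union { uint32_t offset; uint8_t value[4]; } data;
} sk_entry_t;

typedef struct sk_meta {
    uint32_t entry_count, data_count;
    sk_entry_t entries[SK_MAX_ENTRIES];
    uint8_t data[SK_MAX_DATA];
} sk_meta_t;

/* Appends one entry; returns 0 on success, -1 if there is no room. */
static int sk_add(sk_meta_t *dst, uint32_t tag, const void *payload, uint32_t bytes) {
    if (dst->entry_count == SK_MAX_ENTRIES) return -1;
    sk_entry_t *entry = &dst->entries[dst->entry_count];      /* step 1: next free entry */
    if (bytes <= sizeof(entry->data.value)) {
        memset(entry->data.value, 0, sizeof(entry->data.value));
        memcpy(entry->data.value, payload, bytes);            /* step 2: store inline */
    } else {
        if (bytes > SK_MAX_DATA - dst->data_count) return -1;
        entry->data.offset = dst->data_count;                 /* step 3: use the data region */
        memcpy(dst->data + entry->data.offset, payload, bytes);
        dst->data_count += bytes;
    }
    entry->tag = tag;
    entry->count = bytes;
    dst->entry_count++;
    return 0;
}
```

Adding a one-byte mode leaves data_count untouched, while adding two int64 values consumes 16 bytes of the data region and records offset 0 in the entry.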

add_camera_metadata_entry usage

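delete_camera_metadata_entry (delete a tag)

int delete_camera_metadata_entry(camera_metadata_t *dst, size_t index)

step1: if the entry's data is larger than 4 bytes, remove the old data first: move everything from the next entry's data (current data.offset + entry_bytes) to the end of the data region forward by entry_bytes bytes, overwriting this entry's data, then update data.offset of the entries whose data came after it

step2: shift the entry array forward to overwrite the deleted entry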

int delete_camera_metadata_entry(camera_metadata_t *dst, size_t index)

{

camera_metadata_buffer_entry_t *entry = get_entries(dst) + index;

size_t data_bytes = calculate_camera_metadata_entry_data_size(entry->type, entry->count);

if (data_bytes > 0) // the entry's data is larger than 4 bytes

{

// shift the data to overwrite the bytes being deleted

uint8_t *start = get_data(dst) + entry->data.offset;

uint8_t *end = start + data_bytes;

size_t length = dst->data_count - entry->data.offset - data_bytes;

memmove(start, end, length);

// update the data offsets of the affected entries

camera_metadata_buffer_entry_t *e = get_entries(dst);

size_t i;

for (i = 0; i < dst->entry_count; i++) {

if (calculate_camera_metadata_entry_data_size(e->type, e->count) > 0 && e->data.offset > entry->data.offset) {

e->data.offset -= data_bytes;

}

++e;

}

dst->data_count -= data_bytes;

}

// shift the entry array

memmove(entry, entry + 1, sizeof(camera_metadata_buffer_entry_t) * (dst->entry_count - index - 1) );

dst->entry_count -= 1;

return OK;

}

update_camera_metadata_entry (update a tag's value)

int update_camera_metadata_entry(camera_metadata_t *dst, size_t index, const void *data, size_t data_count, camera_metadata_entry_t *updated_entry)

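step1: when the new data differs in length from the entry's existing data and the existing data is larger than 4 bytes, remove the old data first: move everything from the next entry's data (current data.offset + entry_bytes) to the end of the data region forward by entry_bytes bytes, overwriting this entry's data, then update data.offset of the entries that came after it, and finally append the new tag data to the end of the data region

step2: when the new data has the same length as the existing data, reuse the existing data memory and copy the new tag data over the entry's old data

step3: if the data is 4 bytes or fewer, copy it straight into entry->data.value

update_camera_metadata_entry implementation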

int update_camera_metadata_entry(camera_metadata_t *dst,

size_t index,

const void *data,

size_t data_count,

camera_metadata_entry_t *updated_entry) {

if (dst == NULL) return ERROR;

if (index >= dst->entry_count) return ERROR;

camera_metadata_buffer_entry_t *entry = get_entries(dst) + index;

size_t data_bytes =

calculate_camera_metadata_entry_data_size(entry->type,

data_count);

size_t data_payload_bytes =

data_count * camera_metadata_type_size[entry->type];

size_t entry_bytes =

calculate_camera_metadata_entry_data_size(entry->type,

entry->count);

if (data_bytes != entry_bytes) {

// May need to shift/add to data array

if (dst->data_capacity < dst->data_count + data_bytes - entry_bytes) {

// No room

return ERROR;

}

if (entry_bytes != 0) {

// Remove old data

uint8_t *start = get_data(dst) + entry->data.offset;

uint8_t *end = start + entry_bytes;

size_t length = dst->data_count - entry->data.offset - entry_bytes;

memmove(start, end, length);

dst->data_count -= entry_bytes;

// Update all entry indices to account for shift

camera_metadata_buffer_entry_t *e = get_entries(dst);

size_t i;

for (i = 0; i < dst->entry_count; i++) {

if (calculate_camera_metadata_entry_data_size(

e->type, e->count) > 0 &&

e->data.offset > entry->data.offset) {

e->data.offset -= entry_bytes;

}

++e;

}

}

if (data_bytes != 0) {

// Append new data

entry->data.offset = dst->data_count;

memcpy(get_data(dst) + entry->data.offset, data, data_payload_bytes);

dst->data_count += data_bytes;

}

} else if (data_bytes != 0) {

// data size unchanged, reuse same data location

memcpy(get_data(dst) + entry->data.offset, data, data_payload_bytes);

}

if (data_bytes == 0) {

// Data fits into entry

memcpy(entry->data.value, data,

data_payload_bytes);

}

entry->count = data_count;

if (updated_entry != NULL) {

get_camera_metadata_entry(dst,

index,

updated_entry);

}

assert(validate_camera_metadata_structure(dst, NULL) == OK);

return OK;

}

append_camera_metadata

int append_camera_metadata(camera_metadata_t *dst, const camera_metadata_t *src) // append the entries and data of src after those of dst

step1: copy src's entry_count entries and its data after dst's entries and dst's data

step2: update entry->data.offset of the copied entries and the other members of dst

int append_camera_metadata(camera_metadata_t *dst, const camera_metadata_t *src)

{

memcpy(get_entries(dst) + dst->entry_count, get_entries(src), sizeof(camera_metadata_buffer_entry_t[src->entry_count]));

memcpy(get_data(dst) + dst->data_count, get_data(src), sizeof(uint8_t[src->data_count]));

// update data.offset of the entries newly added to dst

if (dst->data_count != 0) {

camera_metadata_buffer_entry_t *entry = get_entries(dst) + dst->entry_count; // start of the entries copied from src

for (size_t i = 0; i < src->entry_count; i++, entry++) {

if ( calculate_camera_metadata_entry_data_size(entry->type, entry->count) > 0 ) // data larger than 4 bytes

{

entry->data.offset += dst->data_count; // the offset is already relative to src's data region, so adding dst->data_count is enough

}

}

}

if (dst->entry_count == 0) {

dst->flags |= src->flags & FLAG_SORTED; // dst is empty: inherit src's sorted flag

} else if (src->entry_count != 0) {

dst->flags &= ~FLAG_SORTED; // both dst and src are non-empty: mark dst as unsorted

}

dst->entry_count += src->entry_count;

dst->data_count += src->data_count;

dst->vendor_id = src->vendor_id;

return OK;

}

clone_camera_metadata

camera_metadata_t *clone_camera_metadata(const camera_metadata_t *src)

step1: allocate a camera_metadata_t sized to hold src's entries and data

step2: append src into that memory

camera_metadata_t *clone_camera_metadata(const camera_metadata_t *src)

{

camera_metadata_t *clone = allocate_camera_metadata(get_camera_metadata_entry_count(src), get_camera_metadata_data_count(src));

if (clone != NULL)

{

append_camera_metadata(clone, src);

}

return clone;

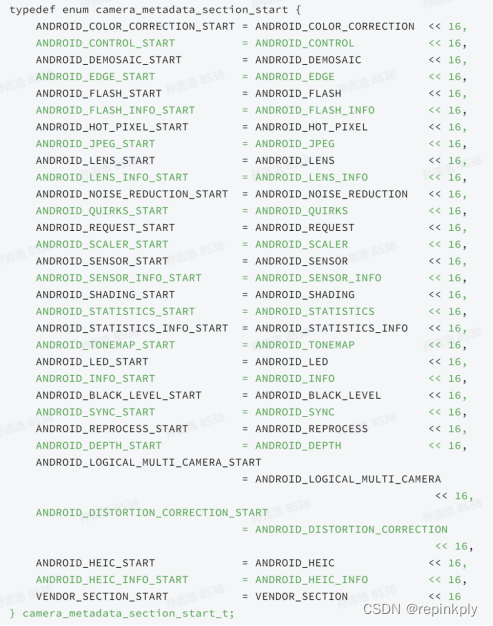

}metadata tag分类

tag从归属方可以被分类两类:

- android平台原生tag。如ANDROID_CONTROL_AE_MODE用于控制AE曝光方式(auto、manual等)

- vendor tag(platfrom如Qcom/SumSung/MTK新增tag)。

android tag

tag是通过section的方式来进行分类的,如下:

typedef enum camera_metadata_section {

ANDROID_COLOR_CORRECTION,

ANDROID_CONTROL,

ANDROID_DEMOSAIC,

ANDROID_EDGE,

ANDROID_FLASH,

ANDROID_FLASH_INFO,

ANDROID_HOT_PIXEL,

ANDROID_JPEG,

ANDROID_LENS,

ANDROID_LENS_INFO,

ANDROID_NOISE_REDUCTION,

ANDROID_QUIRKS,

ANDROID_REQUEST,

ANDROID_SCALER,

ANDROID_SENSOR,

ANDROID_SENSOR_INFO,

ANDROID_SHADING,

ANDROID_STATISTICS,

ANDROID_STATISTICS_INFO,

ANDROID_TONEMAP,

ANDROID_LED,

ANDROID_INFO,

ANDROID_BLACK_LEVEL,

ANDROID_SYNC,

ANDROID_REPROCESS,

ANDROID_DEPTH,

ANDROID_LOGICAL_MULTI_CAMERA,

ANDROID_DISTORTION_CORRECTION,

ANDROID_HEIC,

ANDROID_HEIC_INFO,

ANDROID_SECTION_COUNT,

VENDOR_SECTION = 0x8000

} camera_metadata_section_t;

Everything before VENDOR_SECTION is a section of native Android tags; each section can hold at most 65536 (1<<16) tags.

vendor tag

Vendor tags must start at 0x80000000 (VENDOR_SECTION << 16).
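Since a tag is a section in the upper 16 bits plus an index in the lower 16 bits, it can be taken apart with a shift and a mask. A small sketch follows; the helper names are made up for illustration, and only the 0x8000 value comes from the enum above.

```c
#include <assert.h>
#include <stdint.h>

#define SK_VENDOR_SECTION 0x8000u  /* mirrors VENDOR_SECTION in the enum above */

/* Upper 16 bits: the section. */
static uint32_t sk_tag_section(uint32_t tag) { return tag >> 16; }

/* Lower 16 bits: index of the tag inside its section. */
static uint32_t sk_tag_index(uint32_t tag) { return tag & 0xFFFFu; }

/* A tag is a vendor tag when its section is at or above VENDOR_SECTION. */
static int sk_is_vendor_tag(uint32_t tag) { return sk_tag_section(tag) >= SK_VENDOR_SECTION; }
```

For example, 0x00010003 is index 3 of section 1 (ANDROID_CONTROL in the enum above), and anything from 0x80000000 upward is treated as a vendor tag.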

All Tags

Metadata categories

Per Camera Device

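- One per camera device; the number of devices comes from get_number_of_cameras

- The Provider fetches it during startup, and only once

When the Provider starts, it uses dlopen and dlsym to obtain the HAL's HAL_MODULE_INFO_SYM pointer, of type camera_module_t. It then calls the HAL module's get_camera_info method to obtain a camera_info variable from the lower layer; camera_info contains a member of type camera_metadata_t.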

Per Session

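- Sent down each time streams are configured

- Configuration parameters are sent only once per scenario

During Configure Stream, the camera3_stream_configuration_t struct has a member of type camera_metadata_t. The HAL must check the parameter values in the metadata and configure its internal camera pipeline accordingly.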

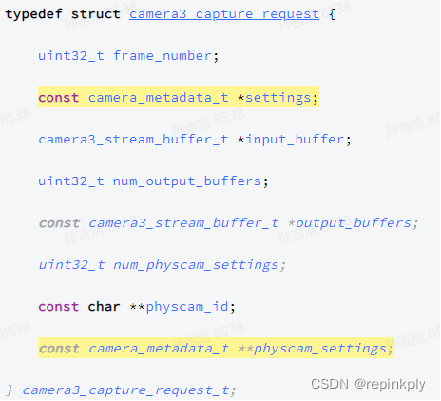

Per Request

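- Sent down to the HAL with every request

With each request, the camera3_capture_request_t struct carries a member of type camera_metadata_t. It is defined in hardware/camera3.h.

One camera id may map to several physical cameras. camera3_capture_request_t holds the number of physical cameras, and in that case there is one metadata per physical camera.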

Per Result

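- Sent up to the app with every result

With each result, the camera3_capture_result_t struct carries a member of type camera_metadata_t. It is defined in hardware/camera3.h.

One camera id may map to several physical cameras. camera3_capture_result_t holds the number of physical cameras, and in that case there is one metadata per physical camera.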

Qcom metadata overall architecture

content

MetaBuffer

Metadata Property

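MetadataPool overall architecture

- The MetaPool mechanism: how are the parts combined and related?

- Creation of the MetadataPool components (code flow, at configure streams)

- Binding MetadataSlot to MetaBuffer (code flow, per request)

- How notification is implemented (how do we know the data is ready, and how is that announced?)

- MetadataPool handling multiple requests, and meta dependencies between nodes.

- DeferredRequestQueue is one-to-one with Session; the Session member is m_pDeferredRequestQueue

- MetadataPool to Pipeline is many-to-one: a Pipeline has several pools of different kinds, one of each kind

- MetadataPool to MetadataSlot is one-to-many

- MetadataSlot to MetaBuffer is one-to-one

Why introduce the MetadataPool mechanism?

MetadataPool handles multiple requests, and meta dependencies between nodes.

1. For per-request and per-result metadata, the upper layer sends down many requests. Because ProcessCaptureRequest is asynchronous, the requests are held in a queue, and several outstanding requests wait in the pipeline at once. MetadataPool exists to keep the metadata of these in-flight requests apart.

MetadataPool to MetadataSlot is one-to-many.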

MetadataSlot* m_pSlots[MaxPerFramePoolWindowSize];

static const UINT32 MaxPerFramePoolWindowSize = (RequestQueueDepth + MaxExtraHALBuffer) * 2;

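In general each node depends on the previous node's buffer and on the metadata the previous node produced.

2. It implements the Observer pattern. In a pipeline, a node needs the previous node's metadata before it can run, so a dependency exists. How is the next node told that the metadata is ready and that data can flow on to it?

Binding MetadataSlot to MetaBuffer

Session::ProcessCaptureRequest takes the pools of the pipeline, applies a modulo to the request id, uses the remainder to find the matching slot, and attaches the meta to that slot.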
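The modulo lookup behaves like a ring buffer of slots. The sketch below is an illustration only: the constant values, the assumption that the modulus is the slot window size, and all sk_ names are hypothetical, and the real CamX classes are C++.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical values; the real constants live in the CamX headers. */
#define SK_REQUEST_QUEUE_DEPTH  8u
#define SK_MAX_EXTRA_HAL_BUFFER 2u
#define SK_POOL_WINDOW_SIZE ((SK_REQUEST_QUEUE_DEPTH + SK_MAX_EXTRA_HAL_BUFFER) * 2u)

typedef struct sk_slot { uint64_t request_id; const void *meta_buffer; } sk_slot_t;
typedef struct sk_pool { sk_slot_t slots[SK_POOL_WINDOW_SIZE]; } sk_pool_t;

/* Ring-buffer style lookup: request ids that differ by the window size share a slot. */
static sk_slot_t *sk_get_slot(sk_pool_t *pool, uint64_t request_id) {
    return &pool->slots[request_id % SK_POOL_WINDOW_SIZE];
}

/* Binds a meta buffer to the slot of the given request. */
static void sk_attach(sk_pool_t *pool, uint64_t request_id, const void *meta_buffer) {
    sk_slot_t *slot = sk_get_slot(pool, request_id);
    slot->request_id = request_id;
    slot->meta_buffer = meta_buffer;
}
```

In this model a slot is reused once the request id has advanced by a full window, which is why the window has to be larger than the number of requests that can be in flight at once.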

How CamX manages meta

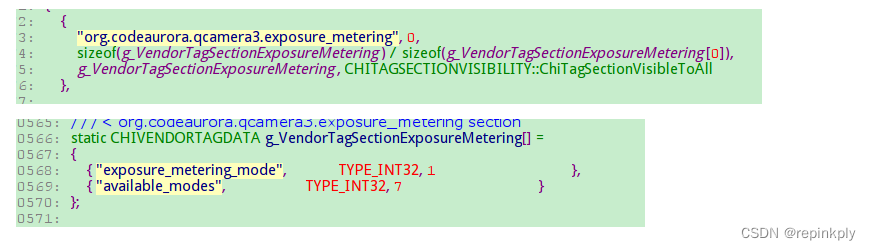

Full TagID = (section << 16) + tag

sectionOffset is (0x8000 << 16). Sections are numbered in traversal order, counting up from sectionOffset; tags are numbered in definition order.

Suppose the first vendor tag is "org.codeaurora.qcamera3.exposure_metering", "exposure_metering_mode";

then section = 0x8000 + 1, tag = 0, and tagID = 0x80010000.

The second tag is "org.codeaurora.qcamera3.exposure_metering", "available_modes";

then section = 0x8000 + 1, tag = 1, and tagID = 0x80010001.
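The arithmetic for the two example tags can be checked with a one-line helper. The function name sk_make_tag_id is made up for illustration; the formula is the one stated above.

```c
#include <assert.h>
#include <stdint.h>

#define SK_VENDOR_SECTION_BASE 0x8000u

/* Builds a full tag id from a section number and the tag's position in that section. */
static uint32_t sk_make_tag_id(uint32_t section, uint32_t tag) {
    return (section << 16) + tag;
}
```

With section = SK_VENDOR_SECTION_BASE + 1, tag 0 gives 0x80010000 and tag 1 gives 0x80010001.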

When and how is the tagID determined?

At process startup, all vendor tags are collected:

Vendor tag information from the HW:

CHIVENDORTAGINFO hwVendorTagInfo ;

pHwEnvironment->GetHwStaticEntry()->QueryVendorTagsInfo(&hwVendorTagInfo);

QueryVendorTagsInfo is implemented in Camxtitan17xcontext.cpp (vendor\qcom\proprietary\camx\src\hwl\titan17x).

hwVendorTagInfo (g_HwVendorTagSections) is defined in Camxvendortagdefines.h (vendor\qcom\proprietary\camx\src\api\vendortag).

Vendor tag information from the core:

CHIVENDORTAGINFO coreVendorTagInfo = { g_CamXVendorTagSections, CAMX_ARRAY_SIZE(g_CamXVendorTagSections) };

Vendor tag information from the core components:

Vendor tags are also collected from external components (library naming rule: VendorName.CategoryName.ModuleName.TargetName.Extension, where CategoryName is one of node, stats, hvx, chi).

InitializeVendorTagInfo

// VendorTagManager::AppendVendorTagInfo

CamxResult VendorTagManager::AppendVendorTagInfo(

const CHIVENDORTAGINFO* pVendorTagInfoToAppend)

{

CamxResult result = CamxResultSuccess;

CAMX_ASSERT(NULL != pVendorTagInfoToAppend);

// This function merge the incoming vendor sections with existing ones. here we allocate a new array of sections and copy

// the existing and incoming sections to the new array. After the successful merge, we will free the existing section

// array and assign the new array to m_vendorTagInfo.

if ((NULL != pVendorTagInfoToAppend) && (pVendorTagInfoToAppend->numSections > 0))

{

CHIVENDORTAGSECTIONDATA* pSectionData =

static_cast<CHIVENDORTAGSECTIONDATA*>(CAMX_CALLOC(sizeof(CHIVENDORTAGSECTIONDATA) *

(m_vendorTagInfo.numSections + pVendorTagInfoToAppend->numSections)));

if (NULL == pSectionData)

{

CAMX_LOG_ERROR(CamxLogGroupCore, "Out of memory!");

result = CamxResultENoMemory;

}

else

{

UINT32 sectionCount = 0;

for (UINT32 i = 0; i < m_vendorTagInfo.numSections; i++)

{

result = CopyVendorTagSectionData(&pSectionData[i], &m_vendorTagInfo.pVendorTagDataArray[i]);

if (CamxResultSuccess != result)

{

CAMX_LOG_ERROR(CamxLogGroupCore, "Failed to copy existing vendor tag section!");

break;

}

else

{

sectionCount++;

}

}

if (CamxResultSuccess == result)

{

CAMX_ASSERT(sectionCount == m_vendorTagInfo.numSections);

for (UINT32 i = 0; i < pVendorTagInfoToAppend->numSections; i++)

{

result = CopyVendorTagSectionData(&pSectionData[m_vendorTagInfo.numSections + i],

&pVendorTagInfoToAppend->pVendorTagDataArray[i]);

if (CamxResultSuccess != result)

{

CAMX_LOG_ERROR(CamxLogGroupCore, "Failed to copy pass in vendor tag section!");

break;

}

else

{

sectionCount++;

}

}

}

if (CamxResultSuccess == result)

{

CAMX_ASSERT(sectionCount == (pVendorTagInfoToAppend->numSections + m_vendorTagInfo.numSections));

FreeVendorTagInfo(&m_vendorTagInfo);

m_vendorTagInfo.pVendorTagDataArray = pSectionData;

m_vendorTagInfo.numSections = sectionCount; // total number of sections after the merge

}

else

{

CAMX_ASSERT(sectionCount != (pVendorTagInfoToAppend->numSections + m_vendorTagInfo.numSections));

CAMX_LOG_ERROR(CamxLogGroupCore, "Failed to append vendor tag section!");

for (UINT32 i = 0; i < sectionCount; i++)

{

FreeVendorTagSectionData(&pSectionData[i]);

}

CAMX_FREE(pSectionData);

pSectionData = NULL;

}

}

}

return result;

}

VendorTagManager::GetAllTags

Collects all vendor tags into a single table so that every vendor tag can be reached by index.

// VendorTagManager::GetAllTags

VOID VendorTagManager::GetAllTags(

VendorTag* pVendorTags,

CHITAGSECTIONVISIBILITY visibility)

{

const CHIVENDORTAGINFO* pVendorTagInfo = VendorTagManager::GetInstance()->GetVendorTagInfo();

UINT32 size = 0;

if (NULL != pVendorTagInfo)

{

for (UINT32 i = 0; i < pVendorTagInfo->numSections; i++)

{

if ((pVendorTagInfo->pVendorTagDataArray[i].visbility == ChiTagSectionVisibleToAll) ||

ChiTagSectionVisibleToAll == visibility ||

Utils::IsBitMaskSet(pVendorTagInfo->pVendorTagDataArray[i].visbility,

static_cast<UINT32>(visibility)))

{

for (UINT32 j = 0; j < pVendorTagInfo->pVendorTagDataArray[i].numTags; j++)

{

pVendorTags[size + j] = pVendorTagInfo->pVendorTagDataArray[i].firstVendorTag + j; // full tagID of each vendor tag

}

size += pVendorTagInfo->pVendorTagDataArray[i].numTags;

}

}

}

}

// Maintains a hash table of [tagName, tagID]. Why?

VendorTagManager::QueryVendorTagLocation(

for (UINT32 i = 0; i < pVendorTagInfo->numSections; i++)

{

if (0 == OsUtils::StrCmp(pVendorTagInfo->pVendorTagDataArray[i].pVendorTagSectionName, pSectionName))

{

for (UINT32 j = 0; j < pVendorTagInfo->pVendorTagDataArray[i].numTags; j++)

{

if (0 == OsUtils::StrCmp(pVendorTagInfo->pVendorTagDataArray[i].pVendorTagaData[j].pVendorTagName,

pTagName))

{

*pTagLocation = pVendorTagInfo->pVendorTagDataArray[i].firstVendorTag + j;

if (TRUE == validKeyStr)

{

VendorTagManager::GetInstance()->AddTagToHashMap(hash, pTagLocation);

}

result = CamxResultSuccess;

break;

}

}

break;

}

}

Property

A property is a Qualcomm-private tag, normally defined by Qualcomm and not meant to be modified by OEMs. Their sections are laid out as follows:

/// @brief Additional classification of tags/properties

enum class PropertyGroup : UINT32

{

Result = 0x3000, ///< Properties found in the result pool

Internal = 0x4000, ///< Properties found in the internal pool

Usecase = 0x5000, ///< Properties found in the usecase pool

DebugData = 0x6000, ///< Properties found in the debug data pool

};

static const PropertyID PropertyIDPerFrameResultBegin = static_cast<UINT32>(PropertyGroup::Result) << 16;

static const PropertyID PropertyIDPerFrameInternalBegin = static_cast<UINT32>(PropertyGroup::Internal) << 16;

static const PropertyID PropertyIDUsecaseBegin = static_cast<UINT32>(PropertyGroup::Usecase) << 16;

static const PropertyID PropertyIDPerFrameDebugDataBegin = static_cast<UINT32>(PropertyGroup::DebugData) << 16;

They are defined as follows:

/* Beginning of MainProperty */

static const PropertyID PropertyIDAECFrameControl = PropertyIDPerFrameResultBegin + 0x00;

static const PropertyID PropertyIDAECFrameInfo = PropertyIDPerFrameResultBegin + 0x01;

static const PropertyID PropertyIDAWBFrameControl = PropertyIDPerFrameResultBegin + 0x02;

static const PropertyID PropertyIDAWBFrameInfo = PropertyIDPerFrameResultBegin + 0x03;

static const PropertyID PropertyIDAFFrameControl = PropertyIDPerFrameResultBegin + 0x04;

static const PropertyID PropertyIDAFFrameInfo = PropertyIDPerFrameResultBegin + 0x05;

static const PropertyID PropertyIDAFPDFrameInfo = PropertyIDPerFrameResultBegin + 0x06;

static const PropertyID PropertyIDAFBAFDependencyMet = PropertyIDPerFrameResultBegin + 0x07;

static const PropertyID PropertyIDAFStatsProcessingDone = PropertyIDPerFrameResultBegin + 0x08;

...

/* Beginning of InternalProperty */

static const PropertyID PropertyIDAECInternal = PropertyIDPerFrameInternalBegin + 0x00;

static const PropertyID PropertyIDAFInternal = PropertyIDPerFrameInternalBegin + 0x01;

static const PropertyID PropertyIDASDInternal = PropertyIDPerFrameInternalBegin + 0x02;

static const PropertyID PropertyIDAWBInternal = PropertyIDPerFrameInternalBegin + 0x03;

static const PropertyID PropertyIDAFDInternal = PropertyIDPerFrameInternalBegin + 0x04;

static const PropertyID PropertyIDBasePDInternal = PropertyIDPerFrameInternalBegin + 0x05;

static const PropertyID PropertyIDISPAECBG = PropertyIDPerFrameInternalBegin + 0x06;

static const PropertyID PropertyIDISPAWBBGConfig = PropertyIDPerFrameInternalBegin + 0x07;

static const PropertyID PropertyIDISPBFConfig = PropertyIDPerFrameInternalBegin + 0x08;

...

/* Beginning of UsecaseProperty */

static const PropertyID PropertyIDUsecaseSensorModes = PropertyIDUsecaseBegin + 0x00;

static const PropertyID PropertyIDUsecaseBatch = PropertyIDUsecaseBegin + 0x01;

static const PropertyID PropertyIDUsecaseFPS = PropertyIDUsecaseBegin + 0x02;

static const PropertyID PropertyIDUsecaseLensInfo = PropertyIDUsecaseBegin + 0x03;

static const PropertyID PropertyIDUsecaseCameraModuleInfo = PropertyIDUsecaseBegin + 0x04;

static const PropertyID PropertyIDUsecaseSensorCurrentMode = PropertyIDUsecaseBegin + 0x05;

static const PropertyID PropertyIDUsecaseSensorTemperatureInfo = PropertyIDUsecaseBegin + 0x06;

static const PropertyID PropertyIDUsecaseAWBFrameControl = PropertyIDUsecaseBegin + 0x07;

static const PropertyID PropertyIDUsecaseAECFrameControl = PropertyIDUsecaseBegin + 0x08;

...

/* Beginning of DebugDataProperty */

static const PropertyID PropertyIDDebugDataAEC = PropertyIDPerFrameDebugDataBegin + 0x01;

static const PropertyID PropertyIDDebugDataAWB = PropertyIDPerFrameDebugDataBegin + 0x02;

static const PropertyID PropertyIDDebugDataAF = PropertyIDPerFrameDebugDataBegin + 0x03;

static const PropertyID PropertyIDDebugDataAFD = PropertyIDPerFrameDebugDataBegin + 0x04;

static const PropertyID PropertyIDTuningDataIFE = PropertyIDPerFrameDebugDataBegin + 0x05;

static const PropertyID PropertyIDTuningDataIPE = PropertyIDPerFrameDebugDataBegin + 0x06;

static const PropertyID PropertyIDTuningDataBPS = PropertyIDPerFrameDebugDataBegin + 0x07;

static const PropertyID PropertyIDTuningDataTFE = PropertyIDPerFrameDebugDataBegin + 0x08;

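...

Question 1: How do the properties above differ, in which cases is each used, and how?

This is covered with metaPool later.

Question 2: How is a PropertyID matched to a tagName?

Only mainProperty, that is resultProperty, is exposed externally; it is stored in PrivatePropertyTag.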

///< org.codeaurora.qcamera3.internal_private section

s_VendorTagManagerSingleton.m_cachedVendorTags[VendorTagIndex::PrivatePropertyTag].pSectionName

= "org.codeaurora.qcamera3.internal_private";

s_VendorTagManagerSingleton.m_cachedVendorTags[VendorTagIndex::PrivatePropertyTag].pTagName

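= "private_property";

Question 3: How is mainProperty stored in PrivatePropertyTag?

In MetaBuffer::LinearMap::GetAndroidMeta, every tag that may be exposed to Android is extracted from the camx MetaBuffer, including camera meta, vendor tags, and properties; which tags are exposed can be adjusted by policy.

// 1. Walk the tagID and data address of some of the mainProperty entries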

for (UINT32 tagIndex = propertyStartIndex; tagIndex < propertyEndIndex; ++tagIndex)

{

const MetadataInfo* pInfo = HAL3MetadataUtil::GetMetadataInfoByIndex(tagIndex);

Content& rContent = m_pMetadataOffsetTable[tagIndex];

if (TRUE == rContent.IsValid())

{

result = HAL3MetadataUtil::AppendPropertyPackingInfo(

rContent.m_tag,

rContent.m_pVaddr,

&packingInfo);

}

}

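// 2. Pack the tagID and data value of the mainProperty entries above; the first 4 bytes hold the number of properties

// the next 4 bytes are a tagID, immediately followed by its dataValue;

// then the next 4 bytes are another tagID, again followed by its dataValue, and so on

// the length of each dataValue is predefined in camx, so it does not need to be packed

HAL3MetadataUtil::PackPropertyInfoToBlob(&packingInfo, pPropertyBlob);

// 3. Write the packed property set into PrivatePropertyTag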

CamxResult resultLocal = HAL3MetadataUtil::UpdateMetadata(

pAndroidMeta,

propertyBlobId,

pPropertyBlob,

PropertyBlobSize,

TRUE);

CamxResult HAL3MetadataUtil::PackPropertyInfoToBlob(

PropertyPackingInfo* pPackingInfo,

VOID* pPropertyBlob)

{

CamxResult result = CamxResultSuccess;

if (NULL != pPropertyBlob)

{

UINT32* pCount = reinterpret_cast<UINT32*>(pPropertyBlob);

*pCount = pPackingInfo->count;

VOID* pWriteOffset = Utils::VoidPtrInc(pPropertyBlob, sizeof(pPackingInfo->count));

for (UINT32 index = 0; index < pPackingInfo->count; index++)

{

// Write TagId

SIZE_T tagSize = GetPropertyTagSize(pPackingInfo->tagId[index]);

Utils::Memcpy(pWriteOffset, &pPackingInfo->tagId[index], sizeof(UINT32));

pWriteOffset = Utils::VoidPtrInc(pWriteOffset, sizeof(UINT32));

// Write tag address

Utils::Memcpy(pWriteOffset, pPackingInfo->pAddress[index], tagSize);

pWriteOffset = Utils::VoidPtrInc(pWriteOffset, tagSize);

}

}

else

{

CAMX_LOG_ERROR(CamxLogGroupMeta, "Property blob is null");

result = CamxResultEFailed;

}

return result;

}

CamxResult HAL3MetadataUtil::UnPackBlobToPropertyInfo(

PropertyPackingInfo* pPackingInfo,

VOID* pPropertyBlob)

{

// First 4 bytes correspond to number of tags

CamxResult result = CamxResultSuccess;

if (NULL != pPropertyBlob)

{

UINT32* pCount = reinterpret_cast<UINT32*>(pPropertyBlob);

pPackingInfo->count = *pCount;

VOID* pReadOffset = Utils::VoidPtrInc(pPropertyBlob, sizeof(pPackingInfo->count));

for (UINT32 i = 0; i < pPackingInfo->count; i++)

{

// First 4 bytes correspond to tagId followed by the tagValue

pPackingInfo->tagId[i] = *reinterpret_cast<UINT32*>(pReadOffset);

pReadOffset = Utils::VoidPtrInc(pReadOffset, sizeof(UINT32));

SIZE_T tagSize = GetPropertyTagSize(pPackingInfo->tagId[i]);

pPackingInfo->pAddress[i] = reinterpret_cast<BYTE*>(pReadOffset);

pReadOffset = Utils::VoidPtrInc(pReadOffset, tagSize);

}

}

else

{

CAMX_LOG_ERROR(CamxLogGroupMeta, "Property blob is null");

result = CamxResultEFailed;

}

return result;

}
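The blob layout used by the two functions above, a count followed by (tagId, value) pairs with no per-value length, can be sketched in plain C. This is a simplified model under stated assumptions: the size table in sk_tag_size is invented, SK_MAX_PROPS is an arbitrary limit, and none of the sk_ names exist in CamX.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define SK_MAX_PROPS 4

typedef struct sk_packing_info {
    uint32_t count;
    uint32_t tag_id[SK_MAX_PROPS];
    const uint8_t *address[SK_MAX_PROPS];
} sk_packing_info_t;

/* Hypothetical size table: both sides must agree on it, since sizes are not in the blob. */
static size_t sk_tag_size(uint32_t tag_id) {
    return (tag_id == 0x30000000u) ? 8 : 4;
}

/* Writes [count][tagId][value]... and returns the number of bytes written. */
static size_t sk_pack(const sk_packing_info_t *info, uint8_t *blob) {
    uint8_t *p = blob;
    assert(info->count <= SK_MAX_PROPS);
    memcpy(p, &info->count, sizeof(info->count));
    p += sizeof(info->count);
    for (uint32_t i = 0; i < info->count; i++) {
        size_t size = sk_tag_size(info->tag_id[i]);
        memcpy(p, &info->tag_id[i], sizeof(uint32_t));
        p += sizeof(uint32_t);
        memcpy(p, info->address[i], size);
        p += size;
    }
    return (size_t)(p - blob);
}

/* Reads the blob back; address[i] points into the blob, nothing is copied. */
static void sk_unpack(sk_packing_info_t *info, const uint8_t *blob) {
    const uint8_t *p = blob;
    memcpy(&info->count, p, sizeof(info->count));
    assert(info->count <= SK_MAX_PROPS);
    p += sizeof(info->count);
    for (uint32_t i = 0; i < info->count; i++) {
        memcpy(&info->tag_id[i], p, sizeof(uint32_t));
        p += sizeof(uint32_t);
        info->address[i] = p;
        p += sk_tag_size(info->tag_id[i]);
    }
}
```

Leaving the value length out of the blob keeps it compact, at the cost that packer and unpacker must be built against the same size table.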

Unified management of meta

// To be continued; more updates to follow.
