TensorBoard is a browser-based visualization tool that ships with TensorFlow. It can be used with Keras models only when Keras runs on the TensorFlow backend.

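Main features:

- Visually monitoring metrics during training

- Visualizing the model architecture

- Visualizing histograms of activations and gradients

- Exploring embeddings in 3D

An example follows.

Listing 1: Preparing and processing the data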

import keras

from keras import layers

from keras.datasets import imdb

from keras.preprocessing import sequence

max_features = 2000

max_len = 500

(x_train, y_train), (x_test, y_test) = imdb.load_data(path='D:/jupyter/deepLearning/keras_03/imdb.npz',

num_words=max_features)

x_train = sequence.pad_sequences(x_train, maxlen=max_len)

print(x_train.shape) #(25000, 500)

x_test = sequence.pad_sequences(x_test, maxlen=max_len)
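To make the padding step concrete, here is a minimal pure-Python sketch of what `sequence.pad_sequences` does with its default settings (`padding='pre'`, `truncating='pre'`, `value=0`). The helper `pad_pre` and the sample lists are hypothetical, introduced only for illustration; they are not part of Keras.

```python
def pad_pre(seq, maxlen, value=0):
    """Illustrative stand-in for the pad_sequences defaults (hypothetical helper)."""
    # Sequences longer than maxlen keep only their last maxlen items.
    trimmed = seq[-maxlen:]
    # Shorter sequences are padded on the left with `value`.
    return [value] * (maxlen - len(trimmed)) + trimmed

short_review = [11, 22, 33]
long_review = [1, 2, 3, 4, 5, 6, 7]

print(pad_pre(short_review, 5))  # [0, 0, 11, 22, 33]
print(pad_pre(long_review, 5))   # [3, 4, 5, 6, 7]
```

With `maxlen=500`, every review therefore becomes a row of exactly 500 integers, which is why `x_train.shape` is `(25000, 500)`.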

Listing 2: Building a text-classification model for use with TensorBoard

model = keras.models.Sequential()

model.add(layers.Embedding(max_features, 128, input_length=max_len, name='embed'))

model.add(layers.Conv1D(32, 7, activation='relu'))

model.add(layers.MaxPooling1D(5))

model.add(layers.Conv1D(32, 7, activation='relu'))

model.add(layers.GlobalMaxPooling1D())

model.add(layers.Dense(1))

model.summary()

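# The machine has too little memory to write data for all 25,000 rows, so only the first 1,000 rows are used (see embeddings_data in Listing 3)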

print(x_train[:1000].shape) #(1000, 500)

model.compile(optimizer='rmsprop', loss='binary_crossentropy', metrics=['acc'])

The model summary is:

| Layer (type) | Output Shape | Param # |
| --- | --- | --- |
| embed (Embedding) | (None, 500, 128) | 256000 |
| conv1d_5 (Conv1D) | (None, 494, 32) | 28704 |
| max_pooling1d_3 (MaxPooling1 | (None, 98, 32) | 0 |
| conv1d_6 (Conv1D) | (None, 92, 32) | 7200 |
| global_max_pooling1d_3 (Glob | (None, 32) | 0 |
| dense_3 (Dense) | (None, 1) | 33 |

Total params: 291,937

Trainable params: 291,937

Non-trainable params: 0
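The shapes and parameter counts in the summary can be checked by hand. The sketch below redoes the arithmetic in plain Python, assuming the Keras defaults used in Listing 2 (`'valid'` padding and stride 1 for `Conv1D`, and a `MaxPooling1D` stride equal to its pool size). The helper names are hypothetical.

```python
def conv1d_out_len(length, kernel_size):
    # 'valid' padding, stride 1: every window must fit entirely inside the input.
    return length - kernel_size + 1

def conv1d_params(in_channels, filters, kernel_size):
    # kernel_size * in_channels weights per filter, plus one bias per filter.
    return kernel_size * in_channels * filters + filters

max_features, embed_dim, max_len = 2000, 128, 500

embed_params = max_features * embed_dim          # one 128-d vector per token index
len_conv1 = conv1d_out_len(max_len, 7)
conv1_params = conv1d_params(embed_dim, 32, 7)
len_pool = len_conv1 // 5                        # pool size 5, incomplete window dropped
len_conv2 = conv1d_out_len(len_pool, 7)
conv2_params = conv1d_params(32, 32, 7)
dense_params = 32 * 1 + 1                        # 32 weights plus one bias

total = embed_params + conv1_params + conv2_params + dense_params
print(len_conv1, len_pool, len_conv2)  # 494 98 92
print(total)                           # 291937
```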

Before using TensorBoard, create a directory to hold the log files it generates. You can create it manually or with a terminal command.

$ mkdir my_log_dir
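If you would rather create the directory from the same Python session, the standard library offers an equivalent. This is a small sketch; it reuses the `my_log_dir` name from the command above and creates the directory relative to the current working directory.

```python
import os

log_dir = 'my_log_dir'  # same directory name as in the mkdir command
# exist_ok=True means no error is raised if the directory already exists.
os.makedirs(log_dir, exist_ok=True)
print(os.path.isdir(log_dir))  # True
```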

Listing 3: Training the model with a TensorBoard callback

callbacks = [

keras.callbacks.TensorBoard(

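log_dir='my_log_dir', # Write the logs to this location

histogram_freq=1, # Record activation histograms after every epoch

embeddings_freq=1, # Record embedding data after every epoch

embeddings_data=x_train[:1000].astype('float32') # Input data used for the embedding visualization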

)

]

history = model.fit(x_train, y_train,

epochs=20,

batch_size=128,

validation_split=0.2,

callbacks=callbacks

)

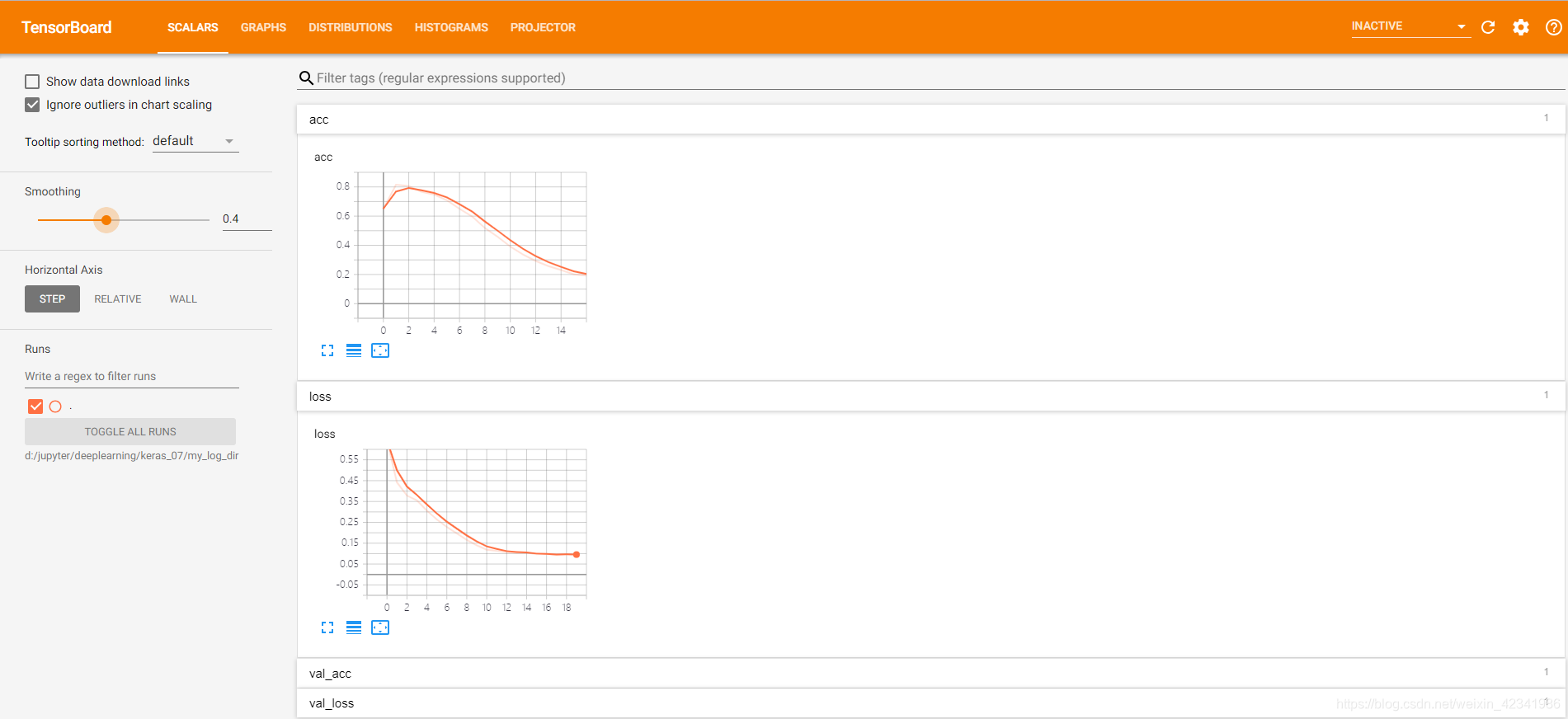

Training output:

Epoch 1/20

20000/20000 [==============================] - 56s 3ms/step - loss: 0.6435 - acc: 0.6508 - val_loss: 0.4401 - val_acc: 0.8288

Epoch 2/20

20000/20000 [==============================] - 52s 3ms/step - loss: 0.4420 - acc: 0.8156 - val_loss: 0.4183 - val_acc: 0.8276

Epoch 3/20

20000/20000 [==============================] - 53s 3ms/step - loss: 0.3784 - acc: 0.8068 - val_loss: 0.5109 - val_acc: 0.7784

Epoch 4/20

20000/20000 [==============================] - 52s 3ms/step - loss: 0.3555 - acc: 0.7663 - val_loss: 0.5731 - val_acc: 0.7430

Epoch 5/20

20000/20000 [==============================] - 50s 3ms/step - loss: 0.3065 - acc: 0.7478 - val_loss: 0.5300 - val_acc: 0.7220

Epoch 6/20

20000/20000 [==============================] - 50s 2ms/step - loss: 0.2636 - acc: 0.7081 - val_loss: 0.6136 - val_acc: 0.6452

Epoch 7/20

20000/20000 [==============================] - 49s 2ms/step - loss: 0.2279 - acc: 0.6501 - val_loss: 0.8555 - val_acc: 0.5330

Epoch 8/20

20000/20000 [==============================] - 49s 2ms/step - loss: 0.1970 - acc: 0.5973 - val_loss: 0.7554 - val_acc: 0.5342

Epoch 9/20

20000/20000 [==============================] - 49s 2ms/step - loss: 0.1656 - acc: 0.5182 - val_loss: 0.9405 - val_acc: 0.4626

Epoch 10/20

20000/20000 [==============================] - 49s 2ms/step - loss: 0.1398 - acc: 0.4587 - val_loss: 0.9341 - val_acc: 0.3938

Epoch 11/20

20000/20000 [==============================] - 50s 2ms/step - loss: 0.1186 - acc: 0.3914 - val_loss: 1.8058 - val_acc: 0.2952

Epoch 12/20

20000/20000 [==============================] - 48s 2ms/step - loss: 0.1136 - acc: 0.3367 - val_loss: 1.0965 - val_acc: 0.3082

Epoch 13/20

20000/20000 [==============================] - 52s 3ms/step - loss: 0.1054 - acc: 0.2928 - val_loss: 1.0176 - val_acc: 0.3258

Epoch 14/20

20000/20000 [==============================] - 52s 3ms/step - loss: 0.1041 - acc: 0.2577 - val_loss: 1.1444 - val_acc: 0.2890

Epoch 15/20

20000/20000 [==============================] - 53s 3ms/step - loss: 0.1043 - acc: 0.2308 - val_loss: 1.1493 - val_acc: 0.2668

Epoch 16/20

20000/20000 [==============================] - 51s 3ms/step - loss: 0.0967 - acc: 0.2010 - val_loss: 1.1937 - val_acc: 0.2506

Epoch 17/20

20000/20000 [==============================] - 51s 3ms/step - loss: 0.0974 - acc: 0.1917 - val_loss: 1.2121 - val_acc: 0.2420

Epoch 18/20

20000/20000 [==============================] - 52s 3ms/step - loss: 0.0938 - acc: 0.1641 - val_loss: 1.2119 - val_acc: 0.2426

Epoch 19/20

20000/20000 [==============================] - 50s 3ms/step - loss: 0.0980 - acc: 0.1585 - val_loss: 1.2173 - val_acc: 0.2358

Epoch 20/20

20000/20000 [==============================] - 53s 3ms/step - loss: 0.0944 - acc: 0.1477 - val_loss: 1.4213 - val_acc: 0.2312
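A quick way to read a log like the one above is to find the epoch at which each validation metric was best. The sketch below hard-codes the first five epochs of validation values copied from the log. In a live session the same lists would normally come from `history.history`, which is an assumption about your run rather than something shown here. The helper `best_epoch` is hypothetical.

```python
# Validation metrics for epochs 1-5, copied from the training log above.
val_loss = [0.4401, 0.4183, 0.5109, 0.5731, 0.5300]
val_acc = [0.8288, 0.8276, 0.7784, 0.7430, 0.7220]

def best_epoch(values, higher_is_better):
    """Return the 1-based epoch with the best value (hypothetical helper)."""
    pick = max if higher_is_better else min
    return values.index(pick(values)) + 1

print(best_epoch(val_loss, higher_is_better=False))  # 2
print(best_epoch(val_acc, higher_is_better=True))    # 1
```

Both validation metrics are at their best within the first two epochs. After that, validation loss trends upward while training loss keeps falling, a pattern usually associated with overfitting.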

Start the TensorBoard server from the command line and point it at the logs that the callback is currently writing.

tensorboard --logdir "d:/jupyter/deeplearning/keras_07/my_log_dir"

Open http://localhost:6006 in a browser to view the visualizations.
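If the page opens but shows no data, one thing worth checking is whether the log directory actually contains event files. TensorBoard log files have `tfevents` in their names. The following is a minimal sketch for listing them; the helper `find_event_files` is hypothetical, and `my_log_dir` is assumed to be relative to the current working directory.

```python
import os

def find_event_files(log_dir):
    """Return sorted paths of files under log_dir whose name contains 'tfevents'."""
    matches = []
    for root, _dirs, files in os.walk(log_dir):
        for name in files:
            if 'tfevents' in name:
                matches.append(os.path.join(root, name))
    return sorted(matches)

# An empty list means the callback has not written anything to this directory.
print(find_event_files('my_log_dir'))
```

An empty result usually means that `--logdir` and the callback's `log_dir` point at different directories.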

**Metric monitoring:** accuracy and loss curves

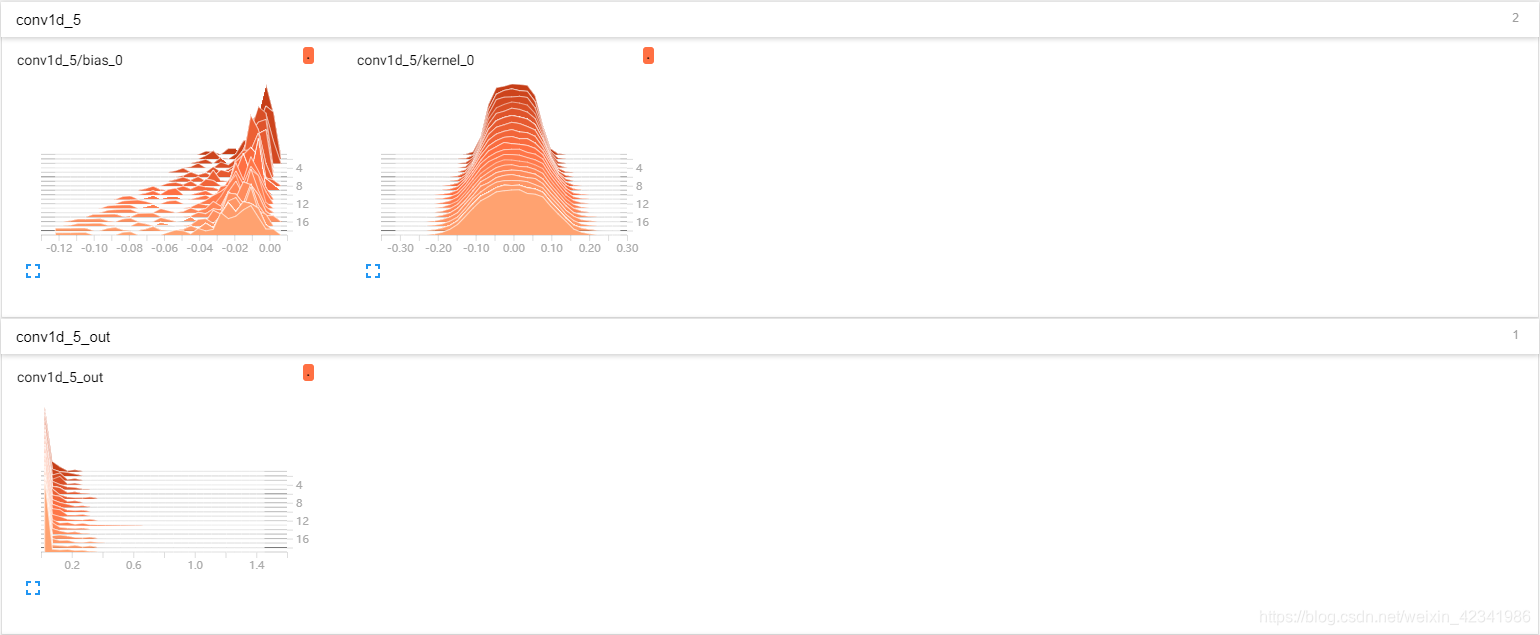

**Activation histograms:** the activation values of each layer

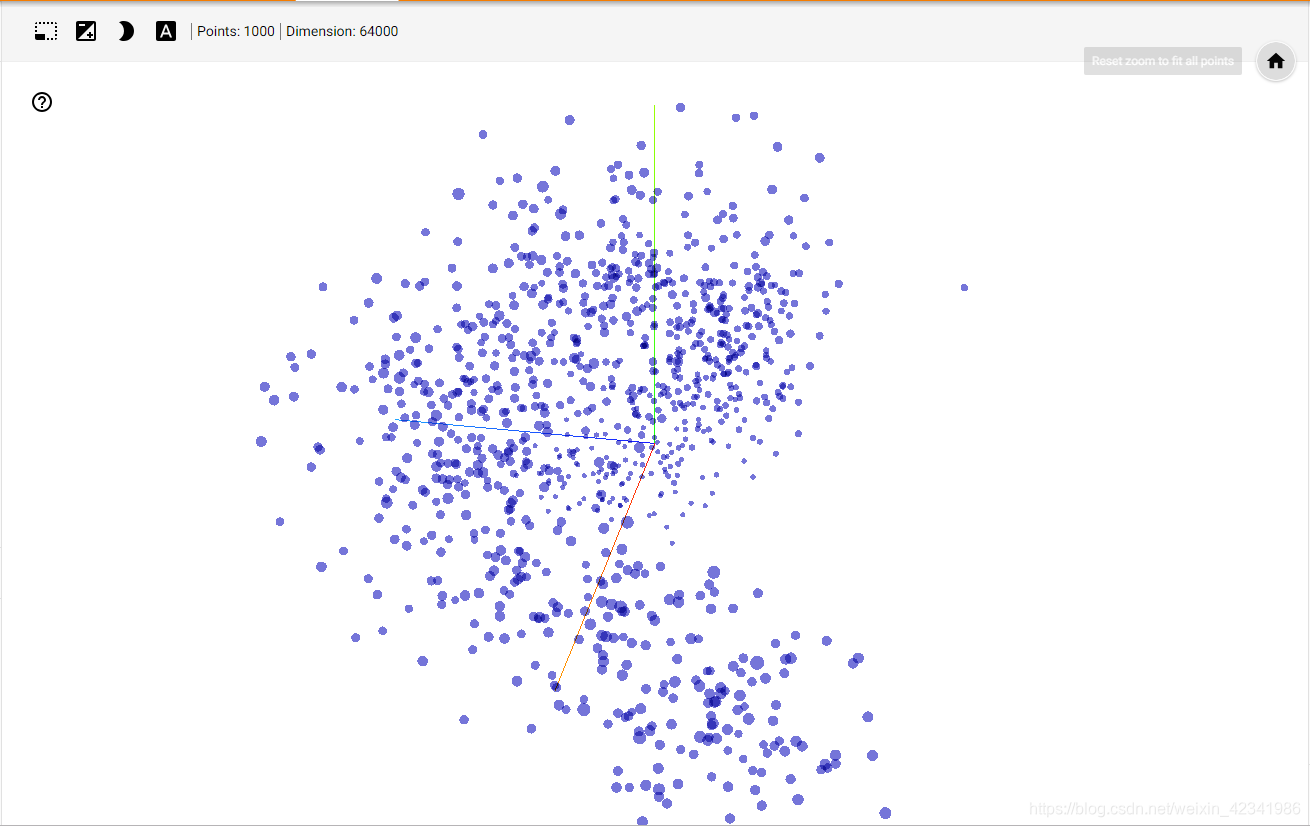

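**Interactive 3D word-embedding visualization:** You can inspect the embedding positions and spatial relationships of 1,000 words from the input vocabulary, as learned by the first Embedding layer. Because the embedding space is 128-dimensional, TensorBoard automatically reduces it to two or three dimensions using the dimensionality-reduction algorithm you select. PCA was selected here.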
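To give a feel for what the PCA projection does, here is a minimal NumPy sketch that reduces 128-dimensional vectors to three dimensions. It is an illustration of the general technique, not TensorBoard's own implementation. The input is random data standing in for the trained embedding weights, and `pca_project` is a hypothetical helper.

```python
import numpy as np

def pca_project(vectors, n_components=3):
    """Project row vectors onto their top principal components (illustrative helper)."""
    centered = vectors - vectors.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing singular value.
    _u, _s, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T

rng = np.random.default_rng(0)
fake_embeddings = rng.normal(size=(1000, 128))  # random stand-in, not trained weights
points_3d = pca_project(fake_embeddings)
print(points_3d.shape)  # (1000, 3)
```

Each row of `points_3d` is the 3D position of one vector, and the first axis carries the most variance.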
