2019.11.07: fp16 quantization of my own TensorFlow model

Environment: TensorFlow 1.15 + CUDA 10.0

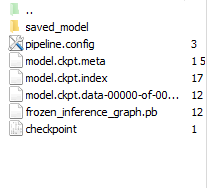

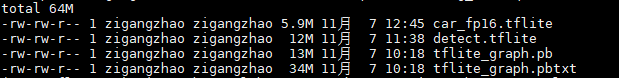

I trained a model with the TensorFlow Object Detection API, using the ssdlite_mobilenet_v2 network. After training, I ran export_inference_graph.py, which produced the following model files:

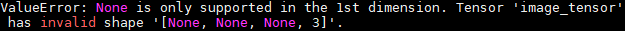

I tried to convert my trained model's saved_model.pb to .tflite by following the official tutorial, and ran into this problem:

Describe the problem

I want to convert a .pb model to a .tflite one. The model was trained with the TensorFlow Object Detection API. The input tensor shape is (None, None, None, 3), but it seems that tflite_convert doesn't support this kind of input.
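The complaint above comes down to the converter needing a fully defined input shape. A minimal sketch of that idea in plain Python, where `resolve_input_shape` is a hypothetical helper (not part of TensorFlow) and the 300x300 default is simply the size used later in this post:

```python
def resolve_input_shape(shape, default=(1, 300, 300, 3)):
    """Replace unknown (None) dimensions with the values from `default`."""
    if len(shape) != len(default):
        raise ValueError("rank mismatch: %r vs %r" % (shape, default))
    return [d if s is None else s for s, d in zip(shape, default)]


exported_shape = (None, None, None, 3)  # shape reported for the exported input
fixed_shape = resolve_input_shape(exported_shape)
print(fixed_shape)  # [1, 300, 300, 3]
```

A list like this is the kind of value that goes into the `input_shapes` dictionary passed to `tf.lite.TFLiteConverter.from_frozen_graph`.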

Next, I tried converting frozen_inference_graph.pb to .tflite.

The code is as follows:

import tensorflow as tf

graph_def_file=" /inference_graph/frozen_inference_graph.pb"

input_arrays=["image_tensor"]

output_arrays=["detection_boxes","detection_scores","detection_classes","num_detections"]

input_tensor={"image_tensor":[1,300,300,3]}

converter = tf.lite.TFLiteConverter.from_frozen_graph(graph_def_file, input_arrays, output_arrays,input_tensor)

converter.target_ops = [tf.lite.OpsSet.TFLITE_BUILTINS,tf.lite.OpsSet.SELECT_TF_OPS]

tflite_model = converter.convert()

open("detect.tflite", "wb").write(tflite_model)

Running this raises an error:

Solution:

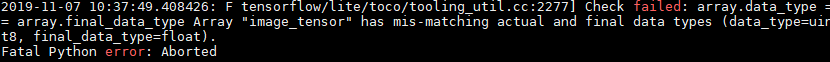

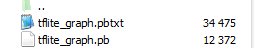

Run /models/research/object_detection/export_tflite_ssd_graph.py instead of export_inference_graph.py to obtain the tflite_graph.pb model, then convert tflite_graph.pb to .tflite.
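A sketch of how that export step might be invoked, built as an argument list in Python. The three paths are placeholders (assumptions, not the values used for this post), and the flag names are the ones I believe `export_tflite_ssd_graph.py` defines, so check them against your own checkout of the Object Detection API:

```python
import shlex


def build_export_command(pipeline_config, checkpoint_prefix, output_dir):
    """Build the command line for export_tflite_ssd_graph.py (not executed here)."""
    return [
        "python", "object_detection/export_tflite_ssd_graph.py",
        "--pipeline_config_path=" + pipeline_config,
        "--trained_checkpoint_prefix=" + checkpoint_prefix,
        "--output_directory=" + output_dir,
        "--add_postprocessing_op=true",
    ]


cmd = build_export_command(
    "training/pipeline.config",   # placeholder path
    "training/model.ckpt-XXXX",   # placeholder checkpoint prefix
    "quant",                      # placeholder output directory
)
print(" ".join(shlex.quote(arg) for arg in cmd))
```

The printed command is meant to be run from the models/research directory; passing `cmd` to `subprocess.run(cmd, check=True)` would be one way to execute it from Python.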

The conversion code is as follows:

import tensorflow as tf

graph_def_file=" /quant/tflite_graph.pb"

input_arrays=["normalized_input_image_tensor"]

output_arrays=['TFLite_Detection_PostProcess','TFLite_Detection_PostProcess:1','TFLite_Detection_PostProcess:2','TFLite_Detection_PostProcess:3']

input_tensor={"normalized_input_image_tensor":[1,300,300,3]}

converter = tf.lite.TFLiteConverter.from_frozen_graph(graph_def_file, input_arrays, output_arrays,input_tensor)

converter.allow_custom_ops=True

tflite_model = converter.convert()

open("detect.tflite", "wb").write(tflite_model)

This successfully produces the detect.tflite model.
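For reference, the four outputs of `TFLite_Detection_PostProcess` are usually described as boxes, class indices, scores, and the number of detections, in that order. A minimal sketch of consuming them with plain Python lists, assuming that order and normalized `[ymin, xmin, ymax, xmax]` boxes; `filter_detections` and the sample values are hypothetical, not output from the model in this post:

```python
def filter_detections(boxes, classes, scores, num_detections, threshold=0.5):
    """Keep the detections whose score is at least `threshold`."""
    kept = []
    for i in range(int(num_detections)):
        if scores[i] >= threshold:
            kept.append({"box": boxes[i], "class": int(classes[i]), "score": scores[i]})
    return kept


# Made-up values for a single image
boxes = [[0.1, 0.2, 0.5, 0.6], [0.3, 0.3, 0.9, 0.8], [0.0, 0.0, 0.1, 0.1]]
classes = [0.0, 1.0, 0.0]
scores = [0.92, 0.40, 0.75]

for det in filter_detections(boxes, classes, scores, num_detections=3.0):
    print(det)
```

With a real model, the four lists would come from the interpreter's output tensors for one image rather than from literals.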

Next comes fp16 quantization of the tflite_graph.pb model. The code is as follows:

import tensorflow as tf

graph_def_file=" /quant/tflite_graph.pb"

input_arrays=["normalized_input_image_tensor"]

output_arrays=['TFLite_Detection_PostProcess','TFLite_Detection_PostProcess:1','TFLite_Detection_PostProcess:2','TFLite_Detection_PostProcess:3']

input_tensor={"normalized_input_image_tensor":[1,300,300,3]}

converter = tf.lite.TFLiteConverter.from_frozen_graph(graph_def_file, input_arrays, output_arrays,input_tensor)

converter.target_ops = [tf.lite.OpsSet.TFLITE_BUILTINS,tf.lite.OpsSet.SELECT_TF_OPS]

converter.allow_custom_ops=True

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.target_spec.supported_types = [tf.lite.constants.FLOAT16]

#converter.post_training_quantize=True

tflite_fp16_model = converter.convert()

open("car_fp16.tflite", "wb").write(tflite_fp16_model)

This successfully produces the quantized model. (It was a winding road with plenty of obstacles.)
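As a rough illustration of what fp16 storage does to weights, the sketch below round-trips a few values through IEEE 754 half precision using Python's `struct` module. It is a standalone approximation of the idea, not what the converter runs internally, and the weight values are made up:

```python
import struct


def to_fp16_and_back(value):
    """Round-trip a Python float through IEEE 754 half precision."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


weights = [0.1, -1.5, 3.14159, 1e-3]  # made-up example weights

# Storage cost: 4 bytes per float32 value, 2 bytes per float16 value
fp32_bytes = len(struct.pack("<%df" % len(weights), *weights))
fp16_bytes = len(struct.pack("<%de" % len(weights), *weights))
print(fp32_bytes, fp16_bytes)  # 16 8

# Rounding error introduced by the fp16 representation
for w in weights:
    r = to_fp16_and_back(w)
    print(w, r, abs(w - r))
```

Storage is halved, and each value picks up a small rounding error, which is the trade-off behind the roughly 2x smaller .tflite file.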

Notes:

01. Introduction to TensorFlow Lite:

https://segmentfault.com/a/1190000018741468?utm_source=tag-newest

02. Introduction to tf.lite.TFLiteConverter:

https://www.w3cschool.cn/tensorflow_python/tf_lite_TFLiteConverter.html

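Follow-up experiment: the earlier conversion of frozen_inference_graph.pb to tflite failed. It turned out that the input and output nodes passed to the converter did not match the graph. After correcting them, the conversion works with the script below.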
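One way to catch this kind of mismatch before converting is to compare the requested names against the node names actually present in the graph. A minimal sketch: `load_node_names` and `missing_nodes` are hypothetical helpers, and the node list in the demo is hand-written for illustration rather than read from the real file:

```python
def load_node_names(pb_path):
    """Return all node names in a frozen GraphDef file (requires TensorFlow)."""
    import tensorflow as tf  # imported lazily so missing_nodes works without it

    graph_def = tf.compat.v1.GraphDef()
    with open(pb_path, "rb") as f:
        graph_def.ParseFromString(f.read())
    return [node.name for node in graph_def.node]


def missing_nodes(requested, available):
    """Return the requested node/tensor names that are not in the graph."""
    available = set(available)
    # "name:1" means output 1 of node "name", so drop the suffix before checking
    return [name for name in requested if name.split(":")[0] not in available]


# Hand-written node list; in practice: load_node_names("frozen_inference_graph.pb")
available = ["FeatureExtractor/MobilenetV2/MobilenetV2/input", "concat", "concat_1"]
requested = ["FeatureExtractor/MobilenetV2/MobilenetV2/input", "concat", "concat_2"]
print(missing_nodes(requested, available))  # ['concat_2']
```

An empty result means every requested input and output name exists in the graph.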

import tensorflow as tf

graph_def_file = "frozen_inference_graph.pb"

input_names = ["FeatureExtractor/MobilenetV2/MobilenetV2/input"]

print(input_names[0])

output_names = ["concat", "concat_1"]

input_tensor = {input_names[0]:[1,300,300,3]}

# frozen_inference_graph.pb --> tflite (fp32)

converter = tf.lite.TFLiteConverter.from_frozen_graph(graph_def_file, input_names, output_names, input_tensor)

converter.target_ops = [tf.lite.OpsSet.TFLITE_BUILTINS,tf.lite.OpsSet.SELECT_TF_OPS]

tflite_fp32_model = converter.convert()

open("fp32.tflite", "wb").write(tflite_fp32_model)

#fp16 quant

converter = tf.lite.TFLiteConverter.from_frozen_graph(graph_def_file, input_names, output_names, input_tensor)

converter.target_ops = [tf.lite.OpsSet.TFLITE_BUILTINS,tf.lite.OpsSet.SELECT_TF_OPS]

converter.allow_custom_ops = True

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.target_spec.supported_types = [tf.lite.constants.FLOAT16]

tflite_fp16_model = converter.convert()

open("fp16.tflite", "wb").write(tflite_fp16_model)

# int8 quant (hybrid quantization: only the weights are quantized), int8 range [-128, 127]

converter = tf.lite.TFLiteConverter.from_frozen_graph(graph_def_file, input_names, output_names, input_tensor)

converter.target_ops = [tf.lite.OpsSet.TFLITE_BUILTINS,tf.lite.OpsSet.SELECT_TF_OPS]

#converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.post_training_quantize = True

tflite_int8_model = converter.convert()

open("int8.tflite", "wb").write(tflite_int8_model)

# uint8 quant [0, 255]

converter = tf.lite.TFLiteConverter.from_frozen_graph(graph_def_file, input_names, output_names, input_tensor)

converter.target_ops = [tf.lite.OpsSet.TFLITE_BUILTINS,tf.lite.OpsSet.SELECT_TF_OPS]

converter.inference_type = tf.lite.constants.QUANTIZED_UINT8

input_arrays = converter.get_input_arrays()

converter.quantized_input_stats = {input_arrays[0]: (0, 255)} # mean, std_dev

converter.default_ranges_stats = (0, 255) # this setting is required, but it does not seem to have any effect

tflite_uint8_model = converter.convert()

open("uint8.tflite", "wb").write(tflite_uint8_model)
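The TensorFlow Lite 1.x converter documentation relates the `(mean, std_dev)` pair in `quantized_input_stats` to input values as real_value = (quantized_value - mean) / std_dev. A small worked example of that arithmetic; `dequantize_input` is a hypothetical helper, and the `(128, 128)` pair is shown only as a commonly seen alternative, not something used in this post:

```python
def dequantize_input(q, mean, std_dev):
    """Map a uint8 input value to the real value the model sees."""
    return (q - mean) / std_dev


# (mean, std_dev) = (0, 255), as in the script above: uint8 [0, 255] -> [0.0, 1.0]
print(dequantize_input(0, 0, 255), dequantize_input(255, 0, 255))      # 0.0 1.0

# (mean, std_dev) = (128, 128): uint8 [0, 255] -> roughly [-1.0, 1.0]
print(dequantize_input(0, 128, 128), dequantize_input(255, 128, 128))  # -1.0 0.9921875
```

Whichever pair is chosen should reproduce the input range the network was trained with, so it is worth checking against the preprocessing in the training pipeline.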

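This post describes how to use TensorFlow 1.15 to convert an ssdlite_mobilenet_v2 model trained with the Object Detection API to the .tflite format. It records the problems encountered during conversion and their solutions, including the error when converting saved_model.pb to .tflite and the steps for fp16 quantization.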
