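I started out writing KNN in Python, but it had no GPU support and ran far too slowly, so I dropped it. After a long search I finally found an implementation using a TensorFlow CNN.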

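I had previously followed 莫烦 (Morvan)'s videos to write a TensorFlow demo that recognizes the MNIST dataset, but it only printed the accuracy. I didn't know how to read a CSV file, feed the vectors into TF, or save the trained weights. It really came down to not knowing TensorFlow well, so I went back and studied it.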

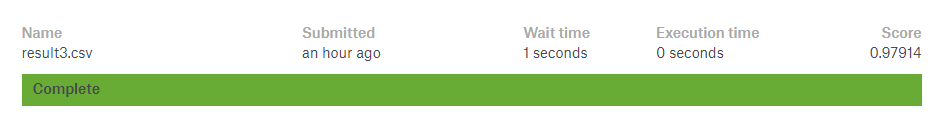

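This one also uses two convolution + pooling layers and two fully connected layers, followed by softmax classification, and reaches 97.9% accuracy. See the code for the details ^_^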

```python
import numpy as np
import tensorflow as tf  # TensorFlow 1.x API (placeholder / Session)
import os
import pandas as pd

# Read the CSVs: x_train is the 42000*784 training matrix, x_test the 28000*784 test matrix,
# label_train holds the 42000 training labels
train = pd.read_csv('E:/all/train.csv')
test = pd.read_csv('E:/all/test.csv')

x_train = train.iloc[:, 1:].values       # column 0 is the label, the rest are pixels
x_train = x_train.astype(np.float32)     # np.float was removed in NumPy 1.24
x_test = test.iloc[:, :].values
x_test = x_test.astype(np.float32)

# Scale pixel values from 0..255 to 0..1
x_train = np.multiply(x_train, 1.0/255)
x_test = np.multiply(x_test, 1.0/255)

image_size = x_train.shape[1]                                               # 784
image_height = image_width = np.ceil(np.sqrt(image_size)).astype(np.uint8)  # 28

label_train = train.iloc[:, 0].values
label_count = np.unique(label_train).shape[0]   # 10 classes

def dense_to_one_hot(label_dense, count):
    # Build a (42000, 10) matrix: if sample i has label j, entry (i, j) is 1
    num_labels = label_dense.shape[0]
    index_offset = np.arange(num_labels) * count
    labels_one_hot = np.zeros((num_labels, count))
    labels_one_hot.flat[index_offset + label_dense.ravel()] = 1
    return labels_one_hot

# Convert label_train into one-hot labels, shape 42000*10
labels = dense_to_one_hot(label_train, label_count)
labels = labels.astype(np.uint8)
```
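The `.flat` trick in `dense_to_one_hot` works because row i of a row-major (n, count) matrix starts at flat position i*count, so the 1 for label j goes at i*count + j. Here is a minimal pure-Python sketch of the same index arithmetic; the name `one_hot_flat` is hypothetical and only for illustration:

```python
def one_hot_flat(labels, count):
    """Build one-hot rows through a flat buffer, mirroring dense_to_one_hot."""
    n = len(labels)
    flat = [0] * (n * count)            # row-major buffer of shape (n, count)
    for i, j in enumerate(labels):
        flat[i * count + j] = 1         # index_offset[i] + label
    # Cut the flat buffer back into n rows of length count
    return [flat[i * count:(i + 1) * count] for i in range(n)]

print(one_hot_flat([2, 0, 1], 3))
# [[0, 0, 1], [1, 0, 0], [0, 1, 0]]
```

In NumPy the same result can also be had with `np.eye(count)[label_dense]`, which indexes rows of an identity matrix.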

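```python
# Split into batches of 128. For the 42000 training images that is 328 full batches
# plus 16 leftover samples, which the training loop feeds as one extra batch
batch_size = 128
n_batch = len(x_train) // batch_size
```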
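To make the batch arithmetic concrete, the sketch below (plain Python; `batch_ranges` is a hypothetical helper, not part of the script) yields the same slice bounds the training loop uses: the full batches first, then the remainder.

```python
def batch_ranges(n_samples, batch_size):
    """Yield (start, end) slice bounds: full batches first, then the remainder."""
    n_batch = n_samples // batch_size          # same as int(len(x_train)/batch_size)
    for b in range(n_batch):
        yield b * batch_size, (b + 1) * batch_size
    if n_batch * batch_size < n_samples:       # leftover samples, if any
        yield n_batch * batch_size, n_samples

ranges = list(batch_ranges(42000, 128))
print(len(ranges), ranges[-1])   # 329 (41984, 42000)
```

The last slice holds only 16 samples (42000 - 328*128), so every training image is seen exactly once per epoch.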

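```python
# Define the inputs: x holds flattened 28*28 images, y holds one-hot labels
x = tf.placeholder(tf.float32, [None, 784])
y = tf.placeholder(tf.float32, [None, 10])

def weight_variable(shape):
    # Weights drawn from a truncated normal distribution
    initial = tf.truncated_normal(shape, stddev=0.1)
    return tf.Variable(initial)

def bias_variable(shape):
    # Slightly positive biases so the ReLUs don't start out dead
    initial = tf.constant(0.1, shape=shape)
    return tf.Variable(initial)

def conv2d(x, w):
    # Stride 1 with SAME padding: output keeps the input's height and width
    return tf.nn.conv2d(x, w, strides=[1, 1, 1, 1], padding='SAME')

def max_pool_2x2(x):
    # 2x2 window with stride 2: halves the height and width
    return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
```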
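With `padding='SAME'`, TensorFlow's output size is ceil(input / stride), which is why stride-1 convolutions keep 28x28 and each 2x2/stride-2 pool halves it. A plain-Python sketch of both ideas follows; `same_out` and `max_pool_2x2_py` are hypothetical names chosen so they don't clash with the script's `max_pool_2x2`:

```python
import math

def same_out(size, stride):
    # 'SAME' padding: output size = ceil(input size / stride)
    return math.ceil(size / stride)

def max_pool_2x2_py(img):
    """2x2 max pooling with stride 2 on a list-of-lists image (SAME-style edges)."""
    h, w = len(img), len(img[0])
    out = []
    for r in range(0, h, 2):
        row = []
        for c in range(0, w, 2):
            # On odd sizes the window hangs off the bottom/right edge;
            # the missing (padded) cells simply don't take part in the max
            window = [img[i][j] for i in range(r, min(r + 2, h))
                                for j in range(c, min(c + 2, w))]
            row.append(max(window))
        out.append(row)
    return out

img = [[1, 3, 2, 0],
       [4, 2, 1, 5],
       [0, 1, 7, 2],
       [3, 6, 1, 1]]
print(max_pool_2x2_py(img))            # [[4, 5], [6, 7]]
print(same_out(28, 2), same_out(14, 2))  # 14 7
```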

```python
# Reshape x into 28*28 single-channel images
x_image = tf.reshape(x, [-1, 28, 28, 1])

# Two conv + pool layers, two fully connected layers, then softmax classification
# (dropout is used to reduce overfitting)
W_conv1 = weight_variable([5, 5, 1, 32])
b_conv1 = bias_variable([32])
h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)   # 28x28x32
h_pool1 = max_pool_2x2(h_conv1)                              # 14x14x32

W_conv2 = weight_variable([5, 5, 32, 64])
b_conv2 = bias_variable([64])
h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2) + b_conv2)    # 14x14x64
h_pool2 = max_pool_2x2(h_conv2)                              # 7x7x64

h_pool2_flat = tf.reshape(h_pool2, [-1, 7*7*64])
w_fc1 = weight_variable([7*7*64, 1024])
b_fc1 = bias_variable([1024])
h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, w_fc1) + b_fc1)
```
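Where does `7*7*64` come from? The sketch below traces the tensor shape through both conv/pool stages and counts the trainable parameters of the network as defined above. It is plain Python bookkeeping (the function `walk_shapes` is hypothetical), assuming the 5x5 kernels, SAME padding and layer widths used in the script:

```python
import math

def walk_shapes(side=28):
    """Trace (height, width, channels) through the network and count parameters."""
    params = 0
    h = w = side
    c = 1                                    # single grayscale channel
    for out_c in (32, 64):                   # conv1, conv2: 5x5 kernels, stride 1
        params += 5 * 5 * c * out_c + out_c  # kernel weights + biases
        c = out_c                            # stride 1 + SAME keeps h and w
        h, w = math.ceil(h / 2), math.ceil(w / 2)  # 2x2 max pool, stride 2
    flat = h * w * c                         # length of h_pool2_flat
    params += flat * 1024 + 1024             # fc1 weights + biases
    params += 1024 * 10 + 10                 # fc2 weights + biases
    return (h, w, c), flat, params

print(walk_shapes())   # ((7, 7, 64), 3136, 3274634)
```

Almost all of the roughly 3.27 million parameters sit in the first fully connected layer (3136*1024 weights), which is one reason dropout is applied right after it.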

```python
keep_prob = tf.placeholder(tf.float32)          # 0.5 while training, 1.0 when predicting
h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

w_fc2 = weight_variable([1024, 10])
b_fc2 = bias_variable([10])
y_conv = tf.matmul(h_fc1_drop, w_fc2) + b_fc2   # raw logits
prediction = tf.nn.softmax(y_conv)              # class probabilities
```
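In TensorFlow 1.x, `tf.nn.dropout(x, keep_prob)` keeps each element with probability `keep_prob` and scales the kept ones by `1/keep_prob` (zeroing the rest). A minimal pure-Python sketch of that "inverted dropout" rule, using a hypothetical `dropout` helper over a flat list of activations:

```python
import random

def dropout(values, keep_prob, rng=random):
    """Inverted dropout: keep each value with probability keep_prob and
    scale it by 1/keep_prob, otherwise output 0."""
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]

rng = random.Random(0)
print(dropout([1.0, 2.0, 3.0, 4.0], 0.5, rng))   # each entry is 0.0 or doubled
print(dropout([1.0, 2.0, 3.0, 4.0], 1.0, rng))   # [1.0, 2.0, 3.0, 4.0]
```

Because the kept values are scaled up during training, the expected activation stays the same, so feeding `keep_prob: 1.0` at prediction time needs no extra rescaling.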

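```python
# Loss: mean softmax cross-entropy, minimized with the Adadelta optimizer
# (softmax_cross_entropy_with_logits applies softmax itself, so it gets the raw logits y_conv)
loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=y_conv))
train_step_1 = tf.train.AdadeltaOptimizer(0.1).minimize(loss)
```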
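The loss above is the cross-entropy between the one-hot label y and softmax(logits), averaged over the batch. A plain-Python sketch of that formula (the helpers `softmax`, `softmax_cross_entropy` and `mean_loss` are hypothetical, not TensorFlow functions), computed directly from logits with the log-sum-exp trick so large logits don't overflow:

```python
import math

def softmax(logits):
    m = max(logits)                          # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]

def softmax_cross_entropy(one_hot, logits):
    """-sum_k y_k * log(softmax(z)_k), computed from raw logits via log-sum-exp."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return -sum(y * (z - log_sum) for y, z in zip(one_hot, logits))

def mean_loss(batch_labels, batch_logits):
    # Plays the role of tf.reduce_mean over the per-example losses
    losses = [softmax_cross_entropy(y, z) for y, z in zip(batch_labels, batch_logits)]
    return sum(losses) / len(losses)

label = [0, 0, 1] + [0] * 7
print(softmax_cross_entropy(label, [0.0] * 10))   # ln(10), about 2.302585
```

With all-zero logits the model assigns 1/10 to every digit, so the loss equals ln(10); that is roughly where training starts from.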

```python
# Accuracy: fraction of samples whose predicted class matches the label
correction_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_conv, 1))
accuracy = tf.reduce_mean(tf.cast(correction_prediction, tf.float32))
global_step = tf.Variable(0, name='global_step', trainable=False)

# Initialize the TensorFlow variables and create a Saver for the weights
init = tf.global_variables_initializer()
saver = tf.train.Saver()
```
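The accuracy op compares the argmax of each one-hot label with the argmax of each logit row. Since softmax is monotonic, the argmax of `y_conv` is the same as the argmax of `prediction`, so skipping the softmax here changes nothing. A small pure-Python sketch of the same computation (hypothetical helpers `argmax` and `accuracy`):

```python
def argmax(row):
    # Index of the largest entry; ties resolve to the first index in this sketch
    return max(range(len(row)), key=lambda k: row[k])

def accuracy(one_hot_labels, logits):
    """Fraction of rows where argmax(label) == argmax(logits)."""
    correct = [argmax(y) == argmax(z) for y, z in zip(one_hot_labels, logits)]
    return sum(correct) / len(correct)

labels = [[0, 1, 0], [1, 0, 0], [0, 0, 1], [0, 1, 0]]
logits = [[0.1, 2.0, -1.0], [0.3, 0.2, 0.1], [1.5, 0.0, 0.2], [0.0, 0.5, 0.4]]
print(accuracy(labels, logits))   # 0.75
```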

```python
with tf.Session() as sess:
    sess.run(init)
    # Train for 20 epochs; each epoch feeds the whole training set in batches of 128
    for epoch in range(20):
        print('epoch', epoch + 1)
        for batch in range(n_batch):
            batch_x = x_train[batch*batch_size:(batch+1)*batch_size]
            batch_y = labels[batch*batch_size:(batch+1)*batch_size]
            sess.run(train_step_1, feed_dict={x: batch_x, y: batch_y, keep_prob: 0.5})
        # Feed the leftover samples that don't fill a whole batch (skip if there are none)
        batch_x = x_train[n_batch*batch_size:]
        batch_y = labels[n_batch*batch_size:]
        if len(batch_x) > 0:
            sess.run(train_step_1, feed_dict={x: batch_x, y: batch_y, keep_prob: 0.5})

    # Save the trained model (weights)
    saver.save(sess, 'E:/1/model.ckpt')

    # Restore the model, feed the whole test set, predict, and write the labels to filename
    saver.restore(sess, 'E:/1/model.ckpt')
    filename = 'E:/1/result3.csv'
    test_batch_x = x_test[:]
    myPrediction = sess.run(prediction, feed_dict={x: test_batch_x, keep_prob: 1.0})
    label_test = np.argmax(myPrediction, axis=1)
    pd.DataFrame(label_test).to_csv(filename)
```
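`pd.DataFrame(label_test).to_csv(filename)` writes a 0-based index column and a column header of `0`. The 42000/28000 train/test sizes match Kaggle's Digit Recognizer data; assuming the CSV is meant as a submission there, the expected layout is an `ImageId,Label` header with 1-based ids. A stdlib sketch of that layout (the helper `write_predictions` is hypothetical):

```python
import csv
import io

def write_predictions(labels, f, start_id=1):
    """Write an ImageId,Label CSV with 1-based ids (assumed submission layout)."""
    writer = csv.writer(f, lineterminator='\n')
    writer.writerow(['ImageId', 'Label'])
    for i, label in enumerate(labels, start=start_id):
        writer.writerow([i, int(label)])

buf = io.StringIO()
write_predictions([2, 0, 9], buf)
print(buf.getvalue())
# ImageId,Label
# 1,2
# 2,0
# 3,9
```

With pandas the equivalent would be `pd.DataFrame({'ImageId': np.arange(1, len(label_test) + 1), 'Label': label_test}).to_csv(filename, index=False)`.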
