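1: Technology selection

2: E-commerce user behavior analysis

• Statistical analysis: real-time statistics, using Flink

– Clicks, page views

– Hot items, recently hot items, hot items by category, traffic statistics

• Preference statistics: offline analysis (large data volumes), using MapReduce or Spark

– Favorites, likes, ratings, tagging

– User profiles, recommendation lists (combining feature engineering with machine learning algorithms)

• Risk control: real-time risk control, using Flink

– Order placement, payment, login

– Fake-order monitoring, order expiry monitoring, malicious login monitoring (repeated login failures within a short time)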

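3: Real-time statistical analysis

• Real-time hot item statistics

• Real-time hot page traffic statistics

• Real-time site traffic statistics

• App marketing channel statistics

• Page ad click statistics

4: Business processes and risk control

• Page ad blacklist filtering

• Malicious login monitoring

• Order payment timeout monitoring

• Real-time payment reconciliation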

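5: User behavior data: UserBehavior.csv (data after ETL)

| Field | Type | Description |
| --- | --- | --- |
| userId | Long | User ID |
| itemId | Long | Item ID |
| categoryId | Int | ID of the category the item belongs to |
| behavior | String | User behavior type (for example pv) |
| timestamp | Long | Time of the behavior, in seconds |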
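The column layout above maps naturally onto a case class. The following is a minimal sketch of parsing one CSV line defensively, so that a malformed record yields `None` instead of an exception. The `UserBehaviorParser` object and the sample line in the usage note are assumptions made for illustration; they are not part of the original project.

```scala
import scala.util.Try

// Field names and types follow the column description above.
final case class UserBehavior(userId: Long, itemId: Long, categoryId: Int, behavior: String, timestamp: Long)

// Hypothetical helper, not part of the original project.
object UserBehaviorParser {
  // Returns None when the line does not have exactly five fields
  // or when a numeric field cannot be parsed.
  def parse(line: String): Option[UserBehavior] = {
    val arr = line.split(",").map(_.trim)
    if (arr.length != 5) None
    else Try(UserBehavior(arr(0).toLong, arr(1).toLong, arr(2).toInt, arr(3), arr(4).toLong)).toOption
  }
}
```

For example, `UserBehaviorParser.parse("543462,1715,1464116,pv,1511658000")` yields a populated `UserBehavior`, while a line with missing or non-numeric fields yields `None`.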

6: Web server log: apache.log

Fields: IP, userId, access time, HTTP method, URL

83.149.9.216 - - 17/05/2015:10:05:03 +0000 GET /presentations/logstash-monitorama-2013/images/kibana-search.png

83.149.9.216 - - 17/05/2015:10:05:43 +0000 GET /presentations/logstash-monitorama-2013/images/kibana-dashboard3.png

83.149.9.216 - - 17/05/2015:10:05:47 +0000 GET /presentations/logstash-monitorama-2013/plugin/highlight/highlight.js
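A log line in this layout can be split on whitespace and its access time converted to an epoch timestamp, which is what event-time processing needs. This is a minimal sketch under stated assumptions: the `ApacheLogEvent` case class and `ApacheLogParser` object are hypothetical names, and treating the second token as the user ID is a guess based on the field order listed above.

```scala
import java.text.SimpleDateFormat
import java.util.Locale
import scala.util.Try

// Hypothetical record type; eventTime is in milliseconds since the epoch.
final case class ApacheLogEvent(ip: String, userId: String, eventTime: Long, method: String, url: String)

// Hypothetical helper, not part of the original project.
object ApacheLogParser {
  def parse(line: String): Option[ApacheLogEvent] = {
    val arr = line.trim.split("\\s+")
    // Expected tokens: ip, userId, -, date:time, zone offset, method, url
    if (arr.length < 7) None
    else {
      // SimpleDateFormat is not thread-safe, so a new instance is created per call.
      val fmt = new SimpleDateFormat("dd/MM/yyyy:HH:mm:ss Z", Locale.ENGLISH)
      Try(fmt.parse(arr(3) + " " + arr(4)).getTime).toOption
        .map(ts => ApacheLogEvent(arr(0), arr(1), ts, arr(5), arr(6)))
    }
  }
}
```

Lines that are too short or whose date does not match the pattern come back as `None`, so they can be filtered out before windowing.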

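7: Project modules

Basic requirements

– Compute the hot items of the last hour, updated every 5 minutes

– Popularity is measured by the number of page views ("pv").

Approach

Count the pv events with a sliding window: window size 1 hour, slide 5 minutes.

DataStream ---- keyBy("itemId") ---- window(1 hour, 5 minutes)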
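With a 1-hour window sliding every 5 minutes, each event falls into 12 overlapping windows. The sketch below reproduces that assignment in plain Scala, without Flink, assuming windows aligned to the epoch with no offset. The `SlidingWindows` object is a hypothetical name introduced only for illustration.

```scala
// Hypothetical helper that mimics sliding-window assignment with zero offset.
object SlidingWindows {
  // Start timestamps of every window [start, start + size) that contains `timestamp`.
  // All values use the same time unit (for example milliseconds).
  def windowStarts(timestamp: Long, size: Long, slide: Long): List[Long] = {
    // Latest window start that is not after the event
    val lastStart = timestamp - Math.floorMod(timestamp, slide)
    // Walk backwards one slide at a time while the window still covers the event
    Iterator.iterate(lastStart)(_ - slide).takeWhile(_ > timestamp - size).toList
  }
}
```

Calling `SlidingWindows.windowStarts(t, 3600000L, 300000L)` for any event time `t` in milliseconds returns 12 window starts, which is why every pv event contributes to 12 separate counts.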

* Rank the items within each window

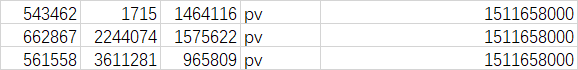

* Output: ItemViewCount(itemId, windowEnd, count)
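The per-window ranking can be illustrated on an in-memory collection before bringing in Flink state and timers: group the counts by `windowEnd`, sort each group by count in descending order, and keep the first N. This is a minimal sketch; the `TopN` object is a hypothetical helper, and the streaming job buffers the same data in keyed state rather than a `Seq`.

```scala
// Shape of the window output described above.
final case class ItemViewCount(itemId: Long, windowEnd: Long, count: Long)

// Hypothetical helper for illustration only.
object TopN {
  // For every window end, the n items with the highest view counts, highest first.
  def topNPerWindow(counts: Seq[ItemViewCount], n: Int): Map[Long, Seq[ItemViewCount]] =
    counts.groupBy(_.windowEnd).map { case (windowEnd, items) =>
      windowEnd -> items.sortBy(-_.count).take(n)
    }
}
```

In the streaming version, the grouping corresponds to `keyBy("windowEnd")`, and the sort runs once all counts of a window have arrived.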

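1: Create a new Maven project: com.atguigu.UserBehaviorAnalySis

2: Create a new module HotitemsAnalysis (real-time hot item statistics)

For unified management, the dependencies are declared in the parent project's POM. Controlling the module versions from the parent did not work here, so the concrete versions are declared directly.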

<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>

  <groupId>com.atguigu</groupId>
  <artifactId>UserBehaviorAnalySis</artifactId>
  <packaging>pom</packaging>
  <version>1.0-SNAPSHOT</version>

  <modules>
    <module>HotitemsAnalysis</module>
  </modules>

  <dependencies>
    <dependency>
      <groupId>org.apache.flink</groupId>
      <artifactId>flink-scala_2.12</artifactId>
      <version>1.10.1</version>
    </dependency>
    <dependency>
      <groupId>org.apache.flink</groupId>
      <artifactId>flink-streaming-scala_2.12</artifactId>
      <version>1.10.1</version>
    </dependency>
    <dependency>
      <groupId>org.apache.kafka</groupId>
      <artifactId>kafka-clients</artifactId>
      <version>0.11.0.0</version>
    </dependency>
    <dependency>
      <groupId>org.apache.flink</groupId>
      <artifactId>flink-connector-kafka-0.11_2.12</artifactId>
      <version>1.10.1</version>
    </dependency>
  </dependencies>

  <build>
    <plugins>
      <!-- Compiles the Scala sources into class files -->
      <plugin>
        <groupId>net.alchim31.maven</groupId>
        <artifactId>scala-maven-plugin</artifactId>
        <version>4.4.0</version>
        <executions>
          <execution>
            <!-- Bound to Maven's compile phase -->
            <goals>
              <goal>compile</goal>
            </goals>
          </execution>
        </executions>
      </plugin>
      <!-- Builds a single jar that includes all dependencies -->
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-assembly-plugin</artifactId>
        <version>3.3.0</version>
        <configuration>
          <descriptorRefs>
            <descriptorRef>jar-with-dependencies</descriptorRef>
          </descriptorRefs>
        </configuration>
        <executions>
          <execution>
            <id>make-assembly</id>
            <phase>package</phase>
            <goals>
              <goal>single</goal>
            </goals>
          </execution>
        </executions>
      </plugin>
    </plugins>
  </build>
</project>

package com.hotitems_analysis

import java.sql.Timestamp
import java.util
import java.util.Properties

import org.apache.flink.api.common.functions.AggregateFunction
import org.apache.flink.api.common.serialization.SimpleStringSchema
import org.apache.flink.api.common.state.{ListState, ListStateDescriptor}
import org.apache.flink.api.java.tuple.{Tuple, Tuple1}
import org.apache.flink.configuration.Configuration
import org.apache.flink.streaming.api.TimeCharacteristic
import org.apache.flink.streaming.api.functions.KeyedProcessFunction
import org.apache.flink.streaming.api.scala._
import org.apache.flink.streaming.api.scala.function.WindowFunction
import org.apache.flink.streaming.api.windowing.time.Time
import org.apache.flink.streaming.api.windowing.windows.TimeWindow
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer011
import org.apache.flink.util.Collector

import scala.collection.mutable.ListBuffer

// The two case classes are not shown in the original listing;
// their fields follow the data description and the usages below.
// Input record: one line of UserBehavior.csv (timestamp in seconds)
case class UserBehavior(userId: Long, itemId: Long, categoryId: Int, behavior: String, timestamp: Long)

// Window aggregation result: view count of one item in one window
case class ItemViewCount(itemId: Long, windowEnd: Long, count: Long)

object Hotitems {
  def main(args: Array[String]): Unit = {
    // 1. Create the stream execution environment
    val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment
    // env.setParallelism(1)

    // 2. Use event-time semantics
    env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)

    // 3. Alternative input: read the data from a file
    // val inputStream: DataStream[String] = env.readTextFile("in/User2.csv")

    // 4. Define the Kafka source
    val properties = new Properties()
    properties.setProperty("bootstrap.servers", "hadoop203:9092")
    properties.setProperty("group.id", "consumer-group")
    properties.setProperty("key.deserializer",
      "org.apache.kafka.common.serialization.StringDeserializer")
    properties.setProperty("value.deserializer",
      "org.apache.kafka.common.serialization.StringDeserializer")
    val inputStream: DataStream[String] = env.addSource(
      new FlinkKafkaConsumer011[String]("hotitiems", new SimpleStringSchema(), properties))

    // 5. Convert each line to the case class and assign timestamps.
    // The data has been through ETL and contains no out-of-order records,
    // so ascending timestamps suffice and no custom watermark generator is needed.
    val dataStream: DataStream[UserBehavior] = inputStream
      .map(data => {
        val arr = data.split(",")
        UserBehavior(arr(0).toLong, arr(1).toLong, arr(2).toInt, arr(3), arr(4).toLong)
      })
      .assignAscendingTimestamps(_.timestamp * 1000L) // seconds to milliseconds

    // 6. Window aggregation, producing ItemViewCount(itemId, windowEnd, count)
    val aggStream: DataStream[ItemViewCount] = dataStream
      .filter(_.behavior == "pv") // keep page views only
      .keyBy("itemId") // group by itemId
      .timeWindow(Time.hours(1), Time.minutes(5)) // sliding window: 1-hour size, 5-minute slide
      .aggregate(new CountAgg(), new ItemViewWindowResult())

    // 7. Group by window end time and compute the top N with a custom process function
    val resultStream: DataStream[String] = aggStream
      .keyBy("windowEnd")
      .process(new TopNHotItems(5))

    resultStream.print()
    env.execute()
  }
}

// Custom pre-aggregation (incremental aggregation) function.
// Type parameters: input UserBehavior, accumulator Long (the count), output Long (the count)
class CountAgg() extends AggregateFunction[UserBehavior, Long, Long] {
  // Called once per record: increments the accumulator
  override def add(value: UserBehavior, accumulator: Long): Long = accumulator + 1

  // Initial accumulator value
  override def createAccumulator(): Long = 0L

  // Final result emitted for the window
  override def getResult(accumulator: Long): Long = accumulator

  // Merges two accumulators, for example when session windows are merged
  override def merge(a: Long, b: Long): Long = a + b
}

// The output type of CountAgg is the input type of ItemViewWindowResult
class ItemViewWindowResult() extends WindowFunction[Long, ItemViewCount, Tuple, TimeWindow] {
  override def apply(key: Tuple, window: TimeWindow, input: Iterable[Long], out: Collector[ItemViewCount]): Unit = {
    val itemId = key.asInstanceOf[Tuple1[Long]].f0 // note: this is the Java Tuple1
    val windowEnd = window.getEnd
    val count = input.iterator.next() // the single pre-aggregated count
    out.collect(ItemViewCount(itemId, windowEnd, count))
  }
}

class TopNHotItems(topSize: Int) extends KeyedProcessFunction[Tuple, ItemViewCount, String] {
  // Define the state first: a ListState that buffers the counts of one window
  // (the stream is keyed by windowEnd, so each window has its own list)
  private var itemViewCountListState: ListState[ItemViewCount] = _

  override def open(parameters: Configuration): Unit = {
    // The descriptor arguments were lost in the original listing; the state name used here is arbitrary
    itemViewCountListState = getRuntimeContext.getListState(
      new ListStateDescriptor[ItemViewCount]("itemViewCount-list", classOf[ItemViewCount]))
  }

  override def processElement(value: ItemViewCount, ctx: KeyedProcessFunction[Tuple, ItemViewCount, String]#Context, out: Collector[String]): Unit = {
    // Add every incoming record to the list state
    itemViewCountListState.add(value)
    // Register an event-time timer that fires at windowEnd + 1
    // (registering the same timestamp again for the same key has no additional effect)
    ctx.timerService().registerEventTimeTimer(value.windowEnd + 1)
  }

  // When the timer fires, all counts for this window have arrived and can be sorted
  override def onTimer(timestamp: Long, ctx: KeyedProcessFunction[Tuple, ItemViewCount, String]#OnTimerContext, out: Collector[String]): Unit = {
    // Copy the list state into a ListBuffer to make sorting easier
    val allItemViewCount: ListBuffer[ItemViewCount] = ListBuffer()
    val iter: util.Iterator[ItemViewCount] = itemViewCountListState.get().iterator()
    while (iter.hasNext) {
      allItemViewCount += iter.next()
    }

    // Clear the state
    itemViewCountListState.clear()

    // Sort by count in descending order and keep the top N
    val sortedItemViewCounts: ListBuffer[ItemViewCount] =
      allItemViewCount.sortBy(_.count)(Ordering.Long.reverse).take(topSize)

    // Format the ranking as a string for printing
    val result = new StringBuilder
    result.append("Window end time: ").append(new Timestamp(timestamp - 1)).append("\n")

    // Iterate over the ItemViewCount entries in the result list
    for (i <- sortedItemViewCounts.indices) {
      val currentItemViewCount = sortedItemViewCounts(i)
      result.append("NO").append(i + 1).append(":")
        .append("itemId=").append(currentItemViewCount.itemId).append("\t")
        .append("views=").append(currentItemViewCount.count).append("\n")
    }

    result.append("===========================\n\n")

    // Slow the output down so it is readable on the console
    Thread.sleep(1000)
    out.collect(result.toString())
  }
}

package com.hotitems_analysis

import java.util.Properties

import org.apache.kafka.clients.producer.{KafkaProducer, ProducerRecord}

import scala.io.BufferedSource

object KafkaProducerUtil {
  def main(args: Array[String]): Unit = {
    writeToKafka("hotitiems")
  }

  // Reads the CSV file and writes it to the given Kafka topic, one record per line
  def writeToKafka(topic: String): Unit = {
    val properties = new Properties()
    properties.setProperty("bootstrap.servers", "hadoop203:9092")
    properties.setProperty("key.serializer",
      "org.apache.kafka.common.serialization.StringSerializer")
    properties.setProperty("value.serializer",
      "org.apache.kafka.common.serialization.StringSerializer")

    val producer = new KafkaProducer[String, String](properties)

    // Read the data from the file and write it to Kafka line by line
    val source: BufferedSource = io.Source.fromFile("in/UserBehavior.csv")
    for (line <- source.getLines()) {
      val record = new ProducerRecord[String, String](topic, line)
      producer.send(record)
    }

    source.close()
    producer.close()
  }
}
