Datawhale Beginner's Introduction to Data Mining: Task 5, Model Fusion

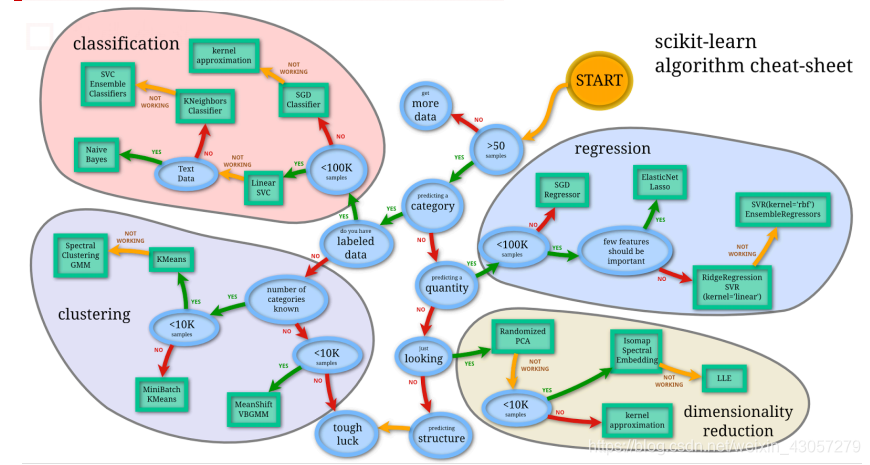

A classic diagram to open with.

Modality processing

Model fusion is an important step in the later stages of a competition. Broadly, it comes in the following types:

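- Simple weighted fusion:
  - Regression (or classification probabilities): arithmetic mean fusion, geometric mean fusion;
  - Classification: voting;
  - Combined: rank averaging, log fusion.
- Stacking/blending:
  - Build multi-layer models, feeding one layer's predictions into the next layer to fit a further prediction.
- Boosting/bagging (already used in XGBoost, AdaBoost and GBDT):
  - Ensemble methods built from many trees.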
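The simple fusion rules listed above can be sketched in a few lines of NumPy. This is a minimal illustration with hypothetical prediction arrays, not code from the original tutorial. The geometric mean is computed as the exp of the mean log, which is one common reading of "log fusion". Ranks are ordinal, and ties are broken by position.

```python
import numpy as np

# Hypothetical predictions from three models for the same four samples
# (regression outputs or positive-class probabilities); rows = models.
preds = np.array([
    [0.20, 0.60, 0.90, 0.40],   # model A
    [0.30, 0.50, 0.80, 0.60],   # model B
    [0.10, 0.70, 0.95, 0.50],   # model C
])

# Arithmetic mean fusion: plain average across models
arith = preds.mean(axis=0)

# Geometric mean fusion: exp(mean(log p)); requires strictly positive values
geo = np.exp(np.log(preds).mean(axis=0))

# Rank averaging: convert each model's scores to ranks 1..n, average the ranks,
# then rescale to (0, 1]; useful when models output scores on different scales
ranks = preds.argsort(axis=1).argsort(axis=1) + 1
rank_avg = ranks.mean(axis=0) / preds.shape[1]

# Hard voting for classification: most frequent label per sample (column)
labels = np.array([
    [0, 1, 2, 1],   # model A's predicted classes
    [0, 2, 2, 1],   # model B
    [1, 1, 2, 0],   # model C
])
vote = np.array([np.bincount(col).argmax() for col in labels.T])

print("arithmetic:", arith)
print("geometric: ", geo)
print("rank avg:  ", rank_avg)
print("vote:      ", vote)
```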

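To improve the model's generalization and avoid overfitting, two measures are commonly used:

- Choose a linear model for the second-level model whenever possible.

- Use k-fold cross-validation.

Split the training data into k folds, train on k-1 of them and use the remaining fold for validation. Repeat this k times so that every fold is held out exactly once.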
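A minimal sketch of the k-fold idea follows. It assumes scikit-learn's `KFold` and uses synthetic data from `make_regression` in place of the competition data. Every training row receives an out-of-fold prediction from a model that never saw that row. Out-of-fold predictions like these are what stacking typically feeds to the second-level model.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import KFold

# Synthetic regression data stands in for the real training set (assumption)
X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)

kf = KFold(n_splits=5, shuffle=True, random_state=0)
oof_pred = np.zeros(len(y))                  # one out-of-fold prediction per row
times_held_out = np.zeros(len(y), dtype=int)

for train_idx, val_idx in kf.split(X):
    model = Ridge(alpha=1.0)
    model.fit(X[train_idx], y[train_idx])          # train on k-1 folds
    oof_pred[val_idx] = model.predict(X[val_idx])  # predict the held-out fold
    times_held_out[val_idx] += 1

# Each row was held out exactly once, so oof_pred contains no in-sample predictions
print("rows held out once:", (times_held_out == 1).all())
```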

Methods in brief

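Fusion of regression results / classification probabilities:

- Simple weighted averaging: fuse the model outputs directly. Compute the MAE of the mean, median and weighted fusions; the weighted result improves on the earlier results.

- Stacking fusion (regression): the result improves further. Note that the second-level Stacking model should not be too complex. Otherwise it overfits the training set and does not perform well on the test set.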
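The following sketch shows regression stacking with a deliberately simple second level. It uses scikit-learn's built-in `StackingRegressor` rather than the hand-rolled code later in this post. The synthetic data and the choice of base models are illustrative assumptions. `cv=5` makes the level-2 training features out-of-fold predictions, and `LinearRegression` keeps the meta-model simple, as the paragraph above recommends.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor, StackingRegressor
from sklearn.linear_model import LinearRegression, Ridge
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Synthetic data (assumption) split into train and test parts
X, y = make_regression(n_samples=500, n_features=8, noise=15.0, random_state=1)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, random_state=1)

stack = StackingRegressor(
    estimators=[                                   # level-1 base models
        ("ridge", Ridge(alpha=1.0)),
        ("rf", RandomForestRegressor(n_estimators=50, random_state=1)),
        ("tree", DecisionTreeRegressor(max_depth=5, random_state=1)),
    ],
    final_estimator=LinearRegression(),  # simple, linear level-2 model
    cv=5,                                # level-2 features come from out-of-fold predictions
)
stack.fit(X_tr, y_tr)

mae = mean_absolute_error(y_te, stack.predict(X_te))
print(f"Stacking MAE on test: {mae:.2f}")
```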

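Classification model fusion:

- Voting: there are two variants, hard voting and soft voting, both based on the majority-rule principle.
  - Hard voting: each model votes directly for a class, without distinguishing the models' relative importance; the class with the most votes becomes the final prediction.
  - Soft voting: instead of counting labels, average the models' predicted class probabilities and pick the class with the highest average. Per-model weights can be set to reflect the models' different importance.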
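Both voting schemes are available in scikit-learn through `VotingClassifier`. The sketch below compares them on the iris dataset. The three base models and the soft-voting weights `[2, 1, 1]` are arbitrary illustrative choices.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier, VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB

X, y = load_iris(return_X_y=True)
estimators = [
    ("lr", LogisticRegression(max_iter=1000)),
    ("rf", RandomForestClassifier(n_estimators=100, random_state=1)),
    ("gnb", GaussianNB()),
]

# Hard voting: each model casts one vote for a class label
hard = VotingClassifier(estimators=estimators, voting="hard")
# Soft voting: weighted average of predicted probabilities (weights are illustrative)
soft = VotingClassifier(estimators=estimators, voting="soft", weights=[2, 1, 1])

for name, clf in [("hard", hard), ("soft", soft)]:
    scores = cross_val_score(clf, X, y, cv=5, scoring="accuracy")
    print(f"{name} voting accuracy: {scores.mean():.3f} (+/- {scores.std():.3f})")
```

Only the soft-voting classifier exposes `predict_proba`, because hard voting never averages probabilities.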

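- Stacking/Blending fusion for classification:

Stacking is a layered model-ensembling framework.

Take two layers as an example. The first layer consists of several base learners whose input is the original training set. The second-layer model is then trained on the outputs of the first-layer base learners, which yields the complete stacking model. Both layers of stacking use all of the training data; the first-layer outputs used to train the second layer are obtained through k-fold cross-validation. Blending is a similar form of multi-layer model fusion.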

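Its main idea is to first split the original training set into two parts, for example 70% as a new training set and the remaining 30% as a holdout set.

In the first layer, we train several models on the 70% and use them to predict the labels of the 30%, as well as the labels of the test set.

In the second layer, we train directly on the first-layer predictions for the 30%, used as new features. We then take the first-layer predictions on the test set as features and let the second-layer model make the final prediction.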
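Here is a minimal sketch of the blending procedure just described, on a synthetic binary classification task. The base models, the 20% stand-in "test" set and the use of positive-class probabilities as level-2 features (rather than hard labels) are illustrative assumptions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier

X, y = make_classification(n_samples=1000, n_features=10, random_state=0)
# Stand-in for the competition test set
X_rest, X_test, y_rest, y_test = train_test_split(X, y, test_size=0.2, random_state=0)
# Blending split: 70% trains layer 1, the 30% holdout trains layer 2
X_base, X_hold, y_base, y_hold = train_test_split(X_rest, y_rest, test_size=0.3, random_state=0)

base_models = [
    KNeighborsClassifier(n_neighbors=5),
    RandomForestClassifier(n_estimators=100, random_state=0),
    GaussianNB(),
]

hold_features, test_features = [], []
for model in base_models:
    model.fit(X_base, y_base)                                # layer 1 sees only the 70%
    hold_features.append(model.predict_proba(X_hold)[:, 1])  # predictions on the 30%
    test_features.append(model.predict_proba(X_test)[:, 1])  # predictions on the test set

hold_features = np.column_stack(hold_features)  # shape: (n_hold, n_models)
test_features = np.column_stack(test_features)  # shape: (n_test, n_models)

# Layer 2: the blender is trained only on the holdout predictions
blender = LogisticRegression()
blender.fit(hold_features, y_hold)
blend_acc = (blender.predict(test_features) == y_test).mean()
print(f"Blending accuracy on test: {blend_acc:.3f}")
```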

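Advantages:

- Simpler than stacking, because no k rounds of cross-validation are needed to obtain the stacker features.

- Avoids an information-leakage problem: the generalizers (first-layer models) and the stacker are trained on different data.

Disadvantages:

- Uses little data: the second-stage blender only sees the holdout portion (30% of the training set in the example above).

- The blender may overfit.

- Stacking, with its repeated cross-validation, is more robust.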

Stacking fusion for classification (using mlxtend):

```python
import itertools
import warnings

import matplotlib.gridspec as gridspec
import matplotlib.pyplot as plt
from mlxtend.classifier import StackingClassifier
from mlxtend.plotting import plot_decision_regions
from sklearn import datasets
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier

warnings.filterwarnings('ignore')

# Use the iris dataset bundled with scikit-learn; keep two features
# (columns 1 and 2) so the decision regions can be drawn in 2-D
iris = datasets.load_iris()
X, y = iris.data[:, 1:3], iris.target

# Level-1 base classifiers
clf1 = KNeighborsClassifier(n_neighbors=1)
clf2 = RandomForestClassifier(random_state=1)
clf3 = GaussianNB()
# Level-2 meta-classifier. mlxtend's StackingClassifier fits it on the base models'
# in-sample predictions; StackingCVClassifier uses out-of-fold predictions instead
lr = LogisticRegression()
sclf = StackingClassifier(classifiers=[clf1, clf2, clf3],
                          meta_classifier=lr)

labels = ['KNN', 'Random Forest', 'Naive Bayes', 'Stacking Classifier']
clf_list = [clf1, clf2, clf3, sclf]

fig = plt.figure(figsize=(10, 8))
gs = gridspec.GridSpec(2, 2)
grid = itertools.product([0, 1], repeat=2)

clf_cv_mean = []
clf_cv_std = []
for clf, label, grd in zip(clf_list, labels, grid):
    # 3-fold cross-validated accuracy of each model
    scores = cross_val_score(clf, X, y, cv=3, scoring='accuracy')
    print("Accuracy: %.2f (+/- %.2f) [%s]" % (scores.mean(), scores.std(), label))
    clf_cv_mean.append(scores.mean())
    clf_cv_std.append(scores.std())

    # Refit on all the data and draw its decision regions in one subplot
    clf.fit(X, y)
    ax = plt.subplot(gs[grd[0], grd[1]])
    plot_decision_regions(X=X, y=y, clf=clf, ax=ax)
    plt.title(label)

plt.show()
```

With 'KNN', 'Random Forest' and 'Naive Bayes' as base models and a 'LogisticRegression' added on top as the second-level model, the test performance improves noticeably.

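Other methods

Feed the features into a model, transform its predictions and append them to the original features as a new feature, then predict again with a model (a Stacking variant). This can be repeated several times, adding each round's predictions to the final feature set.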
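Below is a minimal sketch of this feature-augmentation variant. It assumes scikit-learn's `cross_val_predict`, a `Ridge` base model, two rounds and a `GradientBoostingRegressor` as the final model; all of these are illustrative choices. Out-of-fold predictions are used so that no row's new feature comes from a model trained on that row. The "transform" step is the identity here. At test time, the same models would have to be refit on the full training set to produce the matching test features; that part is omitted.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.linear_model import Ridge
from sklearn.model_selection import cross_val_predict

# Synthetic data (assumption) in place of the competition features
X, y = make_regression(n_samples=300, n_features=6, noise=20.0, random_state=2)

X_aug = X.copy()
n_rounds = 2  # arbitrary number of augmentation rounds
for _ in range(n_rounds):
    # Out-of-fold predictions of a base model on the current feature set
    oof = cross_val_predict(Ridge(alpha=1.0), X_aug, y, cv=5)
    # A transform could be applied here (e.g. log1p for a positive target such as price);
    # this sketch appends the raw predictions as a new column
    X_aug = np.column_stack([X_aug, oof])

# Final model trained on original features plus the appended prediction features
final_model = GradientBoostingRegressor(random_state=2).fit(X_aug, y)
print("augmented shape:", X_aug.shape)
```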

Code implementation

```python
import itertools
import warnings

import lightgbm as lgb
import matplotlib
import matplotlib.gridspec as gridspec
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns
import xgboost as xgb
from sklearn import datasets, linear_model, preprocessing
from sklearn.decomposition import PCA, FastICA, FactorAnalysis, SparsePCA
from sklearn.ensemble import (GradientBoostingRegressor, RandomForestClassifier,
                              RandomForestRegressor)
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import mean_absolute_error, mean_squared_error
from sklearn.model_selection import (GridSearchCV, StratifiedKFold,
                                     cross_val_score, train_test_split)
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVR

warnings.filterwarnings('ignore')
%matplotlib inline
```

```python
## Read the data
Train_data = pd.read_csv('datalab/231784/used_car_train_20200313.csv', sep=' ')
TestA_data = pd.read_csv('datalab/231784/used_car_testA_20200313.csv', sep=' ')

print(Train_data.shape)
print(TestA_data.shape)
Train_data.head()
```

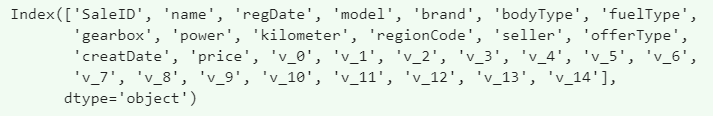

```python
# Use the numeric columns as features, excluding IDs, the raw date and the target
numerical_cols = Train_data.select_dtypes(exclude='object').columns
print(numerical_cols)

feature_cols = [col for col in numerical_cols if col not in ['SaleID', 'name', 'regDate', 'price']]

X_data = Train_data[feature_cols]
Y_data = Train_data['price']
X_test = TestA_data[feature_cols]
```

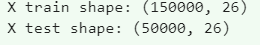

```python
print('X train shape:', X_data.shape)
print('X test shape:', X_test.shape)

def Sta_inf(data):
    # Print basic statistics of an array
    print('_min', np.min(data))
    print('_max:', np.max(data))
    print('_mean', np.mean(data))
    print('_ptp', np.ptp(data))
    print('_std', np.std(data))
    print('_var', np.var(data))

print('Sta of label:')
Sta_inf(Y_data)
```

```
Sta of label:
_min 11
_max: 99999
_mean 5923.32733333
_ptp 99988
_std 7501.97346988
_var 56279605.9427
```

```python
# Fill missing values with -1
X_data = X_data.fillna(-1)
X_test = X_test.fillna(-1)

def build_model_lr(x_train, y_train):
    reg_model = linear_model.LinearRegression()
    reg_model.fit(x_train, y_train)
    return reg_model

def build_model_ridge(x_train, y_train):
    reg_model = linear_model.Ridge(alpha=0.8)  # alphas=range(1,100,5)
    reg_model.fit(x_train, y_train)
    return reg_model

def build_model_lasso(x_train, y_train):
    reg_model = linear_model.LassoCV()
    reg_model.fit(x_train, y_train)
    return reg_model

def build_model_gbdt(x_train, y_train):
    # loss='ls' was renamed 'squared_error' in scikit-learn 1.0 and removed in 1.2
    estimator = GradientBoostingRegressor(loss='squared_error', subsample=0.85, max_depth=5, n_estimators=100)
    param_grid = {
        'learning_rate': [0.05, 0.08, 0.1, 0.2],
    }
    gbdt = GridSearchCV(estimator, param_grid, cv=3)
    gbdt.fit(x_train, y_train)
    print(gbdt.best_params_)
    # print(gbdt.best_estimator_)
    return gbdt

def build_model_xgb(x_train, y_train):
    model = xgb.XGBRegressor(n_estimators=120, learning_rate=0.08, gamma=0, subsample=0.8,
                             colsample_bytree=0.9, max_depth=5)  # , objective='reg:squarederror'
    model.fit(x_train, y_train)
    return model

def build_model_lgb(x_train, y_train):
    estimator = lgb.LGBMRegressor(num_leaves=63, n_estimators=100)
    param_grid = {
        'learning_rate': [0.01, 0.05, 0.1],
    }
    gbm = GridSearchCV(estimator, param_grid)
    gbm.fit(x_train, y_train)
    return gbm
```

Five-fold cross-validation of the XGBoost regressor

```python
## xgb
from sklearn.model_selection import KFold

xgr = xgb.XGBRegressor(n_estimators=120, learning_rate=0.1, subsample=0.8,
                       colsample_bytree=0.9, max_depth=7)  # , objective='reg:squarederror'

scores_train = []
scores = []

## 5-fold cross-validation
# price is a continuous target, so plain KFold is used (StratifiedKFold expects class labels)
sk = KFold(n_splits=5, shuffle=True, random_state=0)
for train_ind, val_ind in sk.split(X_data, Y_data):
    train_x = X_data.iloc[train_ind].values
    train_y = Y_data.iloc[train_ind]
    val_x = X_data.iloc[val_ind].values
    val_y = Y_data.iloc[val_ind]

    xgr.fit(train_x, train_y)
    pred_train_xgb = xgr.predict(train_x)
    pred_xgb = xgr.predict(val_x)

    score_train = mean_absolute_error(train_y, pred_train_xgb)
    scores_train.append(score_train)
    score = mean_absolute_error(val_y, pred_xgb)
    scores.append(score)

# Average the MAE over all five folds
print('Train mae:', np.mean(scores_train))
print('Val mae', np.mean(scores))
```

```
Train mae: 558.212360169
Val mae 693.120168439
```

Split the dataset, then train and predict with several methods

```python
## Split off a validation set
x_train, x_val, y_train, y_val = train_test_split(X_data, Y_data, test_size=0.3)

## Train and predict
print('Predict LR...')
model_lr = build_model_lr(x_train, y_train)
val_lr = model_lr.predict(x_val)
subA_lr = model_lr.predict(X_test)

print('Predict Ridge...')
model_ridge = build_model_ridge(x_train, y_train)
val_ridge = model_ridge.predict(x_val)
subA_ridge = model_ridge.predict(X_test)

print('Predict Lasso...')
model_lasso = build_model_lasso(x_train, y_train)
val_lasso = model_lasso.predict(x_val)
subA_lasso = model_lasso.predict(X_test)

print('Predict GBDT...')
model_gbdt = build_model_gbdt(x_train, y_train)
val_gbdt = model_gbdt.predict(x_val)
subA_gbdt = model_gbdt.predict(X_test)
```

In competitions, XGBoost and LightGBM usually stand out; CatBoost is also a good option.

```python
print('predict XGB...')
model_xgb = build_model_xgb(x_train, y_train)
val_xgb = model_xgb.predict(x_val)
subA_xgb = model_xgb.predict(X_test)

print('predict lgb...')
model_lgb = build_model_lgb(x_train, y_train)
val_lgb = model_lgb.predict(x_val)
subA_lgb = model_lgb.predict(X_test)

print('predict catboost...')
from catboost import CatBoostRegressor  # requires the catboost package
model_cat = CatBoostRegressor(verbose=0)  # default hyperparameters, silent training
model_cat.fit(x_train, y_train)
val_cat = model_cat.predict(x_val)
subA_cat = model_cat.predict(X_test)

print('Sta inf of lgb:')
Sta_inf(subA_lgb)
```

- Weighted fusion

```python
def Weighted_method(test_pre1, test_pre2, test_pre3, w=[1/3, 1/3, 1/3]):
    # Weighted sum of three models' predictions
    Weighted_result = w[0]*pd.Series(test_pre1) + w[1]*pd.Series(test_pre2) + w[2]*pd.Series(test_pre3)
    return Weighted_result

## Initialize the weights
w = [0.3, 0.4, 0.3]

## MAE on the validation set
val_pre = Weighted_method(val_lgb, val_xgb, val_gbdt, w)
MAE_Weighted = mean_absolute_error(y_val, val_pre)
print('MAE of Weighted of val:', MAE_Weighted)

## Predict on the test set
subA = Weighted_method(subA_lgb, subA_xgb, subA_gbdt, w)
print('Sta inf:')
Sta_inf(subA)

## Write the submission file
sub = pd.DataFrame()
sub['SaleID'] = TestA_data.SaleID  # the real SaleID column, not the row index of X_test
sub['price'] = subA
sub.to_csv('./sub_Weighted.csv', index=False)

## Compare with plain linear regression (LR)
val_lr_pred = model_lr.predict(x_val)
MAE_lr = mean_absolute_error(y_val, val_lr_pred)
print('MAE of lr:', MAE_lr)
```

This prints the validation MAE of the plain LR model, for comparison with the weighted fusion.

- Stacking fusion

```python
## Stacking

## Level 1: predictions of the base models
# Note: these are in-sample predictions on x_train, which the base models were trained on,
# so the level-2 model can overfit; out-of-fold (k-fold) predictions avoid this
train_lgb_pred = model_lgb.predict(x_train)
train_xgb_pred = model_xgb.predict(x_train)
train_gbdt_pred = model_gbdt.predict(x_train)

Stack_X_train = pd.DataFrame()
Stack_X_train['Method_1'] = train_lgb_pred
Stack_X_train['Method_2'] = train_xgb_pred
Stack_X_train['Method_3'] = train_gbdt_pred

Stack_X_val = pd.DataFrame()
Stack_X_val['Method_1'] = val_lgb
Stack_X_val['Method_2'] = val_xgb
Stack_X_val['Method_3'] = val_gbdt

Stack_X_test = pd.DataFrame()
Stack_X_test['Method_1'] = subA_lgb
Stack_X_test['Method_2'] = subA_xgb
Stack_X_test['Method_3'] = subA_gbdt

Stack_X_test.head()

## Level 2: a simple linear model on top
model_lr_Stacking = build_model_lr(Stack_X_train, y_train)

## Training set
train_pre_Stacking = model_lr_Stacking.predict(Stack_X_train)
print('MAE of Stacking-LR (train):', mean_absolute_error(y_train, train_pre_Stacking))

## Validation set
val_pre_Stacking = model_lr_Stacking.predict(Stack_X_val)
print('MAE of Stacking-LR (val):', mean_absolute_error(y_val, val_pre_Stacking))

## Test set
print('Predict Stacking-LR...')
subA_Stacking = model_lr_Stacking.predict(Stack_X_test)
subA_Stacking[subA_Stacking < 10] = 10  ## clip overly small predictions

sub = pd.DataFrame()
sub['SaleID'] = TestA_data.SaleID
sub['price'] = subA_Stacking
sub.to_csv('./sub_Stacking.csv', index=False)
```

Summary from an expert (ML67)

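- Result-level fusion: this is the most common kind, and many methods work, such as weighting results by their scores or applying log or exp transforms. An important condition is that the models' scores should be fairly close while their predictions differ substantially; such combinations usually give a good improvement.

- Feature-level fusion: this is arguably not fusion but splitting. Often, when training the same kind of model, we can split the features among different models and then fuse the models or their results afterwards, which sometimes works well.

- Model-level fusion: this involves stacking and designing models, for example adding a Stacking layer or feeding some models' results in as features. It calls for a lot of experimentation and thought. The models should preferably be of different types; using the same model with different parameters usually brings only small gains.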
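The "weight by score" and "log/exp" ideas from the first point can be sketched as follows. Weights proportional to 1/MAE are one simple heuristic among many, and averaging in `log1p` space then mapping back with `expm1` suits non-negative targets like price. The validation targets and predictions are hypothetical numbers. Both helper functions are illustrative names, not part of the original code.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error

def score_weights(maes):
    """Hypothetical helper: weights proportional to 1/MAE, normalized to sum to 1."""
    inv = 1.0 / np.asarray(maes, dtype=float)
    return inv / inv.sum()

def log_space_blend(preds, weights):
    """Hypothetical helper: weighted average in log1p space, mapped back with expm1."""
    preds = np.asarray(preds, dtype=float)
    return np.expm1(np.average(np.log1p(preds), axis=0, weights=weights))

# Hypothetical validation targets and predictions from three models (rows = models)
y_val = np.array([1000.0, 5000.0, 20000.0])
val_preds = np.array([
    [1100.0, 4800.0, 21000.0],
    [900.0, 5300.0, 19000.0],
    [1050.0, 5100.0, 22000.0],
])

maes = [mean_absolute_error(y_val, p) for p in val_preds]
w = score_weights(maes)            # lower MAE gets a larger weight
blend = log_space_blend(val_preds, w)
print("weights:", w)
print("blend:  ", blend)
```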

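Personal summary

Honestly, I was busy preparing a paper and my foundation is weak, so I coasted through most of this competition. Still, the practice gave me a clearer overview of what I had learned before and reinforced it. It also gave me satisfying answers to many questions I had been unsure about.

Overall I gained a lot. My teammate, a guy from Jinan University, is excellent: full of ideas and willing to put in the work. His hard work motivated me as well.

Keep going, everyone will keep getting better. Now I need to get back to reading papers.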
