Official website: https://flink.apache.org/

Introduction

Apache Flink — Stateful Computations over Data Streams

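The offline (batch) processing we studied earlier generally needs no state, since one batch has little to do with the previous one, although some projects do use state and watermarks. Flink, by contrast, is built on stream processing and is stateful.

- Event-driven

Stream processing frameworks such as Storm share this trait.

Flink compared with Spark:

- Flink: processes each record as it arrives

- Spark: micro-batch; with a 3s interval, for example, it processes the data collected during those 3s (at its core it is still a batch framework)

Both unify stream and batch processing, but in Spark a stream is a special case of batch, whereas in Flink batch is a special case of stream.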

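- Exactly-once state consistency

- Event-time processing. In Flink, as in other systems, a record usually carries three notions of time: the time the log was produced (event time), the time the data entered the distributed framework (ingestion time), and the time it is processed (processing time). Results based on ingestion time or processing time shift with delays in the pipeline, so they cannot be fully accurate; results based on event time can. In the author's experience, about 90% of production jobs are based on event time.

- Sophisticated late data handling. Late data cannot be avoided. As far as I know, Spark can also deal with late data in offline processing.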
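To make event time and late-data handling concrete, here is a minimal sketch against the Flink 1.9/1.10 Scala DataStream API. The `Click` record, the tag name `late-clicks`, the window size and the lateness bound are all assumptions made for illustration, not taken from any project in this post.

```scala
import org.apache.flink.streaming.api.TimeCharacteristic
import org.apache.flink.streaming.api.scala._
import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows
import org.apache.flink.streaming.api.windowing.time.Time

// Hypothetical record: eventTime is epoch milliseconds stamped when the log was produced.
case class Click(eventTime: Long, user: String, count: Int)

object EventTimeSketch {
  def main(args: Array[String]): Unit = {
    val env = StreamExecutionEnvironment.getExecutionEnvironment
    // Flink 1.9/1.10 default to processing time, so event time is requested explicitly.
    env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)

    // Side-output tag for records that arrive after their window was finalized.
    val lateTag = OutputTag[Click]("late-clicks")

    val clicks: DataStream[Click] = env
      .fromElements(Click(1000L, "leo", 1), Click(2000L, "leo", 1), Click(12000L, "summer", 1))
      // The sample timestamps are ascending, so the simplest watermark assigner is enough.
      .assignAscendingTimestamps(_.eventTime)

    val counts = clicks
      .keyBy(_.user)
      .window(TumblingEventTimeWindows.of(Time.seconds(10)))
      .allowedLateness(Time.seconds(5)) // keep window state 5s longer for stragglers
      .sideOutputLateData(lateTag)      // anything later than that goes to the side output
      .sum("count")

    counts.print("on-time")
    // With this in-order sample nothing is late, so the side output stays empty.
    counts.getSideOutput(lateTag).print("late")

    env.execute("EventTimeSketch")
  }
}
```

With out-of-order input, a watermark assigner that tolerates some disorder would replace `assignAscendingTimestamps`; the windowing part stays the same.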

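At the API level, Flink offers three layered APIs. Each strikes a different trade-off between conciseness and expressiveness; as with Spark, the higher-level the API, the simpler it is to develop with.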

maintaining multiple terabytes of state:

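Note: what are the advantages and drawbacks of keeping state on HDFS?

Compared with Spark, Flink does several things well:

- Time handling: based on event time, while distinguishing the log-production time, the processing time, and the time the data enters the distributed framework

- Watermarks

- State management

- Fault tolerance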
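One way to explore that question is to point checkpoints at HDFS and observe the job. The sketch below assumes Flink 1.9/1.10, the `hadoop-client` dependency on the classpath, a reachable HDFS, and `nc -lk 1777` running on `hadoop001`; the HDFS URI (including port 8020) and the 10s interval are hypothetical. With `FsStateBackend`, working state lives on the TaskManager heap and only checkpoints are written to the file system: the upside is durable snapshots that survive the loss of a machine, the cost is extra network and disk I/O on every checkpoint.

```scala
import org.apache.flink.runtime.state.StateBackend
import org.apache.flink.runtime.state.filesystem.FsStateBackend
import org.apache.flink.streaming.api.scala._

object CheckpointToHdfsSketch {
  // Hypothetical checkpoint location; replace with a path on your own cluster.
  val checkpointDir = "hdfs://hadoop001:8020/flink/checkpoints"

  def main(args: Array[String]): Unit = {
    val env = StreamExecutionEnvironment.getExecutionEnvironment

    // Take a checkpoint every 10 seconds (interval chosen only for illustration).
    env.enableCheckpointing(10000L)

    // Typing the value as StateBackend selects the non-deprecated setStateBackend overload.
    val backend: StateBackend = new FsStateBackend(checkpointDir)
    env.setStateBackend(backend)

    // A stateful word count: the running sums are the state being checkpointed.
    env.socketTextStream("hadoop001", 1777)
      .flatMap(_.toLowerCase.split(","))
      .filter(_.nonEmpty)
      .map((_, 1))
      .keyBy(0)
      .sum(1)
      .print()

    env.execute("CheckpointToHdfsSketch")
  }
}
```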

Run Applications at any Scale

Flink is designed to run stateful streaming applications at any scale. Applications are parallelized into possibly thousands of tasks that are distributed and concurrently executed in a cluster. Therefore, an application can leverage virtually unlimited amounts of CPUs, main memory, disk and network IO. Moreover, Flink easily maintains very large application state. Its asynchronous and incremental checkpointing algorithm ensures minimal impact on processing latencies while guaranteeing exactly-once state consistency.

Users reported impressive scalability numbers for Flink applications running in their production environments, such as

applications processing multiple trillions of events per day,

applications maintaining multiple terabytes of state, and

applications running on thousands of cores

Documentation for the latest stable release: https://ci.apache.org/projects/flink/flink-docs-release-1.10/

Building the project

https://ci.apache.org/projects/flink/flink-docs-release-1.10/dev/projectsetup/scala_api_quickstart.html

We choose the Scala version.

We use the following approach:

mvn archetype:generate \
    -DarchetypeGroupId=org.apache.flink \
    -DarchetypeArtifactId=flink-quickstart-scala \
    -DarchetypeVersion=1.9.0

// Create the project this way. Once the command finishes, a project is generated locally and can be imported directly into IDEA.

Batch programming pattern:

set up the batch execution environment

ExecutionEnvironment.getExecutionEnvironment

Start with getting some data from the environment

then, transform the resulting DataSet[String] using operations

execute program

(x,...)

RichXXXFunction

Lifecycle functions:

open: initialization method

close: resource release

getRuntimeContext: obtains the runtime context of the whole job

Developing in IDEA

pom.xml:

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.yt</groupId>

<artifactId>Flink</artifactId>

<version>1.0</version>

<inceptionYear>2008</inceptionYear>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<flink.version>1.9.0</flink.version>

<scala.binary.version>2.11</scala.binary.version>

<scala.version>2.11.12</scala.version>

<hadoop.version>2.7.2</hadoop.version>

</properties>

<repositories>

<repository>

<id>cloudera</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>

</repository>

</repositories>

<dependencies>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

<version>1.7.7</version>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>1.2.17</version>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>${hadoop.version}</version>

</dependency>

<!-- Flink dependencies -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-scala_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-scala_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

<version>${scala.version}</version>

</dependency>

</dependencies>

<build>

<sourceDirectory>src/main/scala</sourceDirectory>

<testSourceDirectory>src/test/scala</testSourceDirectory>

<plugins>

<plugin>

<groupId>org.scala-tools</groupId>

<artifactId>maven-scala-plugin</artifactId>

<executions>

<execution>

<goals>

<goal>compile</goal>

<goal>testCompile</goal>

</goals>

</execution>

</executions>

<configuration>

<scalaVersion>${scala.version}</scalaVersion>

<args>

<arg>-target:jvm-1.8</arg>

</args>

</configuration>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-eclipse-plugin</artifactId>

<configuration>

<downloadSources>true</downloadSources>

<buildcommands>

<buildcommand>ch.epfl.lamp.sdt.core.scalabuilder</buildcommand>

</buildcommands>

<additionalProjectnatures>

<projectnature>ch.epfl.lamp.sdt.core.scalanature</projectnature>

</additionalProjectnatures>

<classpathContainers>

<classpathContainer>org.eclipse.jdt.launching.JRE_CONTAINER</classpathContainer>

<classpathContainer>ch.epfl.lamp.sdt.launching.SCALA_CONTAINER</classpathContainer>

</classpathContainers>

</configuration>

</plugin>

</plugins>

</build>

<reporting>

<plugins>

<plugin>

<groupId>org.scala-tools</groupId>

<artifactId>maven-scala-plugin</artifactId>

<configuration>

<scalaVersion>${scala.version}</scalaVersion>

</configuration>

</plugin>

</plugins>

</reporting>

</project>

Batch processing:

package com.ruozedata.flink.fink01

import org.apache.flink.api.scala.{DataSet, ExecutionEnvironment}

import org.apache.flink.api.scala._

object BatchJob {

def main(args: Array[String]): Unit = {

// obtain the batch execution environment (the counterpart of SparkContext)

val env = ExecutionEnvironment.getExecutionEnvironment

// read the data

val text: DataSet[String] = env.readTextFile("data/wc.data")

val value: DataSet[(String, Int)] = text.flatMap(_.toLowerCase.split(","))

.filter(_.nonEmpty)

.map((_, 1))

// transformation

val result = value.groupBy(0) // field 0 is the word

.sum(1)

// sink output

result.print()

}

}

Output:

(jepson,2)

(leo,2)

(star,3)

(ruoze,3)

Stream processing

[hadoop@hadoop001 ~]$ nc -lk 1777

leo,leo,leo

summer,summer

package com.ruozedata.flink.fink01

import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}

import org.apache.flink.api.scala._

object StreamingJob {

def main(args: Array[String]): Unit = {

// set up the streaming execution environment

val env = StreamExecutionEnvironment.getExecutionEnvironment

// receive data

val text: DataStream[String] = env.socketTextStream("hadoop001", 1777)

// transformation

text.flatMap(_.toLowerCase.split(","))

.filter(_.nonEmpty)

.map((_, 1))

.keyBy(0).sum(1).print()

env.execute(this.getClass.getSimpleName)

}

}

Output:

1> (leo,1)

1> (leo,2)

1> (leo,3)

3> (summer,1)

3> (summer,2)

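# Flink processes each record as it arrives. The number before ">" is the index of the parallel subtask that printed the record, not the parallelism itself; in local mode the default parallelism equals the number of CPU cores.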

[hadoop@hadoop001 ~]$ nc -lk 1777

leo,leo,leo

summer,summer

leo,leo,leo

summer,summer

package com.ruozedata.flink.fink01

import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}

import org.apache.flink.api.scala._

object StreamingJob {

def main(args: Array[String]): Unit = {

// set up the streaming execution environment

val env = StreamExecutionEnvironment.getExecutionEnvironment

// receive data

val text: DataStream[String] = env.socketTextStream("hadoop001", 1777)

// transformation

text.flatMap(_.toLowerCase.split(","))

.filter(_.nonEmpty)

.map((_, 1))

.keyBy(0).sum(1).print("ruozedata")

.setParallelism(2)

env.execute(this.getClass.getSimpleName)

}

}

Output:

ruozedata:2> (leo,1)

ruozedata:2> (leo,3)

ruozedata:2> (leo,5)

ruozedata:1> (leo,2)

ruozedata:1> (leo,4)

ruozedata:1> (leo,6)

ruozedata:1> (summer,1)

ruozedata:2> (summer,2)

ruozedata:1> (summer,3)

ruozedata:2> (summer,4)

Output with parallelism 1:

ruozedata> (leo,1)

ruozedata> (leo,2)

ruozedata> (leo,3)

ruozedata> (leo,4)

ruozedata> (leo,5)

ruozedata> (leo,6)

ruozedata> (summer,1)

ruozedata> (summer,2)

ruozedata> (summer,3)

ruozedata> (summer,4)

Dependencies

Configuring Dependencies, Connectors, Libraries

Every Flink application depends on a set of Flink libraries. At the bare minimum, the application depends on the Flink APIs. Many applications depend in addition on certain connector libraries (like Kafka, Cassandra, etc.). When running Flink applications (either in a distributed deployment, or in the IDE for testing), the Flink runtime library must be available as well.

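Every Flink application depends on a set of libraries. At the bare minimum it depends on the Flink APIs, which is the case for our test project above. Other applications additionally need connectors (Streaming Connectors are essentially external data sources, covered in detail later). A Flink application can run either in a distributed deployment or inside the IDE.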

Note: connector libraries

https://ci.apache.org/projects/flink/flink-docs-release-1.10/dev/projectsetup/dependencies.html

Setting up a Project: Basic Dependencies

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-scala_2.11</artifactId>

<version>1.10.0</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-scala_2.11</artifactId>

<version>1.10.0</version>

<scope>provided</scope>

</dependency>

Basic API Concepts (common to both APIs)

Flink programs are regular programs that implement transformations on distributed collections (e.g., filtering, mapping, updating state, joining, grouping, defining windows, aggregating). Collections are initially created from sources (e.g., by reading from files, kafka topics, or from local, in-memory collections). Results are returned via sinks, which may for example write the data to (distributed) files, or to standard output (for example, the command line terminal). Flink programs run in a variety of contexts, standalone, or embedded in other programs. The execution can happen in a local JVM, or on clusters of many machines.

Depending on the type of data sources, i.e. bounded or unbounded sources, you would either write a batch program or a streaming program where the DataSet API is used for batch and the DataStream API is used for streaming. This guide will introduce the basic concepts that are common to both APIs but please see our Streaming Guide and Batch Guide for concrete information about writing programs with each API.

NOTE: When showing actual examples of how the APIs can be used we will use StreamExecutionEnvironment and the DataStream API. The concepts are exactly the same in the DataSet API, just replace them with ExecutionEnvironment and DataSet.

Anatomy of a Flink Program (the development pattern)

Flink programs look like regular programs that transform collections of data. Each program consists of the same basic parts:

- Obtain an execution environment,

- Load/create the initial data,

- Specify transformations on this data,

- Specify where to put the results of your computations,

- Trigger the program execution

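For the first step, obtaining an execution environment, calling getExecutionEnvironment is all you need. Local and remote environments can also be created explicitly; see the source code for details.

For the second step, loading the initial data: reading a text file is not a realistic source for stream processing. A message queue such as Kafka is the right pairing.

Lazy Evaluation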
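The three ways of obtaining an environment can be sketched as follows, assuming the Flink 1.9/1.10 Scala API. The remote host, port and jar path are hypothetical, so that call is left commented out.

```scala
import org.apache.flink.streaming.api.scala._

object EnvironmentSketch {
  def main(args: Array[String]): Unit = {
    // Context-aware: a local JVM inside the IDE, the cluster when submitted with `flink run`.
    val env = StreamExecutionEnvironment.getExecutionEnvironment
    println(s"default parallelism: ${env.getParallelism}")

    // Explicitly local, with a fixed parallelism of 2.
    val local = StreamExecutionEnvironment.createLocalEnvironment(2)

    // Explicitly remote; host, port and jar path below are hypothetical.
    // val remote = StreamExecutionEnvironment.createRemoteEnvironment(
    //   "hadoop001", 8081, "target/Flink-1.0.jar")

    local.fromElements("leo", "summer").print()
    local.execute("EnvironmentSketch")
  }
}
```

In practice `getExecutionEnvironment` covers both cases, which is why the listings in this post use it throughout.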
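A minimal sketch of a Kafka-backed source, assuming the universal Kafka connector (`flink-connector-kafka_2.11`, same version as Flink) has been added to the pom. The broker address, consumer group and topic name are hypothetical.

```scala
import java.util.Properties

import org.apache.flink.api.common.serialization.SimpleStringSchema
import org.apache.flink.streaming.api.scala._
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer

object KafkaSourceSketch {
  // Builds the consumer configuration; both arguments are supplied by the caller.
  def kafkaProps(brokers: String, groupId: String): Properties = {
    val props = new Properties()
    props.setProperty("bootstrap.servers", brokers)
    props.setProperty("group.id", groupId)
    props
  }

  def main(args: Array[String]): Unit = {
    val env = StreamExecutionEnvironment.getExecutionEnvironment

    // Hypothetical broker, group and topic; each Kafka record value is read as a String.
    val source = new FlinkKafkaConsumer[String](
      "wc-topic", new SimpleStringSchema(), kafkaProps("hadoop001:9092", "flink-wc"))

    // Same word count as the socket example, now fed from Kafka.
    env.addSource(source)
      .flatMap(_.toLowerCase.split(","))
      .filter(_.nonEmpty)
      .map((_, 1))
      .keyBy(0)
      .sum(1)
      .print()

    env.execute("KafkaSourceSketch")
  }
}
```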

All Flink programs are executed lazily: When the program’s main method is executed, the data loading and transformations do not happen directly. Rather, each operation is created and added to the program’s plan. The operations are actually executed when the execution is explicitly triggered by an execute() call on the execution environment. Whether the program is executed locally or on a cluster depends on the type of execution environment

The lazy evaluation lets you construct sophisticated programs that Flink executes as one holistically planned unit.

This is the same as in Spark, where nothing runs until an action is reached; until then, operations are only added to the DAG.
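A small sketch that makes the laziness visible, assuming the Flink 1.9/1.10 Scala DataStream API. The helper `tag` and the printed messages are made up for the demonstration.

```scala
import org.apache.flink.streaming.api.scala._

object LazyEvaluationSketch {
  // Hypothetical helper: logs each element so we can see when processing really happens.
  def tag(word: String): String = {
    println(s"processing $word")
    word.toUpperCase
  }

  def main(args: Array[String]): Unit = {
    val env = StreamExecutionEnvironment.getExecutionEnvironment
    env.setParallelism(1)

    // These calls only add operators to the plan; no element is processed yet.
    env.fromElements("leo", "summer")
      .map(w => tag(w))
      .print()

    // This line is printed before any "processing ..." line.
    println("plan built, nothing processed so far")

    // The job actually runs only here.
    env.execute("LazyEvaluationSketch")
  }
}
```

Note that in the DataSet API, print() itself triggers execution, which is why the batch job earlier in this post has no explicit execute() call.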

Specifying Keys

Specifying keys in a program is a key point.

That is, the precondition is that records have the (x,…) form.

Define keys for Tuples

Define keys using Field Expressions

The biggest benefit of a case class is that it is serializable by default.

[hadoop@hadoop001 ~]$ nc -lk 1777

leo,leo,leo,leo

package com.ruozedata.flink.fink01

import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}

import org.apache.flink.api.scala._

object StreamingJob {

def main(args: Array[String]): Unit = {

// set up the streaming execution environment

val env = StreamExecutionEnvironment.getExecutionEnvironment

// receive data

val text: DataStream[String] = env.socketTextStream("hadoop001", 1777)

// transformation

// text.flatMap(_.toLowerCase.split(","))

// .filter(_.nonEmpty)

// .map((_, 1))

// .keyBy(0).sum(1).print("ruozedata")

// .setParallelism(1)

val result = text.flatMap(_.toLowerCase.split(","))

.filter(_.nonEmpty)

.map(x => WC(x, 1))

.keyBy(_.word).sum("count").print("ruozedata")

.setParallelism(1)

// execute program

env.execute(this.getClass.getSimpleName)

}

}

case class WC(word: String, count: Int)

Output:

ruozedata> WC(leo,1)

ruozedata> WC(leo,2)

ruozedata> WC(leo,3)

ruozedata> WC(leo,4)

Specifying Transformation Functions (user-defined transformation functions)

package com.ruozedata.flink.fink01

object Domain {

case class Access(time:Long, domain:String, traffic:Long)

}

package com.ruozedata.flink.fink01

import com.ruozedata.flink.fink01.Domain.Access

import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

import org.apache.flink.api.scala._

object SpecifyingTransformationFunctionsApp {

def main(args: Array[String]): Unit = {

val env = StreamExecutionEnvironment.getExecutionEnvironment

env.setParallelism(1)

val stream = env.readTextFile("data/access.log")

val accessStream = stream.map(x => {

val splits = x.split(",")

Access(splits(0).toLong, splits(1), splits(2).toLong)

})

accessStream.print()

env.execute(this.getClass.getSimpleName)

}

}

Output:

Access(201912120010,ruozedata.com,2000)

Access(201912120010,dongqiudi.com,6000)

Access(201912120010,zhibo8.com,5000)

Access(201912120010,ruozedata.com,4000)

Access(201912120010,dongqiudi.com,1000)

Requirement 1: keep only records with traffic > 4000

Approach 1: Lambda functions

package com.ruozedata.flink.fink01

import com.ruozedata.flink.fink01.Domain.Access

import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

import org.apache.flink.api.scala._

object SpecifyingTransformationFunctionsApp {

def main(args: Array[String]): Unit = {

val env = StreamExecutionEnvironment.getExecutionEnvironment

env.setParallelism(1)

val stream = env.readTextFile("data/access.log")

val accessStream = stream.map(x => {

val splits = x.split(",")

Access(splits(0).toLong, splits(1), splits(2).toLong)

})

// keep records with traffic > 4000

accessStream.filter(_.traffic > 4000).print()

env.execute(this.getClass.getSimpleName)

}

}

Output:

Access(201912120010,dongqiudi.com,6000)

Access(201912120010,zhibo8.com,5000)

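// Alternatively, pass the threshold in as a parameter through a FilterFunction class

import org.apache.flink.api.common.functions.FilterFunction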

object SpecifyingTransformationFunctionsApp {

def main(args: Array[String]): Unit = {

val env = StreamExecutionEnvironment.getExecutionEnvironment

env.setParallelism(1) // parallelism can be set once here for the whole job

val stream = env.readTextFile("data/access.log")

val accessStream = stream.map(x => {

val splits = x.split(",")

Access(splits(0).toLong, splits(1), splits(2).toLong)

})

accessStream.filter(new RuozedataFilter02(5000)).print()

env.execute(this.getClass.getSimpleName)

}

}

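// The type parameter is Access, because after the map above we are working on Access records

// map, filter, etc. each have a corresponding XXXFunction; Flink also offers the more powerful RichXXXFunction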

class RuozedataFilter extends FilterFunction[Access] {

override def filter(value: Access): Boolean = value.traffic > 4000

}

// traffic > 4000 is hard-coded above, so the threshold can instead be passed in as a constructor parameter

class RuozedataFilter02(traffic: Long) extends FilterFunction[Access] {

override def filter(value: Access): Boolean = value.traffic > traffic

}

Approach 2:

// use an anonymous inner class

package com.ruozedata.flink.fink01

import com.ruozedata.flink.fink01.Domain.Access

import org.apache.flink.api.common.functions.FilterFunction

import org.apache.flink.api.scala._

import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

object SpecifyingTransformationFunctionsApp {

def main(args: Array[String]): Unit = {

val env = StreamExecutionEnvironment.getExecutionEnvironment

env.setParallelism(1) // parallelism can be set once here for the whole job

val stream = env.readTextFile("data/access.log")

val accessStream = stream.map(x => {

val splits = x.split(",")

Access(splits(0).toLong, splits(1), splits(2).toLong)

})

// accessStream.filter(new RuozedataFilter02(5000)).print()

// use an anonymous inner class

accessStream.filter(new FilterFunction[Access] {

override def filter(t: Access): Boolean = t.traffic > 4000

}).print()

env.execute(this.getClass.getSimpleName)

}

}

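// Of course, an anonymous inner class written this way is rather ugly

Lifecycle functions of RichXXXFunction:

open: initialization method

close: resource release

getRuntimeContext: obtains the runtime context of the whole job

Recall the Mapper class in MapReduce; the idea is the same.

It has methods such as setup and cleanup. Connecting to a database is a typical case: the connection must be initialized first and released afterwards.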

Approach 3: Rich functions (an enhanced variant of the plain function, somewhat like enrichment through implicit conversions)

package com.ruozedata.flink.fink01

import com.ruozedata.flink.fink01.Domain.Access

import org.apache.flink.api.common.functions.{FilterFunction, RichMapFunction, RuntimeContext}

import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}

import org.apache.flink.api.scala._

import org.apache.flink.configuration.Configuration

object SpecifyingTransformationFunctionsApp {

def main(args: Array[String]): Unit = {

val env = StreamExecutionEnvironment.getExecutionEnvironment

env.setParallelism(2) // parallelism can be set once here for the whole job

val stream = env.readTextFile("data/access.log")

// val accessStream = stream.map(x => {

// val splits = x.split(",")

// Access(splits(0).toLong, splits(1), splits(2).toLong)

// })

val accessStream: DataStream[Access] = stream.map(new RuozedataMap)

accessStream.filter(_.traffic > 4000).print()

// use an anonymous inner class

// accessStream.filter(new FilterFunction[Access] {

// override def filter(t: Access): Boolean = t.traffic > 4000

// }).print()

env.execute(this.getClass.getSimpleName)

}

}

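// Define an enriched class RuozedataMap and put all the reading (parsing) logic in it

// RichMapFunction takes two type parameters, <IN, OUT>

// The lifecycle of RuozedataMap also includes methods such as open and close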

class RuozedataMap extends RichMapFunction[String, Access] {

override def map(value: String): Access = {

val splits = value.split(",")

Access(splits(0).toLong, splits(1), splits(2).toLong)

}

override def open(parameters: Configuration): Unit = {

super.open(parameters)

println("~~~~~~~~~~~open~~~~~~~")

}

override def close(): Unit = {

super.close()

}

// obtain the runtime context

override def getRuntimeContext: RuntimeContext = {

super.getRuntimeContext

}

}

Output with parallelism 2: open is called twice, once per parallel subtask

~~~~~~~~~~~open~~~~~~~

~~~~~~~~~~~open~~~~~~~

1> Access(201912120010,dongqiudi.com,6000)

1> Access(201912120010,zhibo8.com,5000)

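Note: obtain the MySQL connection in the open method and close it in close once you are done.

Also pay attention to how many times open is called under different parallelism settings.

// with parallelism 3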

~~~~~~~~~~~open~~~~~~~

~~~~~~~~~~~open~~~~~~~

~~~~~~~~~~~open~~~~~~~

3> Access(201912120010,zhibo8.com,5000)

2> Access(201912120010,dongqiudi.com,6000)

Output with parallelism 1:

~~~~~~~~~~~open~~~~~~~

Access(201912120010,dongqiudi.com,6000)

Access(201912120010,zhibo8.com,5000)
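The advice about opening a MySQL connection in open and releasing it in close could look like the sketch below. The class name, the table `domain_info(domain, name)` and the query are hypothetical, and a MySQL JDBC driver is assumed to be on the classpath. Since open runs once per parallel subtask, parallelism 3 means three connections.

```scala
import java.sql.{Connection, DriverManager, PreparedStatement}

import org.apache.flink.api.common.functions.RichMapFunction
import org.apache.flink.configuration.Configuration

// Hypothetical lookup: maps a domain to (domain, display name) using a MySQL table.
class DomainLookupMap(url: String, user: String, password: String)
    extends RichMapFunction[String, (String, String)] {

  // Created in open() on the worker, never serialized with the function object.
  @transient private var conn: Connection = _
  @transient private var stmt: PreparedStatement = _

  // Called once per parallel subtask before any record is processed.
  override def open(parameters: Configuration): Unit = {
    conn = DriverManager.getConnection(url, user, password)
    stmt = conn.prepareStatement("SELECT name FROM domain_info WHERE domain = ?")
  }

  // Called once per record; reuses the prepared statement opened above.
  override def map(domain: String): (String, String) = {
    stmt.setString(1, domain)
    val rs = stmt.executeQuery()
    try (domain, if (rs.next()) rs.getString(1) else "unknown")
    finally rs.close()
  }

  // Called once per subtask on shutdown; null checks make it safe if open() never ran.
  override def close(): Unit = {
    if (stmt != null) stmt.close()
    if (conn != null) conn.close()
  }
}
```

It would be used like any other function, for example stream.map(new DomainLookupMap(url, user, password)), with org.apache.flink.api.scala._ imported at the call site for the type information.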

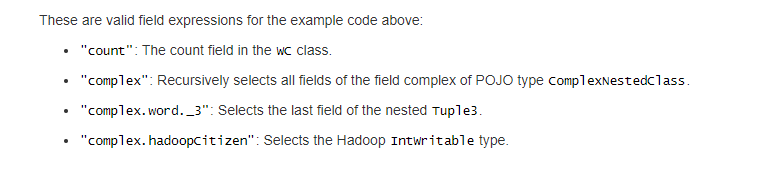

Data types

Tuples and Case Classes

Scala case classes (and Scala tuples which are a special case of case classes), are composite types that contain a fixed number of fields with various types. Tuple fields are addressed by their 1-offset names such as _1 for the first field. Case class fields are accessed by their name.

case class WordCount(word: String, count: Int)

val input = env.fromElements(

WordCount("hello", 1),

WordCount("world", 2)) // Case Class Data Set

input.keyBy("word")// key by field expression "word"

val input2 = env.fromElements(("hello", 1), ("world", 2)) // Tuple2 Data Set

input2.keyBy(0, 1) // key by field positions 0 and 1

POJOs

class WordWithCount(var word: String, var count: Int) {

def this() {

this(null, -1)

}

}

val input = env.fromElements(

new WordWithCount("hello", 1),

new WordWithCount("world", 2)) // POJO Data Set

input.keyBy("word")// key by field expression "word"

Primitive Types

Flink supports all Java and Scala primitive types such as Integer, String, and Double.

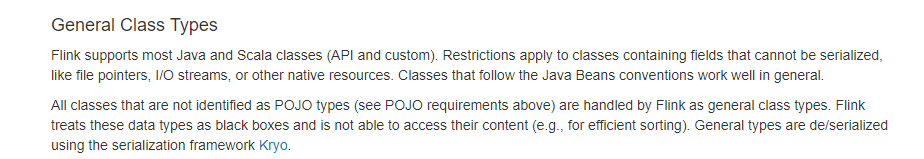

General Class Types

Values

Hadoop Writables

You can use types that implement the org.apache.hadoop.Writable interface. The serialization logic defined in the write() and readFields() methods will be used for serialization.

Special Types

You can use special types, including Scala’s Either, Option, and Try. The Java API has its own custom implementation of Either. Similarly to Scala’s Either, it represents a value of two possible types, Left or Right. Either can be useful for error handling or operators that need to output two different types of records.
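As a sketch of Either used for error handling, the parser below returns Left for lines it cannot parse and Right for valid records. It assumes Scala 2.11 with the Flink 1.9/1.10 Scala API; the `Access` case class is redefined here only to keep the block self-contained, and the "garbage" input line is invented.

```scala
import scala.util.Try

import org.apache.flink.streaming.api.scala._

case class Access(time: Long, domain: String, traffic: Long)

object EitherSketch {
  // Left carries the raw line that failed to parse, Right the parsed record.
  def parse(line: String): Either[String, Access] = {
    val splits = line.split(",")
    Try(Access(splits(0).toLong, splits(1), splits(2).toLong)).toOption.toRight(line)
  }

  def main(args: Array[String]): Unit = {
    val env = StreamExecutionEnvironment.getExecutionEnvironment
    env.setParallelism(1)

    val parsed: DataStream[Either[String, Access]] = env
      .fromElements("201912120010,ruozedata.com,2000", "garbage")
      .map(line => parse(line))

    // Route the two sides to different sinks.
    parsed.filter(_.isRight).map(_.right.get).print("ok")
    parsed.filter(_.isLeft).map(_.left.get).print("bad")

    env.execute("EitherSketch")
  }
}
```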

Type Erasure & Type Inference

This part can be skipped, since we are using Scala.
