This chapter introduces AVFrame, one of FFmpeg's basic building blocks.

1. Struct members

The full definition is pasted here first; the individual members are explained afterwards.

typedef struct AVFrame {

#define AV_NUM_DATA_POINTERS 8

/**

* pointer to the picture/channel planes.

* This might be different from the first allocated byte. For video,

* it could even point to the end of the image data.

*

* All pointers in data and extended_data must point into one of the

* AVBufferRef in buf or extended_buf.

*

* Some decoders access areas outside 0,0 - width,height, please

* see avcodec_align_dimensions2(). Some filters and swscale can read

* up to 16 bytes beyond the planes, if these filters are to be used,

* then 16 extra bytes must be allocated.

*

* NOTE: Pointers not needed by the format MUST be set to NULL.

*

* @attention In case of video, the data[] pointers can point to the

* end of image data in order to reverse line order, when used in

* combination with negative values in the linesize[] array.

*/

uint8_t *data[AV_NUM_DATA_POINTERS];

/**

* For video, a positive or negative value, which is typically indicating

* the size in bytes of each picture line, but it can also be:

* - the negative byte size of lines for vertical flipping

* (with data[n] pointing to the end of the data

* - a positive or negative multiple of the byte size as for accessing

* even and odd fields of a frame (possibly flipped)

*

* For audio, only linesize[0] may be set. For planar audio, each channel

* plane must be the same size.

*

* For video the linesizes should be multiples of the CPUs alignment

* preference, this is 16 or 32 for modern desktop CPUs.

* Some code requires such alignment other code can be slower without

* correct alignment, for yet other it makes no difference.

*

* @note The linesize may be larger than the size of usable data -- there

* may be extra padding present for performance reasons.

*

* @attention In case of video, line size values can be negative to achieve

* a vertically inverted iteration over image lines.

*/

int linesize[AV_NUM_DATA_POINTERS];

/**

* pointers to the data planes/channels.

*

* For video, this should simply point to data[].

*

* For planar audio, each channel has a separate data pointer, and

* linesize[0] contains the size of each channel buffer.

* For packed audio, there is just one data pointer, and linesize[0]

* contains the total size of the buffer for all channels.

*

* Note: Both data and extended_data should always be set in a valid frame,

* but for planar audio with more channels that can fit in data,

* extended_data must be used in order to access all channels.

*/

uint8_t **extended_data;

/**

* @name Video dimensions

* Video frames only. The coded dimensions (in pixels) of the video frame,

* i.e. the size of the rectangle that contains some well-defined values.

*

* @note The part of the frame intended for display/presentation is further

* restricted by the @ref cropping "Cropping rectangle".

* @{

*/

int width, height;

/**

* @}

*/

/**

* number of audio samples (per channel) described by this frame

*/

int nb_samples;

/**

* format of the frame, -1 if unknown or unset

* Values correspond to enum AVPixelFormat for video frames,

* enum AVSampleFormat for audio)

*/

int format;

/**

* 1 -> keyframe, 0-> not

*/

int key_frame;

/**

* Picture type of the frame.

*/

enum AVPictureType pict_type;

/**

* Sample aspect ratio for the video frame, 0/1 if unknown/unspecified.

*/

AVRational sample_aspect_ratio;

/**

* Presentation timestamp in time_base units (time when frame should be shown to user).

*/

int64_t pts;

/**

* DTS copied from the AVPacket that triggered returning this frame. (if frame threading isn't used)

* This is also the Presentation time of this AVFrame calculated from

* only AVPacket.dts values without pts values.

*/

int64_t pkt_dts;

/**

* Time base for the timestamps in this frame.

* In the future, this field may be set on frames output by decoders or

* filters, but its value will be by default ignored on input to encoders

* or filters.

*/

AVRational time_base;

/**

* picture number in bitstream order

*/

int coded_picture_number;

/**

* picture number in display order

*/

int display_picture_number;

/**

* quality (between 1 (good) and FF_LAMBDA_MAX (bad))

*/

int quality;

/**

* for some private data of the user

*/

void *opaque;

/**

* When decoding, this signals how much the picture must be delayed.

* extra_delay = repeat_pict / (2*fps)

*/

int repeat_pict;

/**

* The content of the picture is interlaced.

*/

int interlaced_frame;

/**

* If the content is interlaced, is top field displayed first.

*/

int top_field_first;

/**

* Tell user application that palette has changed from previous frame.

*/

int palette_has_changed;

/**

* reordered opaque 64 bits (generally an integer or a double precision float

* PTS but can be anything).

* The user sets AVCodecContext.reordered_opaque to represent the input at

* that time,

* the decoder reorders values as needed and sets AVFrame.reordered_opaque

* to exactly one of the values provided by the user through AVCodecContext.reordered_opaque

*/

int64_t reordered_opaque;

/**

* Sample rate of the audio data.

*/

int sample_rate;

/**

* Channel layout of the audio data.

*/

uint64_t channel_layout;

/**

* AVBuffer references backing the data for this frame. All the pointers in

* data and extended_data must point inside one of the buffers in buf or

* extended_buf. This array must be filled contiguously -- if buf[i] is

* non-NULL then buf[j] must also be non-NULL for all j < i.

*

* There may be at most one AVBuffer per data plane, so for video this array

* always contains all the references. For planar audio with more than

* AV_NUM_DATA_POINTERS channels, there may be more buffers than can fit in

* this array. Then the extra AVBufferRef pointers are stored in the

* extended_buf array.

*/

AVBufferRef *buf[AV_NUM_DATA_POINTERS];

/**

* For planar audio which requires more than AV_NUM_DATA_POINTERS

* AVBufferRef pointers, this array will hold all the references which

* cannot fit into AVFrame.buf.

*

* Note that this is different from AVFrame.extended_data, which always

* contains all the pointers. This array only contains the extra pointers,

* which cannot fit into AVFrame.buf.

*

* This array is always allocated using av_malloc() by whoever constructs

* the frame. It is freed in av_frame_unref().

*/

AVBufferRef **extended_buf;

/**

* Number of elements in extended_buf.

*/

int nb_extended_buf;

AVFrameSideData **side_data;

int nb_side_data;

/**

* @defgroup lavu_frame_flags AV_FRAME_FLAGS

* @ingroup lavu_frame

* Flags describing additional frame properties.

*

* @{

*/

/**

* The frame data may be corrupted, e.g. due to decoding errors.

*/

#define AV_FRAME_FLAG_CORRUPT (1 << 0)

/**

* A flag to mark the frames which need to be decoded, but shouldn't be output.

*/

#define AV_FRAME_FLAG_DISCARD (1 << 2)

/**

* @}

*/

/**

* Frame flags, a combination of @ref lavu_frame_flags

*/

int flags;

/**

* MPEG vs JPEG YUV range.

* - encoding: Set by user

* - decoding: Set by libavcodec

*/

enum AVColorRange color_range;

enum AVColorPrimaries color_primaries;

enum AVColorTransferCharacteristic color_trc;

/**

* YUV colorspace type.

* - encoding: Set by user

* - decoding: Set by libavcodec

*/

enum AVColorSpace colorspace;

enum AVChromaLocation chroma_location;

/**

* frame timestamp estimated using various heuristics, in stream time base

* - encoding: unused

* - decoding: set by libavcodec, read by user.

*/

int64_t best_effort_timestamp;

/**

* reordered pos from the last AVPacket that has been input into the decoder

* - encoding: unused

* - decoding: Read by user.

*/

int64_t pkt_pos;

/**

* duration of the corresponding packet, expressed in

* AVStream->time_base units, 0 if unknown.

* - encoding: unused

* - decoding: Read by user.

*/

int64_t pkt_duration;

/**

* metadata.

* - encoding: Set by user.

* - decoding: Set by libavcodec.

*/

AVDictionary *metadata;

/**

* decode error flags of the frame, set to a combination of

* FF_DECODE_ERROR_xxx flags if the decoder produced a frame, but there

* were errors during the decoding.

* - encoding: unused

* - decoding: set by libavcodec, read by user.

*/

int decode_error_flags;

#define FF_DECODE_ERROR_INVALID_BITSTREAM 1

#define FF_DECODE_ERROR_MISSING_REFERENCE 2

#define FF_DECODE_ERROR_CONCEALMENT_ACTIVE 4

#define FF_DECODE_ERROR_DECODE_SLICES 8

/**

* number of audio channels, only used for audio.

* - encoding: unused

* - decoding: Read by user.

*/

int channels;

/**

* size of the corresponding packet containing the compressed

* frame.

* It is set to a negative value if unknown.

* - encoding: unused

* - decoding: set by libavcodec, read by user.

*/

int pkt_size;

/**

* For hwaccel-format frames, this should be a reference to the

* AVHWFramesContext describing the frame.

*/

AVBufferRef *hw_frames_ctx;

/**

* AVBufferRef for free use by the API user. FFmpeg will never check the

* contents of the buffer ref. FFmpeg calls av_buffer_unref() on it when

* the frame is unreferenced. av_frame_copy_props() calls create a new

* reference with av_buffer_ref() for the target frame's opaque_ref field.

*

* This is unrelated to the opaque field, although it serves a similar

* purpose.

*/

AVBufferRef *opaque_ref;

/**

* @anchor cropping

* @name Cropping

* Video frames only. The number of pixels to discard from the the

* top/bottom/left/right border of the frame to obtain the sub-rectangle of

* the frame intended for presentation.

* @{

*/

size_t crop_top;

size_t crop_bottom;

size_t crop_left;

size_t crop_right;

/**

* @}

*/

/**

* AVBufferRef for internal use by a single libav* library.

* Must not be used to transfer data between libraries.

* Has to be NULL when ownership of the frame leaves the respective library.

*

* Code outside the FFmpeg libs should never check or change the contents of the buffer ref.

*

* FFmpeg calls av_buffer_unref() on it when the frame is unreferenced.

* av_frame_copy_props() calls create a new reference with av_buffer_ref()

* for the target frame's private_ref field.

*/

AVBufferRef *private_ref;

} AVFrame;

Of all the members of AVFrame, the ones used most often are the following:

typedef struct AVFrame {

...

uint8_t *data[AV_NUM_DATA_POINTERS];

int linesize[AV_NUM_DATA_POINTERS];

uint8_t **extended_data;

int width, height;

int format;

int key_frame;

int64_t pts;

int64_t pkt_dts;

AVRational time_base;

AVBufferRef *buf[AV_NUM_DATA_POINTERS];

...

} AVFrame;

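This struct mainly holds decoded data, which for video means the YUV planes. Pay special attention to the member AVBufferRef *buf[AV_NUM_DATA_POINTERS]: it holds the references that own the allocated memory, and it is through these references that the memory is released.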

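AVBufferRef is used in many places in FFmpeg. It works by reference counting: every reference to a buffer increments a counter, every av_buffer_unref() decrements it, and when the last reference is released (the counter drops from 1 to 0) the memory it points to is freed.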

/**

* A reference counted buffer type. It is opaque and is meant to be used through

* references (AVBufferRef).

*/

typedef struct AVBuffer AVBuffer;

/**

* A reference to a data buffer.

*

* The size of this struct is not a part of the public ABI and it is not meant

* to be allocated directly.

*/

typedef struct AVBufferRef {

AVBuffer *buffer;

/**

* The data buffer. It is considered writable if and only if

* this is the only reference to the buffer, in which case

* av_buffer_is_writable() returns 1.

*/

uint8_t *data;

/**

* Size of data in bytes.

*/

size_t size;

} AVBufferRef;

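Here data points to the payload (for a video frame, the YUV data) and size is its length in bytes. The AVBuffer it refers to is an internal type whose definition is shown below: refcount is the number of references, and free is the callback that releases the data.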

struct AVBuffer {

uint8_t *data; /**< data described by this buffer */

size_t size; /**< size of data in bytes */

/**

* number of existing AVBufferRef instances referring to this buffer

*/

atomic_uint refcount;

/**

* a callback for freeing the data

*/

void (*free)(void *opaque, uint8_t *data);

/**

* an opaque pointer, to be used by the freeing callback

*/

void *opaque;

/**

* A combination of AV_BUFFER_FLAG_*

*/

int flags;

/**

* A combination of BUFFER_FLAG_*

*/

int flags_internal;

};
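The reference-counting behaviour described above can be modelled in a few lines of plain C. This is a toy sketch, not FFmpeg's implementation: ToyBuffer, ToyRef, toy_alloc, toy_ref, toy_unref and toy_is_writable are hypothetical stand-ins for AVBuffer, AVBufferRef, av_buffer_alloc(), av_buffer_ref(), av_buffer_unref() and av_buffer_is_writable(), and a plain unsigned counter is assumed in place of atomic_uint.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Toy stand-in for AVBuffer: the shared payload plus its reference count. */
typedef struct ToyBuffer {
    uint8_t *data;
    size_t   size;
    unsigned refcount;                 /* FFmpeg uses atomic_uint here */
    void   (*free)(void *opaque, uint8_t *data);
    void    *opaque;
} ToyBuffer;

/* Toy stand-in for AVBufferRef: one handle onto a ToyBuffer. */
typedef struct ToyRef {
    ToyBuffer *buffer;
    uint8_t   *data;
    size_t     size;
} ToyRef;

static int toy_free_calls = 0;         /* counts frees, for demonstration only */

static void toy_default_free(void *opaque, uint8_t *data)
{
    (void)opaque;
    free(data);
    toy_free_calls++;
}

/* Allocate a buffer and return the first reference to it (refcount == 1). */
static ToyRef *toy_alloc(size_t size)
{
    ToyBuffer *b = calloc(1, sizeof(*b));
    ToyRef *r = calloc(1, sizeof(*r));
    uint8_t *data = malloc(size ? size : 1);
    if (!b || !r || !data) { free(b); free(r); free(data); return NULL; }
    b->data = data; b->size = size; b->refcount = 1;
    b->free = toy_default_free;
    r->buffer = b; r->data = data; r->size = size;
    return r;
}

/* Create another reference to the same buffer; the payload is not copied. */
static ToyRef *toy_ref(const ToyRef *src)
{
    assert(src && src->buffer);
    ToyRef *r = malloc(sizeof(*r));
    if (!r) return NULL;
    *r = *src;
    r->buffer->refcount++;
    return r;
}

/* Drop one reference; the payload is freed when the last one goes away. */
static void toy_unref(ToyRef **ref)
{
    if (!ref || !*ref) return;
    ToyBuffer *b = (*ref)->buffer;
    free(*ref);
    *ref = NULL;                       /* mirrors av_buffer_unref() clearing the pointer */
    if (--b->refcount == 0) {
        b->free(b->opaque, b->data);
        free(b);
    }
}

/* Writable only while this is the sole reference. */
static int toy_is_writable(const ToyRef *ref)
{
    return ref->buffer->refcount == 1;
}
```

In this model, taking a second reference makes the buffer read-only for both holders, and the free callback runs exactly once, when the second of the two references is dropped.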

For this reference-counting mechanism, FFmpeg provides the following family of functions:

/**

* Allocate an AVBuffer of the given size using av_malloc().

*

* @return an AVBufferRef of given size or NULL when out of memory

*/

AVBufferRef *av_buffer_alloc(size_t size);

/**

* Same as av_buffer_alloc(), except the returned buffer will be initialized

* to zero.

*/

AVBufferRef *av_buffer_allocz(size_t size);

/**

* Create an AVBuffer from an existing array.

*

* If this function is successful, data is owned by the AVBuffer. The caller may

* only access data through the returned AVBufferRef and references derived from

* it.

* If this function fails, data is left untouched.

* @param data data array

* @param size size of data in bytes

* @param free a callback for freeing this buffer's data

* @param opaque parameter to be got for processing or passed to free

* @param flags a combination of AV_BUFFER_FLAG_*

*

* @return an AVBufferRef referring to data on success, NULL on failure.

*/

AVBufferRef *av_buffer_create(uint8_t *data, size_t size,

void (*free)(void *opaque, uint8_t *data),

void *opaque, int flags);

/**

* Default free callback, which calls av_free() on the buffer data.

* This function is meant to be passed to av_buffer_create(), not called

* directly.

*/

void av_buffer_default_free(void *opaque, uint8_t *data);

/**

* Create a new reference to an AVBuffer.

*

* @return a new AVBufferRef referring to the same AVBuffer as buf or NULL on

* failure.

*/

AVBufferRef *av_buffer_ref(const AVBufferRef *buf);

/**

* Free a given reference and automatically free the buffer if there are no more

* references to it.

*

* @param buf the reference to be freed. The pointer is set to NULL on return.

*/

void av_buffer_unref(AVBufferRef **buf);

/**

* @return 1 if the caller may write to the data referred to by buf (which is

* true if and only if buf is the only reference to the underlying AVBuffer).

* Return 0 otherwise.

* A positive answer is valid until av_buffer_ref() is called on buf.

*/

int av_buffer_is_writable(const AVBufferRef *buf);

/**

* @return the opaque parameter set by av_buffer_create.

*/

void *av_buffer_get_opaque(const AVBufferRef *buf);

int av_buffer_get_ref_count(const AVBufferRef *buf);

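Applications rarely need to call these functions directly, although they are part of the public API (libavutil/buffer.h). They are mostly used inside FFmpeg's own modules, for example by the AVFrame functions.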

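The remaining members are self-explanatory from their names. linesize is the stride of each plane, that is, the number of bytes from the start of one row to the start of the next.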

For example, for YUV420 data the size of each plane is computed like this:

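/* Note: pixel_format and TOPSCODEC_PIX_FMT_* are not FFmpeg names; they appear
 * to come from a vendor codec SDK. With AVFrame the equivalents would be
 * frame->format and AV_PIX_FMT_*. */
if (frame->pixel_format == TOPSCODEC_PIX_FMT_I420 ||
    frame->pixel_format == TOPSCODEC_PIX_FMT_NV12 ||
    frame->pixel_format == TOPSCODEC_PIX_FMT_NV21 ||
    frame->pixel_format == TOPSCODEC_PIX_FMT_P010 ||
    frame->pixel_format == TOPSCODEC_PIX_FMT_P010LE) {
    /* Luma plane: one row of linesize[0] bytes per picture line. */
    int Y = frame->linesize[0] * frame->height;
    /* Chroma is subsampled vertically by 2: half the rows, rounded up. */
    int U = frame->linesize[1] * ((frame->height + 1) / 2);
    /* A third plane exists only for I420. For the semi-planar formats
     * (NV12, NV21, P010) plane 1 holds interleaved UV, so this is 0
     * provided linesize[2] is 0 for them. */
    int V = frame->linesize[2] * ((frame->height + 1) / 2);
}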

In other words, linesize tells how wide, in bytes, one row of a YUV plane is in memory.
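The stride view also explains the negative linesize values mentioned in the header comments: if data[0] points at the last stored row and linesize[0] is negative, walking the rows in the usual way visits them bottom-up. A minimal sketch in plain C, with no FFmpeg calls; row_ptr and flip_view are hypothetical helper names introduced only for illustration:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Address of row y in a plane: start pointer plus y strides.
 * The stride may be negative, so the arithmetic is done in ptrdiff_t. */
static const uint8_t *row_ptr(const uint8_t *data, int linesize, int y)
{
    return data + (ptrdiff_t)y * linesize;
}

/* Turn a top-down view (data, linesize) of a plane with `height` rows into
 * a vertically flipped view of the same memory; nothing is copied. */
static void flip_view(const uint8_t **data, int *linesize, int height)
{
    assert(height > 0);
    *data = *data + (ptrdiff_t)(height - 1) * *linesize;  /* last row */
    *linesize = -*linesize;                               /* walk upwards */
}
```

After flip_view(), row 0 of the view is the last stored row, and code that only ever uses data + y * linesize keeps working unchanged.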

In practice there is no need to compute this by hand; FFmpeg provides dedicated functions for it:

int av_image_fill_plane_sizes(size_t sizes[4], enum AVPixelFormat pix_fmt,

int height, const ptrdiff_t linesizes[4]);

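This function computes the size in bytes of each plane. As the height parameter suggests, it works on the same principle as the manual calculation above: the linesize of a plane multiplied by the number of rows in that plane.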

The next function computes linesizes, that is, the stride of each plane.

int av_image_fill_linesizes(int linesizes[4], enum AVPixelFormat pix_fmt, int width);

It derives linesizes[4] from width and pix_fmt, so the strides do not have to be worked out by hand.
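For intuition, the arithmetic behind these two helpers can be written out by hand for a single format. The sketch below covers only 8-bit planar YUV 4:2:0 (AV_PIX_FMT_YUV420P) and does not call FFmpeg; i420_fill_linesizes and i420_fill_plane_sizes are hypothetical names, the linesizes are the minimal ones without alignment padding, and int is assumed for the strides where the FFmpeg helper takes ptrdiff_t.

```c
#include <assert.h>
#include <stddef.h>

/* Minimal linesizes for 8-bit planar YUV 4:2:0: Y is full width,
 * U and V are half width, rounded up for odd widths. */
static int i420_fill_linesizes(int linesizes[4], int width)
{
    if (width <= 0)
        return -1;
    linesizes[0] = width;
    linesizes[1] = (width + 1) / 2;
    linesizes[2] = (width + 1) / 2;
    linesizes[3] = 0;                  /* no fourth plane */
    return 0;
}

/* Plane size = stride * number of rows; chroma planes have half the rows. */
static int i420_fill_plane_sizes(size_t sizes[4], int height,
                                 const int linesizes[4])
{
    if (height <= 0)
        return -1;
    int chroma_h = (height + 1) / 2;
    sizes[0] = (size_t)linesizes[0] * (size_t)height;
    sizes[1] = (size_t)linesizes[1] * (size_t)chroma_h;
    sizes[2] = (size_t)linesizes[2] * (size_t)chroma_h;
    sizes[3] = 0;
    return 0;
}
```

For a 1920x1080 frame this gives rows of 1920, 960 and 960 bytes and plane sizes of 2073600, 518400 and 518400 bytes, 3110400 bytes in total, which is the familiar width * height * 3 / 2.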

FFmpeg provides many more helpers of this kind, including others that compute plane and buffer sizes. They are declared in imgutils.h:

/**

* Compute the size of an image line with format pix_fmt and width

* width for the plane plane.

*

* @return the computed size in bytes

*/

int av_image_get_linesize(enum AVPixelFormat pix_fmt, int width, int plane);

/**

* Fill plane linesizes for an image with pixel format pix_fmt and

* width width.

*

* @param linesizes array to be filled with the linesize for each plane

* @return >= 0 in case of success, a negative error code otherwise

*/

int av_image_fill_linesizes(int linesizes[4], enum AVPixelFormat pix_fmt, int width);

/**

* Fill plane sizes for an image with pixel format pix_fmt and height height.

*

* @param size the array to be filled with the size of each image plane

* @param linesizes the array containing the linesize for each

* plane, should be filled by av_image_fill_linesizes()

* @return >= 0 in case of success, a negative error code otherwise

*

* @note The linesize parameters have the type ptrdiff_t here, while they are

* int for av_image_fill_linesizes().

*/

int av_image_fill_plane_sizes(size_t size[4], enum AVPixelFormat pix_fmt,

int height, const ptrdiff_t linesizes[4]);

/**

* Fill plane data pointers for an image with pixel format pix_fmt and

* height height.

*

* @param data pointers array to be filled with the pointer for each image plane

* @param ptr the pointer to a buffer which will contain the image

* @param linesizes the array containing the linesize for each

* plane, should be filled by av_image_fill_linesizes()

* @return the size in bytes required for the image buffer, a negative

* error code in case of failure

*/

int av_image_fill_pointers(uint8_t *data[4], enum AVPixelFormat pix_fmt, int height,

uint8_t *ptr, const int linesizes[4]);

/**

* Allocate an image with size w and h and pixel format pix_fmt, and

* fill pointers and linesizes accordingly.

* The allocated image buffer has to be freed by using

* av_freep(&pointers[0]).

*

* @param align the value to use for buffer size alignment

* @return the size in bytes required for the image buffer, a negative

* error code in case of failure

*/

int av_image_alloc(uint8_t *pointers[4], int linesizes[4],

int w, int h, enum AVPixelFormat pix_fmt, int align);

/**

* Copy image plane from src to dst.

* That is, copy "height" number of lines of "bytewidth" bytes each.

* The first byte of each successive line is separated by *_linesize

* bytes.

*

* bytewidth must be contained by both absolute values of dst_linesize

* and src_linesize, otherwise the function behavior is undefined.

*

* @param dst_linesize linesize for the image plane in dst

* @param src_linesize linesize for the image plane in src

*/

void av_image_copy_plane(uint8_t *dst, int dst_linesize,

const uint8_t *src, int src_linesize,

int bytewidth, int height);

/**

* Copy image in src_data to dst_data.

*

* @param dst_linesizes linesizes for the image in dst_data

* @param src_linesizes linesizes for the image in src_data

*/

void av_image_copy(uint8_t *dst_data[4], int dst_linesizes[4],

const uint8_t *src_data[4], const int src_linesizes[4],

enum AVPixelFormat pix_fmt, int width, int height);

/**

* Setup the data pointers and linesizes based on the specified image

* parameters and the provided array.

*

* The fields of the given image are filled in by using the src

* address which points to the image data buffer. Depending on the

* specified pixel format, one or multiple image data pointers and

* line sizes will be set. If a planar format is specified, several

* pointers will be set pointing to the different picture planes and

* the line sizes of the different planes will be stored in the

* lines_sizes array. Call with src == NULL to get the required

* size for the src buffer.

*

* To allocate the buffer and fill in the dst_data and dst_linesize in

* one call, use av_image_alloc().

*

* @param dst_data data pointers to be filled in

* @param dst_linesize linesizes for the image in dst_data to be filled in

* @param src buffer which will contain or contains the actual image data, can be NULL

* @param pix_fmt the pixel format of the image

* @param width the width of the image in pixels

* @param height the height of the image in pixels

* @param align the value used in src for linesize alignment

* @return the size in bytes required for src, a negative error code

* in case of failure

*/

int av_image_fill_arrays(uint8_t *dst_data[4], int dst_linesize[4],

const uint8_t *src,

enum AVPixelFormat pix_fmt, int width, int height, int align);

/**

* Return the size in bytes of the amount of data required to store an

* image with the given parameters.

*

* @param pix_fmt the pixel format of the image

* @param width the width of the image in pixels

* @param height the height of the image in pixels

* @param align the assumed linesize alignment

* @return the buffer size in bytes, a negative error code in case of failure

*/

int av_image_get_buffer_size(enum AVPixelFormat pix_fmt, int width, int height, int align);

/**

* Copy image data from an image into a buffer.

*

* av_image_get_buffer_size() can be used to compute the required size

* for the buffer to fill.

*

* @param dst a buffer into which picture data will be copied

* @param dst_size the size in bytes of dst

* @param src_data pointers containing the source image data

* @param src_linesize linesizes for the image in src_data

* @param pix_fmt the pixel format of the source image

* @param width the width of the source image in pixels

* @param height the height of the source image in pixels

* @param align the assumed linesize alignment for dst

* @return the number of bytes written to dst, or a negative value

* (error code) on error

*/

int av_image_copy_to_buffer(uint8_t *dst, int dst_size,

const uint8_t * const src_data[4], const int src_linesize[4],

enum AVPixelFormat pix_fmt, int width, int height, int align);

/**

* Check if the given dimension of an image is valid, meaning that all

* bytes of the image can be addressed with a signed int.

*

* @param w the width of the picture

* @param h the height of the picture

* @param log_offset the offset to sum to the log level for logging with log_ctx

* @param log_ctx the parent logging context, it may be NULL

* @return >= 0 if valid, a negative error code otherwise

*/

int av_image_check_size(unsigned int w, unsigned int h, int log_offset, void *log_ctx);

/**

* Check if the given dimension of an image is valid, meaning that all

* bytes of a plane of an image with the specified pix_fmt can be addressed

* with a signed int.

*

* @param w the width of the picture

* @param h the height of the picture

* @param max_pixels the maximum number of pixels the user wants to accept

* @param pix_fmt the pixel format, can be AV_PIX_FMT_NONE if unknown.

* @param log_offset the offset to sum to the log level for logging with log_ctx

* @param log_ctx the parent logging context, it may be NULL

* @return >= 0 if valid, a negative error code otherwise

*/

int av_image_check_size2(unsigned int w, unsigned int h, int64_t max_pixels, enum AVPixelFormat pix_fmt, int log_offset, void *log_ctx);
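Several of the helpers above, av_image_copy_plane() in particular, exist because a plane cannot in general be copied with a single memcpy: source and destination may have different strides, and only bytewidth bytes of each row are payload. A simplified model in plain C, assuming strides (positive or negative) whose absolute value is at least bytewidth; copy_plane is a hypothetical name, not the FFmpeg function:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Copy `height` rows of `bytewidth` bytes each. Every row is addressed
 * through its own stride, so padding bytes are skipped, not copied. */
static void copy_plane(uint8_t *dst, ptrdiff_t dst_linesize,
                       const uint8_t *src, ptrdiff_t src_linesize,
                       size_t bytewidth, int height)
{
    assert(dst && src);
    for (int y = 0; y < height; y++) {
        memcpy(dst + y * dst_linesize, src + y * src_linesize, bytewidth);
    }
}
```

Passing a pointer to the last source row together with a negative source stride makes the same loop produce a vertically flipped copy.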

2. Functions operating on AVFrame

const char *av_get_colorspace_name(enum AVColorSpace val);

AVFrame *av_frame_alloc(void);

void av_frame_free(AVFrame **frame);

int av_frame_ref(AVFrame *dst, const AVFrame *src);

AVFrame *av_frame_clone(const AVFrame *src);

void av_frame_move_ref(AVFrame *dst, AVFrame *src);

int av_frame_get_buffer(AVFrame *frame, int align);

int av_frame_is_writable(AVFrame *frame);

int av_frame_make_writable(AVFrame *frame);

int av_frame_copy(AVFrame *dst, const AVFrame *src);

int av_frame_copy_props(AVFrame *dst, const AVFrame *src);

AVFrameSideData *av_frame_new_side_data(AVFrame *frame,

enum AVFrameSideDataType type,

size_t size);

AVFrameSideData *av_frame_new_side_data_from_buf(AVFrame *frame,

enum AVFrameSideDataType type,

AVBufferRef *buf);

void av_frame_remove_side_data(AVFrame *frame, enum AVFrameSideDataType type);

const char *av_frame_side_data_name(enum AVFrameSideDataType type);

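All of the functions above revolve around manipulating an AVFrame: allocating and freeing it, creating and moving references, copying data and properties, and managing side data.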

Allocating host memory for an AVFrame: av_frame_get_buffer()

FFmpeg provides a dedicated interface for this:

/**

* Allocate new buffer(s) for audio or video data.

*

* The following fields must be set on frame before calling this function:

* - format (pixel format for video, sample format for audio)

* - width and height for video

* - nb_samples and channel_layout for audio

*

* This function will fill AVFrame.data and AVFrame.buf arrays and, if

* necessary, allocate and fill AVFrame.extended_data and AVFrame.extended_buf.

* For planar formats, one buffer will be allocated for each plane.

*

* @warning: if frame already has been allocated, calling this function will

* leak memory. In addition, undefined behavior can occur in certain

* cases.

*

* @param frame frame in which to store the new buffers.

* @param align Required buffer size alignment. If equal to 0, alignment will be

* chosen automatically for the current CPU. It is highly

* recommended to pass 0 here unless you know what you are doing.

*

* @return 0 on success, a negative AVERROR on error.

*/

int av_frame_get_buffer(AVFrame *frame, int align);

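This function allocates the data buffers for a frame and attaches them to it as reference-counted AVBufferRef entries. As the comment above states, the following fields must be set on the frame before calling it: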

/*

* The following fields must be set on frame before calling this function:

* - format (pixel format for video, sample format for audio)

* - width and height for video

* - nb_samples and channel_layout for audio

*/

The function body looks like this:

int av_frame_get_buffer(AVFrame *frame, int align)

{

if (frame->format < 0)

return AVERROR(EINVAL);

if (frame->width > 0 && frame->height > 0)

return get_video_buffer(frame, align);

else if (frame->nb_samples > 0 && (frame->channel_layout || frame->channels > 0))

return get_audio_buffer(frame, align);

return AVERROR(EINVAL);

}

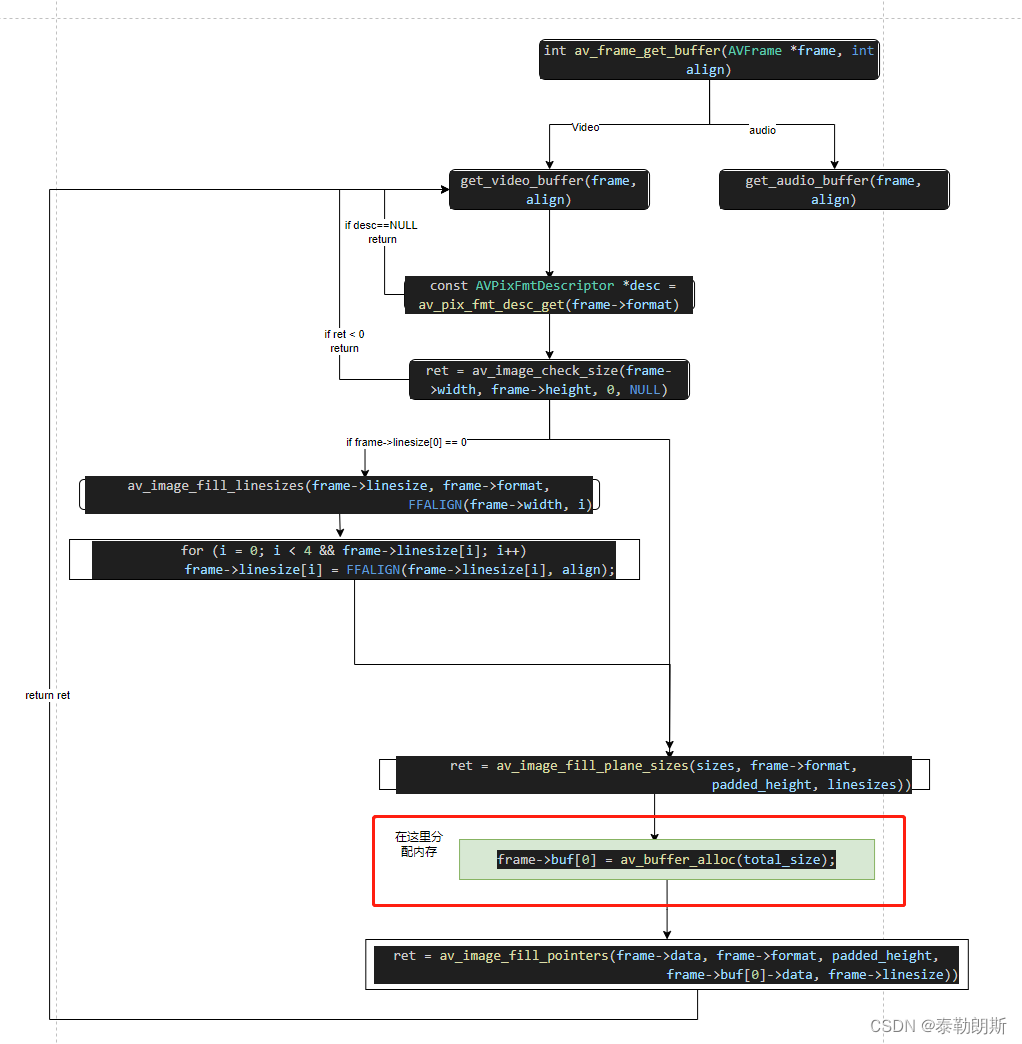

详细的调用流程如下:

Allocating device memory for an AVFrame: av_hwframe_get_buffer()

Since there is an interface for allocating host memory, there is naturally a counterpart for device memory:

/**

* Allocate a new frame attached to the given AVHWFramesContext.

*

* @param hwframe_ctx a reference to an AVHWFramesContext

* @param frame an empty (freshly allocated or unreffed) frame to be filled with

* newly allocated buffers.

* @param flags currently unused, should be set to zero

* @return 0 on success, a negative AVERROR code on failure

*/

int av_hwframe_get_buffer(AVBufferRef *hwframe_ctx, AVFrame *frame, int flags);

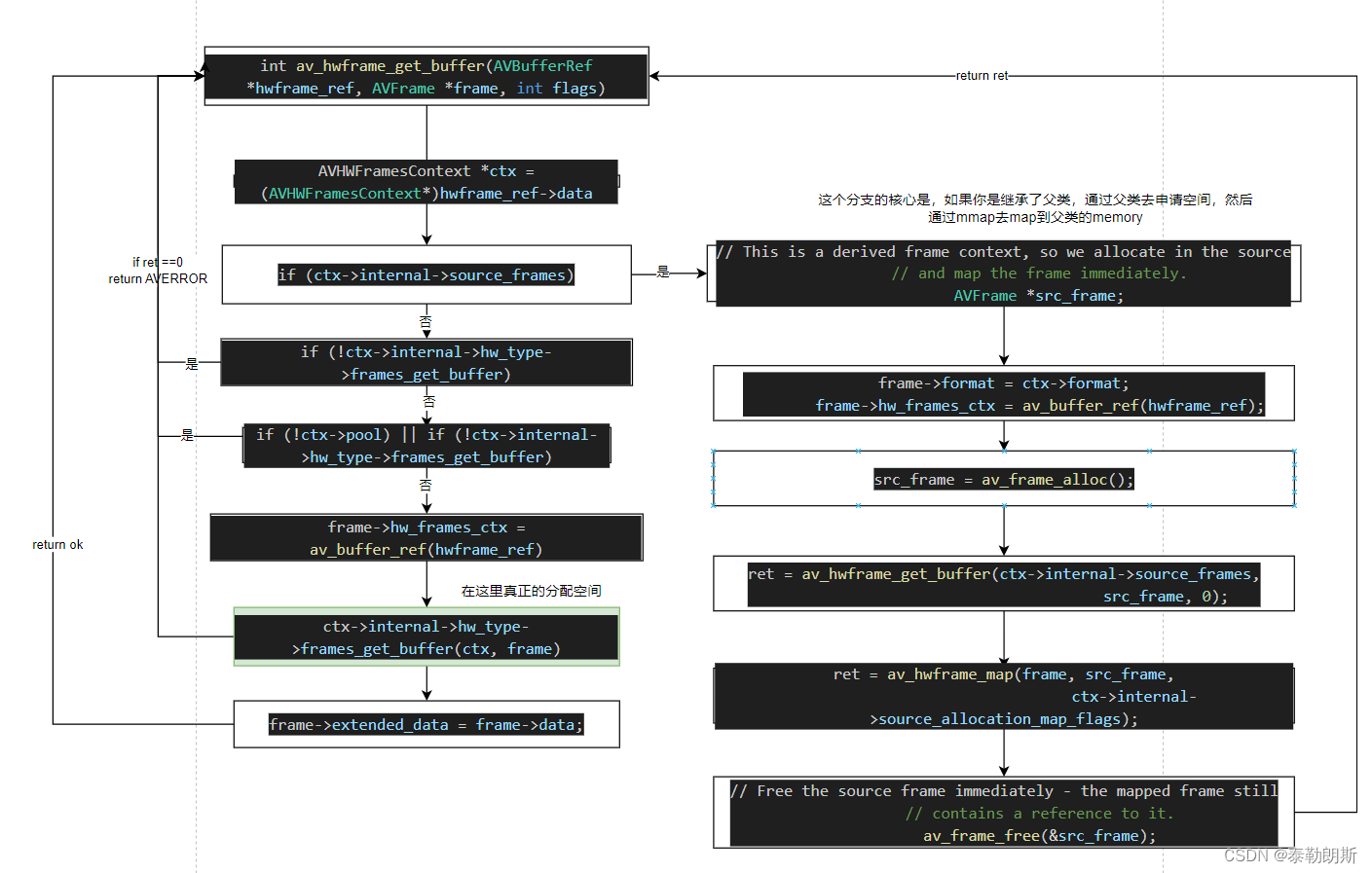

其调用流程如下,注意分支代码,这里是继承了父类后,通过mmap去map到父类的内存上去。
