1. Impala cannot automatically refresh metadata for databases created in Hive

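1.1 Identifying the problem

On CDH 6.1, create a database in Hive, for example create database test_db;, and then run show databases; in Impala.

test_db is not listed, which means it was never refreshed into Impala's catalog. The Impala Catalog role log contains the following exception: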

Unexpected exception received while processing event

Java exception follows:

org.apache.impala.catalog.events.MetastoreNotificationException: EventId: 591869 EventType: CREATE_DATABASE Database object is null in the event. This could be a metastore configuration problem. Check if hive.metastore.notifications.add.thrift.objects is set to true in metastore configuration

at org.apache.impala.catalog.events.MetastoreEvents$CreateDatabaseEvent.<init>(MetastoreEvents.java:1108)

at org.apache.impala.catalog.events.MetastoreEvents$CreateDatabaseEvent.<init>(MetastoreEvents.java:1089)

at org.apache.impala.catalog.events.MetastoreEvents$MetastoreEventFactory.get(MetastoreEvents.java:168)

at org.apache.impala.catalog.events.MetastoreEvents$MetastoreEventFactory.getFilteredEvents(MetastoreEvents.java:205)

at org.apache.impala.catalog.events.MetastoreEventsProcessor.processEvents(MetastoreEventsProcessor.java:601)

at org.apache.impala.catalog.events.MetastoreEventsProcessor.processEvents(MetastoreEventsProcessor.java:513)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.NullPointerException

at com.google.common.base.Preconditions.checkNotNull(Preconditions.java:191)

at org.apache.impala.catalog.events.MetastoreEvents$CreateDatabaseEvent.<init>(MetastoreEvents.java:1106)

... 12 more


The key part is: CREATE_DATABASE Database object is null in the event. This could be a metastore configuration problem. Check if hive.metastore.notifications.add.thrift.objects is set to true in metastore configuration

We had in fact set this option, yet it did not take effect. In the notification_log table of the metastore database, the message for this event is:

{"server":"","servicePrincipal":"","db":"test_db","timestamp":1622247221,"location":"hdfs://nameservice1/user/hive/warehouse/test_db.db","ownerType":"USER","ownerName":"admin"}
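The lookup described above can be sketched as a single query. This is a minimal sketch, not the exact command used here: the event id comes from the exception in the Catalog role log, the table and column names (NOTIFICATION_LOG, EVENT_ID, EVENT_TYPE, MESSAGE) are assumed to follow the stock Hive metastore MySQL schema, and show_event_message is a hypothetical wrapper name.

```shell
#!/usr/bin/env bash
# Event id taken from the exception in the Impala Catalog role log.
EVENT_ID=591869

# Assumption: table and column names follow the stock Hive metastore MySQL schema.
EVENT_SQL="SELECT EVENT_ID, EVENT_TYPE, MESSAGE FROM NOTIFICATION_LOG WHERE EVENT_ID = ${EVENT_ID};"

# Hypothetical wrapper: runs the query against the 'metastore' database.
# -p prompts for the password; -E prints one column per line.
show_event_message() {
  mysql -uroot -p -E metastore -e "$EVENT_SQL"
}

# Print the statement so it can be reviewed before running show_event_message.
echo "$EVENT_SQL"
```

Calling show_event_message then prints the stored message for that event, which is the JSON to inspect.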


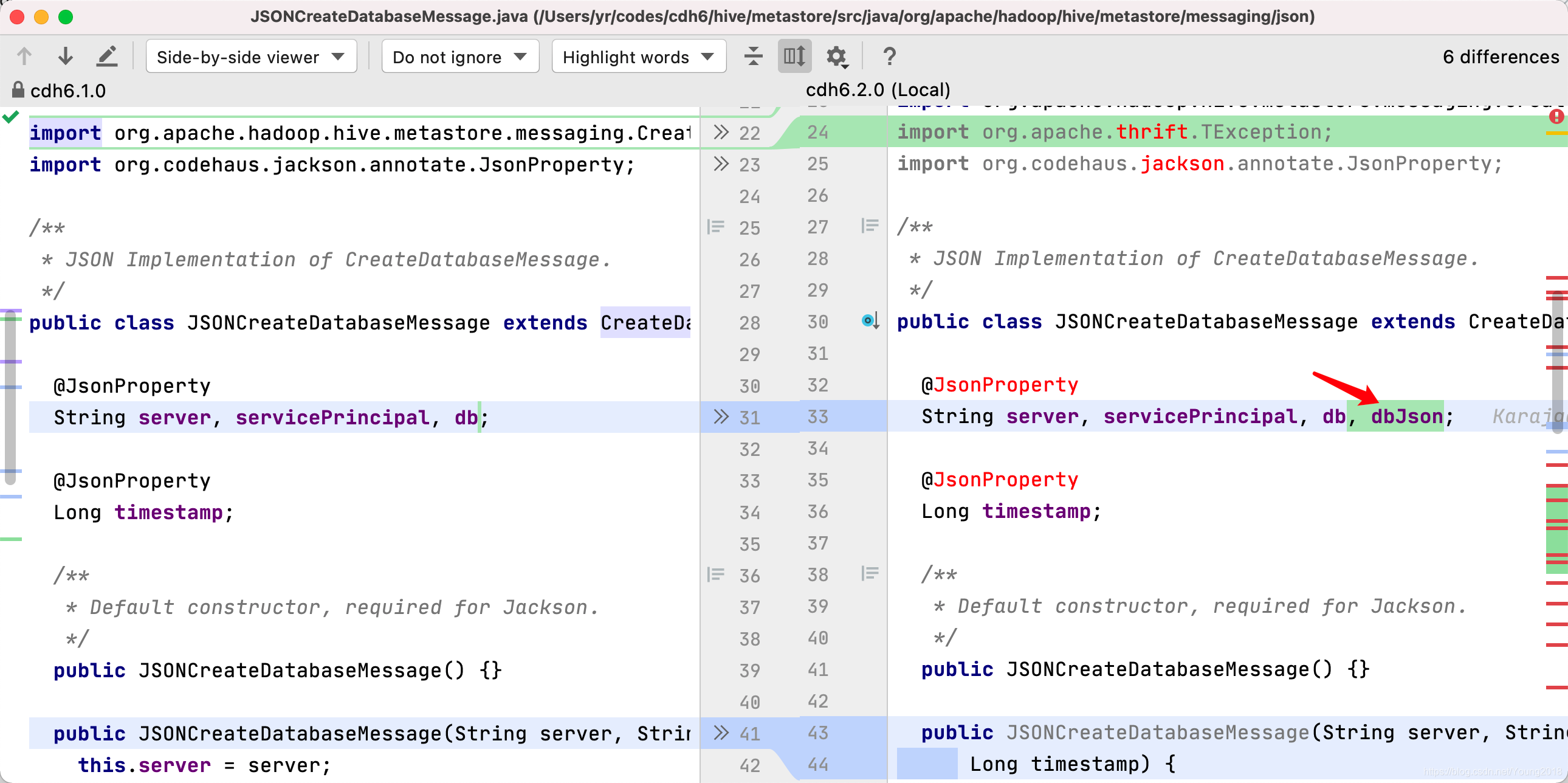

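Because reference [2] was set up on CDH 6.3.2, I brought up a single-node CDH 6.3.2 in the test environment. After configuring automatic metadata refresh in this new environment in the same way, the message for a database creation event was: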

{"server":"","servicePrincipal":"","db":"test_db","dbJson":"{\"1\":{\"str\":\"davie_test\"},\"3\":{\"str\":\"hdfs://nameservice1/user/hive/warehouse/test_db.db\"},\"6\":{\"str\":\"admin\"},\"7\":{\"i32\":1},\"9\":{\"i32\":1622248258}}","timestamp":1622248259,"location":"hdfs://nameservice1/user/hive/warehouse/davie_test.db","ownerType":"USER","ownerName":"admin"};


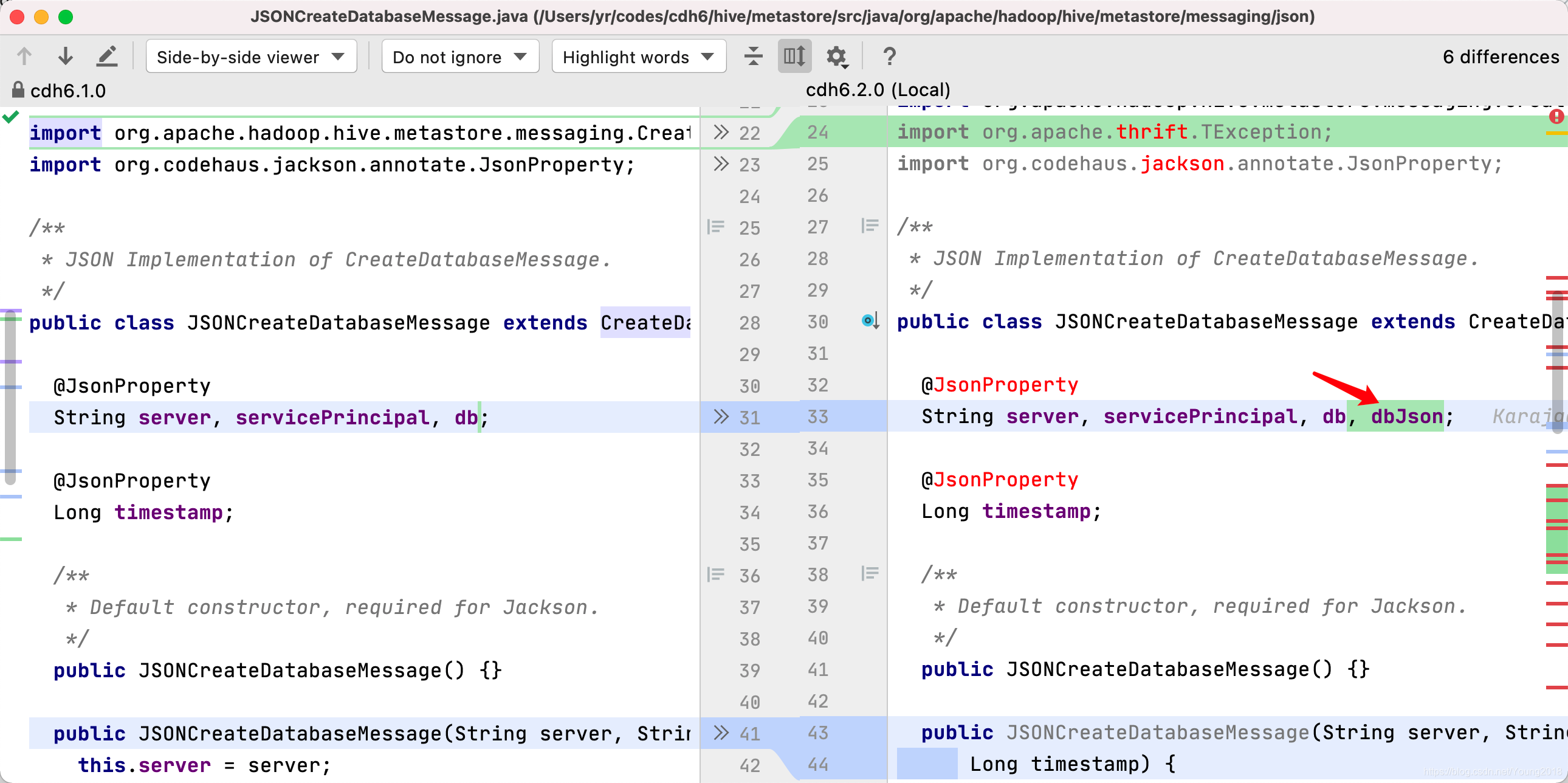

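Comparing the two shows that the message produced by Hive in CDH 6.1.0 lacks the dbJson field.

Searching the source code in IDEA confirmed that the field is indeed missing.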
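The comparison can also be done mechanically. The following is a minimal sketch, assuming the message text has already been copied out of notification_log. The helper name has_db_json is hypothetical, and it only does a plain string match on the "dbJson" key rather than parsing the JSON.

```shell
#!/usr/bin/env bash
# Returns 0 if the given notification message carries a "dbJson" key.
# has_db_json is an illustrative helper, not part of Hive or Impala.
has_db_json() {
  printf '%s' "$1" | grep -q '"dbJson"[[:space:]]*:'
}

# Message from the older Hive, abbreviated from the one shown earlier.
old_msg='{"server":"","servicePrincipal":"","db":"test_db","timestamp":1622247221}'

if has_db_json "$old_msg"; then
  echo "dbJson present"
else
  echo "dbJson missing"
fi
```

Running the same check against a message from the CDH 6.3.2 environment should take the other branch.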

To enable Impala's automatic metadata refresh, the only option is to upgrade Hive.

2. Upgrading Hive to 2.1.1-cdh6.3.2

2.1 Build and package

The procedure is to download the Cloudera Hive source code, then build and package it:

git clone --single-branch --branch cdh6.3.2-release https://github.com/cloudera/hive.git hive

mvn clean package -DskipTests -Pdist

During the build, some Cloudera artifacts could not be downloaded, so the following repositories had to be added:

<repository>

<id>nexus-aliyun</id>

<url>http://maven.aliyun.com/nexus/content/groups/public</url>

<name>nexus-aliyun</name>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

<repository>

<id>cloudera-repos</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>

<name>CDH Releases Repository</name>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

While Maven was downloading dependencies, the javax.el dependency pulled in by HBase repeatedly failed to download, with the following error:

Could not find artifact org.glassfish:javax.el:pom:3.0.1-b06-SNAPSHOT

Adding a javax.el dependency with an explicit version to pom.xml and rerunning the Maven command fixes this:

<glassfish.el.version>3.0.1-b06</glassfish.el.version>

<dependency>

<groupId>org.glassfish</groupId>

<artifactId>javax.el</artifactId>

<version>${glassfish.el.version}</version>

</dependency>


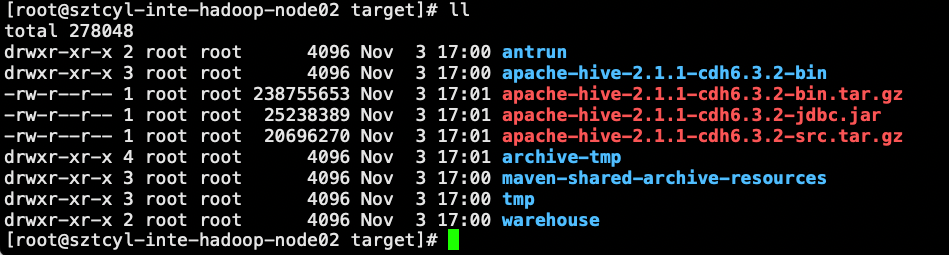

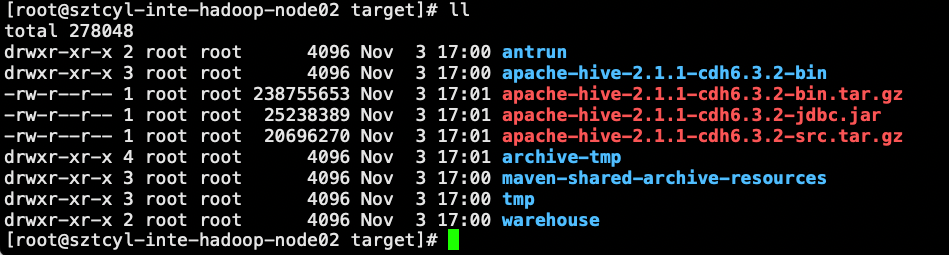

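After a successful build, the file apache-hive-2.1.1-cdh6.3.2-bin.tar.gz appears in the hive/packaging/target directory.

2.2 Metadata backup

Copy this file to the CDH cluster and extract it. The metastore schema then has to be upgraded; back up the metadata before doing so.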

mysqldump -uroot -ptest metastore > ./metastore.sql
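Before moving on to the upgrade, it is worth confirming that the dump is not truncated. The sketch below assumes mysqldump ran with its default comment settings, in which case the file ends with a "-- Dump completed" trailer line (the check does not apply if comments were disabled). dump_is_complete is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Returns 0 if the dump file is non-empty and its last line is mysqldump's
# "-- Dump completed" trailer. dump_is_complete is an illustrative helper.
dump_is_complete() {
  local dump_file="$1"
  [ -s "$dump_file" ] && tail -n 1 "$dump_file" | grep -q '^-- Dump completed'
}

# Usage sketch (path as in the backup command):
#   dump_is_complete ./metastore.sql && echo "backup looks complete"
```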

2.3 Metadata upgrade

Once the backup is complete, log in to the metastore database and run the following command to upgrade the CDH 6.1.0 metadata:

source $HIVE_6.3.2/scripts/metastore/upgrade/mysql/upgrade-2.1.1-cdh6.1.0-to-2.1.1-cdh6.2.0.mysql.sql

Looking at the script upgrade-2.1.1-cdh6.1.0-to-2.1.1-cdh6.2.0.mysql.sql, it only adds a CREATE_TIME column to the DBS table and then updates the SCHEMA_VERSION information in CDH_VERSION; there are no major changes.
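Since the script changes so little, its effect is easy to confirm afterwards. This is a minimal sketch that uses only the table and column names mentioned above. The wrapper name check_upgrade and the connection options are assumptions, and the exact SCHEMA_VERSION value to expect is not stated here, so the output has to be judged by eye.

```shell
#!/usr/bin/env bash
# First statement: shows the CREATE_TIME column of DBS if the upgrade added it.
# Second statement: shows the schema version recorded in CDH_VERSION.
POST_UPGRADE_SQL="SHOW COLUMNS FROM DBS LIKE 'CREATE_TIME'; SELECT SCHEMA_VERSION FROM CDH_VERSION;"

# Hypothetical wrapper; -p prompts for the password.
check_upgrade() {
  mysql -uroot -p metastore -e "$POST_UPGRADE_SQL"
}
```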

2.4 Updating the Hive lib directory

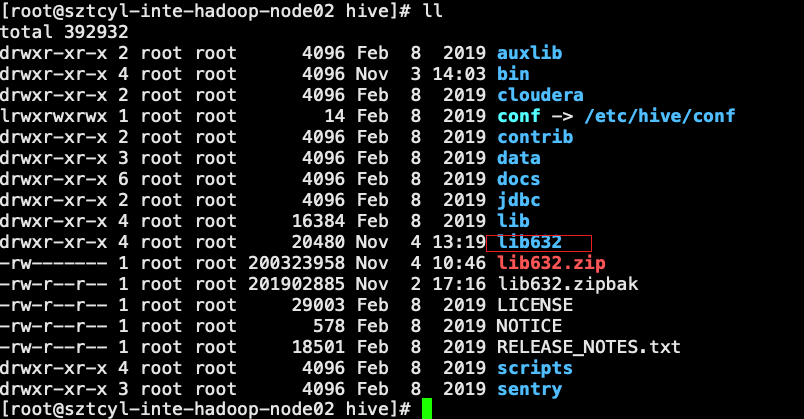

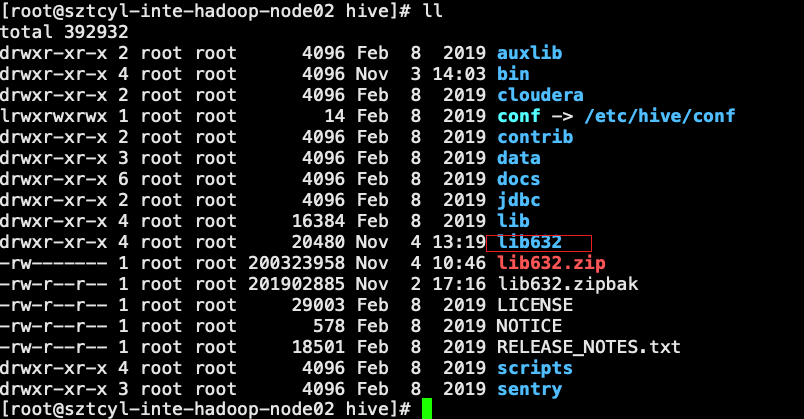

Next, create a lib632 directory under /opt/cloudera/parcels/CDH/lib/hive/:

mkdir /opt/cloudera/parcels/CDH/lib/hive/lib632

Copy the files from the lib directory of the CDH 6.3.2 Hive build into lib632:

cp -r /root/cdh-hive-cdh6.3.2-release/packaging/target/apache-hive-2.1.1-cdh6.3.2-bin/apache-hive-2.1.1-cdh6.3.2-bin/lib/* /opt/cloudera/parcels/CDH-6.1.1-1.cdh6.1.1.p0.875250/lib/hive/lib632/


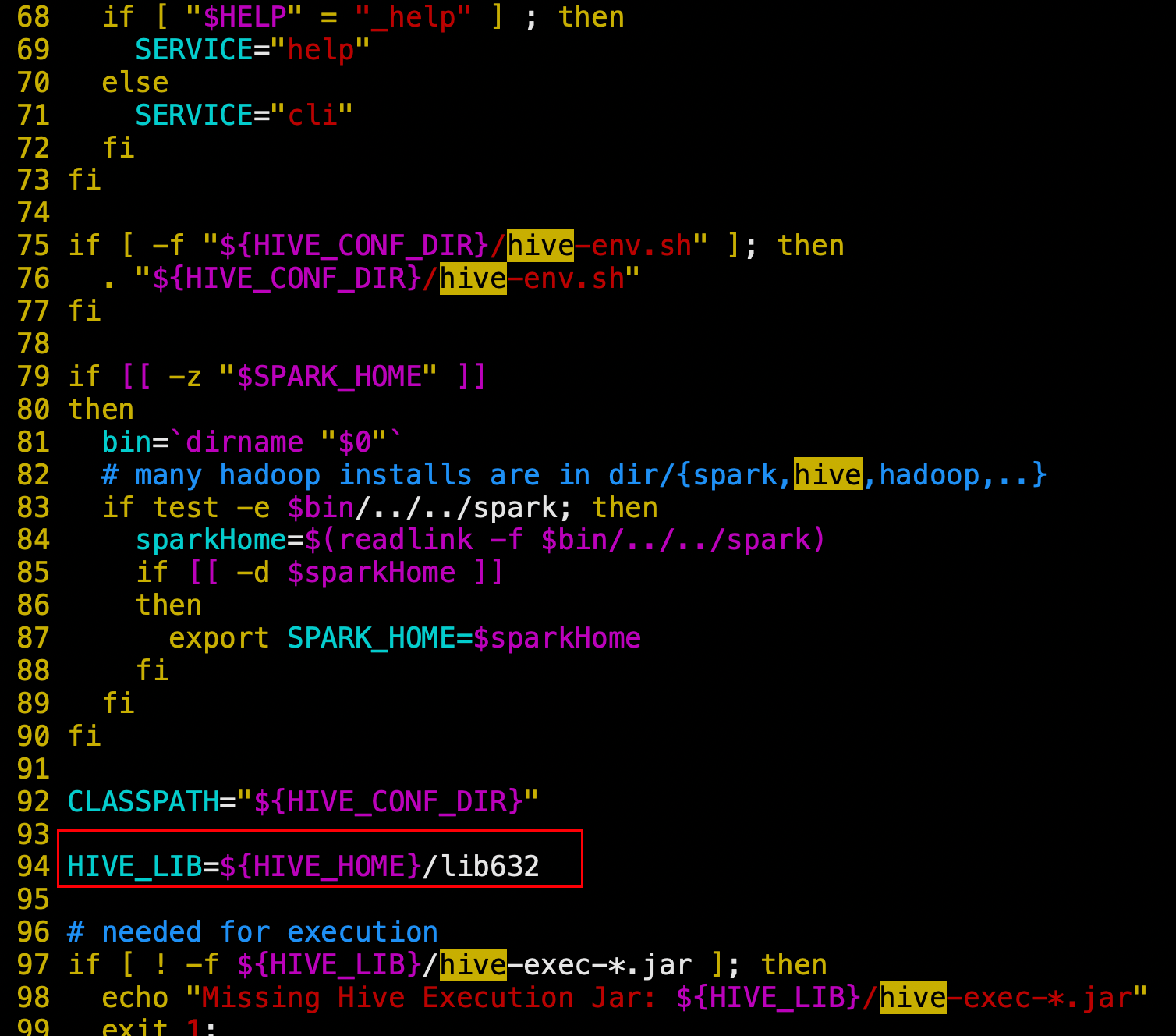

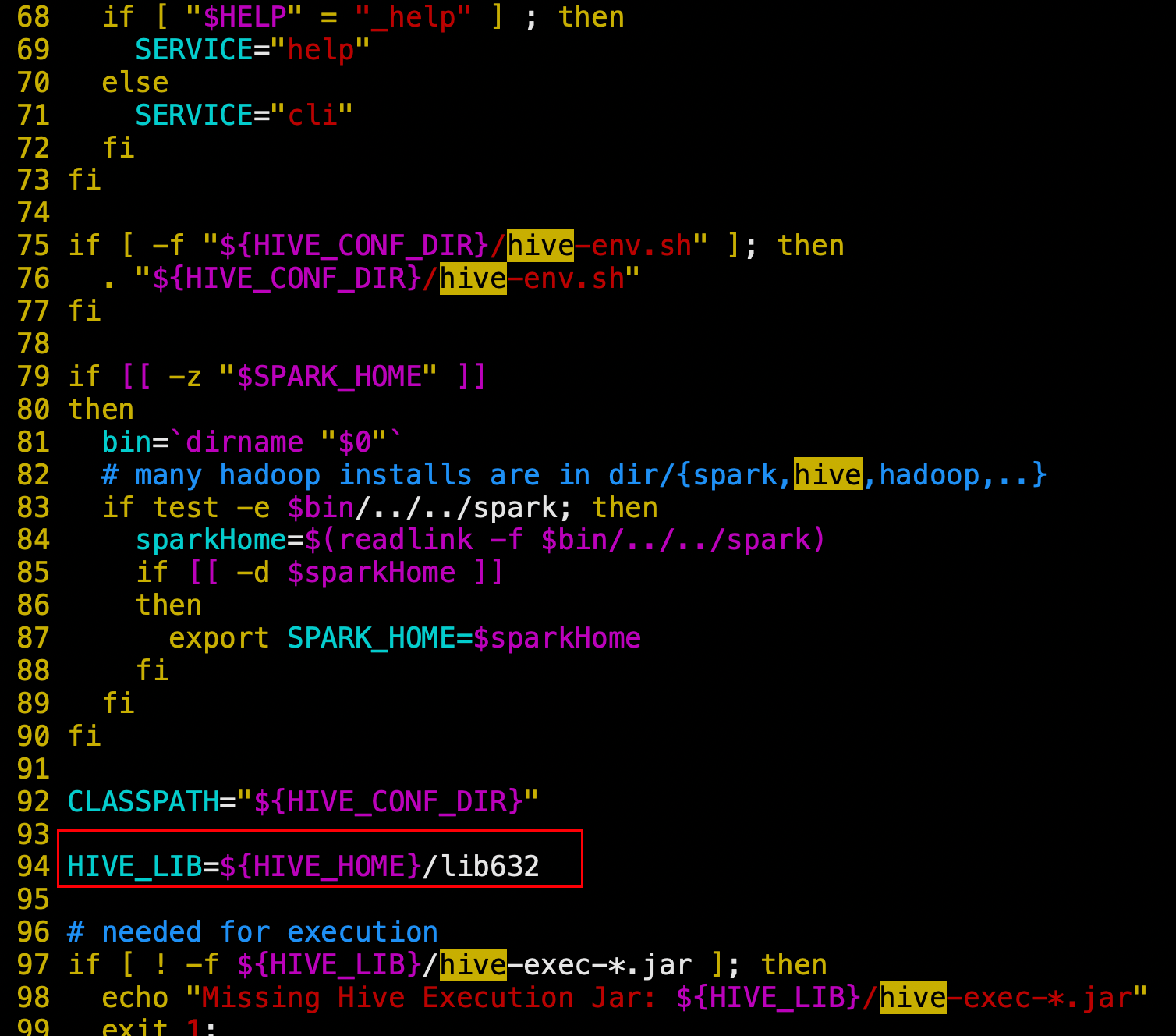

Then edit the hive script so that it points to the new lib directory:

vim /opt/cloudera/parcels/CDH-6.1.1-1.cdh6.1.1.p0.875250/lib/hive/bin/hive

On line 94, change HIVE_LIB=${HIVE_HOME}/lib to HIVE_LIB=${HIVE_HOME}/lib632.
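The manual edit can also be scripted. The following is a minimal sketch using sed. The helper name switch_hive_lib is hypothetical, and it matches the assignment by its content rather than by line number, on the assumption that the line reads exactly HIVE_LIB=${HIVE_HOME}/lib apart from leading whitespace.

```shell
#!/usr/bin/env bash
# Points HIVE_LIB at lib632 in the given launcher script and keeps the
# original as <script>.bak. switch_hive_lib is an illustrative helper.
switch_hive_lib() {
  local script="$1"
  sed -i.bak 's|^\([[:space:]]*\)HIVE_LIB=\${HIVE_HOME}/lib$|\1HIVE_LIB=${HIVE_HOME}/lib632|' "$script"
}

# Usage sketch (script path as in the vim command):
#   switch_hive_lib /opt/cloudera/parcels/CDH-6.1.1-1.cdh6.1.1.p0.875250/lib/hive/bin/hive
```

Keeping the .bak copy makes it possible to roll back by restoring the original script.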

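Once that is done, restart the Hive-related services in CM.

2.5 Verifying the Hive upgrade

Verify Hive's functionality, including Hive SQL execution, Hive UDF tests, and tests of the components that work with Hive (HBase, Impala, Sqoop).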

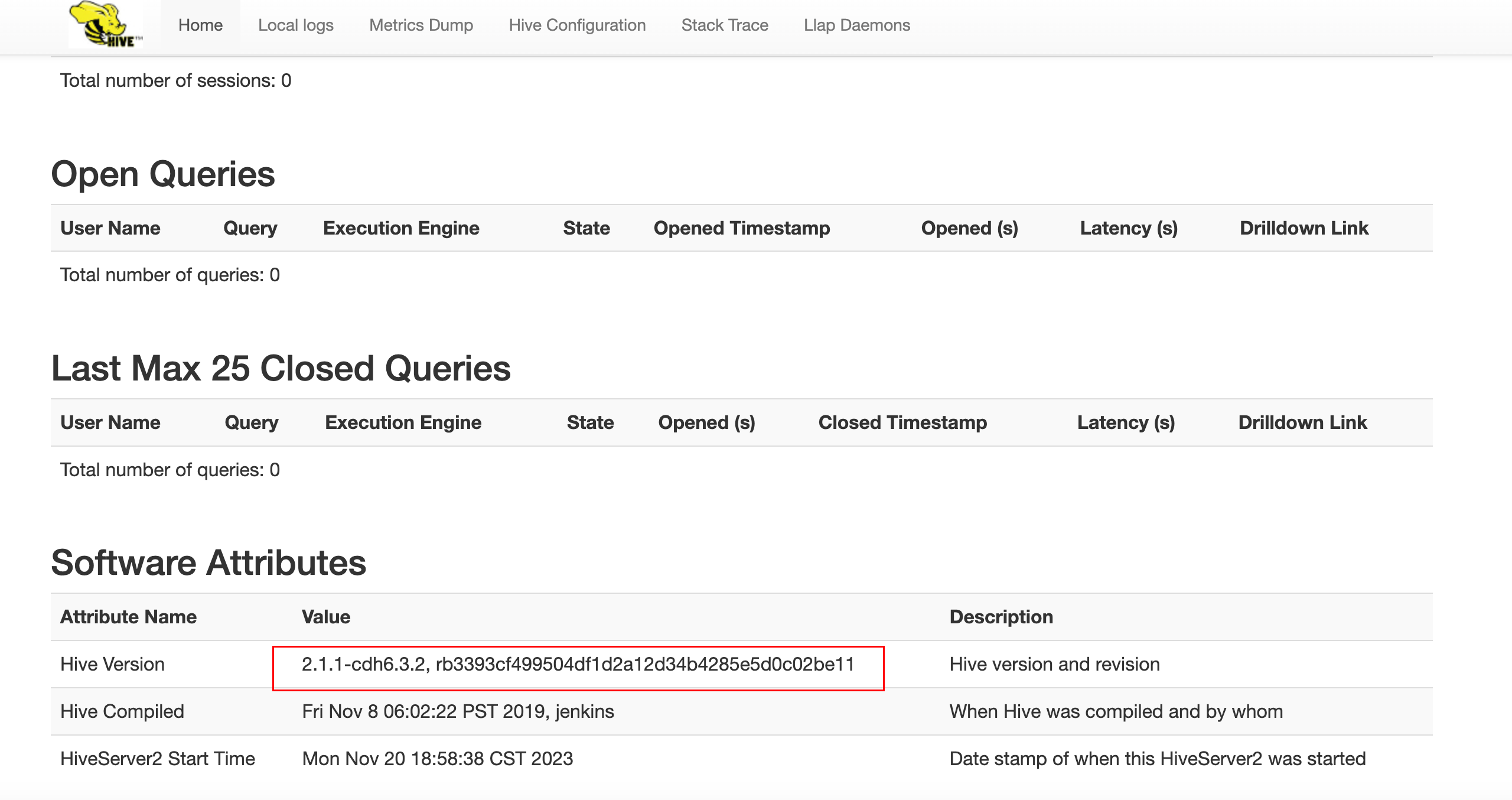

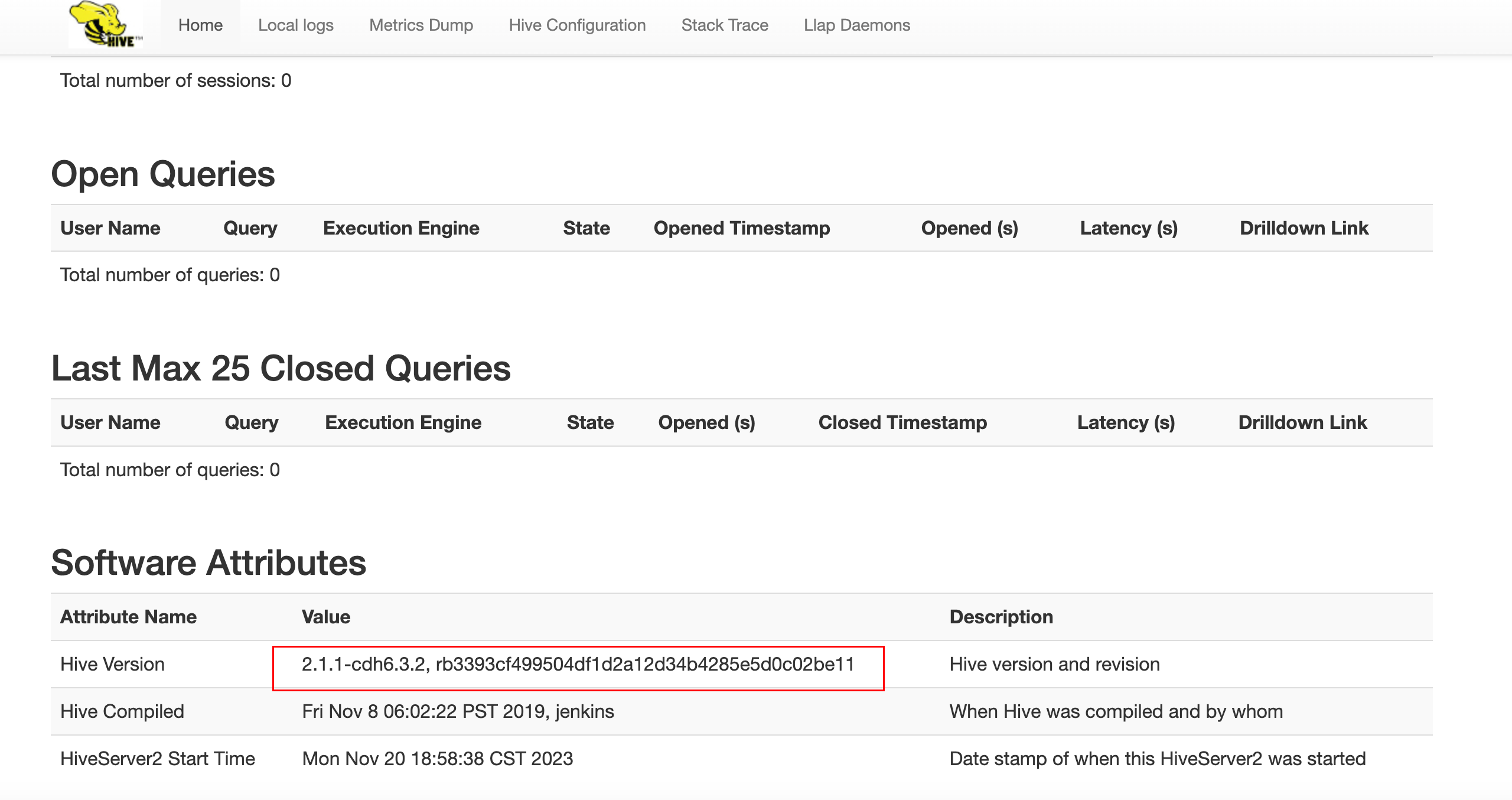

2.6 Checking the version after the upgrade

3. Problem resolution:

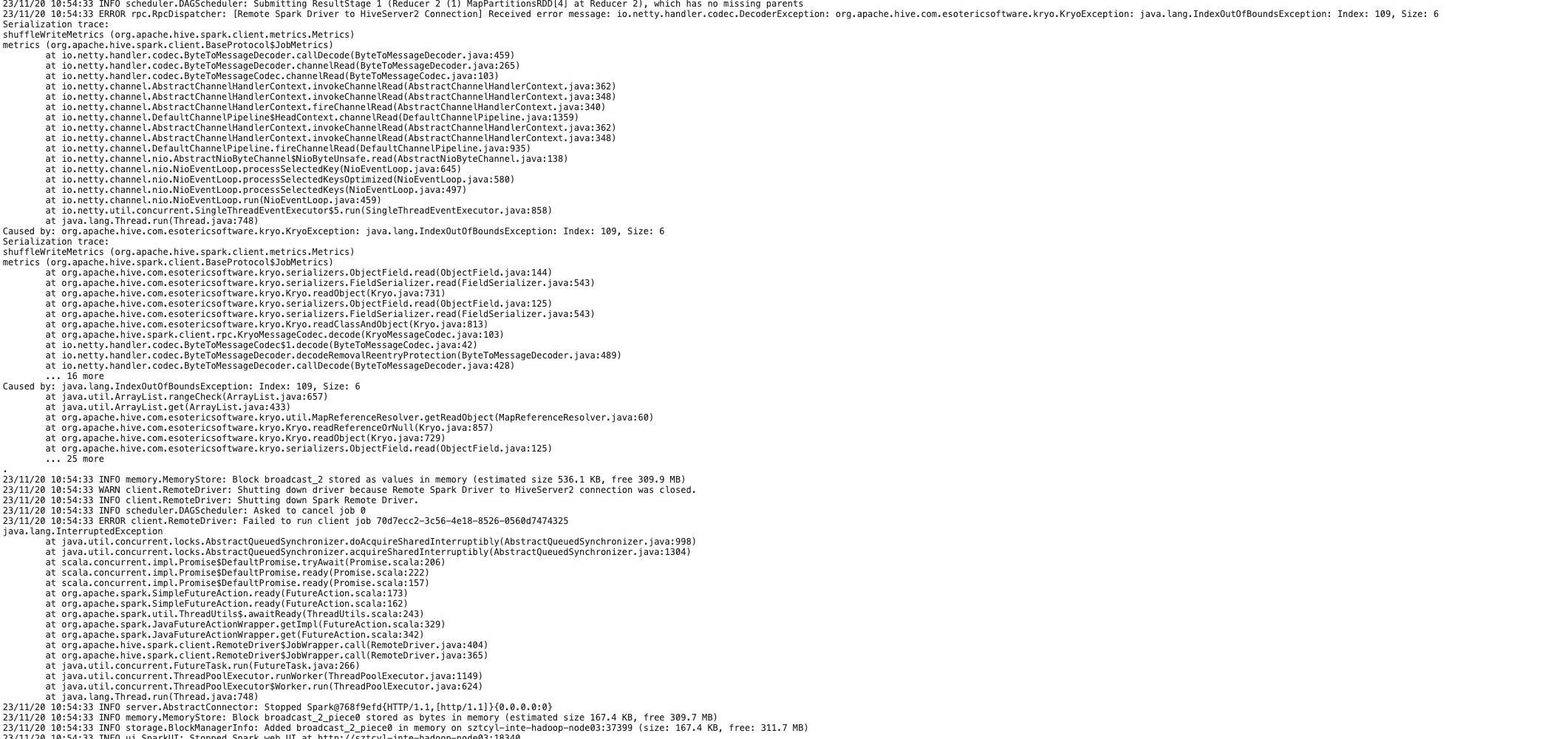

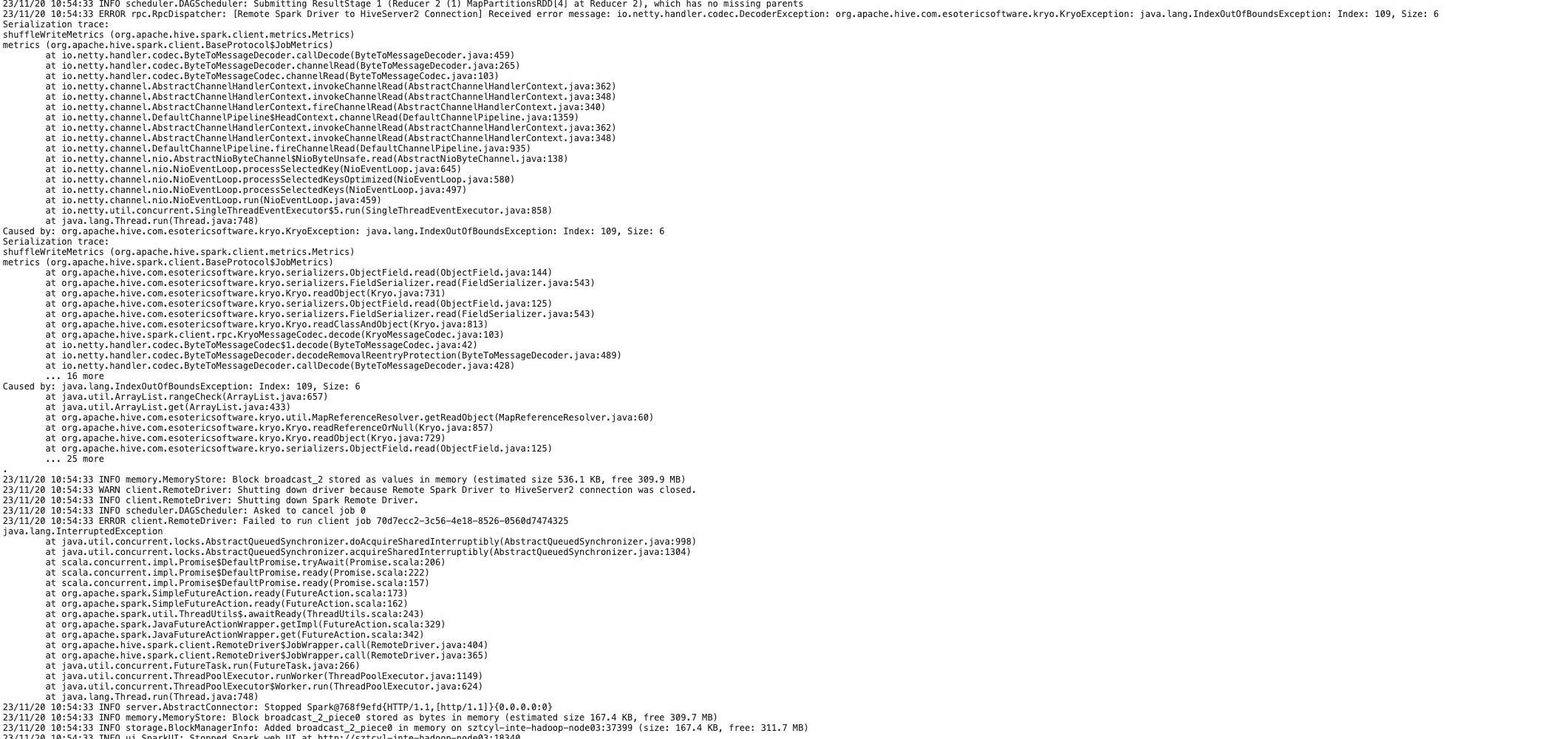

After the upgrade, once the execution engine is switched to Spark, jobs that use count or other functions fail with the following error:

[Remote Spark Driver to HiveServer2 Connection] Received error message: io.netty.handler.codec.DecoderException: org.apache.hive.com.esotericsoftware.kryo.KryoException: java.lang.IndexOutOfBoundsException: Index: 109, Size: 6

Serialization trace:

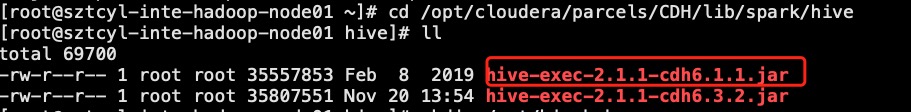

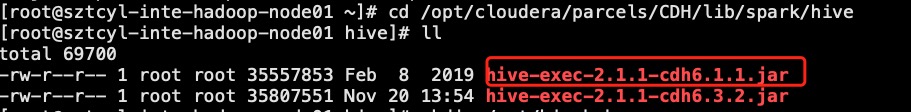

The main cause is that Spark still loads the hive-exec jar from CDH 6.1.1.

Replacing that CDH 6.1.1 hive-exec jar in Spark's hive folder with the CDH 6.3.2 one resolves the error.
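To locate the jar that needs replacing, a listing like the one below can help. This is a minimal sketch: list_stale_hive_exec is a hypothetical helper, the hive-exec-*.jar naming pattern and the Spark hive directory path in the usage comment are assumptions about the parcel layout, and the function only lists files without changing anything.

```shell
#!/usr/bin/env bash
# Lists hive-exec jars directly under a directory whose file name still
# carries the old version tag. list_stale_hive_exec is an illustrative helper.
list_stale_hive_exec() {
  local dir="$1" old_tag="$2"
  find "$dir" -maxdepth 1 -name "hive-exec-*${old_tag}*.jar" -print
}

# Usage sketch; the directory below is an assumed location, adjust as needed:
#   list_stale_hive_exec /opt/cloudera/parcels/CDH/lib/spark/hive cdh6.1.1
```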
