一 概述

Android 系统采用 ART 虚拟机,所有的 Java 进程都运行在 ART 之上,当应用发生 ANR,其中最终的一个环节便是向目标进程发送信号 SIGNAL_QUIT,传统的 linux 则是终止程序并输出 core;而对于 Android 进程来说当收到 SIGQUIT 时,Java 层面的进程都是跑在虚拟机之上的,ART 虚拟机会捕获该信号,并输出相应的 traces 信息保存到目录 /data/anr/traces.txt。

当然也可以通过一条命令来获取指定进程的 traces 信息,例如输出 pid=888 的进程信息:

adb shell kill -3 888 //可指定进程pid执行完该命令后 traces 信息的结果保存到文件 /data/anr/traces.txt,如下:

//[见小节2.2]

----- pid 888 at 2016-11-11 22:22:22 -----

Cmd line: system_server

ABI: arm

Build type: optimized

//[见小节3.1]

Zygote loaded classes=4113 post zygote classes=3239

//[见小节3.2]

Intern table: 57550 strong; 9315 weak

//共加载16动态库 [见小节3.3]

JNI: CheckJNI is off; globals=2418 (plus 115 weak)

Libraries: /system/lib/libandroid.so /system/lib/libandroid_servers.so /system/lib/libaudioeffect_jni.so /system/lib/libcompiler_rt.so /system/lib/libjavacrypto.so /system/lib/libjnigraphics.so /system/lib/libmedia_jni.so /system/lib/librs_jni.so /system/lib/libsechook.so /system/lib/libshell_jni.so /system/lib/libsoundpool.so /system/lib/libwebviewchromium_loader.so /system/lib/libwifi-service.so /vendor/lib/libalarmservice_jni.so /vendor/lib/liblocationservice.so libjavacore.so (16)

//已分配堆内存大小40MB,其中29M已用,总分配207772个对象 [见小节3.4]

Heap: 27% free, 29MB/40MB; 307772 objects

... //省略GC相关信息

//当前进程总99个线程[见小节3.5]

DALVIK THREADS (99):

//主线程调用栈[见小节3.6]

"main" prio=5 tid=1 Native

| group="main" sCount=1 dsCount=0 obj=0x75bd9fb0 self=0x5573d4f770

| sysTid=12078 nice=-2 cgrp=default sched=0/0 handle=0x7fa75fafe8

| state=S schedstat=( 5907843636 827600677 5112 ) utm=453 stm=137 core=0 HZ=100

| stack=0x7fd64ef000-0x7fd64f1000 stackSize=8MB

| held mutexes=

//内核栈[见小节3.6.2]

kernel: __switch_to+0x70/0x7c

kernel: SyS_epoll_wait+0x2a0/0x324

kernel: SyS_epoll_pwait+0xa4/0x120

kernel: cpu_switch_to+0x48/0x4c

native: #00 pc 0000000000069be4 /system/lib64/libc.so (__epoll_pwait+8)

native: #01 pc 000000000001cca4 /system/lib64/libc.so (epoll_pwait+32)

native: #02 pc 000000000001ad74 /system/lib64/libutils.so (_ZN7android6Looper9pollInnerEi+144)

native: #03 pc 000000000001b154 /system/lib64/libutils.so (_ZN7android6Looper8pollOnceEiPiS1_PPv+80)

native: #04 pc 00000000000d4bc0 /system/lib64/libandroid_runtime.so (_ZN7android18NativeMessageQueue8pollOnceEP7_JNIEnvP8_jobjecti+48)

native: #05 pc 000000000000082c /data/dalvik-cache/arm64/system@framework@boot.oat (Java_android_os_MessageQueue_nativePollOnce__JI+144)

at android.os.MessageQueue.nativePollOnce(Native method)

at android.os.MessageQueue.next(MessageQueue.java:323)

at android.os.Looper.loop(Looper.java:135)

at com.android.server.SystemServer.run(SystemServer.java:290)

at com.android.server.SystemServer.main(SystemServer.java:175)

at java.lang.reflect.Method.invoke!(Native method)

at com.android.internal.os.ZygoteInit$MethodAndArgsCaller.run(ZygoteInit.java:738)

at com.android.internal.os.ZygoteInit.main(ZygoteInit.java:628)

"Binder_1" prio=5 tid=8 Native

| group="main" sCount=1 dsCount=0 obj=0x12c610a0 self=0x5573e5c750

| sysTid=12092 nice=0 cgrp=default sched=0/0 handle=0x7fa2743450

| state=S schedstat=( 796240075 863170759 3586 ) utm=50 stm=29 core=1 HZ=100

| stack=0x7fa2647000-0x7fa2649000 stackSize=1013KB

| held mutexes=

kernel: __switch_to+0x70/0x7c

kernel: binder_thread_read+0xd78/0xeb0

kernel: binder_ioctl_write_read+0x178/0x24c

kernel: binder_ioctl+0x2b0/0x5e0

kernel: do_vfs_ioctl+0x4a4/0x578

kernel: SyS_ioctl+0x5c/0x88

kernel: cpu_switch_to+0x48/0x4c

native: #00 pc 0000000000069cd0 /system/lib64/libc.so (__ioctl+4)

native: #01 pc 0000000000073cf4 /system/lib64/libc.so (ioctl+100)

native: #02 pc 000000000002d6e8 /system/lib64/libbinder.so (_ZN7android14IPCThreadState14talkWithDriverEb+164)

native: #03 pc 000000000002df3c /system/lib64/libbinder.so (_ZN7android14IPCThreadState20getAndExecuteCommandEv+24)

native: #04 pc 000000000002e114 /system/lib64/libbinder.so (_ZN7android14IPCThreadState14joinThreadPoolEb+124)

native: #05 pc 0000000000036c38 /system/lib64/libbinder.so (???)

native: #06 pc 000000000001579c /system/lib64/libutils.so (_ZN7android6Thread11_threadLoopEPv+208)

native: #07 pc 0000000000090598 /system/lib64/libandroid_runtime.so (_ZN7android14AndroidRuntime15javaThreadShellEPv+96)

native: #08 pc 0000000000014fec /system/lib64/libutils.so (???)

native: #09 pc 0000000000067754 /system/lib64/libc.so (_ZL15__pthread_startPv+52)

native: #10 pc 000000000001c644 /system/lib64/libc.so (__start_thread+16)

(no managed stack frames)

... //此处省略剩余的N个线程.

接下来从虚拟机角度说说目标进程收到该信号的处理过程,每一行关键信息都说明其所对应的输出方法。

1.1 trace参数解读

"Binder_1" prio=5 tid=8 Native

| group="main" sCount=1 dsCount=0 obj=0x12c610a0 self=0x5573e5c750

| sysTid=12092 nice=0 cgrp=default sched=0/0 handle=0x7fa2743450

| state=S schedstat=( 796240075 863170759 3586 ) utm=50 stm=29 core=1 HZ=100

| stack=0x7fa2647000-0x7fa2649000 stackSize=1013KB

| held mutexes=

第0行

- 线程名:Binder_1(如有 daemon 则代表守护线程)

- prio:线程优先级

- tid:线程内部 id

- 线程状态:NATIVE

第1行

- group:线程所属的线程组

- sCount:线程挂起次数

- dsCount:用于调试的线程挂起次数

- obj:当前线程关联的 java 线程对象

- self:当前线程地址

第2行:

- sysTid:线程真正意义上的 tid

- nice:调度优先级

- cgrp:进程所属的进程调度组

- sched:调度策略

- handle:函数处理地址

第3行:

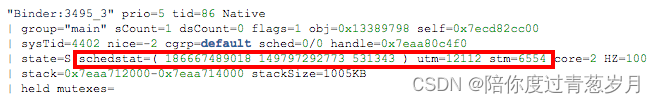

- state:线程状态

- schedstat:CPU 调度时间统计,见 proc/[pid]/task/[tid]/schedstat

- utm/stm:用户态/内核态的 CPU 时间(单位是 jiffies),见 proc/[pid]/task/[tid]/stat

- core:该线程的最后运行所在核

- HZ:时钟频率

第4行:

- stack:线程栈的地址区间

- stackSize:栈的大小

第5行:

- mutex:所持有 mutex 类型,有独占锁 exclusive 和共享锁 shared 两类

schedstat含义说明:

nice 值越小则优先级越高。此处 nice=-2,可见优先级还是比较高的

schedstat 括号中的 3 个数字依次是 Running、Runable、Switch,紧接着的是 utm 和 stm

- Running 时间:CPU 运行的时间,单位 ns

- Runable 时间:RQ 队列的等待时间,单位 ns

- Switch 次数:CPU 调度切换次数

- utm:该线程在用户态所执行的时间,单位是 jiffies,jiffies 定义为 sysconf(_SC_CLK_TCK),默认等于 10ms

- stm:该线程在内核态所执行的时间,单位是 jiffies,默认等于 10ms

- 可见,该线程 Running=186667489018ns,也约等于 186667ms。在 CPU 运行时间包括用户态 (utm) 和内核态(stm)。 utm + stm = (12112 + 6554) ×10 ms = 186666ms。

结论:utm + stm = schedstat 第一个参数值。

二 ART信号捕获

2.1 SignalCatcher

SignalCatcher.cc

void* SignalCatcher::Run(void* arg) {

SignalCatcher* signal_catcher = reinterpret_cast<SignalCatcher*>(arg);

Runtime* runtime = Runtime::Current();

Thread* self = Thread::Current();

//当前进程状态处于非Runnable是

DCHECK_NE(self->GetState(), kRunnable);

{

MutexLock mu(self, signal_catcher->lock_);

signal_catcher->thread_ = self;

signal_catcher->cond_.Broadcast(self);

}

//设置需要handle的信号

SignalSet signals;

signals.Add(SIGQUIT); //信号3

signals.Add(SIGUSR1); //信号10

while (true) {

int signal_number = signal_catcher->WaitForSignal(self, signals);

if (signal_catcher->ShouldHalt()) {

runtime->DetachCurrentThread();

return nullptr;

}

switch (signal_number) {

case SIGQUIT:

//收到信号3 【见小节2.2】

signal_catcher->HandleSigQuit();

break;

case SIGUSR1:

signal_catcher->HandleSigUsr1();

break;

default:

LOG(ERROR) << "Unexpected signal %d" << signal_number;

break;

}

}

}

2.2 SignalCatcher::HandleSigQuit

signal_catcher.cc

void SignalCatcher::HandleSigQuit() {

Runtime* runtime = Runtime::Current();

std::ostringstream os;

os << "\n" << "----- pid " << getpid() << " at " << GetIsoDate() << " -----\n";

DumpCmdLine(os);

std::string fingerprint = runtime->GetFingerprint();

os << "Build fingerprint: '" << (fingerprint.empty() ?

"unknown" : fingerprint) << "'\n";

os << "ABI: '" << GetInstructionSetString(

runtime->GetInstructionSet()) << "'\n";

os << "Build type: " << (kIsDebugBuild ? "debug" : "optimized") << "\n";

// [见小节2.3]

runtime->DumpForSigQuit(os);

os << "----- end " << getpid() << " -----\n";

// [见小节3.7]

Output(os.str());

}

2.3 Runtime::DumpForSigQuit

runtime.cc

void Runtime::DumpForSigQuit(std::ostream& os) {

GetClassLinker()->DumpForSigQuit(os); //[见小节3.1]

GetInternTable()->DumpForSigQuit(os); //[见小节3.2]

GetJavaVM()->DumpForSigQuit(os); //[见小节3.3]

GetHeap()->DumpForSigQuit(os); //[见小节3.4]

TrackedAllocators::Dump(os);

os << "\n";

thread_list_->DumpForSigQuit(os); //[见小节3.5]

BaseMutex::DumpAll(os);

}

三 trace信息

3.1 ClassLinker

class_linker.cc

void ClassLinker::DumpForSigQuit(std::ostream& os) {

Thread* self = Thread::Current();

if (dex_cache_image_class_lookup_required_) {

ScopedObjectAccess soa(self);

MoveImageClassesToClassTable();

}

ReaderMutexLock mu(self, *Locks::classlinker_classes_lock_);

os << "Zygote loaded classes=" << pre_zygote_class_table_.Size()

<< " post zygote classes="

<< class_table_.Size() << "\n";

}

3.2 InternTable

intern_table.cc

void InternTable::DumpForSigQuit(std::ostream& os) const {

os << "Intern table: " << StrongSize() << " strong; "

<< WeakSize() << " weak\n";

}

3.3 JavaVMExt

java_vm_ext.cc

void JavaVMExt::DumpForSigQuit(std::ostream& os) {

os << "JNI: CheckJNI is " << (check_jni_ ? "on" : "off");

if (force_copy_) {

os << " (with forcecopy)";

}

Thread* self = Thread::Current();

{

ReaderMutexLock mu(self, globals_lock_);

os << "; globals=" << globals_.Capacity();

}

{

MutexLock mu(self, weak_globals_lock_);

if (weak_globals_.Capacity() > 0) {

os << " (plus " << weak_globals_.Capacity() << " weak)";

}

}

os << '\n';

{

MutexLock mu(self, *Locks::jni_libraries_lock_);

os << "Libraries: " << Dumpable<Libraries>(*libraries_) << " (" << libraries_->size() << ")\n";

}

}

3.4 Heap

heap.cc

void Heap::DumpForSigQuit(std::ostream& os) {

os << "Heap: " << GetPercentFree() << "% free,

" << PrettySize(GetBytesAllocated()) << "/"

<< PrettySize(GetTotalMemory()) << "; " << GetObjectsAllocated() <<

" objects\n";

DumpGcPerformanceInfo(os); //输出大量gc相关的信息

}

DumpGcPerformanceInfo() 这个方法的参数非常多,先省略,后续再单独用一篇文章来讲解。

3.5 ThreadList

thread_list.cc

void ThreadList::DumpForSigQuit(std::ostream& os) {

{

ScopedObjectAccess soa(Thread::Current());

if (suspend_all_historam_.SampleSize() > 0) {

Histogram<uint64_t>::CumulativeData data;

suspend_all_historam_.CreateHistogram(&data);

// Dump time to suspend.

suspend_all_historam_.PrintConfidenceIntervals(os, 0.99, data);

}

}

Dump(os); // [见小节3.5.1]

DumpUnattachedThreads(os); //[见小节3.5.2]

}

3.5.1 Dump

thread_list.cc

void ThreadList::Dump(std::ostream& os) {

{

MutexLock mu(Thread::Current(), *Locks::thread_list_lock_);

//输出当前进程的线程个数

os << "DALVIK THREADS (" << list_.size() << "):\n";

}

DumpCheckpoint checkpoint(&os);

//执行checkpoint检查

size_t threads_running_checkpoint = RunCheckpoint(&checkpoint);

if (threads_running_checkpoint != 0) {

checkpoint.WaitForThreadsToRunThroughCheckpoint(threads_running_checkpoint);

}

}

DALVIK THREADS (25) 代表的是当前虚拟机中的线程个数为 25. 另外, 此处 RunCheckpoint 方法比较重要,涉及进程的 suspend 状态问题。

3.5.2 DumpUnattachedThreads

thread_list.cc

void ThreadList::DumpUnattachedThreads(std::ostream& os) {

DIR* d = opendir("/proc/self/task");

if (!d) {

return;

}

Thread* self = Thread::Current();

dirent* e;

while ((e = readdir(d)) != nullptr) {

char* end;

pid_t tid = strtol(e->d_name, &end, 10);

if (!*end) {

bool contains;

{

MutexLock mu(self, *Locks::thread_list_lock_);

contains = Contains(tid);

}

if (!contains) {

DumpUnattachedThread(os, tid); //[见小节3.6]

}

}

}

closedir(d);

}

获取当前进程中所有的线程

3.6 DumpUnattachedThread

thread_list.cc

static void DumpUnattachedThread(std::ostream& os, pid_t tid)

NO_THREAD_SAFETY_ANALYSIS {

Thread::DumpState(os, nullptr, tid); //[见小节3.6.1]

DumpKernelStack(os, tid, " kernel: ", false); //[见小节3.6.2]

os << "\n";

}

将进程中的每个线程都执行一次该方法

3.6.1 Thread::DumpState

thread.cc

void Thread::DumpState(std::ostream& os, const Thread* thread, pid_t tid) {

std::string group_name;

int priority;

bool is_daemon = false;

Thread* self = Thread::Current();

if (thread != nullptr) {

... //若为空则不进入该分支

}

if (gAborting == 0 && self != nullptr && thread !=

nullptr && thread->tlsPtr_.opeer != nullptr) {

ScopedObjectAccessUnchecked soa(self);

priority = soa.DecodeField(WellKnownClasses::java_lang_Thread_priority)

->GetInt(thread->tlsPtr_.opeer);

is_daemon = soa.DecodeField(WellKnownClasses::java_lang_Thread_daemon)

->GetBoolean(thread->tlsPtr_.opeer);

mirror::Object* thread_group =

soa.DecodeField(WellKnownClasses::java_lang_Thread_group)->

GetObject(thread->tlsPtr_.opeer);

if (thread_group != nullptr) {

ArtField* group_name_field =

soa.DecodeField(WellKnownClasses::java_lang_ThreadGroup_name);

mirror::String* group_name_string =

reinterpret_cast<mirror::String*>

(group_name_field->GetObject(thread_group));

group_name = (group_name_string != nullptr) ?

group_name_string->ToModifiedUtf8() : "<null>";

}

} else {

priority = GetNativePriority();

}

std::string scheduler_group_name(GetSchedulerGroupName(tid));

if (scheduler_group_name.empty()) {

scheduler_group_name = "default";

}

//线程名,优先级,tid,线程状态

if (thread != nullptr) {

os << '"' << *thread->tlsPtr_.name << '"';

if (is_daemon) {

os << " daemon";

}

os << " prio=" << priority

<< " tid=" << thread->GetThreadId()

<< " " << thread->GetState(); //获取线程状态

if (thread->IsStillStarting()) {

os << " (still starting up)";

}

os << "\n";

//当线程为空指针,则输出not attached

} else {

os << '"' << ::art::GetThreadName(tid) << '"'

<< " prio=" << priority

<< " (not attached)\n";

}

if (thread != nullptr) {

MutexLock mu(self, *Locks::thread_suspend_count_lock_);

os << " | group=\"" << group_name << "\""

<< " sCount=" << thread->tls32_.suspend_count

<< " dsCount=" << thread->tls32_.debug_suspend_count

<< " obj=" << reinterpret_cast<void*>(thread->tlsPtr_.opeer)

<< " self=" << reinterpret_cast<const void*>(thread) << "\n";

}

os << " | sysTid=" << tid

<< " nice=" << getpriority(PRIO_PROCESS, tid)

<< " cgrp=" << scheduler_group_name;

if (thread != nullptr) {

int policy;

sched_param sp;

CHECK_PTHREAD_CALL(pthread_getschedparam,

(thread->tlsPtr_.pthread_self, &policy, &sp), __FUNCTION__);

os << " sched=" << policy << "/" << sp.sched_priority

<< " handle=" << reinterpret_cast<void*>(thread->tlsPtr_.pthread_self);

}

os << "\n";

std::string scheduler_stats;

//读取节点/proc/self/task/%d/schedstat

if (ReadFileToString(StringPrintf("/proc/self/task/%d/schedstat", tid),

&scheduler_stats)) {

scheduler_stats.resize(scheduler_stats.size() - 1);

} else {

scheduler_stats = "0 0 0";

}

char native_thread_state = '?';

int utime = 0;

int stime = 0;

int task_cpu = 0;

// 读取节点/proc/self/task/%d/stat

GetTaskStats(tid, &native_thread_state, &utime, &stime, &task_cpu);

os << " | state=" << native_thread_state

<< " schedstat=( " << scheduler_stats << " )"

<< " utm=" << utime

<< " stm=" << stime

<< " core=" << task_cpu

<< " HZ=" << sysconf(_SC_CLK_TCK) << "\n";

if (thread != nullptr) {

os << " | stack=" << reinterpret_cast<void*>(thread->tlsPtr_.stack_begin) << "-"

<< reinterpret_cast<void*>(thread->tlsPtr_.stack_end) << " stackSize="

<< PrettySize(thread->tlsPtr_.stack_size) << "\n";

os << " | held mutexes=";

for (size_t i = 0; i < kLockLevelCount; ++i) {

if (i != kMonitorLock) {

BaseMutex* mutex = thread->GetHeldMutex(static_cast<LockLevel>(i));

if (mutex != nullptr) {

os << " \"" << mutex->GetName() << "\"";

if (mutex->IsReaderWriterMutex()) {

ReaderWriterMutex* rw_mutex = down_cast<ReaderWriterMutex*>(mutex);

if (rw_mutex->GetExclusiveOwnerTid() == static_cast<uint64_t>(tid)) {

os << "(exclusive held)";

} else {

os << "(shared held)";

}

}

}

}

}

os << "\n";

}

}

3.6.2 DumpKernelStack

art/runtime/utils.cc

ame, &kernel_stack)) {

os << prefix << "(couldn't read " << kernel_stack_filename << ")\n";

return;

}

std::vector<std::string> kernel_stack_frames;

Split(kernel_stack, '\n', &kernel_stack_frames);

kernel_stack_frames.pop_back();

for (size_t i = 0; i < kernel_stack_frames.size(); ++i) {

const char* text = kernel_stack_frames[i].c_str();

const char* close_bracket = strchr(text, ']');

if (close_bracket != nullptr) {

text = close_bracket + 2;

}

os << prefix;

if (include_count) {

os << StringPrintf("#%02zd ", i);

}

os << text << "\n";

}

}

内核栈是通过读取节点/proc/self/task/[tid]/stack

3.7 Output

signal_catcher.cc

void SignalCatcher::Output(const std::string& s) {

if (stack_trace_file_.empty()) {

LOG(INFO) << s;

return;

}

ScopedThreadStateChange tsc(Thread::Current(), kWaitingForSignalCatcherOutput);

// 以追加的方式输出文件

int fd = open(stack_trace_file_.c_str(), O_APPEND | O_CREAT | O_WRONLY, 0666);

if (fd == -1) {

PLOG(ERROR) << "Unable to open stack trace file '" << stack_trace_file_ << "'";

return;

}

std::unique_ptr<File> file(new File(fd, stack_trace_file_, true));

bool success = file->WriteFully(s.data(), s.size());

if (success) {

success = file->FlushCloseOrErase() == 0;

} else {

file->Erase();

}

if (success) {

// 输出该log, 则代表traces信息已完整的输出到文件

LOG(INFO) << "Wrote stack traces to '" << stack_trace_file_ << "'";

} else {

PLOG(ERROR) << "Failed to write stack traces to '" << stack_trace_file_ << "'";

}

}

[小节2.2] 执行完 DumpForSigQuit() 后,便会执行 SignalCatcher::Output() 方法。将前面所有生成的信息一并输出到文件 /data/anr/traces.txt。

2840

2840

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?