Apache Airflow: Dynamic DAGs

1. DAG

1.1 Tutorial

# [START tutorial]
# [START import_module]
from datetime import datetime, timedelta
from textwrap import dedent

# The DAG object; we'll need this to instantiate a DAG
from airflow import DAG

# Operators; we need this to operate!
from airflow.operators.bash import BashOperator

# [END import_module]

# [START instantiate_dag]
with DAG(
    'tutorial',
    # [START default_args]
    # These args will get passed on to each operator
    # You can override them on a per-task basis during operator initialization
    default_args={
        'depends_on_past': False,
        'email': ['airflow@example.com'],
        'email_on_failure': False,
        'email_on_retry': False,
        'retries': 1,
        'retry_delay': timedelta(minutes=5),
        # 'queue': 'bash_queue',
        # 'pool': 'backfill',
        # 'priority_weight': 10,
        # 'end_date': datetime(2016, 1, 1),
        # 'wait_for_downstream': False,
        # 'sla': timedelta(hours=2),
        # 'execution_timeout': timedelta(seconds=300),
        # 'on_failure_callback': some_function,
        # 'on_success_callback': some_other_function,
        # 'on_retry_callback': another_function,
        # 'sla_miss_callback': yet_another_function,
        # 'trigger_rule': 'all_success'
    },
    # [END default_args]
    description='A simple tutorial DAG',
    schedule_interval=timedelta(days=1),
    start_date=datetime(2021, 1, 1),
    catchup=False,
    tags=['example'],
) as dag:
    # [END instantiate_dag]

    # t1, t2 and t3 are examples of tasks created by instantiating operators
    # [START basic_task]
    t1 = BashOperator(
        task_id='print_date',
        bash_command='date',
    )

    t2 = BashOperator(
        task_id='sleep',
        depends_on_past=False,
        bash_command='sleep 5',
        retries=3,
    )
    # [END basic_task]

    # [START documentation]
    t1.doc_md = dedent(
        """\
    #### Task Documentation
    You can document your task using the attributes `doc_md` (markdown),
    `doc` (plain text), `doc_rst`, `doc_json`, `doc_yaml` which gets
    rendered in the UI's Task Instance Details page.
    """
    )

    dag.doc_md = __doc__  # providing that you have a docstring at the beginning of the DAG
    dag.doc_md = """
    This is a documentation placed anywhere
    """  # otherwise, type it like this
    # [END documentation]

    # [START jinja_template]
    templated_command = dedent(
        """
    {% for i in range(5) %}
        echo "{{ ds }}"
        echo "{{ macros.ds_add(ds, 7)}}"
    {% endfor %}
    """
    )

    t3 = BashOperator(
        task_id='templated',
        depends_on_past=False,
        bash_command=templated_command,
    )
    # [END jinja_template]

    t1 >> [t2, t3]
# [END tutorial]

1.2 Dynamic DAG

import time
from datetime import datetime, timedelta

import pymssql
from airflow import DAG
from airflow.models.taskinstance import TaskInstance
from airflow.operators.python import PythonOperator
from kafka_connect import KafkaConnect


# Placeholder callbacks: the DAG below references these names, but their
# bodies are not shown in this post, so these versions are assumptions
# that only print the task id.
def failure_callback(context):
    print("Task failed:", context["task_instance"].task_id)


def success_callback(context):
    print("Task succeeded:", context["task_instance"].task_id)


with DAG(
    dag_id='hello_world',
    default_args={
        'owner': 'Nan',
        'depends_on_past': False,
        'email': ['nolan@163.com'],
        'email_on_failure': True,
        'email_on_retry': True,
        # 'retries': 0,
        # 'retry_delay': timedelta(minutes=5),
        # 'queue': 'bash_queue',
        # 'pool': 'backfill',
        # 'priority_weight': 10,
        # 'end_date': datetime(2016, 1, 1),
        # 'wait_for_downstream': False,
        # 'sla': timedelta(hours=2),
        # 'execution_timeout': timedelta(seconds=300),
        'on_failure_callback': failure_callback,
        'on_success_callback': success_callback,
        # 'on_retry_callback': another_function,
        # 'sla_miss_callback': yet_another_function,
        # 'trigger_rule': 'all_success'
    },
    description='A simple template',
    schedule_interval=timedelta(days=1),
    start_date=datetime(2021, 1, 1),
    catchup=False,
    tags=['example'],
    concurrency=10,
) as dag:

    # Connect to the database and fetch the rows
    def getConnStatus():
        conn = pymssql.connect(host="xxxx", database='xxx', user='xxxx', password='xxxx')
        cursor = conn.cursor()
        cursor.execute("select * from xxxxx")
        results = cursor.fetchall()
        # Mock data
        # results = [(1, 'abc', 'xxx', 'xxx', 'xxx'), (2, 'bcd', 'xxx', 'xxx', 'xxx'), (3, 'xyz', 'mssql.class', 'kafka_conn', 'key_source')]
        return results

    def execFunction(**kwargs):
        # The row passed in through op_kwargs
        everyConn = kwargs.get("connMsg")
        # Convert the row to a string and slice off the extra "(" and ",)".
        # This slice fits a one-column row such as ('a,b,c',); adjust it to your data.
        conn = str(everyConn)[2:-3]
        # Split the fields by index
        allMsg = conn.split(",")
        xxx = allMsg[0]
        xxx = allMsg[1]
        xxx = allMsg[2]

    # Call the method, then iterate over the rows and create one task per row
    results = getConnStatus()
    for item in results:
        name = item[1]
        print(name)  # abc
        op = PythonOperator(
            task_id="monitor_conn_{}".format(name),
            python_callable=execFunction,
            op_kwargs={'connMsg': item},
            dag=dag,
        )
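The loop above formats database values straight into `task_id`. Airflow only accepts task IDs made of alphanumeric characters, dashes, dots and underscores, and a DAG cannot hold two tasks with the same ID, so it can help to prepare the IDs before creating the operators. The following is a minimal sketch under the assumption that rows look like the mock data (the name in column 1); `safe_task_id` and `build_task_specs` are hypothetical helper names, not Airflow APIs.

```python
import re


def safe_task_id(prefix, name):
    """Build a task_id that only contains characters Airflow accepts."""
    # Replace anything that is not a letter, digit, underscore, dot or dash.
    cleaned = re.sub(r"[^A-Za-z0-9_.-]", "_", str(name).strip())
    return "{}_{}".format(prefix, cleaned)


def build_task_specs(rows, prefix="monitor_conn"):
    """Turn query rows into (task_id, op_kwargs) pairs, skipping duplicate IDs."""
    specs = []
    seen = set()
    for row in rows:
        task_id = safe_task_id(prefix, row[1])
        if task_id in seen:
            continue  # a DAG cannot contain two tasks with the same task_id
        seen.add(task_id)
        # Pass the fields as a list so the callable can index them directly.
        specs.append((task_id, {"connMsg": list(row)}))
    return specs


# Mock rows in the same shape as the commented-out sample data.
rows = [(1, "abc", "xxx"), (2, "b cd", "xxx"), (3, "abc", "xxx")]
for task_id, op_kwargs in build_task_specs(rows):
    print(task_id, op_kwargs)
```

Each pair can then be fed to `PythonOperator(task_id=task_id, python_callable=execFunction, op_kwargs=op_kwargs, dag=dag)` inside the loop, and the callable can read `connMsg[0]`, `connMsg[1]` and so on instead of slicing a string.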

1.3 Parse Trigger config

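- Using the CLI

airflow trigger_dag 'example_dag_conf' -r 'run_id' --conf '{"message":"value"}'

(This is the Airflow 1.x form of the command; in Airflow 2.x the equivalent is `airflow dags trigger`.)

- Using the UI

Either way, the task reads the value from `dag_run.conf`:

def parse_config(**kwargs):
    param = kwargs['dag_run'].conf['message']
    print(param)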
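Reading `conf['message']` directly raises an error when the DAG run was started without a config or without that key. A small sketch of a more forgiving lookup follows; `read_conf` is a hypothetical helper, and the `SimpleNamespace` objects only stand in for the `dag_run` object that Airflow places in the task context.

```python
from types import SimpleNamespace


def read_conf(dag_run, key, default=None):
    """Return dag_run.conf[key], or default when there is no conf or no such key."""
    conf = getattr(dag_run, "conf", None) or {}
    return conf.get(key, default)


def parse_config(**kwargs):
    param = read_conf(kwargs.get("dag_run"), "message", default="no message")
    print(param)
    return param


# Stand-ins for the dag_run object found in the task context.
triggered_with_conf = SimpleNamespace(conf={"message": "value"})
run_without_conf = SimpleNamespace(conf=None)

parse_config(dag_run=triggered_with_conf)
parse_config(dag_run=run_without_conf)
```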

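2. Executing a JAR from a DAG

- Running a JAR from Python

Python execute jar: https://blog.csdn.net/weixin_43916074/article/details/128328888.

- Starting the JVM again after it has been shut down fails with: OSError: JVM cannot be restarted

Workaround for local testing: pull the startJVM code out into its own function so that it runs only once.

Running on a DAG, on the other hand, does not hit this problem, because there are two task IDs.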

import os

import jpype
import pymssql


def getConnStatus():
    conn = pymssql.connect(host="xxx.xxx.xx.xxx", database='xxx', user='xxx', password='xxx')
    cursor = conn.cursor()
    cursor.execute("select * from xxx")
    results = cursor.fetchall()
    return results


def getJVMClass():
    # 1. Path to the JAR
    path = 'E:\\xxx\\airflow\\xxx\\xxxx-0.0.1-SNAPSHOT.jar'
    jar_path = os.path.join(os.path.abspath("."), path)
    # 2. Get the path to jvm.dll
    jvmPath = jpype.getDefaultJVMPath()
    print(jvmPath)
    # Start the JVM (only once per process)
    jpype.startJVM(jvmPath, "-ea", "-Djava.class.path=%s" % jar_path)
    DataCheckClass = jpype.JClass("com.xxxx.controller.DataCheckController")
    return DataCheckClass()


if __name__ == '__main__':
    # Start the JVM once, before the loop
    datacheck = getJVMClass()
    print("start jvm")
    results = getConnStatus()
    for item in results:
        systemName = item[1]
        pipelineType = item[2]
        if pipelineType == 'DB':
            task_id = "Check_Pipeline_{}".format(systemName)
            sourceConf = item[3]
            targetConf = item[4]
            count = datacheck.compareTableDiff(str(sourceConf), str(targetConf))
            print(count)
    # Shut the JVM down once, after all rows have been processed
    jpype.shutdownJVM()
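The "start only once" idea can also be expressed as a guard around `startJVM`. This is a minimal sketch: `ensure_jvm_started` is a hypothetical helper that takes the `jpype` module as an argument and relies on `jpype.isJVMStarted()`. The `FakeJVM` class is only a stand-in so the sketch runs without Java installed.

```python
def ensure_jvm_started(jvm, jar_path):
    """Start the JVM only if it is not running yet.

    `jvm` is expected to be the jpype module, or any object exposing the same
    isJVMStarted / getDefaultJVMPath / startJVM functions.
    Returns True if this call started the JVM, False if it was already running.
    """
    if jvm.isJVMStarted():
        return False
    jvm.startJVM(jvm.getDefaultJVMPath(), "-ea", "-Djava.class.path=%s" % jar_path)
    return True


class FakeJVM:
    """Stand-in for the jpype module, used only to exercise the guard."""

    def __init__(self):
        self.started = False
        self.start_calls = 0

    def isJVMStarted(self):
        return self.started

    def getDefaultJVMPath(self):
        return "/path/to/jvm"

    def startJVM(self, *args):
        self.started = True
        self.start_calls += 1


fake = FakeJVM()
print(ensure_jvm_started(fake, "app.jar"))  # first call starts the JVM
print(ensure_jvm_started(fake, "app.jar"))  # second call is a no-op
print(fake.start_calls)
```

With the real library the call would be `ensure_jvm_started(jpype, jar_path)`. The guard only prevents a second start; it does not make a restart possible, so `jpype.shutdownJVM()` should still be left until the process is done with Java.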

3. Force failure

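from airflow.exceptions import AirflowFailException
from airflow.operators.python import PythonOperator


def task_to_fail():
    raise AirflowFailException("Force airflow to fail here!")


# Inside execScript, call task_to_fail() depending on the result returned by some method.
# execScript, connName, s_num and dag are defined elsewhere in the DAG file.
op = PythonOperator(
    task_id="Check_conn_{}".format(connName),
    python_callable=execScript,
    op_kwargs={'s_num': s_num},
    dag=dag,
)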
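`AirflowFailException` marks the task as failed without going through the remaining retries. The following sketch shows one way `execScript` could decide when to call `task_to_fail()`. The check itself (`check_count`, treating a non-zero `s_num` as a failure) is an assumption made for illustration, and the fallback exception class exists only so the sketch can run outside an Airflow environment.

```python
try:
    from airflow.exceptions import AirflowFailException
except ImportError:
    # Fallback so the sketch can run where Airflow is not installed.
    class AirflowFailException(Exception):
        pass


def task_to_fail():
    raise AirflowFailException("Force airflow to fail here!")


def check_count(s_num):
    """Hypothetical check: any non-zero count is treated as a failure."""
    return int(s_num) == 0


def execScript(**kwargs):
    # Fail the task explicitly when the check does not pass.
    if not check_count(kwargs["s_num"]):
        task_to_fail()
    return "ok"


print(execScript(s_num=0))
try:
    execScript(s_num=3)
except AirflowFailException as exc:
    print("task would be marked failed:", exc)
```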

4. Dag

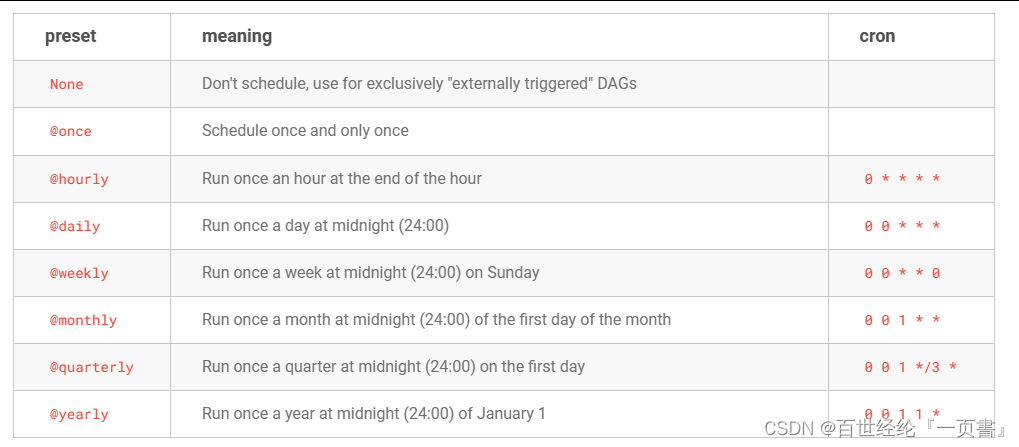

4.1 Cron

- Presets provided officially

- You can also define your own expression; see below
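As a quick reference, the sketch below lists the common Airflow cron presets next to the cron expressions they stand for, and passes any custom five-field expression through unchanged. `CRON_PRESETS` and `to_cron` are hypothetical names used for illustration; Airflow resolves these presets itself when one is given as the schedule.

```python
# Common Airflow schedule presets and the cron expressions they correspond to.
CRON_PRESETS = {
    "@hourly": "0 * * * *",
    "@daily": "0 0 * * *",
    "@weekly": "0 0 * * 0",
    "@monthly": "0 0 1 * *",
    "@yearly": "0 0 1 1 *",
}


def to_cron(schedule):
    """Return the five-field cron expression for a preset or a custom expression."""
    expr = CRON_PRESETS.get(schedule, schedule)
    if len(expr.split()) != 5:
        raise ValueError("expected a known preset or a five-field cron expression: %r" % schedule)
    return expr


print(to_cron("@daily"))
print(to_cron("30 2 * * 1-5"))  # custom: 02:30 on weekdays
```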

4.2 start date

- Hard-code the start date, then set the schedule

- Solution
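When a start date is hard-coded, it helps to remember that an interval-based schedule triggers a run at the end of the interval it covers, so the first run fires at `start_date + interval`, not at `start_date`. With `catchup=False`, the scheduler only creates a run for the most recent interval. The sketch below works this out with plain `datetime` arithmetic; the function names are hypothetical and it assumes a fixed `timedelta` schedule like the one used in the DAGs above.

```python
from datetime import datetime, timedelta


def first_run(start_date, interval):
    """Return (interval start, earliest trigger time) of the first scheduled run."""
    return start_date, start_date + interval


def latest_complete_interval(start_date, interval, now):
    """Return (begin, end) of the most recent finished interval, or None if none yet."""
    completed = (now - start_date) // interval
    if completed < 1:
        return None
    begin = start_date + (completed - 1) * interval
    return begin, begin + interval


start = datetime(2021, 1, 1)
daily = timedelta(days=1)

print(first_run(start, daily))
print(latest_complete_interval(start, daily, datetime(2021, 1, 3, 12, 0)))
```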

5. Waken

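Those who see the essence of things in a second and those who spend half a lifetime without seeing the essence of a single thing naturally have different fates.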
