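This article covers visualization for PyTorch in three parts: TensorBoard, torchinfo, and the profiler.

1. TensorBoard Usage Guide

As deep learning has developed, network models have become increasingly complex, and it is hard to keep track of the input and output shapes of every layer. A clear view of the model structure and of how the data changes helps us develop models more efficiently. TensorBoard was built for exactly this: it saves the data you care about to a folder while your code runs, then reads that folder and displays the data in a browser. Besides visualizing model structure, TensorBoard can record and plot the loss during training and visualize images, continuous variables, parameter distributions, and more. It is both a record keeper and a painter.

1.1 Installation

Run the following command on the command line to install it: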

pip install tensorboardX

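You can also use the TensorBoard support built into PyTorch (torch.utils.tensorboard). In that case tensorboardX is not required, but the tensorboard package itself still has to be installed (pip install tensorboard).

1.2 Launching

Using TensorBoard involves the following steps:

- Create a writer

# Option 1: install and use tensorboardX

from tensorboardX import SummaryWriter

# Option 2: use the TensorBoard support built into PyTorch

from torch.utils.tensorboard import SummaryWriter

# Create the writer

log_dir = '/path/to/logs'  # output path for the log files; choose any path you like

writer = SummaryWriter(log_dir)

- Write and save the log file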

writer.add_graph(model, input_to_model=torch.rand(1, 3, 512, 512))

writer.close()

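- In a command line for the corresponding environment, run the following command to launch TensorBoard

tensorboard --logdir=/path/to/logs --port=xxxx  # "/path/to/logs" matches the log_dir used above

# port is the port number used to reach TensorBoard from outside; it only needs to be set when TensorBoard runs on a remote server

- Open the visualization page

Copy the URL printed in step 3 and open it in a browser: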

http://localhost:6006/

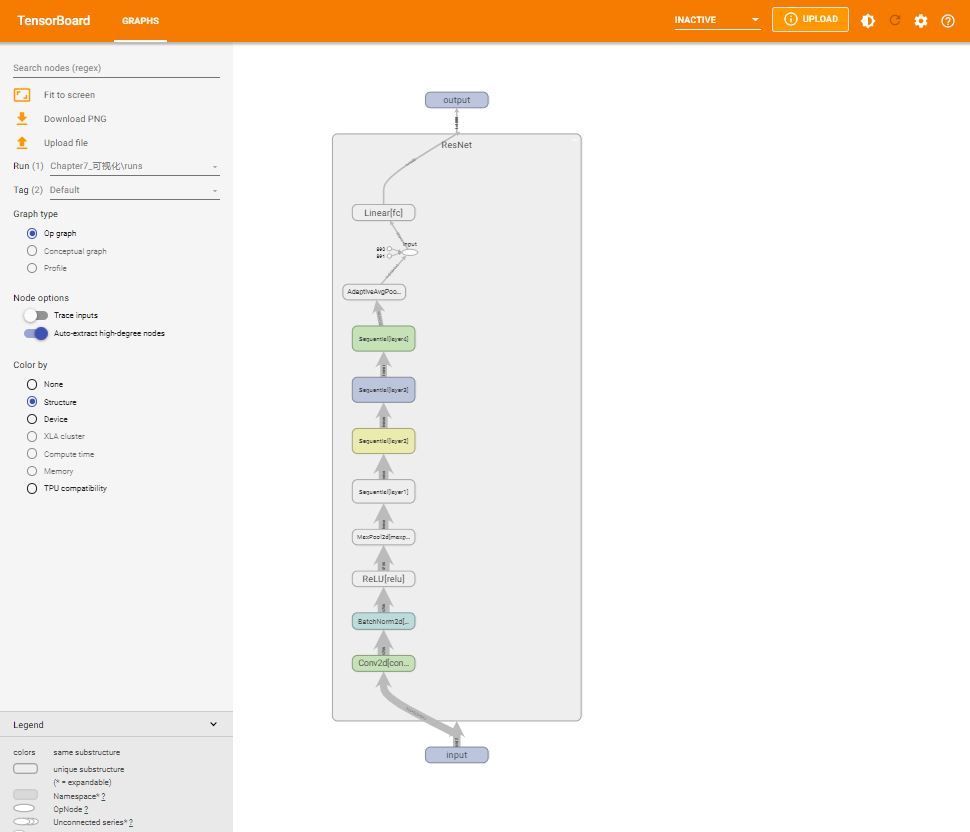
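As a small illustration of the launch step, the sketch below assembles the TensorBoard command line from Python. The helper name tensorboard_command is hypothetical and only builds the argument list; it assumes the two flags used in this section, --logdir (written as one word) and --port.

```python
import shlex


def tensorboard_command(logdir, port=None):
    """Build the argument list for launching TensorBoard (hypothetical helper)."""
    args = ["tensorboard", f"--logdir={logdir}"]
    if port is not None:
        # Only needed when TensorBoard is reached from another machine.
        args.append(f"--port={port}")
    return args


cmd = tensorboard_command("./runs", port=6006)
print(shlex.join(cmd))  # the command as it would be typed in a shell
```

The resulting list can be handed to subprocess.Popen(cmd) if you prefer to start TensorBoard from a script instead of typing the command by hand.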

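As an example, suppose we want to inspect the structure of the resnet34 model.

Step 1: download the resnet model:

import torchvision.models as models

import os

os.environ['TORCH_HOME'] = r'E:\pytorch\Data'  # change where models are saved; after the download, later loads read from this directory

# pretrained=True downloads and uses the pretrained weights; False means the pretrained weights are not used (newer torchvision releases prefer the weights= argument)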

resnet34 = models.resnet34(pretrained=True)

print(resnet34)

ResNet(

(conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

(layer1): Sequential(

(0): BasicBlock(

(conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(1): BasicBlock(

(conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(2): BasicBlock(

(conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(layer2): Sequential(

(0): BasicBlock(

(conv1): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(downsample): Sequential(

(0): Conv2d(64, 128, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

...

(avgpool): AdaptiveAvgPool2d(output_size=(1, 1))

(fc): Linear(in_features=512, out_features=1000, bias=True)

)

Step 2: create the writer and write the log file:

import torch

import torch.nn as nn

# Option 1: install and use tensorboardX

from tensorboardX import SummaryWriter

# Option 2: use the TensorBoard support built into PyTorch

# from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter('./runs')

writer.add_graph(resnet34, input_to_model=torch.rand(1, 3, 512, 512))

writer.close()

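Step 3: in the Anaconda Prompt for the current environment, run the following command to launch TensorBoard:

tensorboard --logdir=runs

Step 4: copy the URL printed by the previous command and paste it into a browser to view TensorBoard:

Double-click a module to expand it. For more details, see this blog post.

1.3 Image Visualization

TensorBoard makes it easy to display images.

- Single image: add_image

- Multiple images: add_images

- Many in one: first tile multiple images into one with torchvision.utils.make_grid, then display the result with writer.add_image

Using the CIFAR10 dataset from torchvision as an example: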

import torchvision

from tensorboardX import SummaryWriter

from torchvision import datasets, transforms

from torch.utils.data import DataLoader

transform1 = transforms.Compose([transforms.ToTensor()])

train_data = datasets.CIFAR10(".", train=True, download=True, transform=transform1)

test_data = datasets.CIFAR10(".", train=False, download=True, transform=transform1)

batch_size = 256

train_loader = DataLoader(train_data, batch_size=batch_size, shuffle=True)

test_loader = DataLoader(test_data, batch_size=batch_size)

images, labels = next(iter(train_loader))

print(images.shape, labels.shape)

# View a single image

writer = SummaryWriter('./picture')

writer.add_image('images[0]', images[0])

writer.close()

Files already downloaded and verified

Files already downloaded and verified

torch.Size([256, 3, 32, 32]) torch.Size([256])

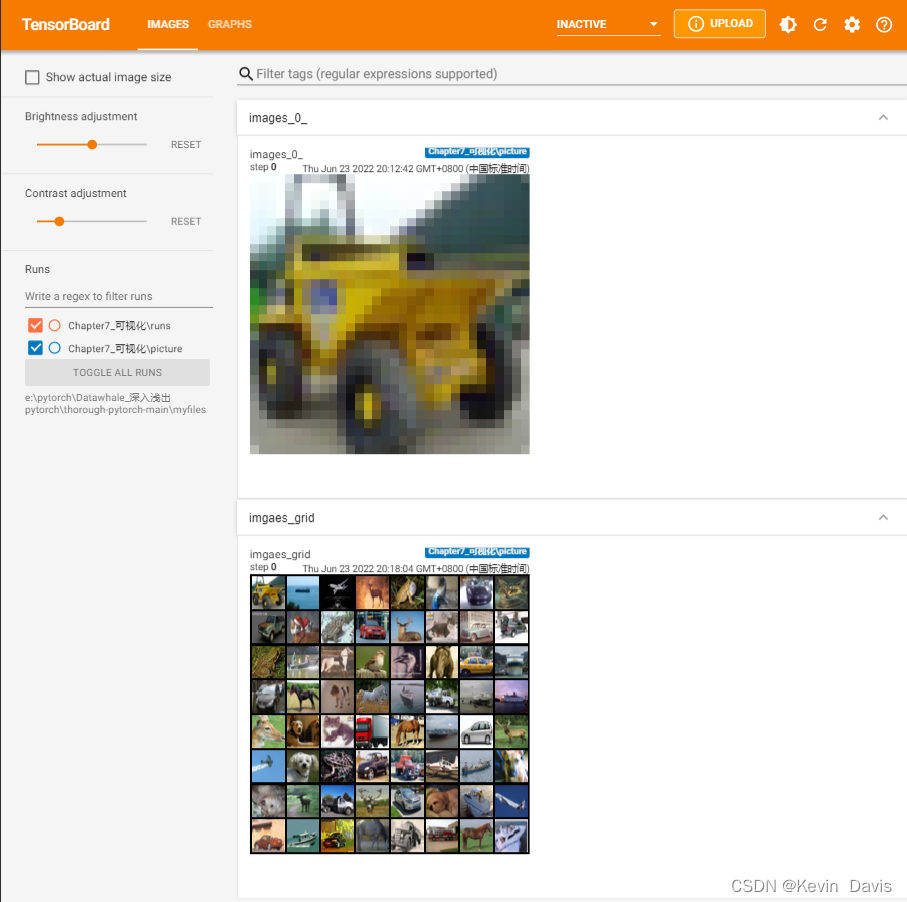

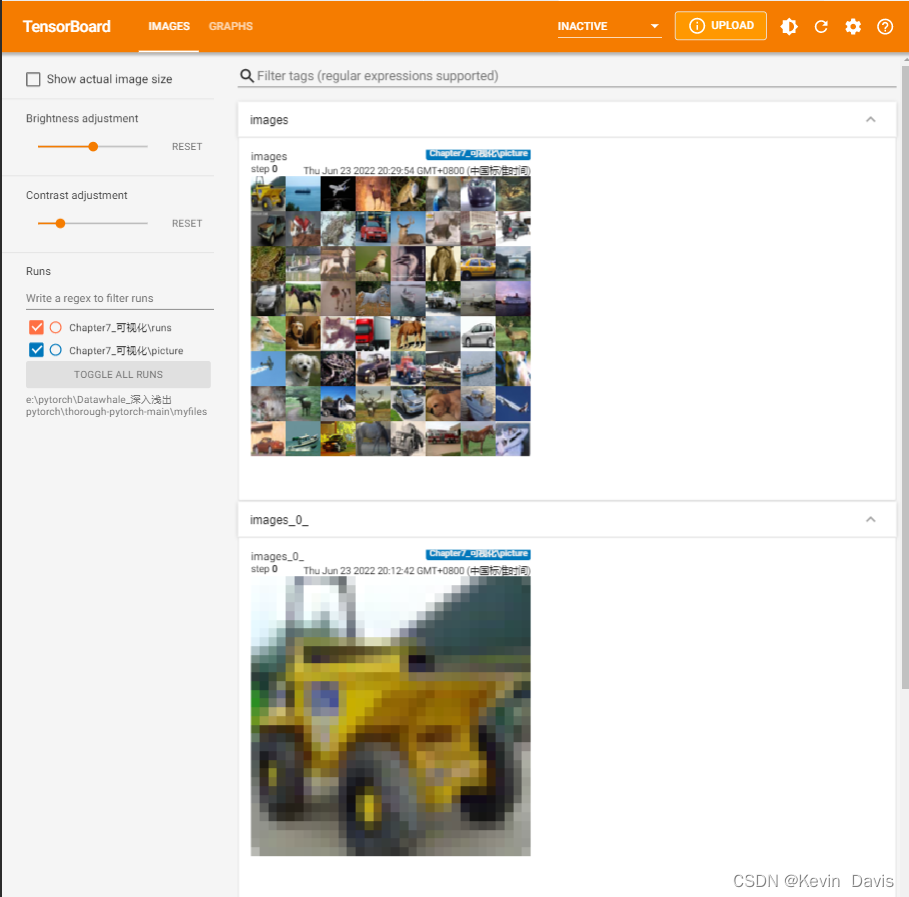

Tile multiple images into a single image, separated by a black grid:

writer = SummaryWriter('./picture')

img_grid = torchvision.utils.make_grid(images)

writer.add_image('images_grid', img_grid)

writer.close()
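To get a feel for what make_grid produces, the sketch below computes the shape of the tiled image by hand. It assumes make_grid's default arguments nrow=8 and padding=2 and a batch of more than one image; grid_shape is a hypothetical helper, not part of torchvision.

```python
import math


def grid_shape(n_images, channels, height, width, nrow=8, padding=2):
    """Shape (C, H, W) of the tiled image for a batch of n_images > 1.

    Assumes make_grid's defaults: up to `nrow` images per row and a
    `padding`-pixel border around every tile.
    """
    cols = min(nrow, n_images)
    rows = math.ceil(n_images / cols)
    grid_h = rows * (height + padding) + padding
    grid_w = cols * (width + padding) + padding
    return (channels, grid_h, grid_w)


# One CIFAR10 batch from the loader above: 256 images of 3 x 32 x 32.
print(grid_shape(256, 3, 32, 32))  # (3, 1090, 274)
```

Under these assumptions the 256-image batch becomes a tall strip 8 images wide and 32 images high, which is why the grid in TensorBoard looks much taller than it is wide.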

Write multiple images directly:

writer = SummaryWriter('./picture')

writer.add_images('images', images, global_step=0)

writer.close()

Next, we modify the output layer of resnet34 and run the CIFAR10 classification task:

import numpy as np
import time

device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
print('Device: ', device)

model = resnet34
model.fc = nn.Linear(512, 10)  # replace the final layer so the model outputs the 10 CIFAR10 classes
model = model.to(device)

writer = SummaryWriter('./runs')
writer.add_graph(model, input_to_model=images.to(device))
writer.close()

loss_fn = nn.CrossEntropyLoss()
lr = 1e-3
max_epochs = 5
optimizer = torch.optim.Adam(model.parameters(), lr=lr)
scheduler = torch.optim.lr_scheduler.StepLR(optimizer=optimizer, step_size=5, gamma=0.8)

def train(max_epochs, save_dir):
    writer = SummaryWriter(save_dir)
    for epoch in range(max_epochs):
        # Training pass
        model.train()
        train_loss, val_loss = 0, 0
        pred_label, true_label = [], []
        start_time = time.time()
        for images, labels in train_loader:
            images = images.to(device)
            labels = labels.to(device)
            optimizer.zero_grad()
            out = model(images)
            pred = torch.argmax(out, 1)
            loss = loss_fn(out, labels)
            loss.backward()
            optimizer.step()
            train_loss += loss.item() * images.size(0)  # weight the batch-mean loss by the batch size
            pred_label.append(pred.cpu().numpy())
            true_label.append(labels.cpu().numpy())
        train_loss = train_loss / len(train_loader.dataset)
        true_label, pred_label = np.concatenate(true_label), np.concatenate(pred_label)
        train_acc = np.sum(true_label == pred_label) / len(pred_label)
        scheduler.step()
        lr0 = scheduler.get_last_lr()
        end_time = time.time()
        cost_time = end_time - start_time
        writer.add_scalar('train_loss', train_loss, epoch)
        writer.add_scalar('learning rate', lr0[-1], epoch)
        writer.add_scalar('Train_acc', train_acc, epoch)

        # Validation pass on the held-out test set
        # (iterating train_loader here would only re-measure the training data)
        model.eval()
        with torch.no_grad():
            pred_labels, true_labels = [], []
            for images, labels in test_loader:
                images = images.to(device)
                labels = labels.to(device)
                out = model(images)
                pred = torch.argmax(out, 1)
                loss = loss_fn(out, labels)
                val_loss += loss.item() * images.size(0)
                pred_labels.append(pred.cpu().numpy())
                true_labels.append(labels.cpu().numpy())
            val_loss = val_loss / len(test_loader.dataset)
            true_labels, pred_labels = np.concatenate(true_labels), np.concatenate(pred_labels)
            val_acc = np.sum(true_labels == pred_labels) / len(pred_labels)
        writer.add_scalar('Val_loss', val_loss, epoch)
        writer.add_scalar('Val_acc', val_acc, epoch)
        print('Epoch: {}, Train_loss: {:.6f}, Train_acc:{:.2f}%, Val_loss: {:.6f}, Val_acc: {:.2f}%, time: {:.3f}s'.format(
            epoch, train_loss, train_acc*100, val_loss, val_acc*100, cost_time))
    # Close the writer once, after the last epoch has been logged
    writer.close()

Device: cuda:0
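The training loop multiplies each batch loss by images.size(0) before dividing by the dataset size. The sketch below, in plain Python with made-up loss values, shows why: nn.CrossEntropyLoss returns a per-batch mean by default, and the last batch is usually smaller, so a plain average over batches would over-weight it. dataset_mean_loss is a hypothetical helper used only for illustration.

```python
def dataset_mean_loss(batch_mean_losses, batch_sizes):
    """Per-sample mean loss recovered from per-batch mean losses."""
    total = sum(loss * n for loss, n in zip(batch_mean_losses, batch_sizes))
    return total / sum(batch_sizes)


# 50,000 CIFAR10 training images with batch_size=256:
# 195 full batches plus a final batch of 80 images.
batch_sizes = [256] * 195 + [80]
# Illustrative values only: the small last batch happens to have a higher loss.
batch_losses = [1.0] * 195 + [2.0]

weighted = dataset_mean_loss(batch_losses, batch_sizes)
naive = sum(batch_losses) / len(batch_losses)
print(f"sample-weighted mean: {weighted:.4f}")
print(f"plain mean over batches: {naive:.4f}")
```

The difference is small here, but the sample-weighted form stays correct for any batch size, which makes curves from runs with different batch sizes comparable in TensorBoard.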

%%time

import datetime

t0 = datetime.datetime.now().strftime('%Y-%m-%d-%H-%M')

save_dir = './runs/' + t0 + '/'

if not os.path.isdir(save_dir):

    os.makedirs(save_dir)

print('save_dir: ', save_dir)

train(max_epochs=max_epochs, save_dir=save_dir)

save_dir: ./runs/2022-06-24-12-20/

Epoch: 0, Train_loss: 0.137615, Train_acc:95.85, Val_loss: 0.066162, Val_acc: 97.97%, time: 50.250s

Epoch: 1, Train_loss: 0.080220, Train_acc:97.26, Val_loss: 0.066573, Val_acc: 97.76%, time: 53.099s

Epoch: 2, Train_loss: 0.069566, Train_acc:97.70, Val_loss: 0.065918, Val_acc: 97.72%, time: 49.805s

Epoch: 3, Train_loss: 0.069826, Train_acc:97.61, Val_loss: 0.074443, Val_acc: 97.47%, time: 54.373s

Epoch: 4, Train_loss: 0.065152, Train_acc:97.75, Val_loss: 0.065349, Val_acc: 97.85%, time: 55.126s

CPU times: total: 5min 52s

Wall time: 6min 23s
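The 'learning rate' scalar written by the training loop follows the StepLR schedule. As a rough illustration, assuming the usual closed form lr = base_lr * gamma ** (steps // step_size), the hypothetical helper below (not part of PyTorch) reproduces what scheduler.get_last_lr() would report with step_size=5 and gamma=0.8:

```python
def step_lr(base_lr, step_size, gamma, steps_taken):
    """Closed form of a StepLR-style schedule after `steps_taken` scheduler steps."""
    return base_lr * gamma ** (steps_taken // step_size)


# In the training loop, scheduler.step() runs once at the end of every epoch,
# so the value logged for epoch e is the rate after e + 1 steps.
logged_lrs = [
    step_lr(1e-3, step_size=5, gamma=0.8, steps_taken=epoch + 1)
    for epoch in range(5)
]
for epoch, value in enumerate(logged_lrs):
    print(f"epoch {epoch}: lr = {value:.6f}")
```

Under these assumptions the rate stays at 1e-3 for epochs 0 to 3 and only drops to 8e-4 in the value logged for the last epoch, so with max_epochs = 5 the decay has almost no effect on this run.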

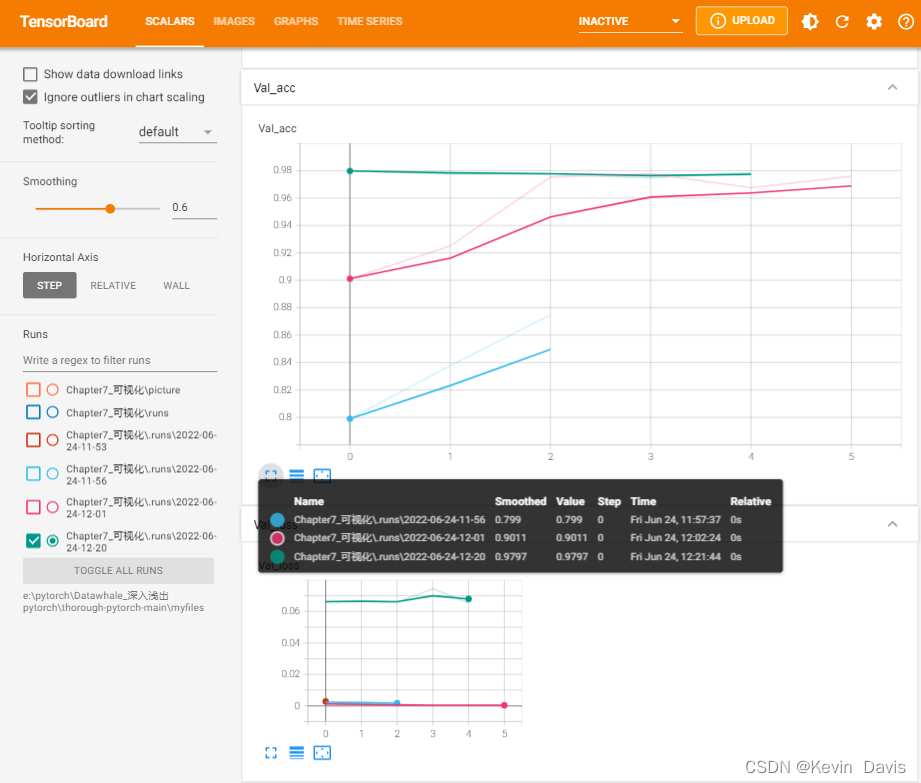

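In this logged run, resnet34 performs remarkably well, reaching about 97% accuracy on both the training and the validation metrics.

TensorBoard can display results from different runs side by side. The green line is the resnet34 run: after only 5 epochs, its validation accuracy stays above 97% throughout.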
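Comparing runs works because every run writes into its own subdirectory of ./runs, and TensorBoard lists each subdirectory under the log directory as a separate run. A minimal sketch of that convention with pathlib follows; make_run_dir is a hypothetical helper, and it uses the same timestamp format as the cell above.

```python
import tempfile
from datetime import datetime
from pathlib import Path


def make_run_dir(root, now=None):
    """Create and return a timestamped subdirectory of `root` for one run."""
    now = now or datetime.now()
    run_dir = Path(root) / now.strftime("%Y-%m-%d-%H-%M")
    # exist_ok=True replaces the separate os.path.isdir check.
    run_dir.mkdir(parents=True, exist_ok=True)
    return run_dir


# Demonstrated in a temporary directory so nothing is left behind.
with tempfile.TemporaryDirectory() as tmp:
    first = make_run_dir(tmp, datetime(2022, 6, 24, 12, 20))
    second = make_run_dir(tmp, datetime(2022, 6, 24, 13, 5))
    run_names = sorted(p.name for p in Path(tmp).iterdir())
    print(run_names)
```

Passing str(make_run_dir('./runs')) as save_dir to train() and then launching tensorboard --logdir=runs would show each timestamped folder as its own curve.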

2. torchinfo

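torchinfo makes it very easy to print detailed information about a model, such as the output shape of every layer and the number of parameters.

Installation:

# Option 1

pip install torchinfo

# Option 2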

conda install -c conda-forge torchinfo

torchinfo is very simple to use: just call torchinfo.summary(), with arguments of the form summary(model, input_size=[batch_size, channel, height, width]).

from torchinfo import summary

summary(model, (1, 3, 32, 32))  # 1: batch_size, 3: number of image channels, 32: image height and width

==========================================================================================

Layer (type:depth-idx) Output Shape Param #

==========================================================================================

ResNet [1, 10] --

├─Conv2d: 1-1 [1, 64, 16, 16] 9,408

├─BatchNorm2d: 1-2 [1, 64, 16, 16] 128

├─ReLU: 1-3 [1, 64, 16, 16] --

├─MaxPool2d: 1-4 [1, 64, 8, 8] --

├─Sequential: 1-5 [1, 64, 8, 8] --

│ └─BasicBlock: 2-1 [1, 64, 8, 8] --

│ │ └─Conv2d: 3-1 [1, 64, 8, 8] 36,864

│ │ └─BatchNorm2d: 3-2 [1, 64, 8, 8] 128

│ │ └─ReLU: 3-3 [1, 64, 8, 8] --

│ │ └─Conv2d: 3-4 [1, 64, 8, 8] 36,864

│ │ └─BatchNorm2d: 3-5 [1, 64, 8, 8] 128

│ │ └─ReLU: 3-6 [1, 64, 8, 8] --

│ └─BasicBlock: 2-2 [1, 64, 8, 8] --

│ │ └─Conv2d: 3-7 [1, 64, 8, 8] 36,864

│ │ └─BatchNorm2d: 3-8 [1, 64, 8, 8] 128

│ │ └─ReLU: 3-9 [1, 64, 8, 8] --

│ │ └─Conv2d: 3-10 [1, 64, 8, 8] 36,864

│ │ └─BatchNorm2d: 3-11 [1, 64, 8, 8] 128

│ │ └─ReLU: 3-12 [1, 64, 8, 8] --

│ └─BasicBlock: 2-3 [1, 64, 8, 8] --

│ │ └─Conv2d: 3-13 [1, 64, 8, 8] 36,864

│ │ └─BatchNorm2d: 3-14 [1, 64, 8, 8] 128

│ │ └─ReLU: 3-15 [1, 64, 8, 8] --

│ │ └─Conv2d: 3-16 [1, 64, 8, 8] 36,864

│ │ └─BatchNorm2d: 3-17 [1, 64, 8, 8] 128

│ │ └─ReLU: 3-18 [1, 64, 8, 8] --

├─Sequential: 1-6 [1, 128, 4, 4] --

│ └─BasicBlock: 2-4 [1, 128, 4, 4] --

│ │ └─Conv2d: 3-19 [1, 128, 4, 4] 73,728

│ │ └─BatchNorm2d: 3-20 [1, 128, 4, 4] 256

│ │ └─ReLU: 3-21 [1, 128, 4, 4] --

│ │ └─Conv2d: 3-22 [1, 128, 4, 4] 147,456

│ │ └─BatchNorm2d: 3-23 [1, 128, 4, 4] 256

│ │ └─Sequential: 3-24 [1, 128, 4, 4] 8,448

│ │ └─ReLU: 3-25 [1, 128, 4, 4] --

│ └─BasicBlock: 2-5 [1, 128, 4, 4] --

│ │ └─Conv2d: 3-26 [1, 128, 4, 4] 147,456

│ │ └─BatchNorm2d: 3-27 [1, 128, 4, 4] 256

│ │ └─ReLU: 3-28 [1, 128, 4, 4] --

│ │ └─Conv2d: 3-29 [1, 128, 4, 4] 147,456

│ │ └─BatchNorm2d: 3-30 [1, 128, 4, 4] 256

│ │ └─ReLU: 3-31 [1, 128, 4, 4] --

│ └─BasicBlock: 2-6 [1, 128, 4, 4] --

│ │ └─Conv2d: 3-32 [1, 128, 4, 4] 147,456

│ │ └─BatchNorm2d: 3-33 [1, 128, 4, 4] 256

│ │ └─ReLU: 3-34 [1, 128, 4, 4] --

│ │ └─Conv2d: 3-35 [1, 128, 4, 4] 147,456

│ │ └─BatchNorm2d: 3-36 [1, 128, 4, 4] 256

│ │ └─ReLU: 3-37 [1, 128, 4, 4] --

│ └─BasicBlock: 2-7 [1, 128, 4, 4] --

│ │ └─Conv2d: 3-38 [1, 128, 4, 4] 147,456

│ │ └─BatchNorm2d: 3-39 [1, 128, 4, 4] 256

│ │ └─ReLU: 3-40 [1, 128, 4, 4] --

│ │ └─Conv2d: 3-41 [1, 128, 4, 4] 147,456

│ │ └─BatchNorm2d: 3-42 [1, 128, 4, 4] 256

│ │ └─ReLU: 3-43 [1, 128, 4, 4] --

├─Sequential: 1-7 [1, 256, 2, 2] --

│ └─BasicBlock: 2-8 [1, 256, 2, 2] --

│ │ └─Conv2d: 3-44 [1, 256, 2, 2] 294,912

│ │ └─BatchNorm2d: 3-45 [1, 256, 2, 2] 512

│ │ └─ReLU: 3-46 [1, 256, 2, 2] --

│ │ └─Conv2d: 3-47 [1, 256, 2, 2] 589,824

│ │ └─BatchNorm2d: 3-48 [1, 256, 2, 2] 512

│ │ └─Sequential: 3-49 [1, 256, 2, 2] 33,280

│ │ └─ReLU: 3-50 [1, 256, 2, 2] --

│ └─BasicBlock: 2-9 [1, 256, 2, 2] --

│ │ └─Conv2d: 3-51 [1, 256, 2, 2] 589,824

│ │ └─BatchNorm2d: 3-52 [1, 256, 2, 2] 512

│ │ └─ReLU: 3-53 [1, 256, 2, 2] --

│ │ └─Conv2d: 3-54 [1, 256, 2, 2] 589,824

│ │ └─BatchNorm2d: 3-55 [1, 256, 2, 2] 512

│ │ └─ReLU: 3-56 [1, 256, 2, 2] --

│ └─BasicBlock: 2-10 [1, 256, 2, 2] --

│ │ └─Conv2d: 3-57 [1, 256, 2, 2] 589,824

│ │ └─BatchNorm2d: 3-58 [1, 256, 2, 2] 512

│ │ └─ReLU: 3-59 [1, 256, 2, 2] --

│ │ └─Conv2d: 3-60 [1, 256, 2, 2] 589,824

│ │ └─BatchNorm2d: 3-61 [1, 256, 2, 2] 512

│ │ └─ReLU: 3-62 [1, 256, 2, 2] --

│ └─BasicBlock: 2-11 [1, 256, 2, 2] --

│ │ └─Conv2d: 3-63 [1, 256, 2, 2] 589,824

│ │ └─BatchNorm2d: 3-64 [1, 256, 2, 2] 512

│ │ └─ReLU: 3-65 [1, 256, 2, 2] --

│ │ └─Conv2d: 3-66 [1, 256, 2, 2] 589,824

│ │ └─BatchNorm2d: 3-67 [1, 256, 2, 2] 512

│ │ └─ReLU: 3-68 [1, 256, 2, 2] --

│ └─BasicBlock: 2-12 [1, 256, 2, 2] --

│ │ └─Conv2d: 3-69 [1, 256, 2, 2] 589,824

│ │ └─BatchNorm2d: 3-70 [1, 256, 2, 2] 512

│ │ └─ReLU: 3-71 [1, 256, 2, 2] --

│ │ └─Conv2d: 3-72 [1, 256, 2, 2] 589,824

│ │ └─BatchNorm2d: 3-73 [1, 256, 2, 2] 512

│ │ └─ReLU: 3-74 [1, 256, 2, 2] --

│ └─BasicBlock: 2-13 [1, 256, 2, 2] --

│ │ └─Conv2d: 3-75 [1, 256, 2, 2] 589,824

│ │ └─BatchNorm2d: 3-76 [1, 256, 2, 2] 512

│ │ └─ReLU: 3-77 [1, 256, 2, 2] --

│ │ └─Conv2d: 3-78 [1, 256, 2, 2] 589,824

│ │ └─BatchNorm2d: 3-79 [1, 256, 2, 2] 512

│ │ └─ReLU: 3-80 [1, 256, 2, 2] --

├─Sequential: 1-8 [1, 512, 1, 1] --

│ └─BasicBlock: 2-14 [1, 512, 1, 1] --

│ │ └─Conv2d: 3-81 [1, 512, 1, 1] 1,179,648

│ │ └─BatchNorm2d: 3-82 [1, 512, 1, 1] 1,024

│ │ └─ReLU: 3-83 [1, 512, 1, 1] --

│ │ └─Conv2d: 3-84 [1, 512, 1, 1] 2,359,296

│ │ └─BatchNorm2d: 3-85 [1, 512, 1, 1] 1,024

│ │ └─Sequential: 3-86 [1, 512, 1, 1] 132,096

│ │ └─ReLU: 3-87 [1, 512, 1, 1] --

│ └─BasicBlock: 2-15 [1, 512, 1, 1] --

│ │ └─Conv2d: 3-88 [1, 512, 1, 1] 2,359,296

│ │ └─BatchNorm2d: 3-89 [1, 512, 1, 1] 1,024

│ │ └─ReLU: 3-90 [1, 512, 1, 1] --

│ │ └─Conv2d: 3-91 [1, 512, 1, 1] 2,359,296

│ │ └─BatchNorm2d: 3-92 [1, 512, 1, 1] 1,024

│ │ └─ReLU: 3-93 [1, 512, 1, 1] --

│ └─BasicBlock: 2-16 [1, 512, 1, 1] --

│ │ └─Conv2d: 3-94 [1, 512, 1, 1] 2,359,296

│ │ └─BatchNorm2d: 3-95 [1, 512, 1, 1] 1,024

│ │ └─ReLU: 3-96 [1, 512, 1, 1] --

│ │ └─Conv2d: 3-97 [1, 512, 1, 1] 2,359,296

│ │ └─BatchNorm2d: 3-98 [1, 512, 1, 1] 1,024

│ │ └─ReLU: 3-99 [1, 512, 1, 1] --

├─AdaptiveAvgPool2d: 1-9 [1, 512, 1, 1] --

├─Linear: 1-10 [1, 10] 5,130

==========================================================================================

Total params: 21,289,802

Trainable params: 21,289,802

Non-trainable params: 0

Total mult-adds (M): 74.78

==========================================================================================

Input size (MB): 0.01

Forward/backward pass size (MB): 1.22

Params size (MB): 85.16

Estimated Total Size (MB): 86.39

==========================================================================================
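The Param # column can be checked by hand. The sketch below recomputes a few rows of the table from the layer definitions, assuming float32 parameters (4 bytes each) and megabytes of 10^6 bytes for the size line; the helper names are illustrative and not part of torchinfo.

```python
def conv2d_params(c_in, c_out, kernel, bias=False):
    """Weights of a square-kernel Conv2d, plus one bias per output channel if used."""
    return c_in * c_out * kernel * kernel + (c_out if bias else 0)


def batchnorm2d_params(channels):
    """Learnable scale and shift per channel (affine=True)."""
    return 2 * channels


def linear_params(n_in, n_out, bias=True):
    """Weight matrix of a Linear layer, plus one bias per output if used."""
    return n_in * n_out + (n_out if bias else 0)


first_conv = conv2d_params(3, 64, 7)          # Conv2d: 1-1
first_bn = batchnorm2d_params(64)             # BatchNorm2d: 1-2
block_conv = conv2d_params(64, 64, 3)         # Conv2d: 3-1
downsample = conv2d_params(64, 128, 1) + batchnorm2d_params(128)  # Sequential: 3-24
classifier = linear_params(512, 10)           # Linear: 1-10

total_params = 21_289_802                     # "Total params" from the table
params_size_mb = total_params * 4 / 1e6       # float32 -> 4 bytes per parameter

print(first_conv, first_bn, block_conv, downsample, classifier)
print(f"{params_size_mb:.2f} MB")
```

These values match the 9,408, 128, 36,864, 8,448 and 5,130 entries in the table, and the size works out to the 85.16 MB reported under "Params size (MB)".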

3. profiler

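TensorBoard can display a great deal of information, but the logging calls have to be written into the training process.

torchinfo prints detailed per-layer information and parameter counts for a model.

Beyond these two, we can also use autograd.profiler, which ships with torch, to record the compute resources each operation in the model consumes, such as execution time. It is very simple to use; here is an example with the data and model from earlier:

with torch.autograd.profiler.profile(use_cuda=True) as prof:
    out = model(images.to(device))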

print(prof)

--------------------------------- ------------ ------------ ------------ ------------ ------------ ------------ ------------ ------------ ------------ ------------

Name Self CPU % Self CPU CPU total % CPU total CPU time avg Self CUDA Self CUDA % CUDA total CUDA time avg # of Calls

--------------------------------- ------------ ------------ ------------ ------------ ------------ ------------ ------------ ------------ ------------ ------------

aten::to 0.19% 29.000us 8.36% 1.302ms 1.302ms 3.000us 0.00% 2.626ms 2.626ms 1

aten::_to_copy 0.31% 48.000us 8.17% 1.273ms 1.273ms 3.000us 0.00% 2.623ms 2.623ms 1

aten::empty_strided 0.13% 21.000us 0.13% 21.000us 21.000us 1.000us 0.00% 1.000us 1.000us 1

aten::copy_ 7.73% 1.204ms 7.73% 1.204ms 1.204ms 2.619ms 4.01% 2.619ms 2.619ms 1

aten::conv2d 0.10% 15.000us 1.75% 273.000us 273.000us 3.000us 0.00% 1.617ms 1.617ms 1

aten::convolution 0.22% 34.000us 1.66% 258.000us 258.000us 2.000us 0.00% 1.614ms 1.614ms 1

aten::_convolution 0.15% 24.000us 1.44% 224.000us 224.000us 2.000us 0.00% 1.612ms 1.612ms 1

aten::cudnn_convolution 1.24% 193.000us 1.28% 200.000us 200.000us 1.609ms 2.46% 1.610ms 1.610ms 1

aten::empty 0.04% 7.000us 0.04% 7.000us 7.000us 1.000us 0.00% 1.000us 1.000us 1

aten::batch_norm 0.07% 11.000us 0.81% 126.000us 126.000us 3.000us 0.00% 387.000us 387.000us 1

aten::_batch_norm_impl_index 0.08% 13.000us 0.74% 115.000us 115.000us 3.000us 0.00% 384.000us 384.000us 1

aten::cudnn_batch_norm 0.42% 66.000us 0.65% 102.000us 102.000us 374.000us 0.57% 381.000us 381.000us 1

aten::empty_like 0.06% 9.000us 0.12% 19.000us 19.000us 2.000us 0.00% 3.000us 3.000us 1

aten::empty 0.06% 10.000us 0.06% 10.000us 10.000us 1.000us 0.00% 1.000us 1.000us 1

aten::view 0.04% 7.000us 0.04% 7.000us 7.000us 1.000us 0.00% 1.000us 1.000us 1

aten::empty 0.03% 4.000us 0.03% 4.000us 4.000us 1.000us 0.00% 1.000us 1.000us 1

aten::empty 0.02% 3.000us 0.02% 3.000us 3.000us 1.000us 0.00% 1.000us 1.000us 1

aten::empty 0.02% 3.000us 0.02% 3.000us 3.000us 1.000us 0.00% 1.000us 1.000us 1

aten::relu_ 1.68% 262.000us 2.09% 325.000us 325.000us 2.000us 0.00% 368.000us 368.000us 1

aten::clamp_min_ 0.22% 34.000us 0.40% 63.000us 63.000us 2.000us 0.00% 366.000us 366.000us 1

aten::clamp_min 0.19% 29.000us 0.19% 29.000us 29.000us 364.000us 0.56% 364.000us 364.000us 1

aten::max_pool2d 0.07% 11.000us 0.37% 58.000us 58.000us 2.000us 0.00% 434.000us 434.000us 1

aten::max_pool2d_with_indices 0.30% 47.000us 0.30% 47.000us 47.000us 432.000us 0.66% 432.000us 432.000us 1

aten::conv2d 0.06% 10.000us 1.49% 232.000us 232.000us 2.000us 0.00% 668.000us 668.000us 1

aten::convolution 0.12% 19.000us 1.42% 222.000us 222.000us 2.000us 0.00% 666.000us 666.000us 1

aten::_convolution 0.12% 19.000us 1.30% 203.000us 203.000us 2.000us 0.00% 664.000us 664.000us 1

aten::cudnn_convolution 1.12% 175.000us 1.18% 184.000us 184.000us 661.000us 1.01% 662.000us 662.000us 1

aten::empty 0.06% 9.000us 0.06% 9.000us 9.000us 1.000us 0.00% 1.000us 1.000us 1

aten::batch_norm 0.06% 10.000us 0.80% 124.000us 124.000us 2.000us 0.00% 126.000us 126.000us 1

aten::_batch_norm_impl_index 0.08% 12.000us 0.73% 114.000us 114.000us 2.000us 0.00% 124.000us 124.000us 1

aten::cudnn_batch_norm 0.44% 68.000us 0.65% 102.000us 102.000us 115.000us 0.18% 122.000us 122.000us 1

aten::empty_like 0.06% 9.000us 0.12% 18.000us 18.000us 2.000us 0.00% 3.000us 3.000us 1

aten::empty 0.06% 9.000us 0.06% 9.000us 9.000us 1.000us 0.00% 1.000us 1.000us 1

aten::view 0.04% 6.000us 0.04% 6.000us 6.000us 1.000us 0.00% 1.000us 1.000us 1

aten::empty 0.03% 4.000us 0.03% 4.000us 4.000us 1.000us 0.00% 1.000us 1.000us 1

aten::empty 0.02% 3.000us 0.02% 3.000us 3.000us 1.000us 0.00% 1.000us 1.000us 1

aten::empty 0.02% 3.000us 0.02% 3.000us 3.000us 1.000us 0.00% 1.000us 1.000us 1

aten::relu_ 0.11% 17.000us 0.26% 41.000us 41.000us 2.000us 0.00% 96.000us 96.000us 1

aten::clamp_min_ 0.04% 7.000us 0.15% 24.000us 24.000us 2.000us 0.00% 94.000us 94.000us 1

aten::clamp_min 0.11% 17.000us 0.11% 17.000us 17.000us 92.000us 0.14% 92.000us 92.000us 1

aten::conv2d 0.08% 12.000us 1.15% 179.000us 179.000us 4.000us 0.01% 690.000us 690.000us 1

aten::convolution 0.11% 17.000us 1.07% 167.000us 167.000us 2.000us 0.00% 686.000us 686.000us 1

aten::_convolution 0.10% 16.000us 0.96% 150.000us 150.000us 4.000us 0.01% 684.000us 684.000us 1

aten::cudnn_convolution 0.81% 126.000us 0.86% 134.000us 134.000us 679.000us 1.04% 680.000us 680.000us 1

aten::empty 0.05% 8.000us 0.05% 8.000us 8.000us 1.000us 0.00% 1.000us 1.000us 1

aten::batch_norm 0.06% 9.000us 1.17% 183.000us 183.000us 2.000us 0.00% 137.000us 137.000us 1

aten::_batch_norm_impl_index 0.09% 14.000us 1.12% 174.000us 174.000us 2.000us 0.00% 135.000us 135.000us 1

aten::cudnn_batch_norm 0.80% 125.000us 1.03% 160.000us 160.000us 126.000us 0.19% 133.000us 133.000us 1

aten::empty_like 0.06% 9.000us 0.11% 17.000us 17.000us 2.000us 0.00% 3.000us 3.000us 1

aten::empty 0.05% 8.000us 0.05% 8.000us 8.000us 1.000us 0.00% 1.000us 1.000us 1

aten::view 0.04% 6.000us 0.04% 6.000us 6.000us 1.000us 0.00% 1.000us 1.000us 1

aten::empty 0.03% 4.000us 0.03% 4.000us 4.000us 1.000us 0.00% 1.000us 1.000us 1

aten::empty 0.03% 4.000us 0.03% 4.000us 4.000us 1.000us 0.00% 1.000us 1.000us 1

aten::empty 0.03% 4.000us 0.03% 4.000us 4.000us 1.000us 0.00% 1.000us 1.000us 1

aten::add_ 0.20% 31.000us 0.20% 31.000us 31.000us 134.000us 0.21% 134.000us 134.000us 1

aten::relu_ 0.10% 16.000us 0.24% 38.000us 38.000us 2.000us 0.00% 96.000us 96.000us 1

aten::clamp_min_ 0.05% 8.000us 0.14% 22.000us 22.000us 2.000us 0.00% 94.000us 94.000us 1

aten::clamp_min 0.09% 14.000us 0.09% 14.000us 14.000us 92.000us 0.14% 92.000us 92.000us 1

aten::conv2d 0.06% 9.000us 1.21% 188.000us 188.000us 2.000us 0.00% 670.000us 670.000us 1

aten::convolution 0.12% 18.000us 1.15% 179.000us 179.000us 2.000us 0.00% 668.000us 668.000us 1

aten::_convolution 0.10% 16.000us 1.03% 161.000us 161.000us 2.000us 0.00% 666.000us 666.000us 1

aten::cudnn_convolution 0.87% 136.000us 0.93% 145.000us 145.000us 663.000us 1.02% 664.000us 664.000us 1

aten::empty 0.06% 9.000us 0.06% 9.000us 9.000us 1.000us 0.00% 1.000us 1.000us 1

aten::batch_norm 0.06% 9.000us 0.72% 112.000us 112.000us 2.000us 0.00% 131.000us 131.000us 1

aten::_batch_norm_impl_index 0.07% 11.000us 0.66% 103.000us 103.000us 4.000us 0.01% 129.000us 129.000us 1

aten::cudnn_batch_norm 0.38% 59.000us 0.59% 92.000us 92.000us 116.000us 0.18% 125.000us 125.000us 1

aten::empty_like 0.06% 10.000us 0.12% 18.000us 18.000us 3.000us 0.00% 4.000us 4.000us 1

aten::empty 0.05% 8.000us 0.05% 8.000us 8.000us 1.000us 0.00% 1.000us 1.000us 1

aten::view 0.03% 5.000us 0.03% 5.000us 5.000us 1.000us 0.00% 1.000us 1.000us 1

aten::empty 0.03% 4.000us 0.03% 4.000us 4.000us 1.000us 0.00% 1.000us 1.000us 1

aten::empty 0.02% 3.000us 0.02% 3.000us 3.000us 2.000us 0.00% 2.000us 2.000us 1

aten::empty 0.02% 3.000us 0.02% 3.000us 3.000us 1.000us 0.00% 1.000us 1.000us 1

aten::relu_ 0.10% 16.000us 0.25% 39.000us 39.000us 2.000us 0.00% 96.000us 96.000us 1

aten::clamp_min_ 0.04% 7.000us 0.15% 23.000us 23.000us 2.000us 0.00% 94.000us 94.000us 1

aten::clamp_min 0.10% 16.000us 0.10% 16.000us 16.000us 92.000us 0.14% 92.000us 92.000us 1

aten::conv2d 0.08% 13.000us 1.05% 164.000us 164.000us 2.000us 0.00% 659.000us 659.000us 1

aten::convolution 0.10% 16.000us 0.97% 151.000us 151.000us 2.000us 0.00% 657.000us 657.000us 1

aten::_convolution 0.10% 15.000us 0.87% 135.000us 135.000us 2.000us 0.00% 655.000us 655.000us 1

aten::cudnn_convolution 0.73% 113.000us 0.77% 120.000us 120.000us 652.000us 1.00% 653.000us 653.000us 1

aten::empty 0.04% 7.000us 0.04% 7.000us 7.000us 1.000us 0.00% 1.000us 1.000us 1

aten::batch_norm 0.06% 10.000us 0.72% 112.000us 112.000us 3.000us 0.00% 129.000us 129.000us 1

aten::_batch_norm_impl_index 0.07% 11.000us 0.65% 102.000us 102.000us 2.000us 0.00% 126.000us 126.000us 1

aten::cudnn_batch_norm 0.38% 59.000us 0.58% 91.000us 91.000us 117.000us 0.18% 124.000us 124.000us 1

aten::empty_like 0.05% 8.000us 0.10% 16.000us 16.000us 2.000us 0.00% 3.000us 3.000us 1

aten::empty 0.05% 8.000us 0.05% 8.000us 8.000us 1.000us 0.00% 1.000us 1.000us 1

aten::view 0.03% 5.000us 0.03% 5.000us 5.000us 1.000us 0.00% 1.000us 1.000us 1

aten::empty 0.03% 4.000us 0.03% 4.000us 4.000us 1.000us 0.00% 1.000us 1.000us 1

aten::empty 0.03% 4.000us 0.03% 4.000us 4.000us 1.000us 0.00% 1.000us 1.000us 1

aten::empty 0.02% 3.000us 0.02% 3.000us 3.000us 1.000us 0.00% 1.000us 1.000us 1

aten::add_ 0.17% 26.000us 0.17% 26.000us 26.000us 133.000us 0.20% 133.000us 133.000us 1

aten::relu_ 0.09% 14.000us 0.21% 33.000us 33.000us 3.000us 0.00% 96.000us 96.000us 1

aten::clamp_min_ 0.04% 7.000us 0.12% 19.000us 19.000us 2.000us 0.00% 93.000us 93.000us 1

aten::clamp_min 0.08% 12.000us 0.08% 12.000us 12.000us 91.000us 0.14% 91.000us 91.000us 1

aten::conv2d 0.06% 9.000us 1.29% 201.000us 201.000us 2.000us 0.00% 669.000us 669.000us 1

aten::convolution 0.10% 16.000us 1.23% 192.000us 192.000us 2.000us 0.00% 667.000us 667.000us 1

aten::_convolution 0.10% 16.000us 1.13% 176.000us 176.000us 2.000us 0.00% 665.000us 665.000us 1

aten::cudnn_convolution 0.98% 153.000us 1.03% 160.000us 160.000us 662.000us 1.01% 663.000us 663.000us 1

aten::empty 0.04% 7.000us 0.04% 7.000us 7.000us 1.000us 0.00% 1.000us 1.000us 1

aten::batch_norm 0.06% 10.000us 0.72% 112.000us 112.000us 2.000us 0.00% 131.000us 131.000us 1

aten::_batch_norm_impl_index 0.08% 12.000us 0.65% 102.000us 102.000us 3.000us 0.00% 129.000us 129.000us 1

--------------------------------- ------------ ------------ ------------ ------------ ------------ ------------ ------------ ------------ ------------ ------------

Self CPU time total: 15.582ms

Self CUDA time total: 65.290ms
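The raw listing has one row per operator call, which gets long quickly. A more compact view groups calls by operator name with key_averages(). The sketch below is a CPU-only illustration: toy_model is a hypothetical stand-in for resnet34 so that it runs in a moment, and use_cuda is left out so no GPU is required.

```python
import torch
import torch.nn as nn

# Hypothetical small model standing in for resnet34.
toy_model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Flatten(),
    nn.Linear(8 * 32 * 32, 10),
)
batch = torch.rand(4, 3, 32, 32)  # same image size as CIFAR10

with torch.no_grad(), torch.autograd.profiler.profile() as prof:
    toy_model(batch)

# Group events by operator name and keep the five most expensive ones.
summary_table = prof.key_averages().table(sort_by="self_cpu_time_total", row_limit=5)
print(summary_table)
```

On a GPU run like the one above, sorting by "cuda_time_total" instead would presumably surface the convolution kernels first, since they account for most of the CUDA time in the listing.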

References:

[1] Datawhale, 深入浅出PyTorch

[2] https://zhuanlan.zhihu.com/p/36946874
