Computer Vision Series 1: The Ideas Behind LeNet-5 and a Simple Implementation

Article link: https://blog.csdn.net/weixin_44633882/article/details/86716096

1. Introduction to LeNet

LeNet-5 was proposed by Yann LeCun. On the MNIST dataset it can reach a classification accuracy of 99.2%.

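| Layer | Output size |
|---|---|
| Input layer INPUT | 32×32 |
| Convolutional layer C1 | 28×28×6 |
| Subsampling layer S2 | 14×14×6 |
| Convolutional layer C3 | 10×10×16 |
| Subsampling layer S4 | 5×5×16 |
| C5 (convolution acting as a fully connected layer) | 120 |
| Fully connected layer F6 | 84 |
| Output layer OUTPUT | 10 |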
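The sizes in the table follow from two rules: a 5×5 convolution with stride 1 and no padding shrinks each side by 4, and a 2×2 subsampling step halves it. A minimal sketch that traces them (the helper names `conv_out` and `pool_out` are made up for illustration):

```python
def conv_out(size, kernel=5, stride=1):
    """Side length after a convolution without padding."""
    return (size - kernel) // stride + 1


def pool_out(size, window=2):
    """Side length after non-overlapping subsampling."""
    return size // window


side = 32
trace = [("INPUT", side)]
for name, op in [("C1", conv_out), ("S2", pool_out), ("C3", conv_out),
                 ("S4", pool_out), ("C5", conv_out)]:
    side = op(side)
    trace.append((name, side))

for name, s in trace:
    print(f"{name}: {s}x{s}")
```

The last entry is 1×1, which is why C5 behaves like a fully connected layer.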

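2. Per-layer computation in LeNet

(1) INPUT layer

- Output: 32×32×1

(2) C1 layer (convolutional layer)

- Input: 32×32×1. Output: 28×28×6 (32-5+1 = 28)

- Kernels: 6 kernels of size 5×5

- Analysis:

Number of neurons: 28×28×6

Trainable parameters: (5×5+1)×6 = 156 (25 weights plus one bias per kernel)

Connections: (5×5+1)×6×28×28 = 122304 (each kernel has one bias, which is added to the result of every convolution step)

Although there are 122304 connections, only 156 parameters have to be learned, thanks to weight sharing.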
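The C1 arithmetic above can be checked with a few lines of Python. This is only a sketch of the counting; the variable names are chosen here for illustration:

```python
kernel = 5                     # 5x5 kernels
in_maps = 1                    # grayscale input
out_maps = 6                   # six feature maps in C1
out_side = 32 - kernel + 1     # 28

per_map = kernel * kernel * in_maps + 1    # 25 weights + 1 bias
c1_params = per_map * out_maps
c1_connections = per_map * out_maps * out_side * out_side

print(c1_params)        # 156
print(c1_connections)   # 122304
```

The ratio between the two numbers is 28×28 = 784: every parameter is reused at each output position.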

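(3) S2 layer (subsampling layer, not a plain pooling layer)

- Input: 28×28×6. Output: 14×14×6

- Receptive field: 2×2 (the four values are summed, multiplied by a trainable coefficient, added to a bias, and passed through a sigmoid)

- Analysis:

Number of neurons: 14×14×6

Trainable parameters: 12 = (1 coefficient + 1 bias)×6

Connections: (2×2+1)×6×14×14 = 5880 (four inputs from the receptive field plus one bias per unit; the shared coefficient is the weight on those four connections, so it does not add a connection of its own)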
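To make the difference from ordinary pooling concrete, here is a small NumPy sketch of the S2 operation on a single feature map. The function name `subsample` and the example values for the coefficient and bias are assumptions made for illustration:

```python
import numpy as np


def subsample(feature_map, coeff, bias):
    """LeNet-style 2x2 subsampling of one feature map (H and W must be even)."""
    h, w = feature_map.shape
    # Group pixels into non-overlapping 2x2 blocks and sum each block.
    block_sums = feature_map.reshape(h // 2, 2, w // 2, 2).sum(axis=(1, 3))
    # One trainable coefficient and one bias per feature map, then sigmoid.
    return 1.0 / (1.0 + np.exp(-(coeff * block_sums + bias)))


c1_map = np.ones((28, 28))
s2_map = subsample(c1_map, coeff=0.25, bias=0.0)
print(s2_map.shape)   # (14, 14)
```

With `coeff=0.25` and `bias=0.0` the pre-activation is simply the block average, which shows that average pooling is a special case of this layer.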

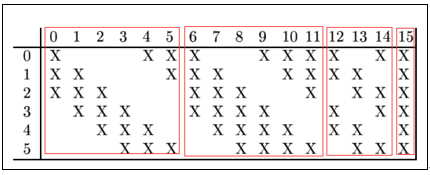

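(4) C3 layer (convolutional layer)

- Input: 14×14×6. Output: 10×10×16 (14-5+1 = 10)

- Kernels: 16 kernels with a 5×5 window

- Analysis:

Number of neurons: 10×10×16

Trainable parameters: 6×(3×5×5+1) + 6×(4×5×5+1) + 3×(4×5×5+1) + 1×(6×5×5+1) = 1516 (not (5×5×6+1)×16, because C3 is not fully connected to S2)

Connections: 10×10×1516 = 151600 (not (5×5+1)×10×10×16; count backwards from the output: 1516 is the number of connections needed for one output position across all 16 feature maps)

- Notes:

Not every feature map in C3 is connected to all feature maps in S2.

C3 turns six 14×14 feature maps into sixteen 10×10 feature maps.

It does this with a special combination scheme.

In the connection table, columns are C3 feature map indices

and rows are S2 feature map indices.

- First red box:

The first 6 feature maps of C3 (0-5) each connect to 3 contiguous feature maps of S2.

Trainable parameters: (3×5×5+1)×6

The figure makes this easy to see.

- Second red box:

C3 feature maps 6-11 each connect to 4 contiguous feature maps of S2.

Trainable parameters: (4×5×5+1)×6

- Third red box:

C3 feature maps 12-14 each connect to 4 feature maps of S2 (non-contiguous subsets).

Trainable parameters: (4×5×5+1)×3

- Fourth red box:

C3 feature map 15 connects to all feature maps of S2.

Trainable parameters: (6×5×5+1)×1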
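The four groups above can be summed in a few lines. This sketch encodes only the group sizes given in the text, not the exact connection table, and compares the result with a hypothetical fully connected C3:

```python
kernel = 5
# (number of C3 maps in the group, number of S2 maps each one connects to)
groups = [(6, 3), (6, 4), (3, 4), (1, 6)]

c3_params = sum(n_maps * (n_inputs * kernel * kernel + 1)
                for n_maps, n_inputs in groups)
# Every parameter is reused at each of the 10x10 output positions.
c3_connections = c3_params * 10 * 10
# What the count would be if every C3 map saw all six S2 maps.
full_params = 16 * (6 * kernel * kernel + 1)

print(c3_params, c3_connections, full_params)   # 1516 151600 2416
```

The sparse scheme needs 1516 parameters instead of 2416, which is the saving mentioned in the first reason below.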

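Why use this connection scheme instead of connecting every C3 map to all S2 feature maps?

1. Incomplete connections reduce the number of parameters and connections.

2. Each feature map extracts more abstract features from the previous ones; the asymmetric connections push different maps to extract different higher-level features.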

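(5) S4 layer (subsampling layer)

- Input: 10×10×16. Output: 5×5×16

- Receptive field: 2×2

- Analysis:

The process is similar to S2.

Number of neurons: 5×5×16

Trainable parameters: 32 = 2×16, where 2 is the multiplicative coefficient plus the bias

Connections: (2×2+1)×5×5×16 = 2000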

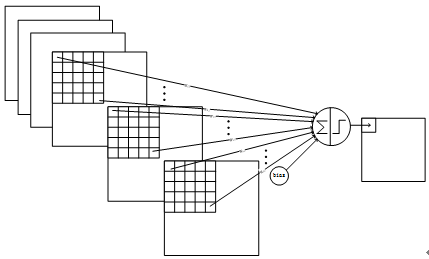

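(6) C5 layer (convolutional layer): a convolutional layer that does the job of a fully connected layer

- Input: 5×5×16. Output: 1×1×120

- Kernels: 120 kernels with a 5×5 window

- Analysis:

Trainable parameters: (5×5×16+1)×120 = 48120 (one bias per kernel)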
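Because the 5×5 kernel covers the whole 5×5 input, each kernel is applied at exactly one position, so the convolution reduces to a dot product. A NumPy sketch with random placeholder values (the array names are illustrative) shows that both views give the same result:

```python
import numpy as np

rng = np.random.default_rng(0)
s4 = rng.standard_normal((5, 5, 16))             # one S4 output: 5x5x16
kernels = rng.standard_normal((5, 5, 16, 120))   # 120 kernels of 5x5x16
biases = rng.standard_normal(120)

# Convolution view: the kernel fits the input at exactly one position,
# so each output is a single sum over height, width and channels.
conv_view = np.einsum("hwc,hwck->k", s4, kernels) + biases

# Fully connected view: flatten the input and use a 400x120 weight matrix.
fc_view = s4.reshape(-1) @ kernels.reshape(-1, 120) + biases

print(np.allclose(conv_view, fc_view))   # True
print(kernels.size + biases.size)        # 48120
```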

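(7) F6 layer (fully connected layer)

- Input: 1×1×120. Output: 1×1×84

- Computation: dot product of the input and weight vectors, plus a bias, passed through a tanh activation

tanh function: $f(a) = A\tanh(Sa)$

A is the amplitude and S can be seen as the slope; in the paper, A = 1.7159

- Analysis:

Trainable parameters: (120+1)×84 = 10164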
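A short sketch of the scaled tanh and the F6 parameter count. The text above gives only A; the slope S = 2/3 used here is an assumption taken from the LeNet-5 paper:

```python
import math

A = 1.7159      # amplitude, as stated above
S = 2.0 / 3.0   # slope; assumed here, the value used in the LeNet-5 paper


def scaled_tanh(a):
    """f(a) = A * tanh(S * a)"""
    return A * math.tanh(S * a)


print(scaled_tanh(0.0))   # 0.0
print(scaled_tanh(1.0))   # very close to 1.0

f6_params = (120 + 1) * 84
print(f6_params)          # 10164
```

With these constants the function maps 1 to about 1 and -1 to about -1, which fits the +1/-1 targets used by the output layer.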

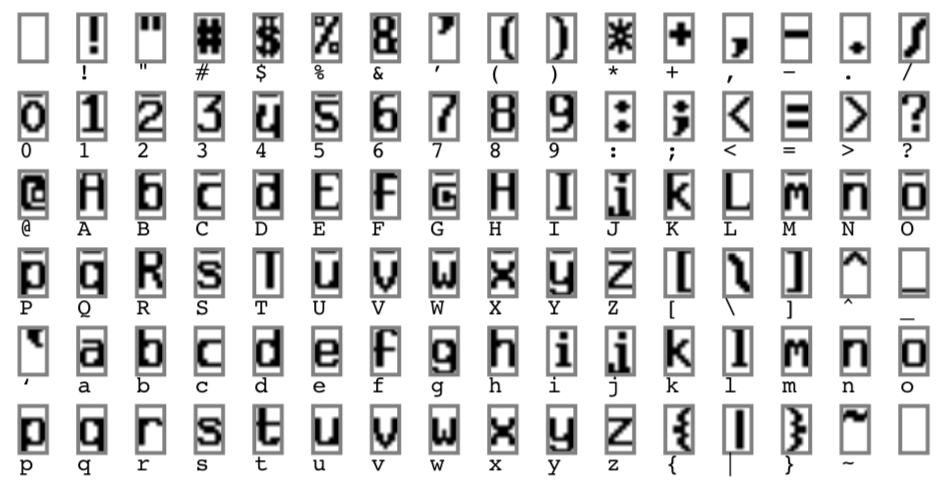

(8) Output layer

The output layer consists of 10 Euclidean radial basis function (RBF) units, one for each of the classes 0-9.

Output of an RBF unit:

$$y_i=\sum_{j}{(x_j-w_{ij})^2}$$

$\overrightarrow{X}$ is the vector output by layer F6, with $x_j \in \overrightarrow{X} = \{x_1,...,x_j,...,x_{84}\}$.

For each digit i, the RBF unit keeps a constant vector, with $w_{ij} \in \overrightarrow{w_i} = \{w_{i1},...,w_{ij},...,w_{i84}\}$.

The RBF output is the squared Euclidean distance between the input $\overrightarrow{X}$ and $\overrightarrow{w_i}$.

The elements of the RBF vector $\overrightarrow{w_i}$ are +1 or -1. They are set by hand so that each vector draws its character on a 7×12 bitmap (7×12 = 84, matching the F6 output size).
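The RBF formula can be sketched in NumPy as follows. The prototype vectors here are random +1/-1 placeholders, not the hand-drawn digit bitmaps, and the name `rbf_outputs` is made up for illustration:

```python
import numpy as np


def rbf_outputs(x, prototypes):
    """y_i = sum_j (x_j - w_ij)^2 for each class i."""
    return ((x[None, :] - prototypes) ** 2).sum(axis=1)


rng = np.random.default_rng(0)
# Placeholder +1/-1 prototypes; the real ones are hand-drawn digit bitmaps.
prototypes = rng.choice([-1.0, 1.0], size=(10, 84))

x = prototypes[3].copy()     # pretend the F6 output matches class 3 exactly
y = rbf_outputs(x, prototypes)
print(int(np.argmin(y)))     # index of the smallest distance
```

The predicted class is the unit with the smallest output, since a small distance means the F6 vector is close to that class's prototype.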

3. A simple code reproduction of LeNet

The code follows the book 《TensorFlow实战Google深度学习框架》.

Code structure

- dataset

- mnist_data

- …

- mnist_data

- LeNet

- mnist_inference.py

- mnist_test.py

- mnist_train.py

- model

- checkpoint

- …

(1) Model definition (mnist_inference.py)

import tensorflow as tf

INPUT_NODE = 784
OUT_NODE = 10

IMAGE_SIZE = 28
NUM_CHANNELS = 1
NUM_LABELS = 10

# Layer 1
CONV1_DEEP = 32
CONV1_SIZE = 5

# Layer 2
CONV2_DEEP = 64
CONV2_SIZE = 5

# Fully connected layer
FC_SIZE = 512


def inference(input_tensor, train, regularizer):
    # input_tensor: [batch, height, width, channels]
    with tf.variable_scope('l1-conv1'):
        # Input: 28*28*1
        # Output: 28*28*32
        # Kernel (5,5,1,32), padding: SAME
        conv1_weights = tf.get_variable(
            "weight", [CONV1_SIZE, CONV1_SIZE, NUM_CHANNELS, CONV1_DEEP],
            initializer=tf.truncated_normal_initializer(stddev=0.1)
        )
        conv1_biases = tf.get_variable("bias", [CONV1_DEEP], initializer=tf.constant_initializer(0.0))
        conv1 = tf.nn.conv2d(input_tensor, conv1_weights, strides=[1, 1, 1, 1], padding='SAME')
        relu1 = tf.nn.relu(tf.nn.bias_add(conv1, conv1_biases))

    with tf.name_scope("l2-pool1"):
        # Input: 28*28*32
        # Output: 14*14*32
        pool1 = tf.nn.max_pool(relu1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding="SAME")

    with tf.variable_scope("l3-conv2"):
        # Input: 14*14*32
        # Output: 14*14*64
        # Kernel (5,5,32,64)
        conv2_weights = tf.get_variable(
            "weight", [CONV2_SIZE, CONV2_SIZE, CONV1_DEEP, CONV2_DEEP],
            initializer=tf.truncated_normal_initializer(stddev=0.1)
        )
        conv2_biases = tf.get_variable("bias", [CONV2_DEEP], initializer=tf.constant_initializer(0.0))
        conv2 = tf.nn.conv2d(pool1, conv2_weights, strides=[1, 1, 1, 1], padding='SAME')
        relu2 = tf.nn.relu(tf.nn.bias_add(conv2, conv2_biases))

    with tf.name_scope("l4-pool2"):
        # Input: 14*14*64
        # Output: 7*7*64
        pool2 = tf.nn.max_pool(relu2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

    # Reshape: flatten pool2 (7*7*64) into a vector for the fully connected layers
    # Input: (batch_size, 7, 7, 64)
    # Output: (batch_size, 7*7*64)
    pool_shape = pool2.get_shape().as_list()
    nodes = pool_shape[1] * pool_shape[2] * pool_shape[3]
    reshaped = tf.reshape(pool2, [pool_shape[0], nodes])

    with tf.variable_scope('l5-fc1'):
        # Input: 7*7*64
        fc1_weights = tf.get_variable("weight", [nodes, FC_SIZE],
                                      initializer=tf.truncated_normal_initializer(stddev=0.1))
        if regularizer is not None:
            # Only the weights of the fully connected layers are regularized
            tf.add_to_collection('loss', regularizer(fc1_weights))
        fc1_biases = tf.get_variable('bias', [FC_SIZE], initializer=tf.constant_initializer(0.1))
        fc1 = tf.nn.relu(tf.matmul(reshaped, fc1_weights) + fc1_biases)
        if train:
            # Dropout is applied only during training (keep probability 0.5)
            fc1 = tf.nn.dropout(fc1, 0.5)

    with tf.variable_scope('l6-fc2'):
        fc2_weights = tf.get_variable("weight", [FC_SIZE, NUM_LABELS],
                                      initializer=tf.truncated_normal_initializer(stddev=0.1))
        if regularizer is not None:
            tf.add_to_collection('loss', regularizer(fc2_weights))
        fc2_biases = tf.get_variable("bias", [NUM_LABELS], initializer=tf.constant_initializer(0.1))
        logit = tf.matmul(fc1, fc2_weights) + fc2_biases

    return logit


if __name__ == '__main__':
    pass
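Note that this network is LeNet-like rather than a literal LeNet-5: it takes 28×28 inputs, uses SAME padding, ReLU, max pooling, and wider layers (32 and 64 filters, 512 hidden units). A plain-Python sketch of the resulting shapes and parameter counts, using the constants from the listing (the helper `same_out` is illustrative):

```python
import math


def same_out(size, stride):
    """Output side length for TensorFlow 'SAME' padding."""
    return math.ceil(size / stride)


side = 28
side = same_out(side, 1)   # l1-conv1: 28
side = same_out(side, 2)   # l2-pool1: 14
side = same_out(side, 1)   # l3-conv2: 14
side = same_out(side, 2)   # l4-pool2: 7
nodes = side * side * 64   # flattened length fed to l5-fc1

params = {
    "l1-conv1": 5 * 5 * 1 * 32 + 32,
    "l3-conv2": 5 * 5 * 32 * 64 + 64,
    "l5-fc1": nodes * 512 + 512,
    "l6-fc2": 512 * 10 + 10,
}
print(nodes)                  # 3136
print(sum(params.values()))   # 1663370
```

Almost all of the parameters sit in `l5-fc1`, which is one reason the listing regularizes only the fully connected weights.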

(2) Model training (mnist_train.py)

import sys
sys.path.append(r"C:\Users\11244\Desktop\项目\CNN\LeNet")

import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data
import mnist_inference
import os
import numpy as np
import time

BATCH_SIZE = 100
LEARNING_RATE_BASE = 0.01
LEARNING_RATE_DECAY = 0.99
REGULARIZATION_RATE = 0.0001
TRAINING_STEPS = 6000
MOVING_AVERAGE_DECAY = 0.99

# Path and file name used to save the model
MODEL_SAVE_PATH = "model/"
MODEL_NAME = "model.ckpt"


def train(mnist):
    x = tf.placeholder(tf.float32, [
        BATCH_SIZE,
        mnist_inference.IMAGE_SIZE,
        mnist_inference.IMAGE_SIZE,
        mnist_inference.NUM_CHANNELS],
        name='x-input')
    y_ = tf.placeholder(tf.float32, [None, mnist_inference.OUT_NODE],
                        name='y-input')

    # A function that applies L2 regularization to weights
    regularizer = tf.contrib.layers.l2_regularizer(REGULARIZATION_RATE)
    y = mnist_inference.inference(x, True, regularizer)
    global_step = tf.Variable(0, trainable=False)

    # Define the loss function, the learning rate, the moving-average op and the training process
    variable_averages = tf.train.ExponentialMovingAverage(MOVING_AVERAGE_DECAY,
                                                          global_step)
    # Op that updates the moving averages of all trainable variables
    variable_averages_op = variable_averages.apply(tf.trainable_variables())
    # Labels are one-hot, so argmax converts them to class indices
    cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(
        logits=y, labels=tf.argmax(y_, 1))
    cross_entropy_mean = tf.reduce_mean(cross_entropy)
    loss = cross_entropy_mean + tf.add_n(tf.get_collection('loss'))
    learning_rate = tf.train.exponential_decay(
        LEARNING_RATE_BASE,
        global_step,
        mnist.train.num_examples / BATCH_SIZE, LEARNING_RATE_DECAY,
        staircase=True
    )
    train_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(
        loss, global_step=global_step)
    # Run the gradient step and the moving-average update together
    with tf.control_dependencies([train_step, variable_averages_op]):
        train_op = tf.no_op(name='train')

    saver = tf.train.Saver()
    with tf.Session() as sess:
        tf.global_variables_initializer().run()

        for i in range(TRAINING_STEPS):
            xs, ys = mnist.train.next_batch(BATCH_SIZE)
            reshaped_xs = np.reshape(xs, (
                BATCH_SIZE,
                mnist_inference.IMAGE_SIZE,
                mnist_inference.IMAGE_SIZE,
                mnist_inference.NUM_CHANNELS))
            _, loss_value, step = sess.run([train_op, loss, global_step],
                                           feed_dict={x: reshaped_xs, y_: ys})

            # Save the model every 1000 training steps
            if i % 1000 == 0:
                print(time.time())
                print("After %d training steps, loss on training batch is %g."
                      % (step, loss_value))
                # Saved file name looks like: model.ckpt-1000
                saver.save(sess, os.path.join(MODEL_SAVE_PATH, MODEL_NAME), global_step=global_step)


def main(argv=None):
    mnist = input_data.read_data_sets("../dataset/mnist_data", one_hot=True)
    train(mnist)


if __name__ == '__main__':
    main()
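The learning-rate schedule in the training script is a staircase exponential decay. A plain-Python sketch of the same formula, assuming the tutorial loader yields 55,000 training examples so that one decay period is 55000 / 100 = 550 steps (the function name is illustrative):

```python
def decayed_learning_rate(step, base=0.01, decay=0.99, decay_steps=550):
    """Staircase exponential decay: base * decay ** floor(step / decay_steps)."""
    return base * decay ** (step // decay_steps)


for step in (0, 549, 550, 5999):
    print(step, decayed_learning_rate(step))
```

Under that assumption the rate drops by 1% once per pass over the training set, so across 6000 steps it decays only ten times.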

(3) Model testing (mnist_test.py)

import sys
sys.path.append(r"C:\Users\11244\Desktop\项目\CNN\LeNet")

import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data
import mnist_inference
import mnist_train
import os
import numpy as np
import time


def test(mnist):
    with tf.Graph().as_default() as g:
        x = tf.placeholder(tf.float32, [
            mnist.validation.num_examples,  # first dimension: number of examples
            mnist_inference.IMAGE_SIZE,  # second and third dimensions: image size
            mnist_inference.IMAGE_SIZE,
            mnist_inference.NUM_CHANNELS],  # fourth dimension: image depth (1 for MNIST; 3 for RGB images)
            name='x-input')
        y_ = tf.placeholder(tf.float32, [None, mnist_inference.OUT_NODE], name='y-input')

        # feed_dict
        validate_feed = {x: np.reshape(mnist.validation.images, (
            mnist.validation.num_examples, mnist_inference.IMAGE_SIZE, mnist_inference.IMAGE_SIZE,
            mnist_inference.NUM_CHANNELS)),
            y_: mnist.validation.labels}

        # Network output (no dropout, no regularizer at test time)
        y = mnist_inference.inference(x, False, None)
        correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

        # Restore the moving-average (shadow) values into the model variables
        variable_averages = tf.train.ExponentialMovingAverage(mnist_train.MOVING_AVERAGE_DECAY)
        print(variable_averages.name)
        variable_to_restore = variable_averages.variables_to_restore()
        saver = tf.train.Saver(variable_to_restore)

        with tf.Session() as sess:
            ckpt = tf.train.get_checkpoint_state(mnist_train.MODEL_SAVE_PATH)
            if ckpt and ckpt.model_checkpoint_path:
                saver.restore(sess, ckpt.model_checkpoint_path)
                # The step count is the suffix of the checkpoint name, e.g. model.ckpt-1000
                global_step = ckpt.model_checkpoint_path.split('/')[-1].split('-')[-1]
                accuracy_score = sess.run(accuracy, feed_dict=validate_feed)
                print("After %s training step(s), validation accuracy = %f" % (global_step, accuracy_score))
            else:
                print("No checkpoint file found")


def main(argv=None):
    mnist = input_data.read_data_sets("../dataset/mnist_data", one_hot=True)
    test(mnist)


if __name__ == '__main__':
    tf.app.run()
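The test script evaluates the moving-average ("shadow") copies of the weights rather than the raw trained values. A plain-Python sketch of the update rule documented for `tf.train.ExponentialMovingAverage` when a step counter is passed; the function name `ema_update` and the starting value 0.0 are illustrative (TensorFlow initializes each shadow variable to the variable's own value):

```python
def ema_update(shadow, value, decay=0.99, num_updates=None):
    """One moving-average step: shadow = decay * shadow + (1 - decay) * value."""
    if num_updates is not None:
        # With a step counter, the effective decay starts small and grows.
        decay = min(decay, (1 + num_updates) / (10 + num_updates))
    return decay * shadow + (1 - decay) * value


shadow = 0.0
for step in range(3):
    shadow = ema_update(shadow, value=1.0, num_updates=step)
    print(step, round(shadow, 4))
```

Early in training the average follows the variable closely; as the step count grows, the decay approaches 0.99 and the shadow value changes more slowly.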
