需求描述

最近需要将mysql数据库的某个表中的一个状态信息统计结果并实时显示到数据大屏上

思路

首先使用flinkcdc监听mysql中的特定表

然后编写一定的处理逻辑得出结果数据

将结果数据通过flink写入到mysql的结果表中

技术选型

- java 1.8

- mysql 8.0.28

- flink 1.11.3

- flink cdc 1.1.0

实现

数据

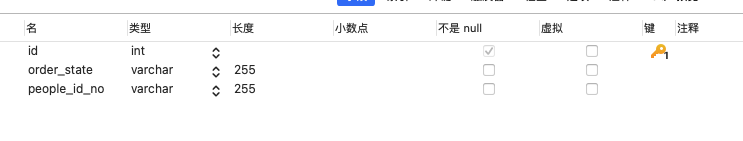

order_info表(需要处理的数据)

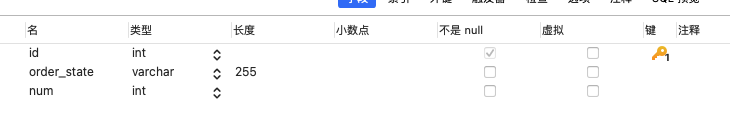

flink_count表(结果数据)

java代码

pom文件

这里面没有必要这么多

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.antg</groupId>

<artifactId>TestFlinkCDC</artifactId>

<version>1.0-SNAPSHOT</version>

<properties>

<java.version>1.8</java.version>

<flink.version>1.11.3</flink.version>

<scala.binary.version>2.11</scala.binary.version>

<log4j.version>2.17.0</log4j.version>

</properties>

<dependencies>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>8.0.22</version>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<version>1.18.16</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-jdbc_2.11</artifactId>

<version>1.11.3</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-slf4j-impl</artifactId>

<version>${log4j.version}</version>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-api</artifactId>

<version>${log4j.version}</version>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-core</artifactId>

<version>${log4j.version}</version>

<scope>runtime</scope>

</dependency>

<!-- https://mvnrepository.com/artifact/org.elasticsearch.client/transport -->

<dependency>

<groupId>com.alibaba.ververica</groupId>

<!-- add the dependency matching your database -->

<artifactId>flink-connector-mysql-cdc</artifactId>

<version>1.1.0</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-common</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-api-java-bridge_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-core</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<!-- https://mvnrepository.com/artifact/com.google.guava/guava -->

<dependency>

<groupId>com.google.guava</groupId>

<artifactId>guava</artifactId>

<version>30.1-jre</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.mybatis.spring.boot/mybatis-spring-boot-starter -->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid</artifactId>

<version>1.1.0</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.47</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-hive_2.11</artifactId>

<version>1.11.3</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-sql-connector-hive-1.2.2_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner-blink_2.11</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-parquet_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-exec</artifactId>

<version>1.2.2</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-jdbc</artifactId>

<version>1.2.2</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.orc</groupId>

<artifactId>orc-core</artifactId>

<version>1.4.3</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-orc_2.11</artifactId>

<version>1.11.3</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.mybatis/mybatis -->

<dependency>

<groupId>org.mybatis</groupId>

<artifactId>mybatis</artifactId>

<version>3.4.6</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.7.1</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.7.1</version>

</dependency>

</dependencies>

</project>

目录结构

Demo01.java

package com.antg.main;

import com.alibaba.fastjson.JSON;

import com.alibaba.fastjson.JSONObject;

import com.alibaba.ververica.cdc.connectors.mysql.MySQLSource;

import com.antg.entity.FlinkCount;

import com.antg.sink.OutputSkin;

import com.antg.deserialization.MyDeserialization;

import org.apache.flink.api.common.functions.FlatMapFunction;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.source.SourceFunction;

import org.apache.flink.util.Collector;

public class Demo01 {

public static void main(String[] args) throws Exception {

//创建监听的数据源

SourceFunction<String> sourceFunction = MySQLSource.<String>builder()

.hostname("127.0.0.1")

.port(3306)

// .databaseList("test") // 监听test数据库下的所有表

.tableList("test.order_info") //这里一定要带库名,因为上面可以同时监听多个库,会有不同库相同表名的情况

.username("root")

.password("123456")

.deserializer(new MyDeserialization()) // 将SourceRecord 转换成 String形式

.build();

//获取运行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

//开始监听数据,并将动态数据变化打印到屏幕中

DataStreamSource<String> ds = env.addSource(sourceFunction);

SingleOutputStreamOperator<FlinkCount> res = ds

.flatMap(new FlatMapFunction<String, Tuple2<String, Long>>() {

@Override

public void flatMap(String line, Collector<Tuple2<String, Long>> out) throws Exception {

//将字符串转json

JSONObject item = (JSONObject) JSON.parse(line);

String before = item.getJSONObject("before").getString("order_state");

String after = item.getJSONObject("after").getString("order_state");

//创建和读取情况

if (item.getString("op").equals("create") || item.getString("op").equals("read")) {

out.collect(new Tuple2<String, Long>(after, 1L));

//更新情况

} else if (item.getString("op").equals("update")) {

//如果是别的字段做更新操作数据不变

if(before.equals(after)){

return;

}

if (after.equals("a")) {

out.collect(new Tuple2<String, Long>("a", 1L));

out.collect(new Tuple2<String, Long>("b", -1L));

} else {

out.collect(new Tuple2<String, Long>("a", -1L));

out.collect(new Tuple2<String, Long>("b", 1L));

}

//删除数据情况

} else if (item.getString("op").equals("delete")) {

out.collect(new Tuple2<String, Long>(before, -1L));

}

//还可以加别的情况,比如delete_flag标志做假删除

}

})

.keyBy(0)

.sum(1)

.map(new MapFunction<Tuple2<String, Long>, FlinkCount>() {

@Override

public FlinkCount map(Tuple2<String, Long> value) throws Exception {

FlinkCount res = new FlinkCount();

res.setOrderState(value.f0);

res.setCount(value.f1);

return res;

}

});

//向数据库输出数据

res.addSink(new OutputSkin());

//ds.print();

//开始执行任务

env.execute();

}

}

MyDeserialization.java

package com.antg.deserialization;

import com.alibaba.fastjson.JSONObject;

import com.alibaba.ververica.cdc.debezium.DebeziumDeserializationSchema;

import io.debezium.data.Envelope;

import org.apache.flink.api.common.typeinfo.TypeInformation;

import org.apache.flink.util.Collector;

import org.apache.kafka.connect.data.Field;

import org.apache.kafka.connect.data.Struct;

import org.apache.kafka.connect.source.SourceRecord;

public class MyDeserialization implements DebeziumDeserializationSchema<String> {

@Override

public void deserialize(SourceRecord sourceRecord, Collector<String> collector) throws Exception {

//获取主题信息,包含着数据库和表名

String topic = sourceRecord.topic();

String[] arr = topic.split("\\.");

String db = arr[1];

String tableName = arr[2];

//获取操作类型 READ DELETE UPDATE CREATE

Envelope.Operation operation =

Envelope.operationFor(sourceRecord);

//获取值信息并转换为 Struct 类型

Struct value = (Struct) sourceRecord.value();

//得到变化之前的数据

Struct before = value.getStruct("before");

JSONObject beforeJson = new JSONObject();

if(before != null){

for (Field field : before.schema().fields()) {

Object o = before.get(field);

beforeJson.put(field.name(), o);

}

}

//得到变化后的数据

Struct after = value.getStruct("after");

//创建 JSON 格式的数据对象用于存储数据信息

JSONObject afterJson = new JSONObject();

if(after != null){

for (Field field : after.schema().fields()) {

Object o = after.get(field);

afterJson.put(field.name(), o);

}

}

//创建 JSON 格式的数据对象,用于封装最终返回值数据信息

JSONObject result = new JSONObject();

result.put("op", operation.toString().toLowerCase());

result.put("before", beforeJson);

result.put("after", afterJson);

result.put("db", db);

result.put("table", tableName);

//发送数据给下游

collector.collect(result.toJSONString());

}

@Override

public TypeInformation<String> getProducedType() {

return TypeInformation.of(String.class);

}

}

OutputSkin.java

package com.antg.sink;

import com.antg.entity.FlinkCount;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.PreparedStatement;

public class OutputSkin extends RichSinkFunction<FlinkCount> {

Connection connection;

PreparedStatement pstmt;

//获取数据库链接信息

private Connection getConnection(){

Connection conn = null;

try{

Class.forName("com.mysql.jdbc.Driver");//将mysql驱动注册到DriverManager中去

String url = "jdbc:mysql://localhost:3306/test";//数据库路径

conn = DriverManager.getConnection(url,"root","123456");//数据库链接信息

}catch (Exception e){

e.printStackTrace();

}

return conn;

}

@Override

public void open(Configuration parameters) throws Exception {

super.open(parameters);

connection = getConnection();

String sql = "update flink_count set num=? where order_state=?";

pstmt = connection.prepareStatement(sql);

}

//每条记录插入时调用一次

@Override

public void invoke(FlinkCount value, Context context) throws Exception {

//为前面的占位符赋值

pstmt.setString(2,value.getOrderState());

pstmt.setLong(1,value.getCount());

pstmt.executeUpdate();

}

@Override

public void close() throws Exception {

super.close();

if(pstmt != null){

pstmt.close();

}

if (connection != null){

connection.close();

}

}

}

FlinkCount.java

package com.antg.entity;

import lombok.Data;

@Data

public class FlinkCount {

private String orderState;

private Long count;

}

实现效果

当order_info表中的order_state数据发送了变化的时候flink_count表中的数据会动态的变化

比如新加了一条数据,那么结果表中也会相应的累计到对于的状态上

如果删掉了一条数据,结果表会发生变化

如果是a状态变成了b或者b变成了a,结果表也会发送变化

但是如果是别的字段发生变化,结果表不变

总结

flinkcdc初体验,很厉害的一个组件,有空研究一下底层的实现逻辑

以上代码有问题欢迎评论区留言

3万+

3万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?