The data source is taken from the 尚硅谷 course materials; send me a private message if you need it.

Core code

import org.apache.flink.api.common.functions.AggregateFunction

import org.apache.flink.api.common.serialization.SimpleStringSchema

import org.apache.flink.api.common.state.{ValueState, ValueStateDescriptor}

import org.apache.flink.streaming.api.TimeCharacteristic

import org.apache.flink.streaming.api.functions.KeyedProcessFunction

import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor

import org.apache.flink.streaming.api.scala._

import org.apache.flink.streaming.api.scala.function.WindowFunction

import org.apache.flink.streaming.api.windowing.time.Time

import org.apache.flink.streaming.api.windowing.windows.TimeWindow

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer

import org.apache.flink.streaming.connectors.redis.RedisSink

import org.apache.flink.streaming.connectors.redis.common.config.FlinkJedisPoolConfig

import org.apache.flink.streaming.connectors.redis.common.mapper.{RedisCommand, RedisCommandDescription, RedisMapper}

import org.apache.flink.util.Collector

import java.text.SimpleDateFormat

import java.util.Properties

// Sample input records, one per line

/*

83.149.9.216 - - 17/05/2015:10:05:03 +0000 GET /presentations/logstash-monitorama-2013/images/kibana-search.png

83.149.9.216 - - 17/05/2015:10:05:43 +0000 GET /presentations/logstash-monitorama-2013/images/kibana-dashboard3.png

83.149.9.216 - - 17/05/2015:10:05:47 +0000 GET /presentations/logstash-monitorama-2013/plugin/highlight/highlight.js

*/

// Case class for a single page-view (PV) record

case class PVItem(url: String, timestamp: Long)

// Case class emitted per url and window; the stream is later keyed by WindowEnd

case class PVWindowEnd(url: String, WindowEnd: Long, Count: Long)

// Goal: every 5 minutes, output the number of page views in that 5-minute window

object PageView {

def main(args: Array[String]): Unit = {

// Create the execution environment

val env = StreamExecutionEnvironment.getExecutionEnvironment

// Use event time as the time characteristic

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)

// Alternative source: consume the data from Kafka

/*

// Configure Kafka

val properties = new Properties()

properties.put("bootstrap.servers", "Kafka broker address (host:port)")

// Consume data from Kafka

val inputStream = env.addSource(new FlinkKafkaConsumer[String]("topic to subscribe to", new SimpleStringSchema(), properties))

*/

// Read the data file from the resources directory

val inputStream: DataStream[String] = env.readTextFile(getClass.getResource("apache.log").getPath)

// Split each line on spaces and wrap it in the case class; the data is out of order, so assign timestamps and let the watermark lag by 30 seconds

val dataStream = inputStream

.map(data=>{

val arr = data.split(" ")

val timestamp = new SimpleDateFormat("dd/MM/yyyy:HH:mm:ss").parse(arr(3)).getTime

// (url: String, timestamp: Long)

PVItem(arr(6), timestamp)

}).assignTimestampsAndWatermarks(new BoundedOutOfOrdernessTimestampExtractor[PVItem](Time.seconds(30)) {

override def extractTimestamp(t: PVItem): Long = t.timestamp

})

// Redis connection configuration

val conf = new FlinkJedisPoolConfig.Builder().setHost("localhost").setPort(6379).build()

dataStream

.keyBy(_.url) // key by url so the counting is spread across parallel partitions

.timeWindow(Time.minutes(5)) // 5-minute tumbling window

.aggregate(new CountAgg(), new PVResultProcess()) // pre-aggregate the PV count, then the window function emits PVWindowEnd(url: String, WindowEnd: Long, Count: Long)

.keyBy(_.WindowEnd) // regroup by WindowEnd

.process(new ResultProcess()) // emit one (time: String, count: String) tuple per window

.addSink(new RedisSink[(String, String)](conf, new MyPVRedisMapper())) // RedisSink with a custom RedisMapper writes the result to Redis

// Run the job

env.execute()

}

}

// RedisMapper implementation that writes the results into Redis

class MyPVRedisMapper() extends RedisMapper[(String, String)]{

// Use the HSET command on the hash named "myPV"; RedisCommand also offers LPUSH, RPUSH, SADD, SET, PFADD, PUBLISH, ZADD, ZREM

override def getCommandDescription: RedisCommandDescription = new RedisCommandDescription(RedisCommand.HSET, "myPV")

// The hash field is the first tuple element: the timer timestamp, i.e. windowEnd + 1 ms

override def getKeyFromData(t: (String, String)): String = t._1

// The hash value is the second tuple element: the count

override def getValueFromData(t: (String, String)):String = t._2

}

// Incremental pre-aggregation: counts the records in each window

class CountAgg() extends AggregateFunction[PVItem, Long, Long]{

// Initial accumulator value

override def createAccumulator(): Long = 0L

// Called once for every incoming record

override def add(in: PVItem, acc: Long): Long = acc+1

// Return the final count

override def getResult(acc: Long): Long = acc

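// Merge two accumulators; only needed for merging windows such as session windows, so it is not exercised by the tumbling window used here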

override def merge(acc: Long, acc1: Long): Long = acc+acc1

}

// Takes the pre-aggregated count, reads windowEnd from the window, and emits a PVWindowEnd

class PVResultProcess() extends WindowFunction[Long, PVWindowEnd, String, TimeWindow]{

override def apply(key: String, window: TimeWindow, input: Iterable[Long], out:Collector[PVWindowEnd]): Unit = {

out.collect(PVWindowEnd(key, window.getEnd, input.head))

}

}

// After keying by windowEnd, accumulate the counts of all records that share the same windowEnd in keyed state

class ResultProcess extends KeyedProcessFunction[Long, PVWindowEnd, (String, String)]{

// Lazily initialized value state holding the running total

lazy val count: ValueState[Long] = getRuntimeContext.getState(new ValueStateDescriptor[Long]("count", classOf[Long]))

// Called once for every incoming record

override def processElement(i: PVWindowEnd, context: KeyedProcessFunction[Long, PVWindowEnd,(String, String)]#Context, collector: Collector[(String, String)]): Unit = {

// Add this record's Count to the count state

count.update(count.value()+i.Count)

// Register an event-time timer that fires 1 ms after the window closes

context.timerService().registerEventTimeTimer(i.WindowEnd+1)

}

// Called when the timer fires

override def onTimer(timestamp: Long, ctx:KeyedProcessFunction[Long, PVWindowEnd, (String, String)]#OnTimerContext, out: Collector[(String, String)]): Unit = {

// Emit the (String, String) tuple to be stored in Redis; timestamp is the timer time, i.e. windowEnd + 1 ms

out.collect((timestamp.toString, count.value().toString))

// Clear the state

count.clear()

}

}
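
The `map` step in `main` drops the `+0000` field, so `SimpleDateFormat` interprets the time in the JVM's default time zone, and a malformed line would throw inside the job. A minimal sketch of a more defensive parser follows. It assumes the line layout matches the sample records in the comment above; `LogParser`, `parseLine`, and `ParsedHit` are hypothetical names used only for illustration and are not part of the original job.

```scala
import java.text.SimpleDateFormat
import scala.util.Try

// Hypothetical stand-in for PVItem so that the sketch runs on its own
case class ParsedHit(url: String, timestamp: Long)

object LogParser {
  // Returns None for lines with too few fields or an unparsable date
  def parseLine(line: String): Option[ParsedHit] = {
    val arr = line.split(" ")
    if (arr.length < 7) None
    else Try {
      // SimpleDateFormat is not thread-safe, so build one per call
      val fmt = new SimpleDateFormat("dd/MM/yyyy:HH:mm:ss Z")
      // arr(3) is the date-time, arr(4) is the UTC offset such as +0000
      val ts = fmt.parse(arr(3) + " " + arr(4)).getTime
      ParsedHit(arr(6), ts)
    }.toOption
  }

  def main(args: Array[String]): Unit = {
    val sample = "83.149.9.216 - - 17/05/2015:10:05:03 +0000 GET /presentations/logstash-monitorama-2013/images/kibana-search.png"
    println(parseLine(sample))           // a parsed record
    println(parseLine("malformed line")) // None
  }
}
```

In the job, one possible way to use such a parser is to replace `map` with `flatMap` so that unparsable lines are skipped instead of failing the pipeline.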

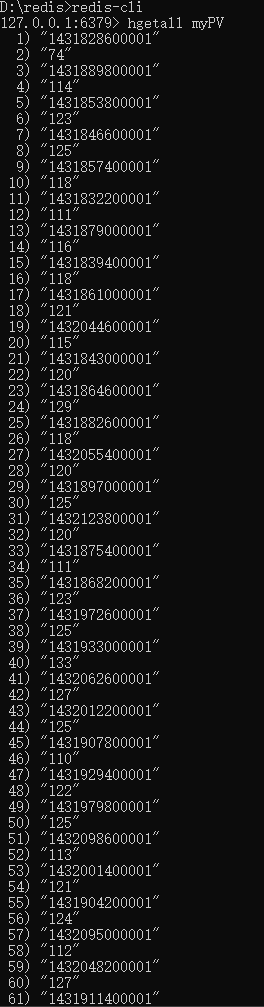

Redis query result

Dependencies
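
Each entry in the `myPV` hash is the result of a two-stage aggregation: first a count per `(url, window)`, then a sum over all URLs that share the same window end. The plain-Scala sketch below mimics that logic on an in-memory list, without Flink, watermarks, or late data. It assumes non-negative timestamps and 5-minute tumbling windows aligned to the epoch; `WindowSketch`, `Hit`, `windowEnd`, and `pvPerWindow` are hypothetical names for illustration only.

```scala
object WindowSketch {
  // Hypothetical stand-in for PVItem
  case class Hit(url: String, timestamp: Long)

  val windowSizeMs: Long = 5 * 60 * 1000L

  // Exclusive end of the epoch-aligned tumbling window that contains ts
  def windowEnd(ts: Long): Long = ts - (ts % windowSizeMs) + windowSizeMs

  // Stage 1: count per (url, windowEnd); stage 2: sum the counts per windowEnd
  def pvPerWindow(hits: Seq[Hit]): Map[Long, Long] = {
    val perUrl: Map[(String, Long), Long] =
      hits.groupBy(h => (h.url, windowEnd(h.timestamp)))
        .map { case (k, v) => k -> v.size.toLong }
    perUrl.groupBy { case ((_, end), _) => end }
      .map { case (end, counts) => end -> counts.values.sum }
  }

  def main(args: Array[String]): Unit = {
    val hits = Seq(Hit("/a", 1000L), Hit("/b", 2000L), Hit("/a", 299999L), Hit("/a", 300000L))
    pvPerWindow(hits).toSeq.sortBy(_._1).foreach { case (end, pv) => println(s"$end -> $pv") }
  }
}
```

Note that the job itself writes the timer timestamp as the hash field, so the fields stored in Redis are one millisecond later than the window ends computed here.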

<dependencies>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka_2.11</artifactId>

<version>1.10.1</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-scala_2.11</artifactId>

<version>1.10.2</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-scala_2.11</artifactId>

<version>1.10.2</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka-0.11_2.11</artifactId>

<version>1.10.2</version>

</dependency>

<dependency>

<groupId>org.apache.bahir</groupId>

<artifactId>flink-connector-redis_2.11</artifactId>

<version>1.0</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>8.0.25</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-statebackend-rocksdb_2.11</artifactId>

<version>1.10.1</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner_2.11</artifactId>

<version>1.10.1</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner-blink_2.11</artifactId>

<version>1.10.1</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-api-scala-bridge_2.11</artifactId>

<version>1.10.1</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-csv</artifactId>

<version>1.10.1</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>net.alchim31.maven</groupId>

<artifactId>scala-maven-plugin</artifactId>

<version>3.4.6</version>

<executions>

<execution>

<goals>

<goal>compile</goal>

</goals>

</execution>

</executions>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-assembly-plugin</artifactId>

<version>3.0.0</version>

<configuration>

<descriptorRefs>

<descriptorRef>jar-with-dependencies</descriptorRef>

</descriptorRefs>

</configuration>

<executions>

<execution>

<id>make-assembly</id>

<phase>package</phase>

<goals>

<goal>single</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>
