Preface

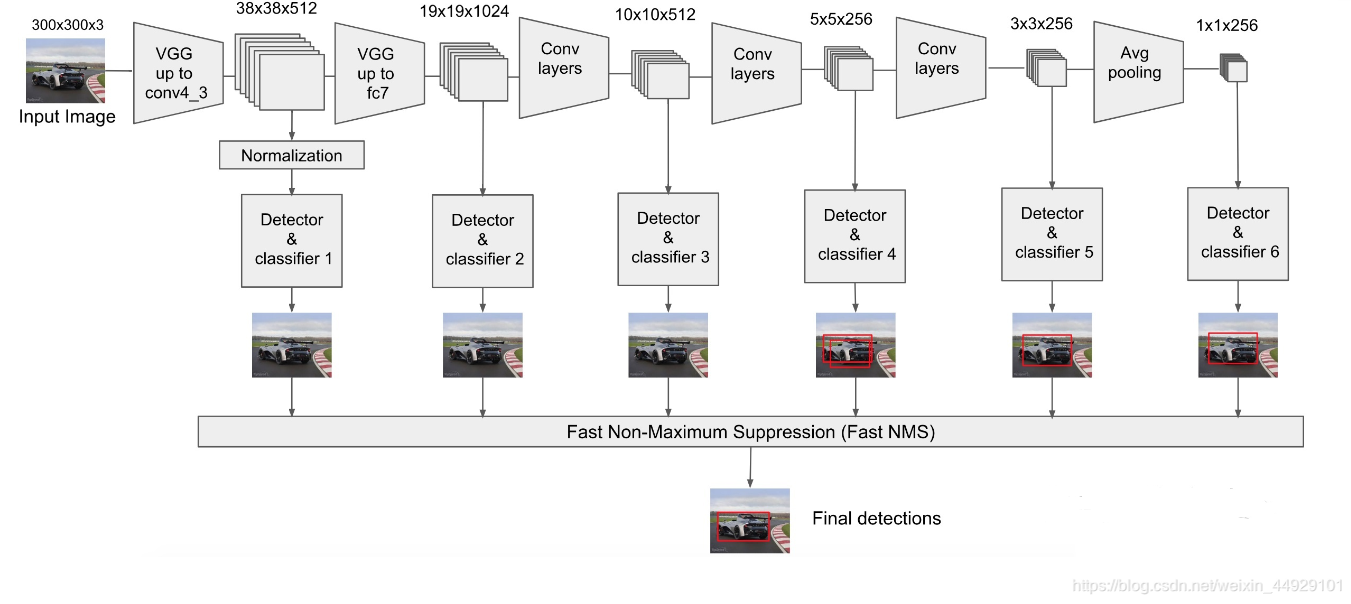

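I wrote up the anchor construction part this morning, went to a class, and am back at it this afternoon. This post covers how the SSD network is built. If you want to see the SSD model structure, the principles post I wrote earlier has a structure diagram, and I wasn't going to copy it here. Well, never mind, I've brought it over after all.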

ssd_model_fn()

    def ssd_model_fn(features, labels, mode, params):
        """model_fn for SSD to be used with our Estimator."""
        shape = labels['shape']
        loc_targets = labels['loc_targets']
        cls_targets = labels['cls_targets']
        match_scores = labels['match_scores']

        global global_anchor_info
        decode_fn = global_anchor_info['decode_fn']
        num_anchors_per_layer = global_anchor_info['num_anchors_per_layer']
        all_num_anchors_depth = global_anchor_info['all_num_anchors_depth']

        # bboxes_pred = decode_fn(loc_targets[0])
        # bboxes_pred = [tf.reshape(preds, [-1, 4]) for preds in bboxes_pred]
        # bboxes_pred = tf.concat(bboxes_pred, axis=0)
        # save_image_op = tf.py_func(save_image_with_bbox,
        #                         [ssd_preprocessing.unwhiten_image(features[0]),
        #                         tf.clip_by_value(cls_targets[0], 0, tf.int64.max),
        #                         match_scores[0],
        #                         bboxes_pred],
        #                         tf.int64, stateful=True)
        # with tf.control_dependencies([save_image_op]):

        #print(all_num_anchors_depth)
        with tf.variable_scope(params['model_scope'], default_name=None, values=[features], reuse=tf.AUTO_REUSE):
            backbone = ssd_net.VGG16Backbone(params['data_format'])
            feature_layers = backbone.forward(features, training=(mode == tf.estimator.ModeKeys.TRAIN))
            #print(feature_layers)
            location_pred, cls_pred = ssd_net.multibox_head(feature_layers, params['num_classes'], all_num_anchors_depth, data_format=params['data_format'])

            if params['data_format'] == 'channels_first':
                cls_pred = [tf.transpose(pred, [0, 2, 3, 1]) for pred in cls_pred]
                location_pred = [tf.transpose(pred, [0, 2, 3, 1]) for pred in location_pred]

            cls_pred = [tf.reshape(pred, [tf.shape(features)[0], -1, params['num_classes']]) for pred in cls_pred]
            location_pred = [tf.reshape(pred, [tf.shape(features)[0], -1, 4]) for pred in location_pred]

            cls_pred = tf.concat(cls_pred, axis=1)
            location_pred = tf.concat(location_pred, axis=1)

            cls_pred = tf.reshape(cls_pred, [-1, params['num_classes']])
            location_pred = tf.reshape(location_pred, [-1, 4])

        with tf.device('/cpu:0'):
            with tf.control_dependencies([cls_pred, location_pred]):
                with tf.name_scope('post_forward'):
                    #bboxes_pred = decode_fn(location_pred)
                    bboxes_pred = tf.map_fn(lambda _preds : decode_fn(_preds),
                                            tf.reshape(location_pred, [tf.shape(features)[0], -1, 4]),
                                            dtype=[tf.float32] * len(num_anchors_per_layer), back_prop=False)
                    #cls_targets = tf.Print(cls_targets, [tf.shape(bboxes_pred[0]),tf.shape(bboxes_pred[1]),tf.shape(bboxes_pred[2]),tf.shape(bboxes_pred[3])])
                    bboxes_pred = [tf.reshape(preds, [-1, 4]) for preds in bboxes_pred]
                    bboxes_pred = tf.concat(bboxes_pred, axis=0)

                    flaten_cls_targets = tf.reshape(cls_targets, [-1])
                    flaten_match_scores = tf.reshape(match_scores, [-1])
                    flaten_loc_targets = tf.reshape(loc_targets, [-1, 4])

                    # each positive examples has one label
                    positive_mask = flaten_cls_targets > 0
                    n_positives = tf.count_nonzero(positive_mask)

                    batch_n_positives = tf.count_nonzero(cls_targets, -1)

                    batch_negtive_mask = tf.equal(cls_targets, 0)#tf.logical_and(tf.equal(cls_targets, 0), match_scores > 0.)
                    batch_n_negtives = tf.count_nonzero(batch_negtive_mask, -1)

                    batch_n_neg_select = tf.cast(params['negative_ratio'] * tf.cast(batch_n_positives, tf.float32), tf.int32)
                    batch_n_neg_select = tf.minimum(batch_n_neg_select, tf.cast(batch_n_negtives, tf.int32))

                    # hard negative mining for classification
                    predictions_for_bg = tf.nn.softmax(tf.reshape(cls_pred, [tf.shape(features)[0], -1, params['num_classes']]))[:, :, 0]
                    prob_for_negtives = tf.where(batch_negtive_mask,
                                                 0. - predictions_for_bg,
                                                 # ignore all the positives
                                                 0. - tf.ones_like(predictions_for_bg))
                    topk_prob_for_bg, _ = tf.nn.top_k(prob_for_negtives, k=tf.shape(prob_for_negtives)[1])
                    score_at_k = tf.gather_nd(topk_prob_for_bg, tf.stack([tf.range(tf.shape(features)[0]), batch_n_neg_select - 1], axis=-1))

                    selected_neg_mask = prob_for_negtives >= tf.expand_dims(score_at_k, axis=-1)

                    # include both selected negtive and all positive examples
                    final_mask = tf.stop_gradient(tf.logical_or(tf.reshape(tf.logical_and(batch_negtive_mask, selected_neg_mask), [-1]), positive_mask))
                    total_examples = tf.count_nonzero(final_mask)

                    cls_pred = tf.boolean_mask(cls_pred, final_mask)
                    location_pred = tf.boolean_mask(location_pred, tf.stop_gradient(positive_mask))
                    flaten_cls_targets = tf.boolean_mask(tf.clip_by_value(flaten_cls_targets, 0, params['num_classes']), final_mask)
                    flaten_loc_targets = tf.stop_gradient(tf.boolean_mask(flaten_loc_targets, positive_mask))

                    predictions = {
                        'classes': tf.argmax(cls_pred, axis=-1),
                        'probabilities': tf.reduce_max(tf.nn.softmax(cls_pred, name='softmax_tensor'), axis=-1),
                        'loc_predict': bboxes_pred }

                    cls_accuracy = tf.metrics.accuracy(flaten_cls_targets, predictions['classes'])
                    metrics = {'cls_accuracy': cls_accuracy}

                    # Create a tensor named train_accuracy for logging purposes.
                    tf.identity(cls_accuracy[1], name='cls_accuracy')
                    tf.summary.scalar('cls_accuracy', cls_accuracy[1])

        if mode == tf.estimator.ModeKeys.PREDICT:
            return tf.estimator.EstimatorSpec(mode=mode, predictions=predictions)

        # Calculate loss, which includes softmax cross entropy and L2 regularization.
        #cross_entropy = tf.cond(n_positives > 0, lambda: tf.losses.sparse_softmax_cross_entropy(labels=flaten_cls_targets, logits=cls_pred), lambda: 0.)# * (params['negative_ratio'] + 1.)
        #flaten_cls_targets=tf.Print(flaten_cls_targets, [flaten_loc_targets],summarize=50000)
        cross_entropy = tf.losses.sparse_softmax_cross_entropy(labels=flaten_cls_targets, logits=cls_pred) * (params['negative_ratio'] + 1.)
        # Create a tensor named cross_entropy for logging purposes.
        tf.identity(cross_entropy, name='cross_entropy_loss')
        tf.summary.scalar('cross_entropy_loss', cross_entropy)

        #loc_loss = tf.cond(n_positives > 0, lambda: modified_smooth_l1(location_pred, tf.stop_gradient(flaten_loc_targets), sigma=1.), lambda: tf.zeros_like(location_pred))
        loc_loss = modified_smooth_l1(location_pred, flaten_loc_targets, sigma=1.)
        #loc_loss = modified_smooth_l1(location_pred, tf.stop_gradient(gtargets))
        loc_loss = tf.reduce_mean(tf.reduce_sum(loc_loss, axis=-1), name='location_loss')
        tf.summary.scalar('location_loss', loc_loss)
        tf.losses.add_loss(loc_loss)

        l2_loss_vars = []
        for trainable_var in tf.trainable_variables():
            if '_bn' not in trainable_var.name:
                if 'conv4_3_scale' not in trainable_var.name:
                    l2_loss_vars.append(tf.nn.l2_loss(trainable_var))
                else:
                    l2_loss_vars.append(tf.nn.l2_loss(trainable_var) * 0.1)
        # Add weight decay to the loss. We exclude the batch norm variables because
        # doing so leads to a small improvement in accuracy.
        total_loss = tf.add(cross_entropy + loc_loss, tf.multiply(params['weight_decay'], tf.add_n(l2_loss_vars), name='l2_loss'), name='total_loss')

        if mode == tf.estimator.ModeKeys.TRAIN:
            global_step = tf.train.get_or_create_global_step()

            lr_values = [params['learning_rate'] * decay for decay in params['lr_decay_factors']]
            learning_rate = tf.train.piecewise_constant(tf.cast(global_step, tf.int32),
                                                        [int(_) for _ in params['decay_boundaries']],
                                                        lr_values)
            truncated_learning_rate = tf.maximum(learning_rate, tf.constant(params['end_learning_rate'], dtype=learning_rate.dtype), name='learning_rate')
            # Create a tensor named learning_rate for logging purposes.
            tf.summary.scalar('learning_rate', truncated_learning_rate)

            optimizer = tf.train.MomentumOptimizer(learning_rate=truncated_learning_rate,
                                                   momentum=params['momentum'])
            optimizer = tf.contrib.estimator.TowerOptimizer(optimizer)

            # Batch norm requires update_ops to be added as a train_op dependency.
            update_ops = tf.get_collection(tf.GraphKeys.UPDATE_OPS)
            with tf.control_dependencies(update_ops):
                train_op = optimizer.minimize(total_loss, global_step)
        else:
            train_op = None

        return tf.estimator.EstimatorSpec(
            mode=mode,
            predictions=predictions,
            loss=total_loss,
            train_op=train_op,
            eval_metric_ops=metrics,
            scaffold=tf.train.Scaffold(init_fn=get_init_fn()))

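Now let's go through it statement by statement:

    """model_fn for SSD to be used with our Estimator."""
    shape = labels['shape']
    loc_targets = labels['loc_targets']
    cls_targets = labels['cls_targets']
    match_scores = labels['match_scores']

    global global_anchor_info
    decode_fn = global_anchor_info['decode_fn']
    num_anchors_per_layer = global_anchor_info['num_anchors_per_layer']
    all_num_anchors_depth = global_anchor_info['all_num_anchors_depth']

This mirrors the end of the input_pipeline() function: the labels are returned from there, and global_anchor_info is a global variable that is filled in at the end of input_pipeline() and read back here. What each item stands for: loc_targets are the box regression targets for each anchor (the encoded offsets the network should learn, not predictions); cls_targets are the target class of each anchor; match_scores are the matching scores between anchors and ground-truth boxes; decode_fn converts offsets into ymin, xmin, ymax, xmax; num_anchors_per_layer is the number of anchors on each feature map; all_num_anchors_depth is the number of anchors at each pixel of a feature map.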

    with tf.variable_scope(params['model_scope'], default_name=None, values=[features], reuse=tf.AUTO_REUSE):
        backbone = ssd_net.VGG16Backbone(params['data_format'])
        feature_layers = backbone.forward(features, training=(mode == tf.estimator.ModeKeys.TRAIN))
        #print(feature_layers)
        location_pred, cls_pred = ssd_net.multibox_head(feature_layers, params['num_classes'], all_num_anchors_depth, data_format=params['data_format'])

        if params['data_format'] == 'channels_first':
            cls_pred = [tf.transpose(pred, [0, 2, 3, 1]) for pred in cls_pred]
            location_pred = [tf.transpose(pred, [0, 2, 3, 1]) for pred in location_pred]

        cls_pred = [tf.reshape(pred, [tf.shape(features)[0], -1, params['num_classes']]) for pred in cls_pred]
        location_pred = [tf.reshape(pred, [tf.shape(features)[0], -1, 4]) for pred in location_pred]

        cls_pred = tf.concat(cls_pred, axis=1)
        location_pred = tf.concat(location_pred, axis=1)

        cls_pred = tf.reshape(cls_pred, [-1, params['num_classes']])
        location_pred = tf.reshape(location_pred, [-1, 4])

The first statement builds the VGG16 network that the data is fed into. It could be analyzed in detail, but almost every basic text detection algorithm uses VGG16 for its early feature extraction, so it is mature enough that I won't go over it again here.

backbone.forward runs the input through VGG16 plus the extra layers that follow it and returns 6 feature maps. For a batch size of 1 their shapes are (1,38,38,512), (1,19,19,1024), (1,10,10,512), (1,5,5,256), (1,3,3,256) and (1,1,1,256). multibox_head then produces the coordinate predictions and the class predictions for each feature map. These are transposed to channels-last if needed, reshaped to [batch, -1, num_classes] and [batch, -1, 4], concatenated across the 6 feature maps, and finally flattened into the cls_pred and location_pred used from here on.
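To make the reshape-and-concatenate step concrete, here is a small sketch in plain Python that only tracks shapes. The feature map sizes are the ones listed above. The anchors-per-pixel values [4, 6, 6, 6, 4, 4] and num_classes = 21 are assumptions borrowed from the usual SSD300/VOC setup; they are not read from this code.

```python
# Shape bookkeeping only; no TensorFlow needed.
# Spatial sizes of the six feature maps listed above.
feature_map_sizes = [(38, 38), (19, 19), (10, 10), (5, 5), (3, 3), (1, 1)]
# ASSUMPTION: anchors per pixel (all_num_anchors_depth) as in the usual SSD300 setup.
anchors_per_pixel = [4, 6, 6, 6, 4, 4]
# ASSUMPTION: 20 object classes plus background.
num_classes = 21
batch_size = 1


def flattened_shapes(batch, sizes, depths, last_dim):
    """Shape of each head output after reshaping to [batch, -1, last_dim]."""
    return [(batch, h * w * d, last_dim) for (h, w), d in zip(sizes, depths)]


cls_shapes = flattened_shapes(batch_size, feature_map_sizes, anchors_per_pixel, num_classes)
loc_shapes = flattened_shapes(batch_size, feature_map_sizes, anchors_per_pixel, 4)

# Concatenating along axis=1 adds up the anchor dimension over the six layers.
total_anchors = sum(shape[1] for shape in cls_shapes)

# The last reshape to [-1, num_classes] and [-1, 4] merges batch and anchor dims.
final_cls_shape = (batch_size * total_anchors, num_classes)
final_loc_shape = (batch_size * total_anchors, 4)

print(total_anchors)
print(final_cls_shape, final_loc_shape)
```

Under these assumptions every image ends up with one row per anchor in both tensors, which is what lets the later masks index cls_pred and location_pred with flattened targets.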

    with tf.device('/cpu:0'):

Sets the device to use: the ops inside this block are placed on the CPU.

    with tf.control_dependencies([cls_pred, location_pred]):

Controls the execution order: the ops inside this block run only after these two tensors have been computed.

    #bboxes_pred = decode_fn(location_pred)
    bboxes_pred = tf.map_fn(lambda _preds : decode_fn(_preds),
                            tf.reshape(location_pred, [tf.shape(features)[0], -1, 4]),
                            dtype=[tf.float32] * len(num_anchors_per_layer), back_prop=False)
    #cls_targets = tf.Print(cls_targets, [tf.shape(bboxes_pred[0]),tf.shape(bboxes_pred[1]),tf.shape(bboxes_pred[2]),tf.shape(bboxes_pred[3])])
    bboxes_pred = [tf.reshape(preds, [-1, 4]) for preds in bboxes_pred]
    bboxes_pred = tf.concat(bboxes_pred, axis=0)

    flaten_cls_targets = tf.reshape(cls_targets, [-1])
    flaten_match_scores = tf.reshape(match_scores, [-1])
    flaten_loc_targets = tf.reshape(loc_targets, [-1, 4])

The lambda declares a function that tf.map_fn then calls once per image; its job is to convert the predicted offsets into box coordinates. The operations after it are reshapes: everything is flattened by one dimension, so the batch and anchor dimensions are merged.
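As an illustration of what "offsets to coordinates" means, here is a minimal sketch of a center-size box decoder in plain Python. It is not the decode_fn from this code base: the (cy, cx, h, w) offset order and the scaling factors (0.1, 0.1, 0.2, 0.2) are assumptions based on the common SSD encoding, and the helper names are made up for this example. The real decode_fn also returns one tensor per feature layer, which the sketch skips.

```python
import math

# ASSUMPTION: center-size encoding with the commonly used prior scaling factors.
PRIOR_SCALING = (0.1, 0.1, 0.2, 0.2)


def decode_box(offsets, anchor, scaling=PRIOR_SCALING):
    """Turn (dcy, dcx, dh, dw) offsets into (ymin, xmin, ymax, xmax).

    anchor is (center_y, center_x, height, width).
    """
    dcy, dcx, dh, dw = offsets
    a_cy, a_cx, a_h, a_w = anchor
    # Shift the anchor center and rescale its size.
    cy = dcy * scaling[0] * a_h + a_cy
    cx = dcx * scaling[1] * a_w + a_cx
    h = math.exp(dh * scaling[2]) * a_h
    w = math.exp(dw * scaling[3]) * a_w
    # Convert center-size form to corner form.
    return (cy - h / 2.0, cx - w / 2.0, cy + h / 2.0, cx + w / 2.0)


def decode_batch(batch_offsets, anchors):
    """Decode every image separately, the role tf.map_fn plays above."""
    return [[decode_box(o, a) for o, a in zip(image_offsets, anchors)]
            for image_offsets in batch_offsets]


# One hypothetical anchor centered at (0.5, 0.5) with height 0.2 and width 0.4.
anchors = [(0.5, 0.5, 0.2, 0.4)]
unchanged = decode_batch([[(0.0, 0.0, 0.0, 0.0)]], anchors)[0][0]
shifted = decode_batch([[(1.0, 0.0, 0.0, 0.0)]], anchors)[0][0]
print(unchanged)
print(shifted)
```

With all offsets at zero the decoded box is simply the anchor in corner form, which is a handy sanity check for any decoder of this kind.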

    positive_mask = flaten_cls_targets > 0
    n_positives = tf.count_nonzero(positive_mask)

    batch_n_positives = tf.count_nonzero(cls_targets, -1)

This counts the actual positive samples: n_positives over the whole batch, and batch_n_positives for each image.

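    batch_negtive_mask = tf.equal(cls_targets, 0)#tf.logical_and(tf.equal(cls_targets, 0), match_scores > 0.)
    batch_n_negtives = tf.count_nonzero(batch_negtive_mask, -1)

    batch_n_neg_select = tf.cast(params['negative_ratio'] * tf.cast(batch_n_positives, tf.float32), tf.int32)
    batch_n_neg_select = tf.minimum(batch_n_neg_select, tf.cast(batch_n_negtives, tf.int32))

This counts the negative samples per image and then decides how many of them to select. The selection has to keep the positive-to-negative ratio at 1:3 (controlled by params['negative_ratio']), and it can never exceed the number of negatives that actually exist.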
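The counting logic for a single image can be sketched in a few lines of plain Python. The function name and the sample labels are made up for illustration, and negative_ratio = 3.0 is an assumption that matches the 1:3 ratio mentioned above.

```python
def negatives_to_select(cls_targets_per_image, negative_ratio=3.0):
    """How many negatives one image contributes to the loss."""
    # Mirrors tf.count_nonzero(cls_targets, -1): every nonzero label is counted.
    n_positives = sum(1 for label in cls_targets_per_image if label != 0)
    # Mirrors tf.equal(cls_targets, 0): label 0 is background.
    n_negatives = sum(1 for label in cls_targets_per_image if label == 0)
    # int() truncates, like the cast to tf.int32 does for non-negative values.
    wanted = int(negative_ratio * n_positives)
    # Never ask for more negatives than the image has.
    return min(wanted, n_negatives)


# Hypothetical labels: 0 = background, anything else = an object class.
plenty_of_negatives = [0, 0, 0, 0, 0, 0, 0, 0, 2, 5]
few_negatives = [0, 0, 3]

print(negatives_to_select(plenty_of_negatives))  # 2 positives ask for 6 negatives
print(negatives_to_select(few_negatives))        # capped at the 2 that exist
```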

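    predictions_for_bg = tf.nn.softmax(tf.reshape(cls_pred, [tf.shape(features)[0], -1, params['num_classes']]))[:, :, 0]

This takes the softmax probability of the background class (index 0) for every anchor. It is the score used to pick the subset of negative samples that will be trained on.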

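    prob_for_negtives = tf.where(batch_negtive_mask,
                                 0. - predictions_for_bg,
                                 # ignore all the positives
                                 0. - tf.ones_like(predictions_for_bg))

Negatives keep their negated background probability, and everything else is replaced with -1, which marks the part that takes no part in negative selection. Because the scores are negated, the negatives the network is least sure about (lowest background probability) rank highest.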

    final_mask = tf.stop_gradient(tf.logical_or(tf.reshape(tf.logical_and(batch_negtive_mask, selected_neg_mask), [-1]), positive_mask))
    total_examples = tf.count_nonzero(final_mask)

This filters out the final set of positive and negative samples.
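Putting the scoring, the top-k threshold (score_at_k in the full function) and the final mask together, hard negative mining for one image can be sketched in plain Python as follows. The function name and sample values are hypothetical, and the early return for n_neg_select == 0 is a choice made for this sketch rather than something taken from the TensorFlow code.

```python
def hard_negative_mask(cls_targets, bg_probs, n_neg_select):
    """Boolean mask over anchors: all positives plus the hardest negatives."""
    positive_mask = [label > 0 for label in cls_targets]
    negative_mask = [label == 0 for label in cls_targets]
    if n_neg_select == 0:
        # Sketch-only guard: with nothing to select, keep just the positives.
        return positive_mask

    # Negated background probability for negatives, -1 for everything else.
    scores = [-p if is_neg else -1.0 for p, is_neg in zip(bg_probs, negative_mask)]
    # Sort all scores in descending order (top_k with k = number of anchors).
    ranked = sorted(scores, reverse=True)
    # The score of the n_neg_select-th hardest negative acts as the threshold.
    score_at_k = ranked[n_neg_select - 1]
    selected = [s >= score_at_k for s in scores]

    # Keep selected negatives and all positives.
    return [(is_neg and sel) or is_pos
            for is_neg, sel, is_pos in zip(negative_mask, selected, positive_mask)]


# Hypothetical image with four background anchors and one object anchor.
cls_targets = [0, 0, 0, 0, 2]
bg_probs = [0.9, 0.2, 0.6, 0.1, 0.05]
mask = hard_negative_mask(cls_targets, bg_probs, n_neg_select=2)
print(mask)
```

The two negatives with the lowest background probability are kept, since those are the ones the classifier currently gets most wrong.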

    cls_pred = tf.boolean_mask(cls_pred, final_mask) # keep the entries of cls_pred where final_mask is True: class predictions
    location_pred = tf.boolean_mask(location_pred, tf.stop_gradient(positive_mask)) # keep the entries of location_pred where positive_mask is True: regressed boxes

This is just the familiar masking operation.

    cls_accuracy = tf.metrics.accuracy(flaten_cls_targets, predictions['classes'])

Computes the classification accuracy.

    cross_entropy = tf.losses.sparse_softmax_cross_entropy(labels=flaten_cls_targets, logits=cls_pred) * (params['negative_ratio'] + 1.)

Computes the cross-entropy loss and multiplies it by a weight, negative_ratio + 1.
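For reference, here is a plain-Python sketch of a mean sparse softmax cross-entropy followed by the same weighting. The function name, logits and labels are illustrative, and negative_ratio = 3.0 is an assumption. If every image really contributes negative_ratio negatives per positive, the batch holds (negative_ratio + 1) times as many examples as positives, so multiplying the mean by that factor amounts to dividing the summed loss by the number of positives. That reading is an inference, not something the code states.

```python
import math


def sparse_softmax_cross_entropy(logits, labels):
    """Mean over examples of -log(softmax(logits)[label])."""
    losses = []
    for row, label in zip(logits, labels):
        # Log-sum-exp with the max subtracted for numerical stability.
        row_max = max(row)
        log_sum_exp = row_max + math.log(sum(math.exp(x - row_max) for x in row))
        losses.append(log_sum_exp - row[label])
    return sum(losses) / len(losses)


# ASSUMPTION: three negatives are kept per positive.
negative_ratio = 3.0

# Hypothetical two-class logits: equal logits give a loss of log(2) per example.
logits = [[0.0, 0.0], [0.0, 0.0]]
labels = [0, 1]

mean_loss = sparse_softmax_cross_entropy(logits, labels)
weighted_loss = mean_loss * (negative_ratio + 1.0)
print(mean_loss, weighted_loss)
```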

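    loc_loss = modified_smooth_l1(location_pred, flaten_loc_targets, sigma=1.)
    #loc_loss = modified_smooth_l1(location_pred, tf.stop_gradient(gtargets))
    loc_loss = tf.reduce_mean(tf.reduce_sum(loc_loss, axis=-1), name='location_loss')

Computes the smooth L1 loss for box regression: the per-coordinate losses are summed over the 4 coordinates of each box and then averaged over the positive boxes.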
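A minimal sketch of the smooth L1 computation in plain Python, assuming modified_smooth_l1 follows the usual definition with a sigma parameter (quadratic below 1/sigma^2, linear above). The body of modified_smooth_l1 is not shown in this post, so treat the formula as an assumption; the reduction mirrors the reduce_sum over coordinates and reduce_mean over boxes above.

```python
def smooth_l1(pred, target, sigma=1.0):
    """Smooth L1 for one coordinate: quadratic near zero, linear further out."""
    sigma2 = sigma * sigma
    diff = abs(pred - target)
    if diff < 1.0 / sigma2:
        return 0.5 * sigma2 * diff * diff
    return diff - 0.5 / sigma2


def location_loss(pred_boxes, target_boxes, sigma=1.0):
    """Sum over the 4 coordinates of each box, then average over boxes."""
    per_box = [sum(smooth_l1(p, t, sigma) for p, t in zip(pred, target))
               for pred, target in zip(pred_boxes, target_boxes)]
    return sum(per_box) / len(per_box)


# Hypothetical offsets for two positive anchors.
pred_boxes = [[0.5, 0.0, 0.0, 2.0], [0.0, 0.0, 0.0, 0.0]]
target_boxes = [[0.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0]]

loss = location_loss(pred_boxes, target_boxes)
print(loss)
```

In the first box the 0.5 error falls in the quadratic region (0.125) and the 2.0 error in the linear region (1.5), so large errors are penalized less harshly than a plain squared loss would.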

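    total_loss = tf.add(cross_entropy + loc_loss, tf.multiply(params['weight_decay'], tf.add_n(l2_loss_vars), name='l2_loss'), name='total_loss')

Computes the total loss: the cross-entropy loss plus the localization loss plus the L2 regularization term scaled by weight_decay.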
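The L2 term is built from l2_loss_vars in the full function: batch norm variables are skipped and the conv4_3_scale variable is down-weighted by 0.1. Here is a plain-Python sketch of that bookkeeping; the variable names and values in the example are made up, and the weight_decay value is only for illustration.

```python
def l2_loss(values):
    """Same definition as tf.nn.l2_loss: sum of squares divided by 2."""
    return sum(v * v for v in values) / 2.0


def total_loss(cross_entropy, loc_loss, variables, weight_decay):
    """Classification + localization + weight-decayed L2 regularization."""
    l2_terms = []
    for name, values in variables.items():
        if '_bn' in name:
            continue  # batch norm variables are excluded from weight decay
        scale = 0.1 if 'conv4_3_scale' in name else 1.0
        l2_terms.append(l2_loss(values) * scale)
    return cross_entropy + loc_loss + weight_decay * sum(l2_terms)


# Hypothetical variables, flattened to plain lists.
variables = {
    'conv1/kernel': [1.0, 2.0],       # contributes (1 + 4) / 2 = 2.5
    'conv1_bn/gamma': [10.0],         # skipped
    'conv4_3_scale/weights': [2.0],   # contributes 2.0 * 0.1 = 0.2
}

loss = total_loss(cross_entropy=1.0, loc_loss=0.5, variables=variables, weight_decay=0.1)
print(loss)
```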

    if mode == tf.estimator.ModeKeys.TRAIN:
        global_step = tf.train.get_or_create_global_step()

        lr_values = [params['learning_rate'] * decay for decay in params['lr_decay_factors']]
        learning_rate = tf.train.piecewise_constant(tf.cast(global_step, tf.int32),
                                                    [int(_) for _ in params['decay_boundaries']],
                                                    lr_values)
        truncated_learning_rate = tf.maximum(learning_rate, tf.constant(params['end_learning_rate'], dtype=learning_rate.dtype), name='learning_rate')
        # Create a tensor named learning_rate for logging purposes.
        tf.summary.scalar('learning_rate', truncated_learning_rate)

        optimizer = tf.train.MomentumOptimizer(learning_rate=truncated_learning_rate,
                                               momentum=params['momentum'])
        optimizer = tf.contrib.estimator.TowerOptimizer(optimizer)

        # Batch norm requires update_ops to be added as a train_op dependency.
        update_ops = tf.get_collection(tf.GraphKeys.UPDATE_OPS)
        with tf.control_dependencies(update_ops):
            train_op = optimizer.minimize(total_loss, global_step)
    else:
        train_op = None

This sets up the basic training parameters: the piecewise-constant learning rate schedule with a lower bound, and the momentum optimizer. Note that only this block defines the op that actually performs training (train_op), much like what you would otherwise run yourself in a session.
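The learning rate schedule is easy to check outside TensorFlow. The sketch below reproduces the piecewise-constant lookup and the lower bound in plain Python; the function name and all numeric values are hypothetical and are not the settings used by this project.

```python
from bisect import bisect_left


def learning_rate_at(step, base_lr, decay_factors, boundaries, end_lr):
    """Piecewise-constant learning rate, clipped from below by end_lr."""
    values = [base_lr * factor for factor in decay_factors]
    # values[i] applies while step <= boundaries[i]; the last value applies afterwards.
    lr = values[bisect_left(boundaries, step)]
    return max(lr, end_lr)


# Hypothetical settings, chosen only to show each branch.
base_lr = 0.001
decay_factors = [1.0, 0.1, 0.01]
boundaries = [100, 200]
end_lr = 0.00005

schedule = [learning_rate_at(step, base_lr, decay_factors, boundaries, end_lr)
            for step in (50, 100, 150, 250)]
print(schedule)
```

In this example the last factor would give 0.00001, which is below end_lr, so the floor takes over; that is the role tf.maximum plays in truncated_learning_rate.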

    return tf.estimator.EstimatorSpec(
        mode=mode,
        predictions=predictions,
        loss=total_loss,
        train_op=train_op,
        eval_metric_ops=metrics,
        scaffold=tf.train.Scaffold(init_fn=get_init_fn()))

The final return value.

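Closing

That is all the analysis I wanted to write. It does not cover every line of code, but it touches most of the important parts. To sum up: data preprocessing + anchor construction + model + loss. I hope it helps, and I offer my sincere respect to everyone who came before.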
