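Preface

This afternoon, let's learn about something fun together: generative adversarial networks (GANs).

Introduction to GANs

Generative Adversarial Networks (GANs) were first proposed by Ian Goodfellow in 2014 and are regarded as one of the most promising research directions in deep learning. The core idea is to train two deep neural networks at the same time, one called the generator and the other the discriminator, which both cooperate and compete with each other, in order to tackle unsupervised learning problems.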

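A common way to explain how a GAN works is this: think of the police as the discriminator and a counterfeiter as the generator. First, the counterfeiter shows the police a fake banknote. The police identify it as fake and tell the counterfeiter which parts gave it away. Using that feedback, the counterfeiter improves the technique and produces a more convincing note for the police to inspect. The police give feedback again, and the counterfeiter improves again. This repeats until the police can no longer tell real notes from fake ones, at which point the model has been trained successfully.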
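For reference, the police-versus-counterfeiter game corresponds to the minimax objective of the original 2014 GAN paper. The notation here is the standard one from that paper, not something defined elsewhere in this post: D(x) is the discriminator's probability that x is real, G(z) is the image generated from noise z, p_data is the distribution of real images, and p_z is the noise distribution (uniform on [-1, 1] in this post).

```latex
\min_G \max_D V(D, G)
  = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
```

At the theoretical optimum the generator's distribution matches p_data and D(x) = 1/2 everywhere, which is the formal version of "the police can no longer tell real from fake". The code later in this post trains both networks with binary cross-entropy: the discriminator sees real images labeled 1 and fakes labeled 0, while the generator is trained through the combined model with its fakes labeled 1. That amounts to maximizing log D(G(z)) rather than minimizing log(1 - D(G(z))), the "non-saturating" variant that the original paper also recommends in practice.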

There are many GAN variants. Here we take the Deep Convolutional GAN (DCGAN) as an example and use it to automatically generate MNIST handwritten digits.

Discriminator

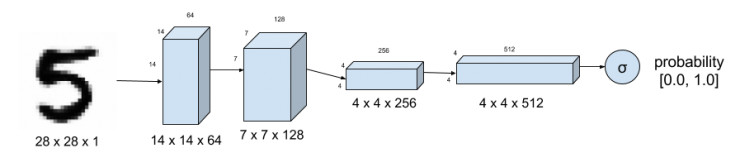

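The discriminator's job is to judge how realistic a generated image is compared with real images. Its basic structure is the convolutional neural network (CNN) shown in the figure above. For the MNIST dataset, the input is a 28x28-pixel single-channel image. The final Sigmoid outputs a value between 0 and 1, interpreted as the probability that the image is real: 0 means definitely fake and 1 means definitely real. Compared with a typical CNN, the max-pooling between layers is removed; the feature maps are downsampled by stride-2 convolutions instead. Every convolutional layer uses LeakyReLU as its activation, and to prevent overfitting, dropout with a rate between 0.4 and 0.7 is applied between layers (the code below uses 0.4).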
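With padding='same', a convolution's spatial output size is ceil(input / stride) and does not depend on the kernel size. The sketch below uses a hypothetical helper, `same_conv_out` (not a Keras function), to reproduce the discriminator's shapes from the code comments, 28 → 14 → 7 → 4 → 4, and the 8192 units that reach the Flatten layer.

```python
import math

def same_conv_out(size: int, stride: int) -> int:
    """Spatial output size of a convolution with padding='same'.

    With 'same' padding only the stride matters: ceil(size / stride).
    The kernel size (5 in this post) does not change the result.
    """
    return math.ceil(size / stride)

# Strides of the four discriminator convolutions in this post: 2, 2, 2, 1
strides = [2, 2, 2, 1]
sizes = [28]
for s in strides:
    sizes.append(same_conv_out(sizes[-1], s))

print(sizes)                          # [28, 14, 7, 4, 4]
# Last conv layer has 64 * 8 = 512 filters, so Flatten sees 4 * 4 * 512 values
print(sizes[-1] * sizes[-1] * 512)    # 8192
```

Note that 7 / 2 rounds up to 4, which is why the third layer's comment says 4x4 rather than 3x3.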

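The LeakyReLU activation function is f(x) = alpha * x for x < 0 and f(x) = x for x >= 0, where alpha is a small nonzero number (0.2 in the code below). In what I had studied before, LeakyReLU was usually used together with BN, which I understood as a way to prevent overfitting. Here the two are separated (the discriminator uses dropout and no BN), and I don't fully understand why.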
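The piecewise definition translates directly into NumPy. This is a minimal illustrative sketch (the function name `leaky_relu` is our own, not a Keras API), using alpha = 0.2 as in the discriminator code:

```python
import numpy as np

def leaky_relu(x: np.ndarray, alpha: float = 0.2) -> np.ndarray:
    """LeakyReLU: f(x) = alpha * x for x < 0, f(x) = x for x >= 0."""
    return np.where(x < 0, alpha * x, x)

x = np.array([-2.0, -0.5, 0.0, 1.5])
print(leaky_relu(x).tolist())  # [-0.4, -0.1, 0.0, 1.5]
```

Unlike plain ReLU, negative inputs still produce a small nonzero output (and gradient), so units in the discriminator do not go permanently silent.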

Generator

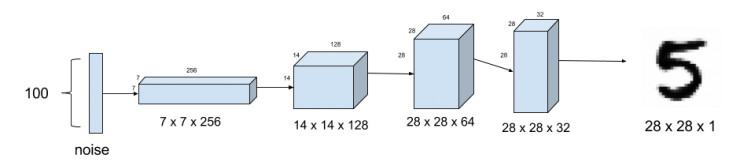

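The generator's job is to synthesize fake images; its basic structure is shown in the figure above. It uses transposed convolution (sometimes loosely called "deconvolution", although it is not a true inverse of convolution) to produce fake images from 100-dimensional noise drawn uniformly from [-1, 1]. Upsampling is applied between the first three layers to synthesize more realistic handwriting. Batch normalization is used between layers to stabilize training, and ReLU is the activation after each layer. The final Sigmoid layer outputs the fake image. The first layer uses a dropout rate between 0.3 and 0.5 to prevent overfitting (the code below uses 0.4).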
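In the code below, Keras' `UpSampling2D()` with its default size=(2, 2) does the enlarging, and the transposed convolutions that follow use stride 1 with padding='same', so they only change the number of channels. A rough NumPy sketch of that upsampling step, assuming the default nearest-neighbour interpolation and channels-last (NHWC) layout (the helper `upsample_nearest_2x` is hypothetical):

```python
import numpy as np

def upsample_nearest_2x(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling of an NHWC batch.

    Every pixel is copied into a 2x2 block: height and width double,
    the channel count stays the same.
    """
    return np.repeat(np.repeat(x, 2, axis=1), 2, axis=2)

# One 2x2 single-channel image, shape (batch, height, width, channels)
x = np.array([[1.0, 2.0],
              [3.0, 4.0]]).reshape(1, 2, 2, 1)
y = upsample_nearest_2x(x)
print(y.shape)            # (1, 4, 4, 1)
print(y[0, :, :, 0])      # each value now fills a 2x2 block

# The generator's two upsampling steps: 7x7 -> 14x14 -> 28x28
feat = np.zeros((1, 7, 7, 256))
print(upsample_nearest_2x(feat).shape)                       # (1, 14, 14, 256)
print(upsample_nearest_2x(np.zeros((1, 14, 14, 128))).shape)  # (1, 28, 28, 128)
```

Upsampling itself has no weights; the learning happens in the transposed convolutions that follow each upsampling step.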

Batch normalization: this is the BN mentioned above.
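As a sketch of what a BatchNormalization layer computes during training (a simplified NumPy version for 2-D inputs, not Keras' actual implementation): each feature is normalized with the current batch's mean and variance, then scaled by a learned gamma and shifted by a learned beta. The layer also keeps moving averages of the mean and variance for inference; `momentum=0.9` in this post's code controls how fast those averages move. The epsilon of 1e-3 matches Keras' default; the helper names are hypothetical.

```python
import numpy as np

def batch_norm_train(x, gamma, beta, eps=1e-3):
    """Training-mode batch norm for a (batch, features) array.

    Returns the normalized output plus the batch statistics that a real
    layer would fold into its moving averages.
    """
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    x_hat = (x - mean) / np.sqrt(var + eps)
    return gamma * x_hat + beta, mean, var

def update_moving(moving, batch_stat, momentum=0.9):
    """Exponential moving average used at inference time."""
    return momentum * moving + (1.0 - momentum) * batch_stat

rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(256, 4))   # batch of 256, 4 features
gamma, beta = np.ones(4), np.zeros(4)
y, mean, var = batch_norm_train(x, gamma, beta)
print(y.mean(axis=0).round(6))   # close to 0 for every feature
print(y.std(axis=0).round(3))    # close to 1 for every feature

moving_mean = update_moving(np.zeros(4), mean)      # first update from zero
```

Per feature, the layer therefore stores four numbers: gamma and beta (trainable) and the moving mean and variance (non-trainable). That is why, in the generator summary printed later, each BatchNormalization layer has 4x as many parameters as its input features (for example 4 * 12544 = 50176), with half of them counted as non-trainable.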

GAN applications

1. Image generation: http://make.girls.moe/

2. Vector-space arithmetic

3. Text-to-image

4. Super-resolution

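Main code

I'll put the complete code in the downloads section. It seems I can't set the price to 0 points, so I had to set it to 1 point; sorry about that. Below is the main code, which is essentially complete; only two result-display parts are missing.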

import numpy as np
from keras.datasets import mnist
from keras.models import Sequential
from keras.layers import Dense, Activation, Flatten, Reshape
from keras.layers import Conv2D, Conv2DTranspose, UpSampling2D
from keras.layers import LeakyReLU, Dropout
from keras.layers import BatchNormalization
from keras.optimizers import RMSprop
import matplotlib.pyplot as plt

class DCGAN(object):
    def __init__(self, img_rows=28, img_cols=28, channel=1):
        # Image height, width and number of channels
        self.img_rows = img_rows
        self.img_cols = img_cols
        self.channel = channel
        self.D = None   # discriminator
        self.G = None   # generator
        self.AM = None  # adversarial model (generator followed by discriminator)
        self.DM = None  # discriminator model (compiled discriminator)

    # Discriminator network
    def discriminator(self):
        if self.D is not None:
            return self.D
        self.D = Sequential()
        # Base number of filters: 64
        depth = 64
        # Dropout rate
        dropout = 0.4
        # Input: 28x28x1
        input_shape = (self.img_rows, self.img_cols, self.channel)
        # Output: 14x14x64 (stride-2 convolution instead of max-pooling)
        self.D.add(Conv2D(depth*1, 5, strides=2, input_shape=input_shape, padding='same'))
        self.D.add(LeakyReLU(alpha=0.2))
        self.D.add(Dropout(dropout))
        # Output: 7x7x128
        self.D.add(Conv2D(depth*2, 5, strides=2, padding='same'))
        self.D.add(LeakyReLU(alpha=0.2))
        self.D.add(Dropout(dropout))
        # Output: 4x4x256
        self.D.add(Conv2D(depth*4, 5, strides=2, padding='same'))
        self.D.add(LeakyReLU(alpha=0.2))
        self.D.add(Dropout(dropout))
        # Output: 4x4x512 (stride 1, no further downsampling)
        self.D.add(Conv2D(depth*8, 5, strides=1, padding='same'))
        self.D.add(LeakyReLU(alpha=0.2))
        self.D.add(Dropout(dropout))
        # Fully connected output: one probability that the input is real
        self.D.add(Flatten())
        self.D.add(Dense(1))
        self.D.add(Activation('sigmoid'))
        self.D.summary()
        return self.D

    # Generator network
    def generator(self):
        if self.G is not None:
            return self.G
        self.G = Sequential()
        # Dropout rate
        dropout = 0.4
        # Number of channels: 256
        depth = 64*4
        # Initial spatial size: 7x7
        dim = 7
        # Fully connected layer: 100-dim noise in, 7*7*256 units out
        self.G.add(Dense(dim*dim*depth, input_dim=100))
        self.G.add(BatchNormalization(momentum=0.9))
        self.G.add(Activation('relu'))
        # Reshape the 1-D vector into a 3-D tensor (7, 7, 256)
        self.G.add(Reshape((dim, dim, depth)))
        self.G.add(Dropout(dropout))
        # UpSampling2D works roughly the opposite way to MaxPooling2D: with the
        # default size=(2, 2) it stretches height and width by a factor of 2,
        # enlarging the whole feature map
        # Upsample to (14, 14, 256)
        self.G.add(UpSampling2D())
        # Transposed convolution -> (14, 14, 128)
        self.G.add(Conv2DTranspose(int(depth/2), 5, padding='same'))
        self.G.add(BatchNormalization(momentum=0.9))
        self.G.add(Activation('relu'))
        # Upsample to (28, 28, 128)
        self.G.add(UpSampling2D())
        # Transposed convolution -> (28, 28, 64)
        self.G.add(Conv2DTranspose(int(depth/4), 5, padding='same'))
        self.G.add(BatchNormalization(momentum=0.9))
        self.G.add(Activation('relu'))
        # Transposed convolution -> (28, 28, 32)
        self.G.add(Conv2DTranspose(int(depth/8), 5, padding='same'))
        self.G.add(BatchNormalization(momentum=0.9))
        self.G.add(Activation('relu'))
        # Transposed convolution -> (28, 28, 1); sigmoid keeps pixels in [0, 1]
        self.G.add(Conv2DTranspose(1, 5, padding='same'))
        self.G.add(Activation('sigmoid'))
        self.G.summary()
        return self.G

    # Compiled discriminator model
    def discriminator_model(self):
        if self.DM is not None:
            return self.DM
        # Optimizer. The original passed lr=0.0002, decay=6e-8; Keras 2.3+ names
        # the argument learning_rate, and newer Keras versions no longer support
        # decay (its effect here is negligible). On Keras < 2.3 use lr= instead.
        optimizer = RMSprop(learning_rate=0.0002)
        # Build the model
        self.DM = Sequential()
        self.DM.add(self.discriminator())
        self.DM.compile(loss='binary_crossentropy', optimizer=optimizer, metrics=['accuracy'])
        return self.DM

    # Adversarial model: generator followed by discriminator
    def adversarial_model(self):
        if self.AM is not None:
            return self.AM
        # Optimizer (original: lr=0.0001, decay=3e-8; see note above)
        optimizer = RMSprop(learning_rate=0.0001)
        # Build the model
        self.AM = Sequential()
        # Generator
        self.AM.add(self.generator())
        # Discriminator: the same instance as in self.DM, so the weights are shared.
        # It is not frozen here, so training AM also updates the discriminator;
        # many Keras GAN examples set its trainable flag to False before this compile.
        self.AM.add(self.discriminator())
        self.AM.compile(loss='binary_crossentropy', optimizer=optimizer, metrics=['accuracy'])
        return self.AM

class MNIST_DCGAN(object):
    def __init__(self):
        # Image height
        self.img_rows = 28
        # Image width
        self.img_cols = 28
        # Number of channels
        self.channel = 1
        # Load the data
        (x_train, y_train), (x_test, y_test) = mnist.load_data()
        # Scale pixels to [0, 1]; shape (60000, 28, 28)
        self.x_train = x_train / 255.0
        # Reshape to (samples, rows, cols, channel) = (60000, 28, 28, 1)
        self.x_train = self.x_train.reshape(-1, self.img_rows, self.img_cols, 1).astype(np.float32)
        # Instantiate the DCGAN class
        self.DCGAN = DCGAN()
        # Discriminator model
        self.discriminator = self.DCGAN.discriminator_model()
        # Adversarial model
        self.adversarial = self.DCGAN.adversarial_model()
        # Generator
        self.generator = self.DCGAN.generator()

    # Train the models
    def train(self, train_steps=2000, batch_size=256, save_interval=0):
        noise_input = None
        if save_interval > 0:
            # 16 fixed 100-dim noise vectors, reused for every saved snapshot
            noise_input = np.random.uniform(-1.0, 1.0, size=[16, 100])
        for i in range(train_steps):
            # Train the discriminator to improve its ability to spot fakes
            # Sample a random batch of real images
            images_train = self.x_train[np.random.randint(0, self.x_train.shape[0], size=batch_size), :, :, :]
            # Sample a batch of noise
            noise = np.random.uniform(-1.0, 1.0, size=[batch_size, 100])
            # Generate a batch of fake images
            images_fake = self.generator.predict(noise, verbose=0)
            # Concatenate one batch of real images and one batch of fake images
            x = np.concatenate((images_train, images_fake))
            # Labels: 1 for real images, 0 for fake images
            y = np.ones([2*batch_size, 1])
            y[batch_size:, :] = 0
            # Train the discriminator on the mixed batch
            d_loss = self.discriminator.train_on_batch(x, y)

            # Train the adversarial model to improve the generator's forgeries
            # All labels are 1: the generator wants its fakes classified as real
            y = np.ones([batch_size, 1])
            # Sample a fresh batch of noise
            noise = np.random.uniform(-1.0, 1.0, size=[batch_size, 100])
            # Train the adversarial model
            a_loss = self.adversarial.train_on_batch(noise, y)

            # Print the discriminator's loss and accuracy, and the adversarial model's loss and accuracy
            log_mesg = "%d: [D loss: %f, acc: %f]" % (i, d_loss[0], d_loss[1])
            log_mesg = "%s [A loss: %f, acc: %f]" % (log_mesg, a_loss[0], a_loss[1])
            print(log_mesg)
            # Save sample images if requested
            if save_interval > 0:
                # Once every save_interval steps
                if (i+1) % save_interval == 0:
                    self.plot_images(save2file=True, samples=noise_input.shape[0], noise=noise_input, step=(i+1))

    # Plot (and optionally save) a 4x4 grid of images
    def plot_images(self, save2file=False, fake=True, samples=16, noise=None, step=0):
        filename = 'mnist.png'
        if fake:
            if noise is None:
                noise = np.random.uniform(-1.0, 1.0, size=[samples, 100])
            else:
                filename = "mnist_%d.png" % step
            # Generate fake images
            images = self.generator.predict(noise, verbose=0)
        else:
            # Sample real images
            i = np.random.randint(0, self.x_train.shape[0], samples)
            images = self.x_train[i, :, :, :]
        # Figure size
        plt.figure(figsize=(10, 10))
        # Draw the images on a 4x4 grid (assumes samples <= 16)
        for i in range(images.shape[0]):
            plt.subplot(4, 4, i+1)
            # Take one image
            image = images[i, :, :, :]
            # Drop the channel axis to get a 2-D image
            image = np.reshape(image, [self.img_rows, self.img_cols])
            # Show it in grayscale
            plt.imshow(image, cmap='gray')
            # Hide the axes
            plt.axis('off')
        # Save the figure to a file
        if save2file:
            plt.savefig(filename)
            plt.close('all')
        # Otherwise display it
        else:
            plt.show()

# Instantiate the network class
mnist_dcgan = MNIST_DCGAN()
# Train the model
mnist_dcgan.train(train_steps=10000, batch_size=256, save_interval=500)

Results analysis

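To be honest, as of writing this post I haven't finished running the code myself. My computer is too weak, so I can only analyze results that others obtained. First, the training process: each step involves two separate trainings, one for the discriminator and one for the adversarial model. In other words, we first make our "police" stronger and then train the "thief" (the counterfeiter; from here on I'll call it the thief because it's more vivid). This process repeats, and the end goal is to turn the thief into a "master thief".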
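Both losses in the log are binary cross-entropy, so a few reference values help with reading them. A discriminator that outputs 0.5 for every image (the "police can't tell" state) has a loss of ln 2 ≈ 0.693; D loss near 0.69 with D acc near 0.5 therefore means the discriminator is close to guessing, and lower D loss means it is winning. The A loss is the same quantity computed on fakes labeled 1, so it rises when the discriminator confidently rejects the fakes, and A acc of 0 means every fake in that batch was caught. The helper `bce` below is a hypothetical single-sample version for illustration; Keras reports the mean over the batch.

```python
import math

def bce(p_pred: float, label: int) -> float:
    """Binary cross-entropy for one prediction p_pred = D(x) and a 0/1 label."""
    return -(label * math.log(p_pred) + (1 - label) * math.log(1.0 - p_pred))

# Discriminator outputs 0.5 no matter what: it cannot tell real from fake
print(round(bce(0.5, 1), 4))  # 0.6931 (= ln 2)
print(round(bce(0.5, 0), 4))  # 0.6931
# Confident and correct on a real image: small loss
print(round(bce(0.9, 1), 4))  # 0.1054
```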

Here is part of the printed output:

Successfully downloaded train-images-idx3-ubyte.gz 9912422 bytes.

Extracting mnist\train-images-idx3-ubyte.gz

Successfully downloaded train-labels-idx1-ubyte.gz 28881 bytes.

Extracting mnist\train-labels-idx1-ubyte.gz

Successfully downloaded t10k-images-idx3-ubyte.gz 1648877 bytes.

Extracting mnist\t10k-images-idx3-ubyte.gz

Successfully downloaded t10k-labels-idx1-ubyte.gz 4542 bytes.

Extracting mnist\t10k-labels-idx1-ubyte.gz

_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_1 (Conv2D)            (None, 14, 14, 64)        1664
_________________________________________________________________
leaky_re_lu_1 (LeakyReLU)    (None, 14, 14, 64)        0
_________________________________________________________________
dropout_1 (Dropout)          (None, 14, 14, 64)        0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 7, 7, 128)         204928
_________________________________________________________________
leaky_re_lu_2 (LeakyReLU)    (None, 7, 7, 128)         0
_________________________________________________________________
dropout_2 (Dropout)          (None, 7, 7, 128)         0
_________________________________________________________________
conv2d_3 (Conv2D)            (None, 4, 4, 256)         819456
_________________________________________________________________
leaky_re_lu_3 (LeakyReLU)    (None, 4, 4, 256)         0
_________________________________________________________________
dropout_3 (Dropout)          (None, 4, 4, 256)         0
_________________________________________________________________
conv2d_4 (Conv2D)            (None, 4, 4, 512)         3277312
_________________________________________________________________
leaky_re_lu_4 (LeakyReLU)    (None, 4, 4, 512)         0
_________________________________________________________________
dropout_4 (Dropout)          (None, 4, 4, 512)         0
_________________________________________________________________
flatten_1 (Flatten)          (None, 8192)              0
_________________________________________________________________
dense_1 (Dense)              (None, 1)                 8193
_________________________________________________________________
activation_1 (Activation)    (None, 1)                 0
=================================================================
Total params: 4,311,553
Trainable params: 4,311,553
Non-trainable params: 0
_________________________________________________________________
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
dense_2 (Dense)              (None, 12544)             1266944
_________________________________________________________________
batch_normalization_1 (Batch (None, 12544)             50176
_________________________________________________________________
activation_2 (Activation)    (None, 12544)             0
_________________________________________________________________
reshape_1 (Reshape)          (None, 7, 7, 256)         0
_________________________________________________________________
dropout_5 (Dropout)          (None, 7, 7, 256)         0
_________________________________________________________________
up_sampling2d_1 (UpSampling2 (None, 14, 14, 256)       0
_________________________________________________________________
conv2d_transpose_1 (Conv2DTr (None, 14, 14, 128)       819328
_________________________________________________________________
batch_normalization_2 (Batch (None, 14, 14, 128)       512
_________________________________________________________________
activation_3 (Activation)    (None, 14, 14, 128)       0
_________________________________________________________________
up_sampling2d_2 (UpSampling2 (None, 28, 28, 128)       0
_________________________________________________________________
conv2d_transpose_2 (Conv2DTr (None, 28, 28, 64)        204864
_________________________________________________________________
batch_normalization_3 (Batch (None, 28, 28, 64)        256
_________________________________________________________________
activation_4 (Activation)    (None, 28, 28, 64)        0
_________________________________________________________________
conv2d_transpose_3 (Conv2DTr (None, 28, 28, 32)        51232
_________________________________________________________________
batch_normalization_4 (Batch (None, 28, 28, 32)        128
_________________________________________________________________
activation_5 (Activation)    (None, 28, 28, 32)        0
_________________________________________________________________
conv2d_transpose_4 (Conv2DTr (None, 28, 28, 1)         801
_________________________________________________________________
activation_6 (Activation)    (None, 28, 28, 1)         0
=================================================================
Total params: 2,394,241
Trainable params: 2,368,705
Non-trainable params: 25,536
_________________________________________________________________

0: [D loss: 0.691503, acc: 0.509766] [A loss: 1.231536, acc: 0.000000]

1: [D loss: 0.648653, acc: 0.548828] [A loss: 1.004777, acc: 0.000000]

2: [D loss: 0.596343, acc: 0.515625] [A loss: 1.437418, acc: 0.000000]

3: [D loss: 0.592514, acc: 0.843750] [A loss: 1.165042, acc: 0.000000]

4: [D loss: 0.474580, acc: 0.982422] [A loss: 1.572894, acc: 0.000000]

5: [D loss: 0.524854, acc: 0.693359] [A loss: 2.045968, acc: 0.000000]

6: [D loss: 0.607588, acc: 0.810547] [A loss: 0.921388, acc: 0.015625]

7: [D loss: 0.671562, acc: 0.500000] [A loss: 1.372429, acc: 0.000000]

8: [D loss: 0.520482, acc: 0.906250] [A loss: 1.128514, acc: 0.000000]

9: [D loss: 0.434005, acc: 0.986328] [A loss: 1.172277, acc: 0.000000]

10: [D loss: 0.443416, acc: 0.644531] [A loss: 1.703157, acc: 0.000000]

11: [D loss: 0.382728, acc: 0.996094] [A loss: 1.243012, acc: 0.000000]

12: [D loss: 0.537050, acc: 0.505859] [A loss: 2.008118, acc: 0.000000]

13: [D loss: 0.472494, acc: 0.931641] [A loss: 1.150631, acc: 0.000000]

14: [D loss: 0.390675, acc: 0.759766] [A loss: 1.370212, acc: 0.000000]

15: [D loss: 0.326801, acc: 0.978516] [A loss: 1.478633, acc: 0.000000]

16: [D loss: 0.324425, acc: 0.916016] [A loss: 1.741925, acc: 0.000000]

17: [D loss: 0.298051, acc: 0.990234] [A loss: 1.635591, acc: 0.000000]

18: [D loss: 0.299104, acc: 0.947266] [A loss: 1.917285, acc: 0.000000]

19: [D loss: 0.267050, acc: 0.998047] [A loss: 1.520450, acc: 0.000000]

20: [D loss: 0.366637, acc: 0.742188] [A loss: 2.631558, acc: 0.000000]

21: [D loss: 0.433646, acc: 0.892578] [A loss: 1.052351, acc: 0.027344]

22: [D loss: 0.617413, acc: 0.515625] [A loss: 1.846913, acc: 0.000000]

23: [D loss: 0.278645, acc: 0.990234] [A loss: 1.351792, acc: 0.000000]

24: [D loss: 0.279705, acc: 0.947266] [A loss: 1.632707, acc: 0.000000]

25: [D loss: 0.236295, acc: 0.980469] [A loss: 1.755901, acc: 0.000000]

26: [D loss: 0.250655, acc: 0.949219] [A loss: 1.947745, acc: 0.000000]

27: [D loss: 0.224082, acc: 0.972656] [A loss: 1.925455, acc: 0.000000]

28: [D loss: 0.228702, acc: 0.957031] [A loss: 2.121093, acc: 0.000000]

29: [D loss: 0.183285, acc: 0.990234] [A loss: 1.802444, acc: 0.003906]

30: [D loss: 0.240770, acc: 0.912109] [A loss: 2.647219, acc: 0.000000]

31: [D loss: 0.263249, acc: 0.923828] [A loss: 0.990316, acc: 0.210938]

32: [D loss: 0.990905, acc: 0.507812] [A loss: 2.862743, acc: 0.000000]

33: [D loss: 0.431388, acc: 0.785156] [A loss: 1.073982, acc: 0.078125]

34: [D loss: 0.358037, acc: 0.789062] [A loss: 1.360353, acc: 0.011719]

35: [D loss: 0.240701, acc: 0.937500] [A loss: 1.453409, acc: 0.019531]

36: [D loss: 0.277398, acc: 0.886719] [A loss: 1.705918, acc: 0.000000]

37: [D loss: 0.241611, acc: 0.916016] [A loss: 1.769321, acc: 0.000000]

38: [D loss: 0.253498, acc: 0.910156] [A loss: 1.983329, acc: 0.000000]

39: [D loss: 0.225967, acc: 0.937500] [A loss: 1.657594, acc: 0.007812]

40: [D loss: 0.273817, acc: 0.863281] [A loss: 2.194973, acc: 0.000000]

41: [D loss: 0.221860, acc: 0.937500] [A loss: 1.231366, acc: 0.117188]

42: [D loss: 0.506532, acc: 0.693359] [A loss: 2.995471, acc: 0.000000]

43: [D loss: 0.495057, acc: 0.800781] [A loss: 0.590665, acc: 0.667969]

44: [D loss: 0.826440, acc: 0.544922] [A loss: 1.846615, acc: 0.000000]

45: [D loss: 0.240079, acc: 0.955078] [A loss: 1.020400, acc: 0.167969]

46: [D loss: 0.429716, acc: 0.730469] [A loss: 1.589278, acc: 0.003906]

47: [D loss: 0.238475, acc: 0.947266] [A loss: 1.356752, acc: 0.031250]

48: [D loss: 0.344801, acc: 0.822266] [A loss: 1.712172, acc: 0.000000]

49: [D loss: 0.259987, acc: 0.925781] [A loss: 1.410086, acc: 0.031250]

50: [D loss: 0.384457, acc: 0.775391] [A loss: 2.109657, acc: 0.000000]

51: [D loss: 0.296866, acc: 0.933594] [A loss: 0.972942, acc: 0.214844]

52: [D loss: 0.579416, acc: 0.615234] [A loss: 2.511665, acc: 0.000000]

53: [D loss: 0.370514, acc: 0.871094] [A loss: 0.617159, acc: 0.640625]

54: [D loss: 0.769498, acc: 0.521484] [A loss: 1.882442, acc: 0.000000]

55: [D loss: 0.259458, acc: 0.962891] [A loss: 1.124723, acc: 0.058594]

56: [D loss: 0.430123, acc: 0.708984] [A loss: 1.732257, acc: 0.000000]

57: [D loss: 0.313927, acc: 0.929688] [A loss: 1.152099, acc: 0.058594]

58: [D loss: 0.470944, acc: 0.654297] [A loss: 1.974094, acc: 0.000000]

59: [D loss: 0.327888, acc: 0.931641] [A loss: 0.991130, acc: 0.140625]

60: [D loss: 0.639045, acc: 0.548828] [A loss: 2.348823, acc: 0.000000]

61: [D loss: 0.384606, acc: 0.886719] [A loss: 0.701185, acc: 0.500000]

62: [D loss: 0.782717, acc: 0.509766] [A loss: 1.906247, acc: 0.000000]

63: [D loss: 0.350297, acc: 0.949219] [A loss: 1.011624, acc: 0.117188]

64: [D loss: 0.546892, acc: 0.558594] [A loss: 1.799624, acc: 0.000000]

65: [D loss: 0.384208, acc: 0.894531] [A loss: 1.125789, acc: 0.023438]

66: [D loss: 0.565075, acc: 0.568359] [A loss: 2.057138, acc: 0.000000]

67: [D loss: 0.409379, acc: 0.916016] [A loss: 0.877637, acc: 0.234375]

68: [D loss: 0.689376, acc: 0.509766] [A loss: 2.064061, acc: 0.000000]

69: [D loss: 0.403014, acc: 0.923828] [A loss: 0.860366, acc: 0.246094]

70: [D loss: 0.700391, acc: 0.505859] [A loss: 2.017132, acc: 0.000000]

71: [D loss: 0.420937, acc: 0.904297] [A loss: 0.904990, acc: 0.167969]

72: [D loss: 0.658957, acc: 0.517578] [A loss: 1.933370, acc: 0.000000]

73: [D loss: 0.439174, acc: 0.884766] [A loss: 0.918563, acc: 0.199219]

74: [D loss: 0.633506, acc: 0.525391] [A loss: 2.004735, acc: 0.000000]

75: [D loss: 0.437182, acc: 0.896484] [A loss: 0.876891, acc: 0.238281]

76: [D loss: 0.677424, acc: 0.503906] [A loss: 2.020369, acc: 0.000000]

77: [D loss: 0.430446, acc: 0.906250] [A loss: 0.815220, acc: 0.281250]

78: [D loss: 0.690905, acc: 0.503906] [A loss: 2.050816, acc: 0.000000]

79: [D loss: 0.445981, acc: 0.908203] [A loss: 0.799167, acc: 0.296875]

80: [D loss: 0.684389, acc: 0.509766] [A loss: 1.916862, acc: 0.000000]

81: [D loss: 0.447047, acc: 0.902344] [A loss: 0.813182, acc: 0.312500]

82: [D loss: 0.681568, acc: 0.507812] [A loss: 1.950821, acc: 0.000000]

83: [D loss: 0.440485, acc: 0.912109] [A loss: 0.906062, acc: 0.175781]

84: [D loss: 0.621085, acc: 0.519531] [A loss: 1.890153, acc: 0.000000]

85: [D loss: 0.440503, acc: 0.888672] [A loss: 0.945012, acc: 0.167969]

86: [D loss: 0.620250, acc: 0.525391] [A loss: 2.101747, acc: 0.000000]

87: [D loss: 0.457391, acc: 0.902344] [A loss: 0.709829, acc: 0.480469]

88: [D loss: 0.733050, acc: 0.503906] [A loss: 2.112121, acc: 0.000000]

89: [D loss: 0.458123, acc: 0.888672] [A loss: 0.705255, acc: 0.464844]

90: [D loss: 0.700676, acc: 0.507812] [A loss: 1.904836, acc: 0.000000]

91: [D loss: 0.452364, acc: 0.906250] [A loss: 0.805733, acc: 0.316406]

92: [D loss: 0.647448, acc: 0.507812] [A loss: 1.923860, acc: 0.000000]

93: [D loss: 0.451022, acc: 0.916016] [A loss: 0.832103, acc: 0.253906]

94: [D loss: 0.658411, acc: 0.515625] [A loss: 2.052168, acc: 0.000000]

95: [D loss: 0.438787, acc: 0.929688] [A loss: 0.815914, acc: 0.308594]

96: [D loss: 0.659617, acc: 0.507812] [A loss: 2.098220, acc: 0.000000]

97: [D loss: 0.448281, acc: 0.902344] [A loss: 0.803287, acc: 0.320312]

98: [D loss: 0.671589, acc: 0.509766] [A loss: 2.052079, acc: 0.000000]

99: [D loss: 0.449434, acc: 0.898438] [A loss: 0.775447, acc: 0.367188]

100: [D loss: 0.694258, acc: 0.507812] [A loss: 2.082814, acc: 0.000000]

101: [D loss: 0.472326, acc: 0.886719] [A loss: 0.704720, acc: 0.503906]

102: [D loss: 0.714512, acc: 0.505859] [A loss: 1.996335, acc: 0.000000]

103: [D loss: 0.465975, acc: 0.882812] [A loss: 0.700260, acc: 0.519531]

104: [D loss: 0.719334, acc: 0.500000] [A loss: 1.992613, acc: 0.000000]

105: [D loss: 0.458248, acc: 0.902344] [A loss: 0.829929, acc: 0.277344]

106: [D loss: 0.702113, acc: 0.507812] [A loss: 2.078796, acc: 0.000000]

107: [D loss: 0.478760, acc: 0.888672] [A loss: 0.795521, acc: 0.320312]

108: [D loss: 0.707462, acc: 0.501953] [A loss: 2.077059, acc: 0.000000]

109: [D loss: 0.508267, acc: 0.833984] [A loss: 0.736822, acc: 0.480469]

110: [D loss: 0.726499, acc: 0.501953] [A loss: 2.034654, acc: 0.000000]

111: [D loss: 0.486403, acc: 0.876953] [A loss: 0.773986, acc: 0.375000]

112: [D loss: 0.720257, acc: 0.500000] [A loss: 2.039338, acc: 0.000000]

113: [D loss: 0.508873, acc: 0.833984] [A loss: 0.771436, acc: 0.398438]

114: [D loss: 0.699827, acc: 0.505859] [A loss: 1.971889, acc: 0.000000]

115: [D loss: 0.535446, acc: 0.796875] [A loss: 0.780918, acc: 0.335938]

116: [D loss: 0.735963, acc: 0.501953] [A loss: 2.047856, acc: 0.000000]

117: [D loss: 0.532679, acc: 0.806641] [A loss: 0.758681, acc: 0.402344]

118: [D loss: 0.748309, acc: 0.507812] [A loss: 2.047386, acc: 0.000000]

119: [D loss: 0.545521, acc: 0.783203] [A loss: 0.733404, acc: 0.429688]

120: [D loss: 0.743108, acc: 0.501953] [A loss: 1.937115, acc: 0.000000]

121: [D loss: 0.565786, acc: 0.765625] [A loss: 0.775433, acc: 0.390625]

122: [D loss: 0.754094, acc: 0.503906] [A loss: 2.029332, acc: 0.000000]

123: [D loss: 0.568998, acc: 0.763672] [A loss: 0.752774, acc: 0.398438]

124: [D loss: 0.768901, acc: 0.505859] [A loss: 2.115191, acc: 0.000000]

125: [D loss: 0.575241, acc: 0.751953] [A loss: 0.712867, acc: 0.468750]

126: [D loss: 0.764882, acc: 0.503906] [A loss: 1.926544, acc: 0.000000]

127: [D loss: 0.564186, acc: 0.775391] [A loss: 0.796656, acc: 0.316406]

128: [D loss: 0.731247, acc: 0.501953] [A loss: 1.915877, acc: 0.000000]

129: [D loss: 0.559361, acc: 0.785156] [A loss: 0.896263, acc: 0.175781]

130: [D loss: 0.718427, acc: 0.517578] [A loss: 2.125160, acc: 0.000000]

131: [D loss: 0.555089, acc: 0.763672] [A loss: 0.735170, acc: 0.449219]

132: [D loss: 0.776140, acc: 0.498047] [A loss: 2.221849, acc: 0.000000]

133: [D loss: 0.593860, acc: 0.707031] [A loss: 0.613928, acc: 0.679688]

134: [D loss: 0.810136, acc: 0.496094] [A loss: 1.733192, acc: 0.000000]

135: [D loss: 0.573212, acc: 0.767578] [A loss: 0.887116, acc: 0.167969]

136: [D loss: 0.696365, acc: 0.503906] [A loss: 1.792508, acc: 0.000000]

137: [D loss: 0.560364, acc: 0.789062] [A loss: 1.007125, acc: 0.093750]

138: [D loss: 0.677562, acc: 0.525391] [A loss: 2.061942, acc: 0.000000]

139: [D loss: 0.566491, acc: 0.773438] [A loss: 0.753326, acc: 0.425781]

140: [D loss: 0.774658, acc: 0.503906] [A loss: 2.188344, acc: 0.000000]

141: [D loss: 0.617926, acc: 0.673828] [A loss: 0.557792, acc: 0.816406]

142: [D loss: 0.837046, acc: 0.494141] [A loss: 1.679428, acc: 0.000000]

143: [D loss: 0.578102, acc: 0.775391] [A loss: 0.876964, acc: 0.187500]

144: [D loss: 0.666602, acc: 0.517578] [A loss: 1.605749, acc: 0.000000]

145: [D loss: 0.593821, acc: 0.705078] [A loss: 0.979484, acc: 0.074219]

146: [D loss: 0.655855, acc: 0.527344] [A loss: 1.861180, acc: 0.000000]

147: [D loss: 0.586890, acc: 0.761719] [A loss: 0.713094, acc: 0.457031]

148: [D loss: 0.747398, acc: 0.509766] [A loss: 2.095814, acc: 0.000000]

149: [D loss: 0.611762, acc: 0.689453] [A loss: 0.568410, acc: 0.789062]

150: [D loss: 0.801940, acc: 0.500000] [A loss: 1.570221, acc: 0.000000]

151: [D loss: 0.591073, acc: 0.750000] [A loss: 0.849759, acc: 0.222656]

152: [D loss: 0.656044, acc: 0.517578] [A loss: 1.549002, acc: 0.000000]

153: [D loss: 0.576636, acc: 0.728516] [A loss: 0.988255, acc: 0.113281]

154: [D loss: 0.640300, acc: 0.537109] [A loss: 1.627752, acc: 0.000000]

155: [D loss: 0.557738, acc: 0.806641] [A loss: 0.777479, acc: 0.328125]

156: [D loss: 0.705224, acc: 0.500000] [A loss: 1.894259, acc: 0.000000]

157: [D loss: 0.585216, acc: 0.716797] [A loss: 0.593588, acc: 0.726562]

158: [D loss: 0.767763, acc: 0.496094] [A loss: 1.704471, acc: 0.000000]

159: [D loss: 0.589467, acc: 0.722656] [A loss: 0.697022, acc: 0.464844]

160: [D loss: 0.700470, acc: 0.500000] [A loss: 1.498897, acc: 0.000000]

161: [D loss: 0.562630, acc: 0.796875] [A loss: 0.852995, acc: 0.207031]

162: [D loss: 0.640407, acc: 0.515625] [A loss: 1.593685, acc: 0.000000]

163: [D loss: 0.566859, acc: 0.751953] [A loss: 0.791467, acc: 0.324219]

164: [D loss: 0.671670, acc: 0.513672] [A loss: 1.831024, acc: 0.000000]

165: [D loss: 0.575037, acc: 0.750000] [A loss: 0.632219, acc: 0.687500]

166: [D loss: 0.737036, acc: 0.500000] [A loss: 1.722258, acc: 0.000000]

167: [D loss: 0.563757, acc: 0.777344] [A loss: 0.713480, acc: 0.460938]

168: [D loss: 0.675642, acc: 0.505859] [A loss: 1.523493, acc: 0.000000]

169: [D loss: 0.560679, acc: 0.787109] [A loss: 0.730296, acc: 0.421875]

170: [D loss: 0.653577, acc: 0.523438] [A loss: 1.702063, acc: 0.000000]

171: [D loss: 0.561906, acc: 0.798828] [A loss: 0.664845, acc: 0.578125]

172: [D loss: 0.698300, acc: 0.509766] [A loss: 1.723585, acc: 0.000000]

173: [D loss: 0.541326, acc: 0.826172] [A loss: 0.709710, acc: 0.476562]

174: [D loss: 0.694211, acc: 0.505859] [A loss: 1.701803, acc: 0.000000]

175: [D loss: 0.548754, acc: 0.806641] [A loss: 0.673307, acc: 0.574219]

176: [D loss: 0.678137, acc: 0.517578] [A loss: 1.591114, acc: 0.000000]

177: [D loss: 0.538395, acc: 0.826172] [A loss: 0.824897, acc: 0.250000]

178: [D loss: 0.676258, acc: 0.519531] [A loss: 1.867238, acc: 0.000000]

179: [D loss: 0.567595, acc: 0.751953] [A loss: 0.609377, acc: 0.710938]

180: [D loss: 0.723833, acc: 0.503906] [A loss: 1.589976, acc: 0.000000]

181: [D loss: 0.570847, acc: 0.791016] [A loss: 0.784557, acc: 0.312500]

182: [D loss: 0.674772, acc: 0.521484] [A loss: 1.637760, acc: 0.000000]

183: [D loss: 0.545820, acc: 0.833984] [A loss: 0.815327, acc: 0.253906]

184: [D loss: 0.650002, acc: 0.527344] [A loss: 1.694071, acc: 0.000000]

185: [D loss: 0.559047, acc: 0.783203] [A loss: 0.684570, acc: 0.550781]

186: [D loss: 0.713607, acc: 0.503906] [A loss: 1.838257, acc: 0.000000]

187: [D loss: 0.567683, acc: 0.730469] [A loss: 0.626130, acc: 0.675781]

188: [D loss: 0.722959, acc: 0.503906] [A loss: 1.648201, acc: 0.000000]

189: [D loss: 0.575623, acc: 0.777344] [A loss: 0.710549, acc: 0.503906]

190: [D loss: 0.679402, acc: 0.527344] [A loss: 1.537004, acc: 0.000000]

191: [D loss: 0.570763, acc: 0.783203] [A loss: 0.822068, acc: 0.277344]

192: [D loss: 0.694234, acc: 0.525391] [A loss: 1.666622, acc: 0.000000]

193: [D loss: 0.572525, acc: 0.763672] [A loss: 0.705894, acc: 0.503906]

194: [D loss: 0.693591, acc: 0.523438] [A loss: 1.578833, acc: 0.000000]

195: [D loss: 0.572520, acc: 0.771484] [A loss: 0.752325, acc: 0.421875]

196: [D loss: 0.707219, acc: 0.517578] [A loss: 1.623304, acc: 0.000000]

197: [D loss: 0.571517, acc: 0.771484] [A loss: 0.724463, acc: 0.480469]

198: [D loss: 0.721095, acc: 0.511719] [A loss: 1.644762, acc: 0.000000]

199: [D loss: 0.597435, acc: 0.677734] [A loss: 0.671912, acc: 0.585938]

200: [D loss: 0.712119, acc: 0.517578] [A loss: 1.471917, acc: 0.000000]

201: [D loss: 0.583933, acc: 0.757812] [A loss: 0.801758, acc: 0.289062]

202: [D loss: 0.678271, acc: 0.531250] [A loss: 1.509630, acc: 0.000000]

203: [D loss: 0.585138, acc: 0.750000] [A loss: 0.781951, acc: 0.312500]

204: [D loss: 0.683118, acc: 0.519531] [A loss: 1.558321, acc: 0.000000]

205: [D loss: 0.574745, acc: 0.753906] [A loss: 0.718502, acc: 0.449219]

206: [D loss: 0.689886, acc: 0.509766] [A loss: 1.646207, acc: 0.000000]

207: [D loss: 0.579706, acc: 0.759766] [A loss: 0.710435, acc: 0.503906]

208: [D loss: 0.709791, acc: 0.513672] [A loss: 1.577824, acc: 0.000000]

209: [D loss: 0.581872, acc: 0.740234] [A loss: 0.666501, acc: 0.589844]

210: [D loss: 0.696718, acc: 0.515625] [A loss: 1.595843, acc: 0.000000]

211: [D loss: 0.582528, acc: 0.744141] [A loss: 0.706088, acc: 0.492188]

212: [D loss: 0.692366, acc: 0.519531] [A loss: 1.561489, acc: 0.000000]

213: [D loss: 0.584884, acc: 0.742188] [A loss: 0.706848, acc: 0.484375]

214: [D loss: 0.684679, acc: 0.525391] [A loss: 1.480523, acc: 0.000000]

215: [D loss: 0.583968, acc: 0.757812] [A loss: 0.779733, acc: 0.339844]

216: [D loss: 0.669083, acc: 0.527344] [A loss: 1.524182, acc: 0.000000]

217: [D loss: 0.580016, acc: 0.783203] [A loss: 0.707451, acc: 0.496094]

218: [D loss: 0.702822, acc: 0.519531] [A loss: 1.549842, acc: 0.000000]

219: [D loss: 0.608712, acc: 0.720703] [A loss: 0.738430, acc: 0.429688]

220: [D loss: 0.683573, acc: 0.517578] [A loss: 1.524086, acc: 0.000000]

221: [D loss: 0.607246, acc: 0.693359] [A loss: 0.702375, acc: 0.515625]

222: [D loss: 0.704177, acc: 0.525391] [A loss: 1.657308, acc: 0.000000]

223: [D loss: 0.603331, acc: 0.703125] [A loss: 0.610635, acc: 0.710938]

224: [D loss: 0.717118, acc: 0.501953] [A loss: 1.430291, acc: 0.000000]

225: [D loss: 0.601408, acc: 0.744141] [A loss: 0.758834, acc: 0.402344]

226: [D loss: 0.682414, acc: 0.527344] [A loss: 1.442136, acc: 0.000000]

227: [D loss: 0.592158, acc: 0.750000] [A loss: 0.733325, acc: 0.425781]

228: [D loss: 0.689754, acc: 0.527344] [A loss: 1.474277, acc: 0.000000]

229: [D loss: 0.600397, acc: 0.712891] [A loss: 0.754566, acc: 0.414062]

230: [D loss: 0.702799, acc: 0.521484] [A loss: 1.466574, acc: 0.000000]

231: [D loss: 0.609971, acc: 0.718750] [A loss: 0.753828, acc: 0.437500]

232: [D loss: 0.696546, acc: 0.527344] [A loss: 1.433056, acc: 0.000000]

233: [D loss: 0.603584, acc: 0.734375] [A loss: 0.729402, acc: 0.472656]

234: [D loss: 0.691293, acc: 0.533203] [A loss: 1.500463, acc: 0.000000]

235: [D loss: 0.612721, acc: 0.679688] [A loss: 0.748835, acc: 0.410156]

236: [D loss: 0.709877, acc: 0.515625] [A loss: 1.482194, acc: 0.000000]

237: [D loss: 0.603751, acc: 0.728516] [A loss: 0.715858, acc: 0.503906]

238: [D loss: 0.710946, acc: 0.515625] [A loss: 1.565704, acc: 0.000000]

239: [D loss: 0.613677, acc: 0.685547] [A loss: 0.616414, acc: 0.675781]

240: [D loss: 0.744525, acc: 0.505859] [A loss: 1.453091, acc: 0.003906]

241: [D loss: 0.635414, acc: 0.648438] [A loss: 0.671547, acc: 0.585938]

242: [D loss: 0.704229, acc: 0.505859] [A loss: 1.248188, acc: 0.000000]

243: [D loss: 0.620378, acc: 0.679688] [A loss: 0.802517, acc: 0.269531]

244: [D loss: 0.676368, acc: 0.554688] [A loss: 1.273573, acc: 0.007812]

245: [D loss: 0.627741, acc: 0.697266] [A loss: 0.805003, acc: 0.277344]

246: [D loss: 0.680954, acc: 0.535156] [A loss: 1.364167, acc: 0.000000]

247: [D loss: 0.617311, acc: 0.708984] [A loss: 0.779782, acc: 0.324219]

248: [D loss: 0.704194, acc: 0.525391] [A loss: 1.512358, acc: 0.000000]

249: [D loss: 0.618787, acc: 0.705078] [A loss: 0.656829, acc: 0.621094]

250: [D loss: 0.749846, acc: 0.511719] [A loss: 1.544242, acc: 0.000000]

251: [D loss: 0.619732, acc: 0.691406] [A loss: 0.703778, acc: 0.523438]

252: [D loss: 0.743708, acc: 0.511719] [A loss: 1.425920, acc: 0.000000]

253: [D loss: 0.626959, acc: 0.660156] [A loss: 0.713868, acc: 0.492188]

254: [D loss: 0.715720, acc: 0.513672] [A loss: 1.382949, acc: 0.000000]

255: [D loss: 0.630420, acc: 0.667969] [A loss: 0.766442, acc: 0.335938]

256: [D loss: 0.706938, acc: 0.531250] [A loss: 1.348288, acc: 0.003906]

257: [D loss: 0.633038, acc: 0.687500] [A loss: 0.775958, acc: 0.332031]

258: [D loss: 0.696131, acc: 0.521484] [A loss: 1.309652, acc: 0.003906]

259: [D loss: 0.622492, acc: 0.669922] [A loss: 0.793313, acc: 0.285156]

260: [D loss: 0.685976, acc: 0.527344] [A loss: 1.376491, acc: 0.000000]

261: [D loss: 0.638133, acc: 0.673828] [A loss: 0.790920, acc: 0.355469]

262: [D loss: 0.679706, acc: 0.544922] [A loss: 1.427657, acc: 0.000000]

263: [D loss: 0.644071, acc: 0.628906] [A loss: 0.745437, acc: 0.433594]

264: [D loss: 0.732221, acc: 0.503906] [A loss: 1.472740, acc: 0.000000]

265: [D loss: 0.658985, acc: 0.611328] [A loss: 0.653982, acc: 0.589844]

266: [D loss: 0.738794, acc: 0.507812] [A loss: 1.425443, acc: 0.000000]

267: [D loss: 0.661236, acc: 0.578125] [A loss: 0.620909, acc: 0.738281]

268: [D loss: 0.729037, acc: 0.503906] [A loss: 1.274895, acc: 0.003906]

269: [D loss: 0.646045, acc: 0.638672] [A loss: 0.715880, acc: 0.460938]

270: [D loss: 0.718505, acc: 0.513672] [A loss: 1.197851, acc: 0.003906]

271: [D loss: 0.641561, acc: 0.656250] [A loss: 0.762347, acc: 0.355469]

272: [D loss: 0.680380, acc: 0.533203] [A loss: 1.152511, acc: 0.007812]

273: [D loss: 0.655355, acc: 0.642578] [A loss: 0.818601, acc: 0.261719]

274: [D loss: 0.679781, acc: 0.564453] [A loss: 1.128714, acc: 0.011719]

275: [D loss: 0.655671, acc: 0.628906] [A loss: 0.856017, acc: 0.191406]

276: [D loss: 0.690292, acc: 0.511719] [A loss: 1.310163, acc: 0.000000]

277: [D loss: 0.647914, acc: 0.660156] [A loss: 0.750118, acc: 0.390625]

278: [D loss: 0.704151, acc: 0.513672] [A loss: 1.374055, acc: 0.000000]

279: [D loss: 0.654893, acc: 0.621094] [A loss: 0.650132, acc: 0.625000]

280: [D loss: 0.729345, acc: 0.498047] [A loss: 1.403211, acc: 0.000000]

281: [D loss: 0.657251, acc: 0.605469] [A loss: 0.647218, acc: 0.632812]

282: [D loss: 0.732492, acc: 0.503906] [A loss: 1.268551, acc: 0.003906]

283: [D loss: 0.662744, acc: 0.626953] [A loss: 0.689310, acc: 0.558594]

284: [D loss: 0.700425, acc: 0.511719] [A loss: 1.162058, acc: 0.003906]

285: [D loss: 0.651830, acc: 0.623047] [A loss: 0.780479, acc: 0.312500]

286: [D loss: 0.691948, acc: 0.525391] [A loss: 1.133446, acc: 0.011719]

287: [D loss: 0.650677, acc: 0.644531] [A loss: 0.793982, acc: 0.304688]

288: [D loss: 0.698428, acc: 0.515625] [A loss: 1.247315, acc: 0.000000]

289: [D loss: 0.657979, acc: 0.648438] [A loss: 0.722180, acc: 0.449219]

290: [D loss: 0.701157, acc: 0.517578] [A loss: 1.253019, acc: 0.003906]

291: [D loss: 0.649832, acc: 0.644531] [A loss: 0.701979, acc: 0.503906]

292: [D loss: 0.712979, acc: 0.519531] [A loss: 1.271342, acc: 0.000000]

293: [D loss: 0.660288, acc: 0.621094] [A loss: 0.689836, acc: 0.550781]

294: [D loss: 0.726884, acc: 0.505859] [A loss: 1.200393, acc: 0.003906]

295: [D loss: 0.663560, acc: 0.617188] [A loss: 0.701607, acc: 0.488281]

296: [D loss: 0.707670, acc: 0.507812] [A loss: 1.129499, acc: 0.003906]

297: [D loss: 0.663256, acc: 0.617188] [A loss: 0.739957, acc: 0.371094]

298: [D loss: 0.689587, acc: 0.523438] [A loss: 1.191701, acc: 0.000000]

299: [D loss: 0.660023, acc: 0.623047] [A loss: 0.746944, acc: 0.371094]

300: [D loss: 0.684651, acc: 0.531250] [A loss: 1.150684, acc: 0.000000]

301: [D loss: 0.651196, acc: 0.642578] [A loss: 0.808909, acc: 0.246094]

302: [D loss: 0.678586, acc: 0.552734] [A loss: 1.157491, acc: 0.003906]

303: [D loss: 0.652035, acc: 0.646484] [A loss: 0.783414, acc: 0.289062]

304: [D loss: 0.671595, acc: 0.564453] [A loss: 1.269552, acc: 0.000000]

305: [D loss: 0.648778, acc: 0.662109] [A loss: 0.721312, acc: 0.453125]

306: [D loss: 0.716662, acc: 0.517578] [A loss: 1.334316, acc: 0.000000]

307: [D loss: 0.653186, acc: 0.613281] [A loss: 0.611153, acc: 0.742188]

308: [D loss: 0.724283, acc: 0.505859] [A loss: 1.280901, acc: 0.000000]

309: [D loss: 0.657101, acc: 0.617188] [A loss: 0.631597, acc: 0.683594]

310: [D loss: 0.730646, acc: 0.500000] [A loss: 1.158158, acc: 0.000000]

311: [D loss: 0.657883, acc: 0.625000] [A loss: 0.732748, acc: 0.410156]

312: [D loss: 0.703416, acc: 0.523438] [A loss: 1.026374, acc: 0.015625]

313: [D loss: 0.669057, acc: 0.599609] [A loss: 0.774714, acc: 0.257812]

314: [D loss: 0.681841, acc: 0.539062] [A loss: 1.001734, acc: 0.019531]

315: [D loss: 0.658778, acc: 0.632812] [A loss: 0.791964, acc: 0.246094]

316: [D loss: 0.682347, acc: 0.537109] [A loss: 1.017623, acc: 0.011719]

317: [D loss: 0.662230, acc: 0.595703] [A loss: 0.856697, acc: 0.121094]

318: [D loss: 0.676194, acc: 0.546875] [A loss: 1.032536, acc: 0.019531]

319: [D loss: 0.653828, acc: 0.617188] [A loss: 0.825725, acc: 0.207031]

320: [D loss: 0.668046, acc: 0.556641] [A loss: 1.124810, acc: 0.015625]

...

9919: [D loss: 0.702338, acc: 0.517578] [A loss: 0.747043, acc: 0.378906]

9920: [D loss: 0.703451, acc: 0.494141] [A loss: 0.835588, acc: 0.164062]

9921: [D loss: 0.693855, acc: 0.517578] [A loss: 0.730508, acc: 0.410156]

9922: [D loss: 0.715099, acc: 0.472656] [A loss: 0.826207, acc: 0.195312]

9923: [D loss: 0.706640, acc: 0.474609] [A loss: 0.751718, acc: 0.335938]

9924: [D loss: 0.691947, acc: 0.550781] [A loss: 0.813644, acc: 0.226562]

9925: [D loss: 0.697594, acc: 0.513672] [A loss: 0.768750, acc: 0.269531]

9926: [D loss: 0.689501, acc: 0.552734] [A loss: 0.768900, acc: 0.335938]

9927: [D loss: 0.696636, acc: 0.531250] [A loss: 0.772304, acc: 0.316406]

9928: [D loss: 0.697596, acc: 0.523438] [A loss: 0.806830, acc: 0.261719]

9929: [D loss: 0.697859, acc: 0.505859] [A loss: 0.727642, acc: 0.433594]

9930: [D loss: 0.697926, acc: 0.523438] [A loss: 0.879466, acc: 0.101562]

9931: [D loss: 0.697527, acc: 0.531250] [A loss: 0.701799, acc: 0.488281]

9932: [D loss: 0.710694, acc: 0.490234] [A loss: 0.855742, acc: 0.128906]

9933: [D loss: 0.705487, acc: 0.521484] [A loss: 0.727056, acc: 0.417969]

9934: [D loss: 0.700702, acc: 0.496094] [A loss: 0.823942, acc: 0.179688]

9935: [D loss: 0.704966, acc: 0.498047] [A loss: 0.767886, acc: 0.281250]

9936: [D loss: 0.701806, acc: 0.509766] [A loss: 0.798015, acc: 0.238281]

9937: [D loss: 0.715728, acc: 0.478516] [A loss: 0.776415, acc: 0.292969]

9938: [D loss: 0.697116, acc: 0.511719] [A loss: 0.829303, acc: 0.195312]

9939: [D loss: 0.699822, acc: 0.509766] [A loss: 0.740921, acc: 0.375000]

9940: [D loss: 0.719507, acc: 0.478516] [A loss: 0.859272, acc: 0.160156]

9941: [D loss: 0.695651, acc: 0.525391] [A loss: 0.704798, acc: 0.503906]

9942: [D loss: 0.707328, acc: 0.503906] [A loss: 0.808904, acc: 0.218750]

9943: [D loss: 0.688593, acc: 0.548828] [A loss: 0.744065, acc: 0.394531]

9944: [D loss: 0.699065, acc: 0.523438] [A loss: 0.802332, acc: 0.257812]

9945: [D loss: 0.698068, acc: 0.525391] [A loss: 0.745907, acc: 0.347656]

9946: [D loss: 0.701033, acc: 0.498047] [A loss: 0.813438, acc: 0.199219]

9947: [D loss: 0.691200, acc: 0.541016] [A loss: 0.756370, acc: 0.343750]

9948: [D loss: 0.712499, acc: 0.476562] [A loss: 0.830884, acc: 0.136719]

9949: [D loss: 0.696183, acc: 0.509766] [A loss: 0.704277, acc: 0.488281]

9950: [D loss: 0.696827, acc: 0.523438] [A loss: 0.825646, acc: 0.183594]

9951: [D loss: 0.692385, acc: 0.529297] [A loss: 0.743277, acc: 0.378906]

9952: [D loss: 0.704989, acc: 0.513672] [A loss: 0.853841, acc: 0.144531]

9953: [D loss: 0.703750, acc: 0.476562] [A loss: 0.710911, acc: 0.484375]

9954: [D loss: 0.714116, acc: 0.507812] [A loss: 0.949648, acc: 0.078125]

9955: [D loss: 0.694516, acc: 0.525391] [A loss: 0.714422, acc: 0.453125]

9956: [D loss: 0.710579, acc: 0.500000] [A loss: 0.842483, acc: 0.171875]

9957: [D loss: 0.693028, acc: 0.515625] [A loss: 0.750638, acc: 0.355469]

9958: [D loss: 0.707715, acc: 0.515625] [A loss: 0.801792, acc: 0.230469]

9959: [D loss: 0.706937, acc: 0.507812] [A loss: 0.780088, acc: 0.292969]

9960: [D loss: 0.704332, acc: 0.519531] [A loss: 0.721530, acc: 0.414062]

9961: [D loss: 0.708584, acc: 0.492188] [A loss: 0.830755, acc: 0.191406]

9962: [D loss: 0.699920, acc: 0.500000] [A loss: 0.730089, acc: 0.402344]

9963: [D loss: 0.709511, acc: 0.482422] [A loss: 0.819024, acc: 0.214844]

9964: [D loss: 0.698606, acc: 0.513672] [A loss: 0.775723, acc: 0.296875]

9965: [D loss: 0.707085, acc: 0.474609] [A loss: 0.832017, acc: 0.226562]

9966: [D loss: 0.708760, acc: 0.500000] [A loss: 0.773791, acc: 0.296875]

9967: [D loss: 0.714988, acc: 0.501953] [A loss: 0.876916, acc: 0.128906]

9968: [D loss: 0.699741, acc: 0.527344] [A loss: 0.761598, acc: 0.339844]

9969: [D loss: 0.709108, acc: 0.505859] [A loss: 0.784633, acc: 0.292969]

9970: [D loss: 0.704452, acc: 0.476562] [A loss: 0.811802, acc: 0.210938]

9971: [D loss: 0.706212, acc: 0.490234] [A loss: 0.733744, acc: 0.398438]

9972: [D loss: 0.709742, acc: 0.486328] [A loss: 0.834597, acc: 0.183594]

9973: [D loss: 0.698140, acc: 0.531250] [A loss: 0.685229, acc: 0.550781]

9974: [D loss: 0.711616, acc: 0.492188] [A loss: 0.845335, acc: 0.140625]

9975: [D loss: 0.693955, acc: 0.531250] [A loss: 0.699906, acc: 0.519531]

9976: [D loss: 0.705078, acc: 0.515625] [A loss: 0.821778, acc: 0.203125]

9977: [D loss: 0.697455, acc: 0.513672] [A loss: 0.714708, acc: 0.457031]

9978: [D loss: 0.705634, acc: 0.500000] [A loss: 0.791556, acc: 0.261719]

9979: [D loss: 0.702151, acc: 0.509766] [A loss: 0.729714, acc: 0.398438]

9980: [D loss: 0.710843, acc: 0.492188] [A loss: 0.803456, acc: 0.218750]

9981: [D loss: 0.698539, acc: 0.500000] [A loss: 0.743020, acc: 0.351562]

9982: [D loss: 0.702784, acc: 0.507812] [A loss: 0.829923, acc: 0.164062]

9983: [D loss: 0.710229, acc: 0.480469] [A loss: 0.726384, acc: 0.417969]

9984: [D loss: 0.711040, acc: 0.503906] [A loss: 0.807429, acc: 0.207031]

9985: [D loss: 0.696956, acc: 0.533203] [A loss: 0.755409, acc: 0.363281]

9986: [D loss: 0.700728, acc: 0.488281] [A loss: 0.772643, acc: 0.304688]

9987: [D loss: 0.704541, acc: 0.492188] [A loss: 0.800799, acc: 0.261719]

9988: [D loss: 0.700189, acc: 0.521484] [A loss: 0.770434, acc: 0.320312]

9989: [D loss: 0.697253, acc: 0.509766] [A loss: 0.797975, acc: 0.242188]

9990: [D loss: 0.706917, acc: 0.492188] [A loss: 0.883894, acc: 0.128906]

9991: [D loss: 0.695292, acc: 0.505859] [A loss: 0.720615, acc: 0.433594]

9992: [D loss: 0.698072, acc: 0.535156] [A loss: 0.877171, acc: 0.132812]

9993: [D loss: 0.691101, acc: 0.515625] [A loss: 0.722928, acc: 0.472656]

9994: [D loss: 0.703333, acc: 0.533203] [A loss: 0.944150, acc: 0.082031]

9995: [D loss: 0.699371, acc: 0.521484] [A loss: 0.715438, acc: 0.464844]

9996: [D loss: 0.710147, acc: 0.505859] [A loss: 0.822873, acc: 0.191406]

9997: [D loss: 0.708276, acc: 0.480469] [A loss: 0.714018, acc: 0.453125]

9998: [D loss: 0.701492, acc: 0.507812] [A loss: 0.802196, acc: 0.257812]

9999: [D loss: 0.695118, acc: 0.513672] [A loss: 0.777079, acc: 0.292969]

Elapsed: 2.9090983357694413 hr
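The log above is long, so the trend is easier to see in aggregate. Below is a minimal Python sketch that parses lines in exactly this format (`235: [D loss: 0.612721, acc: 0.679688] [A loss: 0.748835, acc: 0.410156]`). It then averages each metric over a window of steps. The helpers `parse_log` and `summarize` are hypothetical and not part of the original code. The sample lines are copied verbatim from the log above.

```python
import math
import re
from statistics import mean

# Matches log lines such as:
# 235: [D loss: 0.612721, acc: 0.679688] [A loss: 0.748835, acc: 0.410156]
LOG_LINE = re.compile(
    r"^(\d+): \[D loss: ([\d.]+), acc: ([\d.]+)\] "
    r"\[A loss: ([\d.]+), acc: ([\d.]+)\]"
)


def parse_log(text):
    """Return (step, d_loss, d_acc, a_loss, a_acc) tuples; non-matching lines are skipped."""
    records = []
    for line in text.splitlines():
        match = LOG_LINE.match(line.strip())
        if match:
            step = int(match.group(1))
            d_loss, d_acc, a_loss, a_acc = (float(g) for g in match.groups()[1:])
            records.append((step, d_loss, d_acc, a_loss, a_acc))
    return records


def summarize(records):
    """Average each metric over the given records."""
    return {
        "d_loss": mean(r[1] for r in records),
        "d_acc": mean(r[2] for r in records),
        "a_loss": mean(r[3] for r in records),
        "a_acc": mean(r[4] for r in records),
    }


# Sample lines copied from the training log (empty lines are skipped by parse_log).
early = parse_log("""
235: [D loss: 0.612721, acc: 0.679688] [A loss: 0.748835, acc: 0.410156]
236: [D loss: 0.709877, acc: 0.515625] [A loss: 1.482194, acc: 0.000000]
237: [D loss: 0.603751, acc: 0.728516] [A loss: 0.715858, acc: 0.503906]
238: [D loss: 0.710946, acc: 0.515625] [A loss: 1.565704, acc: 0.000000]
""")
late = parse_log("""
9996: [D loss: 0.710147, acc: 0.505859] [A loss: 0.822873, acc: 0.191406]
9997: [D loss: 0.708276, acc: 0.480469] [A loss: 0.714018, acc: 0.453125]
9998: [D loss: 0.701492, acc: 0.507812] [A loss: 0.802196, acc: 0.257812]
9999: [D loss: 0.695118, acc: 0.513672] [A loss: 0.777079, acc: 0.292969]
""")

for name, records in (("early", early), ("late", late)):
    stats = summarize(records)
    print(name, {k: round(v, 3) for k, v in stats.items()})

# Binary cross-entropy of a discriminator that outputs 0.5 for every image.
print("ln 2 =", round(math.log(2), 3))
```

On these samples, the mean D accuracy falls from about 0.61 (steps 235 to 238) to about 0.50 (steps 9996 to 9999). The late mean D loss is about 0.704, close to ln 2 ≈ 0.693. That value is the binary cross-entropy of a discriminator that outputs 0.5 for every image. Both numbers suggest the discriminator can barely tell real images from generated ones. Four lines are a small window, so averaging over a few hundred steps would give a steadier picture.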

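Training works like this. First, the generator produces a batch of images labeled 0 (fake). These are combined with real images labeled 1 (real), and the discriminator is trained on the mixed batch. The adversarial model, in turn, takes the generator's output labeled 1 and has the discriminator judge it. In the log, D stands for the discriminator and A for the adversarial model.

At the start, the discriminator's accuracy is low because it is not yet a good police officer. It then climbs to around 90%, which means it has become a good police officer. After that, its accuracy keeps falling until, by the end, it hovers around 50%, so it is essentially guessing. This is not because the discriminator got worse. It is because the generator kept improving until it became a "master thief", which is exactly our goal. The adversarial model's accuracy, which is the fraction of generated images the discriminator accepts as real, tells the same story: our police officer can no longer reliably spot the thief's counterfeit notes. So the GAN training succeeded.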
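The labeling scheme described above can be sketched with NumPy. This is a minimal illustration, not the article's own training loop, and it rests on several assumptions:

- `fake_generator` is a hypothetical stand-in for the trained generator: a fixed random projection followed by a sigmoid.
- The random `real` array stands in for MNIST images scaled to [0, 1].
- The adversarial model is the generator stacked on the discriminator, as in the usual Keras DCGAN setup, so its input is the noise vector.

The 100-dimensional noise drawn uniformly from [-1, 1] follows the generator description earlier in the article.

```python
import numpy as np

NOISE_DIM = 100  # 100-dimensional noise, as in the generator description
BATCH_SIZE = 4   # tiny batch, for illustration only


def fake_generator(noise):
    """Hypothetical stand-in generator: fixed random projection + sigmoid -> 28x28x1 images in [0, 1]."""
    rng = np.random.default_rng(0)  # fixed seed, so the "weights" are the same on every call
    w = rng.standard_normal((noise.shape[1], 28 * 28))
    images = 1.0 / (1.0 + np.exp(-noise @ w))  # sigmoid output, like the generator's last layer
    return images.reshape(-1, 28, 28, 1)


def discriminator_batch(real_images, noise):
    """Discriminator batch: real images labeled 1, generated images labeled 0."""
    fake_images = fake_generator(noise)
    x = np.concatenate([real_images, fake_images])
    y = np.ones((len(x), 1))
    y[len(real_images):] = 0  # second half of the batch is fake
    return x, y


def adversarial_batch(noise):
    """Adversarial batch: generated images (via their noise) labeled 1, i.e. 'real'."""
    y = np.ones((len(noise), 1))
    return noise, y


rng = np.random.default_rng(42)
real = rng.random((BATCH_SIZE, 28, 28, 1))  # placeholder for real MNIST images
noise = rng.uniform(-1.0, 1.0, size=(BATCH_SIZE, NOISE_DIM))

x, y = discriminator_batch(real, noise)  # 8 images: 4 labeled 1, then 4 labeled 0
z, y_adv = adversarial_batch(noise)      # 4 noise vectors, all labeled 1
```

With compiled Keras models, batches like these would typically be passed to `train_on_batch`. For example, `d_model.train_on_batch(x, y)` would train the discriminator and `adv_model.train_on_batch(z, y_adv)` the adversarial model, where `d_model` and `adv_model` are hypothetical names. Labeling the generated images as 1 in the adversarial step is what pushes the generator toward images the discriminator classifies as real.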

Final Words

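That's the generative adversarial network, GAN. Later in the text I switched from "criminal" to "thief": a highly skilled thief can be called a "master thief", but I couldn't think of a good word for a highly skilled criminal. Apologies for any confusion this may cause.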
