1. Import the OpenCV library

This step is described in detail in the article "Importing, configuring, and using OpenCV in Android Studio".

2. Add files

Set the permissions in the Manifest, mainly the camera permission.

<?xml version="1.0" encoding="utf-8"?>

<manifest xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:tools="http://schemas.android.com/tools">

<uses-permission android:name="android.permission.CAMERA"/>

<uses-feature android:name="android.hardware.camera" android:required="false"/>

<uses-feature android:name="android.hardware.camera.autofocus" android:required="false"/>

<uses-feature android:name="android.hardware.camera.front" android:required="false"/>

<uses-feature android:name="android.hardware.camera.front.autofocus" android:required="false"/>

<supports-screens android:resizeable="true"

android:smallScreens="true"

android:normalScreens="true"

android:largeScreens="true"

android:anyDensity="true" />

<application

android:allowBackup="true"

android:dataExtractionRules="@xml/data_extraction_rules"

android:fullBackupContent="@xml/backup_rules"

android:icon="@mipmap/ic_launcher"

android:label="@string/app_name"

android:supportsRtl="true"

android:theme="@style/Theme.MyOpenCV01"

tools:targetApi="31">

<activity

android:name=".FdActivity"

android:exported="true">

<intent-filter>

<action android:name="android.intent.action.MAIN" />

<category android:name="android.intent.category.LAUNCHER" />

</intent-filter>

</activity>

</application>

</manifest>

Layout code

<?xml version="1.0" encoding="utf-8"?>

<RelativeLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:opencv="http://schemas.android.com/apk/res-auto"

android:layout_width="match_parent"

android:layout_height="match_parent"

android:orientation="vertical">

<org.opencv.android.JavaCameraView

android:id="@+id/cameraView_face"

android:layout_width="match_parent"

android:layout_height="match_parent"

android:visibility="gone"

opencv:camera_id="any"

opencv:show_fps="true" />

</RelativeLayout>

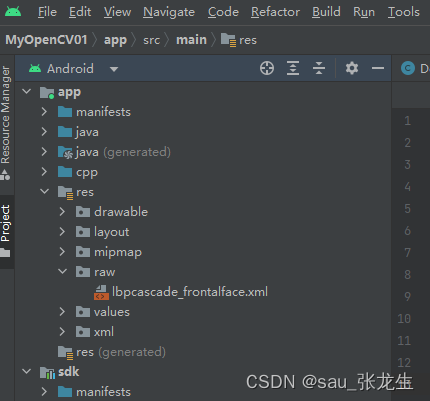

Reference the OpenCV cascade classifier and JNI

Copy lbpcascade_frontalface.xml from the opencv-android-sdk\samples\face-detection\res\raw folder of the extracted SDK into the project's res/raw folder.
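At runtime, CascadeClassifier is constructed from a file path, which is why FdActivity copies the raw resource into a private file before loading it. The copy step can be sketched in plain Java as follows. The class name CascadeCopier and the method copyToFile are hypothetical helpers introduced only for illustration; they are not part of OpenCV or of the sample. Unlike the sample code, this sketch uses try-with-resources so that both streams are closed even if the copy fails.

```java
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

// Hypothetical helper (not part of OpenCV): copies a stream into a file.
final class CascadeCopier {
    private CascadeCopier() {}

    /** Copies all bytes from in to target and returns the number of bytes written. */
    static long copyToFile(InputStream in, File target) throws IOException {
        long total = 0;
        byte[] buffer = new byte[4096];
        // Both streams are closed automatically, even when read or write throws.
        try (InputStream src = in; OutputStream dst = new FileOutputStream(target)) {
            int bytesRead;
            while ((bytesRead = src.read(buffer)) != -1) {
                dst.write(buffer, 0, bytesRead);
                total += bytesRead;
            }
        }
        return total;
    }
}
```

In an Activity, the input stream would come from getResources().openRawResource(R.raw.lbpcascade_frontalface) and the target from getDir("cascade", Context.MODE_PRIVATE), as in the FdActivity code.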

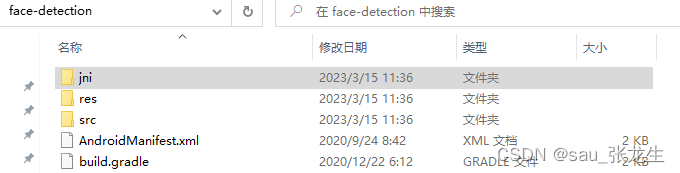

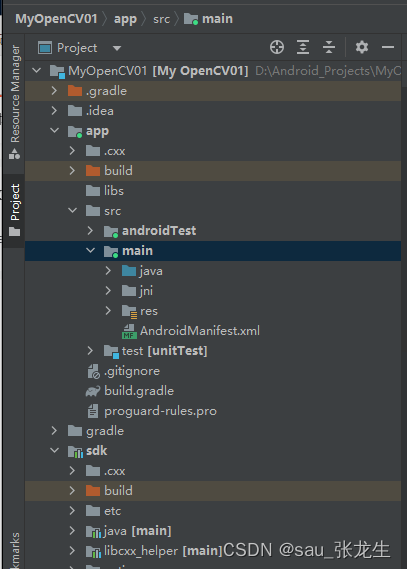

Next, add JNI to the project: copy the entire jni directory from the opencv-android-sdk\samples\face-detection sample into the project directory, as shown in the figure.

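Add build options

Open the app module's build.gradle.

Add the following two blocks. The first one (arguments and targets) goes inside defaultConfig { } within android { }; the second one (the path to CMakeLists.txt) goes directly inside android { }.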

externalNativeBuild {

cmake{

arguments "-DOpenCV_DIR=" + project(":sdk").projectDir + "/native/jni",

"-DANDROID_TOOLCHAIN=clang",

"-DANDROID_STL=c++_shared"

targets "detection_based_tracker"

}

}

externalNativeBuild{

cmake{

path 'src/main/jni/CMakeLists.txt'

}

}

Now build the project. The compiled .so file should appear under build/intermediates/cmake in the project folder.

Reference the JNI methods

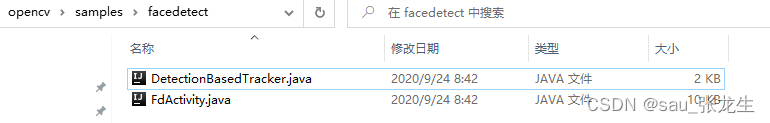

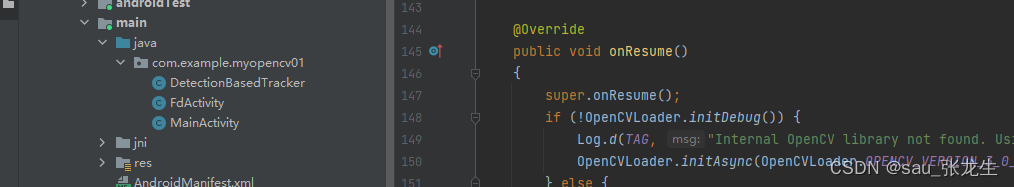

Here we simply copy the face-detection source code from the opencv-sdk/sample folder into the current project.

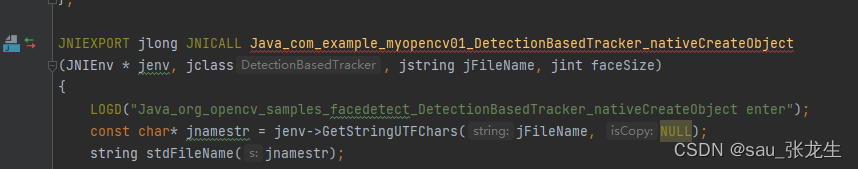

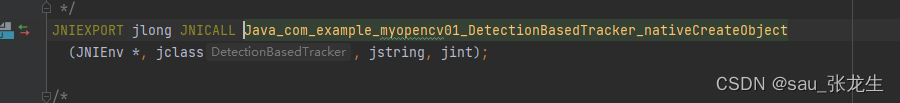

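At this point, the references to the external JNI code in the DetectionBasedTracker class are reported as errors. Open DetectionBasedTracker_jni.cpp and the corresponding .h file under jni, and change the parts underlined in red to your own package name.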
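The package name matters because, by default, JNI resolves each native method by a C function name derived from the fully qualified Java class name. A minimal sketch of that naming rule is shown below. JniNames is a hypothetical helper written for this illustration, and it covers only package, class, and method names (not the signature suffix used for overloaded methods).

```java
// Hypothetical helper: builds the C function name JNI expects for a native method.
final class JniNames {
    private JniNames() {}

    /** Applies JNI name escaping to a package, class, or method name. */
    static String mangle(String name) {
        StringBuilder sb = new StringBuilder();
        for (char c : name.toCharArray()) {
            if (c == '.') {
                sb.append('_');                 // package separator
            } else if (c == '_') {
                sb.append("_1");                // literal underscore
            } else if ((c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z')
                    || (c >= '0' && c <= '9')) {
                sb.append(c);                   // ASCII letters and digits stay as-is
            } else {
                sb.append(String.format("_0%04x", (int) c)); // other characters
            }
        }
        return sb.toString();
    }

    /** Returns "Java_" + escaped fully qualified class name + "_" + escaped method name. */
    static String functionName(String pkg, String cls, String method) {
        return "Java_" + mangle(pkg + "." + cls) + "_" + mangle(method);
    }
}
```

For the package used in this article, JniNames.functionName("com.example.myopencv01", "DetectionBasedTracker", "nativeCreateObject") yields Java_com_example_myopencv01_DetectionBasedTracker_nativeCreateObject, which is the form the function names in the .cpp and .h files need to take after the edit.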

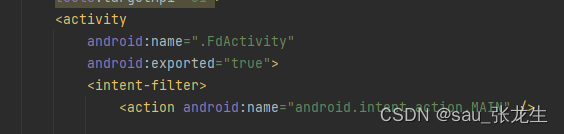

Finally, change the launch activity in the Manifest file to .FdActivity.

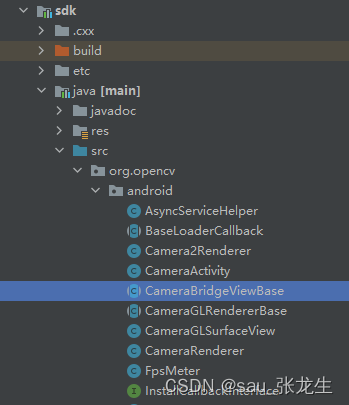

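If you run the app now, the preview occupies only part of the screen and is rotated. To fix this, change the implementation of deliverAndDrawFrame in the CameraBridgeViewBase class of the imported OpenCV library: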

/**

* Get the rotation (in degrees) to apply for the current screen rotation

*/

private int rotationToDegree() {

WindowManager windowManager = (WindowManager) getContext().getSystemService(Context.WINDOW_SERVICE);

int rotation = windowManager.getDefaultDisplay().getRotation();

int degrees = 0;

switch(rotation) {

case Surface.ROTATION_0:

if(mCameraIndex == CAMERA_ID_FRONT) {

degrees = -90;

} else {

degrees = 90;

}

break;

case Surface.ROTATION_90:

break;

case Surface.ROTATION_180:

break;

case Surface.ROTATION_270:

if(mCameraIndex == CAMERA_ID_ANY || mCameraIndex == CAMERA_ID_BACK) {

degrees = 180;

}

break;

}

return degrees;

}

/**

* Compute the scale factor that makes the source fill the target

*/

private float calcScale(int widthSource, int heightSource, int widthTarget, int heightTarget) {

if(widthTarget <= heightTarget) {

return (float) heightTarget / (float) heightSource;

} else {

return (float) widthTarget / (float) widthSource;

}

}

/**

* This method shall be called by the subclasses when they have valid

* object and want it to be delivered to external client (via callback) and

* then displayed on the screen.

* @param frame - the current frame to be delivered

*/

protected void deliverAndDrawFrame(CvCameraViewFrame frame) {

Mat modified;

if (mListener != null) {

modified = mListener.onCameraFrame(frame);

} else {

modified = frame.rgba();

}

boolean bmpValid = true;

if (modified != null) {

try {

Utils.matToBitmap(modified, mCacheBitmap);

} catch(Exception e) {

Log.e(TAG, "Mat type: " + modified);

Log.e(TAG, "Bitmap type: " + mCacheBitmap.getWidth() + "*" + mCacheBitmap.getHeight());

Log.e(TAG, "Utils.matToBitmap() throws an exception: " + e.getMessage());

bmpValid = false;

}

}

if (bmpValid && mCacheBitmap != null) {

Canvas canvas = getHolder().lockCanvas();

if (canvas != null) {

canvas.drawColor(0, android.graphics.PorterDuff.Mode.CLEAR);

if (BuildConfig.DEBUG) Log.d(TAG, "mStretch value: " + mScale);

// Added: rotate and scale the frame so the preview fills the screen

int degrees = rotationToDegree();

Matrix matrix = new Matrix();

matrix.postRotate(degrees);

Bitmap outputBitmap = Bitmap.createBitmap(mCacheBitmap, 0, 0, mCacheBitmap.getWidth(), mCacheBitmap.getHeight(), matrix, true);

if (outputBitmap.getWidth() <= canvas.getWidth()) {

mScale = calcScale(outputBitmap.getWidth(), outputBitmap.getHeight(), canvas.getWidth(), canvas.getHeight());

} else {

mScale = calcScale(canvas.getWidth(), canvas.getHeight(), outputBitmap.getWidth(), outputBitmap.getHeight());

}

if (mScale != 0) {

canvas.scale(mScale, mScale, 0, 0);

}

Log.d(TAG, "mStretch value: " + mScale);

canvas.drawBitmap(outputBitmap, 0, 0, null);

/*

if (mScale != 0) {

canvas.drawBitmap(mCacheBitmap, new Rect(0,0,mCacheBitmap.getWidth(), mCacheBitmap.getHeight()),

new Rect((int)((canvas.getWidth() - mScale*mCacheBitmap.getWidth()) / 2),

(int)((canvas.getHeight() - mScale*mCacheBitmap.getHeight()) / 2),

(int)((canvas.getWidth() - mScale*mCacheBitmap.getWidth()) / 2 + mScale*mCacheBitmap.getWidth()),

(int)((canvas.getHeight() - mScale*mCacheBitmap.getHeight()) / 2 + mScale*mCacheBitmap.getHeight())), null);

} else {

canvas.drawBitmap(mCacheBitmap, new Rect(0,0,mCacheBitmap.getWidth(), mCacheBitmap.getHeight()),

new Rect((canvas.getWidth() - mCacheBitmap.getWidth()) / 2,

(canvas.getHeight() - mCacheBitmap.getHeight()) / 2,

(canvas.getWidth() - mCacheBitmap.getWidth()) / 2 + mCacheBitmap.getWidth(),

(canvas.getHeight() - mCacheBitmap.getHeight()) / 2 + mCacheBitmap.getHeight()), null);

}

*/

if (mFpsMeter != null) {

mFpsMeter.measure();

mFpsMeter.draw(canvas, 20, 30);

}

getHolder().unlockCanvasAndPost(canvas);

}

}

}
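To see what the scaling logic does with concrete numbers, here is a minimal plain-Java sketch. calcScale is copied from the method above; PreviewScale and rotatedSize are hypothetical names introduced for this illustration, and the frame and canvas sizes in the usage note are assumed example values, not measurements.

```java
// Hypothetical stand-alone version of the scaling logic, without Android classes.
final class PreviewScale {
    private PreviewScale() {}

    /** Same logic as calcScale above: fit height in portrait targets, width otherwise. */
    static float calcScale(int widthSource, int heightSource, int widthTarget, int heightTarget) {
        if (widthTarget <= heightTarget) {
            return (float) heightTarget / (float) heightSource;
        } else {
            return (float) widthTarget / (float) widthSource;
        }
    }

    /** Size {width, height} of a bitmap after rotation by a multiple of 90 degrees. */
    static int[] rotatedSize(int width, int height, int degrees) {
        boolean swap = Math.abs(degrees) % 180 == 90; // 90 or -90 swaps the sides
        return swap ? new int[] {height, width} : new int[] {width, height};
    }
}
```

Assuming a 640x480 camera frame rotated by 90 degrees, rotatedSize gives 480x640. On an assumed 1080x1920 portrait canvas, calcScale(480, 640, 1080, 1920) is 1920 / 640 = 3.0, so the bitmap is drawn at 1440x1920: the height fills the screen and the extra width is cropped, because the bitmap is drawn from the top-left corner.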

Finally, build and run. The result looks like this:

FdActivity code

package com.example.myopencv01;

import java.io.File;

import java.io.FileOutputStream;

import java.io.IOException;

import java.io.InputStream;

import java.util.Collections;

import java.util.List;

import org.opencv.android.BaseLoaderCallback;

import org.opencv.android.CameraActivity;

import org.opencv.android.CameraBridgeViewBase.CvCameraViewFrame;

import org.opencv.android.LoaderCallbackInterface;

import org.opencv.android.OpenCVLoader;

import org.opencv.core.Core;

import org.opencv.core.Mat;

import org.opencv.core.MatOfRect;

import org.opencv.core.Rect;

import org.opencv.core.Scalar;

import org.opencv.core.Size;

import org.opencv.android.CameraBridgeViewBase;

import org.opencv.android.CameraBridgeViewBase.CvCameraViewListener2;

import org.opencv.objdetect.CascadeClassifier;

import org.opencv.imgproc.Imgproc;

import android.app.Activity;

import android.content.Context;

import android.os.Bundle;

import android.util.Log;

import android.view.Menu;

import android.view.MenuItem;

import android.view.WindowManager;

public class FdActivity extends CameraActivity implements CvCameraViewListener2 {

private static final String TAG = "OCVSample::Activity";

private static final Scalar FACE_RECT_COLOR = new Scalar(0, 255, 0, 255);

public static final int JAVA_DETECTOR = 0;

public static final int NATIVE_DETECTOR = 1;

private MenuItem mItemFace50;

private MenuItem mItemFace40;

private MenuItem mItemFace30;

private MenuItem mItemFace20;

private MenuItem mItemType;

private Mat mRgba;

private Mat mGray;

private File mCascadeFile;

private CascadeClassifier mJavaDetector;

private DetectionBasedTracker mNativeDetector;

private int mDetectorType = JAVA_DETECTOR;

private String[] mDetectorName;

private float mRelativeFaceSize = 0.2f;

private int mAbsoluteFaceSize = 0;

private CameraBridgeViewBase mOpenCvCameraView;

private BaseLoaderCallback mLoaderCallback = new BaseLoaderCallback(this) {

@Override

public void onManagerConnected(int status) {

switch (status) {

case LoaderCallbackInterface.SUCCESS:

{

Log.i(TAG, "OpenCV loaded successfully");

// OpenCV initialized successfully; now load the native .so library

System.loadLibrary("detection_based_tracker");

try {

// load the cascade file (the face detection model) from application resources

InputStream is = getResources().openRawResource(R.raw.lbpcascade_frontalface);

File cascadeDir = getDir("cascade", Context.MODE_PRIVATE);

mCascadeFile = new File(cascadeDir, "lbpcascade_frontalface.xml");

FileOutputStream os = new FileOutputStream(mCascadeFile);

byte[] buffer = new byte[4096];

int bytesRead;

while ((bytesRead = is.read(buffer)) != -1) {

os.write(buffer, 0, bytesRead);

}

is.close();

os.close();

// Initialize the detection engine

mJavaDetector = new CascadeClassifier(mCascadeFile.getAbsolutePath());

if (mJavaDetector.empty()) {

Log.e(TAG, "Failed to load cascade classifier");

mJavaDetector = null;

} else

Log.i(TAG, "Loaded cascade classifier from " + mCascadeFile.getAbsolutePath());

mNativeDetector = new DetectionBasedTracker(mCascadeFile.getAbsolutePath(), 0);

cascadeDir.delete();

} catch (IOException e) {

e.printStackTrace();

Log.e(TAG, "Failed to load cascade. Exception thrown: " + e);

}

mOpenCvCameraView.enableView();

} break;

default:

{

super.onManagerConnected(status);

} break;

}

}

};

public FdActivity() {

mDetectorName = new String[2];

mDetectorName[JAVA_DETECTOR] = "Java";

mDetectorName[NATIVE_DETECTOR] = "Native (tracking)";

Log.i(TAG, "Instantiated new " + this.getClass());

}

/** Called when the activity is first created. */

@Override

public void onCreate(Bundle savedInstanceState) {

Log.i(TAG, "called onCreate");

super.onCreate(savedInstanceState);

getWindow().addFlags(WindowManager.LayoutParams.FLAG_KEEP_SCREEN_ON);

setContentView(R.layout.activity_main);

mOpenCvCameraView = (CameraBridgeViewBase) findViewById(R.id.cameraView_face);

mOpenCvCameraView.setVisibility(CameraBridgeViewBase.VISIBLE);

mOpenCvCameraView.setCvCameraViewListener(this);

}

@Override

public void onPause()

{

super.onPause();

if (mOpenCvCameraView != null)

mOpenCvCameraView.disableView();

}

@Override

public void onResume()

{

super.onResume();

if (!OpenCVLoader.initDebug()) {

Log.d(TAG, "Internal OpenCV library not found. Using OpenCV Manager for initialization");

OpenCVLoader.initAsync(OpenCVLoader.OPENCV_VERSION_3_0_0, this, mLoaderCallback);

} else {

Log.d(TAG, "OpenCV library found inside package. Using it!");

mLoaderCallback.onManagerConnected(LoaderCallbackInterface.SUCCESS);

}

}

@Override

protected List<? extends CameraBridgeViewBase> getCameraViewList() {

return Collections.singletonList(mOpenCvCameraView);

}

public void onDestroy() {

super.onDestroy();

mOpenCvCameraView.disableView();

}

public void onCameraViewStarted(int width, int height) {

mGray = new Mat();

mRgba = new Mat();

}

public void onCameraViewStopped() {

mGray.release();

mRgba.release();

}

public Mat onCameraFrame(CvCameraViewFrame inputFrame) {

mRgba = inputFrame.rgba();

mGray = inputFrame.gray();

if (mAbsoluteFaceSize == 0) {

int height = mGray.rows();

if (Math.round(height * mRelativeFaceSize) > 0) {

mAbsoluteFaceSize = Math.round(height * mRelativeFaceSize);

}

mNativeDetector.setMinFaceSize(mAbsoluteFaceSize);

}

MatOfRect faces = new MatOfRect();

if (mDetectorType == JAVA_DETECTOR) {

if (mJavaDetector != null)

mJavaDetector.detectMultiScale(mGray, faces, 1.1, 2, 2, // TODO: objdetect.CV_HAAR_SCALE_IMAGE

new Size(mAbsoluteFaceSize, mAbsoluteFaceSize), new Size());

}

else if (mDetectorType == NATIVE_DETECTOR) {

if (mNativeDetector != null)

mNativeDetector.detect(mGray, faces);

}

else {

Log.e(TAG, "Detection method is not selected!");

}

// Draw the detection rectangles

Rect[] facesArray = faces.toArray();

for (int i = 0; i < facesArray.length; i++)

Imgproc.rectangle(mRgba, facesArray[i].tl(), facesArray[i].br(), FACE_RECT_COLOR, 3);

Log.i(TAG, "Detected " + facesArray.length + " face(s)");

return mRgba;

}

@Override

public boolean onCreateOptionsMenu(Menu menu) {

Log.i(TAG, "called onCreateOptionsMenu");

mItemFace50 = menu.add("Face size 50%");

mItemFace40 = menu.add("Face size 40%");

mItemFace30 = menu.add("Face size 30%");

mItemFace20 = menu.add("Face size 20%");

mItemType = menu.add(mDetectorName[mDetectorType]);

return true;

}

@Override

public boolean onOptionsItemSelected(MenuItem item) {

Log.i(TAG, "called onOptionsItemSelected; selected item: " + item);

if (item == mItemFace50)

setMinFaceSize(0.5f);

else if (item == mItemFace40)

setMinFaceSize(0.4f);

else if (item == mItemFace30)

setMinFaceSize(0.3f);

else if (item == mItemFace20)

setMinFaceSize(0.2f);

else if (item == mItemType) {

int tmpDetectorType = (mDetectorType + 1) % mDetectorName.length;

item.setTitle(mDetectorName[tmpDetectorType]);

setDetectorType(tmpDetectorType);

}

return true;

}

private void setMinFaceSize(float faceSize) {

mRelativeFaceSize = faceSize;

mAbsoluteFaceSize = 0;

}

private void setDetectorType(int type) {

if (mDetectorType != type) {

mDetectorType = type;

if (type == NATIVE_DETECTOR) {

Log.i(TAG, "Detection Based Tracker enabled");

mNativeDetector.start();

} else {

Log.i(TAG, "Cascade detector enabled");

mNativeDetector.stop();

}

}

}

}
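The minimum face size passed to the detectors is derived from the frame height and the relative size chosen in the menu. A minimal sketch of that calculation in plain Java follows; FaceSize and absoluteFaceSize are hypothetical names, and the frame heights in the usage note are assumed example values.

```java
// Hypothetical helper mirroring the size calculation in onCameraFrame.
final class FaceSize {
    private FaceSize() {}

    /**
     * Returns the minimum face size in pixels for a frame of the given height.
     * If rounding gives 0, the current value is kept, as in onCameraFrame.
     */
    static int absoluteFaceSize(int frameHeight, float relativeFaceSize, int current) {
        int candidate = Math.round(frameHeight * relativeFaceSize);
        return candidate > 0 ? candidate : current;
    }
}
```

With an assumed frame height of 720 pixels and the default relative size of 0.2f, the minimum face size is 144 pixels; choosing "Face size 50%" on a 480-pixel-high frame gives 240 pixels. Larger values mean fewer candidate windows to scan, at the cost of missing small or distant faces.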

DetectionBasedTracker class code

package com.example.myopencv01;

import org.opencv.core.Mat;

import org.opencv.core.MatOfRect;

public class DetectionBasedTracker

{

// Constructor: initializes the native face detector

public DetectionBasedTracker(String cascadeName, int minFaceSize) {

mNativeObj = nativeCreateObject(cascadeName, minFaceSize);

}

// Start tracking

public void start() {

nativeStart(mNativeObj);

}

// Stop tracking

public void stop() {

nativeStop(mNativeObj);

}

// Set the minimum face size

public void setMinFaceSize(int size) {

nativeSetFaceSize(mNativeObj, size);

}

// Detect faces

public void detect(Mat imageGray, MatOfRect faces) {

nativeDetect(mNativeObj, imageGray.getNativeObjAddr(), faces.getNativeObjAddr());

}

// Release native resources

public void release() {

nativeDestroyObject(mNativeObj);

mNativeObj = 0;

}

private long mNativeObj = 0;

// Native methods

private static native long nativeCreateObject(String cascadeName, int minFaceSize);

private static native void nativeDestroyObject(long thiz);

private static native void nativeStart(long thiz);

private static native void nativeStop(long thiz);

private static native void nativeSetFaceSize(long thiz, int size);

private static native void nativeDetect(long thiz, long inputImage, long faces);

}
