Parameter Server's Architecture

The parameter server architecture was proposed by Mu Li and Alex. Its main difference from MapReduce is that the parameter server is asynchronous, whereas MapReduce is synchronous.

The Parameter Server

The parameter server was proposed in [1] for scalable machine learning. Characteristics: client-server architecture, message-passing communication, and asynchronous updates.

(Note that MapReduce is bulk synchronous.)
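The three characteristics above can be illustrated with a minimal single-process sketch. It is not the system from [1]: threads stand in for machines, a `queue.Queue` stands in for the network, and the names `server_loop`, `client`, and `demo` are hypothetical. The server owns the parameters as key-value pairs, and clients interact with it only through "push" and "pull" messages.

```python
import queue
import threading

def server_loop(inbox, params):
    """Server: handle one message at a time until a 'stop' message arrives."""
    while True:
        msg = inbox.get()
        kind = msg[0]
        if kind == "stop":
            break
        if kind == "push":
            _, key, delta = msg
            params[key] = params.get(key, 0.0) + delta
        elif kind == "pull":
            _, key, reply = msg
            reply.put(params.get(key, 0.0))

def client(inbox, key, delta):
    """Client: push an update, then pull the current value of the key."""
    inbox.put(("push", key, delta))   # returns immediately, no waiting on peers
    reply = queue.Queue()
    inbox.put(("pull", key, reply))
    return reply.get()

def demo(num_clients=4):
    inbox = queue.Queue()             # stand-in for the network (assumption)
    params = {}
    server = threading.Thread(target=server_loop, args=(inbox, params))
    server.start()
    clients = [threading.Thread(target=client, args=(inbox, "w0", 1.0))
               for _ in range(num_clients)]
    for c in clients:
        c.start()
    for c in clients:
        c.join()
    inbox.put(("stop",))
    server.join()
    return params["w0"]
```

No client ever waits for another client. The server applies each push in whatever order the messages arrive, which is the asynchronous behavior that a bulk-synchronous system does not have.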

Ray [2], an open-source software system, supports the parameter server pattern.

Reference

1. Li and others: Scaling distributed machine learning with the parameter server. In OSDI, 2014

2. Moritz and others: Ray: A distributed framework for emerging AI applications. In OSDI, 2018

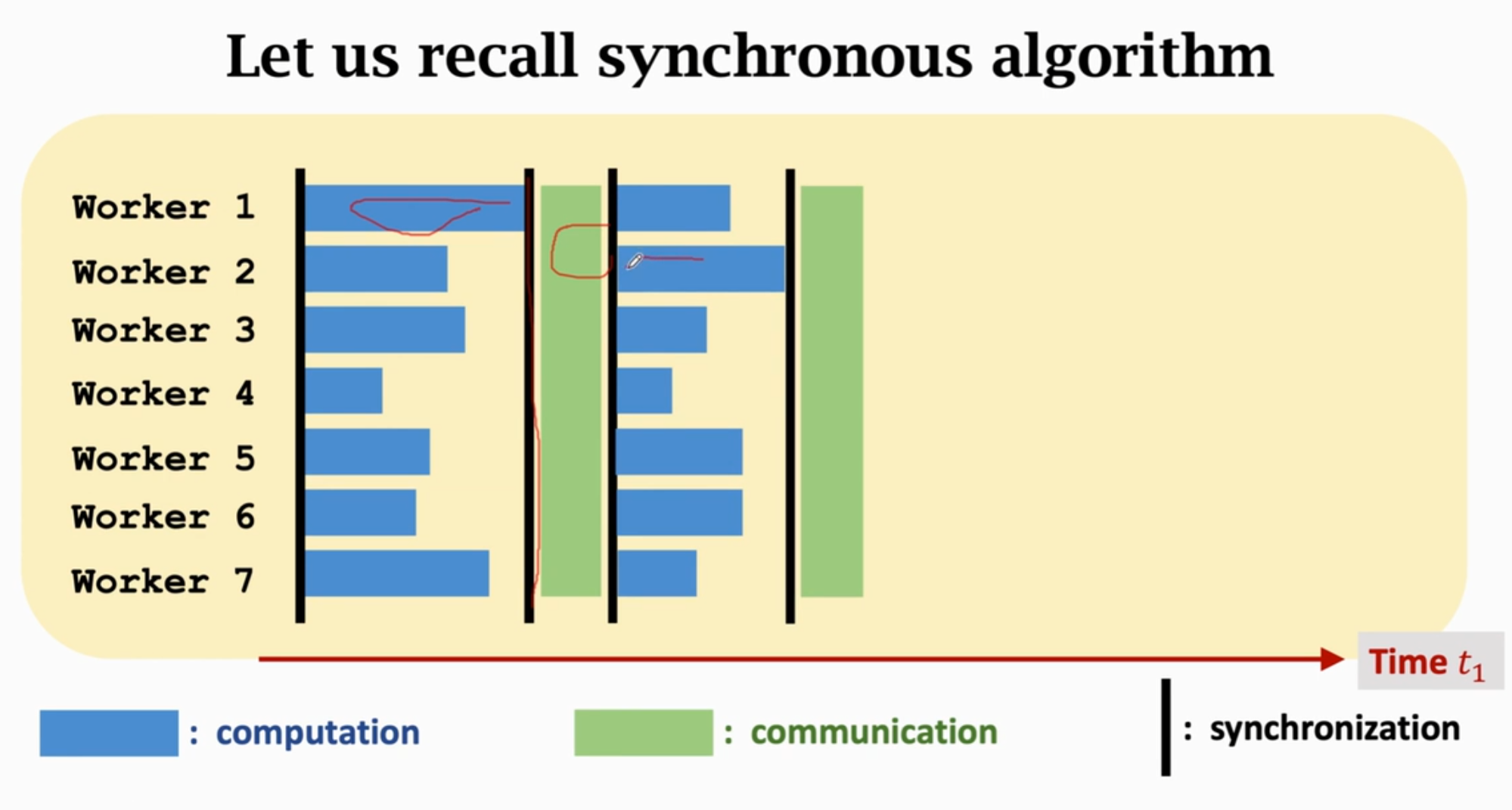

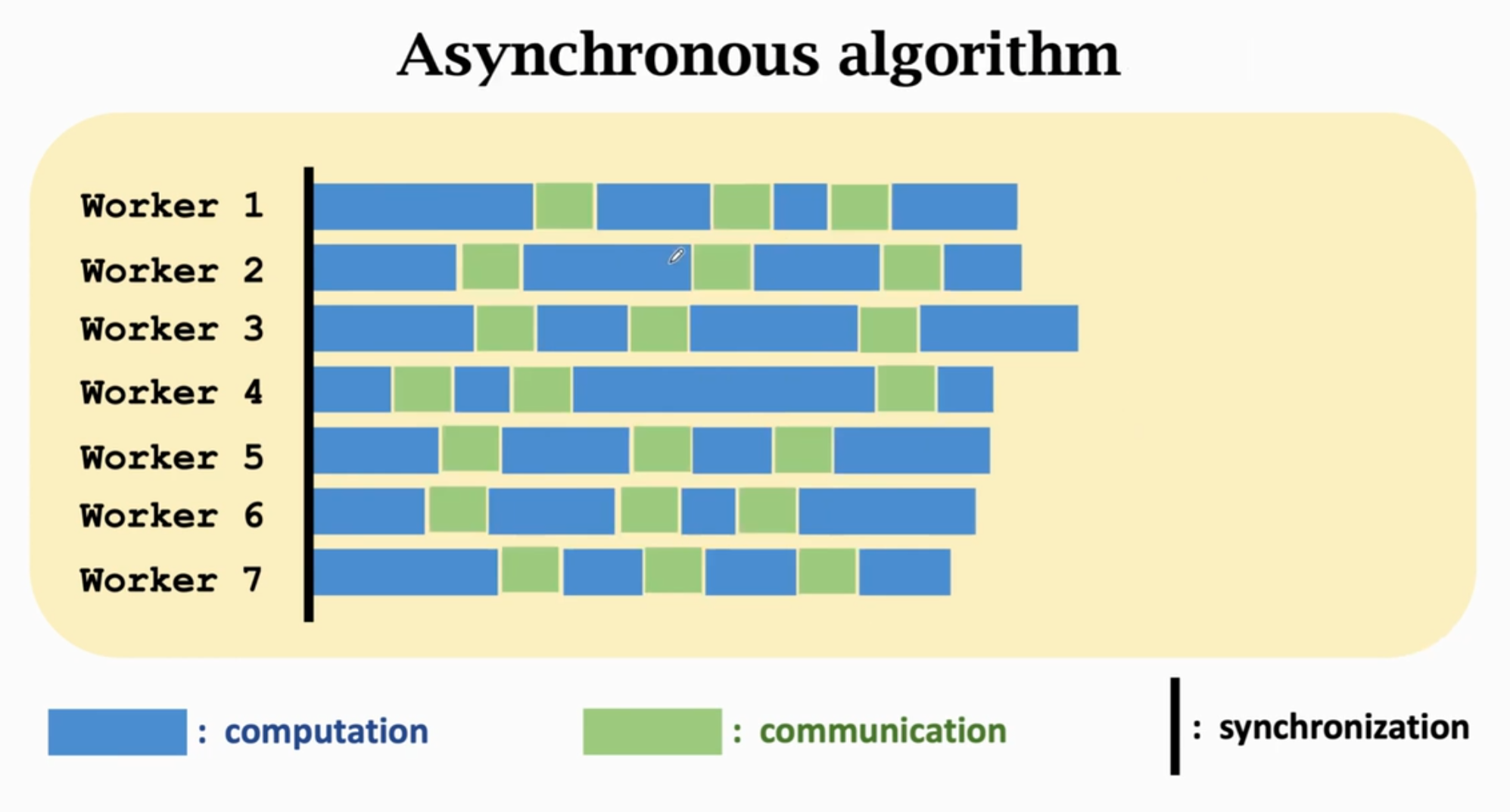

Synchronous algorithm vs Asynchronous algorithm

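Synchronous communication is very inefficient, because in every round all workers must wait for the slowest one.

Asynchronous communication does not wait for other workers, so it is efficient.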
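A small back-of-the-envelope calculation makes the difference concrete. The sketch below is a simplified model under stated assumptions: each worker needs a fixed time per gradient, communication cost is ignored, and the function name `gradients_completed` and the example timings are made up for illustration.

```python
def gradients_completed(step_times, budget, synchronous):
    """Count gradients computed within a time budget.

    step_times: seconds each worker needs for one gradient (assumed constant).
    budget: total wall-clock time available.
    """
    if synchronous:
        # Every round lasts as long as the slowest worker takes.
        rounds = int(budget // max(step_times))
        return rounds * len(step_times)
    # Asynchronous: each worker proceeds at its own pace.
    return sum(int(budget // t) for t in step_times)

step_times = [1.0, 1.0, 4.0]   # two fast workers and one straggler
print(gradients_completed(step_times, 12.0, synchronous=True))    # 9
print(gradients_completed(step_times, 12.0, synchronous=False))   # 27
```

In this toy setting the single straggler cuts the synchronous throughput to a third of the asynchronous one, because the two fast workers sit idle for three quarters of every round.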

Asynchronous Gradient Descent

The i-th worker repeats:

1. Pull the up-to-date model parameters w from the server.

2. Compute gradient using its local data and w.

3. Push the gradient to the server.

The server performs:

1. Receive a gradient from a worker.

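2. Update the parameters by: $w \leftarrow w - \alpha \, g_i$, where $g_i$ is the gradient received from the i-th worker and $\alpha$ is the learning rate.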
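The worker and server loops above can be sketched in a single process with threads. This is an illustrative toy, not Hogwild and not the implementation from any library: the least-squares problem, the synthetic data, the learning rate, and the names `ParameterServer`, `worker`, and `run_async_gd` are all assumptions. A lock protects the server state so that each pull and push is atomic.

```python
import random
import threading

class ParameterServer:
    """Holds w and applies each gradient as soon as it arrives."""
    def __init__(self, dim, lr):
        self.w = [0.0] * dim
        self.lr = lr
        self._lock = threading.Lock()

    def pull(self):
        with self._lock:
            return list(self.w)

    def push(self, grad):
        # Plain gradient step: w <- w - lr * grad
        with self._lock:
            self.w = [wj - self.lr * gj for wj, gj in zip(self.w, grad)]

def gradient(w, data):
    """Gradient of the mean of 0.5 * (x . w - y)^2 over the local data."""
    g = [0.0] * len(w)
    for x, y in data:
        err = sum(xj * wj for xj, wj in zip(x, w)) - y
        for j, xj in enumerate(x):
            g[j] += err * xj / len(data)
    return g

def worker(server, data, steps):
    for _ in range(steps):
        w = server.pull()          # 1. pull the current parameters
        g = gradient(w, data)      # 2. compute the gradient on local data
        server.push(g)             # 3. push the gradient to the server

def run_async_gd(num_workers=4, steps=300, lr=0.1, seed=0):
    rng = random.Random(seed)
    true_w = [2.0, -3.0]           # hypothetical ground-truth model
    shards = []
    for _ in range(num_workers):   # each worker gets its own data shard
        shard = []
        for _ in range(50):
            x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
            y = sum(xj * tj for xj, tj in zip(x, true_w))
            shard.append((x, y))
        shards.append(shard)
    server = ParameterServer(dim=2, lr=lr)
    threads = [threading.Thread(target=worker, args=(server, s, steps))
               for s in shards]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return server.pull(), true_w
```

Between a worker's pull and its push, other workers may already have updated `w`, so the pushed gradient can be slightly out of date. With a small learning rate the estimate should still end up close to `true_w` in this toy problem.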

Reference

1. Niu and others: Hogwild: A lock-free approach to parallelizing stochastic gradient descent. In NIPS, 2011

Pros and Cons of Asynchronous Algorithms

In practice, asynchronous algorithms are faster than synchronous ones.

In theory, asynchronous algorithms have a slower convergence rate.

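Asynchronous algorithms have restrictions, e.g., a worker cannot be much slower than the others. (Why? A slow worker computes its gradient from parameters that are many updates out of date by the time it pushes, and such a stale gradient can move the model in a poor direction.)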
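The effect of a slow worker can be seen in a deterministic toy model. The sketch below is an assumption-laden simplification: it minimizes $f(w) = \frac{1}{2} w^2$, every gradient is evaluated at the parameters from `delay` steps earlier, and the name `delayed_gradient_descent` and the chosen learning rate are hypothetical.

```python
def delayed_gradient_descent(delay, steps, lr=0.5, w0=1.0):
    """Minimize f(w) = 0.5 * w**2 with gradients that are `delay` steps old.

    Returns the full trajectory of w, starting with w0.
    """
    history = [w0]
    for t in range(steps):
        # The gradient is computed at an old copy of w (staleness = delay).
        stale_w = history[max(0, t - delay)]
        grad = stale_w                      # f'(w) = w
        history.append(history[-1] - lr * grad)
    return history

fresh = delayed_gradient_descent(delay=0, steps=50)
stale = delayed_gradient_descent(delay=5, steps=200)
print(abs(fresh[-1]))                       # tiny: w has converged to 0
print(max(abs(w) for w in stale[-20:]))     # large: the iterates oscillate and grow
```

With no delay, `w` halves at every step. With a delay of five steps and the same learning rate, each update reacts to where `w` used to be, overshoots, and the oscillation grows. In this toy model, the larger the staleness, the smaller the learning rate has to be for the iteration to remain stable.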
