(1) torch tensors: x.item()

import torch
x = torch.tensor(1.0)
print(x)         # tensor(1.)
print(x.item())  # 1.0
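As a small illustration of what `x.item()` is for: it converts a tensor holding exactly one element into a plain Python number, which is why it is handy for printing or logging a loss value. The sketch below uses made-up tensors (`scalar` and `vector` are illustrative names, not from the original notes) and also shows that `.item()` is rejected for tensors with more than one element, where `.tolist()` is the usual alternative.

```python
import torch

scalar = torch.tensor(2.5)         # a tensor with exactly one element
vector = torch.tensor([1.0, 2.0])  # a tensor with two elements

value = scalar.item()              # plain Python float
print(type(value), value)

# .item() only works on one-element tensors; on larger ones it raises an error
try:
    vector.item()
    item_failed = False
except (RuntimeError, ValueError):
    item_failed = True

values = vector.tolist()           # multi-element tensors: use .tolist() instead
print(item_failed, values)
```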

(2) How is the loss computed from outputs and targets here, when the two have different shapes?

images, targets = data
outputs = mymodule(images)
result_loss = loss(outputs, targets)

# The dataset has 10 classes
print("Dataset classes: {}".format(test_set.classes))
# Dataset classes: ['airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']
# Four images are fed in at a time; targets holds the class index of each image
print("targets:{}".format(targets))  # batch size = 4
# targets:tensor([4, 9, 4, 5])
# outputs is the prediction: each image gets one score for each of the 10 classes
print("outputs:{}".format(outputs))
# outputs:tensor([[ 0.0357, -0.0586,  0.1161,  0.0275, -0.0507, -0.0844, -0.0898, -0.0606, -0.0637, -0.0835],
#         [ 0.0303, -0.0467,  0.0796,  0.0333, -0.0884, -0.0416, -0.0786, -0.0808, -0.0288, -0.0781],
#         [ 0.0235, -0.0408,  0.0819,  0.0319, -0.0814, -0.0777, -0.0769, -0.0737, -0.0421, -0.0689],
#         [ 0.0382, -0.0477,  0.0989,  0.0187, -0.0787, -0.0645, -0.0660, -0.0720, -0.0403, -0.0815]], grad_fn=<AddmmBackward0>)

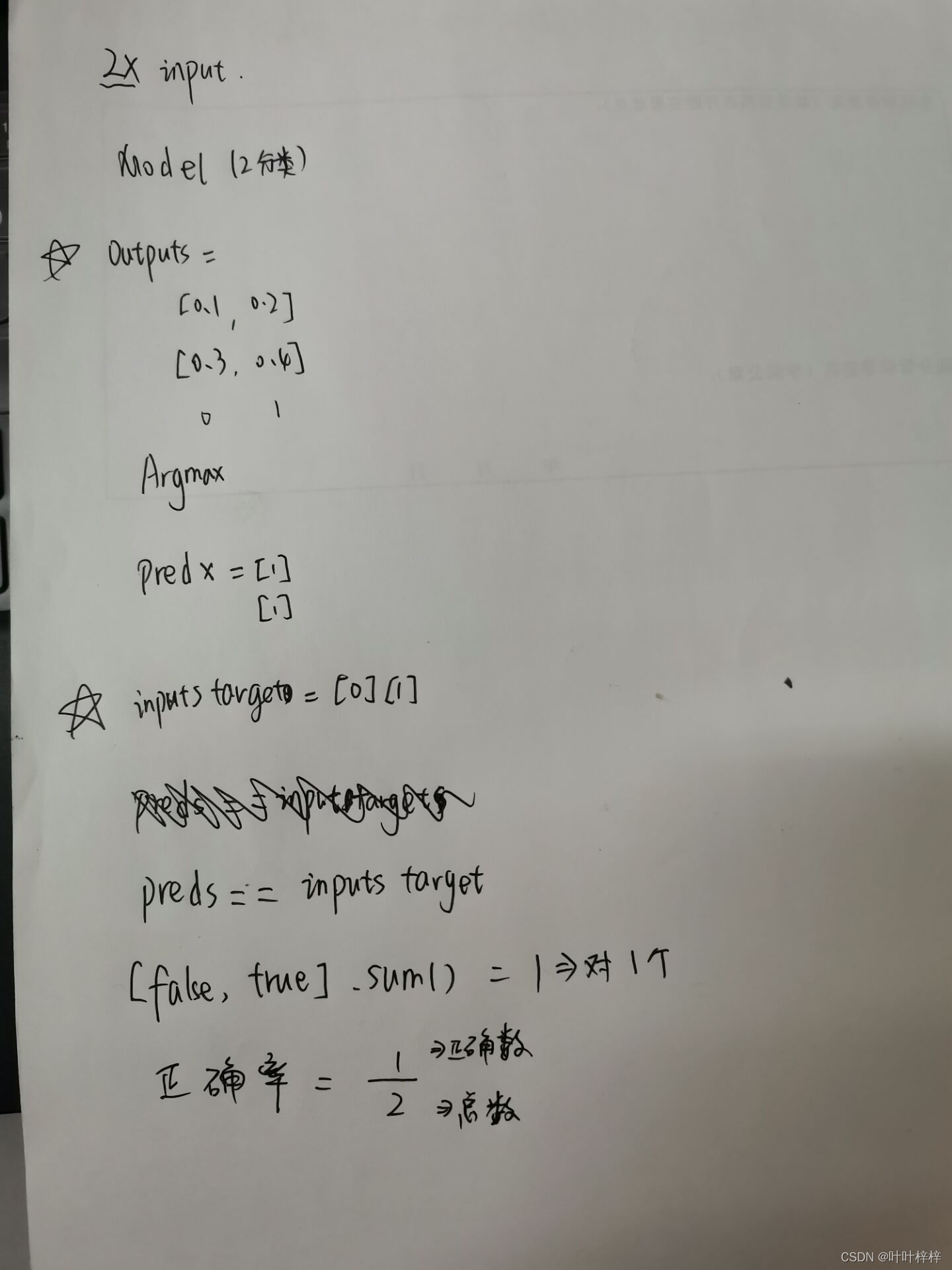
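The shape mismatch is expected: `nn.CrossEntropyLoss` takes raw scores of shape (N, C) and integer class indices of shape (N,). For each sample it applies log-softmax over the C scores, picks the log-probability at the target index, negates it, and by default averages over the batch. The following is a minimal sketch that reproduces this by hand on made-up numbers (the `logits` values and the 3-class setup are assumptions chosen for illustration, not the CIFAR10 outputs).

```python
import torch
import torch.nn.functional as F
from torch import nn

logits = torch.tensor([[0.1, 0.2, 0.7],
                       [1.0, -1.0, 0.5]])  # shape (N=2, C=3): one score per class
targets = torch.tensor([2, 0])             # shape (N=2,): one class index per sample

# Built-in loss: accepts the two different shapes directly
builtin = nn.CrossEntropyLoss()(logits, targets)

# Manual version: log-softmax over classes, then pick the entry at each target index
log_probs = F.log_softmax(logits, dim=1)                        # shape (2, 3)
picked = log_probs.gather(1, targets.unsqueeze(1)).squeeze(1)   # shape (2,)
manual = -picked.mean()                                         # average over the batch

print(builtin.item(), manual.item())
```

The two printed values should agree up to floating-point error, which shows that the targets act as indices into the class dimension rather than as a tensor to be compared element by element.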

To count correct predictions, take a two-class problem as an example:

import torch
outputs = torch.tensor([[0.1, 0.2],
                        [0.3, 0.4]])
print(outputs.argmax(1))  # argmax along dim 1 (within each row): tensor([1, 1])
preds = outputs.argmax(1)
targets = torch.tensor([0, 1])
print(preds == targets)          # tensor([False,  True])
print((preds == targets).sum())  # tensor(1)
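To make the role of the `argmax` dimension concrete, here is a short sketch on made-up scores (three samples, two classes; the values are assumptions for illustration). `dim=1` picks the best class for each row (sample), while `dim=0` picks the best row for each column, which is not what is wanted for classification. The count of correct predictions is then turned into an accuracy fraction.

```python
import torch

outputs = torch.tensor([[0.9, 0.1],
                        [0.2, 0.8],
                        [0.6, 0.4]])   # 3 samples, 2 classes
targets = torch.tensor([0, 1, 1])

preds = outputs.argmax(dim=1)          # best class per sample, shape (3,)
per_column = outputs.argmax(dim=0)     # best sample per class, shape (2,)

correct = (preds == targets).sum().item()  # number of matches as a Python int
accuracy = correct / targets.size(0)       # fraction of samples predicted correctly
print(preds, per_column, correct, accuracy)
```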

Full code

import torch
import torchvision.datasets
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.data import DataLoader
from torchvision import transforms
from torch.utils.tensorboard import SummaryWriter

# Dataset: CIFAR10
dataset_transform = transforms.Compose([transforms.ToTensor()])  # convert dataset images from PIL to tensor
# Use the download=True variants on the first run to fetch the data
# train_set = torchvision.datasets.CIFAR10(root="./dataset2", train=True, transform=dataset_transform, download=True)
train_set = torchvision.datasets.CIFAR10(root="./dataset2", train=True, transform=dataset_transform, download=False)
# test_set = torchvision.datasets.CIFAR10(root="./dataset2", train=False, transform=dataset_transform, download=True)
test_set = torchvision.datasets.CIFAR10(root="./dataset2", train=False, transform=dataset_transform, download=False)
print("......Training set size: {}......".format(len(train_set)))
print("......Test set size: {}......".format(len(test_set)))

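# Load the data: 64 images per batch; shuffle the training set each epoch.
# num_workers is the number of worker subprocesses (0 loads in the main process;
# more workers can speed up batch loading). drop_last=True discards the final
# incomplete batch when the dataset size is not divisible by 64.
train_loader = DataLoader(dataset=train_set, batch_size=64, shuffle=True, num_workers=0, drop_last=True)
# For testing, keep every sample (drop_last=False) so the metrics cover the whole test set
test_loader = DataLoader(dataset=test_set, batch_size=64, shuffle=False, num_workers=0, drop_last=False)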
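The effect of `drop_last` is easy to check on a tiny stand-in dataset. The sketch below does not use CIFAR10; it assumes 10 random tensors of CIFAR10 image shape wrapped in a `TensorDataset`, purely for illustration.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Stand-in data (assumption): 10 random "images" of shape 3x32x32 with labels 0..9
images = torch.randn(10, 3, 32, 32)
labels = torch.arange(10)
dataset = TensorDataset(images, labels)

keep_all = DataLoader(dataset, batch_size=4, shuffle=False, drop_last=False)
drop_tail = DataLoader(dataset, batch_size=4, shuffle=False, drop_last=True)

# Collect the size of every batch each loader yields
sizes_keep = [batch_labels.size(0) for _, batch_labels in keep_all]
sizes_drop = [batch_labels.size(0) for _, batch_labels in drop_tail]
print(sizes_keep, sizes_drop)
```

With the 10,000-image CIFAR10 test set and batch_size=64, drop_last=True would yield 156 full batches (9,984 images) and leave out the remaining 16, which matters when a metric is divided by `len(test_set)`.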

# Build the network
class MyModule(nn.Module):
    def __init__(self):
        super().__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),  # 1024 = 64 channels * 4 * 4 (32x32 input halved three times)
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x

mymodule = MyModule()
print(mymodule)  # print the network structure
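A quick way to sanity-check the layer sizes, in particular the 1024 input features of the first `Linear`, is to push a dummy batch through the network and look at the shapes. The sketch below rebuilds the same layer stack as `model1` in a standalone `nn.Sequential` (named `layers` here, an illustrative name) so that it runs without the dataset.

```python
import torch
from torch import nn

# Same layer stack as model1, rebuilt here so the snippet runs on its own
layers = nn.Sequential(
    nn.Conv2d(3, 32, 5, padding=2), nn.MaxPool2d(2),
    nn.Conv2d(32, 32, 5, padding=2), nn.MaxPool2d(2),
    nn.Conv2d(32, 64, 5, padding=2), nn.MaxPool2d(2),
    nn.Flatten(), nn.Linear(1024, 64), nn.Linear(64, 10),
)

dummy = torch.ones((64, 3, 32, 32))  # one batch of CIFAR10-sized inputs
features = layers[:7](dummy)         # everything up to and including Flatten
logits = layers(dummy)               # full forward pass
print(features.shape, logits.shape)
```

Each 5x5 convolution with padding=2 keeps the spatial size, and each `MaxPool2d(2)` halves it (32 → 16 → 8 → 4), so flattening 64 channels of 4x4 gives 1024 features per image.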

# Loss function
loss = nn.CrossEntropyLoss()
# Optimizer
learning_rate = 1e-2  # 1e-2 = 1 * 10^(-2) = 1/100 = 0.01
optim = torch.optim.SGD(mymodule.parameters(), lr=learning_rate)
# Number of training steps (batches) so far
total_train_step = 0
# Number of test passes so far
total_test_step = 0
# Number of epochs
epoch = 10
# Add TensorBoard logging
writer = SummaryWriter("./log")

for i in range(epoch):
    print("------Epoch {} training starts------".format(i + 1))
    # Training phase
    for data in train_loader:
        images, targets = data
        outputs = mymodule(images)
        result_loss = loss(outputs, targets)
        # Update the model with the optimizer
        optim.zero_grad()       # reset the gradients to zero
        result_loss.backward()  # backpropagate to get the gradients (inspect .grad in a debugger)
        optim.step()            # update the parameters
        total_train_step = total_train_step + 1
        if total_train_step % 100 == 0:
            print("Training step: {}, train_loss: {}".format(total_train_step, result_loss.item()))
            writer.add_scalar("train_loss", result_loss.item(), total_train_step)
    # Test phase
    total_test_loss = 0.0
    total_correct = 0
    total_samples = 0
    with torch.no_grad():
        for data in test_loader:
            images, targets = data
            outputs = mymodule(images)
            result_loss = loss(outputs, targets)
            total_test_loss = total_test_loss + result_loss.item()
            total_correct = total_correct + (outputs.argmax(1) == targets).sum().item()
            total_samples = total_samples + targets.size(0)
    # Divide by the number of samples actually evaluated
    test_accuracy = total_correct / total_samples
    print("Loss over the test set: {}".format(total_test_loss))
    print("Accuracy over the test set: {}".format(test_accuracy))
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    writer.add_scalar("test_accuracy", test_accuracy, total_test_step)
    total_test_step = total_test_step + 1
    # Save the model after each epoch (the ./models directory must already exist)
    torch.save(mymodule, "./models/mymodule_{}.pth".format(i))
    print("Model saved")
writer.close()
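Note that `torch.save(mymodule, ...)` pickles the whole module object, so loading the file later requires the `MyModule` class definition to be available. A commonly used alternative is to save only the parameters via `state_dict()`. The following is a minimal sketch of that approach, not the method used in the code above; `TinyNet` and the temporary file path are hypothetical stand-ins so that the snippet runs quickly on its own.

```python
import os
import tempfile

import torch
from torch import nn


class TinyNet(nn.Module):
    """Hypothetical small model standing in for MyModule."""

    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(4, 2)

    def forward(self, x):
        return self.fc(x)


model = TinyNet()
path = os.path.join(tempfile.mkdtemp(), "tinynet_state.pth")  # illustrative path
torch.save(model.state_dict(), path)         # save the parameters only

restored = TinyNet()                         # rebuild the architecture first
restored.load_state_dict(torch.load(path))   # then load the saved parameters
restored.eval()

x = torch.randn(3, 4)
with torch.no_grad():
    same = torch.allclose(model(x), restored(x))  # both models give the same output
print(same)
```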
