Redis-cluster-6.2.7: a single 6-master / 6-replica cluster spanning data center A and data center B

CentOS 7: Redis Cluster deployment

Redis

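How it works

#1- A Redis Cluster can be thought of as n master-replica groups combined to serve clients as one.

#2- Redis Cluster needs at least 3 masters to form a cluster, and for high availability each master should have at least one replica (slave).

#3- If one master-replica group can store 32 GB of data, a cluster made of two such groups can store 64 GB.

#4- In a standalone master-replica setup, read concurrency can be scaled by adding replicas.

#5- Although every master in this cluster has a replica attached, by default both reads and writes in Redis Cluster are served by the masters.

#6- The replica only acts as a data backup: when its master goes down, the replica is promoted to master and takes over serving requests.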

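Requirements

Build one large Redis cluster across data centers A and B so that, when data center A fails, the Redis nodes in data center B can still write and read keys.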

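1- Server information

Virtual machines

Server           Redis ports

192.168.101.191  master:6379   master:6380   (data center A)

192.168.101.192  master:6379   master:6380   (data center A)

192.168.101.193  master:6379   master:6380   (data center A)

192.168.101.194  replica:6379  replica:6380  (data center B)

192.168.101.195  replica:6379  replica:6380  (data center B)

192.168.101.196  replica:6379  replica:6380  (data center B)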

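2- Environment preparation

Set the hostname permanently

All nodes (each node gets its own name from the hosts mapping below; hhht-1 is shown here)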

[root@localhost ~]# hostnamectl set-hostname hhht-1

[root@localhost ~]# bash

Edit the hosts mapping file

All nodes

[root@sredis ~]# vim /etc/hosts

192.168.101.191 hhht-1

192.168.101.192 hhht-2

192.168.101.193 hhht-3

192.168.101.194 hhht-4

192.168.101.195 hhht-5

192.168.101.196 hhht-6
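The same six entries have to be added on every node. A minimal sketch that does this idempotently, so running it twice does not create duplicates; `add_hosts` is a hypothetical helper, and it only recognizes entries written exactly as "IP hostname" with a single space.

```bash
# Hypothetical helper: append "IP hostname" pairs read from stdin to a hosts
# file, skipping pairs that are already present as an identical line.
add_hosts() {
  local file=$1 ip name
  while read -r ip name; do
    [ -z "$ip" ] && continue            # ignore blank input lines
    # -q quiet, -x whole-line match, -F fixed string (dots are literal)
    if ! grep -qxF "$ip $name" "$file"; then
      echo "$ip $name" >> "$file"
    fi
  done
}

# Usage on every node:
# add_hosts /etc/hosts <<'EOF'
# 192.168.101.191 hhht-1
# 192.168.101.192 hhht-2
# 192.168.101.193 hhht-3
# 192.168.101.194 hhht-4
# 192.168.101.195 hhht-5
# 192.168.101.196 hhht-6
# EOF
```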

Create the installation directory

All nodes

#Application deployment directory: /opt/apps/<application name>/

[root@hhht-1 ~]# mkdir /opt/apps

Disable SELinux temporarily

All nodes

[root@hhht-1 ~]# setenforce 0

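Disable SELinux permanently

All nodes

Method 1:

[root@hhht-1 ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

[root@hhht-1 ~]# setenforce 0

Method 2:

[root@hhht-1 ~]# vim /etc/sysconfig/selinux

#Find

SELINUX=enforcing

#Change it to

SELINUX=disabled

Because SELinux has already been switched off for the running system (setenforce 0 puts it into permissive mode), no reboot is needed now; the disabled setting takes effect at the next boot.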
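A sketch of method 1 that works whatever the current value is (enforcing or permissive). On CentOS 7, /etc/sysconfig/selinux is normally a symlink to /etc/selinux/config; a plain `sed -i` on the symlink replaces it with a regular file and leaves the real config unchanged, so the sketch uses GNU sed's `--follow-symlinks`. `disable_selinux_conf` is a hypothetical name.

```bash
# Hypothetical helper: force SELINUX=disabled in the given file, whatever the
# current value. --follow-symlinks (GNU sed) edits the link target instead of
# replacing a symlink such as /etc/sysconfig/selinux with a regular file.
disable_selinux_conf() {
  local file=${1:-/etc/selinux/config}
  # ^SELINUX= does not match SELINUXTYPE=, which is left untouched
  sed -i --follow-symlinks -E 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}
```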

Disable the firewall

All nodes

#Check the status (method 1)

[root@hhht-1 ~]# firewall-cmd --state

running

#Check the status (method 2)

[root@hhht-1 ~]# systemctl status firewalld.service

Active: active (running)

#Stop the firewall

[root@redis ~]# systemctl stop firewalld.service

#Disable start at boot

[root@redis ~]# systemctl disable firewalld.service

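3- Install dependencies

3.1 Install gcc

All nodes

[root@hhht-1 ~]# yum -y install gcc gcc-c++

3.2 Upgrade gcc

All nodes

CentOS 7 ships gcc 4.8.5, which is too old to build Redis 6.0+ (gcc 5.3 or later is the commonly cited minimum); here gcc is upgraded to version 9.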

Check the gcc version

[root@hhht-1 ~]# gcc -v

gcc version 4.8.5 20150623 (Red Hat 4.8.5-44) (GCC)

Installation with Internet access

#Install the SCL repository

[root@hhht-1 ~]# yum -y install centos-release-scl

#Install gcc 9

[root@hhht-1 ~]# yum -y install devtoolset-9-gcc devtoolset-9-gcc-c++ devtoolset-9-binutils

#Switch versions for the current shell only

[root@hhht-1 ~]# scl enable devtoolset-9 bash

#Switch versions permanently

[root@hhht-1 ~]# echo source /opt/rh/devtoolset-9/enable >> /etc/profile

#Reload the environment variables

[root@hhht-1 ~]# source /etc/profile

#Verify that gcc is now version 9

[root@hhht-1 ~]# gcc -v

gcc version 9.3.1 20200408 (Red Hat 9.3.1-2) (GCC)
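To check the compiler version from a script before building Redis, a minimal sketch, assuming GNU coreutils `sort -V`; `version_ge` is a hypothetical helper and 5.3 is the threshold quoted above.

```bash
# Hypothetical helper: succeed if version $1 >= version $2.
# sort -V orders dotted version strings numerically (4.8.5 < 5.3 < 9.3.1).
version_ge() {
  [ "$(printf '%s\n' "$2" "$1" | sort -V | head -n1)" = "$2" ]
}

# Usage: gcc -dumpversion prints "4.8.5" on stock CentOS 7; newer gcc
# releases may print only the major number (e.g. "9"), which also compares fine.
# if version_ge "$(gcc -dumpversion)" 5.3; then echo "gcc ok"; else echo "upgrade gcc"; fi
```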

Offline installation

#Prepare the dependency packages and the installation package

[root@hhht-1 bao]# ll

-rw-r--r-- 1 root root 124304709 Nov 24 11:52 gcc-9.2.0.tar.gz

-rw-r--r-- 1 root root 2383840 Nov 24 11:51 gmp-6.1.0.tar.bz2

-rw-r--r-- 1 root root 1658291 Nov 24 11:50 isl-0.18.tar.bz2

-rw-r--r-- 1 root root 669925 Nov 24 11:51 mpc-1.0.3.tar.gz

-rw-r--r-- 1 root root 1279284 Nov 24 11:51 mpfr-3.1.4.tar.bz2

#Extract gcc-9.2.0.tar.gz

[root@hhht-1 bao]# tar xf gcc-9.2.0.tar.gz

#Copy the 4 dependency packages into the extracted gcc-9.2.0 directory as they are; do not extract them

[root@hhht-1 bao]# cp gmp-6.1.0.tar.bz2 isl-0.18.tar.bz2 mpc-1.0.3.tar.gz mpfr-3.1.4.tar.bz2 gcc-9.2.0/

#Run ./contrib/download_prerequisites (with the archives already in place it unpacks them instead of downloading them)

[root@hhht-1 bao]# cd gcc-9.2.0/

[root@redis-2 gcc-9.2.0]# ./contrib/download_prerequisites

#If this error appears

tar (child): lbzip2: Cannot exec: No such file or directory tar (child): Error is not recoverable

#Fix: install bzip2

[root@redis-2 gcc-9.2.0]# yum -y install bzip2

#Create the target installation directory

[root@hhht-1 gcc-9.2.0]# mkdir /opt/apps/gcc-9.2

#Run configure

[root@hhht-1 gcc-9.2.0]# ./configure --prefix=/opt/apps/gcc-9.2 --disable-checking --enable-languages=c,c++ --disable-multilib

#Compile (this step takes a long time)

[root@hhht-1 gcc-9.2.0]# make

#Install

[root@hhht-1 gcc-9.2.0]# make install

#Set the environment variable to enable the new version

[root@hhht-1 gcc-9.2.0]# vim /etc/profile

Append at the end: export PATH="/opt/apps/gcc-9.2/bin:$PATH"

#Reload the environment variables

[root@hhht-1 gcc-9.2.0]# source /etc/profile

#Check the gcc version

[root@hhht-1 gcc-9.2.0]# gcc -v

gcc version 9.2.0 (GCC)

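#Check the cc version

[root@hhht-1 gcc-9.2.0]# cc -v

gcc version 4.8.5 20150623 (Red Hat 4.8.5-44) (GCC)

If an older gcc was installed before, gcc and cc very likely point to different versions.

gcc and cc must be the same version, otherwise compilation fails with all kinds of errors.

#Change the cc version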

[root@hhht-1 gcc-9.2.0]# which cc

/usr/bin/cc

#Remove the old cc

[root@hhht-1 gcc-9.2.0]# rm /usr/bin/cc

#Create a cc symlink to the new gcc

[root@hhht-1 gcc-9.2.0]# cd /opt/apps/gcc-9.2/bin/

[root@hhht-1 bin]# ln -s gcc cc

#Reload the environment variables

[root@hhht-1 bin]# source /etc/profile

#Check the cc version

[root@hhht-1 bin]# cc -v

gcc version 9.2.0 (GCC)

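4- Modify system settings

Raise the maximum number of open files temporarily (current shell only)

All nodes

[root@hhht-1 bin]# ulimit -n 65535

Raise the maximum number of open files permanently

All nodes

[root@hhht-1 bin]# vim /etc/security/limits.conf

#Append at the end (the item name is nproc; "noproc" is not a valid limits.conf item):

* soft nproc 65536

* hard nproc 65536

* soft nofile 65536

* hard nofile 65536

#limits.conf applies to new login sessions; since the limit was already raised temporarily, no reboot is needed now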
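An idempotent version of the edit above, using the `nproc` item name; `add_limits` is a hypothetical helper. Keep in mind that limits.conf is applied by PAM to login sessions: services started by systemd do not read it and take their limit from `LimitNOFILE=` in the unit file instead.

```bash
# Hypothetical helper: append the four limits for all users ("*") unless an
# identical line already exists in the file.
add_limits() {
  local file=${1:-/etc/security/limits.conf}
  local line
  for line in '* soft nproc 65536' '* hard nproc 65536' \
              '* soft nofile 65536' '* hard nofile 65536'; do
    # -F: treat "*" literally, -x: match the whole line
    grep -qxF "$line" "$file" || echo "$line" >> "$file"
  done
}
```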

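Increase the length of the TCP listen queue

All nodes

#Check the default value

[root@hhht-1 bin]# cat /proc/sys/net/core/somaxconn

128

#Change it

[root@hhht-1 bin]# vim /etc/sysctl.conf

Append at the end (sysctl.conf only treats lines that start with # as comments, so keep the comment on its own line):

# Keep this value below half of ulimit -n; it should also be at least tcp-backlog in redis.conf (511 by default)

net.core.somaxconn = 20480

#Apply the change

[root@hhht-1 bin]# sysctl -p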
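Redis logs a warning at startup when its `tcp-backlog` (511 by default) is larger than `net.core.somaxconn`, which is why the default of 128 is raised. A sketch of that comparison with the file paths as parameters, so it can be tried on copies; `check_backlog` is a hypothetical helper.

```bash
# Hypothetical check: tcp-backlog in redis.conf must not exceed somaxconn.
check_backlog() {
  local conf=$1 somaxconn_file=${2:-/proc/sys/net/core/somaxconn}
  local backlog somaxconn
  # Use the last active tcp-backlog line; Redis defaults to 511 without one
  backlog=$(awk '$1 == "tcp-backlog" { v = $2 } END { print (v == "" ? 511 : v) }' "$conf")
  somaxconn=$(cat "$somaxconn_file")
  if [ "$backlog" -gt "$somaxconn" ]; then
    echo "WARN: tcp-backlog $backlog > somaxconn $somaxconn"
    return 1
  fi
  echo "OK: tcp-backlog $backlog <= somaxconn $somaxconn"
}

# Usage: check_backlog /opt/apps/redis-6379/conf/redis.conf
```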

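5- Build and install Redis

Install and configure the service on hhht-1 first, then scp it to the other nodes and adjust the config files there.

Prepare the installation package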

[root@hhht-1 bin]# cd /root/bao/

[root@hhht-1 bao]# ll

-rw-r--r-- 1 root root 2487287 Nov 24 17:43 redis-6.2.7.tar.gz

Extract Redis

[root@hhht-1 bao]# tar xf redis-6.2.7.tar.gz

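Create the installation directories

[root@hhht-1 bao]# mkdir -p /opt/apps/redis-6379/{data,conf,logs}

#data: data directory

#conf: config file directory

#logs: log directory

Build Redis; if no error messages are printed, the build succeeded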

[root@hhht-1 bao]# cd redis-6.2.7

[root@hhht-1 redis-6.2.7]# make

Install Redis into the specified directory

[root@hhht-1 redis-6.2.7]# make install PREFIX=/opt/apps/redis-6379/

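6- Configure and start Redis

6.1- Instance on port 6379

Install and configure the service on hhht-1 first, then scp it to the other nodes and adjust the config files there.

Copy the config file

[root@hhht-1 redis-6.2.7]# cp redis.conf /opt/apps/redis-6379/conf/redis.conf

Edit the config file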

[root@redis-3 redis-6.2.7]# cd /opt/apps/redis-6379/conf/

[root@hhht-1 conf]# vim redis.conf

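#Change to (line numbers as in the author's copy of the 6.2.7 redis.conf)

line 75 bind 0.0.0.0

line 98 port 6379

line 259 daemonize yes # run in the background

line 304 logfile "/opt/apps/redis-6379/logs/redis.log"

line 456 dir /opt/apps/redis-6379/data

line 629 repl-disable-tcp-nodelay yes # fewer, larger packets to replicas: less bandwidth, but more replication delay

line 486 masterauth zb@123 # Redis password, set as needed

line 903 requirepass zb@123 # Redis password, set as needed

line 967 maxclients 10000 # maximum number of client connections

line 994 #maxmemory 524288000 # 500 MB memory limit, left commented out here (caps memory so Redis cannot exhaust RAM and bring the server down)

line 1023 maxmemory-policy allkeys-lru # eviction policy once maxmemory is reached: evict the least recently used keys among all keys (no effect while maxmemory is unset)

line 1254 appendonly yes # enable AOF persistence

line 1306 no-appendfsync-on-rewrite yes # AOF: skip fsync while a BGSAVE/BGREWRITEAOF is running (lower latency, but a crash in that window can lose more writes)

line 1387 cluster-enabled yes # enable cluster mode

line 1401 cluster-node-timeout 15000 # timeout in milliseconds before a cluster node is considered down

line 1466 cluster-migration-barrier 1 # a replica may migrate to an orphaned master only if its own master keeps at least 1 other replica, so no master is left without a replica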
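The line numbers above shift between Redis versions, so editing directives by name is more robust. A minimal sketch, assuming GNU sed: it rewrites active lines for a directive and otherwise appends the setting, relying on Redis applying the last occurrence of a single-value directive. `set_conf` is a hypothetical helper; values must not contain `|` or `&`, which are special in the sed replacement.

```bash
# Hypothetical helper: set "directive value" in a redis.conf-style file.
set_conf() {
  local file=$1 key=$2 value=$3
  if grep -qE "^${key}( |\$)" "$file"; then
    # Rewrite every active (uncommented) line for this directive
    sed -i -E "s|^${key}( .*)?\$|${key} ${value}|" "$file"
  else
    # Only commented out or absent: append; a later line overrides earlier ones
    echo "${key} ${value}" >> "$file"
  fi
}

# Usage for the 6379 instance:
# conf=/opt/apps/redis-6379/conf/redis.conf
# set_conf "$conf" bind 0.0.0.0
# set_conf "$conf" daemonize yes
# set_conf "$conf" logfile '"/opt/apps/redis-6379/logs/redis.log"'
# set_conf "$conf" dir /opt/apps/redis-6379/data
# set_conf "$conf" masterauth 'zb@123'
# set_conf "$conf" requirepass 'zb@123'
# set_conf "$conf" appendonly yes
# set_conf "$conf" cluster-enabled yes
# set_conf "$conf" cluster-node-timeout 15000
```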

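Configuration parameters explained

#bind 0.0.0.0

Listen on all network interfaces, so clients from any IP can connect (not secure). To listen on several specific addresses only, list them, e.g. bind 192.168.1.100 10.0.0.1

#daemonize yes

Run as a daemon (Windows does not support daemon mode; keep it set to no there)

#requirepass

Sets the Redis connection password. When it is set, clients must provide it with the AUTH command after connecting. Disabled by default.

#maxclients

Sets the maximum number of simultaneous client connections; the default is 10000. The real ceiling is the number of file descriptors the Redis process may open: if that limit is too low, Redis lowers the effective value to the file limit minus 32 (reserved for internal use).

When the limit is reached, Redis closes new connections

and returns the error "max number of clients reached" to the client.

#masterauth

1- When Redis password authentication is enabled, set both requirepass and masterauth.

2- If the master has requirepass set, a replica must authenticate for every replication step, including after a failover: sending PING to check that the master is alive, connecting to the master's port, and synchronizing data all use the master's password. Without it the replica cannot log in

and its log shows the corresponding errors. In other words, a replica's masterauth must match its master's requirepass.

It is therefore recommended to give every node the same value for masterauth and requirepass, which keeps operations simple.

Different passwords also work, as long as each replica's masterauth matches its master's requirepass.

#requirepass

masterauth: configured on replicas; used when a replica authenticates to its master during data synchronization.

requirepass: restricts who can log in; each Redis node's requirepass can be set independently.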

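6.2- Instance on port 6380

Install and configure the service on hhht-1 first, then scp it to the other nodes and adjust the config files there.

Copy the Redis installation for port 6380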

[root@hhht-1 conf]# cd ../../

[root@hhht-1 apps]# ll

drwxr-xr-x 8 root root 84 Nov 24 15:00 gcc-9.2

drwxr-xr-x 8 10 143 255 Jun 22 2016 jdk1.8.0_101

drwxr-xr-x 7 root root 64 Nov 24 18:16 redis-6379

#Copy the directory for port 6380

[root@hhht-1 apps]# cp -r redis-6379/ redis-6380

[root@hhht-1 apps]# ll

drwxr-xr-x 8 root root 84 Nov 24 15:00 gcc-9.2

drwxr-xr-x 8 10 143 255 Jun 22 2016 jdk1.8.0_101

drwxr-xr-x 7 root root 64 Nov 24 18:16 redis-6379

drwxr-xr-x 7 root root 64 Nov 24 18:47 redis-6380

Edit the config file for port 6380

[root@hhht-1 apps]# vim redis-6380/conf/redis.conf

#Change:

line 98 port 6380

line 291 pidfile /var/run/redis_6380.pid

line 304 logfile "/opt/apps/redis-6380/logs/redis.log"

line 456 dir /opt/apps/redis-6380/data
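The four per-port changes above can be applied with sed instead of by hand. A sketch, assuming GNU sed and the directive names of the stock redis.conf; `retarget_conf` is a hypothetical helper. Copy the directory before the 6379 instance is started for the first time: once it has run, data/ holds nodes.conf with that instance's cluster node ID, and a copied nodes.conf would give two instances the same ID.

```bash
# Hypothetical helper: rewrite a copied 6379 config for another port.
retarget_conf() {
  local file=$1 port=$2
  sed -i -E \
    -e "s|^port .*|port ${port}|" \
    -e "s|^pidfile .*|pidfile /var/run/redis_${port}.pid|" \
    -e "s|^logfile .*|logfile \"/opt/apps/redis-${port}/logs/redis.log\"|" \
    -e "s|^dir .*|dir /opt/apps/redis-${port}/data|" \
    "$file"
}

# Usage: retarget_conf /opt/apps/redis-6380/conf/redis.conf 6380
```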

6.3- Configure Redis as a system service

Configure it on hhht-1 first, then scp the files to the other nodes.

systemd unit for port 6379 (systemctl start, stop, restart)

[root@hhht-1 apps]# vim /etc/systemd/system/redis-6379.service

[Unit]

Description=Redis

After=syslog.target network.target remote-fs.target nss-lookup.target

[Service]

Type=forking

PIDFile=/var/run/redis_6379.pid

ExecStart=/opt/apps/redis-6379/bin/redis-server /opt/apps/redis-6379/conf/redis.conf

ExecStop=/opt/apps/redis-6379/bin/redis-cli -p 6379 shutdown save

PrivateTmp=true

[Install]

WantedBy=multi-user.target

systemd unit for port 6380 (systemctl start, stop, restart)

[root@hhht-1 apps]# vim /etc/systemd/system/redis-6380.service

[Unit]

Description=Redis

After=syslog.target network.target remote-fs.target nss-lookup.target

[Service]

Type=forking

PIDFile=/var/run/redis_6380.pid

ExecStart=/opt/apps/redis-6380/bin/redis-server /opt/apps/redis-6380/conf/redis.conf

ExecStop=/opt/apps/redis-6380/bin/redis-cli -p 6380 shutdown save

PrivateTmp=true

[Install]

WantedBy=multi-user.target
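The two unit files differ only in the port, so they can be generated. A sketch under two assumptions that are not in the original units: because requirepass is set, `redis-cli ... shutdown` in ExecStop needs `-a <password>` (without it the shutdown command is rejected and the process is only stopped by the SIGTERM systemd sends afterwards), and `LimitNOFILE=` is raised because services do not read limits.conf. `redis_unit` is a hypothetical helper.

```bash
# Hypothetical generator for /etc/systemd/system/redis-<port>.service.
# $1 = port, $2 = optional password passed to redis-cli in ExecStop.
redis_unit() {
  local port=$1 pass=${2:-}
  local base=/opt/apps/redis-${port}
  local auth=""
  [ -n "$pass" ] && auth=" -a ${pass}"
  cat <<EOF
[Unit]
Description=Redis ${port}
After=syslog.target network.target remote-fs.target nss-lookup.target

[Service]
Type=forking
PIDFile=/var/run/redis_${port}.pid
ExecStart=${base}/bin/redis-server ${base}/conf/redis.conf
ExecStop=${base}/bin/redis-cli -p ${port}${auth} shutdown save
LimitNOFILE=65536
PrivateTmp=true

[Install]
WantedBy=multi-user.target
EOF
}

# Usage: redis_unit 6380 'zb@123' > /etc/systemd/system/redis-6380.service
```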

7- Configure the other nodes

Copy the 6379 and 6380 directories to the other nodes

[root@hhht-1 apps]# scp -r redis-6379/ redis-6380/ 192.168.101.192:/opt/apps/

[root@hhht-1 apps]# scp -r redis-6379/ redis-6380/ 192.168.101.193:/opt/apps/

[root@hhht-1 apps]# scp -r redis-6379/ redis-6380/ 192.168.101.194:/opt/apps/

[root@hhht-1 apps]# scp -r redis-6379/ redis-6380/ 192.168.101.195:/opt/apps/

[root@hhht-1 apps]# scp -r redis-6379/ redis-6380/ 192.168.101.196:/opt/apps/

Copy the systemd unit files for 6379 and 6380

[root@hhht-1 apps]# scp /etc/systemd/system/redis-63* 192.168.101.192:/etc/systemd/system/

[root@hhht-1 apps]# scp /etc/systemd/system/redis-63* 192.168.101.193:/etc/systemd/system/

[root@hhht-1 apps]# scp /etc/systemd/system/redis-63* 192.168.101.194:/etc/systemd/system/

[root@hhht-1 apps]# scp /etc/systemd/system/redis-63* 192.168.101.195:/etc/systemd/system/

[root@hhht-1 apps]# scp /etc/systemd/system/redis-63* 192.168.101.196:/etc/systemd/system/
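The ten scp commands above can be written as one loop. A sketch; `NODES`, `distribute` and the `DRY_RUN` switch are hypothetical names, and passwordless SSH from hhht-1 to the other nodes is assumed (otherwise scp prompts for a password on every copy).

```bash
# Hypothetical loop over the other five nodes. DRY_RUN=1 only prints the
# commands so they can be reviewed before copying anything.
NODES="192.168.101.192 192.168.101.193 192.168.101.194 192.168.101.195 192.168.101.196"

distribute() {
  local run="" ip
  [ "${DRY_RUN:-0}" = 1 ] && run=echo
  for ip in $NODES; do
    $run scp -r /opt/apps/redis-6379/ /opt/apps/redis-6380/ "${ip}:/opt/apps/"
    $run scp /etc/systemd/system/redis-6379.service /etc/systemd/system/redis-6380.service "${ip}:/etc/systemd/system/"
  done
}

# Usage: DRY_RUN=1 distribute   # review first
#        distribute             # then copy
```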

8- Start the Redis services

Reload the systemd configuration

All nodes

[root@dx-001 redis-6380]# systemctl daemon-reload

Start Redis and check its status

All nodes

#Start

[root@hhht-1 apps]# systemctl start redis-6379

[root@hhht-1 apps]# systemctl start redis-6380

#Check the status

[root@hhht-1 apps]# systemctl status redis-6379

● redis-6379.service - redis-server

Loaded: loaded (/etc/systemd/system/redis-6379.service; disabled; vendor preset: disabled)

Active: active (running) since Thu 2022-11-24 20:01:02 CST; 5s ago

Process: 2212 ExecStart=/opt/apps/redis-6379/bin/redis-server /opt/apps/redis-6379/conf/redis.conf (code=exited, status=0/SUCCESS)

Main PID: 2213 (redis-server)

CGroup: /system.slice/redis-6379.service

└─2213 /opt/apps/redis-6379/bin/redis-server 0.0.0.0:6379 [cluster]

Nov 24 20:01:02 hhht-1 systemd[1]: Starting redis-server...

Nov 24 20:01:02 hhht-1 systemd[1]: Started redis-server.

[root@hhht-1 apps]# systemctl status redis-6380

● redis-6380.service - Redis

Loaded: loaded (/etc/systemd/system/redis-6380.service; disabled; vendor preset: disabled)

Active: active (running) since Thu 2022-11-24 19:57:39 CST; 3min 31s ago

Process: 2184 ExecStart=/opt/apps/redis-6380/bin/redis-server /opt/apps/redis-6380/conf/redis.conf (code=exited, status=0/SUCCESS)

Main PID: 2185 (redis-server)

CGroup: /system.slice/redis-6380.service

└─2185 /opt/apps/redis-6380/bin/redis-server 0.0.0.0:6380 [cluster]

Nov 24 19:57:39 hhht-1 systemd[1]: Starting Redis...

Nov 24 19:57:39 hhht-1 systemd[1]: PID file /var/run/redis_6380.pid not readable (yet?) after start.

Nov 24 19:57:39 hhht-1 systemd[1]: Started Redis.

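Enable start at boot

All nodes

[root@hhht-1 apps]# systemctl enable redis-6379

[root@hhht-1 apps]# systemctl enable redis-6380

Make the redis commands available system-wide (copy the binaries to /usr/bin)

All nodes

[root@redis-3 apps]# cp /opt/apps/redis-6379/bin/redis-cli /usr/bin/redis-cli

[root@redis-3 apps]# cp /opt/apps/redis-6379/bin/redis-server /usr/bin/redis-server

#Test: type redis- and press Tab; both commands should be offered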

[root@redis-3 apps]# redis-

redis-cli redis-server

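9- Create the cluster

Once the Redis instances on every server have started successfully, run the cluster commands on the first server.

(If a password is set in redis.conf, add -a 'password' to the redis-cli commands; without a password, leave out -a.)

Because every node in data center A must be a master and every node in data center B a replica, the master-replica relationships are assigned manually to guarantee high availability (the automatic pairing of --cluster-replicas 1 does not know about data centers).

Check the proposed master and replica configuration, and type yes if it is correct.

Data center A: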

192.168.101.191:6379

192.168.101.192:6379

192.168.101.193:6379

192.168.101.191:6380

192.168.101.192:6380

192.168.101.193:6380

9.1 Create the masters

Create a cluster with 6 masters and no replicas

[root@hhht-1 ~]# /opt/apps/redis-6379/bin/redis-cli --cluster create --cluster-replicas 0 192.168.101.191:6379 192.168.101.192:6379 192.168.101.193:6379 192.168.101.191:6380 192.168.101.192:6380 192.168.101.193:6380

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 2730

Master[1] -> Slots 2731 - 5460

Master[2] -> Slots 5461 - 8191

Master[3] -> Slots 8192 - 10922

Master[4] -> Slots 10923 - 13652

Master[5] -> Slots 13653 - 16383

M: 0d5fdbd0b0e51d68db8894e093c731938744b2b9 192.168.101.191:6379 # master 1

slots:[0-2730] (2731 slots) master

M: 87ce01c12574c3fc19fa50cb06eda30b3146f4b3 192.168.101.192:6379 # master 2

slots:[2731-5460] (2730 slots) master

M: d9618532d70263ee5812debd59194ca08dcdb2e0 192.168.101.193:6379 # master 3

slots:[5461-8191] (2731 slots) master

M: a65c8b27e00a5beac6d15480905fe4722414548c 192.168.101.191:6380 # master 4

slots:[8192-10922] (2731 slots) master

M: 21c397c395a80f423b133f650b3f16235a252e1f 192.168.101.192:6380 # master 5

slots:[10923-13652] (2730 slots) master

M: 6b7597bc1b2ca54110a43c7f9e3265fc1fafe4cb 192.168.101.193:6380 # master 6

slots:[13653-16383] (2731 slots) master

Can I set the above configuration? (type 'yes' to accept): yes # type yes once the master layout is confirmed

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

..

>>> Performing Cluster Check (using node 192.168.101.191:6379)

M: 0d5fdbd0b0e51d68db8894e093c731938744b2b9 192.168.101.191:6379

slots:[0-2730] (2731 slots) master

M: 6b7597bc1b2ca54110a43c7f9e3265fc1fafe4cb 192.168.101.193:6380

slots:[13653-16383] (2731 slots) master

M: 87ce01c12574c3fc19fa50cb06eda30b3146f4b3 192.168.101.192:6379

slots:[2731-5460] (2730 slots) master

M: d9618532d70263ee5812debd59194ca08dcdb2e0 192.168.101.193:6379

slots:[5461-8191] (2731 slots) master

M: 21c397c395a80f423b133f650b3f16235a252e1f 192.168.101.192:6380

slots:[10923-13652] (2730 slots) master

M: a65c8b27e00a5beac6d15480905fe4722414548c 192.168.101.191:6380

slots:[8192-10922] (2731 slots) master

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered. # cluster created successfully
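Because 16384 is not divisible by 6, the masters get either 2731 or 2730 slots. The sketch below models the split printed by `--cluster create` above: master i (counting from 0) ends at round((i+1)·16384/N − 1) and the last master always ends at 16383. The formula is a simplified model of redis-cli's allocation, written only to reproduce the ranges above; `slot_ranges` is a hypothetical name.

```bash
# Sketch of the initial slot split across N masters.
# Integer-only rounding: round(a/b) = (2a + b) / (2b) for a, b > 0.
slot_ranges() {
  local n=$1 total=16384 first=0 last i
  for (( i = 0; i < n; i++ )); do
    if (( i == n - 1 )); then
      last=$(( total - 1 ))                     # last master takes the rest
    else
      # round(((i+1)*total - n) / n), i.e. round((i+1)*total/n - 1)
      last=$(( (2 * ((i + 1) * total - n) + n) / (2 * n) ))
    fi
    echo "$first-$last ($(( last - first + 1 )) slots)"
    first=$(( last + 1 ))
  done
}
```

`slot_ranges 6` prints the same six ranges as the create output, from `0-2730 (2731 slots)` to `13653-16383 (2731 slots)`.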


9.2 Add the replicas

Check the cluster node information and note the master IDs

[root@hhht-2 data]# redis-cli -c -h 192.168.101.191 -p 6380

192.168.101.191:6380> cluster nodes

d9618532d70263ee5812debd59194ca08dcdb2e0 192.168.101.193:6379@16379 master - 0 3 5461-8191 # master 3

21c397c395a80f423b133f650b3f16235a252e1f 192.168.101.192:6380@16380 master - 0 5 10923-13652 # master 5

6b7597bc1b2ca54110a43c7f9e3265fc1fafe4cb 192.168.101.193:6380@16380 master - 0 1 6 13653-16383 # master 6

a65c8b27e00a5beac6d15480905fe4722414548c 192.168.101.191:6380@16380 myself,master - 0 4 8192-10922 # master 4

0d5fdbd0b0e51d68db8894e093c731938744b2b9 192.168.101.191:6379@16379 master - 0 1 0-2730 # master 1

87ce01c12574c3fc19fa50cb06eda30b3146f4b3 192.168.101.192:6379@16379 master - 0 2 2731-5460 # master 2

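Add the replicas, naming each replica's master when it is added, to set up the master-replica mapping

Replica of master 1

#1- 192.168.101.194:6379: the replica to add

#2- 192.168.101.191:6379: any node already in the cluster

#3- --cluster-slave: add the node as a replica

#4- --cluster-master-id: the master to attach it to; the ID is *****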

[root@hhht-1 ~]# /opt/apps/redis-6379/bin/redis-cli --cluster add-node 192.168.101.194:6379 192.168.101.191:6379 --cluster-slave --cluster-master-id 0d5fdbd0b0e51d68db8894e093c731938744b2b9

>>> Adding node 192.168.101.194:6379 to cluster 192.168.101.191:6379

>>> Performing Cluster Check (using node 192.168.101.191:6379)

M: 0d5fdbd0b0e51d68db8894e093c731938744b2b9 192.168.101.191:6379

slots:[0-2730] (2731 slots) master

M: 6b7597bc1b2ca54110a43c7f9e3265fc1fafe4cb 192.168.101.193:6380

slots:[13653-16383] (2731 slots) master

M: 87ce01c12574c3fc19fa50cb06eda30b3146f4b3 192.168.101.192:6379

slots:[2731-5460] (2730 slots) master

M: d9618532d70263ee5812debd59194ca08dcdb2e0 192.168.101.193:6379

slots:[5461-8191] (2731 slots) master

M: 21c397c395a80f423b133f650b3f16235a252e1f 192.168.101.192:6380

slots:[10923-13652] (2730 slots) master

M: a65c8b27e00a5beac6d15480905fe4722414548c 192.168.101.191:6380

slots:[8192-10922] (2731 slots) master

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 192.168.101.194:6379 to make it join the cluster.

Waiting for the cluster to join

>>> Configure node as replica of 192.168.101.191:6379.

[OK] New node added correctly.

Replica of master 2

[root@hhht-1 ~]# /opt/apps/redis-6379/bin/redis-cli --cluster add-node 192.168.101.195:6379 192.168.101.191:6379 --cluster-slave --cluster-master-id 87ce01c12574c3fc19fa50cb06eda30b3146f4b3

Replica of master 3

[root@hhht-1 ~]# /opt/apps/redis-6379/bin/redis-cli --cluster add-node 192.168.101.196:6379 192.168.101.191:6379 --cluster-slave --cluster-master-id d9618532d70263ee5812debd59194ca08dcdb2e0

Replica of master 4

[root@hhht-1 ~]# /opt/apps/redis-6379/bin/redis-cli --cluster add-node 192.168.101.194:6380 192.168.101.191:6379 --cluster-slave --cluster-master-id a65c8b27e00a5beac6d15480905fe4722414548c

Replica of master 5

[root@hhht-1 ~]# /opt/apps/redis-6379/bin/redis-cli --cluster add-node 192.168.101.195:6380 192.168.101.191:6379 --cluster-slave --cluster-master-id 21c397c395a80f423b133f650b3f16235a252e1f

Replica of master 6

[root@hhht-1 ~]# /opt/apps/redis-6379/bin/redis-cli --cluster add-node 192.168.101.196:6380 192.168.101.191:6379 --cluster-slave --cluster-master-id 6b7597bc1b2ca54110a43c7f9e3265fc1fafe4cb
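With six replicas the master IDs are easy to mistype. A sketch that builds the six commands above from a two-column "replica master-ID" list, with the IDs taken from the cluster nodes output in 9.2; `replica_cmds`, `CLI`, `ENTRY` and the file name add-replicas.sh are hypothetical, and `-a <password>` has to be added when requirepass is set.

```bash
# Hypothetical helper: read "replica-address master-id" pairs from stdin and
# print one add-node command per pair (review the output, then run it).
CLI=/opt/apps/redis-6379/bin/redis-cli
ENTRY=192.168.101.191:6379   # any node already in the cluster

replica_cmds() {
  local replica master_id
  while read -r replica master_id; do
    [ -z "$replica" ] && continue
    echo "$CLI --cluster add-node $replica $ENTRY --cluster-slave --cluster-master-id $master_id"
  done
}

# Usage:
# replica_cmds <<'EOF' > add-replicas.sh
# 192.168.101.194:6379 0d5fdbd0b0e51d68db8894e093c731938744b2b9
# 192.168.101.195:6379 87ce01c12574c3fc19fa50cb06eda30b3146f4b3
# 192.168.101.196:6379 d9618532d70263ee5812debd59194ca08dcdb2e0
# 192.168.101.194:6380 a65c8b27e00a5beac6d15480905fe4722414548c
# 192.168.101.195:6380 21c397c395a80f423b133f650b3f16235a252e1f
# 192.168.101.196:6380 6b7597bc1b2ca54110a43c7f9e3265fc1fafe4cb
# EOF
# bash add-replicas.sh
```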

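10- Verify the Redis cluster

10.1 View the cluster information

Connect to the cluster with redis-cli: add the -c option when connecting to a cluster, and -a <password> if a password is set

[root@hhht-1 ~]# redis-cli -c -h 192.168.101.191 -p 6380

View the cluster information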

192.168.101.191:6380> cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:12

cluster_size:6

cluster_current_epoch:6

cluster_my_epoch:4

cluster_stats_messages_ping_sent:3088

cluster_stats_messages_pong_sent:3131

cluster_stats_messages_meet_sent:1

cluster_stats_messages_sent:6220

cluster_stats_messages_ping_received:3131

cluster_stats_messages_pong_received:3089

cluster_stats_messages_received:6220

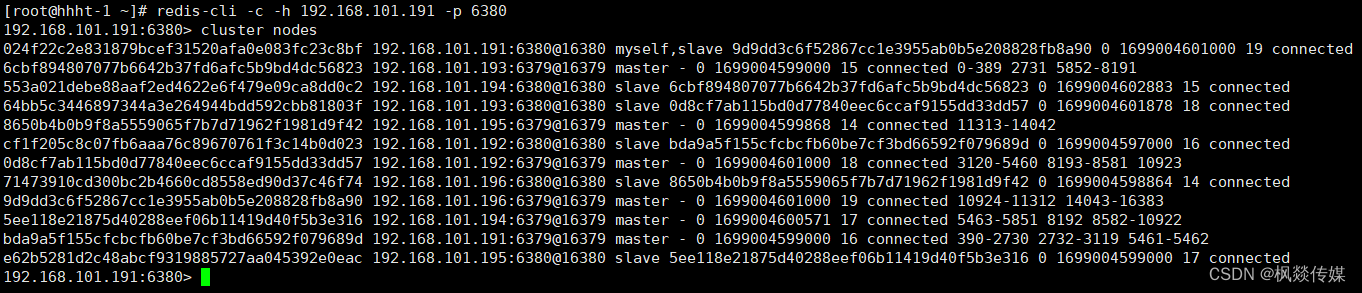
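For scripted checks, for example during the failure tests in 10.2, a sketch that turns `cluster info` output into an exit status: healthy means cluster_state:ok, all 16384 slots ok, and none in pfail or fail. `cluster_healthy` is a hypothetical helper; carriage returns are stripped in case the output has CRLF line endings.

```bash
# Hypothetical health check over "cluster info" output read from stdin.
cluster_healthy() {
  tr -d '\r' | awk -F: '
    $1 == "cluster_state"       { state = $2 }
    $1 == "cluster_slots_ok"    { ok = $2 }
    $1 == "cluster_slots_pfail" { pfail = $2 }
    $1 == "cluster_slots_fail"  { fail = $2 }
    END { exit !(state == "ok" && ok == 16384 && pfail == 0 && fail == 0) }'
}

# Usage: redis-cli -h 192.168.101.194 -p 6379 cluster info | cluster_healthy && echo healthy
```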

View the cluster node information

The cluster has 16384 hash slots in total.

#With 6 masters, 16384 ÷ 6 ≈ 2730.7, so each master gets 2730 or 2731 slots

192.168.101.191:6380> cluster nodes

ID address flags master ping-sent pong-recv config-epoch link-state slots

66..8 192.168.101.196:6380@16380 slave 6b..b 0 188..93 6 connected # replica 6

72..8 192.168.101.194:6380@16380 slave a6..c 0 169..00 4 connected # replica 4

d9..0 192.168.101.193:6379@16379 master - 0 169..21 3 connected 5461-8191 # master 3

21..f 192.168.101.192:6380@16380 master - 0 169..89 5 connected 10923-13652 # master 5

6b..b 192.168.101.193:6380@16380 master - 0 169..00 6 connected 13653-16383 # master 6

05..1 192.168.101.195:6379@16379 slave 87..3 0 169..00 2 connected # replica 2

d0..a 192.168.101.196:6379@16379 slave d9..0 0 169..00 3 connected # replica 3

7e..8 192.168.101.195:6380@16380 slave 21..f 0 169..00 5 connected # replica 5

a4..d 192.168.101.194:6379@16379 slave 0d..9 0 169..97 1 connected # replica 1

87..3 192.168.101.192:6379@16379 master - 0 169..77 2 connected 2731-5460 # master 2

0d..9 192.168.101.191:6379@16379 master - 0 169..00 1 connected 0-2730 # master 1

a6..c 192.168.101.191:6380@16380 myself,master - 0 169..00 4 connected 8192-10922 # master 4

#ping-sent (0 here): Unix time in milliseconds at which the currently pending PING was sent, or 0 if no PING is pending

#pong-recv (e.g. 188..93): Unix time in milliseconds at which the last PONG was received. In a replica's row, the ID after "slave" is its master's ID, which shows whose replica the node is
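The requirement is that every master sits in data center A. A sketch that reads `cluster nodes` output, prints each live master with its data center and slots, and exits non-zero if any master is outside data center A; `ROOM_A` and `masters_in_room_a` are hypothetical, and the field positions follow the column list above.

```bash
# Hypothetical topology check over "cluster nodes" output (stdin).
# Fields: 1 id, 2 ip:port@cport, 3 flags, 4 master, ..., 8 link-state, 9+ slots
ROOM_A="192.168.101.191 192.168.101.192 192.168.101.193"

masters_in_room_a() {
  tr -d '\r' | awk -v roomA="$ROOM_A" '
    BEGIN { n = split(roomA, ips, " "); for (i = 1; i <= n; i++) inA[ips[i]] = 1 }
    $3 ~ /master/ && $3 !~ /fail/ {          # live masters only
      split($2, addr, "[:@]")                # ip, port, cluster-bus port
      slots = ""
      for (i = 9; i <= NF; i++) slots = slots " " $i
      room = (addr[1] in inA) ? "A" : "B"
      if (room == "B") bad = 1
      printf "%s %s:%s%s\n", room, addr[1], addr[2], slots
    }
    END { exit (bad ? 1 : 0) }'
}

# Usage: redis-cli -h 192.168.101.191 -p 6380 cluster nodes | masters_in_room_a
```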

View the slot information

192.168.101.191:6380> cluster slots

1) 1) (integer) 0

2) (integer) 2730

3) 1) "192.168.101.191"

2) (integer) 6379

3) "0d5fdbd0b0e51d68db8894e093c731938744b2b9"

4) 1) "192.168.101.194"

2) (integer) 6379

3) "a4e1f4cd211395ed531e72f251589e39029389ad"

2) 1) (integer) 2731

2) (integer) 5460

3) 1) "192.168.101.192"

2) (integer) 6379

3) "87ce01c12574c3fc19fa50cb06eda30b3146f4b3"

4) 1) "192.168.101.195"

2) (integer) 6379

3) "05ba83bb6366c3b519cc126db326375594b17081"

3) 1) (integer) 5461

2) (integer) 8191

3) 1) "192.168.101.193"

2) (integer) 6379

3) "d9618532d70263ee5812debd59194ca08dcdb2e0"

4) 1) "192.168.101.196"

2) (integer) 6379

3) "d0a8f11e9536ed3d705a46c864b100d29dfed82a"

4) 1) (integer) 8192

2) (integer) 10922

3) 1) "192.168.101.191"

2) (integer) 6380

3) "a65c8b27e00a5beac6d15480905fe4722414548c"

4) 1) "192.168.101.194"

2) (integer) 6380

3) "723e91e6820a480603406ddc69b87e9fc7d9f8b8"

5) 1) (integer) 10923

2) (integer) 13652

3) 1) "192.168.101.192"

2) (integer) 6380

3) "21c397c395a80f423b133f650b3f16235a252e1f"

4) 1) "192.168.101.195"

2) (integer) 6380

3) "7ea22088abd7fd8317e927c4bf7e2f7f26e1e1e8"

6) 1) (integer) 13653

2) (integer) 16383

3) 1) "192.168.101.193"

2) (integer) 6380

3) "6b7597bc1b2ca54110a43c7f9e3265fc1fafe4cb"

4) 1) "192.168.101.196"

2) (integer) 6380

3) "664c2003ec96bd9d5dd9a3aa0091c1cbe8cfafe8"

Write data

192.168.101.191:6380> set name tt

-> Redirected to slot [5798] located at 192.168.101.193:6379

OK

Read data

192.168.101.193:6379> get name

"tt"

Delete the key

192.168.101.193:6379> del name

(integer) 1

Exit

192.168.101.193:6379> exit
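The `-> Redirected to slot [5798]` line above comes from how Redis Cluster maps keys to slots: slot = CRC16(key) mod 16384, using the CRC16-XMODEM variant (polynomial 0x1021, initial value 0), and if the key contains a non-empty `{...}` hash tag only the text inside the first tag is hashed. A bash sketch, assuming ASCII keys; `crc16` and `key_slot` are hypothetical helpers. On a live cluster, `CLUSTER KEYSLOT <key>` returns the same number.

```bash
# CRC16-XMODEM over the bytes of $1 (ASCII assumed).
crc16() {
  local s=$1 crc=0 i j c
  for (( i = 0; i < ${#s}; i++ )); do
    printf -v c '%d' "'${s:i:1}"           # character code of s[i]
    crc=$(( crc ^ (c << 8) ))
    for (( j = 0; j < 8; j++ )); do
      if (( crc & 0x8000 )); then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
    done
  done
  echo "$crc"
}

# Hash slot of a key, honouring {hash tags}.
key_slot() {
  local key=$1 rest tag
  if [[ $key == *\{* ]]; then
    rest=${key#*\{}                         # text after the first '{'
    if [[ $rest == *\}* ]]; then
      tag=${rest%%\}*}                      # text up to the next '}'
      [[ -n $tag ]] && key=$tag             # empty tag: hash the whole key
    fi
  fi
  echo $(( $(crc16 "$key") & 16383 ))       # mod 16384
}
```

`key_slot name` prints 5798, the slot in the redirect above, and `key_slot a` prints 15495, the slot that `set a 11` is redirected to in 10.2.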

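10.2 Simulated failure tests

Test a data center A failure

Stop the 192.168.101.191:6379 instance on VM 1 in data center A

[root@hhht-1 ~]# systemctl stop redis-6379

From data center B, check the cluster state, then write and read keys

[root@hhht-4 ~]# redis-cli -c -h 192.168.101.194 -p 6379

192.168.101.194:6379> cluster nodes

192.168.101.194:6379@16379 myself,master - 0 1699216395000 8 connected 0-2730 # the failed master's replica has been promoted to master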

192.168.101.196:6380@16380 master - 0 1699216401334 7 connected 13653-16383

192.168.101.192:6380@16380 slave 0 1699216399000 11 connected

192.168.101.194:6380@16380 master - 0 1699216398000 9 connected 8192-10922

192.168.101.193:6380@16380 slave 0 1699216400000 7 connected

192.168.101.196:6379@16379 master - 0 1699216398000 12 connected 5461-8191

192.168.101.193:6379@16379 slave 0 1699216399000 12 connected

192.168.101.191:6379@16379 slave,fail ? 1699216386226 8 disconnected # the stopped former master, now marked as a failed replica

192.168.101.195:6380@16380 master - 0 1699216400327 11 connected 10923-13652

192.168.101.192:6379@16379 slave 0 1699216398000 10 connected

192.168.101.191:6380@16380 slave 0 1699216400000 9 connected

192.168.101.195:6379@16379 master - 0 1699216400000 10 connected 2731-5460

#Create a key and read it back

192.168.101.194:6379> set a 11

-> Redirected to slot [15495] located at 192.168.101.196:6380

OK

192.168.101.196:6380> get a

"11"

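Stop the second, third, ... masters one after another in the same way

#Steps (stop a master, write a key, read it back) omitted

Restore the cluster, then test shutting down one whole VM (2 Redis masters) at once

#Restore the cluster (just start the stopped Redis instances), shut down the masters, write and read keys; details omitted

Restore the cluster, then test shutting down two VMs (4 Redis masters) at once

#Restore the cluster (just start the stopped Redis instances), shut down the masters, write and read keys; details omitted

Restore the cluster, then test shutting down three VMs (6 Redis masters) at once

#Restore the cluster (just start the stopped Redis instances), shut down the masters, write and read keys; details omitted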
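When the stopped instances are started again they rejoin as replicas (the cluster nodes output above already lists the data center A nodes as slaves), so the masters stay in data center B. A sketch for moving them back: `CLUSTER FAILOVER`, sent to a replica, performs a manual failover coordinated with its current master. `ROOM_A_NODES`, `failback_room_a`, the `DRY_RUN` switch and the `REDIS_PASS` variable are hypothetical; the command returns an error on a node that is already a master.

```bash
# Hypothetical helper: send CLUSTER FAILOVER to every data center A instance.
# DRY_RUN=1 prints the commands; REDIS_PASS, if set, is passed with -a.
ROOM_A_NODES="192.168.101.191:6379 192.168.101.192:6379 192.168.101.193:6379 192.168.101.191:6380 192.168.101.192:6380 192.168.101.193:6380"

failback_room_a() {
  local run="" node
  [ "${DRY_RUN:-0}" = 1 ] && run=echo
  for node in $ROOM_A_NODES; do
    # ${REDIS_PASS:+...} expands to "-a <password>" only when REDIS_PASS is set
    $run redis-cli -h "${node%:*}" -p "${node#*:}" ${REDIS_PASS:+-a "$REDIS_PASS"} cluster failover
  done
}

# Usage: DRY_RUN=1 REDIS_PASS='zb@123' failback_room_a
```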

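10.3 Summary

1- Stopping the data center A masters one at a time: data center B can still write and read keys

2- Shutting down 1 data center A host (2 masters) at once: data center B can still write and read keys

3- Shutting down 2 data center A hosts (4 masters) at once: data center B can still write and read keys

4- Shutting down all 3 data center A hosts (6 masters) at once: data center B can still write and read keys

Note: a replica is promoted only with the votes of a majority of the masters. While all 6 masters are in data center A, losing 2 or more A hosts at once (4 or more masters) leaves no majority, so no automatic failover takes place. The cluster nodes output in 10.2 shows that by the time of these tests the masters had already moved to data center B, so check the current roles before each test.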

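11- Add nodes to the cluster

New node information

IP

192.168.101.197

Install Redis on the new node as described above and start the services

IP port

192.168.101.197 6379

192.168.101.197 6380

11.1 Add a master

Scale the current 6-master/6-replica cluster out to 7 masters and 7 replicas (the outputs in this section come from a rebuilt cluster, so node IDs and roles differ from section 9)

# Use the following command to add a new node to the cluster

# -a: password authentication (omit it if there is no password)

# Syntax: --cluster add-node <new node IP>:<new node port> <IP>:<port of any live node>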

[root@hhht-2 data]# redis-cli --cluster add-node 192.168.101.197:6379 192.168.101.191:6379

>>> Adding node 192.168.101.197:6379 to cluster 192.168.101.191:6379

>>> Performing Cluster Check (using node 192.168.101.191:6379)

M: bda9a5f155cfcbcfb60be7cf3bd66592f079689d 192.168.101.191:6379

slots:[0-2730] (2731 slots) master

1 additional replica(s)

M: 6cbf894807077b6642b37fd6afc5b9bd4dc56823 192.168.101.193:6379

slots:[5461-8191] (2731 slots) master

1 additional replica(s)

S: 64bb5c3446897344a3e264944bdd592cbb81803f 192.168.101.193:6380

slots: (0 slots) slave

replicates 0d8cf7ab115bd0d77840eec6ccaf9155dd33dd57

M: 5ee118e21875d40288eef06b11419d40f5b3e316 192.168.101.194:6379

slots:[8192-10922] (2731 slots) master

1 additional replica(s)

M: 0d8cf7ab115bd0d77840eec6ccaf9155dd33dd57 192.168.101.192:6379

slots:[2731-5460] (2730 slots) master

1 additional replica(s)

S: cf1f205c8c07fb6aaa76c89670761f3c14b0d023 192.168.101.192:6380

slots: (0 slots) slave

replicates bda9a5f155cfcbcfb60be7cf3bd66592f079689d

S: e62b5281d2c48abcf9319885727aa045392e0eac 192.168.101.195:6380

slots: (0 slots) slave

replicates 5ee118e21875d40288eef06b11419d40f5b3e316

S: 71473910cd300bc2b4660cd8558ed90d37c46f74 192.168.101.196:6380

slots: (0 slots) slave

replicates 8650b4b0b9f8a5559065f7b7d71962f1981d9f42

M: 8650b4b0b9f8a5559065f7b7d71962f1981d9f42 192.168.101.195:6379

slots:[10923-13652] (2730 slots) master

1 additional replica(s)

S: 553a021debe88aaf2ed4622e6f479e09ca8dd0c2 192.168.101.194:6380

slots: (0 slots) slave

replicates 6cbf894807077b6642b37fd6afc5b9bd4dc56823

S: 024f22c2e831879bcef31520afa0e083fc23c8bf 192.168.101.191:6380

slots: (0 slots) slave

replicates 9d9dd3c6f52867cc1e3955ab0b5e208828fb8a90

M: 9d9dd3c6f52867cc1e3955ab0b5e208828fb8a90 192.168.101.196:6379

slots:[13653-16383] (2731 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 192.168.101.197:6379 to make it join the cluster.

[OK] New node added correctly.

View the cluster node information

192.168.101.197:6379 has joined the cluster but owns no slots yet, so slots have to be migrated to it

[root@hhht-1 data]# redis-cli -c -h 192.168.101.191 -p 6380

192.168.101.191:6380> cluster nodes

ID address flags master ping-sent pong-recv config-epoch link-state slots

52..a6 192.168.101.192:6379@16379 master - 0 188..93 2 connected 2731-5460 # master 2

5f31b6 192.168.101.191:6380@16380 myself,slave bc48 0 169..00 6 connected # replica 6

8b5036 192.168.101.193:6380@16380 slave e8cc0 0 169..21 2 connected # replica 2

20a7ce 192.168.101.191:6379@16379 master - 0 169..89 1 connected 0-2730 # master 1

2c3d33 192.168.101.196:6380@16380 slave 7dsad 0 169..00 5 connected # replica 5

24e9b5 192.168.101.194:6380@16380 slave 98faa 0 169..00 3 connected # replica 3

bc4745 192.168.101.196:6379@16379 master - 0 169..00 6 connected 13653-16383 # master 6

dc2403 192.168.101.195:6380@16380 slave eafaf 0 169..00 4 connected # replica 4

95fe68 192.168.101.193:6379@16379 master - 0 169..97 3 connected 5461-8191 # master 3

d3bbea 192.168.101.194:6379@16379 master - 0 169..77 4 connected 8192-10922 # master 4

3b1faf 192.168.101.192:6380@16380 slave 2ffaw 0 169..00 1 connected # replica 1

7ab1ed 192.168.101.195:6379@16379 master - 0 169..00 5 connected 10923-13652 # master 5

52..a6 192.168.101.197:6379@16379 master - 0 188..93 7 connected # master 7

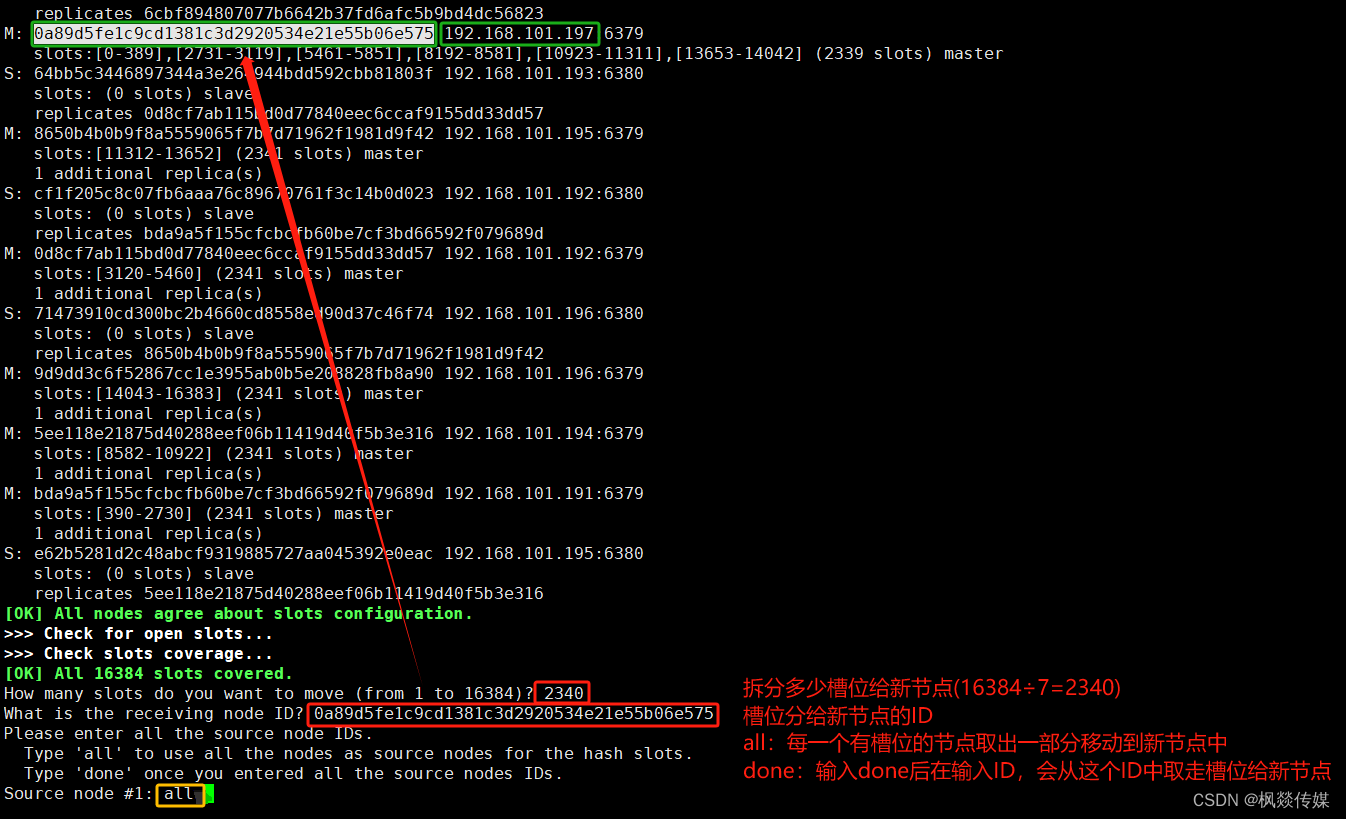

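Slot migration

Note: a newly added node has no hash slots, so it cannot store data yet; hash slots must be assigned to it.

An uneven hash slot distribution leaves data and load unevenly spread across the masters.

# Use the following command to move slots from the other masters to the new node

# -a: password authentication (omit it if there is no password)

# Syntax: --cluster reshard <IP>:<port of any cluster node>; the number of slots, the target and the sources are then chosen interactively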

[root@hhht-2 data]# redis-cli --cluster reshard 192.168.101.191:6380

>>> Performing Cluster Check (using node 192.168.101.191:6380)

S: 024f22c2e831879bcef31520afa0e083fc23c8bf 192.168.101.191:6380

slots: (0 slots) slave

replicates 9d9dd3c6f52867cc1e3955ab0b5e208828fb8a90

M: 6cbf894807077b6642b37fd6afc5b9bd4dc56823 192.168.101.193:6379

slots:[5852-8191] (2340 slots) master

1 additional replica(s)

S: 553a021debe88aaf2ed4622e6f479e09ca8dd0c2 192.168.101.194:6380

slots: (0 slots) slave

replicates 6cbf894807077b6642b37fd6afc5b9bd4dc56823

M: 0a89d5fe1c9cd1381c3d2920534e21e55b06e575 192.168.101.197:6379

slots:[0-389],[2731-3119],[5461-5851],[8192-8581],[10923-11311],[13653-14042] (2339 slots) master

S: 64bb5c3446897344a3e264944bdd592cbb81803f 192.168.101.193:6380

slots: (0 slots) slave

replicates 0d8cf7ab115bd0d77840eec6ccaf9155dd33dd57

M: 8650b4b0b9f8a5559065f7b7d71962f1981d9f42 192.168.101.195:6379

slots:[11312-13652] (2341 slots) master

1 additional replica(s)

S: cf1f205c8c07fb6aaa76c89670761f3c14b0d023 192.168.101.192:6380

slots: (0 slots) slave

replicates bda9a5f155cfcbcfb60be7cf3bd66592f079689d

M: 0d8cf7ab115bd0d77840eec6ccaf9155dd33dd57 192.168.101.192:6379

slots:[3120-5460] (2341 slots) master

1 additional replica(s)

S: 71473910cd300bc2b4660cd8558ed90d37c46f74 192.168.101.196:6380

slots: (0 slots) slave

replicates 8650b4b0b9f8a5559065f7b7d71962f1981d9f42

M: 9d9dd3c6f52867cc1e3955ab0b5e208828fb8a90 192.168.101.196:6379

slots:[14043-16383] (2341 slots) master

1 additional replica(s)

M: 5ee118e21875d40288eef06b11419d40f5b3e316 192.168.101.194:6379

slots:[8582-10922] (2341 slots) master

1 additional replica(s)

M: bda9a5f155cfcbcfb60be7cf3bd66592f079689d 192.168.101.191:6379

slots:[390-2730] (2341 slots) master

1 additional replica(s)

S: e62b5281d2c48abcf9319885727aa045392e0eac 192.168.101.195:6380

slots: (0 slots) slave

replicates 5ee118e21875d40288eef06b11419d40f5b3e316

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)?2340 # 1

What is the receiving node ID? 0a89d5fe1c9cd1381c3d2920534e21e55b06e575 # 2

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: all # 3

After these answers redis-cli prints the reshard plan; type yes and the slots and their data are moved to the new node

Moving slot 6236 from 6cbf894807077b6642b37fd6afc5b9bd4dc56823

Moving slot 6237 from 6cbf894807077b6642b37fd6afc5b9bd4dc56823

Moving slot 6238 from 6cbf894807077b6642b37fd6afc5b9bd4dc56823

Moving slot 6239 from 6cbf894807077b6642b37fd6afc5b9bd4dc56823

Moving slot 6240 from 6cbf894807077b6642b37fd6afc5b9bd4dc56823

Do you want to proceed with the proposed reshard plan (yes/no)? #yes

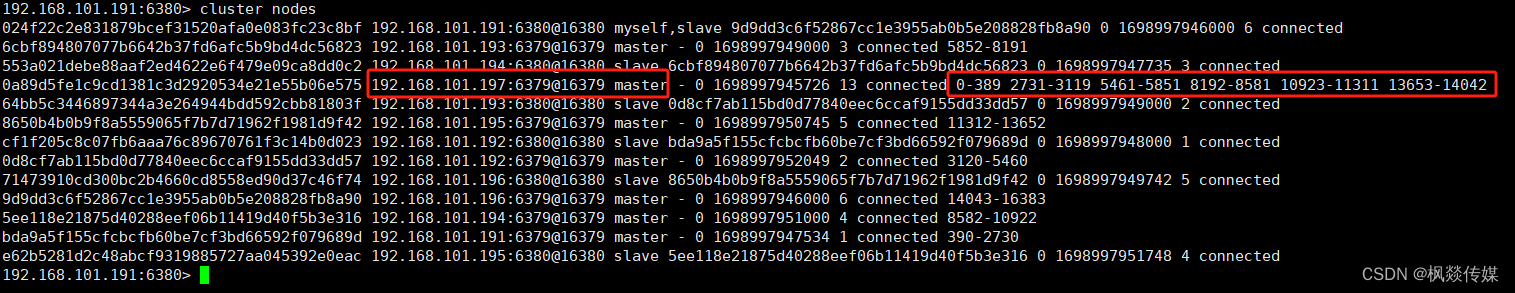
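The interactive dialogue can also be given as options. A sketch that prints the equivalent command using redis-cli's `--cluster-from`, `--cluster-to`, `--cluster-slots` and `--cluster-yes` options; the slot count is 16384 divided by the number of masters after the new one joins, rounded down (16384 / 7 = 2340, the value entered above). `reshard_cmd` is hypothetical; add `-a <password>` when requirepass is set. `redis-cli --cluster rebalance <host:port> --cluster-use-empty-masters` is another way to move slots onto an empty master.

```bash
# Hypothetical helper: non-interactive equivalent of the reshard dialogue.
# $1 = any cluster node, $2 = receiving node ID, $3 = masters after joining
reshard_cmd() {
  local entry=$1 target_id=$2 masters=$3
  local slots=$(( 16384 / masters ))        # integer division rounds down
  echo "redis-cli --cluster reshard $entry --cluster-from all --cluster-to $target_id --cluster-slots $slots --cluster-yes"
}

# Usage: reshard_cmd 192.168.101.191:6380 0a89d5fe1c9cd1381c3d2920534e21e55b06e575 7
```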

After the migration, the cluster information shows that 192.168.101.197:6379 now owns slots

[root@hhht-1 data]# redis-cli -c -h 192.168.101.191 -p 6380

192.168.101.191:6380> cluster nodes

02..f 192.168.101.191:6380@16380 myself,slave 0 6 connected

6c..3 192.168.101.193:6379@16379 master - 0 3 connected 5852-8191

55..2 192.168.101.194:6380@16380 slave 0 3 connected

0a..5 192.168.101.197:6379@16379 master - 0 13 connected 0-389 2731-3119 5461-5851 8192-8581 10923-11311 13653-14042

64..f 192.168.101.193:6380@16380 slave 0 2 connected

86..2 192.168.101.195:6379@16379 master - 0 5 connected 11312-13652

cf..3 192.168.101.192:6380@16380 slave 0 1 connected

0d..7 192.168.101.192:6379@16379 master - 0 2 connected 3120-5460

71..4 192.168.101.196:6380@16380 slave 0 5 connected

9d..0 192.168.101.196:6379@16379 master - 0 6 connected 14043-16383

5e..6 192.168.101.194:6379@16379 master - 0 4 connected 8582-10922

bd..d 192.168.101.191:6379@16379 master - 0 1 connected 390-2730

e6..c 192.168.101.195:6380@16380 slave 0 4 connected

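11.2 Add a replica

To add a replica, first add the node to the cluster

# Use the following command to add a new node to the cluster

# -a: password authentication (omit it if there is no password)

# --cluster add-node <new node IP>:<new node port> <IP>:<port of any live node>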

[root@hhht-2 data]# redis-cli --cluster add-node 192.168.101.197:6380 192.168.101.191:6379

>>> Adding node 192.168.101.197:6380 to cluster 192.168.101.191:6379

>>> Performing Cluster Check (using node 192.168.101.191:6379)

M: bda9a5f155cfcbcfb60be7cf3bd66592f079689d 192.168.101.191:6379

slots:[390-2730] (2341 slots) master

1 additional replica(s)

M: 6cbf894807077b6642b37fd6afc5b9bd4dc56823 192.168.101.193:6379

slots:[5852-8191] (2340 slots) master

1 additional replica(s)

S: 64bb5c3446897344a3e264944bdd592cbb81803f 192.168.101.193:6380

slots: (0 slots) slave

replicates 0d8cf7ab115bd0d77840eec6ccaf9155dd33dd57

M: 5ee118e21875d40288eef06b11419d40f5b3e316 192.168.101.194:6379

slots:[8582-10922] (2341 slots) master

1 additional replica(s)

M: 0d8cf7ab115bd0d77840eec6ccaf9155dd33dd57 192.168.101.192:6379

slots:[3120-5460] (2341 slots) master

1 additional replica(s)

M: 0a89d5fe1c9cd1381c3d2920534e21e55b06e575 192.168.101.197:6379

slots:[0-389],[2731-3119],[5461-5851],[8192-8581],[10923-11311],[13653-14042] (2339 slots) master

S: cf1f205c8c07fb6aaa76c89670761f3c14b0d023 192.168.101.192:6380

slots: (0 slots) slave

replicates bda9a5f155cfcbcfb60be7cf3bd66592f079689d

S: e62b5281d2c48abcf9319885727aa045392e0eac 192.168.101.195:6380

slots: (0 slots) slave

replicates 5ee118e21875d40288eef06b11419d40f5b3e316

S: 71473910cd300bc2b4660cd8558ed90d37c46f74 192.168.101.196:6380

slots: (0 slots) slave

replicates 8650b4b0b9f8a5559065f7b7d71962f1981d9f42

M: 8650b4b0b9f8a5559065f7b7d71962f1981d9f42 192.168.101.195:6379

slots:[11312-13652] (2341 slots) master

1 additional replica(s)

S: 553a021debe88aaf2ed4622e6f479e09ca8dd0c2 192.168.101.194:6380

slots: (0 slots) slave

replicates 6cbf894807077b6642b37fd6afc5b9bd4dc56823

S: 024f22c2e831879bcef31520afa0e083fc23c8bf 192.168.101.191:6380

slots: (0 slots) slave

replicates 9d9dd3c6f52867cc1e3955ab0b5e208828fb8a90

M: 9d9dd3c6f52867cc1e3955ab0b5e208828fb8a90 192.168.101.196:6379

slots:[14043-16383] (2341 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 192.168.101.197:6380 to make it join the cluster.

[OK] New node added correctly.

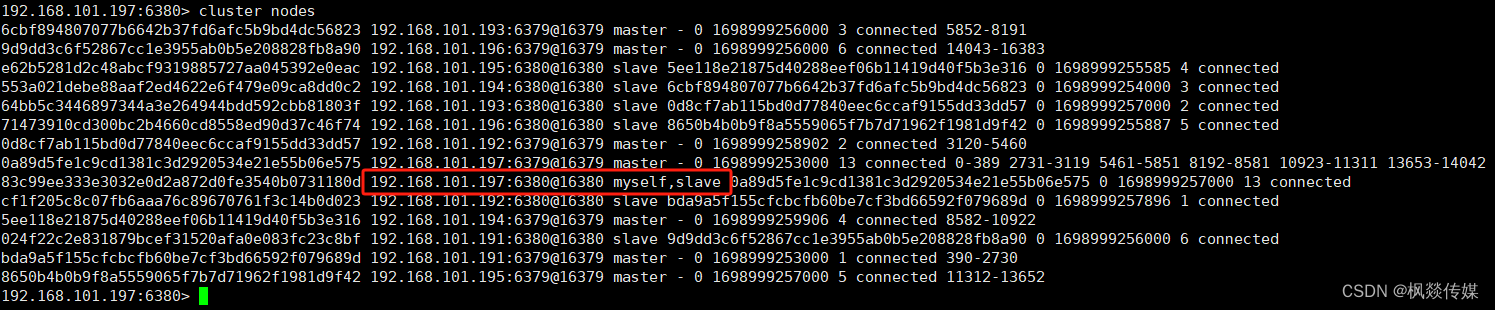

Query the cluster node information

[root@hhht-1 data]# redis-cli -c -h 192.168.101.191 -p 6380

192.168.101.191:6380> cluster nodes

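You can see that 192.168.101.197:6380 has been added. However, every node added this way joins as a master (with no slots), so it must be changed into a replica.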
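A freshly added node shows up as a master that owns no slots. You can spot such nodes by filtering the CLUSTER NODES output.

The sketch below uses a hypothetical helper, `empty_masters`, which is not part of redis-cli. It assumes the standard CLUSTER NODES field order: ID, ip:port@cport, flags, master ID, ping-sent, pong-recv, config-epoch, link-state, then slots. It ignores edge cases such as masters that only carry in-flight migration entries.

```sh
# Hypothetical helper: list masters that own no hash slots.
# Input (stdin): CLUSTER NODES output, one node per line:
#   <id> <ip:port@cport> <flags> <master-id> <ping-sent> <pong-recv> <config-epoch> <link-state> [slots...]
empty_masters() {
  awk '
    # Wrap the comma-separated flags in commas so "master" only matches as a whole flag.
    ("," $3 ",") ~ /,master,/ && NF <= 8 {
      split($2, addr, "@")   # strip the cluster bus port, e.g. @16380
      print $1, addr[1]
    }'
}
```

Usage: `redis-cli -h 192.168.101.191 -p 6380 cluster nodes | empty_masters` prints the ID and address of each slotless master, such as a node that was just added with add-node.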

Configure the node

Connect with redis-cli to the newly added node 192.168.101.197:6380 and assign it a master.

# Connect to the Redis instance that should become a replica

./bin/redis-cli -p 6380

# Make the current node a replica of 0a89d5fe1c9cd1381c3d2920534e21e55b06e575

CLUSTER REPLICATE 0a89d5fe1c9cd1381c3d2920534e21e55b06e575

[root@hhht-1 data]# redis-cli -c -h 192.168.101.197 -p 6380

192.168.101.197:6380> CLUSTER REPLICATE 0a89d5fe1c9cd1381c3d2920534e21e55b06e575

OK
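The two steps above can be scripted so the 40-character ID is not copied by hand:

1. Look up the master's ID in CLUSTER NODES.
2. Run CLUSTER REPLICATE on the new node.

This is a minimal sketch that assumes the standard CLUSTER NODES line format. `node_id_of` and `replicate_cmd` are hypothetical helper names. The function only prints the command, so it can be reviewed before it is run.

```sh
# Print the node ID for <ip:port>, reading CLUSTER NODES output on stdin.
node_id_of() {
  awk -v want="$1" '{ split($2, a, "@"); if (a[1] == want) { print $1; exit } }'
}

# Print the redis-cli command that makes <replica ip:port> replicate <master ip:port>.
# Reads CLUSTER NODES output on stdin; fails if the master address is not found.
replicate_cmd() {
  local replica="$1" master="$2" id
  id=$(node_id_of "$master")
  if [ -z "$id" ]; then
    echo "unknown master: $master" >&2
    return 1
  fi
  # ${replica%:*} is the IP part, ${replica##*:} the port part.
  printf 'redis-cli -h %s -p %s cluster replicate %s\n' "${replica%:*}" "${replica##*:}" "$id"
}
```

Usage: `redis-cli -h 192.168.101.191 -p 6380 cluster nodes | replicate_cmd 192.168.101.197:6380 192.168.101.197:6379`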

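Alternatively, add the node as a replica in one step by passing the master's ID. The address and master ID in the command below do not match the cluster shown above; substitute the new node's address and the ID of the master it should follow.

#1- 192.168.101.194:6379 the replica node to add

#2- 192.168.101.191:6379 any node already in the cluster

#3- --cluster-slave add the node as a replica

#4- --cluster-master-id the ID of the master to replicate (*****)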

[root@hhht-1 ~]# /opt/apps/redis-6379/bin/redis-cli --cluster add-node 192.168.101.194:6379 192.168.101.191:6379 --cluster-slave --cluster-master-id 0d5fdbd0b0e51d68db8894e093c731938744b2b9

Check the cluster node information again.

192.168.101.197:6380 is now a replica.
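Instead of reading the CLUSTER NODES output by eye, the change can be checked in a script. The sketch below uses a hypothetical helper, `is_replica_of`, and assumes the standard CLUSTER NODES format, where field 3 holds the flags and field 4 holds the master's ID for a replica. It succeeds only if the first address is flagged as a slave of the second.

```sh
# Succeed if <replica ip:port> is a slave of <master ip:port>.
# Reads CLUSTER NODES output on stdin.
is_replica_of() {
  awk -v r="$1" -v m="$2" '
    {
      split($2, a, "@")            # address without the cluster bus port
      id[a[1]] = $1; flags[a[1]] = $3; master_of[a[1]] = $4
    }
    END {
      if (!(r in id) || !(m in id)) exit 1            # unknown address
      if (("," flags[r] ",") !~ /,slave,/) exit 1     # not flagged as a replica
      if (master_of[r] != id[m]) exit 1               # follows a different master
      exit 0
    }'
}
```

Usage: `redis-cli -h 192.168.101.191 -p 6380 cluster nodes | is_replica_of 192.168.101.197:6380 192.168.101.197:6379 && echo ok`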

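11.3 Removing a master node

Removing a master requires migrating its slots first. For example, to remove 192.168.101.197:6379, its slots must be moved to other masters before the node can be deleted.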
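To know how many slots have to be moved, you can count the slots the node owns in CLUSTER NODES. This is a sketch with a hypothetical helper, `slot_count_of`. It assumes slots appear from field 9 onward, either as ranges (0-389) or as single numbers, and it skips bracketed in-flight migration entries.

For the ranges this walkthrough lists for 192.168.101.197:6379, it reports 2339, the same count redis-cli prints in its check output:

- 0-389
- 2731-3119
- 5461-5851
- 8192-8581
- 10923-11311
- 13653-14042

```sh
# Count the hash slots owned by <ip:port>, reading CLUSTER NODES output on stdin.
# Fails (exit status 1) if the address is not in the output.
slot_count_of() {
  awk -v want="$1" '
    { split($2, a, "@") }
    a[1] == want {
      n = 0
      for (i = 9; i <= NF; i++) {
        if (substr($i, 1, 1) == "[") continue          # in-flight migration entry
        if (split($i, r, "-") == 2) n += r[2] - r[1] + 1  # range "start-end"
        else n += 1                                      # single slot
      }
      print n
      found = 1
    }
    END { if (!found) exit 1 }'
}
```

Usage: `redis-cli -h 192.168.101.191 -p 6380 cluster nodes | slot_count_of 192.168.101.197:6379`. After the migration, the same command should print 0.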

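Slot migration

# Use the following command to move a master's slots to other nodes

# -a password authentication (omit this parameter if no password is set)

# Parameters: --cluster reshard <any node IP>:<port>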

[root@hhht-1 data]# redis-cli -c --cluster reshard 192.168.101.191:6379

>>> Performing Cluster Check (using node 192.168.101.191:6379)

M: bda9a5f155cfcbcfb60be7cf3bd66592f079689d 192.168.101.191:6379

slots:[390-2730] (2341 slots) master

1 additional replica(s)

M: 6cbf894807077b6642b37fd6afc5b9bd4dc56823 192.168.101.193:6379

slots:[5852-8191] (2340 slots) master

1 additional replica(s)

S: 64bb5c3446897344a3e264944bdd592cbb81803f 192.168.101.193:6380

slots: (0 slots) slave

replicates 0d8cf7ab115bd0d77840eec6ccaf9155dd33dd57

M: 5ee118e21875d40288eef06b11419d40f5b3e316 192.168.101.194:6379

slots:[8582-10922] (2341 slots) master

1 additional replica(s)

M: 0d8cf7ab115bd0d77840eec6ccaf9155dd33dd57 192.168.101.192:6379

slots:[3120-5460] (2341 slots) master

1 additional replica(s)

M: 0a89d5fe1c9cd1381c3d2920534e21e55b06e575 192.168.101.197:6379

slots:[0-389],[2731-3119],[5461-5851],[8192-8581],[10923-11311],[13653-14042] (2339 slots) master

1 additional replica(s)

S: cf1f205c8c07fb6aaa76c89670761f3c14b0d023 192.168.101.192:6380

slots: (0 slots) slave

replicates bda9a5f155cfcbcfb60be7cf3bd66592f079689d

S: e62b5281d2c48abcf9319885727aa045392e0eac 192.168.101.195:6380

slots: (0 slots) slave

replicates 5ee118e21875d40288eef06b11419d40f5b3e316

S: 71473910cd300bc2b4660cd8558ed90d37c46f74 192.168.101.196:6380

slots: (0 slots) slave

replicates 8650b4b0b9f8a5559065f7b7d71962f1981d9f42

M: 8650b4b0b9f8a5559065f7b7d71962f1981d9f42 192.168.101.195:6379

slots:[11312-13652] (2341 slots) master

1 additional replica(s)

S: 553a021debe88aaf2ed4622e6f479e09ca8dd0c2 192.168.101.194:6380

slots: (0 slots) slave

replicates 6cbf894807077b6642b37fd6afc5b9bd4dc56823

S: 024f22c2e831879bcef31520afa0e083fc23c8bf 192.168.101.191:6380

slots: (0 slots) slave

replicates 9d9dd3c6f52867cc1e3955ab0b5e208828fb8a90

S: 83c99ee333e3032e0d2a872d0fe3540b0731180d 192.168.101.197:6380

slots: (0 slots) slave

replicates 0a89d5fe1c9cd1381c3d2920534e21e55b06e575

M: 9d9dd3c6f52867cc1e3955ab0b5e208828fb8a90 192.168.101.196:6379

slots:[14043-16383] (2341 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

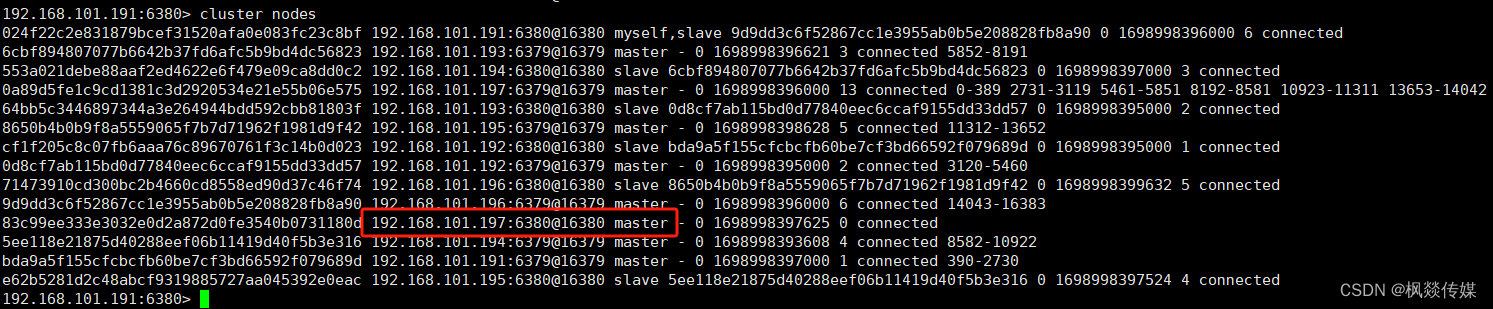

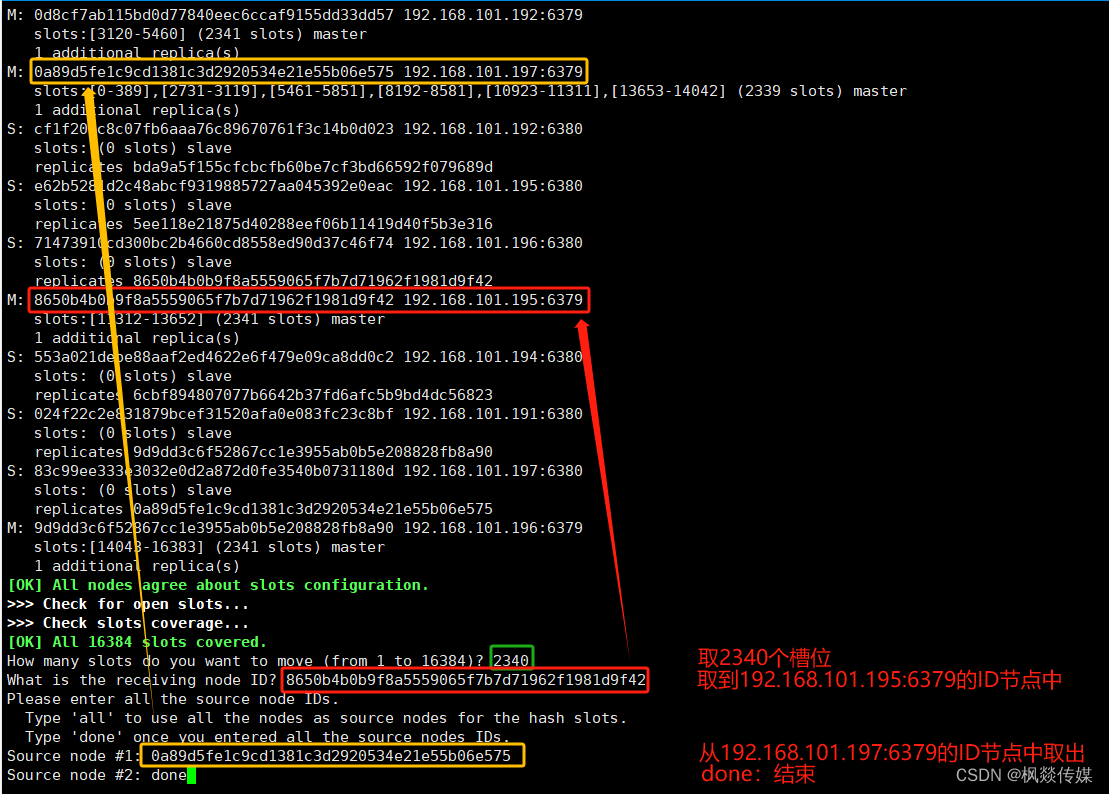

How many slots do you want to move (from 1 to 16384)? 2340 # 1

What is the receiving node ID? 8650b4b0b9f8a5559065f7b7d71962f1981d9f42 # 2

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: 0a89d5fe1c9cd1381c3d2920534e21e55b06e575 # 3

Source node #2: done # 4

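The numbered prompts in the session above mean the following:

- #1 is the number of slots to move.
- #2 is the ID of the receiving node (192.168.101.195:6379).
- #3 is the ID of the source node being emptied (192.168.101.197:6379).
- #4, "done", ends the list of sources.

redis-cli then prints the reshard plan. Answer yes to migrate the slots and their data to the receiving node.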

Moving slot 14039 from 0a89d5fe1c9cd1381c3d2920534e21e55b06e575

Moving slot 14040 from 0a89d5fe1c9cd1381c3d2920534e21e55b06e575

Moving slot 14041 from 0a89d5fe1c9cd1381c3d2920534e21e55b06e575

Moving slot 14042 from 0a89d5fe1c9cd1381c3d2920534e21e55b06e575

Do you want to proceed with the proposed reshard plan (yes/no)? # yes

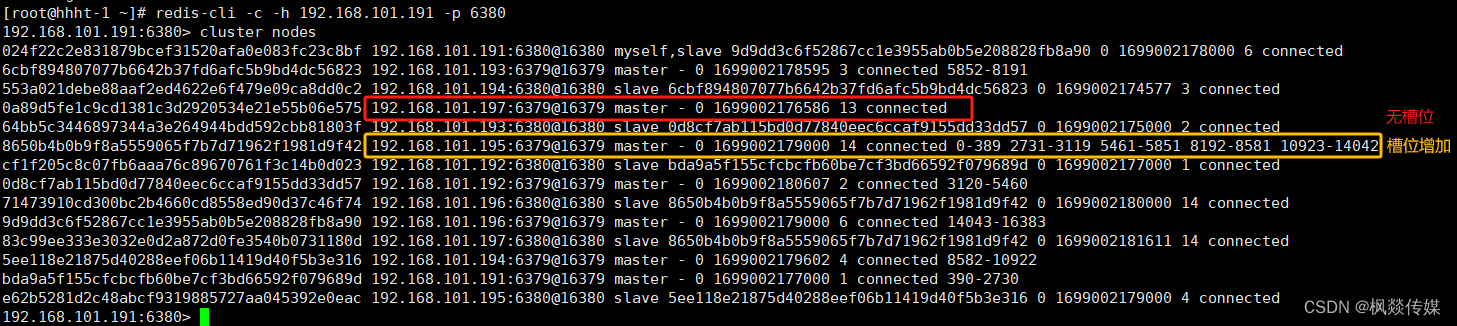
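The interactive session above can also be run non-interactively with redis-cli's `--cluster-from`, `--cluster-to`, `--cluster-slots` and `--cluster-yes` options. The sketch below uses a hypothetical helper, `drain_cmd`. It resolves both node IDs and the source's slot count from CLUSTER NODES output and prints the resulting command for review. It assumes the standard CLUSTER NODES line format, and it refuses to build a command when the source owns no slots.

```sh
# Print a non-interactive reshard command that moves every slot of <src ip:port>
# to <dst ip:port>. Arguments: <seed ip:port> <src ip:port> <dst ip:port>.
# Reads CLUSTER NODES output on stdin.
drain_cmd() {
  local seed="$1" src="$2" dst="$3" nodes from to count
  nodes=$(cat)
  from=$(printf '%s\n' "$nodes" | awk -v w="$src" '{ split($2, a, "@") } a[1] == w { print $1 }')
  to=$(printf '%s\n' "$nodes" | awk -v w="$dst" '{ split($2, a, "@") } a[1] == w { print $1 }')
  # Sum slot ranges/single slots from field 9 on, skipping "[...]" migration entries.
  count=$(printf '%s\n' "$nodes" | awk -v w="$src" '
    { split($2, a, "@") }
    a[1] == w {
      for (i = 9; i <= NF; i++) {
        if (substr($i, 1, 1) == "[") continue
        if (split($i, r, "-") == 2) n += r[2] - r[1] + 1
        else n++
      }
    }
    END { print n + 0 }')
  if [ -z "$from" ] || [ -z "$to" ] || [ "$count" -eq 0 ]; then
    echo "nothing to move (unknown node or source owns no slots)" >&2
    return 1
  fi
  printf 'redis-cli --cluster reshard %s --cluster-from %s --cluster-to %s --cluster-slots %s --cluster-yes\n' \
    "$seed" "$from" "$to" "$count"
}
```

Usage: `redis-cli -h 192.168.101.191 -p 6380 cluster nodes | drain_cmd 192.168.101.191:6379 192.168.101.197:6379 192.168.101.195:6379`. Printing rather than executing keeps a human check in front of a data-moving operation.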

Check the cluster node information

[root@hhht-1 ~]# redis-cli -c -h 192.168.101.191 -p 6380

192.168.101.191:6380> cluster nodes

After the migration, 192.168.101.197:6379 no longer owns any slots, and the slot count of 192.168.101.195:6379 has grown accordingly.

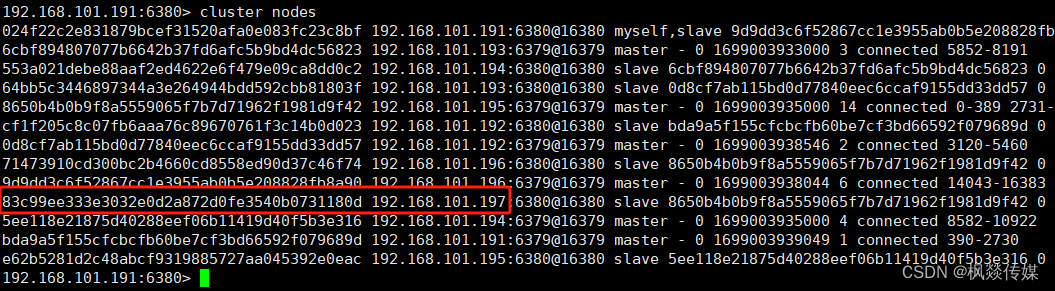

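Delete the node from the cluster

# Run the following command to delete the node

# -a password authentication (omit this parameter if no password is set)

# --cluster del-node <any live node IP>:<port> <ID of the node to delete>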

[root@hhht-2 ~]# redis-cli --cluster del-node 192.168.101.191:6379 0a89d5fe1c9cd1381c3d2920534e21e55b06e575

>>> Removing node 0a89d5fe1c9cd1381c3d2920534e21e55b06e575 from cluster 192.168.101.191:6379

>>> Sending CLUSTER FORGET messages to the cluster...

>>> Sending CLUSTER RESET SOFT to the deleted node.

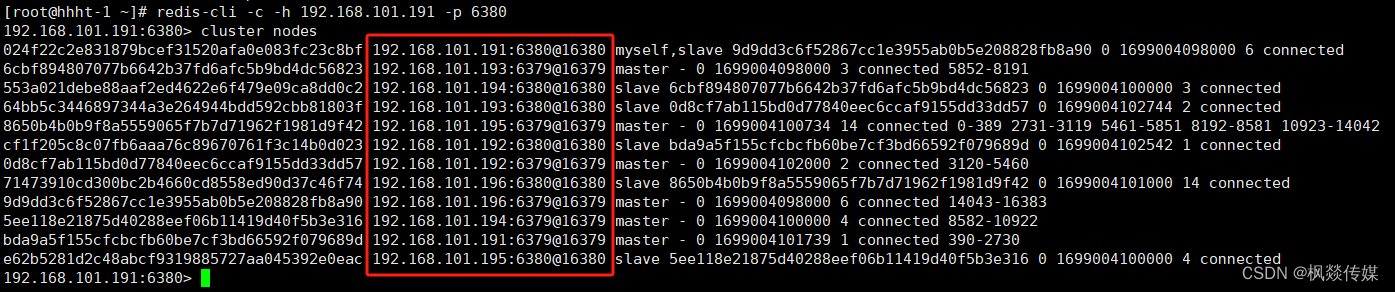

11.4 Removing a replica node

Look up the replica's node ID

[root@hhht-1 ~]# redis-cli -c -h 192.168.101.191 -p 6380

192.168.101.191:6380> cluster nodes

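Removing a replica is simpler: it owns no slots, so it can be deleted directly.

# -a password authentication (omit this parameter if no password is set)

# --cluster del-node <any live node IP>:<port> <ID of the node to delete>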

[root@hhht-2 ~]# redis-cli --cluster del-node 192.168.101.191:6379 83c99ee333e3032e0d2a872d0fe3540b0731180d

>>> Removing node 83c99ee333e3032e0d2a872d0fe3540b0731180d from cluster 192.168.101.191:6379

>>> Sending CLUSTER FORGET messages to the cluster...

>>> Sending CLUSTER RESET SOFT to the deleted node.
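Because del-node takes a node ID rather than an address, resolving the ID from the address avoids deleting the wrong node. In this walkthrough, ID 83c99ee333e3032e0d2a872d0fe3540b0731180d belongs to 192.168.101.197:6380.

This is a sketch with a hypothetical helper, `del_node_cmd`. It assumes the standard CLUSTER NODES format and prints the command instead of running it.

```sh
# Print the del-node command for the node at <target ip:port>.
# Arguments: <seed ip:port> <target ip:port>; reads CLUSTER NODES output on stdin.
del_node_cmd() {
  local seed="$1" target="$2" id
  id=$(awk -v w="$target" '{ split($2, a, "@") } a[1] == w { print $1; exit }')
  if [ -z "$id" ]; then
    echo "node $target not found in CLUSTER NODES output" >&2
    return 1
  fi
  printf 'redis-cli --cluster del-node %s %s\n' "$seed" "$id"
}
```

Usage: `redis-cli -h 192.168.101.191 -p 6380 cluster nodes | del_node_cmd 192.168.101.191:6379 192.168.101.197:6380`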

Check the cluster node information

[root@hhht-1 ~]# redis-cli -c -h 192.168.101.191 -p 6380

192.168.101.191:6380> cluster nodes

After a successful deletion, node 192.168.101.197:6380 (ID 83c99ee333e3032e0d2a872d0fe3540b0731180d) no longer appears in the cluster.

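11.5 Rebalancing slots

Use rebalancing with caution! It redistributes the cluster's slots evenly across the masters.

A rebalance can move a large number of slots. Migrating keys blocks the two instances involved while each batch is transferred, so a large rebalance can noticeably stall client requests.

# -a password authentication (omit this parameter if no password is set)

# --cluster rebalance <any node IP>:<port> redistribute the slots across the cluster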

[root@hhht-2 ~]# redis-cli --cluster rebalance 192.168.101.191:6379

>>> Performing Cluster Check (using node 192.168.101.191:6379)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Rebalancing across 6 nodes. Total weight = 6.00

Moving 391 slots from 192.168.101.195:6379 to 192.168.101.193:6379

#############################################################################

Moving 390 slots from 192.168.101.195:6379 to 192.168.101.191:6379

#############################################################################

Moving 390 slots from 192.168.101.195:6379 to 192.168.101.194:6379

#############################################################################

Moving 390 slots from 192.168.101.195:6379 to 192.168.101.192:6379

#############################################################################

Moving 389 slots from 192.168.101.195:6379 to 192.168.101.196:6379

#############################################################################
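With equal weights, the target of a rebalance is an even split of the 16384 slots. The hypothetical helper `even_split` computes that split. It assumes equal weights and does not reproduce redis-cli's exact choice of which masters receive the leftover slots.

With 6 masters, 16384 = 6 × 2730 + 4, so four masters end up with 2731 slots and two with 2730. To preview the moves before running the real command, redis-cli also accepts `--cluster-simulate` for rebalance.

```sh
# Print the per-master slot targets for an even split of the 16384 hash slots.
# The first (16384 % n) masters get one extra slot.
even_split() {
  local n="$1" base rem i out=""
  base=$(( 16384 / n ))
  rem=$(( 16384 % n ))
  i=0
  while [ "$i" -lt "$n" ]; do
    if [ "$i" -lt "$rem" ]; then
      out="$out $(( base + 1 ))"
    else
      out="$out $base"
    fi
    i=$(( i + 1 ))
  done
  echo "${out# }"   # drop the leading space
}
```

Usage: `even_split 6` prints the six targets for the cluster in this walkthrough.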

Check the cluster node information

# The slots have been redistributed

[root@hhht-1 ~]# redis-cli -c -h 192.168.101.191 -p 6380

192.168.101.191:6380> cluster nodes

12-Redis cluster summary

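1- All reads and writes are handled by masters. When a slave joins the cluster, it synchronizes data from its master. Whichever master or slave a client connects to, the key is hashed to a slot (CRC16(key) mod 16384), and the request is routed to the master that owns that slot. By default, slaves serve neither reads nor writes; they only replicate data.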

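2- Shutting down any master causes some writes to fail. Its slave cannot accept writes, so requests for that master's slots fail until the slave has been promoted to master.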

3- Shutting down a slave does not affect the cluster's availability.

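4- If a master and all of its slaves go down, the cluster enters the fail state and becomes unavailable, because slot coverage is incomplete (with the default cluster-require-full-coverage yes).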

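5- If half or more of the masters go down (for example 3 of 6), the cluster enters the fail state whether or not they have slaves. The surviving masters cannot form the majority (more than half of all masters) needed to mark nodes as failed and authorize a failover.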

6- If any master goes down and that master has no slave, the cluster becomes unavailable (same as 4).

7- All masters take part in failure detection and voting. If more than half of the masters lose contact with a master for longer than cluster-node-timeout, that master is considered down.

8- An election is held only among the slaves of the failed master, not among all slaves in the cluster.

9- When the original master reconnects, it becomes a slave of the new master.

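10- Because master-slave replication is asynchronous, client writes can be lost. The master acknowledges a write before it reaches the slave, so if the master fails before propagating it, the promoted slave does not have it (see the replication principles and AOF/RDB persistence).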

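11- Redis Cluster is not strongly consistent. Because of asynchronous replication, the most recent writes may be lost on failover (cf. the CAP theorem).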

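12- The cluster only performs failover for masters. A failed slave is simply marked offline, with no failover.

Therefore, read/write splitting is generally not used with Redis Cluster. If a slave that serves reads fails, those reads become unavailable, which reduces availability, so avoid read/write splitting inside the cluster.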
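Item 1 says a key is routed by hashing it to one of 16384 slots. The sketch below computes that slot locally, assuming the CRC16 variant Redis Cluster uses (polynomial 0x1021, initial value 0, the XMODEM variant whose check value for "123456789" is 0x31C3). It also applies the hash-tag rule: if the key contains "{...}" with at least one character inside, only that part is hashed.

`crc16` and `key_hash_slot` are hypothetical helper names. The function is slow for long keys, so use it for spot checks and compare with CLUSTER KEYSLOT on a live node.

```sh
# CRC16 (poly 0x1021, init 0) over the bytes of $1; prints a decimal value.
crc16() {
  local crc=0 byte bit
  for byte in $(printf '%s' "$1" | od -A n -v -t u1); do
    crc=$(( crc ^ (byte << 8) ))
    bit=0
    while [ "$bit" -lt 8 ]; do
      if [ $(( crc & 0x8000 )) -ne 0 ]; then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
      bit=$(( bit + 1 ))
    done
  done
  echo "$crc"
}

# Hash slot of key $1: CRC16 mod 16384, hashing only a non-empty {tag} if present.
key_hash_slot() {
  local key="$1" rest tag
  case $key in
    *\{*)
      rest=${key#*\{}            # text after the first "{"
      case $rest in
        *\}*)
          tag=${rest%%\}*}       # text before the first "}" that follows it
          if [ -n "$tag" ]; then key=$tag; fi
          ;;
      esac
      ;;
  esac
  echo $(( $(crc16 "$key") % 16384 ))
}
```

Usage: `key_hash_slot '{user1000}.following'` and `key_hash_slot '{user1000}.followers'` print the same slot, so both keys live on the same master.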

13-Questions

Question 1

Why does a Redis Cluster need at least three master nodes?

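Electing a new master requires the agreement of more than half of the cluster's masters.

With only two masters, if one fails, the single survivor is not a majority, so no new master can be elected.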
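The majority rule can be written as a small worked inequality. Here N is the number of masters, f the number of failed masters, and q(N) the number of master votes a failover needs. The floor(N/2)+1 form is one way to express "more than half" as used above.

$$
q(N) = \left\lfloor \frac{N}{2} \right\rfloor + 1, \qquad \text{failover possible} \iff N - f \ge q(N)
$$

$$
N = 2,\ f = 1:\quad 1 \ge q(2) = 2 \ \text{is false}; \qquad N = 3,\ f = 1:\quad 2 \ge q(3) = 2 \ \text{is true}
$$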

Question 2

Why is an odd number of masters recommended?

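An odd number of masters meets the election condition with one node fewer. Compare clusters with three and four masters: both can elect a new master after losing one master, and neither can after losing two.

So the odd-number recommendation is mainly about saving machines.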

For example:

With 9 masters, if 4 fail, the survivors are still a majority and Redis can elect new masters. If 5 fail, it cannot.

With 10 masters, if 4 fail, Redis can still elect new masters. If 5 fail, it cannot.

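For high availability, 9 masters and 10 masters are equivalent, which is why an odd number is usually used.

Suppose Redis runs short of memory and must scale out. If we grow to 11 masters, new masters can still be elected automatically even if 5 masters fail.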
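The 3/4, 9/10 and 11 cases above can be tabulated with a short script.

It assumes the quorum is floor(N/2)+1 master votes, as described in Question 1. It also assumes each failed master still has a live slave to promote, so only master votes limit failover. `quorum` and `max_master_failures` are hypothetical helper names.

```sh
# Majority quorum of masters needed to authorize a failover: floor(N/2) + 1.
quorum() { echo $(( $1 / 2 + 1 )); }

# Largest number of failed masters after which the survivors still reach quorum.
max_master_failures() { echo $(( $1 - $(quorum "$1") )); }

for n in 3 4 9 10 11; do
  printf '%2d masters: quorum %d, tolerates %d failed master(s)\n' \
    "$n" "$(quorum "$n")" "$(max_master_failures "$n")"
done
```

The loop shows that going from 9 to 10 masters does not raise the tolerated failures (both 4), while 11 masters tolerate 5.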
