Text Mining: Preprocessing and Analysis of Chinese and English Text Data

Chinese Text

Data Overview

First, import the required libraries:

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

from pandas.plotting import scatter_matrix

from sklearn.cluster import KMeans

from sklearn.metrics import silhouette_score

from wordcloud import WordCloud

from sklearn import ensemble

from sklearn.model_selection import train_test_split

import jieba

from nltk.corpus import stopwords

from nltk.tokenize import word_tokenize

from nltk.stem import PorterStemmer

from nltk.stem import WordNetLemmatizer

from nltk.stem import SnowballStemmer

from sklearn.feature_extraction.text import CountVectorizer, HashingVectorizer, TfidfTransformer, TfidfVectorizer

from nltk.tag import pos_tag

from sklearn import model_selection, preprocessing, linear_model, naive_bayes, metrics, svm

from sklearn import decomposition

import random

import re

from nltk.tag import StanfordNERTagger

import os

import nltk

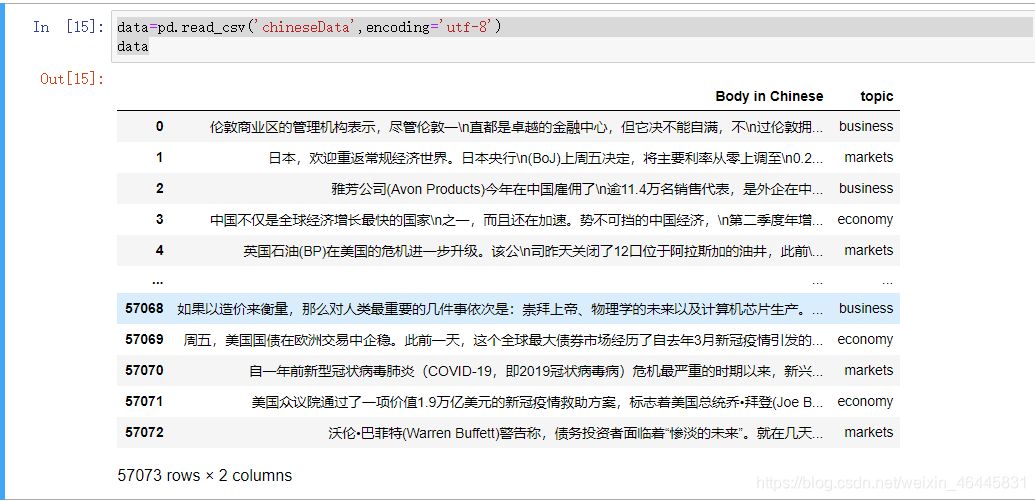

Load and display the data

data=pd.read_csv('chineseData',encoding='utf-8')

data

Data Preprocessing

Remove digits and Latin letters

def find_unchinese(file):

    # `file` is the text string to clean; strip ASCII letters and digits

    pattern = re.compile(r'[a-zA-Z0-9]')

    unchinese = re.sub(pattern,'',file)

    return unchinese

Load the jieba tokenizer (very handy!)

import jieba

s='今天天气好冷,快出太阳'

jieba.lcut(s)

Load the stop-word list

def stopwordslist():

    # Read one stop word per line; `with` closes the file when done

    with open('1.txt',encoding='UTF-8') as f:

        stopwords = [line.strip() for line in f]

    return stopwords

Load the punctuation list

punctuation = r"""!"#$%&'()*+,-./:;<=>?@[\]^_`{|}~“”?,�!【】()、。:;’‘……¥·0123456789–"""

dicts={i:'' for i in punctuation}

punc_table=str.maketrans(dicts)
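The translation-table idea can be tried in isolation. This is a minimal sketch that uses a deliberately tiny, hypothetical punctuation set (SMALL_PUNCT) rather than the full string above. str.maketrans accepts a dict that maps each character to its replacement, and mapping a character to the empty string deletes it.

```python
# Hypothetical, shortened punctuation set for illustration only.
SMALL_PUNCT = ',。!?,.!?'

# Map every punctuation character to the empty string (i.e. delete it).
small_table = str.maketrans({ch: '' for ch in SMALL_PUNCT})

def strip_punct(text):
    """Delete every character listed in SMALL_PUNCT from text."""
    return text.translate(small_table)

result = strip_punct('你好,世界!Hello, world.')
print(result)
```

The three-argument form str.maketrans('', '', SMALL_PUNCT) builds an equivalent deletion table without the intermediate dict.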

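Combined preprocessing routine

This step removes non-Chinese characters, punctuation, and stop words, leaving text that contains only Chinese words, with stop words removed and tokens separated by spaces.

wordnet_lemmatizer = WordNetLemmatizer() # WordNet-based lemmatizer; not used in the Chinese pipeline below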

def Data_regularization(sentence):

    # Tokenize one document after stripping letters, digits, and punctuation

    print("Start tokenizing")

    sentence = find_unchinese(sentence)

    sentence = sentence.translate(punc_table)

    sentence_depart = jieba.lcut(sentence)

    # Load the stop-word list (as a set for fast membership tests)

    stopwords = set(stopwordslist())

    # The result is accumulated in outstr

    outstr = ''

    # Drop stop words, tabs, and newlines

    for word in sentence_depart:

        if word not in stopwords and word not in ('\t', '\n'):

            outstr += word

            outstr += ' '

    return outstr
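The stop-word filtering at the end of Data_regularization can be looked at separately from tokenization. The sketch below assumes the text is already tokenized and that the stop words are held in a set loaded once up front. The token list, the stop-word set, and the name filter_tokens are all made up for illustration.

```python
def filter_tokens(tokens, stop_words):
    """Keep tokens that are not stop words and not pure whitespace."""
    kept = [t for t in tokens if t not in stop_words and t.strip()]
    return ' '.join(kept)

# Hypothetical tokenizer output and stop-word set.
tokens = ['今天', '天气', '的', '\t', '很', '好', '\n']
stop_words = {'的', '很'}

filtered = filter_tokens(tokens, stop_words)
print(filtered)
```

Unlike the function above, this version leaves no trailing space, and building the stop-word set once outside the per-document call avoids re-reading the file for every row.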

Process the text

Select a topic

data2=data.dropna(axis=0, how='any', inplace=False)

data2=data2.reset_index(drop=True)

data2

Some texts have more than one topic, so the random module is used to pick one of them at random as the topic of that text.

for i in range(0, data2.shape[0]):

    s = data2.loc[i,'topic'].split(',')

    if len(s) != 1:

        r = random.randint(0,len(s)-1)

        data2.loc[i,'topic'] = s[r]

    print('Row ' + str(i) + ': topic selected successfully')
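Picking one label at random can also be written with random.choice. In this minimal sketch the helper pick_topic and the sample strings are hypothetical. A seeded random.Random instance is used so that reruns select the same topics, which the loop above does not guarantee. The sketch also strips whitespace around each topic, which the loop above does not do.

```python
import random

def pick_topic(topic_field, rng):
    """Return one topic chosen uniformly from a comma-separated field."""
    topics = [t.strip() for t in topic_field.split(',') if t.strip()]
    return rng.choice(topics)

rng = random.Random(42)  # fixed seed: an assumption, for reproducibility
single = pick_topic('economy', rng)
multi = pick_topic('business,economy,markets', rng)
print(single, multi)
```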

Remove empty values

data2=data2[~data2['Body in Chinese'].isin([0, '0'])]  # drop rows whose body is the placeholder 0 or '0'

data2=data2.reset_index(drop=True)

data2

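Store the data in a new DataFrame so that the following steps can be rerun and revised as many times as needed.

df=data2.copy()  # copy, so edits to df do not modify data2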

df

Normalize the text

##Normalization

for i in range(0, df.shape[0]):

    print(i)

    df.loc[i,'Body in Chinese']=Data_regularization(df.loc[i,'Body in Chinese'])

Save the processed text to a CSV file for later CNN classification training

outputpath='dfchi.csv'

df.to_csv(outputpath,sep=',',index=False,header=False)

l = []  # collect the topic of every row, then list the distinct values

for i in range(0, df.shape[0]):

    s = df.loc[i,'topic']

    l.append(s)

np.unique(l)

df1 = df[df['topic'].isin(['book'])].reset_index(drop=True)

df1.to_csv('book.csv',sep=',',index=False,header=True)

df2 = df[df['topic'].isin(['business'])].reset_index(drop=True)

df2.to_csv('business.csv',sep=',',index=False,header=True)

df3 = df[df['topic'].isin(['culture'])].reset_index(drop=True)

df3.to_csv('culture.csv',sep=',',index=False,header=True)

df4 = df[df['topic'].isin(['economy'])].reset_index(drop=True)

df4.to_csv('economy.csv',sep=',',index=False,header=True)

df5 = df[df['topic'].isin(['lifestyle'])].reset_index(drop=True)

df5.to_csv('lifestyle.csv',sep=',',index=False,header=True)

df6 = df[df['topic'].isin(['management'])].reset_index(drop=True)

df6.to_csv('management.csv',sep=',',index=False,header=True)

df7 = df[df['topic'].isin(['markets'])].reset_index(drop=True)

df7.to_csv('markets.csv',sep=',',index=False,header=True)

df8 = df[df['topic'].isin(['people'])].reset_index(drop=True)

df8.to_csv('people.csv',sep=',',index=False,header=True)

df9 = df[df['topic'].isin(['politics'])].reset_index(drop=True)

df9.to_csv('politics.csv',sep=',',index=False,header=True)

df10 = df[df['topic'].isin(['society'])].reset_index(drop=True)

df10.to_csv('society.csv',sep=',',index=False,header=True)

English Text

Data preprocessing (normalization)

Import the data

data=pd.read_csv('classification_data3.csv',encoding='utf-8')

data

Remove Chinese characters

# Remove Chinese characters (CJK range U+4E00 to U+9FA5)

def find_unchinese(file):

    pattern = re.compile(r'[\u4e00-\u9fa5]')

    unchinese = re.sub(pattern,'',file)

    return unchinese
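To see what this range does and does not cover, here is a small sketch on a made-up string. The range U+4E00 to U+9FA5 matches common CJK ideographs but not full-width punctuation such as ",", so punctuation has to be handled by a separate step. The helper name remove_cjk and the sample are illustrative.

```python
import re

CJK = re.compile(r'[\u4e00-\u9fa5]')  # same range as find_unchinese

def remove_cjk(text):
    """Delete characters in the CJK range U+4E00..U+9FA5."""
    return CJK.sub('', text)

mixed = 'Stock 股市 rallies,investors 投资者 cheer'  # hypothetical sample
stripped = remove_cjk(mixed)
print(stripped)
```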

Extract nouns

Only nouns are kept, because nouns tend to give better classification results.

def Extract_Noun(file):

    outstr = ''

    sentence_depart = word_tokenize(file.strip())

    # Tag the whole token list at once so the tagger can use sentence context

    for word, tag in pos_tag(sentence_depart):

        # Only extract nouns

        if tag in ('NN', 'NNS', 'NNP', 'NNPS'):

            outstr += word

            outstr += ' '

    return outstr
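The noun test compares each token's tag against four Penn Treebank noun tags. The sketch below shows that filter on a hand-written list of (word, tag) pairs shaped like the output of pos_tag. The tags here were typed in by hand for illustration and are not real tagger output, and keep_nouns is a hypothetical helper name.

```python
def keep_nouns(tagged_tokens):
    """Return the words whose Penn Treebank tag is NN, NNS, NNP or NNPS."""
    noun_tags = {'NN', 'NNS', 'NNP', 'NNPS'}
    return [word for word, tag in tagged_tokens if tag in noun_tags]

# Hand-written example in the (word, tag) format that pos_tag returns.
tagged = [('The', 'DT'), ('markets', 'NNS'), ('rallied', 'VBD'),
          ('in', 'IN'), ('London', 'NNP'), ('yesterday', 'NN')]

nouns = keep_nouns(tagged)
print(' '.join(nouns))
```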

Remove stop words

There are two options: a stop-word list downloaded from the internet, or the stopwords corpus that ships with NLTK.

def stopwordslist():

    with open('stopWord.txt',encoding='UTF-8') as f:

        stopwords = [line.strip() for line in f]

    return stopwords

stop_words = set(stopwords.words('english'))

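Stemming and lemmatization

Stemming is more aggressive and often leaves incomplete words, so the author chose lemmatization, which, for example, reduces "dogs" to "dog".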
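The difference can be illustrated with a toy example. The sketch below is deliberately simplistic: toy_stem is not the Porter or Snowball algorithm, and toy_lemmatize is a three-entry lookup table rather than WordNet. Both names and the suffix list are made up. It only shows why blind suffix stripping can leave fragments while a dictionary-based approach returns real words.

```python
# Toy illustration only: NOT Porter/Snowball and NOT WordNet.
SUFFIXES = ('ies', 'es', 'ing', 'ed', 's')  # hypothetical, tiny suffix list

def toy_stem(word):
    """Chop the first matching suffix without checking the result is a word."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            return word[:-len(suffix)]
    return word

# Hypothetical lookup table standing in for a real lemma dictionary.
TOY_LEMMAS = {'dogs': 'dog', 'studies': 'study', 'running': 'run'}

def toy_lemmatize(word):
    """Return the dictionary form if known, otherwise the word unchanged."""
    return TOY_LEMMAS.get(word, word)

for w in ['dogs', 'studies', 'running']:
    print(w, toy_stem(w), toy_lemmatize(w))
```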

wordnet_lemmatizer = WordNetLemmatizer()

#snowball_stemmer = SnowballStemmer('english')  # alternative: Snowball stemming algorithm

Putting the methods together

Lemmatization is applied in turn for nouns, verbs, adjectives, and adverbs.

def Data_regularization(sentence):

    # Keep nouns, strip Chinese characters and punctuation, then tokenize

    print("Start tokenizing")

    sentence = Extract_Noun(sentence)

    sentence = find_unchinese(sentence)

    # punc_table is the translation table built in the Chinese section

    sentence = sentence.translate(punc_table)

    sentence_depart = word_tokenize(sentence.strip())

    # Alternative: use the downloaded stop-word list

    #stopwords = stopwordslist()

    # The result is accumulated in outstr

    outstr = ''

    for word in sentence_depart:

        # Lowercase first: the NLTK stop-word list is all lowercase

        word = word.lower()

        # Drop stop words, tabs, and newlines

        if word not in stop_words and word not in ('\t', '\n'):

            # snowball_stemmer.stem(word) would stem instead of lemmatize

            word1 = wordnet_lemmatizer.lemmatize(word, pos = 'n')

            word2 = wordnet_lemmatizer.lemmatize(word1, pos = 'v')

            word3 = wordnet_lemmatizer.lemmatize(word2, pos = 'a')

            word4 = wordnet_lemmatizer.lemmatize(word3, pos = 'r')

            outstr += word4

            outstr += ' '

    return outstr
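One detail in this kind of pipeline is the order of lowercasing and stop-word removal. NLTK's English stop-word list is all lowercase, so a capitalized token such as "The" only matches after it has been lowercased. The sketch below uses a tiny hand-written stop-word set as a stand-in for the NLTK list. The set, the tokens, and the helper name are assumptions for illustration.

```python
# Tiny stand-in for stopwords.words('english'), which is all lowercase.
STOP_WORDS = {'the', 'is', 'a', 'of'}

def remove_stop_words(tokens, lowercase_first):
    """Filter stop words, optionally lowercasing before the lookup."""
    out = []
    for tok in tokens:
        key = tok.lower() if lowercase_first else tok
        if key not in STOP_WORDS:
            out.append(tok.lower())
    return out

tokens = ['The', 'price', 'of', 'Oil', 'is', 'rising']
late = remove_stop_words(tokens, lowercase_first=False)
early = remove_stop_words(tokens, lowercase_first=True)
print(late)
print(early)
```

With the lookup done on the original casing, "The" slips through and ends up in the output as "the". Lowercasing before the lookup removes it.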

Remove missing and duplicate values

data=data.drop_duplicates(subset='title in English',keep='first')

data=data.drop_duplicates(subset='body in English',keep='first')

data2=data.dropna(axis=0, how='any', inplace=False)

nandata=data2.isnull().sum().to_frame('Number of NaN') ## Number of missing (NaN) values

nandata

data2=data2.reset_index(drop=True)

data2

Randomly select a topic

Some texts have more than one topic, so the random module is used to pick one of them at random as the topic of that text.

for i in range(0, data2.shape[0]):

    s = data2.loc[i,'topic'].split(',')

    if len(s) != 1:

        r = random.randint(0,len(s)-1)

        data2.loc[i,'topic'] = s[r]

    print('Row ' + str(i) + ': topic selected successfully')

Select the columns that are needed

df=data2[['body in English','topic']].copy()  # copy to avoid pandas' SettingWithCopyWarning when df is modified below

df

Run the processing

##Normalization

for i in range(0, df.shape[0]):

    df.loc[i,'body in English']=Data_regularization(df.loc[i,'body in English'])

Export the data

outputpath='df.csv'

df.to_csv(outputpath,sep=',',index=False,header=False)

df1 = df[df['topic'].isin(['book'])].reset_index(drop=True)

df1.to_csv('book.csv',sep=',',index=False,header=True)

df2 = df[df['topic'].isin(['business'])].reset_index(drop=True)

df2.to_csv('business.csv',sep=',',index=False,header=True)

df3 = df[df['topic'].isin(['culture'])].reset_index(drop=True)

df3.to_csv('culture.csv',sep=',',index=False,header=True)

df4 = df[df['topic'].isin(['economy'])].reset_index(drop=True)

df4.to_csv('economy.csv',sep=',',index=False,header=True)

df5 = df[df['topic'].isin(['lifestyle'])].reset_index(drop=True)

df5.to_csv('lifestyle.csv',sep=',',index=False,header=True)

df6 = df[df['topic'].isin(['management'])].reset_index(drop=True)

df6.to_csv('management.csv',sep=',',index=False,header=True)

df7 = df[df['topic'].isin(['markets'])].reset_index(drop=True)

df7.to_csv('markets.csv',sep=',',index=False,header=True)

df8 = df[df['topic'].isin(['people'])].reset_index(drop=True)

df8.to_csv('people.csv',sep=',',index=False,header=True)

df9 = df[df['topic'].isin(['politics'])].reset_index(drop=True)

df9.to_csv('politics.csv',sep=',',index=False,header=True)

df10 = df[df['topic'].isin(['society'])].reset_index(drop=True)

df10.to_csv('society.csv',sep=',',index=False,header=True)

Create and load the corpus

corpus = []

for i in range(0, df.shape[0]):

    corpus.append(df.loc[i,'body in English'])

    print('Text ' + str(i) + ' was successfully added to the corpus')

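Text analysis

TF-IDF

I. Preprocess the text with TF-IDF, turning it into a vector representation

1. TfidfVectorizer converts raw text into a TF-IDF feature matrix, laying the groundwork for downstream applications such as text similarity computation, topic models (e.g. LSI), and text search ranking. The code below first builds a term-count matrix with CountVectorizer; the TF-IDF weighting is applied further down with TfidfTransformer.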

from sklearn.feature_extraction.text import CountVectorizer

vectorizer=CountVectorizer()

X=vectorizer.fit_transform(corpus)

print(X)  # reuse the matrix computed above instead of fitting a second time

X.toarray()

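If the corpus is too large, this step can run out of memory, so the hashing trick can be used to reduce the dimensionality.

print(vectorizer.get_feature_names_out())  # get_feature_names() was removed in scikit-learn 1.2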

Hash Trick

from sklearn.feature_extraction.text import HashingVectorizer

vectorizer2=HashingVectorizer(n_features = 11,norm =None)

X2=vectorizer2.fit_transform(corpus)

X2

X2.toarray()
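The idea behind the hashing trick is that each token is mapped to a column by a hash function, so no vocabulary has to be stored and the number of columns is fixed in advance. The following is a minimal pure-Python sketch of that idea, not scikit-learn's implementation: HashingVectorizer uses MurmurHash3 and, by default, alternating signs, whereas this sketch uses MD5 from hashlib and plain unsigned counts. The default n_features=11 mirrors the value above, and the sample documents are made up.

```python
import hashlib

def hashed_counts(text, n_features=11):
    """Count tokens into a fixed-length vector indexed by a stable hash."""
    vec = [0] * n_features
    for token in text.lower().split():
        digest = hashlib.md5(token.encode('utf-8')).hexdigest()
        # Reduce the hash to a column index in [0, n_features).
        vec[int(digest, 16) % n_features] += 1
    return vec

docs = ['market price rise', 'price of oil and price of gold']  # made up
vectors = [hashed_counts(d) for d in docs]
for v in vectors:
    print(v)
```

Different tokens can land in the same column (a collision), and with as few as 11 columns this is likely on any real corpus, so a much larger n_features is the usual trade-off between memory and accuracy.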

from sklearn.feature_extraction.text import TfidfTransformer

transformer = TfidfTransformer()

tfidf = transformer.fit_transform(X2)

print(tfidf)

tfidf.toarray()
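The weighting that TfidfTransformer applies with its default settings can be written out by hand: raw term counts are multiplied by a smoothed inverse document frequency, idf(t) = ln((1 + n) / (1 + df(t))) + 1, and each row is then scaled to unit L2 norm. The sketch below is a pure-Python illustration under simplifying assumptions: tokens are split on whitespace (scikit-learn's default tokenizer behaves differently), the corpus is made up, and tfidf_rows is a hypothetical helper name.

```python
import math
from collections import Counter

def tfidf_rows(docs):
    """Return (vocabulary, rows) of smoothed, L2-normalized TF-IDF weights."""
    tokenized = [d.lower().split() for d in docs]
    vocab = sorted({t for doc in tokenized for t in doc})
    n_docs = len(docs)
    # Document frequency: in how many documents each term occurs.
    df = Counter(t for doc in tokenized for t in set(doc))
    idf = {t: math.log((1 + n_docs) / (1 + df[t])) + 1 for t in vocab}
    rows = []
    for doc in tokenized:
        counts = Counter(doc)
        raw = [counts[t] * idf[t] for t in vocab]
        norm = math.sqrt(sum(w * w for w in raw)) or 1.0
        rows.append([w / norm for w in raw])
    return vocab, rows

docs = ['oil price rise', 'oil price fall', 'gold market']  # made-up corpus
vocab, rows = tfidf_rows(docs)
print(vocab)
for row in rows:
    print([round(w, 3) for w in row])
```

In the first document, "rise" receives a higher weight than "oil" or "price" because it appears in only one document, which is the behaviour TF-IDF is meant to produce.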
