
1. Environment

Deployment environment:

| Hostname | IP address | Node role | OS |
|---|---|---|---|
| cka01 | 192.168.0.180 | master, etcd | CentOS 7 |
| cka02 | 192.168.0.41 | worker | CentOS 7 |
| cka03 | 192.168.0.241 | worker | CentOS 7 |

Component versions:

| Component | Version | Description |
|---|---|---|
| kubernetes | 1.20.9 | Core platform |
| docker | 19.03.15 | Container runtime |
| calico | 3.18.1 | Network plugin |
| etcd | 3.4.13 | Key-value store |
| coredns | 1.7.0 | DNS component |
| kubernetes-dashboard | v2.2.0 | Web UI |

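2. Cluster installation

The installation has three parts:

- Prepare the base environment (on every node):
  - Set the hostname
  - Add entries to /etc/hosts
  - Flush the firewall rules
  - Disable SELinux
  - Configure kernel parameters
  - Load the ip_vs kernel modules
  - Install Docker
  - Install kubelet, kubectl, and kubeadm
- Configure the master node (on the master only):
  - Generate kubeadm-config.yaml
  - Edit kubeadm-config.yaml
  - Deploy the master from the edited kubeadm-config.yaml
  - Install the network plugin
- Configure the worker nodes (on the workers only):
  - Join each node to the cluster as a worker

Base environment preparation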

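# Set the hostname:
# (shown for one node; use cka02 and cka03 on the other nodes)
hostnamectl set-hostname cka01 --static

# Add the cluster nodes to /etc/hosts:
echo "192.168.0.180 cka01" >> /etc/hosts
echo "192.168.0.41 cka02" >> /etc/hosts
echo "192.168.0.241 cka03" >> /etc/hosts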
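Appending to /etc/hosts with echo adds a duplicate line each time the step is re-run. A minimal sketch of an idempotent alternative follows; the helper name add_host_entry is hypothetical and not part of any tool used in this guide.

```shell
# add_host_entry FILE IP NAME
# Append "IP NAME" to FILE unless NAME already appears as a host name in it.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # Match NAME only as a whole word preceded by whitespace.
  if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Example (run as root on each node):
#   add_host_entry /etc/hosts 192.168.0.180 cka01
#   add_host_entry /etc/hosts 192.168.0.41  cka02
#   add_host_entry /etc/hosts 192.168.0.241 cka03
```

Running the helper twice with the same arguments leaves a single entry in the file.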

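# Flush the firewall rules and disable SELinux:
iptables -F
setenforce 0
# Replace the whole SELINUX= line; substituting only "SELINUX=" would yield "SELINUX=disabledenforcing"
sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

# Configure the yum repositories:
wget -O /etc/yum.repos.d/CentOS-Base.repo https://repo.huaweicloud.com/repository/conf/CentOS-7-reg.repo
yum install -y epel-release
sed -i "s/#baseurl/baseurl/g" /etc/yum.repos.d/epel.repo
sed -i "s/metalink/#metalink/g" /etc/yum.repos.d/epel.repo
sed -i "s@https\?://download.fedoraproject.org/pub@https://repo.huaweicloud.com@g" /etc/yum.repos.d/epel.repo

# Set the kernel parameters:
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.ipv4.ip_forward = 1
vm.swappiness = 0
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl -p /etc/sysctl.d/k8s.conf

If sysctl reports "sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory", the br_netfilter module is not loaded yet. The error can be ignored at this point; the next step loads the module, after which sysctl -p /etc/sysctl.d/k8s.conf can be run again so that the bridge settings take effect.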

# Load the kernel modules:
cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- br_netfilter
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
chmod 755 /etc/sysconfig/modules/ipvs.modules && \
bash /etc/sysconfig/modules/ipvs.modules && \
lsmod | grep -E "ip_vs|nf_conntrack_ipv4"

# Install Docker:
yum install -y yum-utils device-mapper-persistent-data lvm2
wget -O /etc/yum.repos.d/docker-ce.repo https://repo.huaweicloud.com/docker-ce/linux/centos/docker-ce.repo
sed -i 's+download.docker.com+repo.huaweicloud.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo
yum install -y docker-ce-19.03.15
# -p keeps the command from failing when the directory already exists
mkdir -p /etc/docker
cat > /etc/docker/daemon.json << EOF
{
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m",
    "max-file": "10"
  },
  "live-restore": true,
  "registry-mirrors": ["https://pqbap4ya.mirror.aliyuncs.com"]
}
EOF
systemctl restart docker
systemctl enable docker

# Install kubeadm, kubelet, and kubectl:
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
yum install -y kubelet-1.20.9 kubeadm-1.20.9 kubectl-1.20.9
systemctl enable kubelet && systemctl start kubelet

Configure the master node

Generate a default kubeadm-config.yaml with the following command:

kubeadm config print init-defaults > kubeadm-config.yaml

Then edit kubeadm-config.yaml so that it reads as follows:

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.0.180
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: cka01
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.20.9
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
scheduler: {}

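Initialize the master node:

kubeadm init --config kubeadm-config.yaml

Configure access to the cluster:

mkdir -p $HOME/.kube
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
# Owner is user:group, so the second field uses id -g rather than id -u
chown $(id -u):$(id -g) $HOME/.kube/config

Once the deployment finishes, two problems show up:

- The master node stays NotReady
- The coredns pods stay Pending

Both are caused by the network plugin not being installed yet, so install it next.

Install the Calico network plugin:

curl https://docs.projectcalico.org/manifests/calico.yaml -O
kubectl apply -f calico.yaml

Configure the worker nodes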

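When the master is deployed successfully, kubeadm init prints output similar to the following on the master node:

kubeadm join 192.168.0.180:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:cad3fa778559b724dff47bb1ad427bd39d97dd76e934b9467507a2eb990a50c7

Copy this command and run it on each worker node to add the node to the cluster.

Note that the token in this command is valid for only 24 hours. Once it has expired, run kubeadm token create --print-join-command on the master to generate a new join command.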

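3. Installing add-ons

After the cluster is installed, a few add-ons are still needed before it goes into production.

- Install the add-ons (on the master node only):
  - Install helm
  - Install ingress
  - Install dashboard
  - Install metrics-server

Install helm

helm GitHub repository: https://github.com/helm/helm

Installation:

wget https://breezey-public.oss-cn-zhangjiakou.aliyuncs.com/softwares/linux/helm-v3.0.3-linux-amd64.tar.gz
# Unpack the archive and put the helm binary on the PATH
tar -zxf helm-v3.0.3-linux-amd64.tar.gz
mv linux-amd64/helm /usr/local/bin/helm
# Add the official stable chart repository
# (its original address, https://kubernetes-charts.storage.googleapis.com/, has since been retired in favor of this one)
helm repo add stable https://charts.helm.sh/stable

Install ingress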

ingress GitHub repository: github.com/kubernetes/ingress-nginx

Deployment:

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/provider/baremetal/deploy.yaml

# Edit deploy.yaml as follows
...
image: registry.cn-zhangjiakou.aliyuncs.com/breezey/ingress-nginx:v0.41.2
...
ports:
  - name: http
    containerPort: 80
    protocol: TCP
    hostPort: 80
  - name: https
    containerPort: 443
    protocol: TCP
    hostPort: 443
  - name: webhook
    containerPort: 8443
    protocol: TCP
...

# Apply the edited manifest
kubectl apply -f deploy.yaml

Install metrics-server

metrics-server exposes cluster resource metrics to kube-apiserver from inside the cluster.

Source repository: https://github.com/kubernetes-sigs/metrics-server

Manifest download: https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.4.1/components.yaml

# Download the manifest:
wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.4.1/components.yaml

# Edit the metrics-server Deployment in components.yaml:
# 1. Change the image address; the default image comes from k8s.gcr.io
image: registry.cn-zhangjiakou.aliyuncs.com/breezey/metrics-server:v0.4.1
# 2. Change the metrics-server startup arguments
containers:
- args:
  - --cert-dir=/tmp
  - --secure-port=4443
  - --kubelet-insecure-tls
  - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
  - --kubelet-use-node-status-port

# Deploy:
kubectl apply -f components.yaml

# Verify:
[root@cka-master metrics-server]# kubectl top nodes
NAME           CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
192.168.0.93   169m         8%     1467Mi          39%
cka-node1      138m         6%     899Mi           24%

Install dashboard

dashboard GitHub repository: https://github.com/kubernetes/dashboard

# The repository ships a sample deployment manifest that can be downloaded and applied directly:
wget https://raw.githubusercontent.com/kubernetes/dashboard/master/aio/deploy/recommended.yaml
kubectl apply -f ./recommended.yaml

# By default this manifest creates a dedicated namespace named kubernetes-dashboard and deploys kubernetes-dashboard into it. The dashboard image is hosted on Docker Hub, so the image address does not need to be changed.

# By default, however, dashboard does not expose a port outside the cluster. To keep things simple, expose it with a NodePort by editing the Service definition in recommended.yaml:

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 32443
  type: NodePort
  selector:
    k8s-app: kubernetes-dashboard

# Recreate the Service:
kubectl delete svc kubernetes-dashboard -n kubernetes-dashboard
kubectl apply -f ./recommended.yaml

# The web UI is now reachable in a browser over HTTPS on port 32443 of any node.

# The login page asks for a kubeconfig file or a token. Installing dashboard also creates a ServiceAccount named kubernetes-dashboard and generates a token for it. The token can be read with the command below; matching on '^token:' keeps the secret's Name and Type lines, which also contain the word "token", out of the output:

kubectl describe secret -n kubernetes-dashboard $(kubectl get secret -n kubernetes-dashboard | grep kubernetes-dashboard-token | awk '{print $1}') | grep '^token:' | awk '{print $2}'

eyJhbGciOiJSUzI1NiIsImtpZCI6IkUtYTBrbU44TlhMUjhYWXU0VDZFV1JlX2NQZ0QxV0dPRjBNUHhCbUNGRzAifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291eeeeeeLWRhc2hib2FyZC10b2tlbi1rbXBuMiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFxxxxxxxxxxxxxxxxxxxxxxxGZmZmYxLWJhOTAtNDU5Ni1hMzgxLTRhNDk5NzMzYWI0YiIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.UwAsemOra-EGl2OzKc3lur8Wtg5adqadulxH7djFpmpWmDj1b8n1YFiX-AwZKSbv_jMZd-mvyyyyyyyyyyyyyyyMYLyVub98kurq0eSWbJdzvzCvBTXwYHl4m0RdQKzx9IwZznzWyk2E5kLYd4QHaydCw7vH26by4cZmsqbRkTsU6_0oJIs0YF0YbCqZKbVhrSVPp2Fw5IyVP-ue27MjaXNCSSNxLX7GNfK1W1E68CrdbX5qqz0-Ma72EclidSxgs17T34p9mnRq1-aQ3ji8bZwvhxuTtCw2DkeU7DbKfzXvJw9ENBB-A0fN4bewP6Og07Q
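A ServiceAccount token is a JWT, so its middle segment can be decoded to check which account it belongs to before pasting it into the login page. The sketch below assumes GNU coreutils (base64 -d); the helper name jwt_payload is hypothetical.

```shell
# jwt_payload TOKEN
# Print the decoded JSON payload (second dot-separated segment) of a JWT.
jwt_payload() {
  local seg
  # base64url uses '-' and '_' where standard base64 uses '+' and '/'.
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the trailing '=' padding; restore it before decoding.
  while [ $(( ${#seg} % 4 )) -ne 0 ]; do
    seg="${seg}="
  done
  printf '%s' "$seg" | base64 -d
}

# Example, with TOKEN holding the value printed by the command above:
#   jwt_payload "$TOKEN"
# For the dashboard account, the "sub" claim in the output should read
# system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard
```

The signature segment is ignored here; the helper only inspects the claims and does not verify the token.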

# After logging in with this token, many namespaces and other resources cannot be viewed, because the account has only limited permissions by default. Granting the ServiceAccount the cluster-admin role resolves this:

# Change the ClusterRoleBinding in recommended.yaml as follows:

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

# Recreate the ClusterRoleBinding:
kubectl delete clusterrolebinding kubernetes-dashboard -n kubernetes-dashboard
kubectl apply -f ./recommended.yaml

# The kubernetes-dashboard configuration is now complete.

4. Resetting the cluster

If problems come up during installation, the cluster can be reset and rebuilt from scratch.

# Reset the cluster
kubeadm reset
# Stop kubelet
systemctl stop kubelet
# Remove the containers that were deployed
docker ps -aq | xargs docker rm -f
# Clean up all related directories
rm -rf /etc/kubernetes /var/lib/kubelet /var/lib/etcd /var/lib/cni/

5. Upgrading the cluster

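Notes

- kubeadm cannot skip minor versions when upgrading; for example, going from 1.20 to 1.22 requires upgrading to 1.21 first
- Swap must be disabled
- Back up your data. kubeadm upgrade does not touch workloads and only updates the Kubernetes components, but a backup is best practice in any case.
- After a node is upgraded, all containers on it are restarted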
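The minor-version rule can be checked before any packages are touched. The following is a small sketch; the function name can_upgrade is hypothetical, and it only compares version strings, it does not query the cluster.

```shell
# can_upgrade CURRENT TARGET
# Succeed only if TARGET has the same major version as CURRENT and its minor
# version is equal to or exactly one above CURRENT's minor version.
can_upgrade() {
  local cur="${1#v}" tgt="${2#v}"          # accept both 1.20.9 and v1.20.9
  local cur_major cur_minor tgt_major tgt_minor diff
  cur_major=${cur%%.*}
  tgt_major=${tgt%%.*}
  cur_minor=${cur#*.}; cur_minor=${cur_minor%%.*}
  tgt_minor=${tgt#*.}; tgt_minor=${tgt_minor%%.*}
  [ "$cur_major" -eq "$tgt_major" ] || return 1
  diff=$((tgt_minor - cur_minor))
  [ "$diff" -ge 0 ] && [ "$diff" -le 1 ]
}

# Example:
#   can_upgrade 1.20.9 1.21.0 && echo "ok to upgrade"
#   can_upgrade 1.20.9 1.22.0 || echo "upgrade to 1.21 first"
```

Downgrades are rejected as well, since the minor-version difference must not be negative.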

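Performing the upgrade

Upgrade the master node

Check the available kubeadm versions

# ubuntu
apt update
apt-cache madison kubeadm
# centos
yum list --showduplicates kubeadm --disableexcludes=kubernetes

# Upgrade the kubeadm package
# ubuntu
apt update
apt-get install -y kubeadm=1.21.0-00
# centos
yum update -y kubeadm-1.21.0-0

# Drain the node to be upgraded:
# (the master node is used as the example here; drain takes the node name)
kubectl drain cka01 --ignore-daemonsets

# Create the upgrade plan:
kubeadm upgrade plan

# Apply the upgrade as prompted:
kubeadm upgrade apply v1.21.0

# Mark the node schedulable again:
kubectl uncordon cka01

# With multiple master nodes, upgrade the remaining masters by running:
kubeadm upgrade node

# Upgrade kubelet and kubectl (to the same version as kubeadm)
# ubuntu
apt update
apt-get install -y kubelet=1.21.0-00 kubectl=1.21.0-00
# centos
yum update -y kubelet-1.21.0-0 kubectl-1.21.0-0

# Restart kubelet
systemctl daemon-reload
systemctl restart kubelet

Upgrade the worker nodes

# On the worker node, upgrade kubeadm
yum upgrade kubeadm-1.21.0-0 -y
# On the master node, drain the worker node to be upgraded
# (--ignore-daemonsets is needed because DaemonSet pods such as calico-node run on every node)
kubectl drain cka02 --ignore-daemonsets
# On the worker node, run the upgrade
kubeadm upgrade node
# On the worker node, upgrade kubelet and kubectl
yum upgrade kubelet-1.21.0-0 kubectl-1.21.0-0 -y
# Restart kubelet on the worker node
systemctl daemon-reload
systemctl restart kubelet
# On the master node, make the worker node schedulable again
kubectl uncordon cka02

Appendix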

calico-node fails to become ready on the master

Pod status:

calico-node-hhl5j 0/1 Running 0 3h54m

The error is:

Events:
  Type     Reason     Age                       From     Message
  ----     ------     ----                      ----     -------
  Warning  Unhealthy  4m38s (x1371 over 3h52m)  kubelet  (combined from similar events): Readiness probe failed: 2021-08-07 09:43:25.755 [INFO][1038] confd/health.go 180: Number of node(s) with BGP peering established = 0
calico/node is not ready: BIRD is not ready: BGP not established with 192.168.0.41,192.168.0.241

# Fix:

Adjust how the Calico network plugin detects the node's network interface by setting the value of IP_AUTODETECTION_METHOD.

The official YAML file does not set the IP detection policy (IP_AUTODETECTION_METHOD), so it defaults to first-found. This can register an address from the wrong interface as the node IP and break connectivity between nodes. Switching to the can-reach or interface policy selects the correct address, for example by choosing the address that can reach the IP of a node that is already Ready.

Open calico.yaml, find the CLUSTER_TYPE entry, and add one more variable below it, for example:

# Cluster type to identify the deployment type
- name: CLUSTER_TYPE
  value: "k8s,bgp"
# Entry to add
- name: IP_AUTODETECTION_METHOD
  value: "interface=eth0"
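The interface name in interface=eth0 has to match the interface that actually carries the node IP, which is not always eth0. One way to look it up is to filter the one-line output of ip -o -4 addr show. This is a sketch; the helper name iface_for_ip is hypothetical.

```shell
# iface_for_ip IP
# Read `ip -o -4 addr show` output on stdin and print the name of the
# interface that holds IP. Prints nothing if no interface has that address.
iface_for_ip() {
  awk -v ip="$1" '{
    # Field 2 is the interface name, field 4 is ADDRESS/PREFIX.
    split($4, addr, "/")
    if (addr[1] == ip) print $2
  }'
}

# Example, on the master node of this guide:
#   ip -o -4 addr show | iface_for_ip 192.168.0.180
```

The printed name is the value to use after interface= in the calico.yaml entry.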

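Then re-apply calico.yaml:

kubectl apply -f calico.yaml

6. Prerequisites for KubeSphere

Install the NFS file server

Install nfs-server

Install nfs-utils on every machine:

yum install -y nfs-utils

On the master, run the following commands to declare the export, create the shared directory, start the NFS services, and reload the export table. Running exportfs with no arguments afterwards lists the active exports.

echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports
mkdir -p /nfs/data
systemctl enable rpcbind
systemctl enable nfs-server
systemctl start rpcbind
systemctl start nfs-server
exportfs -r

Configure nfs-client

# Run on the client nodes; replace 192.168.6.102 with the address of your NFS server
showmount -e 192.168.6.102
mkdir -p /nfs/data
mount -t nfs 192.168.6.102:/nfs/data /nfs/data

Configure default storage

## Create a storage class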

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
parameters:
  archiveOnDelete: "true"  ## whether to archive a PV's contents when the PV is deleted
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/nfs-subdir-external-provisioner:v4.0.2
          # resources:
          #   limits:
          #     cpu: 10m
          #   requests:
          #     cpu: 10m
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 192.168.6.102  ## address of your own NFS server
            - name: NFS_PATH
              value: /nfs/data  ## directory exported by the NFS server
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.6.102
            path: /nfs/data
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

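Save the manifests above as sc.yaml and apply them with kubectl apply -f sc.yaml. Afterwards, run kubectl get sc to confirm that the nfs-storage class exists and is marked as the default.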
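Beyond listing the storage class, a quick way to confirm that dynamic provisioning works is to create a small claim that names no storage class and see whether it gets bound. The following is a sketch; the claim name nfs-test-pvc and the 200Mi size are illustrative choices, not values from this guide.

```shell
# Write a test PersistentVolumeClaim. It sets no storageClassName on purpose,
# so the cluster's default storage class is used.
cat > nfs-test-pvc.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-test-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 200Mi
EOF

# Then, on a machine with access to the cluster:
#   kubectl apply -f nfs-test-pvc.yaml
#   kubectl get pvc nfs-test-pvc      # STATUS should change to Bound
#   kubectl delete -f nfs-test-pvc.yaml
```

If the claim stays Pending, kubectl describe pvc nfs-test-pvc and the logs of the nfs-client-provisioner pod are the first places to look.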

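Install metrics-server, the cluster metrics component

Save the manifest below as metrics.yaml and apply it with kubectl apply -f ./metrics.yaml. Once metrics-server is running, kubectl top nodes shows the CPU and memory usage of each node. If metrics-server was already installed from components.yaml earlier in this guide, applying this manifest updates that deployment to the v0.4.3 image.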

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --kubelet-insecure-tls
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/metrics-server:v0.4.3
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          periodSeconds: 10
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100

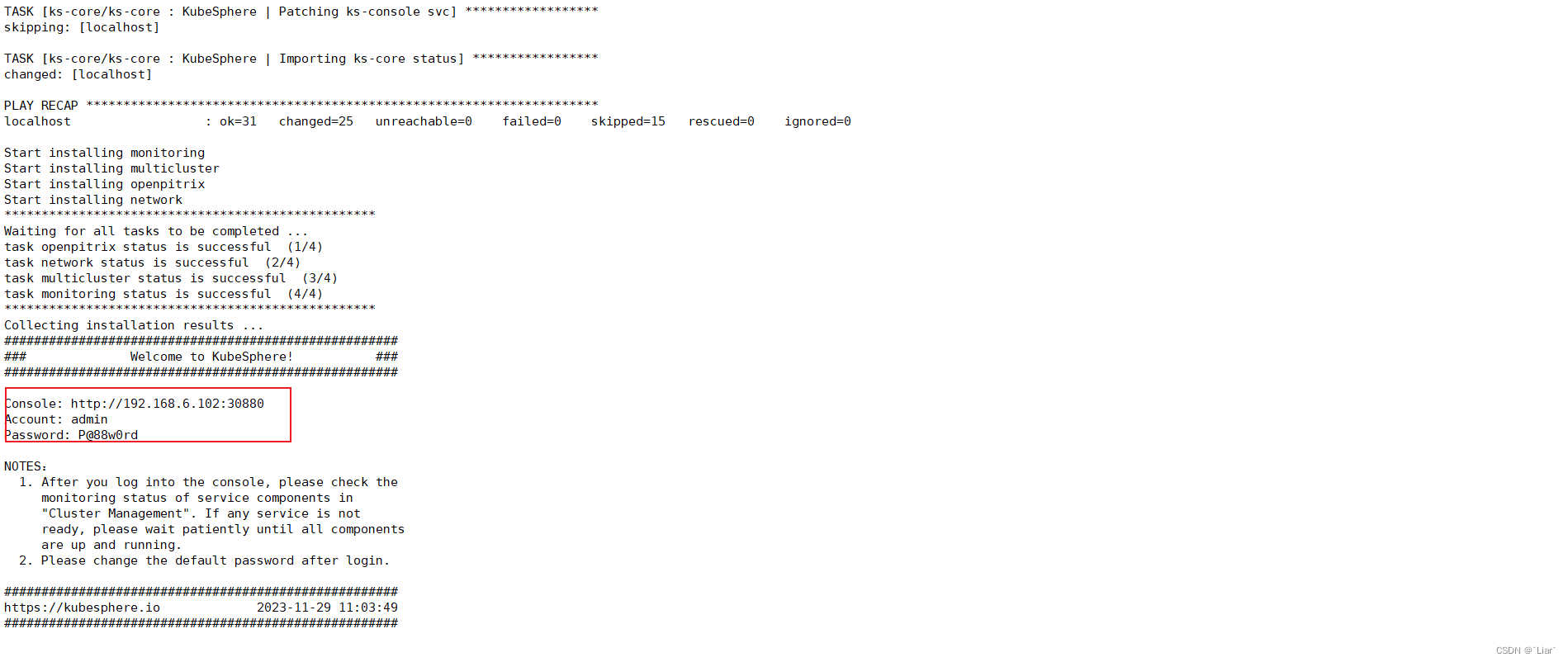

Install KubeSphere

Download the core files

wget https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/kubesphere-installer.yaml
wget https://20.205.243.166/kubesphere/ks-installer/releases/download/v3.1.1/cluster-configuration.yaml --no-check-certificate

Run the installation

kubectl apply -f kubesphere-installer.yaml
kubectl apply -f cluster-configuration.yaml

# Fix the missing etcd client certificate problem
kubectl -n kubesphere-monitoring-system create secret generic kube-etcd-client-certs \
  --from-file=etcd-client-ca.crt=/etc/kubernetes/pki/etcd/ca.crt \
  --from-file=etcd-client.crt=/etc/kubernetes/pki/apiserver-etcd-client.crt \
  --from-file=etcd-client.key=/etc/kubernetes/pki/apiserver-etcd-client.key

Check the installation progress with:

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f
