Formats

STE table

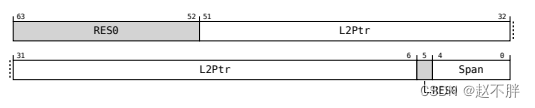

Level 1 Stream Table Descriptor

See "IHI0070F_b_System_Memory_Management_Unit_Architecture_Specification", 5.1 Level 1 Stream Table Descriptor.

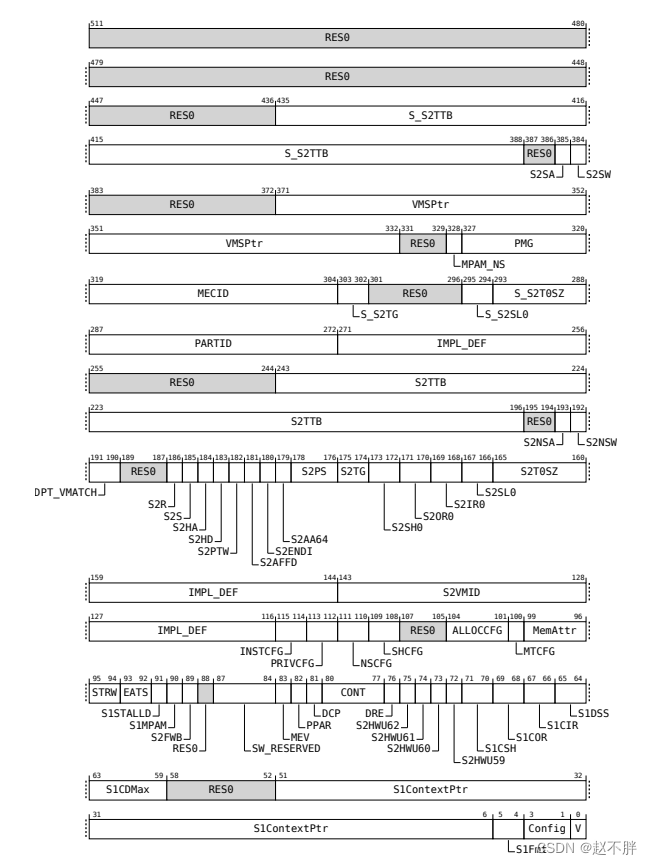

Stream Table Entry

See "IHI0070F_b_System_Memory_Management_Unit_Architecture_Specification", 5.2 Stream Table Entry.

CD table

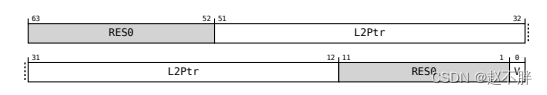

Level 1 Context Descriptor

See "IHI0070F_b_System_Memory_Management_Unit_Architecture_Specification", 5.3 Level 1 Context Descriptor.

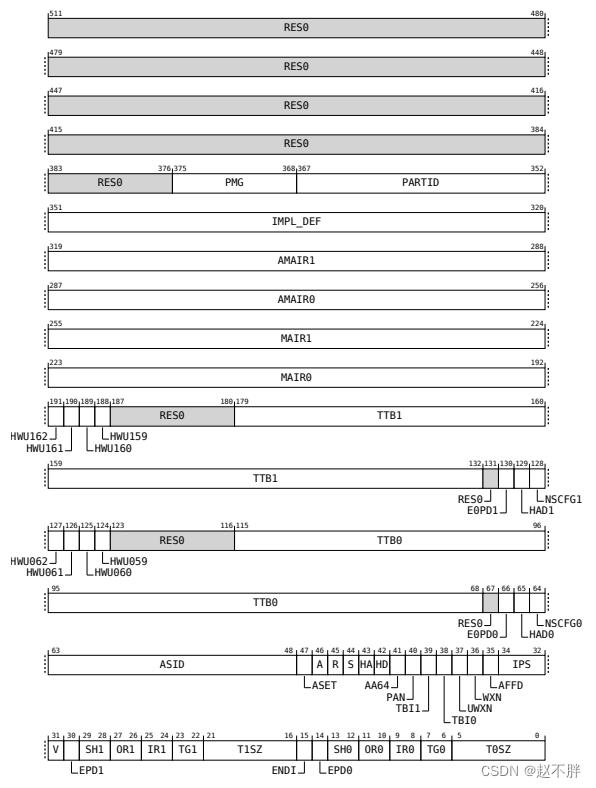

Context Descriptor

See "IHI0070F_b_System_Memory_Management_Unit_Architecture_Specification", 5.4 Context Descriptor.

Data structures

```c
#define STRTAB_L1_SZ_SHIFT		20
#define STRTAB_SPLIT			8

#define STRTAB_L1_DESC_DWORDS		1
#define STRTAB_L1_DESC_SPAN		GENMASK_ULL(4, 0)
#define STRTAB_L1_DESC_L2PTR_MASK	GENMASK_ULL(51, 6)

#define STRTAB_STE_DWORDS		8

/* High-level stream table and context descriptor structures */
struct arm_smmu_strtab_l1_desc {
	u8		span;
	__le64		*l2ptr;
	dma_addr_t	l2ptr_dma;
};
```

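- span: the Span field of the Level 1 Stream Table Descriptor. The driver sets it to STRTAB_SPLIT + 1 (9). Per the spec, the level-2 table then holds 2^(Span-1) = 2^STRTAB_SPLIT = 256 STEs, and a Span of 0 marks the descriptor as invalid.

- l2ptr: kernel virtual address of the level-2 STE table (the array of Stream Table Entries) that this descriptor points to.

- l2ptr_dma: physical (DMA) address of l2ptr. It is used to fill the L2Ptr field of the Level 1 Stream Table Descriptor.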
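The following is a rough userspace sketch of how span and l2ptr_dma could be packed into the 64-bit descriptor. It follows the way arm_smmu_write_strtab_l1_desc uses STRTAB_L1_DESC_SPAN and STRTAB_L1_DESC_L2PTR_MASK, and it also decodes how many STEs the L2 table holds. The GENMASK_ULL stand-in and the helpers l1_desc_encode and l1_desc_num_stes are hypothetical. The 2^(Span-1) rule comes from the Span field definition in the spec.

```c
#include <assert.h>
#include <stdint.h>

/* Userspace stand-in for the kernel's GENMASK_ULL (hypothetical copy). */
#define GENMASK_ULL(h, l) ((~0ULL >> (63 - (h))) & (~0ULL << (l)))

#define STRTAB_SPLIT              8
#define STRTAB_L1_DESC_SPAN       GENMASK_ULL(4, 0)
#define STRTAB_L1_DESC_L2PTR_MASK GENMASK_ULL(51, 6)

/* Hypothetical helper: build the 64-bit L1 descriptor from span and l2ptr_dma. */
static uint64_t l1_desc_encode(uint8_t span, uint64_t l2ptr_dma)
{
	/* Span sits in bits [4:0]; L2Ptr keeps address bits [51:6]. */
	return ((uint64_t)span & STRTAB_L1_DESC_SPAN) |
	       (l2ptr_dma & STRTAB_L1_DESC_L2PTR_MASK);
}

/* Hypothetical helper: STEs in the L2 table, 2^(Span-1); Span == 0 is invalid. */
static unsigned int l1_desc_num_stes(uint64_t desc)
{
	unsigned int span = (unsigned int)(desc & STRTAB_L1_DESC_SPAN);

	return span ? 1u << (span - 1) : 0;
}
```

With span = STRTAB_SPLIT + 1 = 9 and a 64-byte-aligned l2ptr_dma such as 0x80004000, the descriptor is 0x80004009 and decodes to 256 STEs.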

arm_smmu_device.strtab_cfg

```c
struct arm_smmu_strtab_cfg {
	__le64				*strtab;
	dma_addr_t			strtab_dma;
	struct arm_smmu_strtab_l1_desc	*l1_desc;
	unsigned int			num_l1_ents;

	u64				strtab_base;
	u32				strtab_base_cfg;
};
```

- strtab: virtual address of the stream table. In the 2-level format it points to the array of Level 1 Stream Table Descriptors, whose main contents are L2Ptr and Span. See "IHI0070F_b_System_Memory_Management_Unit_Architecture_Specification", Chapter 5 Data structure formats, Level 1 Stream Table Descriptor.

- strtab_dma: physical address of the stream table.

- l1_desc: pointer to the array of struct arm_smmu_strtab_l1_desc. This array is the driver's software copy of each L1 entry.

- num_l1_ents: number of entries in the L1 table.

- strtab_base: stream table base address (derived from strtab_dma). It is written to the ARM_SMMU_STRTAB_BASE register. See "IHI0070F_b_System_Memory_Management_Unit_Architecture_Specification", Chapter 6 Memory map and registers, 6.3.23 SMMU_STRTAB_BASE.

- strtab_base_cfg: stream table configuration. It is written to the ARM_SMMU_STRTAB_BASE_CFG register. See "IHI0070F_b_System_Memory_Management_Unit_Architecture_Specification", Chapter 6 Memory map and registers, 6.3.24 SMMU_STRTAB_BASE_CFG.
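Here is a minimal sketch of how strtab_base might be derived from strtab_dma. It assumes the driver's STRTAB_BASE_ADDR_MASK (bits [51:6]) and STRTAB_BASE_RA (bit 62, the read-allocate hint) definitions. make_strtab_base is a hypothetical helper, not a driver function.

```c
#include <assert.h>
#include <stdint.h>

#define GENMASK_ULL(h, l) ((~0ULL >> (63 - (h))) & (~0ULL << (l)))

/* Assumed to match the driver's SMMU_STRTAB_BASE field definitions. */
#define STRTAB_BASE_RA        (1ULL << 62)
#define STRTAB_BASE_ADDR_MASK GENMASK_ULL(51, 6)

/* Hypothetical helper: SMMU_STRTAB_BASE value for a table at strtab_dma. */
static uint64_t make_strtab_base(uint64_t strtab_dma)
{
	/* Bits outside [51:6] are masked off; RA is always requested. */
	return (strtab_dma & STRTAB_BASE_ADDR_MASK) | STRTAB_BASE_RA;
}
```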

```c
struct arm_smmu_s1_cfg {
	__le64				*cdptr;
	dma_addr_t			cdptr_dma;

	struct arm_smmu_ctx_desc {
		u16	asid;
		u64	ttbr;
		u64	tcr;
		u64	mair;
	} cd;
};

struct arm_smmu_s2_cfg {
	u16				vmid;
	u64				vttbr;
	u64				vtcr;
};
```

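- cdptr: virtual address of the Level 1 Context Descriptor table, or of the Context Descriptor itself.

- cdptr_dma: physical address of cdptr. It is written to the S1ContextPtr field of the corresponding Stream Table Entry.

- asid: written to the ASID field of the corresponding Context Descriptor.

- ttbr: written to the TTB0 field of the corresponding Context Descriptor.

- tcr: supplies the IPS, EPD1, EPD0, SH0, OR0, IR0, TG0 and T0SZ fields of the corresponding Context Descriptor.

- mair: written to the MAIR0 and MAIR1 fields of the corresponding Context Descriptor.

- vmid: written to the S2VMID field of the corresponding Stream Table Entry.

- vttbr: written to the S2TTB field of the corresponding Stream Table Entry.

- vtcr: supplies the S2PS, S2TG, S2SH0, S2OR0, S2IR0, S2SL0 and S2T0SZ fields of the corresponding Stream Table Entry.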

Initialization

This section uses the 2-level stream table as the example. The arm_smmu_init_strtab_2lvl function mainly initializes the L1 stream table (the Level 1 Stream Table Descriptors).

```c
static int arm_smmu_init_strtab_2lvl(struct arm_smmu_device *smmu)
{
	void *strtab;
	u64 reg;
	u32 size, l1size;
	struct arm_smmu_strtab_cfg *cfg = &smmu->strtab_cfg;

	/* Calculate the L1 size, capped to the SIDSIZE. */
	size = STRTAB_L1_SZ_SHIFT - (ilog2(STRTAB_L1_DESC_DWORDS) + 3);
	size = min(size, smmu->sid_bits - STRTAB_SPLIT);
	cfg->num_l1_ents = 1 << size;

	size += STRTAB_SPLIT;
	if (size < smmu->sid_bits)
		dev_warn(smmu->dev,
			 "2-level strtab only covers %u/%u bits of SID\n",
			 size, smmu->sid_bits);

	l1size = cfg->num_l1_ents * (STRTAB_L1_DESC_DWORDS << 3);
	strtab = dmam_alloc_coherent(smmu->dev, l1size, &cfg->strtab_dma,
				     GFP_KERNEL | __GFP_ZERO);
	if (!strtab) {
		/* report the L1 table size in bytes (l1size), not the SID width */
		dev_err(smmu->dev,
			"failed to allocate l1 stream table (%u bytes)\n",
			l1size);
		return -ENOMEM;
	}
	cfg->strtab = strtab;

	/* Configure strtab_base_cfg for 2 levels */
	reg  = FIELD_PREP(STRTAB_BASE_CFG_FMT, STRTAB_BASE_CFG_FMT_2LVL);
	reg |= FIELD_PREP(STRTAB_BASE_CFG_LOG2SIZE, size);
	reg |= FIELD_PREP(STRTAB_BASE_CFG_SPLIT, STRTAB_SPLIT);
	cfg->strtab_base_cfg = reg;

	return arm_smmu_init_l1_strtab(smmu);
}
```
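The sketch below repeats the size calculation of arm_smmu_init_strtab_2lvl in userspace for a given SIDSIZE and builds the SMMU_STRTAB_BASE_CFG value. The field positions (FMT [17:16], SPLIT [10:6], LOG2SIZE [5:0]) are assumed to match the driver's STRTAB_BASE_CFG_* macros. field_prep is a stand-in for FIELD_PREP that relies on the GCC/Clang builtin __builtin_ctz, and compute_strtab_2lvl is hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define GENMASK(h, l) ((~0U >> (31 - (h))) & (~0U << (l)))

#define STRTAB_L1_SZ_SHIFT       20
#define STRTAB_SPLIT             8
#define STRTAB_L1_DESC_DWORDS    1
#define STRTAB_BASE_CFG_FMT      GENMASK(17, 16)
#define STRTAB_BASE_CFG_FMT_2LVL 1
#define STRTAB_BASE_CFG_SPLIT    GENMASK(10, 6)
#define STRTAB_BASE_CFG_LOG2SIZE GENMASK(5, 0)

/* Stand-in for FIELD_PREP: shift val into the position of mask. */
static uint32_t field_prep(uint32_t mask, uint32_t val)
{
	return (val << __builtin_ctz(mask)) & mask;
}

struct strtab_2lvl_layout {
	uint32_t num_l1_ents; /* number of Level 1 descriptors */
	uint32_t l1size;      /* bytes of the L1 table */
	uint32_t log2size;    /* SID bits actually covered */
	uint32_t base_cfg;    /* value for SMMU_STRTAB_BASE_CFG */
};

/* Hypothetical helper: same arithmetic as arm_smmu_init_strtab_2lvl. */
static struct strtab_2lvl_layout compute_strtab_2lvl(uint32_t sid_bits)
{
	struct strtab_2lvl_layout l;
	/* ilog2(STRTAB_L1_DESC_DWORDS) is 0, 8 bytes per dword: 20 - 3 = 17 */
	uint32_t size = STRTAB_L1_SZ_SHIFT - (0 + 3);

	/* the 2-level format is only used when sid_bits > STRTAB_SPLIT */
	assert(sid_bits > STRTAB_SPLIT);
	if (size > sid_bits - STRTAB_SPLIT)
		size = sid_bits - STRTAB_SPLIT;
	l.num_l1_ents = 1u << size;
	l.l1size = l.num_l1_ents * (STRTAB_L1_DESC_DWORDS << 3);
	l.log2size = size + STRTAB_SPLIT;
	l.base_cfg = field_prep(STRTAB_BASE_CFG_FMT, STRTAB_BASE_CFG_FMT_2LVL) |
		     field_prep(STRTAB_BASE_CFG_LOG2SIZE, l.log2size) |
		     field_prep(STRTAB_BASE_CFG_SPLIT, STRTAB_SPLIT);
	return l;
}
```

With 16 SID bits, the L1 table has 256 entries (2 KiB) and covers all 16 bits. With 32 SID bits, it is capped at 2^17 entries (1 MiB) and covers only 25 bits. That second case is the one in which the driver prints the "2-level strtab only covers" warning.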

The arm_smmu_init_l1_strtab function mainly initializes the l1_desc array:

```c
static int arm_smmu_init_l1_strtab(struct arm_smmu_device *smmu)
{
	unsigned int i;
	struct arm_smmu_strtab_cfg *cfg = &smmu->strtab_cfg;
	size_t size = sizeof(*cfg->l1_desc) * cfg->num_l1_ents;
	void *strtab = smmu->strtab_cfg.strtab;

	cfg->l1_desc = devm_kzalloc(smmu->dev, size, GFP_KERNEL);
	if (!cfg->l1_desc) {
		dev_err(smmu->dev, "failed to allocate l1 stream table desc\n");
		return -ENOMEM;
	}

	for (i = 0; i < cfg->num_l1_ents; ++i) {
		arm_smmu_write_strtab_l1_desc(strtab, &cfg->l1_desc[i]);
		strtab += STRTAB_L1_DESC_DWORDS << 3;
	}

	return 0;
}
```

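- It allocates the l1_desc array, which holds one software descriptor per L1 entry.

- arm_smmu_write_strtab_l1_desc writes each l1_desc entry into the corresponding Level 1 Stream Table Descriptor. Because l1_desc was zero-allocated, every descriptor starts out with Span = 0 and L2Ptr = 0. This means every descriptor is invalid at this point.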

Adding a device

When a device behind the SMMU is added, the following call path calls arm_smmu_init_l2_strtab to fill in the level-2 STE table (the Stream Table Entries):

```
add_iommu_group / iommu_bus_notifier
-->iommu_probe_device
---->arm_smmu_add_device
------>arm_smmu_init_l2_strtab
```

arm_smmu_init_l2_strtab is implemented as follows:

```c
static int arm_smmu_init_l2_strtab(struct arm_smmu_device *smmu, u32 sid)
{
	size_t size;
	void *strtab;
	struct arm_smmu_strtab_cfg *cfg = &smmu->strtab_cfg;
	/* the l1 desc that covers this SID */
	struct arm_smmu_strtab_l1_desc *desc = &cfg->l1_desc[sid >> STRTAB_SPLIT];

	/* already allocated for another SID in the same group of 256 */
	if (desc->l2ptr)
		return 0;

	/*
	 * L2 table size = 1 << (8 + 3 + 3) = 16 KiB. Each SMMUv3 STE is
	 * 512 bits (64 bytes), so the table holds 256 STEs.
	 */
	size = 1 << (STRTAB_SPLIT + ilog2(STRTAB_STE_DWORDS) + 3);
	/* the L1 descriptor slot in strtab for this SID */
	strtab = &cfg->strtab[(sid >> STRTAB_SPLIT) * STRTAB_L1_DESC_DWORDS];

	desc->span = STRTAB_SPLIT + 1;	/* Span = 9: 2^(9-1) = 256 STEs */
	desc->l2ptr = dmam_alloc_coherent(smmu->dev, size, &desc->l2ptr_dma,
					  GFP_KERNEL | __GFP_ZERO);
	if (!desc->l2ptr) {
		dev_err(smmu->dev,
			"failed to allocate l2 stream table for SID %u\n",
			sid);
		return -ENOMEM;
	}

	arm_smmu_init_bypass_stes(desc->l2ptr, 1 << STRTAB_SPLIT);
	/* write span and l2ptr_dma into this SID's L1 descriptor */
	arm_smmu_write_strtab_l1_desc(strtab, desc);
	return 0;
}
```

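At initialization time, arm_smmu_init_strtab_2lvl sets up the SMMU's Level 1 Stream Table Descriptors. At that point the function mainly allocates memory, and the descriptors are still invalid.

When a device is added, arm_smmu_add_device calls arm_smmu_init_l2_strtab. That function first allocates the level-2 table holding the Stream Table Entry for the device's StreamID. It then uses arm_smmu_init_bypass_stes to initialize the 256 STEs in that table to the default configuration: bypass, or abort if disable_bypass is set. Finally, it writes the table's address into the corresponding Level 1 Stream Table Descriptor. At this point the Level 1 Stream Table Descriptor for the device is complete.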
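The SID split used by arm_smmu_init_l2_strtab (and later by arm_smmu_get_step_for_sid) can be sketched as follows. locate_sid is a hypothetical helper. The L1 index is sid >> STRTAB_SPLIT, and the dword offset into l2ptr is the low STRTAB_SPLIT bits of the SID multiplied by STRTAB_STE_DWORDS.

```c
#include <assert.h>
#include <stdint.h>

#define STRTAB_SPLIT      8
#define STRTAB_STE_DWORDS 8

/* Where a StreamID lands in a 2-level stream table. */
struct sid_location {
	uint32_t l1_index; /* index into l1_desc[] and the L1 table */
	uint32_t l2_index; /* STE index inside that L2 table */
	uint32_t l2_dword; /* offset into l2ptr, in 64-bit words */
};

/* Hypothetical helper mirroring the driver's index arithmetic. */
static struct sid_location locate_sid(uint32_t sid)
{
	struct sid_location loc;

	loc.l1_index = sid >> STRTAB_SPLIT;
	loc.l2_index = sid & ((1u << STRTAB_SPLIT) - 1);
	loc.l2_dword = loc.l2_index * STRTAB_STE_DWORDS;
	return loc;
}
```

For SID 0x1234, this gives L1 entry 0x12 and STE 0x34 within its L2 table. All SIDs from 0x1200 to 0x12ff share that L1 entry. That sharing is why arm_smmu_init_l2_strtab returns early once desc->l2ptr is set.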

Attaching a device

The call flow is as follows:

```
iommu_group_get_for_dev
-->iommu_group_add_device
---->__iommu_attach_device
------>arm_smmu_attach_dev
-------->arm_smmu_domain_finalise
```

arm_smmu_attach_dev is the attach_dev callback in arm-smmu-v3's arm_smmu_ops. It is implemented as follows:

```c
static int arm_smmu_attach_dev(struct iommu_domain *domain, struct device *dev)
{
	int ret = 0;
	unsigned long flags;
	struct iommu_fwspec *fwspec = dev_iommu_fwspec_get(dev);
	struct arm_smmu_device *smmu;
	struct arm_smmu_domain *smmu_domain = to_smmu_domain(domain);
	struct arm_smmu_master *master;

	if (!fwspec)
		return -ENOENT;

	master = fwspec->iommu_priv;
	smmu = master->smmu;

	arm_smmu_detach_dev(master);

	mutex_lock(&smmu_domain->init_mutex);

	if (!smmu_domain->smmu) {
		smmu_domain->smmu = smmu;
		ret = arm_smmu_domain_finalise(domain);
		if (ret) {
			smmu_domain->smmu = NULL;
			goto out_unlock;
		}
	} else if (smmu_domain->smmu != smmu) {
		dev_err(dev,
			"cannot attach to SMMU %s (upstream of %s)\n",
			dev_name(smmu_domain->smmu->dev),
			dev_name(smmu->dev));
		ret = -ENXIO;
		goto out_unlock;
	}

	master->domain = smmu_domain;

	if (smmu_domain->stage != ARM_SMMU_DOMAIN_BYPASS)
		master->ats_enabled = arm_smmu_ats_supported(master);

	if (smmu_domain->stage == ARM_SMMU_DOMAIN_S1)
		arm_smmu_write_ctx_desc(smmu, &smmu_domain->s1_cfg);

	arm_smmu_install_ste_for_dev(master);

	spin_lock_irqsave(&smmu_domain->devices_lock, flags);
	list_add(&master->domain_head, &smmu_domain->devices);
	spin_unlock_irqrestore(&smmu_domain->devices_lock, flags);

	arm_smmu_enable_ats(master);

out_unlock:
	mutex_unlock(&smmu_domain->init_mutex);
	return ret;
}
```

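Setting the ATS parts aside, the function mainly calls three functions:

- arm_smmu_domain_finalise: on the first attach of a domain, this selects the translation stage and allocates the io-pgtable. It also fills in arm_smmu_s1_cfg or arm_smmu_s2_cfg.

- arm_smmu_write_ctx_desc: for stage-1 domains only, this writes asid, ttbr, tcr and mair from arm_smmu_s1_cfg into the Context Descriptor.

- arm_smmu_install_ste_for_dev: this writes the Stream Table Entry for each StreamID of the master.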

arm_smmu_domain_finalise is implemented as follows:

```c
static int arm_smmu_domain_finalise(struct iommu_domain *domain)
{
	int ret;
	unsigned long ias, oas;
	enum io_pgtable_fmt fmt;
	struct io_pgtable_cfg pgtbl_cfg;
	struct io_pgtable_ops *pgtbl_ops;
	int (*finalise_stage_fn)(struct arm_smmu_domain *,
				 struct io_pgtable_cfg *);
	struct arm_smmu_domain *smmu_domain = to_smmu_domain(domain);
	struct arm_smmu_device *smmu = smmu_domain->smmu;

	if (domain->type == IOMMU_DOMAIN_IDENTITY) {
		smmu_domain->stage = ARM_SMMU_DOMAIN_BYPASS;	/* bypass: nothing more to set up */
		return 0;
	}

	/* Restrict the stage to what we can actually support */
	if (!(smmu->features & ARM_SMMU_FEAT_TRANS_S1))
		smmu_domain->stage = ARM_SMMU_DOMAIN_S2;	/* no stage 1: use stage 2 */
	if (!(smmu->features & ARM_SMMU_FEAT_TRANS_S2))
		smmu_domain->stage = ARM_SMMU_DOMAIN_S1;	/* no stage 2: use stage 1 */

	switch (smmu_domain->stage) {
	case ARM_SMMU_DOMAIN_S1:	/* stage 1 */
		ias = (smmu->features & ARM_SMMU_FEAT_VAX) ? 52 : 48;
		ias = min_t(unsigned long, ias, VA_BITS);
		oas = smmu->ias;
		fmt = ARM_64_LPAE_S1;
		finalise_stage_fn = arm_smmu_domain_finalise_s1;
		break;
	case ARM_SMMU_DOMAIN_NESTED:
	case ARM_SMMU_DOMAIN_S2:	/* stage 2: set up ias and oas */
		ias = smmu->ias;
		oas = smmu->oas;
		fmt = ARM_64_LPAE_S2;
		finalise_stage_fn = arm_smmu_domain_finalise_s2;
		break;
	default:
		return -EINVAL;
	}

	/* temporary config used to build the io-pgtable */
	pgtbl_cfg = (struct io_pgtable_cfg) {
		.pgsize_bitmap	= smmu->pgsize_bitmap,
		.ias		= ias,
		.oas		= oas,
		.coherent_walk	= smmu->features & ARM_SMMU_FEAT_COHERENCY,
		.tlb		= &arm_smmu_flush_ops,
		.iommu_dev	= smmu->dev,
	};

	if (smmu_domain->non_strict)
		pgtbl_cfg.quirks |= IO_PGTABLE_QUIRK_NON_STRICT;

	pgtbl_ops = alloc_io_pgtable_ops(fmt, &pgtbl_cfg, smmu_domain);
	if (!pgtbl_ops)
		return -ENOMEM;

	domain->pgsize_bitmap = pgtbl_cfg.pgsize_bitmap;
	domain->geometry.aperture_end = (1UL << pgtbl_cfg.ias) - 1;
	domain->geometry.force_aperture = true;

	ret = finalise_stage_fn(smmu_domain, &pgtbl_cfg);
	if (ret < 0) {
		free_io_pgtable_ops(pgtbl_ops);
		return ret;
	}

	smmu_domain->pgtbl_ops = pgtbl_ops;
	return 0;
}
```
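The stage restriction and the stage-1 ias choice above can be modeled in a few lines. This is a hypothetical userspace model: restrict_stage, s1_ias and the stage enum are not driver names. It only mirrors the two if-statements and the min_t() against VA_BITS.

```c
#include <assert.h>
#include <stdbool.h>

enum stage { STAGE_S1, STAGE_S2, STAGE_NESTED, STAGE_BYPASS };

/* Mirrors the "Restrict the stage" checks; the later check wins. */
static enum stage restrict_stage(enum stage requested, bool has_s1, bool has_s2)
{
	enum stage s = requested;

	if (!has_s1)
		s = STAGE_S2;
	if (!has_s2)
		s = STAGE_S1;
	return s;
}

/* Stage-1 input size: 52 bits with VAX, else 48, capped by the CPU VA size. */
static unsigned long s1_ias(bool has_vax, unsigned long va_bits)
{
	unsigned long ias = has_vax ? 52 : 48;

	return ias < va_bits ? ias : va_bits;
}
```

Note the order of the checks. If an SMMU supported neither stage, the second check would win and the result would be stage 1. In practice, the driver refuses to probe such an SMMU much earlier.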

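arm_smmu_domain_finalise selects either the ARM_64_LPAE_S1 or the ARM_64_LPAE_S2 page-table format and initializes the page table and pgtbl_ops. It also fills in the domain's arm_smmu_s1_cfg or arm_smmu_s2_cfg. The work is done mainly through alloc_io_pgtable_ops and finalise_stage_fn. The page-table format table and alloc_io_pgtable_ops look like this: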

```c
static const struct io_pgtable_init_fns *
io_pgtable_init_table[IO_PGTABLE_NUM_FMTS] = {
#ifdef CONFIG_IOMMU_IO_PGTABLE_LPAE
	[ARM_32_LPAE_S1] = &io_pgtable_arm_32_lpae_s1_init_fns,
	[ARM_32_LPAE_S2] = &io_pgtable_arm_32_lpae_s2_init_fns,
	[ARM_64_LPAE_S1] = &io_pgtable_arm_64_lpae_s1_init_fns,
	[ARM_64_LPAE_S2] = &io_pgtable_arm_64_lpae_s2_init_fns,
	[ARM_MALI_LPAE] = &io_pgtable_arm_mali_lpae_init_fns,
#endif
#ifdef CONFIG_IOMMU_IO_PGTABLE_ARMV7S
	[ARM_V7S] = &io_pgtable_arm_v7s_init_fns,
#endif
#ifdef CONFIG_IOMMU_IO_PGTABLE_FAST
	[ARM_V8L_FAST] = &io_pgtable_av8l_fast_init_fns,
#endif
};

struct io_pgtable_init_fns io_pgtable_arm_64_lpae_s1_init_fns = {
	.alloc	= arm_64_lpae_alloc_pgtable_s1,
	.free	= arm_lpae_free_pgtable,
};

struct io_pgtable_init_fns io_pgtable_arm_64_lpae_s2_init_fns = {
	.alloc	= arm_64_lpae_alloc_pgtable_s2,
	.free	= arm_lpae_free_pgtable,
};

struct io_pgtable_ops *alloc_io_pgtable_ops(enum io_pgtable_fmt fmt,
					    struct io_pgtable_cfg *cfg,
					    void *cookie)
{
	struct io_pgtable *iop;
	const struct io_pgtable_init_fns *fns;

	if (fmt >= IO_PGTABLE_NUM_FMTS)
		return NULL;

	fns = io_pgtable_init_table[fmt];
	if (!fns)
		return NULL;

	iop = fns->alloc(cfg, cookie);
	if (!iop)
		return NULL;

	iop->fmt	= fmt;
	iop->cookie	= cookie;
	iop->cfg	= *cfg;

	return &iop->ops;
}
EXPORT_SYMBOL_GPL(alloc_io_pgtable_ops);
```

alloc_io_pgtable_ops uses the fmt passed in (ARM_64_LPAE_S1 or ARM_64_LPAE_S2 for SMMUv3) to look up io_pgtable_init_table. It then calls the matching alloc function, either arm_64_lpae_alloc_pgtable_s1 or arm_64_lpae_alloc_pgtable_s2.
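The lookup in alloc_io_pgtable_ops is a plain table of function pointers, built with designated initializers and indexed by format. The trimmed-down model below shows the pattern. The enum, struct init_fns and the string-returning alloc stubs are hypothetical stand-ins for io_pgtable_fmt, io_pgtable_init_fns and the real alloc(cfg, cookie) callbacks.

```c
#include <assert.h>
#include <stddef.h>

enum fmt { FMT_64_S1, FMT_64_S2, FMT_V7S, NUM_FMTS };

struct init_fns {
	const char *(*alloc)(void); /* stands in for alloc(cfg, cookie) */
};

static const char *alloc_s1(void) { return "arm_64_lpae_alloc_pgtable_s1"; }
static const char *alloc_s2(void) { return "arm_64_lpae_alloc_pgtable_s2"; }

static const struct init_fns s1_fns = { .alloc = alloc_s1 };
static const struct init_fns s2_fns = { .alloc = alloc_s2 };

/* FMT_V7S stays NULL, as if its Kconfig option were disabled. */
static const struct init_fns *init_table[NUM_FMTS] = {
	[FMT_64_S1] = &s1_fns,
	[FMT_64_S2] = &s2_fns,
};

/* Same checks as alloc_io_pgtable_ops: range first, then an empty slot. */
static const char *dispatch_alloc(int fmt)
{
	const struct init_fns *fns;

	if (fmt < 0 || fmt >= NUM_FMTS)
		return NULL;
	fns = init_table[fmt];
	if (!fns)
		return NULL;
	return fns->alloc();
}
```

A format whose Kconfig option is disabled simply leaves a NULL slot in the table. That is why alloc_io_pgtable_ops checks both the range of fmt and the fns pointer.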

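arm_64_lpae_alloc_pgtable_s1 mainly calls arm_lpae_alloc_pgtable (described below). It then initializes tcr and mair and allocates the pgd table according to pgd_size.

arm_lpae_alloc_pgtable determines pgd_size from the io_pgtable_cfg parameters and registers the io_pgtable_ops as follows: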

```c
data->iop.ops = (struct io_pgtable_ops) {
	.map		= arm_lpae_map,
	.unmap		= arm_lpae_unmap,
	.iova_to_phys	= arm_lpae_iova_to_phys,
};
```

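arm_64_lpae_alloc_pgtable_s2 is similar to arm_64_lpae_alloc_pgtable_s1 and is not described further.

finalise_stage_fn then calls either arm_smmu_domain_finalise_s1 or arm_smmu_domain_finalise_s2.

arm_smmu_domain_finalise_s1 assigns the asid in arm_smmu_s1_cfg and allocates the Context Descriptor table. The cdptr and cdptr_dma fields of arm_smmu_s1_cfg point to that table's VA and PA, respectively. The function then takes the ttbr (which holds the PGD address), tcr and mair obtained in arm_64_lpae_alloc_pgtable_s1 and copies them into the ttbr, tcr and mair of the domain's arm_smmu_s1_cfg. The cdptr allocation is 64 bytes, exactly enough for one Context Descriptor (64 bytes).

arm_smmu_domain_finalise_s2 assigns the vmid in arm_smmu_s2_cfg. It then copies the vttbr (which holds the PGD address) and vtcr obtained in arm_64_lpae_alloc_pgtable_s2 into the vttbr and vtcr of the domain's arm_smmu_s2_cfg.

arm_smmu_install_ste_for_dev is implemented as follows: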

```c
static void arm_smmu_install_ste_for_dev(struct arm_smmu_master *master)
{
	int i, j;
	struct arm_smmu_device *smmu = master->smmu;

	for (i = 0; i < master->num_sids; ++i) {
		u32 sid = master->sids[i];
		__le64 *step = arm_smmu_get_step_for_sid(smmu, sid);

		/* Bridged PCI devices may end up with duplicated IDs */
		for (j = 0; j < i; j++)
			if (master->sids[j] == sid)
				break;
		if (j < i)
			continue;

		arm_smmu_write_strtab_ent(master, sid, step);
	}
}
```

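- arm_smmu_get_step_for_sid uses the SID as an index. The upper bits select the Level 1 Stream Table Descriptor, and the lower bits select the Stream Table Entry within the L2 table that the descriptor's l2ptr points to.

- arm_smmu_write_strtab_ent fills in that Stream Table Entry. It is implemented as follows: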

```c
static void arm_smmu_write_strtab_ent(struct arm_smmu_master *master, u32 sid,
				      __le64 *dst)
{
	/*
	 * This is hideously complicated, but we only really care about
	 * three cases at the moment:
	 *
	 * 1. Invalid (all zero) -> bypass/fault (init)
	 * 2. Bypass/fault -> translation/bypass (attach)
	 * 3. Translation/bypass -> bypass/fault (detach)
	 *
	 * Given that we can't update the STE atomically and the SMMU
	 * doesn't read the thing in a defined order, that leaves us
	 * with the following maintenance requirements:
	 *
	 * 1. Update Config, return (init time STEs aren't live)
	 * 2. Write everything apart from dword 0, sync, write dword 0, sync
	 * 3. Update Config, sync
	 */
	u64 val = le64_to_cpu(dst[0]);
	bool ste_live = false;
	struct arm_smmu_device *smmu = NULL;
	struct arm_smmu_s1_cfg *s1_cfg = NULL;
	struct arm_smmu_s2_cfg *s2_cfg = NULL;
	struct arm_smmu_domain *smmu_domain = NULL;
	struct arm_smmu_cmdq_ent prefetch_cmd = {
		.opcode		= CMDQ_OP_PREFETCH_CFG,
		.prefetch	= {
			.sid	= sid,
		},
	};

	if (master) {
		smmu_domain = master->domain;
		smmu = master->smmu;
	}

	if (smmu_domain) {
		switch (smmu_domain->stage) {
		case ARM_SMMU_DOMAIN_S1:
			s1_cfg = &smmu_domain->s1_cfg;
			break;
		case ARM_SMMU_DOMAIN_S2:
		case ARM_SMMU_DOMAIN_NESTED:
			s2_cfg = &smmu_domain->s2_cfg;
			break;
		default:
			break;
		}
	}

	if (val & STRTAB_STE_0_V) {
		switch (FIELD_GET(STRTAB_STE_0_CFG, val)) {
		case STRTAB_STE_0_CFG_BYPASS:
			break;
		case STRTAB_STE_0_CFG_S1_TRANS:
		case STRTAB_STE_0_CFG_S2_TRANS:
			ste_live = true;
			break;
		case STRTAB_STE_0_CFG_ABORT:
			BUG_ON(!disable_bypass);
			break;
		default:
			BUG(); /* STE corruption */
		}
	}

	/* Nuke the existing STE_0 value, as we're going to rewrite it */
	val = STRTAB_STE_0_V;

	/* Bypass/fault */
	if (!smmu_domain || !(s1_cfg || s2_cfg)) {
		if (!smmu_domain && disable_bypass)
			val |= FIELD_PREP(STRTAB_STE_0_CFG, STRTAB_STE_0_CFG_ABORT);
		else
			val |= FIELD_PREP(STRTAB_STE_0_CFG, STRTAB_STE_0_CFG_BYPASS);

		dst[0] = cpu_to_le64(val);
		dst[1] = cpu_to_le64(FIELD_PREP(STRTAB_STE_1_SHCFG,
						STRTAB_STE_1_SHCFG_INCOMING));
		dst[2] = 0; /* Nuke the VMID */
		/*
		 * The SMMU can perform negative caching, so we must sync
		 * the STE regardless of whether the old value was live.
		 */
		if (smmu)
			arm_smmu_sync_ste_for_sid(smmu, sid);
		return;
	}

	if (s1_cfg) {	/* fill the STE for an ARM_SMMU_DOMAIN_S1 domain */
		BUG_ON(ste_live);
		dst[1] = cpu_to_le64(
			 FIELD_PREP(STRTAB_STE_1_S1CIR, STRTAB_STE_1_S1C_CACHE_WBRA) |
			 FIELD_PREP(STRTAB_STE_1_S1COR, STRTAB_STE_1_S1C_CACHE_WBRA) |
			 FIELD_PREP(STRTAB_STE_1_S1CSH, ARM_SMMU_SH_ISH) |
			 FIELD_PREP(STRTAB_STE_1_STRW, STRTAB_STE_1_STRW_NSEL1));

		if (smmu->features & ARM_SMMU_FEAT_STALLS &&
		   !(smmu->features & ARM_SMMU_FEAT_STALL_FORCE))
			dst[1] |= cpu_to_le64(STRTAB_STE_1_S1STALLD);

		val |= (s1_cfg->cdptr_dma & STRTAB_STE_0_S1CTXPTR_MASK) |
			FIELD_PREP(STRTAB_STE_0_CFG, STRTAB_STE_0_CFG_S1_TRANS);
	}

	if (s2_cfg) {	/* fill the STE for an ARM_SMMU_DOMAIN_S2 domain */
		BUG_ON(ste_live);
		dst[2] = cpu_to_le64(
			 FIELD_PREP(STRTAB_STE_2_S2VMID, s2_cfg->vmid) |
			 FIELD_PREP(STRTAB_STE_2_VTCR, s2_cfg->vtcr) |
#ifdef __BIG_ENDIAN
			 STRTAB_STE_2_S2ENDI |
#endif
			 STRTAB_STE_2_S2PTW | STRTAB_STE_2_S2AA64 |
			 STRTAB_STE_2_S2R);

		dst[3] = cpu_to_le64(s2_cfg->vttbr & STRTAB_STE_3_S2TTB_MASK);

		val |= FIELD_PREP(STRTAB_STE_0_CFG, STRTAB_STE_0_CFG_S2_TRANS);
	}

	if (master->ats_enabled)
		dst[1] |= cpu_to_le64(FIELD_PREP(STRTAB_STE_1_EATS,
						 STRTAB_STE_1_EATS_TRANS));

	arm_smmu_sync_ste_for_sid(smmu, sid);
	/* See comment in arm_smmu_write_ctx_desc() */
	WRITE_ONCE(dst[0], cpu_to_le64(val));
	arm_smmu_sync_ste_for_sid(smmu, sid);	/* issues CMD_CFGI_STE */

	/* It's likely that we'll want to use the new STE soon */
	if (!(smmu->options & ARM_SMMU_OPT_SKIP_PREFETCH))
		arm_smmu_cmdq_issue_cmd(smmu, &prefetch_cmd);	/* issues CMD_PREFETCH_CONFIG */
}
```

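The function checks whether arm_smmu_domain is configured as ARM_SMMU_DOMAIN_S1 or ARM_SMMU_DOMAIN_S2. It then copies the data in arm_smmu_s1_cfg or arm_smmu_s2_cfg, respectively, into the corresponding Stream Table Entry. The relevant fields are the ones in the Stream Table Entry figure in the Formats section.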

对于arm_smmu_s1_cfg:

-

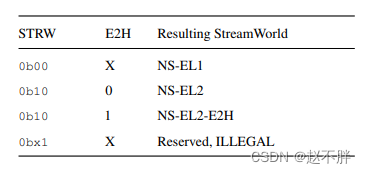

S1CIR,S1COR配置为 Normal, Write-Back cacheable, Read-Allocate,S1CSH配置为Inner Shareable,STRW 配置为NS-EL1见下表:

-

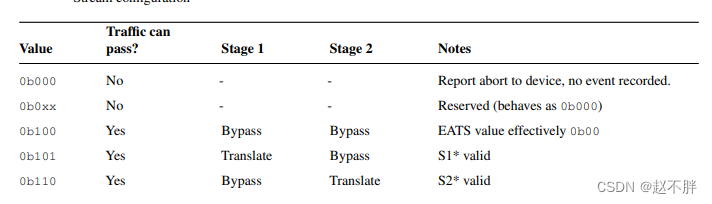

将cdptr_dma( Context Descriptor的物理地址)写到Stream Table Entry的S1ContextPtr中。配置Config标志位为 stage1 translate ,stage 2 bypass 。详情见下表。同时设置STE Valid。
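The dword 0 composition for the stage-1 case can be sketched as below. The STRTAB_STE_0_* values are assumed to match the driver's macros: V at bit 0, Config in bits [3:1] with 0b101 for stage 1 translate, and S1ContextPtr in bits [51:6]. ste0_for_s1, ste0_cfg and field_prep64 are hypothetical helpers.

```c
#include <assert.h>
#include <stdint.h>

#define GENMASK_ULL(h, l) ((~0ULL >> (63 - (h))) & (~0ULL << (l)))

/* STE dword 0 fields (assumed to match the driver's definitions). */
#define STRTAB_STE_0_V             (1ULL << 0)
#define STRTAB_STE_0_CFG           GENMASK_ULL(3, 1)
#define STRTAB_STE_0_CFG_ABORT     0
#define STRTAB_STE_0_CFG_BYPASS    4
#define STRTAB_STE_0_CFG_S1_TRANS  5
#define STRTAB_STE_0_CFG_S2_TRANS  6
#define STRTAB_STE_0_S1CTXPTR_MASK GENMASK_ULL(51, 6)

/* Stand-in for FIELD_PREP on 64-bit masks (GCC/Clang builtin). */
static uint64_t field_prep64(uint64_t mask, uint64_t val)
{
	return (val << __builtin_ctzll(mask)) & mask;
}

/* Dword 0 of a valid stage-1 STE pointing at the CD at cdptr_dma. */
static uint64_t ste0_for_s1(uint64_t cdptr_dma)
{
	return STRTAB_STE_0_V |
	       (cdptr_dma & STRTAB_STE_0_S1CTXPTR_MASK) |
	       field_prep64(STRTAB_STE_0_CFG, STRTAB_STE_0_CFG_S1_TRANS);
}

/* Extract the Config field, like FIELD_GET(STRTAB_STE_0_CFG, val). */
static unsigned int ste0_cfg(uint64_t ste0)
{
	return (unsigned int)((ste0 & STRTAB_STE_0_CFG) >> 1);
}
```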

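For arm_smmu_s2_cfg:

- vmid is written to S2VMID and vttbr to S2TTB. vtcr goes into the S2PS, S2TG, S2SH0, S2OR0, S2IR0, S2SL0 and S2T0SZ fields. S2PTW, S2AA64 and S2R are also set, plus S2ENDI on big-endian kernels.

- The Config field is set to stage 1 bypass, stage 2 translate (see the Config field in the Stream Table Entry format). The STE Valid bit is also set.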
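Likewise, here is a sketch of dwords 2 and 3 for the stage-2 case. It covers only the little-endian case. The bit positions are assumed to match the driver's STRTAB_STE_2_* and STRTAB_STE_3_* macros: S2VMID [15:0], VTCR [50:32], S2AA64 bit 51, S2PTW bit 54, S2R bit 58 and S2TTB [51:4]. ste_fill_s2 is hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define GENMASK_ULL(h, l) ((~0ULL >> (63 - (h))) & (~0ULL << (l)))

#define STRTAB_STE_2_S2VMID     GENMASK_ULL(15, 0)
#define STRTAB_STE_2_VTCR       GENMASK_ULL(50, 32)
#define STRTAB_STE_2_S2AA64     (1ULL << 51)
#define STRTAB_STE_2_S2PTW      (1ULL << 54)
#define STRTAB_STE_2_S2R        (1ULL << 58)
#define STRTAB_STE_3_S2TTB_MASK GENMASK_ULL(51, 4)

static uint64_t field_prep64(uint64_t mask, uint64_t val)
{
	return (val << __builtin_ctzll(mask)) & mask;
}

/* Hypothetical helper: little-endian only, so S2ENDI is left clear. */
static void ste_fill_s2(uint64_t ste[4], uint16_t vmid, uint64_t vtcr,
			uint64_t vttbr)
{
	ste[2] = field_prep64(STRTAB_STE_2_S2VMID, vmid) |
		 field_prep64(STRTAB_STE_2_VTCR, vtcr) |
		 STRTAB_STE_2_S2PTW | STRTAB_STE_2_S2AA64 | STRTAB_STE_2_S2R;
	/* S2TTB keeps address bits [51:4] of the stage-2 table base */
	ste[3] = vttbr & STRTAB_STE_3_S2TTB_MASK;
}
```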

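This largely completes the description of how the STE and CD tables are built. The next step is to populate the page tables walked by the SMMU, which is done through iommu_map.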

iommu_map

```
iommu_map
-->arm_smmu_map
---->arm_lpae_map
```

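First, consider iommu_map itself. It takes the following parameters: the device's iommu_domain, the iova to map, the physical address paddr to map it to, the size of the mapping, and prot (a mask of flags such as read/write permission).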

```c
int iommu_map(struct iommu_domain *domain, unsigned long iova,
	      phys_addr_t paddr, size_t size, int prot)
{
	const struct iommu_ops *ops = domain->ops;
	unsigned long orig_iova = iova;
	unsigned int min_pagesz;
	size_t orig_size = size;
	phys_addr_t orig_paddr = paddr;
	int ret = 0;

	if (unlikely(ops->map == NULL ||
		     domain->pgsize_bitmap == 0UL))
		return -ENODEV;

	if (unlikely(!(domain->type & __IOMMU_DOMAIN_PAGING)))
		return -EINVAL;

	/* find out the minimum page size supported */
	min_pagesz = 1 << __ffs(domain->pgsize_bitmap);

	/*
	 * both the virtual address and the physical one, as well as
	 * the size of the mapping, must be aligned (at least) to the
	 * size of the smallest page supported by the hardware
	 */
	if (!IS_ALIGNED(iova | paddr | size, min_pagesz)) {
		pr_err("unaligned: iova 0x%lx pa %pa size 0x%zx min_pagesz 0x%x\n",
		       iova, &paddr, size, min_pagesz);
		return -EINVAL;
	}

	pr_debug("map: iova 0x%lx pa %pa size 0x%zx\n", iova, &paddr, size);

	while (size) {
		size_t pgsize = iommu_pgsize(domain->pgsize_bitmap,
					     iova | paddr, size);

		pr_debug("mapping: iova 0x%lx pa %pa pgsize 0x%zx\n",
			 iova, &paddr, pgsize);

		ret = ops->map(domain, iova, paddr, pgsize, prot);
		if (ret)
			break;

		iova += pgsize;
		paddr += pgsize;
		size -= pgsize;
	}

	if (ops->iotlb_sync_map)
		ops->iotlb_sync_map(domain);

	/* unroll mapping in case something went wrong */
	if (ret)
		iommu_unmap(domain, orig_iova, orig_size - size);
	else
		trace_map(to_msm_iommu_domain(domain), orig_iova, orig_paddr,
			  orig_size, prot);

	return ret;
}
EXPORT_SYMBOL_GPL(iommu_map);
```

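- On each iteration, iommu_pgsize picks the largest page size in the domain's pgsize_bitmap (for example SZ_4K | SZ_2M | SZ_1G) that fits in the remaining size and matches the alignment of iova | paddr.

- Each pgsize chunk is then mapped by calling arm_smmu_map through ops->map, until the whole range is mapped.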
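The page-size selection can be modeled in userspace as follows. pick_pgsize follows the iommu_pgsize logic of kernels from this era:

1. Cap the page size by the remaining size.
2. Cap it by the alignment of iova | paddr.
3. Mask the candidates with pgsize_bitmap.
4. Take the largest remaining size.

The model assumes an LP64 target and GCC/Clang builtins. Both pick_pgsize and count_map_calls are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

#define SZ_4K 0x1000UL
#define SZ_2M 0x200000UL
#define SZ_1G 0x40000000UL
#define ULONG_BITS (sizeof(unsigned long) * 8)

/* Index of the most significant set bit (x must be non-zero). */
static unsigned int fls_idx(unsigned long x)
{
	return (unsigned int)(ULONG_BITS - 1 - __builtin_clzl(x));
}

static size_t pick_pgsize(unsigned long pgsize_bitmap,
			  unsigned long addr_merge, size_t size)
{
	/* largest page that still fits into size */
	unsigned int idx = fls_idx(size);
	unsigned long mask;

	/* ... and that the alignment of iova | paddr allows */
	if (addr_merge) {
		unsigned int align_idx = (unsigned int)__builtin_ctzl(addr_merge);

		if (align_idx < idx)
			idx = align_idx;
	}
	/* all sizes up to 2^idx, filtered by what the hardware supports */
	mask = (idx + 1 >= ULONG_BITS) ? ~0UL : ((1UL << (idx + 1)) - 1);
	mask &= pgsize_bitmap;
	assert(mask != 0);
	return 1UL << fls_idx(mask); /* pick the biggest one */
}

/* Split a range the way iommu_map's loop does; returns the ops->map calls. */
static unsigned int count_map_calls(unsigned long bitmap, unsigned long iova,
				    unsigned long paddr, size_t size)
{
	unsigned int calls = 0;

	while (size) {
		size_t pgsize = pick_pgsize(bitmap, iova | paddr, size);

		iova += pgsize;
		paddr += pgsize;
		size -= pgsize;
		calls++;
	}
	return calls;
}
```

Consider mapping 2 MiB + 4 KiB at a 2 MiB-aligned iova and paddr. That takes two ops->map calls: one 2 MiB block and one 4 KiB page. By contrast, mapping 2 MiB starting at an address that is only 4 KiB-aligned falls back to 512 separate 4 KiB calls.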

arm_smmu_map mainly forwards to arm_lpae_map (in io-pgtable-arm.c) through the domain's pgtbl_ops callbacks.

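```c
#define ARM_LPAE_MAX_LEVELS		4	/* at most 4 levels of page table */
#define ARM_LPAE_START_LVL(d)		(ARM_LPAE_MAX_LEVELS - (d)->levels)	/* level of the first (top) table */

/* In arm_lpae_alloc_pgtable(): */
va_bits = cfg->ias - data->pg_shift;
data->levels = DIV_ROUND_UP(va_bits, data->bits_per_level);
/*
 * The number of levels follows from va_bits and the number of index
 * bits each level resolves; with a 4K granule, bits_per_level is 9.
 */
```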
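To put numbers on the levels calculation, the sketch below computes the number of levels, the start level and the pgd size for a given ias and granule. It assumes 8-byte PTEs (so bits_per_level = pg_shift - 3). It also assumes the pgd size formula without stage-2 concatenation, as used by arm_lpae_alloc_pgtable in kernels of this era. compute_lpae_geometry is hypothetical.

```c
#include <assert.h>

#define ARM_LPAE_MAX_LEVELS 4

struct lpae_geometry {
	unsigned int levels;    /* number of table levels walked */
	unsigned int start_lvl; /* ARM_LPAE_START_LVL */
	unsigned long pgd_size; /* bytes of the top-level table */
};

/* ias = input address bits, pg_shift = log2(granule size). */
static struct lpae_geometry compute_lpae_geometry(unsigned int ias,
						  unsigned int pg_shift)
{
	struct lpae_geometry g;
	/* one granule of 8-byte PTEs per table: 4K -> 512 entries -> 9 bits */
	unsigned int bits_per_level = pg_shift - 3;
	unsigned int va_bits = ias - pg_shift;
	unsigned int pgd_bits;

	g.levels = (va_bits + bits_per_level - 1) / bits_per_level; /* DIV_ROUND_UP */
	g.start_lvl = ARM_LPAE_MAX_LEVELS - g.levels;
	/* bits left for the top level after the lower levels take theirs */
	pgd_bits = va_bits - bits_per_level * (g.levels - 1);
	g.pgd_size = 1UL << (pgd_bits + 3);
	return g;
}
```

With a 4K granule, a 48-bit ias needs 4 levels starting at level 0, while a 39-bit ias needs 3 levels starting at level 1. Both have a 4 KiB pgd. With a 64K granule, a 48-bit ias needs 3 levels and a 512-byte pgd.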

```c
static int arm_lpae_map(struct io_pgtable_ops *ops, unsigned long iova,
			phys_addr_t paddr, size_t size, int iommu_prot)
{
	struct arm_lpae_io_pgtable *data = io_pgtable_ops_to_data(ops);
	arm_lpae_iopte *ptep = data->pgd;		/* start the walk at the pgd */
	int ret, lvl = ARM_LPAE_START_LVL(data);	/* level of the top table */
	arm_lpae_iopte prot;

	/* If no access, then nothing to do */
	if (!(iommu_prot & (IOMMU_READ | IOMMU_WRITE)))
		return 0;

	if (WARN_ON(iova >= (1ULL << data->iop.cfg.ias) ||
		    paddr >= (1ULL << data->iop.cfg.oas)))
		return -ERANGE;

	prot = arm_lpae_prot_to_pte(data, iommu_prot);
	ret = __arm_lpae_map(data, iova, paddr, size, prot, lvl, ptep, NULL,
			     NULL);
	/*
	 * Synchronise all PTE updates for the new mapping before there's
	 * a chance for anything to kick off a table walk for the new iova.
	 */
	wmb();

	return ret;
}
```

- arm_lpae_prot_to_pte builds the PTE attribute bits from two inputs: the io_pgtable_fmt (ARM_32_LPAE_S1/ARM_32_LPAE_S2/ARM_64_LPAE_S1/ARM_64_LPAE_S2) and iommu_prot (IOMMU_READ/IOMMU_WRITE/IOMMU_CACHE/IOMMU_NOEXEC).

- __arm_lpae_map creates the page-table entries level by level.

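__arm_lpae_map builds page tables much like Linux builds CPU page tables, except that the index is an IOVA rather than a VA.

__arm_lpae_map is recursive and mainly calls:

- arm_lpae_init_pte: if the requested size matches the block size of the current level (SZ_4K, SZ_2M or SZ_1G with a 4K granule), this writes the output address together with the AP and other attribute bits into the PTE.

- arm_lpae_install_table: otherwise, if the current entry has no next-level table yet, one is allocated with __arm_lpae_alloc_pages. arm_lpae_install_table then writes that table's PA and the table attributes into the current-level entry, and __arm_lpae_map recurses into the next level.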
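The sketch below shows how __arm_lpae_map picks the table index at each level, and where it stops to call arm_lpae_init_pte, for the common 4K granule. The fixed geometry and the helpers lvl_shift, lvl_idx, block_size and leaf_level are assumptions for illustration. The real code derives these values from the io_pgtable_cfg, and it also checks size against pgsize_bitmap.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed geometry: 4K granule, levels 0..3, 9 index bits per level. */
#define PG_SHIFT       12
#define BITS_PER_LEVEL 9

/* Address bits below the index of level lvl: 39, 30, 21, 12. */
static unsigned int lvl_shift(int lvl)
{
	return PG_SHIFT + (unsigned int)(3 - lvl) * BITS_PER_LEVEL;
}

/* Index of iova in the table at level lvl (cf. ARM_LPAE_LVL_IDX). */
static unsigned int lvl_idx(uint64_t iova, int lvl)
{
	return (unsigned int)((iova >> lvl_shift(lvl)) &
			      ((1u << BITS_PER_LEVEL) - 1));
}

/* Size covered by one entry at level lvl: 512G, 1G, 2M, 4K. */
static uint64_t block_size(int lvl)
{
	return 1ULL << lvl_shift(lvl);
}

/*
 * Level at which the walk would stop and call arm_lpae_init_pte for
 * this size, or -1 if size is not a 4K-granule block/page size.
 * Level 0 has no block mappings with a 4K granule.
 */
static int leaf_level(uint64_t size)
{
	for (int lvl = 1; lvl <= 3; lvl++)
		if (size == block_size(lvl))
			return lvl;
	return -1;
}
```

For iova 0x80604000, the walk uses index 0 at level 0, 2 at level 1, 3 at level 2 and 4 at level 3. A 2 MiB mapping stops at level 2, and a 4 KiB mapping stops at level 3.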
