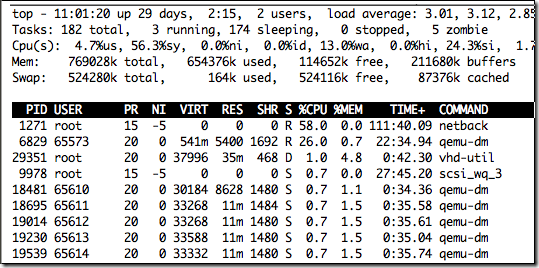

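While running performance tests on XenServer, I found that the netback process was using a lot of CPU (see the screenshot). After some googling, I learned that netback processes have a considerable impact on XenServer performance tuning. Below is an excerpt from the English documentation, with a few notes of my own. My English is limited, so please bear with me.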

Static allocation of virtual network interfaces (VIFs) and interrupt request (IRQ) queues among available CPUs can lead to non-optimal network throughput.


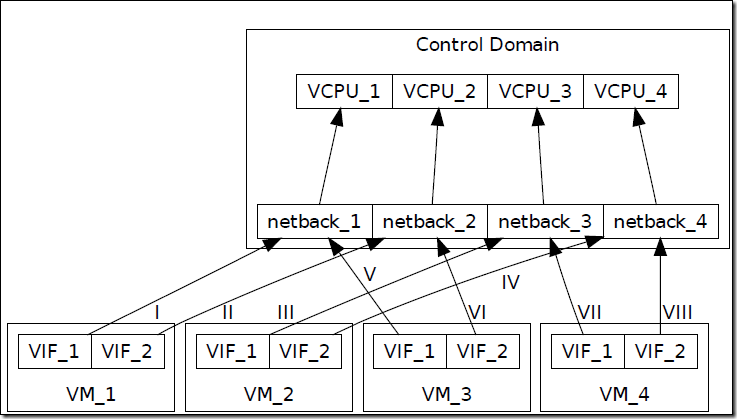

All guest network traffic is processed by netback processes in the control domain on the guest's host. There is a fixed number of netback processes per host (four by default in XS 5.6 FP1), where each one is pinned to a specific control domain virtual CPU (VCPU).


All traffic for a specific VIF of a guest is processed by a statically-allocated netback process. The static allocation of VIFs to netback processes is done in a round robin fashion when virtual machines (VMs) are first started after XenServer restart. The allocation is not dynamic, since that would require further synchronisation mechanisms, and would close any active connection on every rebalance.
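To make the round-robin rule concrete, here is a minimal Python sketch of the allocation as described above. It assumes that a host restart resets an allocation counter to the first netback process, that VMs are started in list order, and that each VM's VIFs are plugged in declaration order. The function name `allocate_round_robin` is hypothetical; this models the described behaviour and is not XenServer's actual netback code.

```python
from typing import Dict, List, Tuple


def allocate_round_robin(vifs_per_vm: List[int], num_netbacks: int = 4) -> Dict[Tuple[int, int], int]:
    """Map (vm, vif) -> netback process, numbering everything from 1.

    Assumption: VMs start in list order, each VM's VIFs are plugged in
    declaration order, and every new VIF takes the next netback process,
    wrapping around after the last one.
    """
    allocation: Dict[Tuple[int, int], int] = {}
    counter = 0  # hypothetical round-robin position, reset by a host restart
    for vm, n_vifs in enumerate(vifs_per_vm, start=1):
        for vif in range(1, n_vifs + 1):
            allocation[(vm, vif)] = counter % num_netbacks + 1
            counter += 1
    return allocation


if __name__ == "__main__":
    # Four VMs with two VIFs each, started one after another.
    for (vm, vif), netback in allocate_round_robin([2, 2, 2, 2]).items():
        print(f"VM{vm} VIF{vif} -> netback {netback}")
```

Because the counter only moves forward, the netback a VIF lands on depends entirely on how many VIFs were plugged before it, which is why start order and VIF order matter.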


For example, suppose we have a host with four CPUs, with four netback processes (running on four VCPUs in the control domain), and two physical network interfaces (PIFs). Furthermore, suppose the host has four VMs, where each VM has two VIFs that correspond to PIFs (in the same order for all VMs), and that VMs are started one after another (which is normally the case). Then, the first VIF of all four VMs will be allocated to odd-numbered netback processes, while the second VIF of all four VMs will be allocated to even-numbered netback processes. Therefore, if VMs send network traffic only on their first VIF, then only two of the available four CPUs will be utilised. See Figure 1. With heavy network traffic on fast network interfaces, CPU can quickly become the bottleneck, so being able to utilise all available CPUs is crucial.
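The four-VM example can be reproduced with a small model that counts which netback processes (and therefore which control-domain VCPUs) see traffic when every VM sends only on one of its VIFs. This is a sketch under a simple round-robin assumption (counter reset on host restart, VMs started in order, VIFs plugged in declaration order); `busy_netbacks` is a hypothetical helper, not a XenServer tool.

```python
from typing import List, Set


def busy_netbacks(vifs_per_vm: List[int], active_vif: int, num_netbacks: int = 4) -> List[int]:
    """Return the sorted netback numbers that carry traffic when every VM
    sends only on its `active_vif`-th VIF (1-based).

    Assumption: simple round-robin allocation, as described in the text.
    """
    busy: Set[int] = set()
    counter = 0  # round-robin position; assumed to start at netback 1 after a restart
    for n_vifs in vifs_per_vm:              # VMs started one after another
        for vif in range(1, n_vifs + 1):    # VIFs plugged in declaration order
            netback = counter % num_netbacks + 1
            if vif == active_vif:
                busy.add(netback)
            counter += 1
    return sorted(busy)


print(busy_netbacks([2, 2, 2, 2], active_vif=1))  # [1, 3]
print(busy_netbacks([2, 2, 2, 2], active_vif=2))  # [2, 4]
```

With traffic only on the first VIF, only netback processes 1 and 3 are busy, i.e. half of the control-domain CPUs, which is the imbalance the excerpt describes.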

Note: in this example, only CPUs 1 and 3 (hosting netback processes 1 and 3) are used; CPUs 2 and 4 are not used at all.

Figure 1

There are at least three ways to modify the allocation of VIFs on netback processes:

- modify the order in which VMs are first started;
- modify the order of declared VIFs (on VMs); and,
- add dummy VIFs to VMs.

Continuing with the working example above, suppose we find that all guest-level network traffic is going through the second VIF of each VM, and that all VMs require roughly the same network throughput. There are two simple solutions to achieving optimal network throughput here:

1. Add a VIF to each VM, restart the host, and start the VMs in order: the second VIFs are allocated to netback processes (in this order) 2, 1, 4, and 3.

2. Switch the order of the two VIFs on the third and the fourth VMs, restart the host, and start the VMs in order: the relevant VIFs (those that were previously the second VIFs) are allocated to netback processes (in this order) 2, 4, 1, and 3.
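Both fixes can be checked with a variant of the round-robin model that tracks VIFs by name, so swapping the declaration order is easy to express. The names `eth0`, `eth1` and `dummy` are placeholders, and the counter-based allocation is an assumption drawn from the text, not XenServer code.

```python
from typing import List


def netbacks_for_label(vms: List[List[str]], label: str, num_netbacks: int = 4) -> List[int]:
    """For VMs started in list order, each declaring VIFs in list order,
    return the netback assigned to the VIF named `label` on each VM.

    Assumption: every plugged VIF takes the next netback, round-robin.
    """
    result: List[int] = []
    counter = 0  # hypothetical round-robin position after a host restart
    for vifs in vms:
        for name in vifs:
            if name == label:
                result.append(counter % num_netbacks + 1)
            counter += 1
    return result


# Baseline: two VIFs per VM, traffic only on "eth1" (the second VIF).
baseline = [["eth0", "eth1"]] * 4
# Fix 1: add a dummy third VIF to every VM.
fix1 = [["eth0", "eth1", "dummy"]] * 4
# Fix 2: swap the VIF order on the third and fourth VMs.
fix2 = [["eth0", "eth1"], ["eth0", "eth1"], ["eth1", "eth0"], ["eth1", "eth0"]]

print(netbacks_for_label(baseline, "eth1"))  # [2, 4, 2, 4]
print(netbacks_for_label(fix1, "eth1"))      # [2, 1, 4, 3]
print(netbacks_for_label(fix2, "eth1"))      # [2, 4, 1, 3]
```

Under this model both fixes spread the busy VIFs over all four netback processes, matching the orders 2, 1, 4, 3 and 2, 4, 1, 3 given in the excerpt.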


---

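That is the end of the excerpt; the two tuning examples at the end are worth a look. I tried the VIF-swapping method, and it does indeed use all 4 CPUs, in the order 2, 4, 1, 3. Anyone interested is welcome to try it.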
