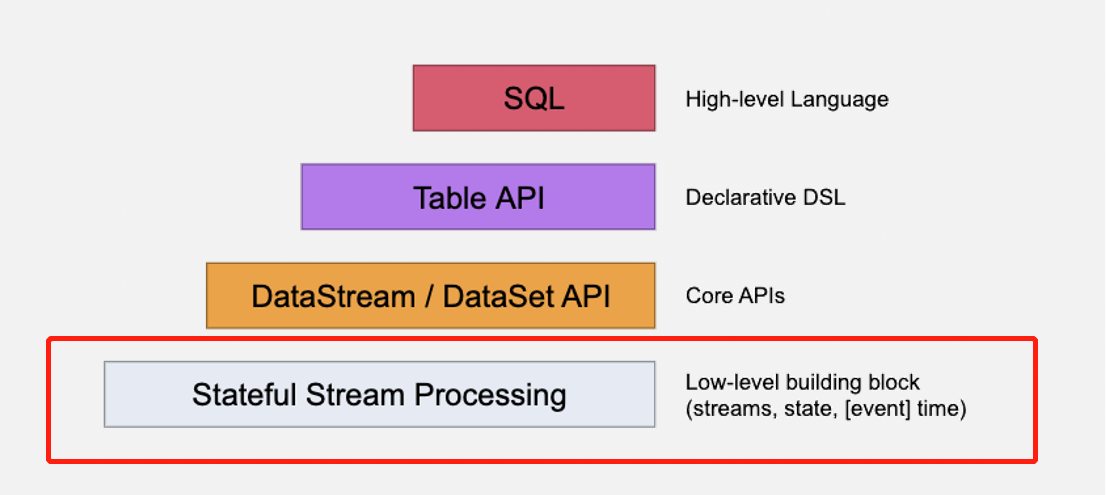

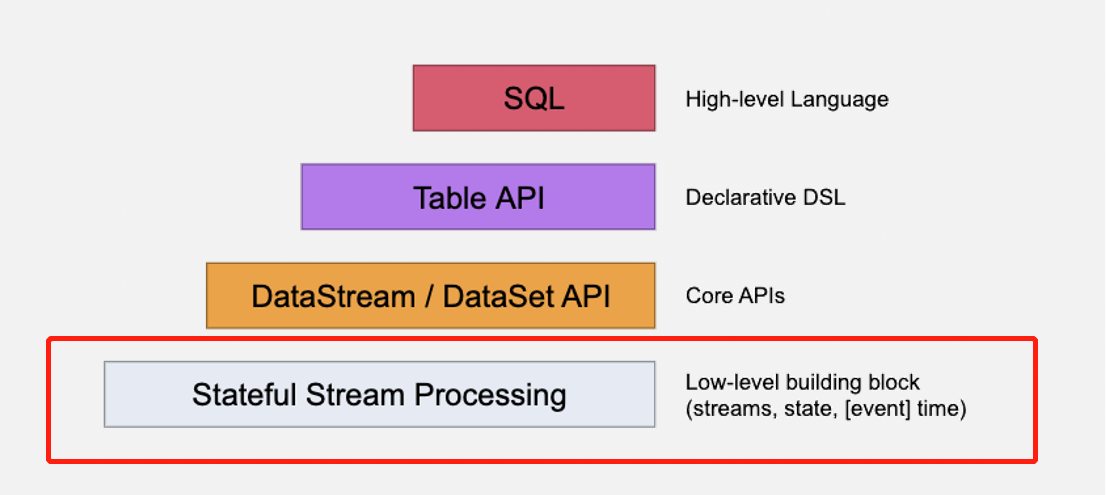

Flink process functions (low-level API): a window process function can read the window's start and end times

1. process

```scala
package com.wt.flink.core

import org.apache.flink.configuration.Configuration
import org.apache.flink.streaming.api.functions.ProcessFunction
import org.apache.flink.streaming.api.scala._
import org.apache.flink.util.Collector

object Demo7Process {
  def main(args: Array[String]): Unit = {
    val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment
    env.setParallelism(1)

    val linesDS: DataStream[String] = env.socketTextStream("master", 8888)

    /**
     * process: handles the stream one record at a time and may emit zero, one,
     * or many records per input, much like flatMap.
     * process can therefore be used in place of map, filter, and flatMap.
     */
    // Use process directly on a DataStream
    val procesDS: DataStream[(String, Int)] = linesDS
      .process(new ProcessFunction[String, (String, Int)] {

        /**
         * open and close can also be overridden; put initialization code in open.
         */
        override def open(parameters: Configuration): Unit = super.open(parameters)

        override def close(): Unit = super.close()

        /**
         * processElement: the records of the stream are passed to processElement one at a time.
         * processElement may emit several records, much like flatMap.
         *
         * @param value : one line of input
         * @param ctx   : context object; gives access to Flink's time information
         * @param out   : used to send records downstream
         */
        override def processElement(value: String,
                                    ctx: ProcessFunction[String, (String, Int)]#Context,
                                    out: Collector[(String, Int)]): Unit = {
          // Take the fifth comma-separated field (index 4);
          // this assumes every input line has at least five fields
          val clazz: String = value.split(",")(4)

          // Send the record downstream
          out.collect((clazz, 1))
        }
      })

    procesDS.print()

    env.execute()
  }
}
```
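The claim that process can stand in for map, filter, and flatMap can be illustrated without Flink. The sketch below is a plain-Scala model, not Flink code: `BufferCollector` and `runProcess` are hypothetical stand-ins for Flink's `Collector` and for the runtime that calls `processElement` once per record.

```scala
import scala.collection.mutable.ListBuffer

object ProcessAsFlatMapSketch {
  // Hypothetical stand-in for Flink's Collector: it only appends to a buffer.
  final class BufferCollector[T] {
    val buffer: ListBuffer[T] = ListBuffer.empty[T]
    def collect(record: T): Unit = buffer.append(record)
  }

  // Calls processElement once per input and returns everything that was collected.
  def runProcess[I, O](inputs: Seq[I])(processElement: (I, BufferCollector[O]) => Unit): List[O] = {
    val out = new BufferCollector[O]
    inputs.foreach(in => processElement(in, out))
    out.buffer.toList
  }

  val lines: Seq[String] = Seq("a,b", "", "c")

  // map: exactly one output per input
  val lengths: List[Int] =
    runProcess[String, Int](lines)((line, out) => out.collect(line.length))

  // filter: zero or one output per input
  val nonEmpty: List[String] =
    runProcess[String, String](lines)((line, out) => if (line.nonEmpty) out.collect(line))

  // flatMap: any number of outputs per input
  val words: List[String] =
    runProcess[String, String](lines) { (line, out) =>
      line.split(",").filter(_.nonEmpty).foreach(w => out.collect(w))
    }
}
```

The only difference between the three cases is how many times `collect` is called per input, which is why a single process function can cover all of them.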

2. KeyByProcess

```scala
package com.wt.flink.core

import org.apache.flink.streaming.api.functions.KeyedProcessFunction
import org.apache.flink.streaming.api.scala._
import org.apache.flink.util.Collector

import scala.collection.mutable

object Demo8KeyByPrecess {
  def main(args: Array[String]): Unit = {
    val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment
    env.setParallelism(1)

    val linesDS: DataStream[String] = env.socketTextStream("master", 8888)

    val wordsDS: DataStream[String] = linesDS.flatMap(_.split(","))

    val kvDS: DataStream[(String, Int)] = wordsDS.map((_, 1))

    val keyByDS: KeyedStream[(String, Int), String] = kvDS.keyBy(_._1)

    /**
     * Use process after keyBy.
     * keyBy: routes records with the same key to the same task.
     *
     * The function type is KeyedProcessFunction.
     */
    val countDS: DataStream[(String, Int)] = keyByDS
      .process(new KeyedProcessFunction[String, (String, Int), (String, Int)] {

        // A plain field is a single variable shared by every key handled by
        // the same parallel instance, so one counter would mix all words together:
        // var count: Int = 0

        // Holds the count of each word.
        // Note: this map is an ordinary heap object, not Flink managed state,
        // so it is not checkpointed and is lost if the job fails.
        val countMap: mutable.Map[String, Int] = new mutable.HashMap[String, Int]()

        /**
         * Records are passed to processElement one at a time;
         * processElement may emit several records.
         *
         * @param value : one input record
         * @param ctx   : context object
         * @param out   : used to send records downstream
         */
        override def processElement(value: (String, Int),
                                    ctx: KeyedProcessFunction[String, (String, Int), (String, Int)]#Context,
                                    out: Collector[(String, Int)]): Unit = {
          // Get the current key
          val key: String = ctx.getCurrentKey

          // Look up the word's count in the map; default to 0 if it is absent
          var count: Int = countMap.getOrElse(key, 0)

          // Increment the count
          count += 1

          // Update the word's count in the map
          countMap.put(key, count)

          // Send the record downstream (explicit tuple instead of relying on argument auto-tupling)
          out.collect((key, count))
        }
      })

    countDS.print()

    env.execute()
  }
}
```
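The commented-out `var count` in the example hints at why a per-key map is needed: one parallel instance handles many keys, so a single field is shared by all of them. The following is a minimal plain-Scala sketch of that difference, not Flink code; the input sequence and both function names are hypothetical.

```scala
import scala.collection.mutable

object PerKeyCountSketch {
  // Hypothetical input: the words reaching one parallel instance, in arrival order.
  val words: Seq[String] = Seq("java", "flink", "java", "java", "flink")

  // One shared variable: every key increments the same counter.
  def countWithSharedVar(input: Seq[String]): Seq[(String, Int)] = {
    var count = 0
    input.map { word =>
      count += 1
      (word, count)
    }
  }

  // One map entry per key, mirroring countMap in Demo8KeyByPrecess.
  def countPerKey(input: Seq[String]): Seq[(String, Int)] = {
    val countMap = mutable.HashMap.empty[String, Int]
    input.map { word =>
      val count = countMap.getOrElse(word, 0) + 1
      countMap.put(word, count)
      (word, count)
    }
  }
}
```

With the shared variable the last record comes out as `("flink", 5)`, while the per-key map yields `("flink", 2)`, which is the count the word-count job actually wants.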

3. WindowProcess

```scala
package com.wt.flink.core

import org.apache.flink.streaming.api.scala._
import org.apache.flink.streaming.api.scala.function.ProcessWindowFunction
import org.apache.flink.streaming.api.windowing.assigners.TumblingProcessingTimeWindows
import org.apache.flink.streaming.api.windowing.time.Time
import org.apache.flink.streaming.api.windowing.windows.TimeWindow
import org.apache.flink.util.Collector

object Demo9WindowProcess {
  def main(args: Array[String]): Unit = {
    val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment
    env.setParallelism(1)

    val linesDS: DataStream[String] = env.socketTextStream("master", 8888)

    val wordsDS: DataStream[String] = linesDS.flatMap(_.split(','))

    // Group by word
    val keyByDS: KeyedStream[String, String] = wordsDS.keyBy(word => word)

    // Count words in 5-second tumbling processing-time windows
    val windowDS: WindowedStream[String, String, TimeWindow] = keyByDS
      .window(TumblingProcessingTimeWindows.of(Time.seconds(5)))

    /**
     * Use process after windowing: the function receives the data of one window at a time.
     */
    val countDS: DataStream[(String, Long, Long, Int)] = windowDS.process(
      new ProcessWindowFunction[String, (String, Long, Long, Int), String, TimeWindow] {

        /**
         * process: called once per key and window.
         *
         * @param key      : the key
         * @param context  : context object; gives access to the window's start and end times
         * @param elements : all records in the window
         * @param out      : used to send records downstream
         */
        override def process(key: String,
                             context: Context,
                             elements: Iterable[String],
                             out: Collector[(String, Long, Long, Int)]): Unit = {
          // Count the words
          val count: Int = elements.size

          // Get the window's start and end times (epoch milliseconds)
          val window: TimeWindow = context.window
          val start: Long = window.getStart
          val end: Long = window.getEnd

          // Send the result downstream (explicit tuple instead of relying on argument auto-tupling)
          out.collect((key, start, end, count))
        }
      }
    )

    countDS.print()

    env.execute()
  }
}
```
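The `start` and `end` values printed by the job follow from simple arithmetic: tumbling windows with no offset are aligned to the epoch, so a window's start is the timestamp rounded down to a multiple of the window size, and its end is exclusive. A minimal sketch of that calculation in plain Scala, assuming non-negative timestamps and no window offset (the object and function names are hypothetical):

```scala
object TumblingWindowSketch {
  // Start of the tumbling window that contains timestampMs.
  def windowStart(timestampMs: Long, sizeMs: Long): Long =
    timestampMs - (timestampMs % sizeMs)

  // (start, end) of that window; end is exclusive.
  def windowBounds(timestampMs: Long, sizeMs: Long): (Long, Long) = {
    val start = windowStart(timestampMs, sizeMs)
    (start, start + sizeMs)
  }
}
```

For example, `TumblingWindowSketch.windowBounds(12345L, 5000L)` gives `(10000L, 15000L)`: a record at 12.345 s falls into the 5-second window covering 10 s up to, but not including, 15 s.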

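Because a window process function can read the window's time range, we can combine it with the earlier car-traffic exercise and output, for each road checkpoint, the traffic count together with the start and end time of the window it was counted in.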

```scala
package com.wt.flink.core

import com.alibaba.fastjson.{JSON, JSONObject}
import org.apache.flink.api.common.eventtime.{SerializableTimestampAssigner, WatermarkStrategy}
import org.apache.flink.api.common.serialization.SimpleStringSchema
import org.apache.flink.connector.kafka.source.KafkaSource
import org.apache.flink.connector.kafka.source.enumerator.initializer.OffsetsInitializer
import org.apache.flink.streaming.api.scala._
import org.apache.flink.streaming.api.scala.function.ProcessWindowFunction
import org.apache.flink.streaming.api.windowing.assigners.SlidingEventTimeWindows
import org.apache.flink.streaming.api.windowing.time.Time
import org.apache.flink.streaming.api.windowing.windows.TimeWindow
import org.apache.flink.util.Collector

import java.time.Duration

object Demo10CarTime {
  def main(args: Array[String]): Unit = {
    val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment
    env.setParallelism(1)

    /**
     * Read the data
     */
    val source: KafkaSource[String] = KafkaSource
      .builder[String]
      .setBootstrapServers("master:9092,node1:9092,node2:9092") // Kafka broker list
      .setTopics("cars") // topic to read
      .setGroupId("asdasdasd") // consumer group; a record is consumed only once within a group
      .setStartingOffsets(OffsetsInitializer.latest()) // start position: earliest reads all data, latest reads only new data
      .setValueOnlyDeserializer(new SimpleStringSchema()) // deserializer class
      .build

    // Use the Kafka source
    val carsDS: DataStream[String] = env.fromSource(source, WatermarkStrategy.noWatermarks(), "Kafka Source")

    /**
     * Parse the data
     */
    val cardAndTimeDS: DataStream[(Long, Long)] = carsDS.map(line => {
      // Convert the string to a JSON object
      val jsonObj: JSONObject = JSON.parseObject(line)

      // Read field values by field name
      // Checkpoint id
      val card: Long = jsonObj.getLong("card")

      // Event time; Flink expects event timestamps in milliseconds,
      // so the value (in seconds) is multiplied by 1000
      val time: Long = jsonObj.getLong("time") * 1000

      (card, time)
    })

    /**
     * Assign watermarks and the timestamp field
     */
    val assDS: DataStream[(Long, Long)] = cardAndTimeDS.assignTimestampsAndWatermarks(
      WatermarkStrategy
        // Watermark strategy: the watermark trails the largest timestamp seen by 5 seconds,
        // which tolerates events arriving up to 5 seconds out of order.
        // The explicit type parameter keeps Scala from inferring Nothing here.
        .forBoundedOutOfOrderness[(Long, Long)](Duration.ofSeconds(5))
        // Set the timestamp field
        .withTimestampAssigner(new SerializableTimestampAssigner[(Long, Long)] {
          override def extractTimestamp(element: (Long, Long), recordTimestamp: Long): Long = {
            // The timestamp field
            element._2
          }
        })
    )

    /**
     * Group by checkpoint
     */
    val kvDS: DataStream[(Long, Int)] = assDS.map(kv => (kv._1, 1))

    // Group by checkpoint
    val keyBYDS: KeyedStream[(Long, Int), Long] = kvDS.keyBy(_._1)

    // Open a sliding event-time window: 15 minutes long, sliding every 4 minutes
    val windowDS: WindowedStream[(Long, Int), Long, TimeWindow] = keyBYDS
      .window(SlidingEventTimeWindows.of(Time.minutes(15), Time.minutes(4)))

    val cardFlowDS: DataStream[(Long, Long, Long, Int)] = windowDS
      .process(new ProcessWindowFunction[(Long, Int), (Long, Long, Long, Int), Long, TimeWindow] {

        /**
         * Called once per key and window.
         *
         * @param key      : the checkpoint
         * @param context  : context object
         * @param elements : all records in the window
         * @param out      : used to send records downstream
         */
        override def process(key: Long,
                             context: Context,
                             elements: Iterable[(Long, Int)],
                             out: Collector[(Long, Long, Long, Int)]): Unit = {
          // Traffic count
          val flow: Int = elements.size

          // Get the window's start and end times (epoch milliseconds)
          val window: TimeWindow = context.window
          val start: Long = window.getStart
          val end: Long = window.getEnd

          // Send the record downstream
          out.collect((key, start, end, flow))
        }
      })

    cardFlowDS.print()

    env.execute()
  }
}
```
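Two pieces of arithmetic sit behind this job: how far the watermark trails the data, and which sliding windows a single record lands in. The sketch below models both in plain Scala rather than calling Flink. The object and function names are hypothetical, and it assumes no window offset and a timestamp large enough that no window start is negative.

```scala
object SlidingWindowSketch {
  // Watermark under bounded out-of-orderness: the largest timestamp seen so far,
  // minus the allowed delay, minus 1 ms.
  def watermark(maxTimestampMs: Long, maxOutOfOrdernessMs: Long): Long =
    maxTimestampMs - maxOutOfOrdernessMs - 1

  // All (start, end) sliding windows that contain timestampMs, newest first.
  // Window ends are exclusive.
  def slidingWindows(timestampMs: Long, sizeMs: Long, slideMs: Long): List[(Long, Long)] = {
    // Start of the most recent window: the timestamp rounded down to a multiple of the slide
    val lastStart = timestampMs - (timestampMs % slideMs)
    Iterator.iterate(lastStart)(_ - slideMs)
      .takeWhile(start => start > timestampMs - sizeMs) // the window must still cover the timestamp
      .map(start => (start, start + sizeMs))
      .toList
  }
}
```

With a 15-minute window sliding every 4 minutes, a record belongs to three or four overlapping windows depending on where it falls relative to the slide boundaries, so each car is counted in several output rows for its checkpoint.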
