1. Add the Maven dependency

<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka-clients</artifactId>
    <version>1.0.0</version>
</dependency>

2. Three examples

Example 1: Produce data

import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerRecord;

import java.util.Properties;

public class Demo1KafkaProducer {

    public static void main(String[] args) {
        Properties properties = new Properties();
        // Broker list
        properties.setProperty("bootstrap.servers", "master:9092,node1:9092,node2:9092");
        // Serializers for the key and the value
        properties.setProperty("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        properties.setProperty("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        // Create the producer
        KafkaProducer<String, String> producer = new KafkaProducer<>(properties);
        // Send one record (no key, value "java") to the topic "words"
        producer.send(new ProducerRecord<>("words", "java"));
        // send() is asynchronous; flush() blocks until buffered records have been sent
        producer.flush();
        // Close the connection
        producer.close();
    }
}

Example 2: Read a file and produce its lines to Kafka

import java.io.BufferedReader;

import java.io.FileReader;

import java.util.Properties;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerRecord;

public class Demo2FileToKaFka {

public static void main(String[] args) throws Exception{

Properties properties = new Properties();

//指定broker列表

properties.setProperty("bootstrap.servers", "master:9092,node2:9092,node2:9092");

//指定key和value的数据格式

properties.setProperty("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");

properties.setProperty("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

//创建生产者

KafkaProducer<String, String> producer = new KafkaProducer<>(properties);

//读取文件

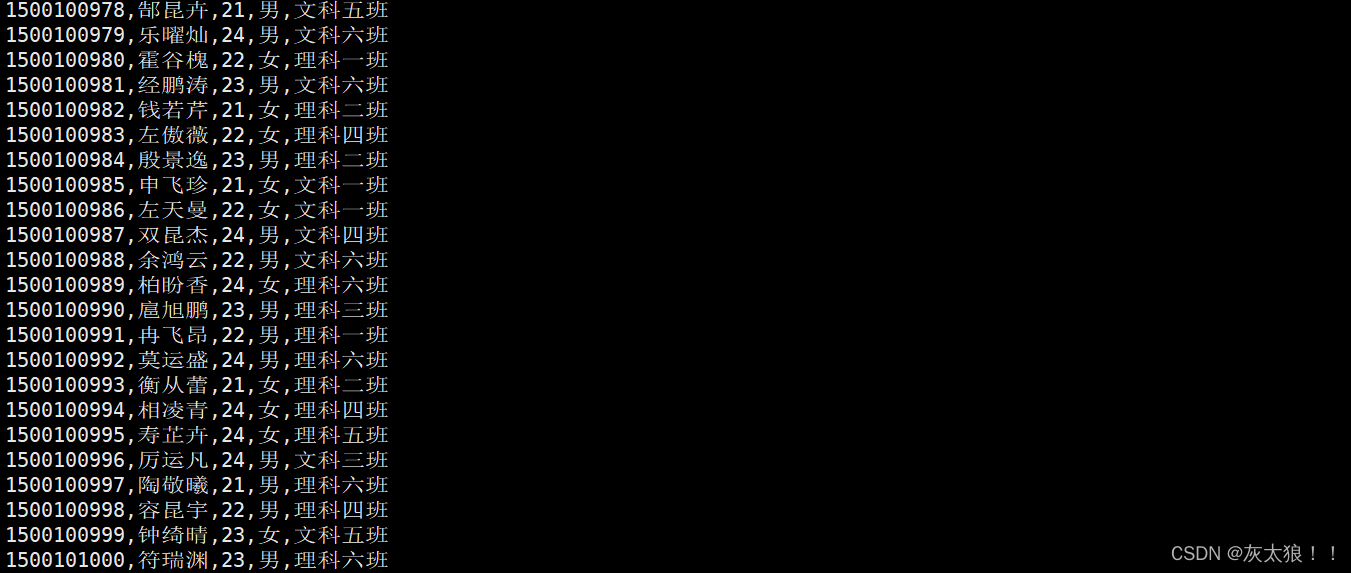

BufferedReader bs = new BufferedReader(new FileReader("flink/data/student.csv"));

String line;

while ((line=bs.readLine())!=null){

//生产数据 如果指定分区默认为轮循添加数据

producer.send(new ProducerRecord<>("students",line));

producer.flush();

}

//关闭连接

bs.close();

producer.close();

}

}创建控制台消费者消费数据

kafka-console-consumer.sh --bootstrap-server master:9092,node1:9092,node2:9092 --from-beginning --topic students
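Example 2 sends records with neither a key nor a partition, so the producer's default partitioner picks the partition. In kafka-clients 1.0.0 it does this round-robin. The hash rewrite that follows computes the partition itself from the class column instead. The standalone sketch below uses plain Java with no Kafka dependency to contrast the two strategies.

Some assumptions to keep in mind:
- `PartitionSketch`, `RoundRobin`, `partitionForHash` and `hashPartition` are hypothetical names, not kafka-clients APIs.
- Starting the counter at 0 is a simplification.
- Kafka's own default partitioner hashes keyed records with murmur2 rather than `String.hashCode()`.

The sketch also shows why `Math.abs(hashCode) % n` is unsafe: `Math.abs(Integer.MIN_VALUE)` is still negative.

```java
import java.util.concurrent.atomic.AtomicInteger;

/**
 * Hypothetical sketch of two partition-selection strategies.
 * Not the kafka-clients source code.
 */
public class PartitionSketch {

    /** Round-robin for key-less records (simplified: the counter starts at 0). */
    static final class RoundRobin {
        private final AtomicInteger counter = new AtomicInteger(0);

        int next(int numPartitions) {
            // Clearing the sign bit keeps the index non-negative after the counter overflows.
            return (counter.getAndIncrement() & Integer.MAX_VALUE) % numPartitions;
        }
    }

    /** Maps any int hash to a partition in [0, numPartitions). */
    static int partitionForHash(int hash, int numPartitions) {
        // floorMod is never negative for a positive divisor, unlike Math.abs(hash) % n,
        // which is negative when hash == Integer.MIN_VALUE.
        return Math.floorMod(hash, numPartitions);
    }

    /** Hash partitioning: records with equal keys always land in the same partition. */
    static int hashPartition(String key, int numPartitions) {
        return partitionForHash(key.hashCode(), numPartitions);
    }

    public static void main(String[] args) {
        int partitions = 3;
        RoundRobin rr = new RoundRobin();
        for (int i = 0; i < 4; i++) {
            System.out.println("key-less record " + i + " -> partition " + rr.next(partitions));
        }
        // Hypothetical class names standing in for the class column of student.csv.
        for (String clazz : new String[] {"class-A", "class-B", "class-A"}) {
            System.out.println(clazz + " -> partition " + hashPartition(clazz, partitions));
        }
        System.out.println("Math.abs pitfall: " + Math.abs(Integer.MIN_VALUE) % partitions);
        System.out.println("floorMod result:  " + partitionForHash(Integer.MIN_VALUE, partitions));
    }
}
```

Running `main` prints partitions 0, 1, 2, 0 for the key-less records and the same partition for both `class-A` lines. For the `Integer.MIN_VALUE` case it prints -2 with `Math.abs` and 1 with `floorMod`. Round-robin balances load but scatters related records. Hashing keeps all students of one class in one partition, at the cost of possible skew.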

Rewrite the example with hash partitioning on the class column:

import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerRecord;

import java.io.BufferedReader;
import java.io.FileReader;
import java.util.Properties;

public class Demo3FileToKafkaWithHash {

    public static void main(String[] args) throws Exception {
        Properties properties = new Properties();
        // Broker list
        properties.setProperty("bootstrap.servers", "master:9092,node1:9092,node2:9092");
        // Serializers for the key and the value
        properties.setProperty("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        properties.setProperty("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        // Create the producer
        KafkaProducer<String, String> producer = new KafkaProducer<>(properties);
        // Read the file
        BufferedReader bufferedReader = new BufferedReader(new FileReader("flink/data/student.csv"));
        String line;
        while ((line = bufferedReader.readLine()) != null) {
            // The 5th column holds the student's class
            String clazz = line.split(",")[4];
            // Hash partitioning: the same class always goes to the same partition.
            // floorMod keeps the result in [0, 3); Math.abs(hashCode) % 3 would be
            // negative when hashCode == Integer.MIN_VALUE
            int partition = Math.floorMod(clazz.hashCode(), 3);
            // The topic must exist with 3 partitions, for example:
            // kafka-topics.sh --create --zookeeper master:2181,node1:2181,node2:2181/kafka --replication-factor 2 --partitions 3 --topic students_hash_partition
            // Send to an explicit partition (the key is null)
            producer.send(new ProducerRecord<>("students_hash_partition", partition, null, line));
        }
        producer.flush();
        // Closing the BufferedReader also closes the underlying FileReader
        bufferedReader.close();
        producer.close();
    }
}

Example 3: Consume data from Kafka

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.apache.kafka.clients.consumer.ConsumerRecords;

import org.apache.kafka.clients.consumer.KafkaConsumer;

import java.util.ArrayList;

import java.util.Properties;

public class Demo4Consumer {

public static void main(String[] args) {

Properties properties = new Properties();

//指定kafka集群列表

properties.setProperty("bootstrap.servers", "master:9092,node2:9092,node2:9092");

//指定key和value的数据格式

properties.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

properties.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

/*

* earliest

* 当各分区下有已提交的offset时,从提交的offset开始消费;无提交的offset时,从头开始消费

* latest 默认

* 当各分区下有已提交的offset时,从提交的offset开始消费;无提交的offset时,消费新产认值生的该分区下的数据

* none

* topic各分区都存在已提交的offset时,从offset后开始消费;只要有一个分区不存在已提交的offset,则抛出异常

*

*/

properties.setProperty("auto.offset.reset", "earliest");

//指定消费者组,一条数据在一个组内只消费一次

properties.setProperty("group.id", "java_kafka_group1");

//创建消费者

KafkaConsumer<String, String> consumer = new KafkaConsumer<>(properties);

//订阅topic

ArrayList<String> topics = new ArrayList<>();

topics.add("hash_students");

consumer.subscribe(topics);

//死循环拉取数据,使数据全部拉取完毕

while (true) {

//拉取数据 默认只会拉取500条数据

ConsumerRecords<String, String> consumerRecords = consumer.poll(1000);

//解析数据

for (ConsumerRecord<String, String> consumerRecord : consumerRecords) {

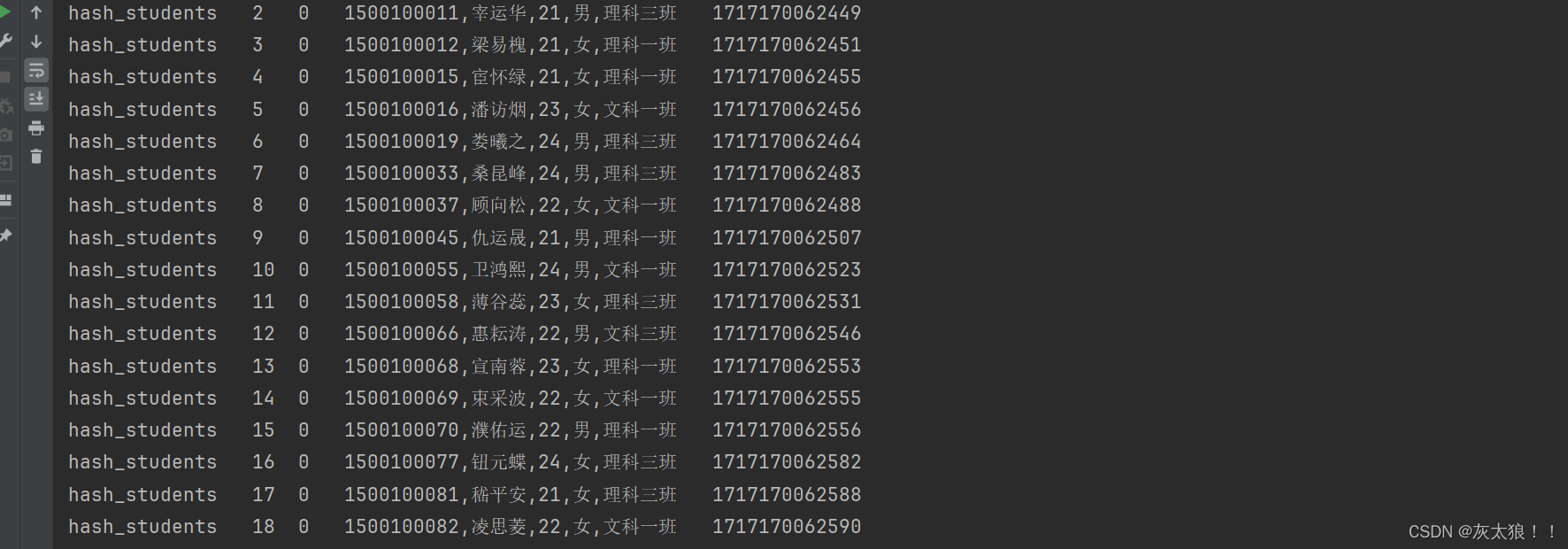

String topic = consumerRecord.topic(); //主题

long offset = consumerRecord.offset(); //偏移量

int partition = consumerRecord.partition(); //分区

String value = consumerRecord.value(); //数据

long timestamp = consumerRecord.timestamp(); //处理时间

System.out.println(topic + "\t" + offset + "\t" + partition + "\t" + value + "\t" + timestamp);

}

}

}

}
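The `auto.offset.reset` comment in the consumer can be condensed into a small decision function. The sketch below is plain Java, and `OffsetResetSketch`, `startOffset` and `Reset` are hypothetical names. It models one partition with an optional committed offset, a log-start offset and a log-end offset. It is not how kafka-clients implements the setting. It also ignores the second case in which Kafka applies the reset policy: a committed offset that no longer exists on the broker.

```java
import java.util.OptionalLong;

/**
 * Hypothetical sketch of the auto.offset.reset rules for a single partition.
 * Not the kafka-clients implementation.
 */
public class OffsetResetSketch {

    enum Reset { EARLIEST, LATEST, NONE }

    /**
     * Returns the offset a consumer group starts reading from.
     *
     * @param committed offset committed by the group, if any
     * @param logStart  first offset still stored in the partition
     * @param logEnd    offset the next produced record will receive
     * @param policy    value of auto.offset.reset
     */
    static long startOffset(OptionalLong committed, long logStart, long logEnd, Reset policy) {
        if (committed.isPresent()) {
            // With a committed offset, all three policies resume from it.
            return committed.getAsLong();
        }
        switch (policy) {
            case EARLIEST:
                return logStart; // no commit: read the partition from the beginning
            case LATEST:
                return logEnd;   // no commit: only records produced from now on
            default:
                // none: a partition without a committed offset is an error
                throw new IllegalStateException("no committed offset and auto.offset.reset=none");
        }
    }

    public static void main(String[] args) {
        OptionalLong noCommit = OptionalLong.empty();
        System.out.println(startOffset(OptionalLong.of(42), 0, 100, Reset.LATEST)); // 42
        System.out.println(startOffset(noCommit, 0, 100, Reset.EARLIEST));           // 0
        System.out.println(startOffset(noCommit, 0, 100, Reset.LATEST));             // 100
        try {
            startOffset(noCommit, 0, 100, Reset.NONE);
        } catch (IllegalStateException e) {
            System.out.println("none -> " + e.getMessage());
        }
    }
}
```

With `earliest` and a fresh `group.id`, the consumer in Example 3 therefore reads every record already in the topic. Because `enable.auto.commit` defaults to true, a restart with the same `group.id` resumes from the committed offsets instead.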
