
Part 1: Linear Regression Model

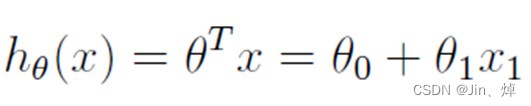

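Linear regression: fitting a curve to a set of data points is called regression; when that curve is a straight line, the method is linear regression. For a single feature, the estimated hypothesis function is $h_\theta(x) = \theta_0 + \theta_1 x$.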

1. Loss function: mean squared error

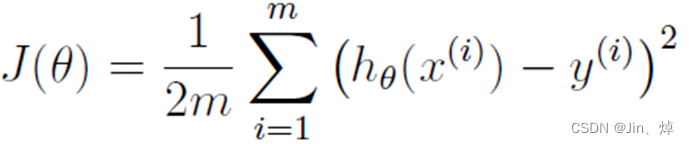
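As a reference sketch, the cost that computeCost.m evaluates later in this post can be written as follows. Here $m$ is the number of training examples and $h_\theta(x) = \theta_0 + \theta_1 x$; the notation is the standard one and is assumed, not confirmed, to match the figure.

```latex
\[
J(\theta) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta\!\left(x^{(i)}\right) - y^{(i)} \right)^2
\]
```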

2. Gradient descent: illustration of how the parameter θ is updated

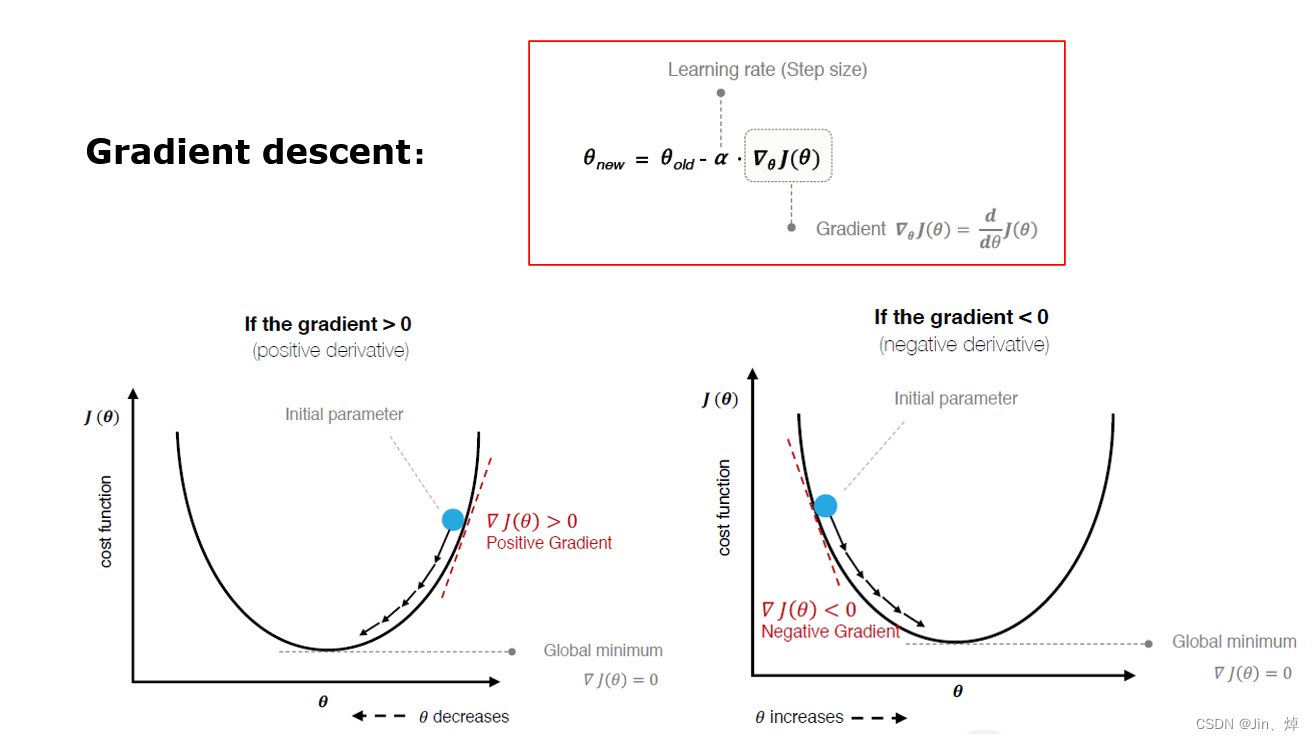
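Written out, a single gradient descent step for the squared-error cost looks like the sketch below. It assumes learning rate $\alpha$, $m$ training examples, the convention $x_0^{(i)} = 1$ for the intercept, and a simultaneous update of every $\theta_j$; gradientDescent.m in this post performs the same step in vectorized form.

```latex
\[
\theta_j := \theta_j - \alpha \, \frac{1}{m} \sum_{i=1}^{m}
\left( h_\theta\!\left(x^{(i)}\right) - y^{(i)} \right) x_j^{(i)},
\qquad j = 0, 1
\]
```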

3. Implementing linear regression with matrices
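A sketch of the matrix form, assuming $X$ is the $m \times 2$ design matrix whose first column is all ones, $y$ is the $m \times 1$ target vector, and $\theta$ is $2 \times 1$. These two expressions correspond term by term to the one-line implementations in computeCost.m and gradientDescent.m:

```latex
\[
J(\theta) = \frac{1}{2m} \, (X\theta - y)^{\top} (X\theta - y),
\qquad
\theta := \theta - \frac{\alpha}{m} \, X^{\top} (X\theta - y)
\]
```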

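1. Initialization and basic functions

(1) Run the program and perform initialization;

(2) Complete the function warmUpExercise() so that it returns a 5 x 5 identity matrix.

2. Data visualization

(1) Look up the MATLAB function load and learn its basic syntax for loading data;

(2) The provided code already uses load to read the data file ex1data1.txt into X and y;

(3) Complete the function plotData(X,y) and draw a scatter plot of the data.

3. Gradient descent

(1) Read up on gradient descent and understand its principle and steps;

(2) Complete the function computeCost(X,y,theta) and become familiar with its syntax;

(3) Complete the function gradientDescent(X, y, theta, alpha, iterations), learn its syntax, and call it.

4. Visualizing J

To better understand the cost function J(θ), use the code completed above to plot J over a grid of θ0 and θ1 values.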

warmUpExercise.m

function A = warmUpExercise()

% Syntax: function [output variables] = functionName(input variables)

%WARMUPEXERCISE Example function in octave

% A = WARMUPEXERCISE() is an example function that returns the 5x5 identity matrix

A = [];

% ============= YOUR CODE HERE ==============

% Instructions: Return the 5x5 identity matrix

% In octave, we return values by defining which variables

% represent the return values (at the top of the file)

% and then set them accordingly.

A = eye(5); % return the 5x5 identity matrix

% ===========================================

end

plotData.m

function plotData(x, y)

%PLOTDATA Plots the data points x and y into a new figure

% PLOTDATA(x,y) plots the data points and gives the figure axes labels of

% population and profit.

% ====================== YOUR CODE HERE ======================

% Instructions: Plot the training data into a figure using the

% "figure" and "plot" commands. Set the axes labels using

% the "xlabel" and "ylabel" commands. Assume the

% population and revenue data have been passed in

% as the x and y arguments of this function.

%

% Hint: You can use the 'rx' option with plot to have the markers

% appear as red crosses. Furthermore, you can make the

% markers larger by using plot(..., 'rx', 'MarkerSize', 10);

% The sample x and y below can be uncommented to test this function

%x=[1;2;3;4]

%y=[2;4;8;5]

figure; % open a new figure window

plot(x, y, 'bx', 'MarkerSize', 10) % blue 'x' markers with no connecting line, marker size 10

xlabel('Population of City in 10,000s')

ylabel('Profit in $ 10,000s')

% ============================================================

end

gradientDescent.m

function [theta, J_history] = gradientDescent(X, y, theta, alpha, num_iters)

%GRADIENTDESCENT Performs gradient descent to learn theta

% theta = GRADIENTDESCENT(X, y, theta, alpha, num_iters) updates theta by

% taking num_iters gradient steps with learning rate alpha

% Initialize some useful values

m = length(y); % number of training examples

J_history = zeros(num_iters, 1); % cost J recorded at each iteration

for iter = 1:num_iters

% ====================== YOUR CODE HERE ======================

% Instructions: Perform a single gradient step on the parameter vector

% theta.

%

% Hint: While debugging, it can be useful to print out the values

% of the cost function (computeCost) and gradient here.

%

% theta 2*1

% X 97*2

% X' 2*97

% X*theta-y 97*1

theta=theta-alpha*X'*(X*theta-y)/m;

% ============================================================

% Save the cost J in every iteration

J_history(iter) = computeCost(X, y, theta);

end

end

computeCost.m

function J = computeCost(X, y, theta)

%COMPUTECOST Compute cost for linear regression

% J = COMPUTECOST(X, y, theta) computes the cost of using theta as the

% parameter for linear regression to fit the data points in X and y

% Initialize some useful values

m = length(y); % number of training examples

% You need to return the following variables correctly

J = 0;

% ====================== YOUR CODE HERE ======================

% Instructions: Compute the cost of a particular choice of theta

% You should set J to the cost.

% X 97*2

% theta 2*1

% y 97*1

J = sum((X * theta-y).^2) / (2*m);

% =========================================================================

end
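The two functions above can be checked on a tiny dataset without the exercise files. The sketch below uses made-up data (y = x exactly, not taken from ex1data1.txt) and an anonymous function that repeats the expression from computeCost.m; the learning rate and iteration count are illustrative choices, not the values used in ex1.m.

```octave
% Toy data (hypothetical): three points lying exactly on y = x
X = [1 1; 1 2; 1 3];   % first column of ones is the intercept term
y = [1; 2; 3];
m = length(y);

% Same expression as in computeCost.m, written as an anonymous function
cost = @(theta) sum((X * theta - y) .^ 2) / (2 * m);

J_zero = cost([0; 0]);   % residuals are -1, -2, -3, so J = (1 + 4 + 9) / 6
J_fit  = cost([0; 1]);   % perfect fit, so the cost is 0

% Repeated vectorized gradient steps, same update as in gradientDescent.m
theta = zeros(2, 1);
alpha = 0.1;             % illustrative learning rate for this toy problem
for iter = 1:2000
    theta = theta - alpha * X' * (X * theta - y) / m;
end

fprintf('J at [0;0] = %f, J at [0;1] = %f\n', J_zero, J_fit);
fprintf('theta after descent: %f %f\n', theta(1), theta(2));
```

Because the data are noise-free, the descent should approach theta = [0; 1], which makes a convenient sanity check before running on the real data.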

ex1.m

%% Machine Learning Online Class - Exercise 1: Linear Regression

% Instructions

% ------------

%

% This file contains code that helps you get started on the

% linear regression exercise. You will need to complete the following functions

% in this exercise:

%

% warmUpExercise.m

% plotData.m

% gradientDescent.m

% computeCost.m

%

% For this exercise, you will not need to change any code in this file,

% or any other files other than those mentioned above.

%

% x refers to the population size in 10,000s

% y refers to the profit in $10,000s

%

%% Initialization

clear ; close all; clc

%% ==================== Part 1: Basic Function ====================

% Complete warmUpExercise.m

fprintf('Running warmUpExercise ... \n');

fprintf('5x5 Identity Matrix: \n');

warmUpExercise()

fprintf('Program paused. Press enter to continue.\n');

pause;

%% ======================= Part 2: Plotting =======================

fprintf('Plotting Data ...\n')

data = load('ex1data1.txt');

X = data(:, 1); y = data(:, 2);

m = length(y); % number of training examples

% Plot Data

% Note: You have to complete the code in plotData.m

plotData(X, y);

fprintf('Program paused. Press enter to continue.\n');

pause;

%% =================== Part 3: Gradient descent ===================

fprintf('Running Gradient Descent ...\n')

X = [ones(m, 1), data(:,1)]; % Add a column of ones to x

theta = zeros(2, 1); % initialize fitting parameters

% Some gradient descent settings

iterations = 1500;

alpha = 0.01;

% compute and display initial cost

computeCost(X, y, theta)

% run gradient descent

theta = gradientDescent(X, y, theta, alpha, iterations);

% print theta to screen

fprintf('Theta found by gradient descent: ');

fprintf('%f %f \n', theta(1), theta(2));

% Plot the linear fit

hold on; % keep previous plot visible

plot(X(:,2), X*theta, '-')

legend('Training data', 'Linear regression')

hold off % don't overlay any more plots on this figure

% Predict values for population sizes of 35,000 and 70,000

predict1 = [1, 3.5] *theta;

fprintf('For population = 35,000, we predict a profit of %f\n',...

predict1*10000);

predict2 = [1, 7] * theta;

fprintf('For population = 70,000, we predict a profit of %f\n',...

predict2*10000);

fprintf('Program paused. Press enter to continue.\n');

pause;

%% ============= Part 4: Visualizing J(theta_0, theta_1) =============

fprintf('Visualizing J(theta_0, theta_1) ...\n')

% Grid over which we will calculate J

theta0_vals = linspace(-10, 10, 100);

theta1_vals = linspace(-1, 4, 100);

% initialize J_vals to a matrix of 0's

J_vals = zeros(length(theta0_vals), length(theta1_vals));

% Fill out J_vals

for i = 1:length(theta0_vals)

for j = 1:length(theta1_vals)

t = [theta0_vals(i); theta1_vals(j)];

J_vals(i,j) = computeCost(X, y, t);

end

end

% Because of the way meshgrids work in the surf command, we need to

% transpose J_vals before calling surf, or else the axes will be flipped

J_vals = J_vals';

% Surface plot

figure;

surf(theta0_vals, theta1_vals, J_vals)

xlabel('\theta_0'); ylabel('\theta_1');

% Contour plot

figure;

% Plot J_vals as 20 contours spaced logarithmically between 0.01 and 1000

contour(theta0_vals, theta1_vals, J_vals, logspace(-2, 3, 20))

xlabel('\theta_0'); ylabel('\theta_1');

hold on;

plot(theta(1), theta(2), 'rx', 'MarkerSize', 10, 'LineWidth', 2);

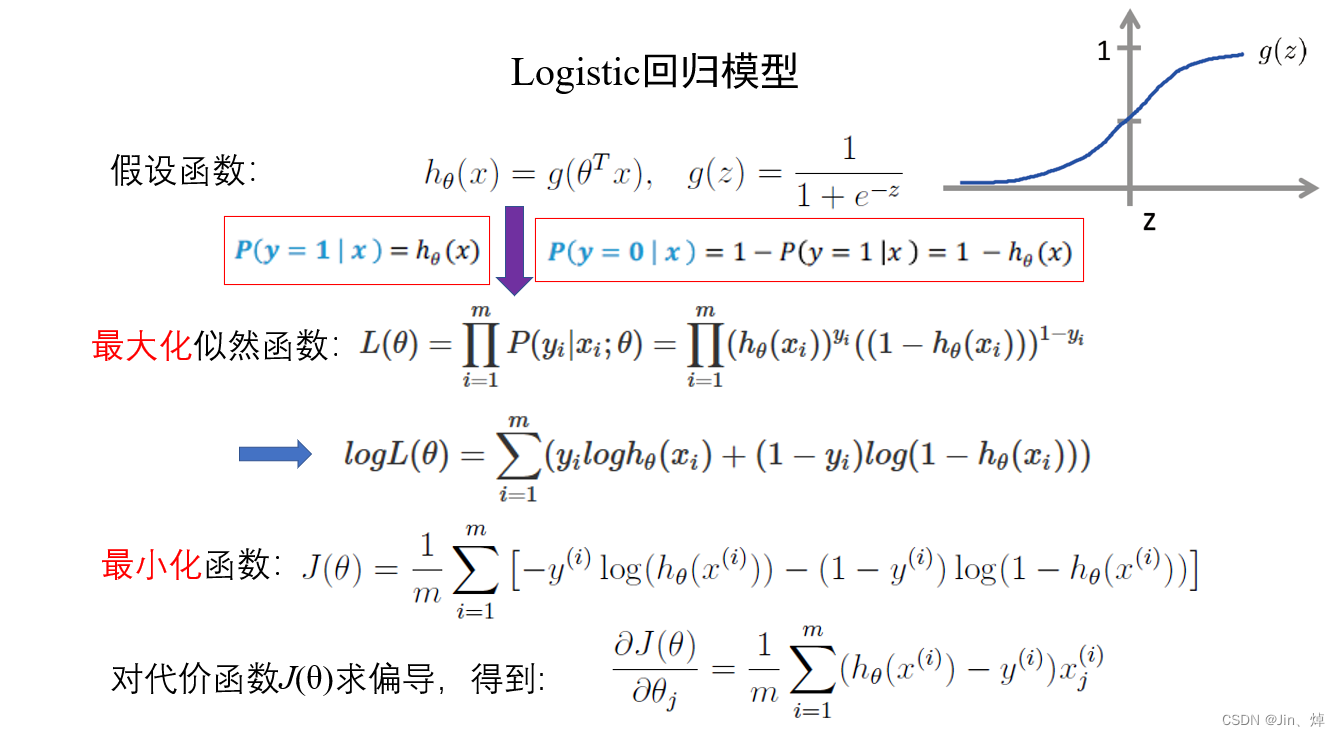

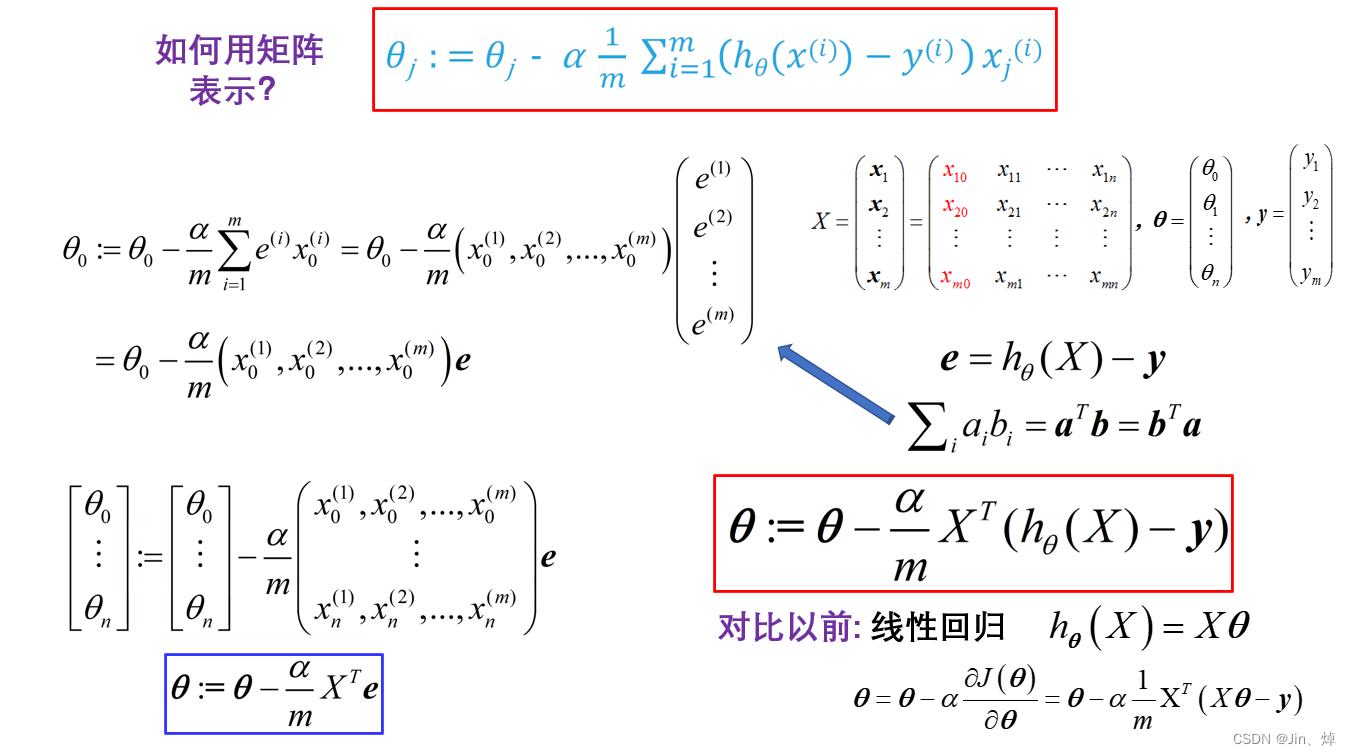

Part 2: Logistic Regression Model

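1. Initialization

Run the program and perform initialization.

2. Data visualization

(1) Look up the MATLAB function load and learn its basic syntax for importing data;

(2) The provided code already uses load to read the data file ex2data1.txt into X and y; the file contains the scores for two exams and whether the student was admitted;

(3) Complete the function plotData(X,y) and draw a scatter plot of the data.

3. Computing the cost and gradient

(1) Consult Section 3.3 of the textbook to understand how the sigmoid function is constructed, and complete the function sigmoid() in the code;

(2) Understand and compute the gradient and the cost function J, and complete the function costFunction();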
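For reference, the quantities computed in this step can be sketched in standard notation as follows, assuming $m$ training examples, labels $y^{(i)} \in \{0, 1\}$, and $x_0^{(i)} = 1$ for the intercept. They agree term by term with the expressions in sigmoid.m and costFunction.m in this post.

```latex
\[
g(z) = \frac{1}{1 + e^{-z}}, \qquad h_\theta(x) = g\!\left(\theta^{\top} x\right)
\]
\[
J(\theta) = -\frac{1}{m} \sum_{i=1}^{m}
\left[ y^{(i)} \log h_\theta\!\left(x^{(i)}\right)
     + \left(1 - y^{(i)}\right) \log\!\left(1 - h_\theta\!\left(x^{(i)}\right)\right) \right]
\]
\[
\frac{\partial J}{\partial \theta_j} = \frac{1}{m} \sum_{i=1}^{m}
\left( h_\theta\!\left(x^{(i)}\right) - y^{(i)} \right) x_j^{(i)}
\]
```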

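4. Optimization with fminunc

(1) Look up the MATLAB function optimset and understand the meaning of its inputs and outputs;

(2) Look up the MATLAB function fminunc, learn its basic syntax, and understand what it does;

(3) Use fminunc to find the optimal parameters θ for the logistic regression cost function;

(4) Understand the function plotDecisionBoundary() and plot the optimized logistic regression model.

5. Prediction

(1) For a given data point (e.g. one student's scores: [1 45 85]), make a prediction using the optimized parameters θ;

(2) Complete the function predict(), run it on the loaded data, and compute the prediction accuracy.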

sigmoid.m

function g = sigmoid(z)

%SIGMOID Compute sigmoid function

% g = SIGMOID(z) computes the sigmoid of z.

% You need to return the following variables correctly

g = zeros(size(z));

% ====================== YOUR CODE HERE ======================

% Instructions: Compute the sigmoid of each value of z (z can be a matrix,

% vector or scalar).

g = 1 ./ ( 1 + exp(-z) ) ;

% =============================================================

end

predict.m

function p = predict(theta, X)

%PREDICT Predict whether the label is 0 or 1 using learned logistic

%regression parameters theta

% p = PREDICT(theta, X) computes the predictions for X using a

% threshold at 0.5 (i.e., if sigmoid(theta'*x) >= 0.5, predict 1)

m = size(X, 1); % Number of training examples

% You need to return the following variables correctly

p = zeros(m, 1);

% ====================== YOUR CODE HERE ======================

% Instructions: Complete the following code to make predictions using

% your learned logistic regression parameters.

% You should set p to a vector of 0's and 1's

%

p = double(sigmoid(X * theta) >= 0.5); % 1 where the predicted probability is at least 0.5, otherwise 0

% =========================================================================

end
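A small sketch of the thresholding rule used in predict.m. The parameters and inputs here are hypothetical and chosen only for illustration; the anonymous function repeats the expression from sigmoid.m. Since sigmoid(0) is 0.5 and the function is increasing, thresholding the probability at 0.5 is equivalent to checking the sign of X * theta.

```octave
% Element-wise sigmoid, same expression as in sigmoid.m
sigmoid = @(z) 1 ./ (1 + exp(-z));

% Hypothetical parameters and inputs, for illustration only
theta = [-1; 2];
X = [1 0; 1 0.5; 1 3];                % intercept column plus one feature

p_prob = sigmoid(X * theta) >= 0.5;   % threshold on the predicted probability
p_lin  = (X * theta) >= 0;            % equivalent threshold on the linear score

disp([p_prob, p_lin]);                % the two columns should agree
```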

plotDecisionBoundary.m

function plotDecisionBoundary(theta, X, y)

%PLOTDECISIONBOUNDARY Plots the data points X and y into a new figure with

%the decision boundary defined by theta

% PLOTDECISIONBOUNDARY(theta, X,y) plots the data points with + for the

% positive examples and o for the negative examples. X is assumed to be

% either a

% 1) Mx3 matrix, where the first column is an all-ones column for the

% intercept.

% 2) MxN, N>3 matrix, where the first column is all-ones

% Plot Data

plotData(X(:,2:3), y);

hold on

if size(X, 2) <= 3 % size(X, 2) is the number of columns of X

% Only need 2 points to define a line, so choose two endpoints

plot_x = [min(X(:,2))-2, max(X(:,2))+2];

% Calculate the decision boundary line

plot_y = (-1./theta(3)).*(theta(2).*plot_x + theta(1));

% Plot, and adjust axes for better viewing

plot(plot_x, plot_y,'r')

% Legend, specific for the exercise

legend('Admitted', 'Not admitted', 'Decision Boundary')

axis([30, 100, 30, 100])

else

% Here is the grid range

u = linspace(-1, 1.5, 50);

v = linspace(-1, 1.5, 50);

z = zeros(length(u), length(v));

% Evaluate z = theta*x over the grid

for i = 1:length(u)

for j = 1:length(v)

z(i,j) = mapFeature(u(i), v(j))*theta;

end

end

z = z'; % important to transpose z before calling contour

% Plot z = 0

% Notice you need to specify the range [0, 0]

contour(u, v, z, [0, 0], 'LineWidth', 2)

end

hold off

end
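The expression for plot_y in the linear case follows from setting the linear score to zero, which is where the predicted probability equals 0.5. A short derivation, using the same 1-based indices as the code (theta(1) is the intercept, theta(2) and theta(3) multiply the two exam scores $x_1$ and $x_2$) and assuming $\theta_3 \neq 0$:

```latex
\[
\theta_1 + \theta_2 x_1 + \theta_3 x_2 = 0
\quad\Longrightarrow\quad
x_2 = -\frac{1}{\theta_3} \left( \theta_2 x_1 + \theta_1 \right)
\]
```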

plotData.m

function plotData(X, y)

%PLOTDATA Plots the data points X and y into a new figure

% PLOTDATA(x,y) plots the data points with + for the positive examples

% and o for the negative examples. X is assumed to be a Mx2 matrix.

% Create New Figure

figure; hold on;

% ====================== YOUR CODE HERE ======================

% Instructions: Plot the positive and negative examples on a

% 2D plot, using the option 'k+' for the positive

% examples and 'ko' for the negative examples.

%

% k = find(X) returns a vector of the linear indices of the nonzero elements of X.

x1 = find(y == 1);

x2 = find(y == 0);

plot(X(x1,1),X(x1,2),'k+','LineWidth', 2, 'MarkerSize', 7);

plot(X(x2,1),X(x2,2),'ko','MarkerFaceColor', 'y','MarkerSize', 7);

% =========================================================================

hold off;

end

costFunction.m

function [J, grad] = costFunction(theta, X, y)

%COSTFUNCTION Compute cost and gradient for logistic regression

% J = COSTFUNCTION(theta, X, y) computes the cost of using theta as the

% parameter for logistic regression and the gradient of the cost

% w.r.t. the parameters.

% Initialize some useful values

m = length(y); % number of training examples

% You need to return the following variables correctly

J = 0;

grad = zeros(size(theta));

% ====================== YOUR CODE HERE ======================

% Instructions: Compute the cost of a particular choice of theta.

% You should set J to the cost.

% Compute the partial derivatives and set grad to the partial

% derivatives of the cost w.r.t. each parameter in theta

%

% Note: grad should have the same dimensions as theta

%

% y 100*1

% X 100*3

% theta 3*1

% X' 3*100

% X*theta 100*1

% grad 3*1

J= -1 * sum( y .* log( sigmoid(X*theta) ) + (1 - y ) .* log( (1 - sigmoid(X*theta)) ) ) / m ;

grad = ( X' * (sigmoid(X*theta) - y ) )/ m ;

% =============================================================

end

ex2.m

%% Machine Learning Online Class - Exercise 2: Logistic Regression

%

% Instructions

% ------------

%

% This file contains code that helps you get started on the logistic

% regression exercise. You will need to complete the following functions

% in this exercise:

%

% plotData.m

% sigmoid.m

% costFunction.m

% predict.m

%

% For this exercise, you will not need to change any code in this file,

% or any other files other than those mentioned above.

%

%% Initialization

clear ; close all; clc

%% Load Data

% The first two columns contain the exam scores and the third column

% contains the label.

data = load('ex2data1.txt');

X = data(:, [1, 2]); y = data(:, 3);

%% ==================== Part 1: Plotting ====================

% We start the exercise by first plotting the data to understand the

% problem we are working with.

fprintf(['Plotting data with + indicating (y = 1) examples and o ' ...

'indicating (y = 0) examples.\n']);

plotData(X, y);

% Put some labels

hold on;

% Labels and Legend

xlabel('Exam 1 score')

ylabel('Exam 2 score')

% Specified in plot order

legend('Admitted', 'Not admitted')

hold off;

fprintf('\nProgram paused. Press enter to continue.\n');

pause;

%% ============ Part 2: Compute Cost and Gradient ============

% In this part of the exercise, you will implement the cost and gradient

% for logistic regression. You need to complete the code in

% costFunction.m

% Setup the data matrix appropriately, and add ones for the intercept term

[m, n] = size(X);

% Add intercept term to X

X = [ones(m, 1) X];

% Initialize fitting parameters

initial_theta = zeros(n + 1, 1);

% Compute and display initial cost and gradient

[cost, grad] = costFunction(initial_theta, X, y);

fprintf('Cost at initial theta (zeros): %f\n', cost);

fprintf('Gradient at initial theta (zeros): \n');

fprintf(' %f \n', grad);

fprintf('\nProgram paused. Press enter to continue.\n');

pause;

%% ============= Part 3: Optimizing using fminunc =============

% In this exercise, you will use a built-in function (fminunc) to find the

% optimal parameters theta.

% Set options for fminunc

options = optimset('GradObj', 'on', 'MaxIter', 400);

% Run fminunc to obtain the optimal theta

% This function will return theta and the cost

[theta, cost] = ...

fminunc(@(t)(costFunction(t, X, y)), initial_theta, options);

% Print theta to screen

fprintf('Cost at theta found by fminunc: %f\n', cost);

fprintf('theta: \n');

fprintf(' %f \n', theta);

% Plot Boundary

plotDecisionBoundary(theta, X, y);

% Put some labels

hold on;

% Labels and Legend

xlabel('Exam 1 score')

ylabel('Exam 2 score')

% Specified in plot order

legend('Admitted', 'Not admitted', 'Decision Boundary')

hold off;

fprintf('\nProgram paused. Press enter to continue.\n');

pause;

%% ============== Part 4: Predict and Accuracies ==============

% After learning the parameters, you'll want to use them to predict the outcomes

% on unseen data. In this part, you will use the logistic regression model

% to predict the probability that a student with score 45 on exam 1 and

% score 85 on exam 2 will be admitted.

%

% Furthermore, you will compute the training set accuracy of

% our model.

%

% Your task is to complete the code in predict.m

% Predict probability for a student with score 45 on exam 1

% and score 85 on exam 2

prob = sigmoid([1 45 85] * theta);

fprintf(['For a student with scores 45 and 85, we predict an admission ' ...

'probability of %f\n\n'], prob);

% Compute accuracy on our training set

p = predict(theta, X);

fprintf('Train Accuracy: %f\n', mean(double(p == y)) * 100);

fprintf('\nProgram paused. Press enter to continue.\n');

pause;
