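- 🍨 This post is a study log from the 🔗365天深度学习训练营 (365-Day Deep Learning Training Camp)

- 🍖 Original author: K同学啊 | offers tutoring and custom project work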

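Requirements:

- Learn how to write a complete deep learning program

- Understand how classifying color images differs from classifying grayscale images

- Reach 72% accuracy on the test set

My environment:

- Language: Python 3.8.10

- Editor: JupyterLab

- Deep learning framework: TensorFlow 2.13.1

- GPU: NVIDIA GeForce RTX 3080 Ti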

I. Preparation

1. Set up the GPU

Skip this step if you are running on a CPU.

    import tensorflow as tf

    gpus = tf.config.list_physical_devices("GPU")

    if gpus:
        gpu0 = gpus[0]  # If several GPUs are present, use only the first one
        tf.config.experimental.set_memory_growth(gpu0, True)  # Allocate GPU memory on demand
        tf.config.set_visible_devices([gpu0], "GPU")

2. Import the data

    import tensorflow as tf
    from tensorflow.keras import datasets, layers, models
    import matplotlib.pyplot as plt

    # Downloads CIFAR-10 on first use and returns NumPy arrays
    (train_images, train_labels), (test_images, test_labels) = datasets.cifar10.load_data()

3. Normalization

    # Scale the pixel values into the range 0 to 1.
    train_images, test_images = train_images / 255.0, test_images / 255.0

    train_images.shape, test_images.shape, train_labels.shape, test_labels.shape

Output:

((50000, 32, 32, 3), (10000, 32, 32, 3), (50000, 1), (10000, 1))
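
To make the shapes above a bit more concrete, here is a minimal sketch of the same scaling step. It uses small random stand-in arrays instead of the real datasets (an assumption, so the snippet runs without downloading anything) and contrasts a CIFAR-10-style color batch with an MNIST-style grayscale batch:

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in batches (random data, NOT the real datasets):
# CIFAR-10-like color images: (batch, height, width, 3 RGB channels)
color_batch = rng.integers(0, 256, size=(4, 32, 32, 3), dtype=np.uint8)
# MNIST-like grayscale images: (batch, height, width), no channel axis
gray_batch = rng.integers(0, 256, size=(4, 28, 28), dtype=np.uint8)

# Dividing by 255.0 promotes uint8 to float64 and maps values into [0, 1].
color_norm = color_batch / 255.0
gray_norm = gray_batch / 255.0

# A Conv2D layer expects an explicit channel axis, so grayscale data
# is usually reshaped to (batch, height, width, 1).
gray_norm = gray_norm[..., np.newaxis]

print(color_norm.shape, color_norm.dtype)
print(gray_norm.shape)
print(color_norm.min() >= 0.0, color_norm.max() <= 1.0)
```

The only structural difference is the last axis: 3 for RGB, 1 for grayscale. That is why `input_shape` has to change when the same kind of network is moved between the two datasets.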

4. Visualization

    class_names = ['airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']

    plt.figure(figsize=(20, 10))

    # Show the first 20 training images with their class names
    for i in range(20):
        plt.subplot(5, 10, i + 1)
        plt.xticks([])
        plt.yticks([])
        plt.grid(False)
        plt.imshow(train_images[i], cmap=plt.cm.binary)  # cmap is ignored for RGB images
        plt.xlabel(class_names[train_labels[i][0]])  # labels have shape (N, 1), hence [0]

    plt.show()

Output:

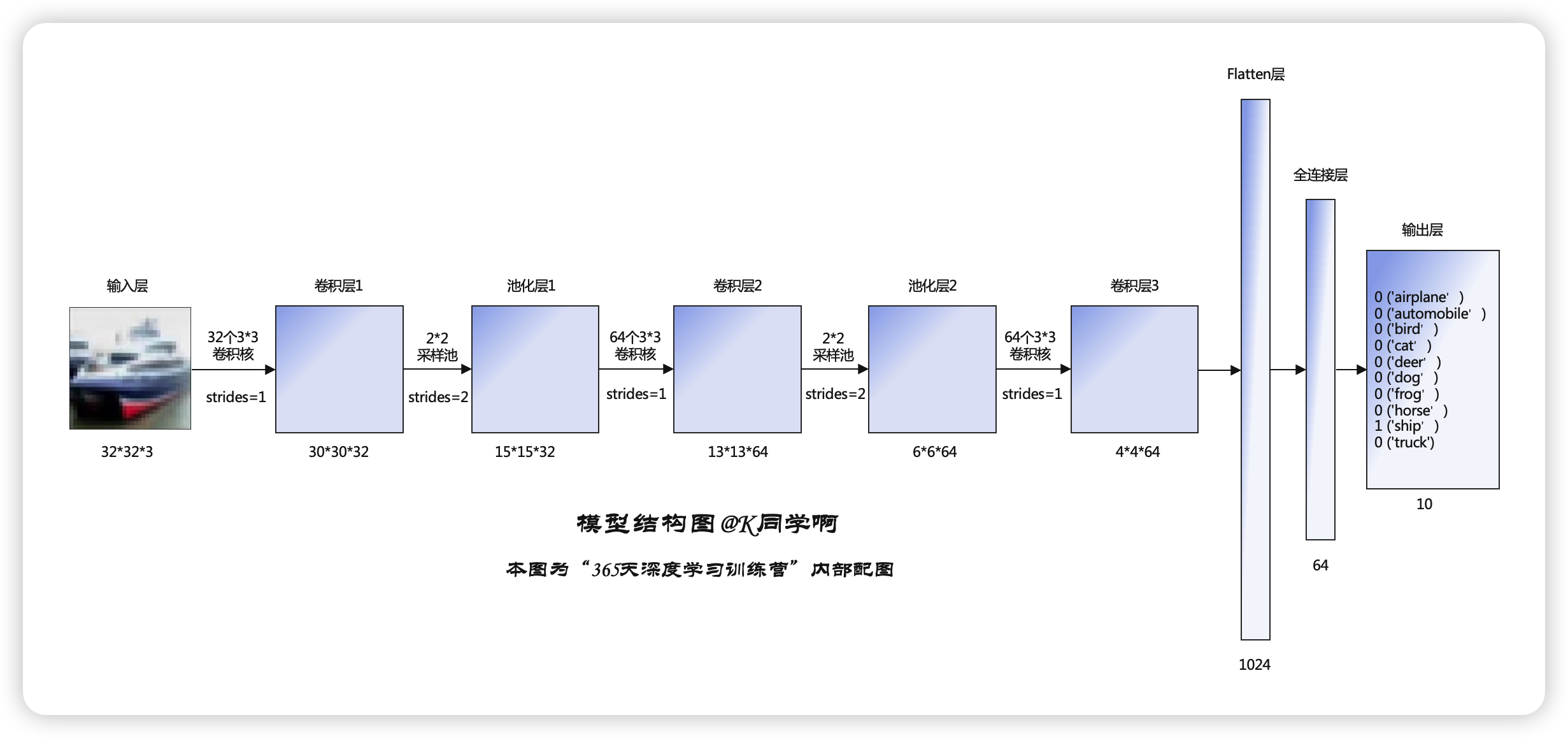

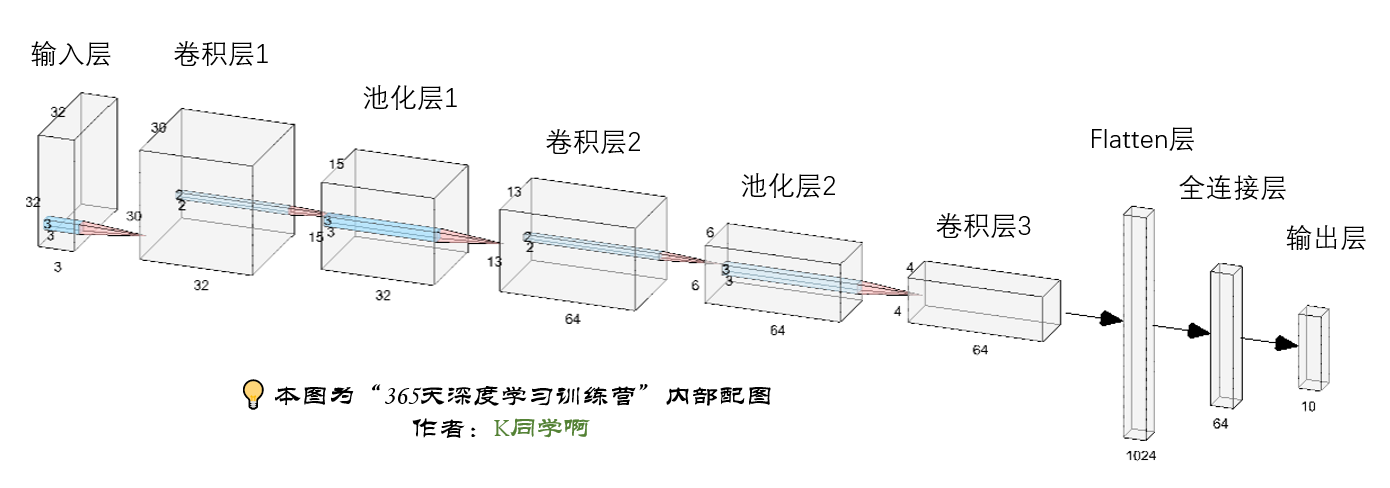

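II. Build the CNN

2D architecture diagram:

3D architecture diagram:

    model = models.Sequential([
        layers.Conv2D(32, (3, 3), activation='relu', input_shape=(32, 32, 3)),  # Conv layer 1, 3x3 kernel
        layers.MaxPooling2D((2, 2)),                   # Pooling layer 1, 2x2 downsampling
        layers.Conv2D(64, (3, 3), activation='relu'),  # Conv layer 2, 3x3 kernel
        layers.MaxPooling2D((2, 2)),                   # Pooling layer 2, 2x2 downsampling
        layers.Conv2D(64, (3, 3), activation='relu'),  # Conv layer 3, 3x3 kernel

        layers.Flatten(),                      # Flatten layer, bridges the conv layers and the dense layers
        layers.Dense(64, activation='relu'),   # Fully connected layer, further feature extraction
        layers.Dense(10)                       # Output layer, one raw score (logit) per class
    ])

    model.summary()  # Print the network structure

Output: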

    Model: "sequential"
    _________________________________________________________________
     Layer (type)                    Output Shape              Param #
    =================================================================
     conv2d (Conv2D)                 (None, 30, 30, 32)        896
     max_pooling2d (MaxPooling2D)    (None, 15, 15, 32)        0
     conv2d_1 (Conv2D)               (None, 13, 13, 64)        18496
     max_pooling2d_1 (MaxPooling2D)  (None, 6, 6, 64)          0
     conv2d_2 (Conv2D)               (None, 4, 4, 64)          36928
     flatten (Flatten)               (None, 1024)              0
     dense (Dense)                   (None, 64)                65600
     dense_1 (Dense)                 (None, 10)                650
    =================================================================
    Total params: 122570 (478.79 KB)
    Trainable params: 122570 (478.79 KB)
    Non-trainable params: 0 (0.00 Byte)
    _________________________________________________________________
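
The numbers in this summary can be checked by hand. The sketch below recomputes the output sizes and parameter counts, assuming what the model definition implies: 3x3 convolutions with no padding and stride 1, and 2x2 max pooling with stride 2. The helper names are my own and are not part of Keras.

```python
def conv_out(size, kernel):
    """Output size of a 'valid' (no padding), stride-1 convolution."""
    return size - kernel + 1

def pool_out(size, pool):
    """Output size of max pooling with stride equal to the pool size."""
    return size // pool

def conv_params(kernel, in_ch, out_ch):
    """Weights (kernel*kernel*in_ch per filter) plus one bias per filter."""
    return (kernel * kernel * in_ch + 1) * out_ch

def dense_params(in_units, out_units):
    """One weight per input-output pair plus one bias per output unit."""
    return (in_units + 1) * out_units

size = 32
size = conv_out(size, 3)   # 30
size = pool_out(size, 2)   # 15
size = conv_out(size, 3)   # 13
size = pool_out(size, 2)   # 6
size = conv_out(size, 3)   # 4
flat = size * size * 64    # 1024 values enter the first Dense layer

params = [
    conv_params(3, 3, 32),    # 896
    conv_params(3, 32, 64),   # 18496
    conv_params(3, 64, 64),   # 36928
    dense_params(flat, 64),   # 65600
    dense_params(64, 10),     # 650
]
print(flat, params, sum(params))   # the total should match "Total params: 122570"
```

More than half of the parameters sit in the first Dense layer, which is typical for small CNNs that flatten the feature map before classifying.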

III. Compile

    model.compile(optimizer='adam',
                  # from_logits=True because the output layer has no softmax
                  loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
                  metrics=['accuracy'])
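
To show what this loss computes, here is a rough NumPy sketch of sparse categorical cross-entropy on raw logits. It is my own re-implementation for illustration, not the Keras source, and the logits are made-up numbers. "Sparse" means the labels are integer class indices (as in `train_labels`) rather than one-hot vectors.

```python
import numpy as np

def sparse_categorical_crossentropy_from_logits(logits, labels):
    """Mean cross-entropy for integer labels and raw (unnormalized) scores."""
    logits = np.asarray(logits, dtype=np.float64)
    labels = np.asarray(labels).reshape(-1)          # accept (N,) or (N, 1)
    # Numerically stable log-softmax: subtract the row maximum first.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Pick the log-probability of the true class for each sample.
    picked = log_probs[np.arange(len(labels)), labels]
    return -picked.mean()

# Made-up logits for 2 samples and 3 classes (illustrative only).
logits = np.array([[2.0, 0.5, -1.0],
                   [0.0, 0.0, 0.0]])
labels = np.array([[0], [2]])   # same (N, 1) layout as train_labels

loss = sparse_categorical_crossentropy_from_logits(logits, labels)
print(loss)
```

The second sample has equal scores for all three classes, so its loss is ln(3), the value you get from guessing uniformly. For 10 classes the same reasoning gives ln(10) ≈ 2.30, which is roughly where an untrained network starts.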

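IV. Train the model

    # The test set doubles as validation data here, so val_accuracy is the test accuracy
    history = model.fit(train_images, train_labels, epochs=10,
                        validation_data=(test_images, test_labels))

Output: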

Epoch 1/10

1563/1563 [==============================] - 13s 7ms/step - loss: 1.5189 - accuracy: 0.4456 - val_loss: 1.2919 - val_accuracy: 0.5370

Epoch 2/10

1563/1563 [==============================] - 10s 6ms/step - loss: 1.1395 - accuracy: 0.5985 - val_loss: 1.0768 - val_accuracy: 0.6214

Epoch 3/10

1563/1563 [==============================] - 10s 6ms/step - loss: 0.9872 - accuracy: 0.6556 - val_loss: 0.9638 - val_accuracy: 0.6660

Epoch 4/10

1563/1563 [==============================] - 10s 6ms/step - loss: 0.8951 - accuracy: 0.6873 - val_loss: 0.9616 - val_accuracy: 0.6682

Epoch 5/10

1563/1563 [==============================] - 10s 6ms/step - loss: 0.8207 - accuracy: 0.7130 - val_loss: 0.8736 - val_accuracy: 0.7010

Epoch 6/10

1563/1563 [==============================] - 10s 6ms/step - loss: 0.7677 - accuracy: 0.7328 - val_loss: 0.8919 - val_accuracy: 0.6953

Epoch 7/10

1563/1563 [==============================] - 10s 6ms/step - loss: 0.7189 - accuracy: 0.7470 - val_loss: 0.9046 - val_accuracy: 0.6909

Epoch 8/10

1563/1563 [==============================] - 10s 6ms/step - loss: 0.6747 - accuracy: 0.7651 - val_loss: 0.8532 - val_accuracy: 0.7106

Epoch 9/10

1563/1563 [==============================] - 10s 6ms/step - loss: 0.6348 - accuracy: 0.7786 - val_loss: 0.8466 - val_accuracy: 0.7182

Epoch 10/10

1563/1563 [==============================] - 10s 6ms/step - loss: 0.5932 - accuracy: 0.7908 - val_loss: 0.8911 - val_accuracy: 0.7154

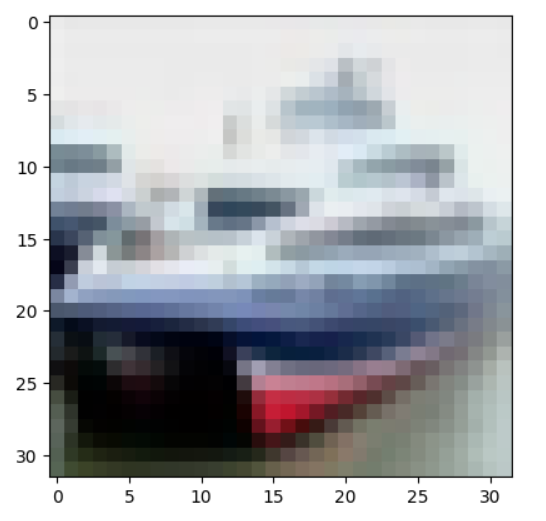
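
The `1563` in each progress bar is the number of batches per epoch, not the number of images. `model.fit()` was called without `batch_size`, so Keras falls back to its default of 32. A quick check of the arithmetic:

```python
import math

batch_size = 32          # Keras default when model.fit() gets no batch_size
train_samples = 50000    # CIFAR-10 training set size, from the shapes printed earlier
test_samples = 10000     # CIFAR-10 test set size

train_steps = math.ceil(train_samples / batch_size)
test_steps = math.ceil(test_samples / batch_size)
# The final training batch is smaller because 50000 is not a multiple of 32.
last_batch = train_samples - (train_steps - 1) * batch_size

print(train_steps, test_steps, last_batch)
```

The same calculation explains the `313` that shows up when the 10,000 test images are evaluated.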

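V. Prediction

The model outputs one score per class for each image. Because the final Dense layer has no activation, these scores are logits rather than probabilities; the larger the score, the more likely the image belongs to that class.

    import numpy as np

    plt.imshow(test_images[1])

    pre = model.predict(test_images)  # one row of 10 class scores per test image
    print(class_names[np.argmax(pre[1])])  # class with the highest score for image 1

Output: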

ship

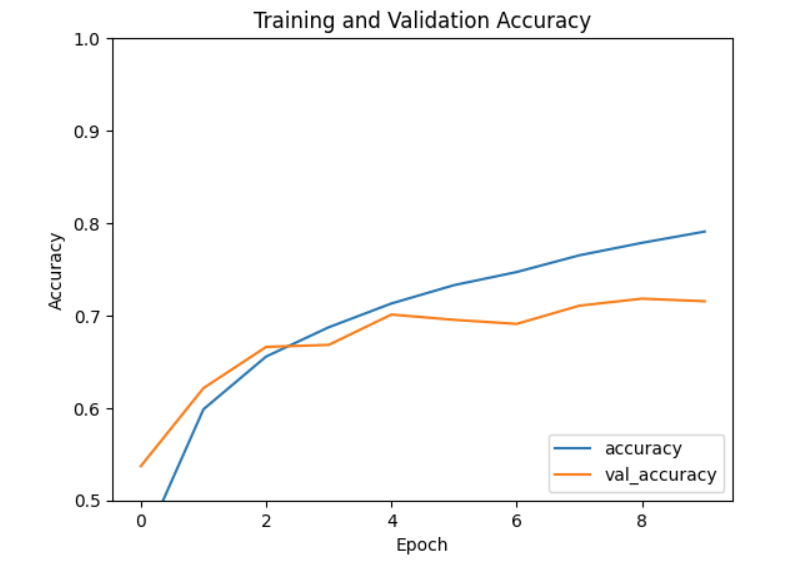
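
If you want actual probabilities instead of raw scores, you can pass the outputs through a softmax (TensorFlow provides `tf.nn.softmax` for this). Below is a small NumPy sketch of the idea. The logits are made-up numbers for illustration, not real model output.

```python
import numpy as np

def softmax(logits):
    """Convert raw scores to probabilities along the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)  # avoid overflow
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

# Hypothetical logits for one image over the 10 classes (made-up numbers).
example_logits = np.array([[1.2, 0.3, -0.5, -1.0, -2.1, -1.7, -0.9, -2.4, 4.8, 0.6]])

probs = softmax(example_logits)
print(probs.sum(axis=-1))   # each row sums to 1
print(np.argmax(example_logits, axis=-1), np.argmax(probs, axis=-1))
```

Softmax preserves the ordering of the scores, so `np.argmax` picks the same class either way. The conversion only matters when you need a confidence value.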

VI. Model evaluation

    import matplotlib.pyplot as plt

    # Training vs. validation accuracy per epoch
    plt.plot(history.history['accuracy'], label='accuracy')
    plt.plot(history.history['val_accuracy'], label='val_accuracy')
    plt.xlabel('Epoch')
    plt.ylabel('Accuracy')
    plt.ylim([0.5, 1])
    plt.legend(loc='lower right')
    plt.title('Training and Validation Accuracy')
    plt.show()

    # Final loss and accuracy on the test set
    test_loss, test_accuracy = model.evaluate(test_images, test_labels, verbose=2)

Output:

313/313 - 1s - loss: 0.8911 - accuracy: 0.7154

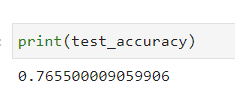

    print(test_accuracy)

0.715399980545044

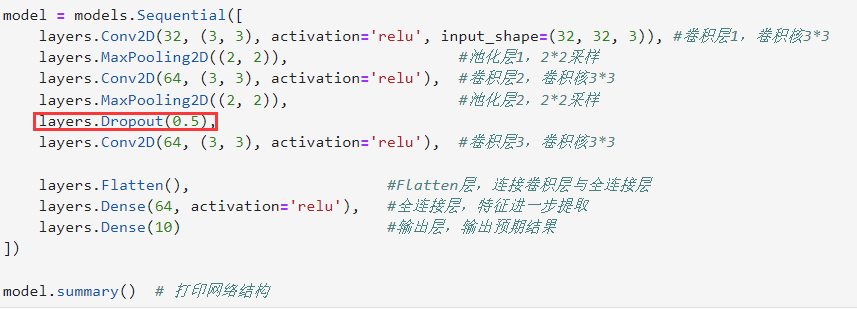

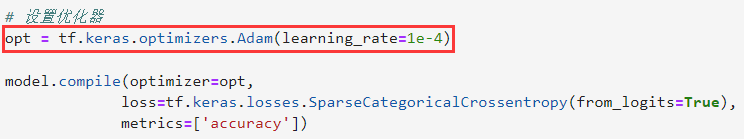

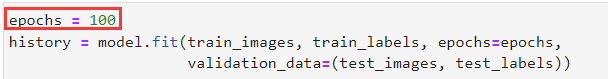
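
The accuracy reported by `model.evaluate` is simply the fraction of images whose highest-scoring class matches the label. When computing it by hand, the `(N, 1)` label shape is an easy trap, as this sketch with made-up logits shows:

```python
import numpy as np

# Made-up logits for 4 samples and 3 classes (illustrative only).
logits = np.array([[2.0, 0.1, 0.3],
                   [0.2, 1.5, 0.1],
                   [0.3, 0.2, 0.9],
                   [1.1, 0.4, 0.2]])
labels = np.array([[0], [1], [1], [0]])   # (N, 1), like test_labels

predicted = np.argmax(logits, axis=1)     # shape (N,)

# Pitfall: (N,) == (N, 1) broadcasts to an (N, N) matrix of comparisons.
wrong = predicted == labels
# Flattening the labels first gives the intended per-sample comparison.
correct = predicted == labels.reshape(-1)

accuracy = correct.mean()
print(wrong.shape, correct.shape, accuracy)
```

With the real arrays, the equivalent check would be `np.argmax(pre, axis=1) == test_labels.reshape(-1)`.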

七、模型改进

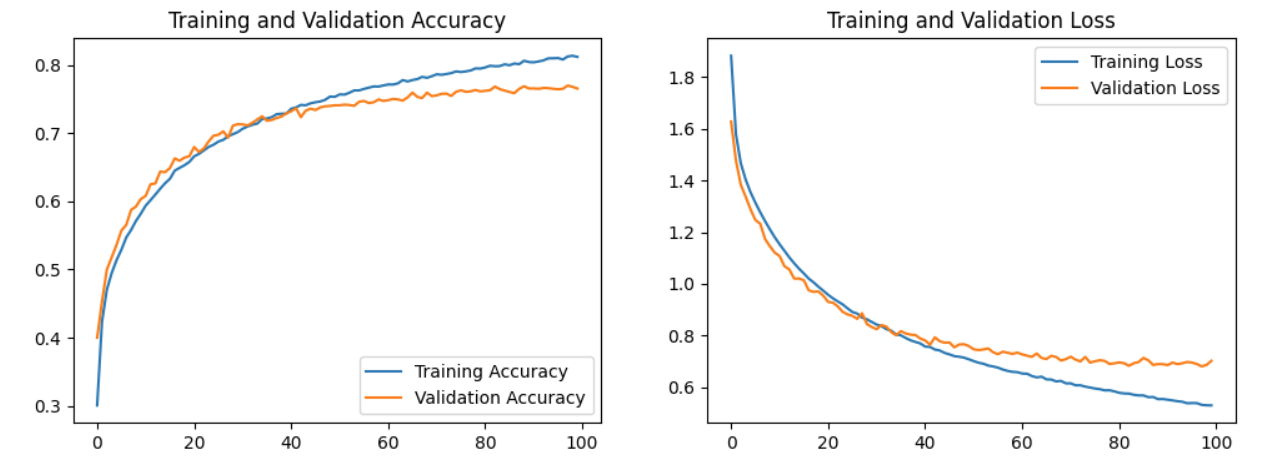

由于本周打卡其中一个任务是提高测试集accuracy准确率达到72%以上,所以我对优化器进行了一个小小的修改,修改了其中的学习率,同时在CNN网络结构中添加了Dropout层,并且将epochs增加至100。
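
For readers unfamiliar with Dropout, here is a minimal NumPy sketch of what such a layer does during training (so-called inverted dropout, which is also how the Keras `Dropout` layer behaves). This post does not record the dropout rate, the position of the layers, or the learning rate that were used, so the rate of 0.3 below is an arbitrary example and not the value from my experiment.

```python
import numpy as np

def dropout(x, rate, rng, training=True):
    """Inverted dropout: zero a fraction `rate` of units, rescale the rest."""
    if not training or rate == 0.0:
        return x                      # inference: pass activations through
    keep_prob = 1.0 - rate
    mask = rng.random(x.shape) < keep_prob
    return x * mask / keep_prob       # rescale so the expected value is unchanged

rng = np.random.default_rng(42)
activations = np.ones((1000, 64))
dropped = dropout(activations, rate=0.3, rng=rng)   # 0.3 is an arbitrary example rate

print(np.mean(dropped == 0.0))   # fraction of zeroed units, close to 0.3
print(dropped.mean())            # close to 1.0 thanks to the rescaling
print(np.array_equal(dropout(activations, 0.3, rng, training=False), activations))
```

Because a different random subset of units is silenced on every batch, the network cannot rely too heavily on any single feature. This usually narrows the gap between training and validation accuracy.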

    import matplotlib.pyplot as plt

    acc = history.history['accuracy']
    val_acc = history.history['val_accuracy']
    loss = history.history['loss']
    val_loss = history.history['val_loss']

    # One x-axis value per recorded epoch
    epochs_range = range(len(acc))

    plt.figure(figsize=(12, 4))

    # Left panel: accuracy curves
    plt.subplot(1, 2, 1)
    plt.plot(epochs_range, acc, label='Training Accuracy')
    plt.plot(epochs_range, val_acc, label='Validation Accuracy')
    plt.legend(loc='lower right')
    plt.title('Training and Validation Accuracy')

    # Right panel: loss curves
    plt.subplot(1, 2, 2)
    plt.plot(epochs_range, loss, label='Training Loss')
    plt.plot(epochs_range, val_loss, label='Validation Loss')
    plt.legend(loc='upper right')
    plt.title('Training and Validation Loss')
    plt.show()

    test_loss, test_accuracy = model.evaluate(test_images, test_labels, verbose=2)

Output:

Test-set accuracy: 76.55% (the final figure reported in the summary)
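
Curves like these can also be read numerically. As a small sketch, the snippet below takes the values from the ten-epoch training log earlier in this post (the baseline run, not the improved one) and finds the epoch with the best validation result, along with the gap between training and validation accuracy:

```python
# Values copied from the ten-epoch training log earlier in this post.
train_acc = [0.4456, 0.5985, 0.6556, 0.6873, 0.7130,
             0.7328, 0.7470, 0.7651, 0.7786, 0.7908]
val_acc = [0.5370, 0.6214, 0.6660, 0.6682, 0.7010,
           0.6953, 0.6909, 0.7106, 0.7182, 0.7154]
val_loss = [1.2919, 1.0768, 0.9638, 0.9616, 0.8736,
            0.8919, 0.9046, 0.8532, 0.8466, 0.8911]

# Epochs are reported 1-based, matching the "Epoch k/10" lines in the log.
best_loss_epoch = min(range(len(val_loss)), key=val_loss.__getitem__) + 1
best_acc_epoch = max(range(len(val_acc)), key=val_acc.__getitem__) + 1

# Gap between training and validation accuracy; a growing gap suggests overfitting.
gaps = [round(t - v, 4) for t, v in zip(train_acc, val_acc)]

print(best_loss_epoch, best_acc_epoch)
print(gaps)
```

In the baseline run the gap turns positive around epoch 4 and keeps widening, while validation accuracy hovers around 0.70 to 0.72. That pattern is a common sign of overfitting, and it is the kind of behaviour Dropout is meant to reduce.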

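VIII. Summary

This week's deep learning task was color image classification with TensorFlow, and the first goal was learning how to write a complete deep learning program. The workflow was: set up the GPU, import the data, preprocess the data, visualize the data, build a simple CNN, compile, train the model, make predictions, evaluate the model, and improve the model. Compared with last week's T1 task (MNIST handwritten digit recognition), the shapes printed at the normalization step show that color images have three RGB channels, whereas grayscale images have a single channel. The final task was to raise test-set accuracy above 72%; after some experimentation (see the model improvement section), I raised it from the initial 71.54% to 76.55%.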
