具体思路是:根据 ,自定义

、

、

,生成一批符合实际标定成像过程的理论数据

和

,输入cv2.calibrateCamera()函数,看能不能反求出

和

。

这是opencv里定义的参数:

cv2.calibrateCamera(objectPoints, imagePoints, imageSize[, cameraMatrix[, distCoeffs[, rvecs[, tvecs[, flags[, criteria]]]]]]) → retval, cameraMatrix, distCoeffs, rvecs, tvecs

import numpy as np

from scipy.spatial.transform import Rotation as R

import cv2

def calculate_world_corner(corner_scale, square_size):

corner_height, corner_width = corner_scale

obj_corner = np.zeros([corner_height * corner_width, 3])

obj_corner[:, :2] = np.mgrid[0:corner_height, 0:corner_width].T.reshape(-1, 2) # (w*h)*2

return obj_corner * square_size

img_size = [960, 960]

focal = 1200

pix_spacing = 0.309

K = np.array([[focal/pix_spacing, 0, img_size[0]/2],

[0, focal/pix_spacing, img_size[1]/2],

[0, 0, 1]])

dist = np.zeros((1, 5))

obj_points = calculate_world_corner([5, 5], 25)

rmat = R.from_euler('xyz', [45, 0, 0], degrees=True).as_matrix()

trans = np.array([[0], [0], [1000]])

img_points = np.matmul(K, (np.matmul(rmat, obj_points.T) + trans)).T

img_points = (img_points[:, :2] / img_points[:, 2:])[:, None, :]

retval, cameraMatrix, distCoeffs, rvecs, tvecs = cv2.calibrateCamera(obj_points, img_points, img_size, K, dist)但是会出现以下报错:

cv2.error: OpenCV(4.7.0) D:\a\opencv-python\opencv-python\opencv\modules\calib3d\src\calibration.cpp:3405: error: (-210:Unsupported format or combination of formats) objectPoints should contain vector of vectors of points of type Point3f in function 'cv::collectCalibrationData'

解决方案,改数据类型(改为float32,还没搞清原因,可能是opencv参数偏爱float类型),把ndarray变成list输入:

img_points_list = [img_points.astype(np.float32)]

obj_points_list = [obj_points.astype(np.float32)]

retval, cameraMatrix, distCoeffs, rvecs, tvecs = cv2.calibrateCamera(obj_points_list, img_points_list, img_size, K, dist)就可以得到计算结果了:

retval表示重投影误差,可以参考https://blog.csdn.net/qq_32998593/article/details/113063216,cameraMatrix是相机内参,distCoeffs是畸变系数,rvecs是旋转向量,tvecs是平移向量。

这里求的时候也可以用cv2.projectPoints()来获得,那么最后代码可以写成这样:

import numpy as np

from scipy.spatial.transform import Rotation as R

import copy

import cv2

import matplotlib.pyplot as plt

def calculate_world_corner(corner_scale, square_size):

corner_height, corner_width = corner_scale

obj_corner = np.zeros([corner_height * corner_width, 3])

obj_corner[:, :2] = np.mgrid[0:corner_height, 0:corner_width].T.reshape(-1, 2) # (w*h)*2

return obj_corner * square_size

img_size = [960, 960]

focal = 1200

pix_spacing = 0.309

K = np.array([[focal/pix_spacing, 0, img_size[0]/2],

[0, focal/pix_spacing, img_size[1]/2],

[0, 0, 1]])

dist = np.zeros((1, 5))

euler_in = [45, 0, 0]

rvecs_in = R.from_euler('xyz', euler_in, degrees=True).as_rotvec()[None].reshape(3, 1)

tvecs_in = np.array([[0], [0], [1000]]).astype(np.float64)

obj_points = calculate_world_corner([5, 5], 25)

img_points, _ = cv2.projectPoints(obj_points, rvecs_in, tvecs_in, K, dist)

img_points_list = [img_points.astype(np.float32)]

obj_points_list = [obj_points.astype(np.float32)]

K_in = copy.deepcopy(K) # 这一行是因为输入cv2.calibrateCamera()后K会变化

retval, cameraMatrix, distCoeffs, rvecs, tvecs = cv2.calibrateCamera(obj_points_list, img_points_list, img_size, K_in, dist)

euler = R.from_rotvec(rvecs[0].T).as_euler('xyz', degrees=True)

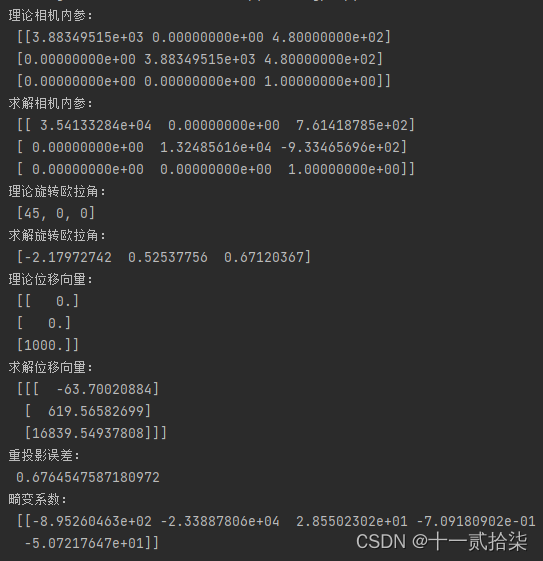

print('理论相机内参:\n', K)

print('求解相机内参:\n', cameraMatrix)

print('理论旋转欧拉角:\n', euler_in)

print('求解旋转欧拉角:\n', euler[0])

# print('理论旋转向量:\n', rvecs_in)

# print('求解旋转向量:\n', np.asarray(rvecs))

print('理论位移向量:\n', tvecs_in)

print('求解位移向量:\n', np.asarray(tvecs))

print('重投影误差:\n', retval)

print('畸变系数:\n', distCoeffs)结果如下:

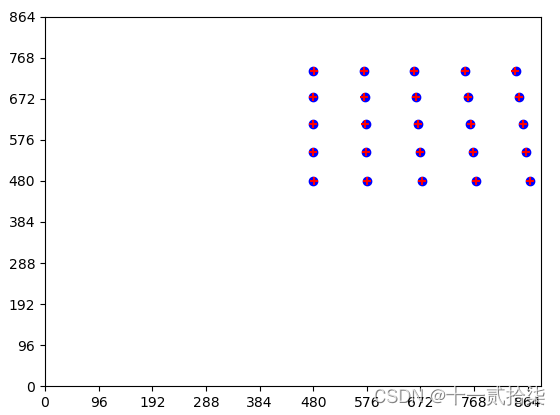

可以看到其实求解得到的内参矩阵误差很大,而且求解得到的欧拉角和位移也和理论值差很多,根本不能用,再看一下重投影的结果,其实还是可接受的:

img_points_reproj, _ = cv2.projectPoints(obj_points, rvecs[0], tvecs[0], cameraMatrix, distCoeffs)

plt.scatter(img_points[:, 0, 0], img_points[:, 0, 1], c='blue')

plt.scatter(img_points_reproj[:, 0, 0], img_points[:, 0, 1], c='red', marker='+')

plt.xticks(range(0, 960, 96))

plt.yticks(range(0, 960, 96))

plt.show(block=True)

但虽然重投影结果看上去可接受,但求到的旋转角度和位移差的也太多了,不太可行。我怀疑可能是因为只给了一个轴的角度,在分解矩阵的时候可能约束不够求不出来。(不过多张图即使是每个图也只给一个轴角度但只要给的角度不同求出的结果误差也比较小)

修改了一下角度后发现内参矩阵的误差变小了,而且重投影误差的值变得更小了。

这提示我们在用cv2.calibrateCamera()直接去计算相机内参的时候,要关注一下返回的重投影误差的值,对于某些情况下计算得到的内参可能没法用,就结果来看,计算得到的内参也有误差,还要考虑一下这个误差是不是可接受的。

495

495

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?