1.背景

目前各大主流的FlinkSQL平台百花齐放,像Dinky(前身Dlink),StreamPark(前身Streamx)等等,Dinky有做一些sql逻辑的校验,但是还不是很完善,所以很多时候还是需要使用idea进行本地调试,很多调试的方式是使用TableAPI的,即代码嵌套sql,这种方式在java代码中写着是很难看,并且有时候是很难发现语法错误的,因此衍生出使用sql文件进行调试的方式,能够让开发者一目了然地知道哪个位置的语法写得有问题。

2.原理

有Flink开发基础的同学应该很明白,FlinkSQL的开发底层是基于StreamTableEnvironment执行环境进行的,而此次介绍的方法很简单,就是基于Flink-client实现的,该方式的优点是1.无需手动启动本地集群提交任务,2.零代码开发,只需要导一些相关的依赖jar包,注意需要使用哪些连接器只需要导入对应的连接器依赖即可。

3.环境要求(笔者环境,仅供参考)

1.idea/maven 2.scala-1.12 3.flink-1.16.2 4.java1.8 5.mysql 要求开启了binlog日志,使用的用户需要具有对应的读取权限

4.Maven依赖导入

<dependency>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

<version>2.12.11</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner_${scala.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-api-java-bridge</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-runtime-web</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.flink/flink-json -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-json</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<version>1.18.16</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.flink/flink-runtime-web -->

<!-- 导入该依赖能够使用WebUI查看任务的执行情况 端口号为flink-con.yml文件里面的rest.port参数-->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-runtime-web</artifactId>

<version>1.16.0</version>

<scope>provided</scope>

</dependency>

<!-- mysql-cdc连接器依赖 -->

<dependency>

<groupId>com.ververica</groupId>

<artifactId>flink-connector-mysql-cdc</artifactId>

<version>2.4.1</version>

</dependency>

<!-- 核心依赖,能够帮你将sql文件提交到flink-sql-client客户端执行,并且无需启动session集群 -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-sql-client</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-python</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>5. Flink-conf.yaml 文件,需要读取到Flink相关的配置

################################################################################

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

################################################################################

#==============================================================================

# Common

#==============================================================================

# The external address of the host on which the JobManager runs and can be

# reached by the TaskManagers and any clients which want to connect. This setting

# is only used in Standalone mode and may be overwritten on the JobManager side

# by specifying the --host <hostname> parameter of the bin/jobmanager.sh executable.

# In high availability mode, if you use the bin/start-cluster.sh script and setup

# the conf/masters file, this will be taken care of automatically. Yarn

# automatically configure the host name based on the hostname of the node where the

# JobManager runs.

jobmanager.rpc.address: localhost

# The RPC port where the JobManager is reachable.

jobmanager.rpc.port: 6123

# The host interface the JobManager will bind to. By default, this is localhost, and will prevent

# the JobManager from communicating outside the machine/container it is running on.

# On YARN this setting will be ignored if it is set to 'localhost', defaulting to 0.0.0.0.

# On Kubernetes this setting will be ignored, defaulting to 0.0.0.0.

#

# To enable this, set the bind-host address to one that has access to an outside facing network

# interface, such as 0.0.0.0.

jobmanager.bind-host: localhost

# The total process memory size for the JobManager.

#

# Note this accounts for all memory usage within the JobManager process, including JVM metaspace and other overhead.

jobmanager.memory.process.size: 1024m

taskmanager.bind-host: localhost

taskmanager.host: localhost

taskmanager.memory.process.size: 2048m

taskmanager.numberOfTaskSlots: 6

# The parallelism used for programs that did not specify and other parallelism.

parallelism.default: 1

# The default file system scheme and authority.

#

# By default file paths without scheme are interpreted relative to the local

# root file system 'file:///'. Use this to override the default and interpret

# relative paths relative to a different file system,

# for example 'hdfs://mynamenode:12345'

#重要!!!!Flink-CK相关参数,必须设置,根据自己的机器路径配置,不配置读不到更新数据!!!为了更快的看出数据的表更,将ck间隔设置为10s一次

fs.default-scheme: file:Users/hubiwei/opt/test

execution.checkpointing.interval: 10s

execution.checkpointing.max-concurrent-checkpoints: 1

execution.checkpointing.timeout: 10min

state.backend: hashmap

state.checkpoints.dir: file:Users/hbw/opt/test

jobmanager.execution.failover-strategy: region

#==============================================================================

# Rest & web frontend

#==============================================================================

# The port to which the REST client connects to. If rest.bind-port has

# not been specified, then the server will bind to this port as well.

# 本地WebUI端口

rest.port: 9998

# The address to which the REST client will connect to

#

rest.address: localhost

rest.bind-address: localhost

# Flag to specify whether job submission is enabled from the web-based

# 非常重要!!!!! 一定得设置,不然会IO错误

execution.target: local

6.准备数据(本次事例为使用mysql-cdc实时同步数据到控制台)

-- 1.在mysql中建立一个源表

create table test(

id bigint

,name VARCHAR(10)

,age bigint

,PRIMARY KEY (id)

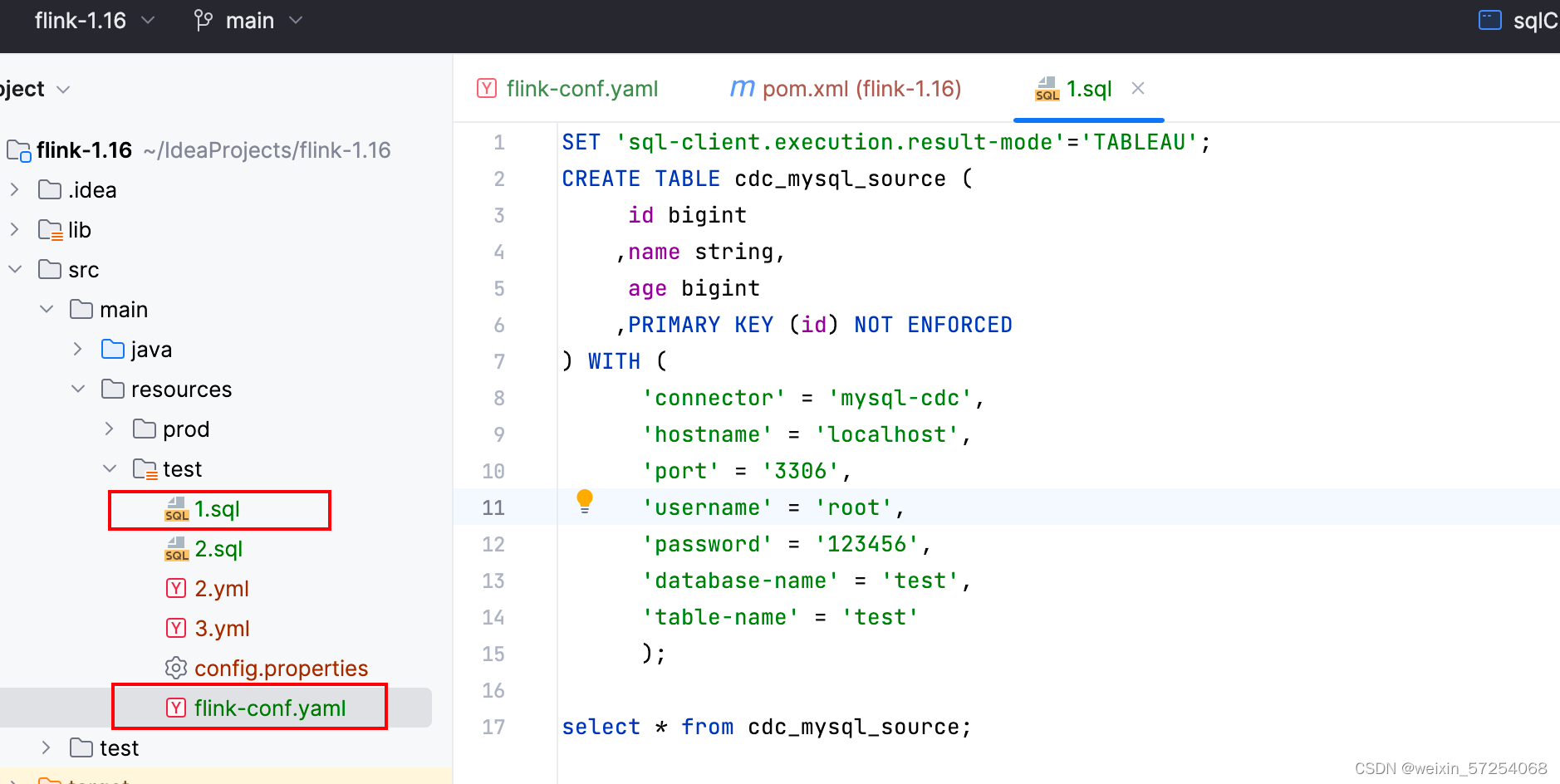

);7.准备FlinkSQL文件

SET 'sql-client.execution.result-mode'='TABLEAU'; -- 在query场景需要设置此参数 不然会报错

CREATE TABLE cdc_mysql_source (

id bigint

,name string,

age bigint

,PRIMARY KEY (id) NOT ENFORCED

) WITH (

'connector' = 'mysql-cdc',

'hostname' = 'localhost',

'port' = '3306',

'username' = 'root',

'password' = '123456',

'database-name' = 'test',

'table-name' = 'test'

);

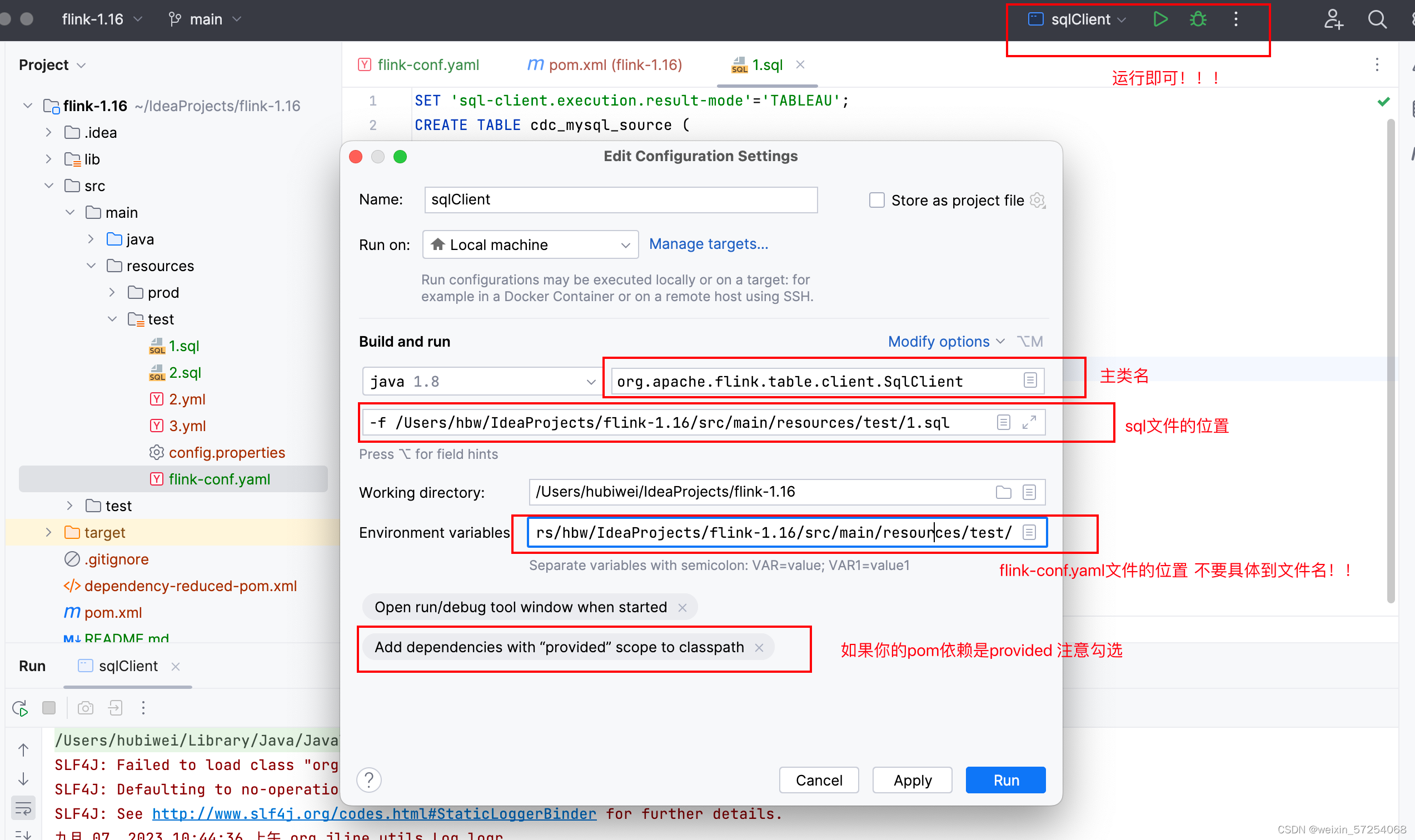

select * from cdc_mysql_source;8.准备运行

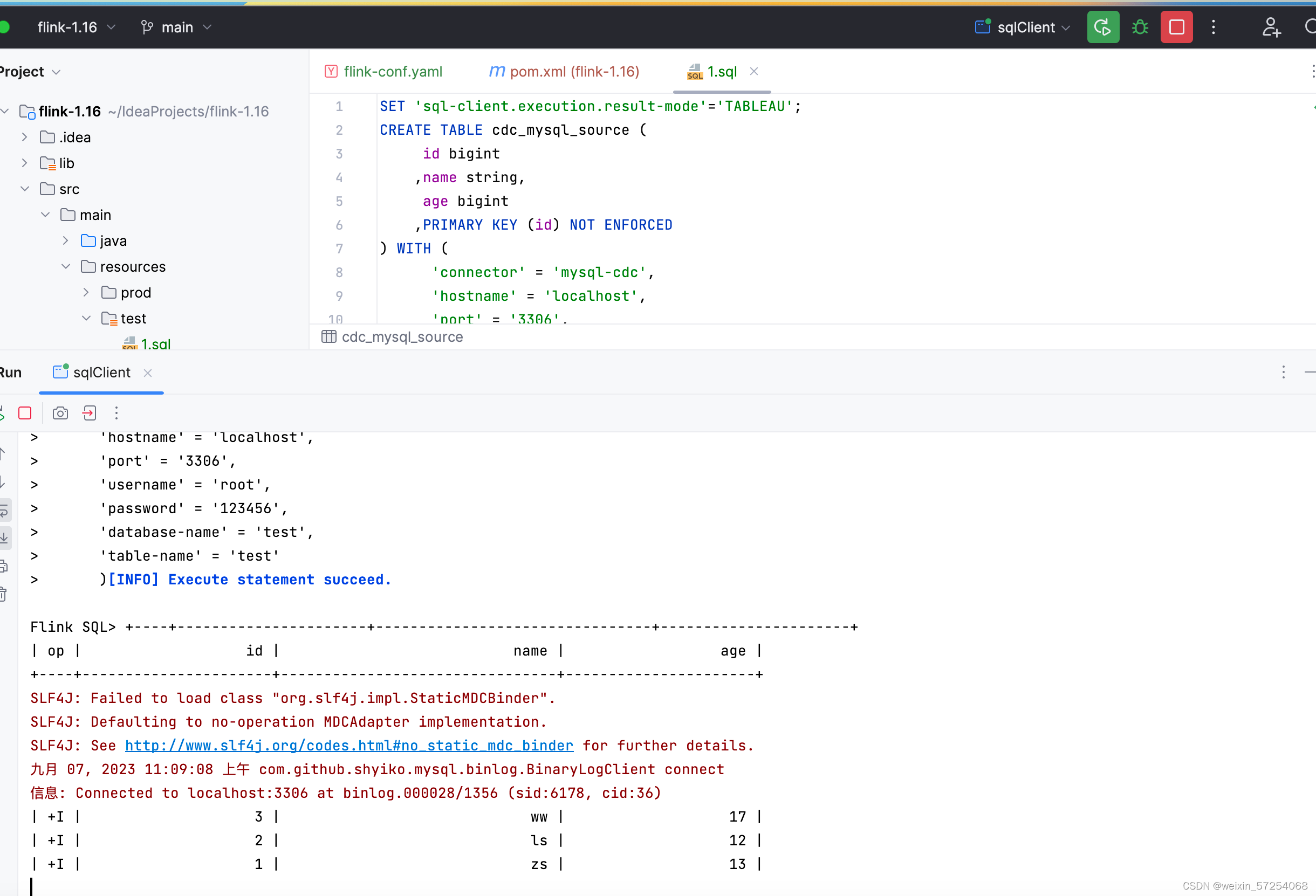

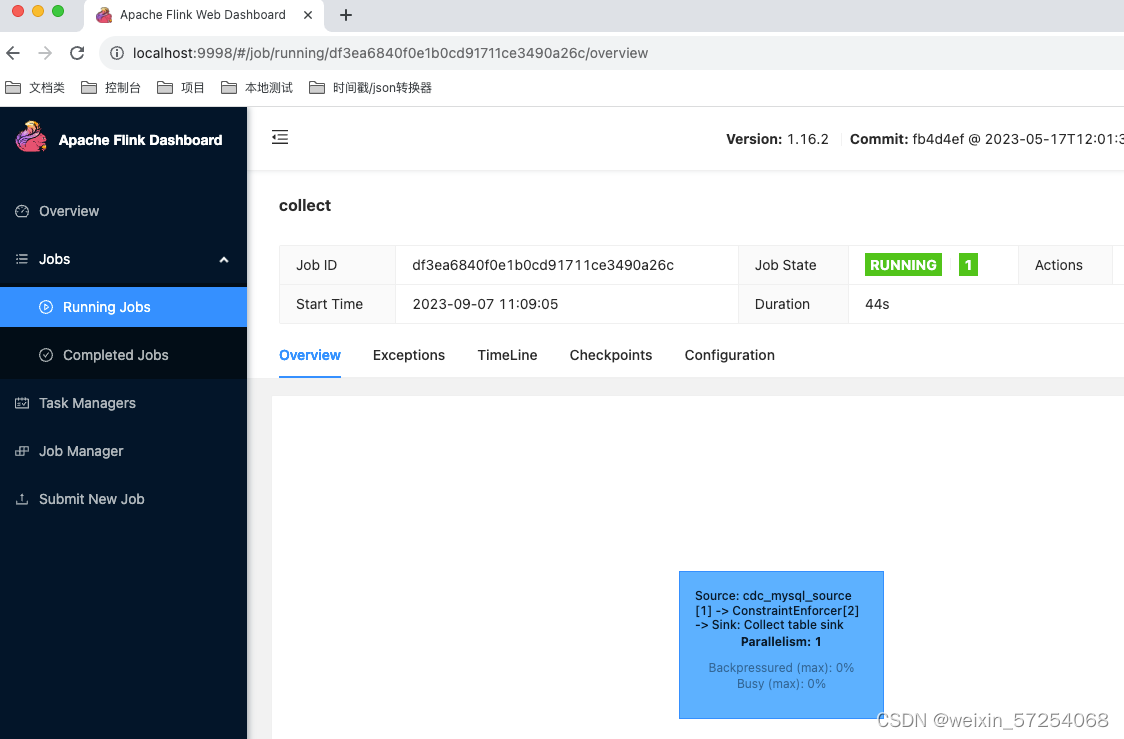

9.查看结果

757

757

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?