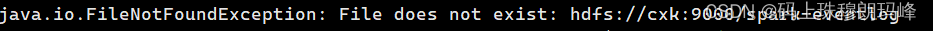

[root@cxk server]# spark-shell 2024-04-16 00:13:26,914 INFO util.SignalUtils: Registering signal handler for INT 2024-04-16 00:13:49,521 INFO spark.SparkContext: Running Spark version 3.4.2 2024-04-16 00:13:50,072 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable 2024-04-16 00:13:50,371 INFO resource.ResourceUtils: ============================================================== 2024-04-16 00:13:50,373 INFO resource.ResourceUtils: No custom resources configured for spark.driver. 2024-04-16 00:13:50,373 INFO resource.ResourceUtils: ============================================================== 2024-04-16 00:13:50,374 INFO spark.SparkContext: Submitted application: Spark shell 2024-04-16 00:13:50,464 INFO resource.ResourceProfile: Default ResourceProfile created, executor resources: Map(memory -> name: memory, amount: 1024, script: , vendor: , offHeap -> name: offHeap, amount: 0, script: , vendor: ), task resources: Map(cpus -> name: cpus, amount: 1.0) 2024-04-16 00:13:50,521 INFO resource.ResourceProfile: Limiting resource is cpu 2024-04-16 00:13:50,522 INFO resource.ResourceProfileManager: Added ResourceProfile id: 0 2024-04-16 00:13:50,757 INFO spark.SecurityManager: Changing view acls to: root 2024-04-16 00:13:50,759 INFO spark.SecurityManager: Changing modify acls to: root 2024-04-16 00:13:50,766 INFO spark.SecurityManager: Changing view acls groups to: 2024-04-16 00:13:50,768 INFO spark.SecurityManager: Changing modify acls groups to: 2024-04-16 00:13:50,772 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: root; groups with view permissions: EMPTY; users with modify permissions: root; groups with modify permissions: EMPTY 2024-04-16 00:13:51,770 INFO util.Utils: Successfully started service 'sparkDriver' on port 35229. 
2024-04-16 00:13:51,890 INFO spark.SparkEnv: Registering MapOutputTracker 2024-04-16 00:13:51,982 INFO spark.SparkEnv: Registering BlockManagerMaster 2024-04-16 00:13:52,040 INFO storage.BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information 2024-04-16 00:13:52,041 INFO storage.BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up 2024-04-16 00:13:52,067 INFO spark.SparkEnv: Registering BlockManagerMasterHeartbeat 2024-04-16 00:13:52,175 INFO storage.DiskBlockManager: Created local directory at /tmp/blockmgr-e5bfea15-3d21-42bf-b9aa-0ef40cd479ce 2024-04-16 00:13:52,276 INFO memory.MemoryStore: MemoryStore started with capacity 2.7 GiB 2024-04-16 00:13:52,404 INFO spark.SparkEnv: Registering OutputCommitCoordinator 2024-04-16 00:13:52,574 INFO util.log: Logging initialized @31284ms to org.sparkproject.jetty.util.log.Slf4jLog 2024-04-16 00:13:52,827 INFO ui.JettyUtils: Start Jetty 0.0.0.0:4040 for SparkUI 2024-04-16 00:13:52,868 INFO server.Server: jetty-9.4.50.v20221201; built: 2022-12-01T22:07:03.915Z; git: da9a0b30691a45daf90a9f17b5defa2f1434f882; jvm 1.8.0_212-b10 2024-04-16 00:13:53,005 INFO server.Server: Started @31718ms 2024-04-16 00:13:53,328 INFO server.AbstractConnector: Started ServerConnector@1f85904a{HTTP/1.1, (http/1.1)}{0.0.0.0:4040} 2024-04-16 00:13:53,328 INFO util.Utils: Successfully started service 'SparkUI' on port 4040. 2024-04-16 00:13:53,451 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@66682e8f{/,null,AVAILABLE,@Spark} 2024-04-16 00:13:54,630 INFO client.StandaloneAppClient$ClientEndpoint: Connecting to master spark://cxk:7077... 
2024-04-16 00:13:54,835 INFO client.TransportClientFactory: Successfully created connection to cxk/192.168.134.128:7077 after 138 ms (0 ms spent in bootstraps) 2024-04-16 00:13:55,574 INFO cluster.StandaloneSchedulerBackend: Connected to Spark cluster with app ID app-20240416001355-0001 2024-04-16 00:13:55,679 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 38557. 2024-04-16 00:13:55,679 INFO netty.NettyBlockTransferService: Server created on cxk:38557 2024-04-16 00:13:55,684 INFO storage.BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy 2024-04-16 00:13:55,710 INFO storage.BlockManagerMaster: Registering BlockManager BlockManagerId(driver, cxk, 38557, None) 2024-04-16 00:13:55,756 INFO storage.BlockManagerMasterEndpoint: Registering block manager cxk:38557 with 2.7 GiB RAM, BlockManagerId(driver, cxk, 38557, None) 2024-04-16 00:13:55,795 INFO storage.BlockManagerMaster: Registered BlockManager BlockManagerId(driver, cxk, 38557, None) 2024-04-16 00:13:55,775 INFO client.StandaloneAppClient$ClientEndpoint: Executor added: app-20240416001355-0001/0 on worker-20240416000403-192.168.134.128-32833 (192.168.134.128:32833) with 1 core(s) 2024-04-16 00:13:55,819 INFO storage.BlockManager: Initialized BlockManager: BlockManagerId(driver, cxk, 38557, None) 2024-04-16 00:13:55,816 INFO cluster.StandaloneSchedulerBackend: Granted executor ID app-20240416001355-0001/0 on hostPort 192.168.134.128:32833 with 1 core(s), 1024.0 MiB RAM 2024-04-16 00:13:55,873 INFO client.StandaloneAppClient$ClientEndpoint: Executor added: app-20240416001355-0001/1 on worker-20240416000400-192.168.134.130-34168 (192.168.134.130:34168) with 1 core(s) 2024-04-16 00:13:55,874 INFO cluster.StandaloneSchedulerBackend: Granted executor ID app-20240416001355-0001/1 on hostPort 192.168.134.130:34168 with 1 core(s), 1024.0 MiB RAM 2024-04-16 00:13:55,874 INFO 
client.StandaloneAppClient$ClientEndpoint: Executor added: app-20240416001355-0001/2 on worker-20240416000359-192.168.134.129-46564 (192.168.134.129:46564) with 1 core(s) 2024-04-16 00:13:55,875 INFO cluster.StandaloneSchedulerBackend: Granted executor ID app-20240416001355-0001/2 on hostPort 192.168.134.129:46564 with 1 core(s), 1024.0 MiB RAM 2024-04-16 00:13:56,231 INFO client.StandaloneAppClient$ClientEndpoint: Executor updated: app-20240416001355-0001/1 is now RUNNING 2024-04-16 00:13:56,232 INFO client.StandaloneAppClient$ClientEndpoint: Executor updated: app-20240416001355-0001/2 is now RUNNING 2024-04-16 00:13:59,059 INFO client.StandaloneAppClient$ClientEndpoint: Executor updated: app-20240416001355-0001/0 is now RUNNING 2024-04-16 00:14:05,254 INFO cluster.StandaloneSchedulerBackend$StandaloneDriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (192.168.134.129:38862) with ID 2, ResourceProfileId 0 2024-04-16 00:14:05,357 INFO cluster.StandaloneSchedulerBackend$StandaloneDriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (192.168.134.130:47428) with ID 1, ResourceProfileId 0 2024-04-16 00:14:05,518 ERROR spark.SparkContext: Error initializing SparkContext. 
java.io.FileNotFoundException: File does not exist: hdfs://cxk:9000/spark-eventlog at org.apache.hadoop.hdfs.DistributedFileSystem$29.doCall(DistributedFileSystem.java:1757) at org.apache.hadoop.hdfs.DistributedFileSystem$29.doCall(DistributedFileSystem.java:1750) at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81) at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1765) at org.apache.spark.deploy.history.EventLogFileWriter.requireLogBaseDirAsDirectory(EventLogFileWriters.scala:77) at org.apache.spark.deploy.history.SingleEventLogFileWriter.start(EventLogFileWriters.scala:221) at org.apache.spark.scheduler.EventLoggingListener.start(EventLoggingListener.scala:81) at org.apache.spark.SparkContext.<init>(SparkContext.scala:616) at org.apache.spark.SparkContext$.getOrCreate(SparkContext.scala:2740) at org.apache.spark.sql.SparkSession$Builder.$anonfun$getOrCreate$2(SparkSession.scala:1026) at scala.Option.getOrElse(Option.scala:189) at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:1020) at org.apache.spark.repl.Main$.createSparkSession(Main.scala:112) at $line3.$read$$iw$$iw.<init>(<console>:15) at $line3.$read$$iw.<init>(<console>:42) at $line3.$read.<init>(<console>:44) at $line3.$read$.<init>(<console>:48) at $line3.$read$.<clinit>(<console>) at $line3.$eval$.$print$lzycompute(<console>:7) at $line3.$eval$.$print(<console>:6) at $line3.$eval.$print(<console>) at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at scala.tools.nsc.interpreter.IMain$ReadEvalPrint.call(IMain.scala:747) at scala.tools.nsc.interpreter.IMain$Request.loadAndRun(IMain.scala:1020) at scala.tools.nsc.interpreter.IMain.$anonfun$interpret$1(IMain.scala:568) at 
scala.reflect.internal.util.ScalaClassLoader.asContext(ScalaClassLoader.scala:36) at scala.reflect.internal.util.ScalaClassLoader.asContext$(ScalaClassLoader.scala:116) at scala.reflect.internal.util.AbstractFileClassLoader.asContext(AbstractFileClassLoader.scala:41) at scala.tools.nsc.interpreter.IMain.loadAndRunReq$1(IMain.scala:567) at scala.tools.nsc.interpreter.IMain.interpret(IMain.scala:594) at scala.tools.nsc.interpreter.IMain.interpret(IMain.scala:564) at scala.tools.nsc.interpreter.IMain.$anonfun$quietRun$1(IMain.scala:216) at scala.tools.nsc.interpreter.IMain.beQuietDuring(IMain.scala:206) at scala.tools.nsc.interpreter.IMain.quietRun(IMain.scala:216) at org.apache.spark.repl.SparkILoop.$anonfun$initializeSpark$2(SparkILoop.scala:83) at scala.collection.immutable.List.foreach(List.scala:431) at org.apache.spark.repl.SparkILoop.$anonfun$initializeSpark$1(SparkILoop.scala:83) at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23) at scala.tools.nsc.interpreter.ILoop.savingReplayStack(ILoop.scala:97) at org.apache.spark.repl.SparkILoop.initializeSpark(SparkILoop.scala:83) at org.apache.spark.repl.SparkILoop.$anonfun$process$4(SparkILoop.scala:165) at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23) at scala.tools.nsc.interpreter.ILoop.$anonfun$mumly$1(ILoop.scala:166) at scala.tools.nsc.interpreter.IMain.beQuietDuring(IMain.scala:206) at scala.tools.nsc.interpreter.ILoop.mumly(ILoop.scala:163) at org.apache.spark.repl.SparkILoop.loopPostInit$1(SparkILoop.scala:153) at org.apache.spark.repl.SparkILoop.$anonfun$process$10(SparkILoop.scala:221) at org.apache.spark.repl.SparkILoop.withSuppressedSettings$1(SparkILoop.scala:189) at org.apache.spark.repl.SparkILoop.startup$1(SparkILoop.scala:201) at org.apache.spark.repl.SparkILoop.process(SparkILoop.scala:236) at org.apache.spark.repl.Main$.doMain(Main.scala:78) at org.apache.spark.repl.Main$.main(Main.scala:58) at org.apache.spark.repl.Main.main(Main.scala) at 
sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52) at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:1020) at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:192) at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:215) at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:91) at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:1111) at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:1120) at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala) 2024-04-16 00:14:05,534 INFO spark.SparkContext: SparkContext is stopping with exitCode 0. 2024-04-16 00:14:05,866 INFO storage.BlockManagerMasterEndpoint: Registering block manager 192.168.134.129:45740 with 413.9 MiB RAM, BlockManagerId(2, 192.168.134.129, 45740, None) 2024-04-16 00:14:05,869 INFO storage.BlockManagerMasterEndpoint: Registering block manager 192.168.134.130:39114 with 413.9 MiB RAM, BlockManagerId(1, 192.168.134.130, 39114, None) 2024-04-16 00:14:05,979 INFO server.AbstractConnector: Stopped Spark@1f85904a{HTTP/1.1, (http/1.1)}{0.0.0.0:4040} 2024-04-16 00:14:06,122 INFO ui.SparkUI: Stopped Spark web UI at http://cxk:4040 2024-04-16 00:14:06,246 INFO cluster.StandaloneSchedulerBackend: Shutting down all executors 2024-04-16 00:14:06,273 INFO cluster.StandaloneSchedulerBackend$StandaloneDriverEndpoint: Asking each executor to shut down 2024-04-16 00:14:06,449 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped! 
2024-04-16 00:14:06,753 INFO memory.MemoryStore: MemoryStore cleared 2024-04-16 00:14:06,761 INFO storage.BlockManager: BlockManager stopped 2024-04-16 00:14:06,783 INFO storage.BlockManagerMaster: BlockManagerMaster stopped 2024-04-16 00:14:06,792 INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped! 2024-04-16 00:14:06,837 INFO spark.SparkContext: Successfully stopped SparkContext 2024-04-16 00:14:06,838 ERROR repl.Main: Failed to initialize Spark session. java.io.FileNotFoundException: File does not exist: hdfs://cxk:9000/spark-eventlog at org.apache.hadoop.hdfs.DistributedFileSystem$29.doCall(DistributedFileSystem.java:1757) at org.apache.hadoop.hdfs.DistributedFileSystem$29.doCall(DistributedFileSystem.java:1750) at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81) at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1765) at org.apache.spark.deploy.history.EventLogFileWriter.requireLogBaseDirAsDirectory(EventLogFileWriters.scala:77) at org.apache.spark.deploy.history.SingleEventLogFileWriter.start(EventLogFileWriters.scala:221) at org.apache.spark.scheduler.EventLoggingListener.start(EventLoggingListener.scala:81) at org.apache.spark.SparkContext.<init>(SparkContext.scala:616) at org.apache.spark.SparkContext$.getOrCreate(SparkContext.scala:2740) at org.apache.spark.sql.SparkSession$Builder.$anonfun$getOrCreate$2(SparkSession.scala:1026) at scala.Option.getOrElse(Option.scala:189) at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:1020) at org.apache.spark.repl.Main$.createSparkSession(Main.scala:112) at $line3.$read$$iw$$iw.<init>(<console>:15) at $line3.$read$$iw.<init>(<console>:42) at $line3.$read.<init>(<console>:44) at $line3.$read$.<init>(<console>:48) at $line3.$read$.<clinit>(<console>) at $line3.$eval$.$print$lzycompute(<console>:7) at $line3.$eval$.$print(<console>:6) at $line3.$eval.$print(<console>) at 
sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at scala.tools.nsc.interpreter.IMain$ReadEvalPrint.call(IMain.scala:747) at scala.tools.nsc.interpreter.IMain$Request.loadAndRun(IMain.scala:1020) at scala.tools.nsc.interpreter.IMain.$anonfun$interpret$1(IMain.scala:568) at scala.reflect.internal.util.ScalaClassLoader.asContext(ScalaClassLoader.scala:36) at scala.reflect.internal.util.ScalaClassLoader.asContext$(ScalaClassLoader.scala:116) at scala.reflect.internal.util.AbstractFileClassLoader.asContext(AbstractFileClassLoader.scala:41) at scala.tools.nsc.interpreter.IMain.loadAndRunReq$1(IMain.scala:567) at scala.tools.nsc.interpreter.IMain.interpret(IMain.scala:594) at scala.tools.nsc.interpreter.IMain.interpret(IMain.scala:564) at scala.tools.nsc.interpreter.IMain.$anonfun$quietRun$1(IMain.scala:216) at scala.tools.nsc.interpreter.IMain.beQuietDuring(IMain.scala:206) at scala.tools.nsc.interpreter.IMain.quietRun(IMain.scala:216) at org.apache.spark.repl.SparkILoop.$anonfun$initializeSpark$2(SparkILoop.scala:83) at scala.collection.immutable.List.foreach(List.scala:431) at org.apache.spark.repl.SparkILoop.$anonfun$initializeSpark$1(SparkILoop.scala:83) at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23) at scala.tools.nsc.interpreter.ILoop.savingReplayStack(ILoop.scala:97) at org.apache.spark.repl.SparkILoop.initializeSpark(SparkILoop.scala:83) at org.apache.spark.repl.SparkILoop.$anonfun$process$4(SparkILoop.scala:165) at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23) at scala.tools.nsc.interpreter.ILoop.$anonfun$mumly$1(ILoop.scala:166) at scala.tools.nsc.interpreter.IMain.beQuietDuring(IMain.scala:206) at scala.tools.nsc.interpreter.ILoop.mumly(ILoop.scala:163) at 
org.apache.spark.repl.SparkILoop.loopPostInit$1(SparkILoop.scala:153) at org.apache.spark.repl.SparkILoop.$anonfun$process$10(SparkILoop.scala:221) at org.apache.spark.repl.SparkILoop.withSuppressedSettings$1(SparkILoop.scala:189) at org.apache.spark.repl.SparkILoop.startup$1(SparkILoop.scala:201) at org.apache.spark.repl.SparkILoop.process(SparkILoop.scala:236) at org.apache.spark.repl.Main$.doMain(Main.scala:78) at org.apache.spark.repl.Main$.main(Main.scala:58) at org.apache.spark.repl.Main.main(Main.scala) at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52) at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:1020) at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:192) at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:215) at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:91) at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:1111) at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:1120) at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala) 2024-04-16 00:14:06,933 INFO util.ShutdownHookManager: Shutdown hook called 2024-04-16 00:14:06,934 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-d4f121f1-6ff3-4650-81f0-b2ebd2928cb8 2024-04-16 00:14:06,949 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-cdce8222-cdd7-44cf-abc3-73e9b0294561 2024-04-16 00:14:06,954 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-cdce8222-cdd7-44cf-abc3-73e9b0294561/repl-950297f3-e265-40db-a18d-0eed14333cf8

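The error above occurs because the Spark event log directory named in the exception (`hdfs://cxk:9000/spark-eventlog`) does not exist on HDFS. The fix is to create that path: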

hdfs dfs -mkdir hdfs://cxk:9000/spark-eventlog

One thing to note: everything after `mkdir` must be changed to match the path shown in your own error message.
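As a rough sketch of "use the path from your own error", the snippet below pulls the missing path out of the `FileNotFoundException` line and prints the matching `mkdir` command instead of running it, so you can check it first. The variable names `err` and `path` are only illustrative, and the sample line is copied from the log above. It uses `hdfs dfs`, the current form of the deprecated `hadoop dfs`. The path normally comes from `spark.eventLog.dir` in your Spark configuration, so it is worth confirming that setting as well.

```shell
# Sample exception line taken from the log above; replace it with your own.
err='java.io.FileNotFoundException: File does not exist: hdfs://cxk:9000/spark-eventlog'

# Extract the token that follows "File does not exist: " (up to the next space).
path=$(printf '%s\n' "$err" | sed -n 's/.*File does not exist: \([^ ]*\).*/\1/p')

# Print the command rather than executing it; -p also creates missing parent directories.
echo "hdfs dfs -mkdir -p $path"
```

After running the printed command, starting `spark-shell` again should get past the event log check, assuming the HDFS user has write permission on that directory.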
