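Custom backup and restore of Kubernetes cluster data (stored in etcd) with Velero and MinIO

Deploy the MinIO object store

#Pull the image; the official image is minio/minio:RELEASE.2022-04-12T06-55-35Z

root@k8s-deploy:~# docker pull registry.cn-hangzhou.aliyuncs.com/zhangshijie/minio:RELEASE.2022-04-12T06-55-35Z

#Create the MinIO data directory; it can be a dedicated data disk or shared storage

root@k8s-deploy:~# mkdir -p /data/minio

#Create the MinIO container. If no credentials are set, the default username/password is minioadmin/minioadmin; they can be customized with environment variables, as below:

#9000 is the data read/write (API) port; 9999 is the web console port

#server /data passes arguments to the container, setting /data as the data directory

#--console-address '0.0.0.0:9999' passes an argument to the container that sets the console port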

docker run --name minio \
  -p 9000:9000 \
  -p 9999:9999 \
  -d --restart=always \
  -e "MINIO_ROOT_USER=admin" \
  -e "MINIO_ROOT_PASSWORD=12345678" \
  -v /data/minio/data:/data \
  registry.cn-hangzhou.aliyuncs.com/zhangshijie/minio:RELEASE.2022-04-12T06-55-35Z server /data \
  --console-address '0.0.0.0:9999'

#Check the logs for errors

root@k8s-deploy:~# docker logs -f 78ac9d4d3b13

API: http://172.17.0.2:9000 http://127.0.0.1:9000

Console: http://0.0.0.0:9999

Documentation: https://docs.min.io

Finished loading IAM sub-system (took 0.0s of 0.0s to load data).

You are running an older version of MinIO released 1 year ago

Update: Run `mc admin update`

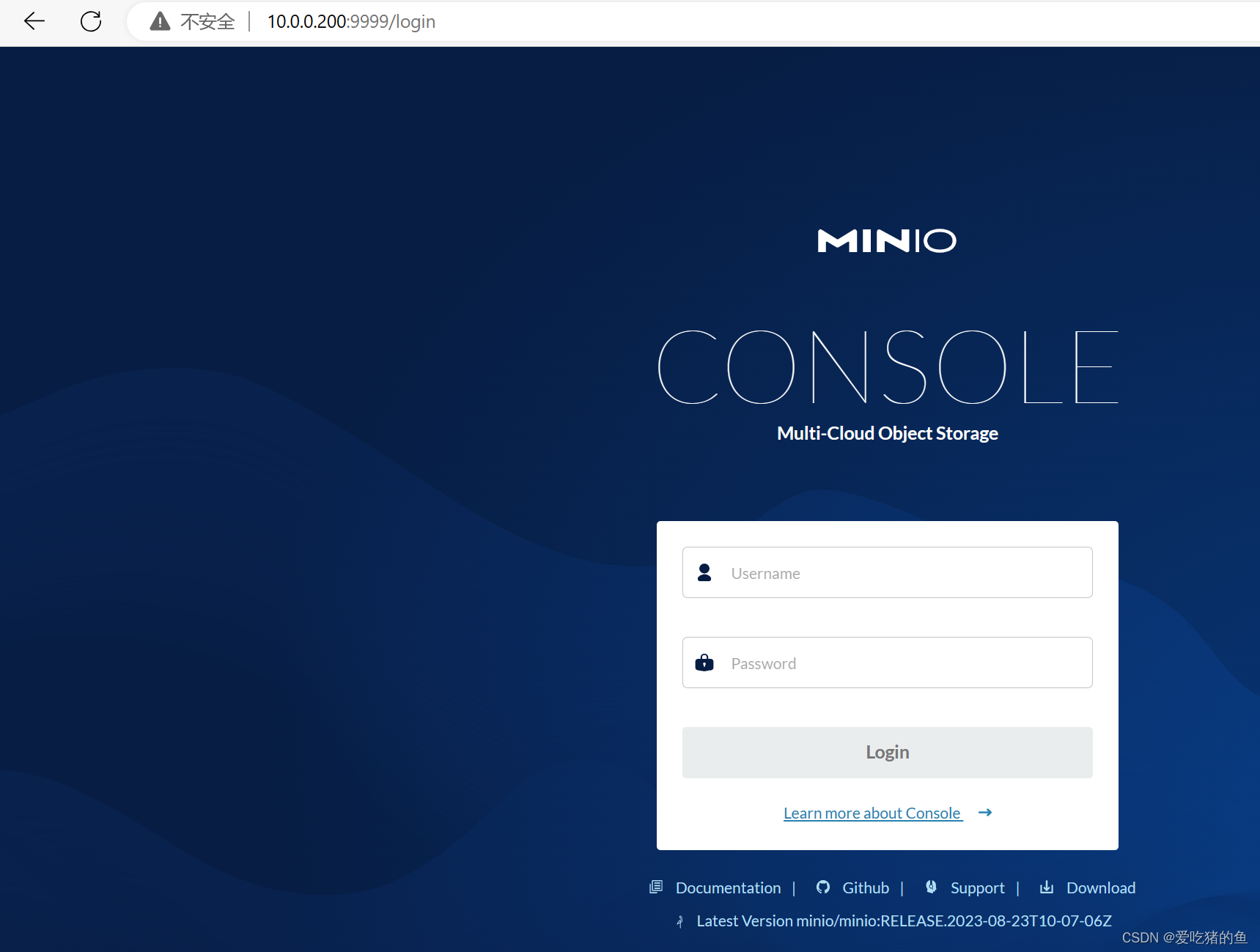

登录minio控制台:admin/12345678

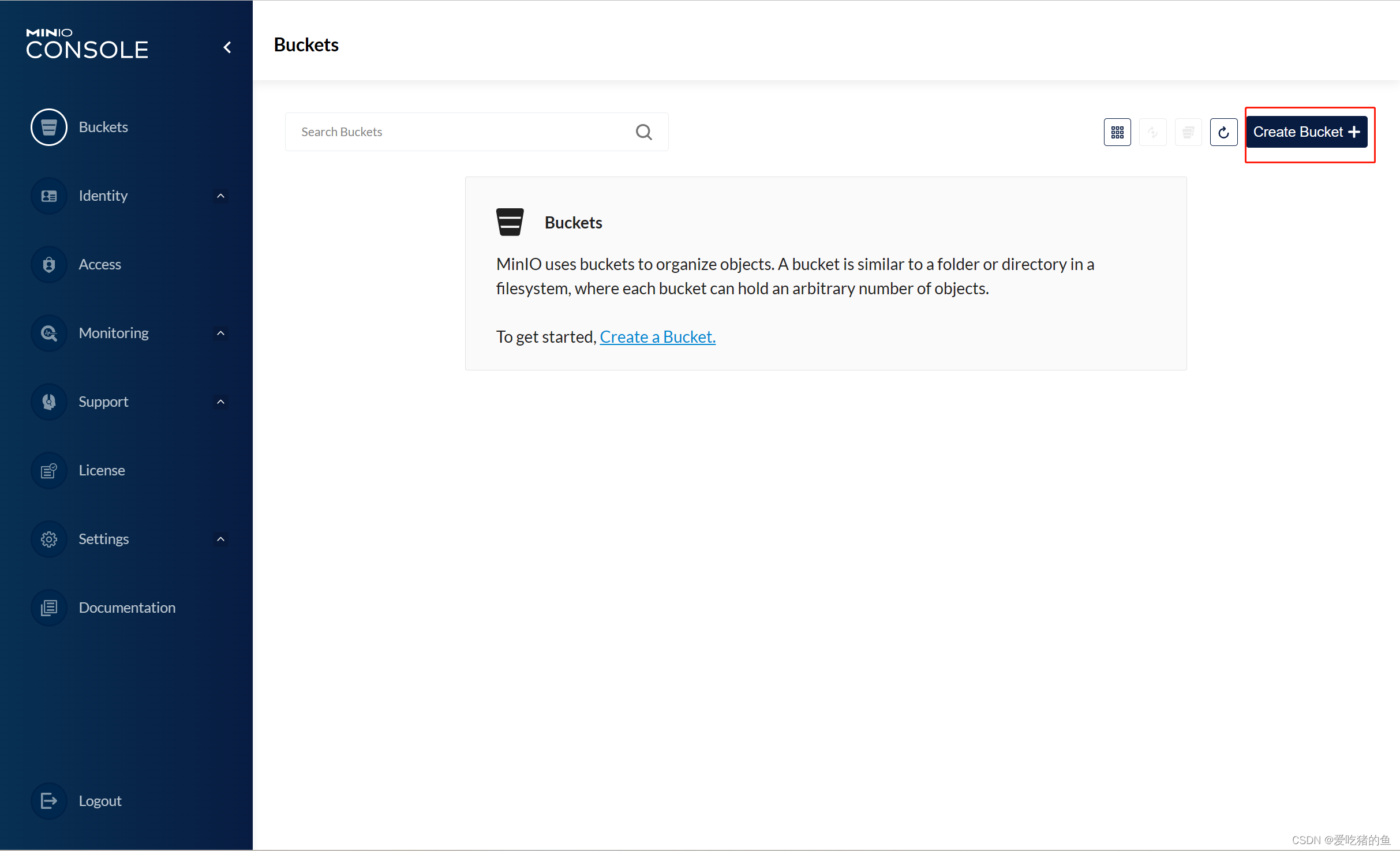

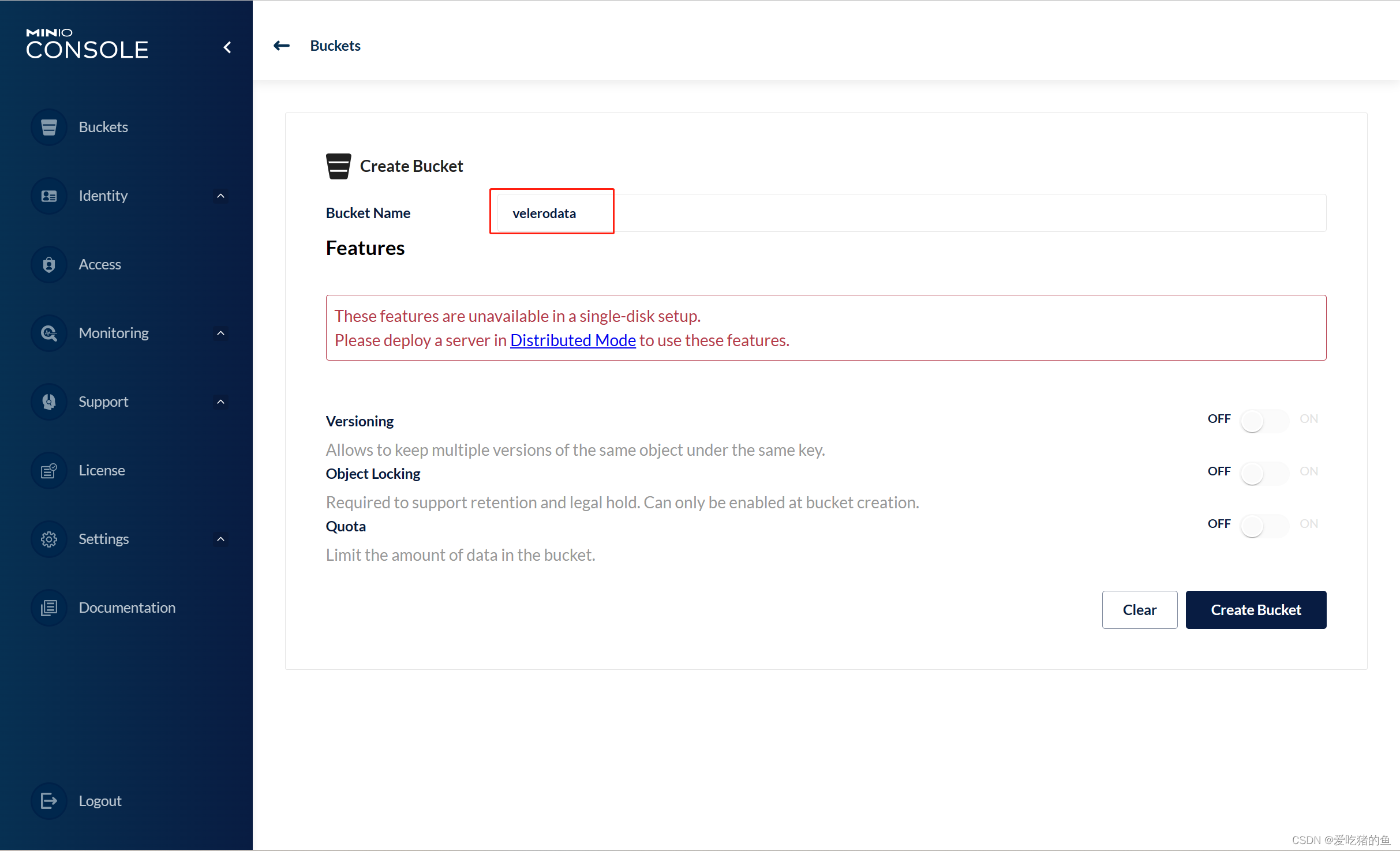

创建bucket,一般一个项目一个bucket

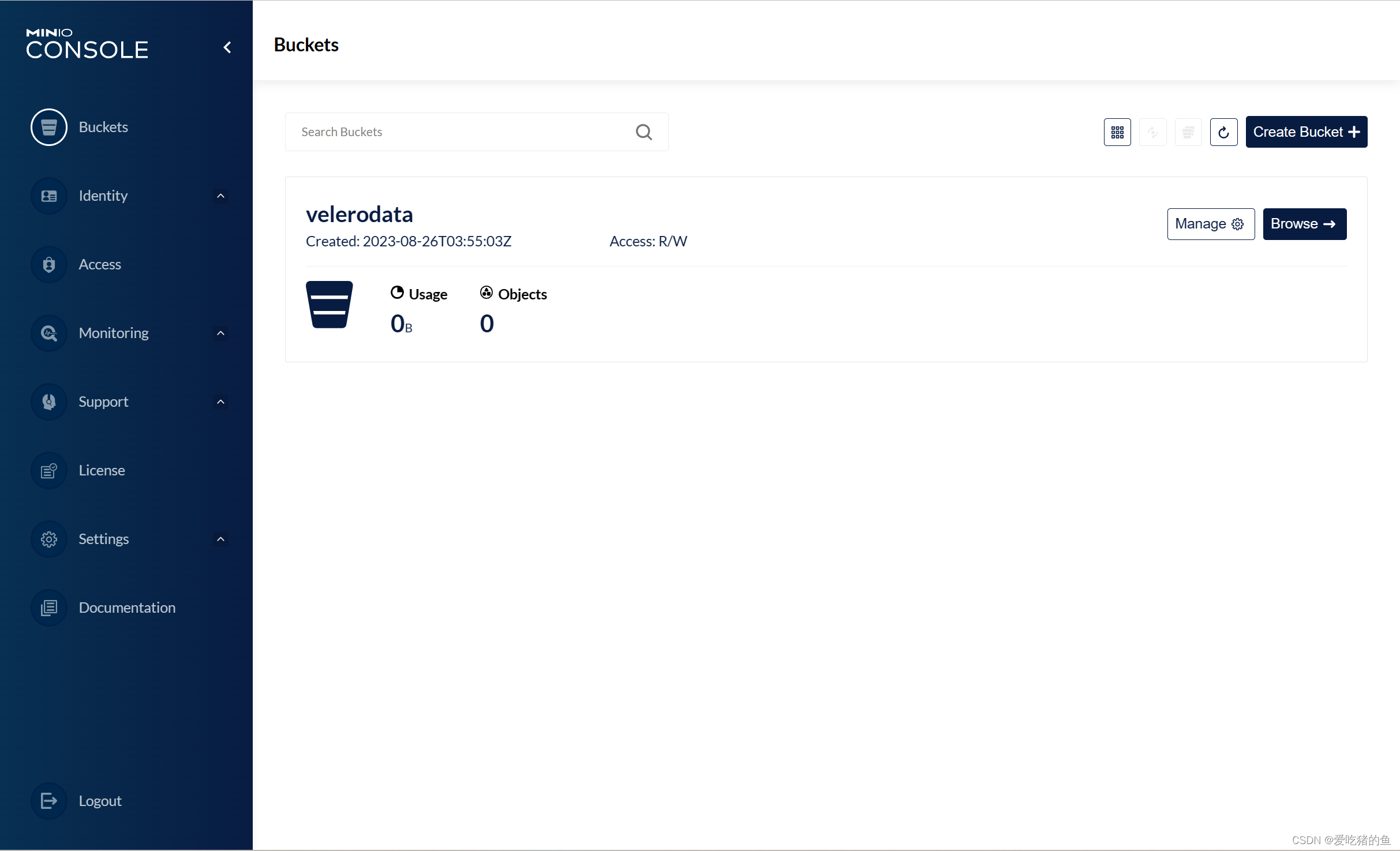

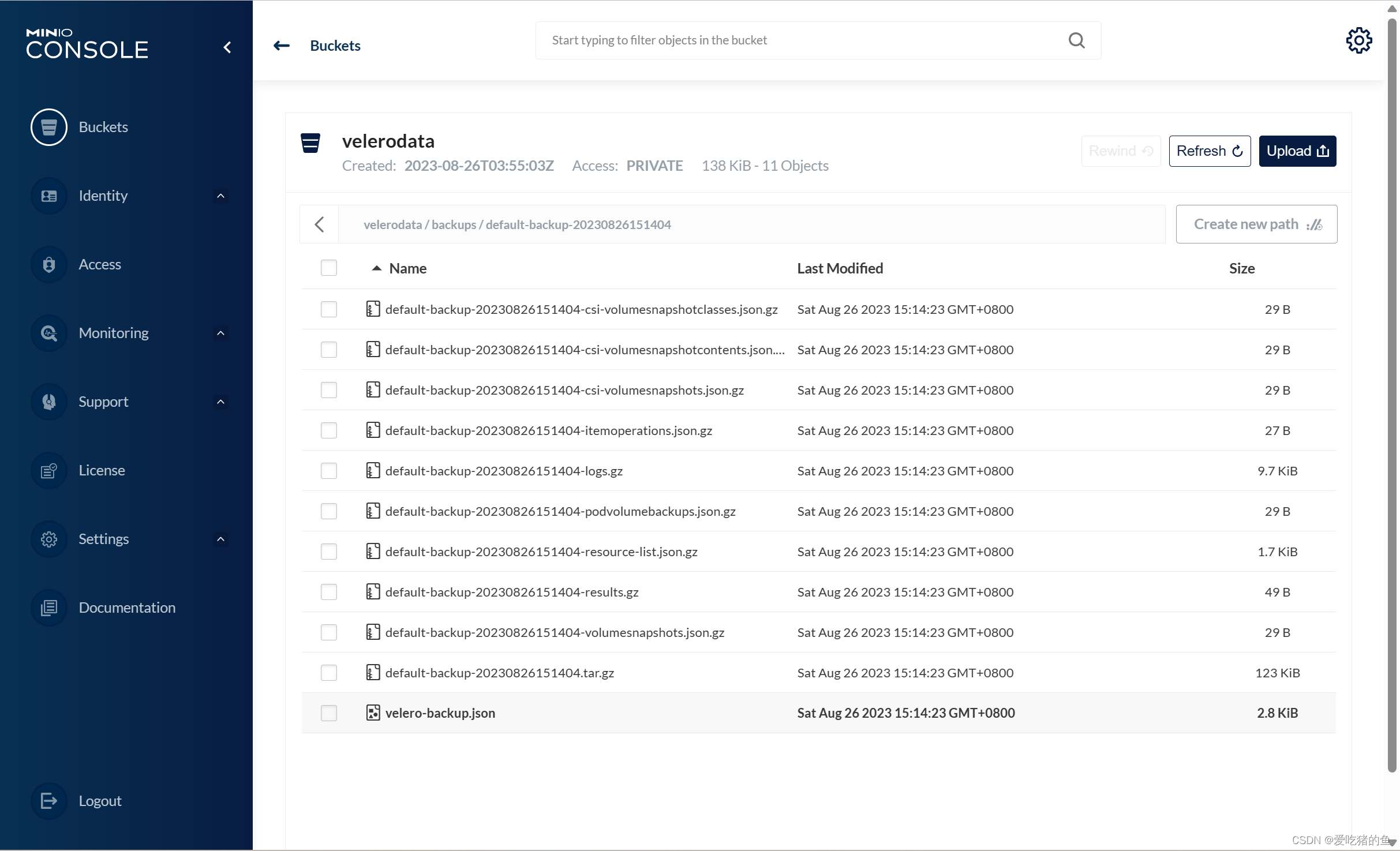

bucket 名称:velerodata,后期会使用;下面的选项都不开起

验证

Deploy Velero

https://github.com/vmware-tanzu/velero #check version compatibility here

#Download and install Velero. On which server? One that can access the kube-apiserver; master1 is used here

wget https://github.com/vmware-tanzu/velero/releases/download/v1.11.1/velero-v1.11.1-linux-amd64.tar.gz

tar xvf velero-v1.11.1-linux-amd64.tar.gz

cp velero-v1.11.1-linux-amd64/velero /usr/local/bin/

Configure Velero authentication

Velero needs credentials for both the MinIO object store and the Kubernetes API server

#Configure the MinIO object store credentials

root@k8s-deploy:~# mkdir /data/velero -p

root@k8s-deploy:~# cd /data/velero/

#Create the MinIO credentials file in /data/velero/ with the following content

cat velero-auth.txt

[default]

aws_access_key_id = admin

aws_secret_access_key = 12345678
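The credentials file above can also be generated non-interactively. A minimal sketch using a here-document; `write_velero_auth` is a hypothetical helper name (not part of Velero), and the values passed to it are the MinIO root user and password configured earlier. `chmod 600` restricts the secret to its owner.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a Velero/AWS-style credentials file for MinIO.
# Usage: write_velero_auth <access_key_id> <secret_access_key> [output_file]
write_velero_auth() {
  key_id="$1"
  secret="$2"
  out="${3:-velero-auth.txt}"
  # Same [default] profile layout as velero-auth.txt above.
  cat > "$out" <<EOF
[default]
aws_access_key_id = ${key_id}
aws_secret_access_key = ${secret}
EOF
  # Keep the secret readable by its owner only.
  chmod 600 "$out"
}
```

For this walkthrough: `write_velero_auth admin 12345678 /data/velero/velero-auth.txt`.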

#Configure API server authentication

#Prepare the CSR file used to issue a certificate for the new user awsuser

root@k8s-master1:/data/velero# cat awsuser-csr.json

{
  "CN": "awsuser",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}

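#Prepare the certificate-signing tools (or reuse the existing admin certificate)

#Download and install cfssl. It can also be installed with apt install golang-cfssl, but that version is too old, so download the release binaries instead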

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssl_1.6.1_linux_amd64

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssljson_1.6.1_linux_amd64

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssl-certinfo_1.6.1_linux_amd64

mv cfssl-certinfo_1.6.1_linux_amd64 cfssl-certinfo

mv cfssl_1.6.1_linux_amd64 cfssl

mv cfssljson_1.6.1_linux_amd64 cfssljson

cp cfssl-certinfo cfssl cfssljson /usr/local/bin/

chmod a+x /usr/local/bin/cfssl*

#Sign the certificate. Signing needs a ca-config.json file, which is in /etc/kubeasz/clusters/k8s-cluster1/ssl on the deploy node

root@k8s-deploy:/data/velero# scp /etc/kubeasz/clusters/k8s-cluster1/ssl/ca-config.json 10.0.0.201:/data/velero

#The command is the same for Kubernetes >= 1.24.x and <= 1.23:

cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem -ca-key=/etc/kubernetes/ssl/ca-key.pem -config=./ca-config.json -profile=kubernetes ./awsuser-csr.json | cfssljson -bare awsuser

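#Copy the certificates into the Kubernetes certificate directory (optional; if skipped, point --client-certificate and --client-key below at /data/velero/awsuser.pem and /data/velero/awsuser-key.pem instead)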

root@k8s-master1:/data/velero# cp awsuser-key.pem awsuser.pem /etc/kubernetes/ssl/

#Generate the kubeconfig file for awsuser: set the cluster entry

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=https://10.0.0.230:6443 \
  --kubeconfig=/root/.kube/awsuser.kubeconfig

#Set the client certificate credentials

kubectl config set-credentials awsuser \
  --client-certificate=/etc/kubernetes/ssl/awsuser.pem \
  --client-key=/etc/kubernetes/ssl/awsuser-key.pem \
  --embed-certs=true \
  --kubeconfig=/root/.kube/awsuser.kubeconfig

#Set the context parameters

kubectl config set-context kubernetes \
  --cluster=kubernetes \
  --user=awsuser \
  --namespace=velero-system \
  --kubeconfig=/root/.kube/awsuser.kubeconfig

#Make it the default context

kubectl config use-context kubernetes --kubeconfig=/root/.kube/awsuser.kubeconfig

#Bind the awsuser user (the certificate CN) to the cluster-admin ClusterRole

kubectl create clusterrolebinding awsuser --clusterrole=cluster-admin --user=awsuser

#Create the namespace

kubectl create ns velero-system

#Run the installation. On a public cloud, --use-volume-snapshots can be set to true; region=minio is the region name (on a public cloud, e.g., a North China or South China region); s3ForcePathStyle="true" selects path-style addressing for the object store

#/root/.kube/config can be used instead of /root/.kube/awsuser.kubeconfig

velero --kubeconfig /root/.kube/awsuser.kubeconfig \
  install \
  --provider aws \
  --plugins registry.cn-hangzhou.aliyuncs.com/zhangshijie/velero-plugin-for-aws:v1.7.1 \
  --bucket velerodata \
  --secret-file ./velero-auth.txt \
  --use-volume-snapshots=false \
  --namespace velero-system \
  --backup-location-config region=minio,s3ForcePathStyle="true",s3Url=http://10.0.0.200:9000

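#Test a backup of the default namespace. --include-cluster-resources=true also backs up cluster-scoped resources that do not belong to any namespace, such as PVs; it is best to include it when backing up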

DATE=`date +%Y%m%d%H%M%S`

velero backup create default-backup-${DATE} --include-cluster-resources=true --include-namespaces default --kubeconfig=/root/.kube/awsuser.kubeconfig --namespace velero-system

#Verify the backup

velero backup describe default-backup-20230826151404 --kubeconfig=/root/.kube/awsuser.kubeconfig --namespace velero-system
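Velero backups are Kubernetes objects, so a name built from `${DATE}` and a namespace should be a valid DNS-1123 subdomain (lowercase alphanumerics, `-` and `.`, starting and ending with an alphanumeric, at most 253 characters). A small sketch to build and check such names before calling velero; `backup_name` and `is_valid_k8s_name` are hypothetical helpers, not part of Velero.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of Velero) for naming backups safely.

# Print <namespace>-backup-<timestamp>; the timestamp defaults to now.
backup_name() {
  ns="$1"
  ts="${2:-$(date +%Y%m%d%H%M%S)}"
  printf '%s-backup-%s\n' "$ns" "$ts"
}

# Succeed if $1 is a DNS-1123 subdomain: at most 253 characters, made of
# dot-separated labels of lowercase letters, digits and '-', each label
# starting and ending with a letter or digit.
is_valid_k8s_name() {
  name="$1"
  [ "${#name}" -le 253 ] || return 1
  printf '%s\n' "$name" |
    grep -Eq '^[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*$'
}
```

Example: `name=$(backup_name default); is_valid_k8s_name "$name" && velero backup create "$name" --include-cluster-resources=true --include-namespaces default --kubeconfig=/root/.kube/awsuser.kubeconfig --namespace velero-system`.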

Verify the backup of the default namespace

Delete a pod and verify the restore

#List the pods

root@k8s-master1:/data/velero# kubectl get pod

NAME READY STATUS RESTARTS AGE

net-test1 1/1 Running 6 (4h12m ago) 13d

net-test2 1/1 Running 6 (4h11m ago) 13d

net-test5 1/1 Running 7 (4h12m ago) 12d

#Delete a pod

root@k8s-master1:/data/velero# kubectl delete pod net-test1

pod "net-test1" deleted

root@k8s-master1:/data/velero# kubectl get pod

NAME READY STATUS RESTARTS AGE

net-test2 1/1 Running 6 (4h17m ago) 13d

net-test5 1/1 Running 7 (4h17m ago) 12d

#Restore from the backup; --wait makes the command return only after the restore has finished

root@k8s-master1:/data/velero# velero restore create --from-backup default-backup-20230826151404 --wait --kubeconfig=/root/.kube/awsuser.kubeconfig --namespace velero-system

Restore request "default-backup-20230826151404-20230826152518" submitted successfully.

Waiting for restore to complete. You may safely press ctrl-c to stop waiting - your restore will continue in the background.

......................

Restore completed with status: Completed. You may check for more information using the commands `velero restore describe default-backup-20230826151404-20230826152518` and `velero restore logs default-backup-20230826151404-20230826152518`.

#Verify the restore

root@k8s-master1:/data/velero# kubectl get pod

NAME READY STATUS RESTARTS AGE

net-test1 1/1 Running 0 23s

net-test2 1/1 Running 6 (4h17m ago) 13d

net-test5 1/1 Running 7 (4h17m ago) 12d

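Back up specific resource objects

velero backup create pod-backup-202308161135 --include-cluster-resources=true --ordered-resources 'pods=myserver/net-test1,default/net-test1;services=myserver/test-svc1,default/svc-test1' --namespace velero-system

Note: --ordered-resources sets the order in which the listed objects are backed up; on its own it does not limit the backup to them. Without --include-namespaces, all namespaces are included.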

Back up every namespace in a batch

root@k8s-master1:~# cat /data/velero/ns-back.sh

#!/bin/bash
#List all namespaces; NR>1 skips only the header line (NR>2 would also skip the first namespace)
NS_NAME=`kubectl get ns | awk '{if (NR>1){print $1}}'`
DATE=`date +%Y%m%d%H%M%S`
cd /data/velero/
for i in $NS_NAME;do
  velero backup create ${i}-ns-backup-${DATE} \
    --include-cluster-resources=true \
    --include-namespaces ${i} \
    --kubeconfig=/root/.kube/config \
    --namespace velero-system
done
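The namespace list in ns-back.sh comes from parsing `kubectl get ns` output. A sketch that separates parsing from the backup step so the plan can be reviewed before anything runs; the helper names are hypothetical. `ns_from_table` reads the default table output on stdin and skips only the header row; `plan_backups` prints, rather than runs, one velero command per namespace.

```bash
#!/usr/bin/env bash
# Hypothetical helpers, not part of kubectl or Velero.

# Read `kubectl get ns` table output on stdin; print one namespace per line.
# NR>1 skips the header row only; NF skips blank lines.
ns_from_table() {
  awk 'NR>1 && NF {print $1}'
}

# Read namespaces on stdin; print (do not run) the velero command for each.
plan_backups() {
  ts="$1"
  while read -r ns; do
    [ -n "$ns" ] || continue
    printf 'velero backup create %s-ns-backup-%s --include-cluster-resources=true --include-namespaces %s --namespace velero-system\n' \
      "$ns" "$ts" "$ns"
  done
}
```

Usage: `kubectl get ns | ns_from_table | plan_backups "$(date +%Y%m%d%H%M%S)"`; once the printed commands look right, pipe the same output into `sh` to execute them.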

Building container images with nerdctl + BuildKit + containerd

Install BuildKit

#Download the BuildKit release tarball

root@k8s-master1:/usr/local/src# wget https://github.com/moby/buildkit/releases/download/v0.12.1/buildkit-v0.12.1.linux-amd64.tar.gz

#Extract it

root@k8s-master1:/usr/local/src# tar xvf buildkit-v0.12.1.linux-amd64.tar.gz

#Move the binaries to /usr/local/bin/

root@k8s-master1:/usr/local/src# mv bin/* /usr/local/bin/

#Create the buildkit.socket unit file manually

vim /lib/systemd/system/buildkit.socket

[Unit]

Description=BuildKit

Documentation=https://github.com/moby/buildkit

[Socket]

ListenStream=%t/buildkit/buildkitd.sock

[Install]

WantedBy=sockets.target

#Create the buildkitd.service unit file manually

vim /lib/systemd/system/buildkitd.service

[Unit]
Description=BuildKit
Requires=buildkit.socket
After=buildkit.socket
Documentation=https://github.com/moby/buildkit

[Service]
ExecStart=/usr/local/bin/buildkitd --oci-worker=false --containerd-worker=true

[Install]
WantedBy=multi-user.target

#Reload systemd and restart buildkitd

root@k8s-master1:/usr/local/src# systemctl daemon-reload

root@k8s-master1:/usr/local/src# systemctl restart buildkitd

#Enable it at boot

root@k8s-master1:/usr/local/src# systemctl enable buildkitd

Created symlink /etc/systemd/system/multi-user.target.wants/buildkitd.service → /lib/systemd/system/buildkitd.service.

#Check that it started successfully

root@k8s-master1:/usr/local/src# systemctl status buildkitd

● buildkitd.service - BuildKit

Loaded: loaded (/lib/systemd/system/buildkitd.service; enabled; vendor preset: enabled)

Active: active (running) since Sun 2023-08-27 11:56:16 CST; 24s ago

#Enable nerdctl bash completion: add the following line to /etc/profile, then reload it

vim /etc/profile

source <(nerdctl completion bash)

source /etc/profile

#Test

#Log in to the image registry

root@k8s-master1:/usr/local/src# nerdctl login harbor.canghailyt.com

Enter Username: admin

Enter Password:

WARN[0006] skipping verifying HTTPS certs for "harbor.canghailyt.com"

WARNING: Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

#Retag the image

root@k8s-master1:/usr/local/src# nerdctl tag easzlab.io.local:5000/calico/cni:v3.24.6 harbor.canghailyt.com/base/cni:v3.24.6

#Push the image

root@k8s-master1:/usr/local/src# nerdctl push harbor.canghailyt.com/base/cni:v3.24.6

INFO[0000] pushing as a reduced-platform image (application/vnd.docker.distribution.manifest.v2+json, sha256:64ab9209ea185075284e351641579e872ab1be0cf138b38498de2c0bfdb9c148)

WARN[0000] skipping verifying HTTPS certs for "harbor.canghailyt.com"

manifest-sha256:64ab9209ea185075284e351641579e872ab1be0cf138b38498de2c0bfdb9c148: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:ca9fea5e07cb5e807b3814a55d6a33829b9633c31b643ceb4b9757f41bab7c55: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 3.3 s total: 7.2 Ki (2.2 KiB/s)

Building an image

root@k8s-master1:/usr/local/src/ubuntu2204-nginx-1.22# ll

total 1120

drwxr-xr-x 3 root root 4096 Aug 17 12:00 ./

drwxr-xr-x 4 root root 4096 Aug 27 12:28 ../

-rw-r--r-- 1 root root 931 Aug 17 12:00 Dockerfile

-rw-r--r-- 1 root root 306 Aug 17 11:56 build-command.sh

-rw-r--r-- 1 root root 38751 Aug 5 2022 frontend.tar.gz

drwxr-xr-x 3 root root 4096 Aug 5 2022 html/

-rw-r--r-- 1 root root 1073322 May 24 2022 nginx-1.22.0.tar.gz

-rw-r--r-- 1 root root 2812 Oct 3 2020 nginx.conf

-rw-r--r-- 1 root root 1139 Aug 5 2022 sources.list

root@k8s-master1:/usr/local/src/ubuntu2204-nginx-1.22# cat Dockerfile

FROM registry.cn-hangzhou.aliyuncs.com/zhangshijie/ubuntu:22.04

MAINTAINER "jack 2973707860@qq.com"

#ADD sources.list /etc/apt/sources.list

RUN apt update && apt install -y iproute2 ntpdate tcpdump telnet traceroute nfs-kernel-server nfs-common lrzsz tree openssl libssl-dev libpcre3 libpcre3-dev zlib1g-dev gcc openssh-server iotop unzip zip make

ADD nginx-1.22.0.tar.gz /usr/local/src/

RUN cd /usr/local/src/nginx-1.22.0 && ./configure --prefix=/apps/nginx && make && make install && ln -sv /apps/nginx/sbin/nginx /usr/bin

RUN groupadd -g 2088 nginx && useradd -g nginx -s /usr/sbin/nologin -u 2088 nginx && chown -R nginx:nginx /apps/nginx

ADD nginx.conf /apps/nginx/conf/

ADD frontend.tar.gz /apps/nginx/html/

EXPOSE 80 443

#ENTRYPOINT ["nginx"]

CMD ["nginx","-g","daemon off;"]

root@k8s-master1:/usr/local/src/ubuntu2204-nginx-1.22# cat build-command.sh

#!/bin/bash

/usr/local/bin/nerdctl build -t harbor.canghailyt.com/base/nginx-base:1.22.0 .

/usr/local/bin/nerdctl push harbor.canghailyt.com/base/nginx-base:1.22.0

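#When building, nerdctl fetches the base image's metadata from the registry whether or not the image already exists locally, so make sure the registry is reachable when using nerdctl; otherwise the build fails.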

root@k8s-master1:/usr/local/src/ubuntu2204-nginx-1.22# bash build-command.sh

[+] Building 603.4s (12/12) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 970B 0.0s

=> [internal] load metadata for registry.cn-hangzhou.aliyuncs.com/zhangshijie/ubuntu:22.04 1.8s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [1/7] FROM registry.cn-hangzhou.aliyuncs.com/zhangshijie/ubuntu:22.04@sha256:6ab47c56b3ce16283ea128ebafd78f5f4a6b817 5.2s

=> => resolve registry.cn-hangzhou.aliyuncs.com/zhangshijie/ubuntu:22.04@sha256:6ab47c56b3ce16283ea128ebafd78f5f4a6b817 0.0s

=> => sha256:b237fe92c4173e4dfb3ba82e76e5fed4b16186a6161e07af15814cb40eb9069d 29.54MB / 29.54MB 3.0s

=> => extracting sha256:b237fe92c4173e4dfb3ba82e76e5fed4b16186a6161e07af15814cb40eb9069d 2.1s

=> [internal] load build context 0.1s

=> => transferring context: 1.12MB 0.1s

=> [2/7] RUN apt update && apt install -y iproute2 ntpdate tcpdump telnet traceroute nfs-kernel-server nfs-common 541.7s

=> [3/7] ADD nginx-1.22.0.tar.gz /usr/local/src/ 0.3s

=> [4/7] RUN cd /usr/local/src/nginx-1.22.0 && ./configure --prefix=/apps/nginx && make && make install && ln -sv /ap 24.6s

=> [5/7] RUN groupadd -g 2088 nginx && useradd -g nginx -s /usr/sbin/nologin -u 2088 nginx && chown -R nginx.nginx /a 0.1s

=> [6/7] ADD nginx.conf /apps/nginx/conf/ 0.0s

=> [7/7] ADD frontend.tar.gz /apps/nginx/html/ 0.0s

=> exporting to docker image format 27.9s

=> => exporting layers 18.5s

=> => exporting manifest sha256:67e7286a47a10cb1181168efa26907024a774469b17de3f8585b46f2f088fea0 0.0s

=> => exporting config sha256:e3866e004b5824af8385a0566c74475674373ccb92e4d756eed98701c9ae2bf9 0.0s

=> => sending tarball 9.4s

Loaded image: harbor.canghailyt.com/base/nginx-base:1.22.0

INFO[0000] pushing as a reduced-platform image (application/vnd.docker.distribution.manifest.v2+json, sha256:67e7286a47a10cb1181168efa26907024a774469b17de3f8585b46f2f088fea0)

WARN[0000] skipping verifying HTTPS certs for "harbor.canghailyt.com"

manifest-sha256:67e7286a47a10cb1181168efa26907024a774469b17de3f8585b46f2f088fea0: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:e3866e004b5824af8385a0566c74475674373ccb92e4d756eed98701c9ae2bf9: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 2.8 s total: 5.3 Ki (1.9 KiB/s)

#Test by running a container

root@k8s-master1:/usr/local/src/ubuntu2204-nginx-1.22# nerdctl run -d -p 80:80 harbor.canghailyt.com/base/nginx-base:1.22.0

760968e642a0931acf3761618db5d11d92bc830d9be8fefd20493b2cc68f45a1

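Summary of the Pod lifecycle, common Pod states, and the Pod scheduling flow

Pod lifecycle

1. Creating a Pod

A create request is submitted to the API server; the API server performs authentication, authorization and admission control, then writes the object to etcd

kube-scheduler runs the scheduling process and binds the Pod to a node

The kubelet on that node creates and starts the Pod's containers, then runs postStart

The livenessProbe runs periodically and the Pod enters Running; once the readinessProbe passes, the Pod is added to the endpoints of the matching Service

The Pod starts receiving client requests

2. Deleting a Pod

A delete request is submitted to the API server; the API server performs authentication, authorization and admission control, then records the deletion in etcd

The Pod is marked "Terminating", removed from the Service's Endpoints list, and no longer receives client requests

The Pod's preStop hook runs

The kubelet sends SIGTERM (the normal termination signal) to the main process of each container, letting the container know it will be shut down soon

terminationGracePeriodSeconds: 60 #Optional termination grace period for Pod deletion. If it is set, Kubernetes waits until it expires; otherwise it waits at most 30s. This waiting time is called the graceful termination period and defaults to 30 seconds. Note that the grace period runs in parallel with the preStop hook and the SIGTERM signal, so Kubernetes may not wait for the preStop hook to finish: if the main process has still not exited when the grace period (30 seconds by default) ends, the Pod is forcibly terminated.

If containers are still running when the grace period ends, SIGKILL is sent to them and the Pod is deleted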
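The SIGTERM step only helps if the container's main process handles the signal; on Linux, PID 1 in a container ignores signals it has no handler for (apart from SIGKILL), so an unhandled SIGTERM just runs out the grace period. A minimal sketch of a shell entrypoint that traps TERM, cleans up and exits before SIGKILL is needed; `graceful_main` is a hypothetical name, not taken from the source.

```bash
#!/usr/bin/env bash
# Hypothetical container entrypoint sketch: shut down cleanly on SIGTERM.
graceful_main() {
  on_term() {
    echo "SIGTERM received, cleaning up"
    # Real cleanup (finish in-flight requests, flush data) would go here.
    exit 0
  }
  trap on_term TERM
  echo "started"
  # Sleep in the background and wait for it: wait returns as soon as a
  # trapped signal arrives, so the handler runs without delay.
  while true; do
    sleep 1 &
    wait $!
  done
}
```

Waiting on a background `sleep` instead of running it in the foreground matters: the shell only runs a trap after the current foreground command finishes, whereas `wait` is interrupted immediately.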

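Common Pod states

These mix Pod phases (e.g., Pending, Running, Failed, Unknown), Pod conditions (e.g., PodScheduled, Initialized, Ready) and container status reasons (e.g., CrashLoopBackOff, ErrImagePull).

Unschedulable: #The Pod cannot be scheduled; kube-scheduler found no suitable node

PodScheduled: #The Pod is being scheduled. When kube-scheduler starts, the Pod has not yet been assigned to a node; once a suitable node is selected, the assignment is written to etcd and the Pod is bound to that node

Pending: #The Pod has been created but not all of its containers have been created yet; for a Pod stuck in this state, check, for example, whether the storage it depends on can be mounted (permissions)

Failed: #A container in the Pod failed, so the Pod is not working correctly

Unknown: #The Pod's current state cannot be obtained for some reason, usually because communication with the Pod's node failed

Initialized: #All init containers in the Pod have completed

ImagePullBackOff: #The Pod's node failed to pull the image

Running: #The Pod's containers have been created and started

Ready: #The Pod's containers are ready to serve requests

Error: #An error occurred while the Pod was starting

NodeLost: #The Pod's node has lost contact

Waiting: #The Pod is waiting to start

Terminating: #The Pod is being destroyed

CrashLoopBackOff: #A container keeps crashing and the kubelet keeps restarting it, with an increasing back-off delay

InvalidImageName: #The node cannot parse the image name, so the image cannot be pulled

ImageInspectError: #The image cannot be inspected, usually because it is incomplete

ErrImageNeverPull: #The pull policy forbids pulling (imagePullPolicy: Never) and the image is not present on the node

RegistryUnavailable: #The image registry is unavailable, e.g., a network problem or Harbor is down

ErrImagePull: #Image pull error, e.g., a timeout or a forcibly aborted download

CreateContainerConfigError: #The kubelet could not generate the container configuration (e.g., a referenced ConfigMap or Secret is missing)

CreateContainerError: #Container creation failed

RunContainerError: #The container failed to run, e.g., there is no daemon process running as PID 1 in the container

ContainersNotInitialized: #The Pod's init containers have not all completed

ContainersNotReady: #The Pod's containers are not all ready

ContainerCreating: #The Pod's containers are being created

PodInitializing: #The Pod is initializing

DockerDaemonNotReady: #The Docker service on the node is not running

NetworkPluginNotReady: #The network plugin is not ready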

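Pod scheduling flow

Pod scheduling has several steps. When a request to create a Pod is submitted to the cluster, kube-scheduler picks up the unscheduled Pod and decides which available node it should run on. It weighs many factors, such as node resource availability, node label matching, affinity rules, and taints and tolerations. Conceptually, it first filters out the nodes that cannot run the Pod and then scores the remaining nodes to pick the best one.

During scheduling, the scheduler selects nodes according to the Pod's affinity rules. Node affinity uses node labels to define which nodes the Pod may run on. It can be a hard requirement (requiredDuringSchedulingIgnoredDuringExecution: the Pod is placed only on matching nodes) or a soft preference (preferredDuringSchedulingIgnoredDuringExecution: matching nodes are preferred but not required). Pod affinity and anti-affinity work the same way relative to other Pods, for example requiring a Pod to run on the same node as, or away from, Pods with certain labels. These rules are defined in the Pod's affinity configuration.

The scheduler also considers the Pod's tolerations. A toleration lets the Pod be scheduled onto a node with a matching taint, such as a dedicated-node taint or a condition taint like node.kubernetes.io/memory-pressure; nodes whose taints the Pod does not tolerate are excluded. If no node satisfies the Pod's affinity rules and tolerations, the Pod cannot be scheduled and stays Pending (Unschedulable).

Once the scheduler has chosen a suitable node, it binds the Pod to that node: the binding (the Pod's spec.nodeName) is written through the API server to etcd, and the kubelet on that node then creates the Pod's containers.

After scheduling, Kubernetes controllers watch the Pod's status and make sure it runs as expected. If a Pod managed by a controller (e.g., a Deployment's ReplicaSet) fails or is deleted, the controller creates a replacement Pod, which is scheduled again and may land on another node; an existing Pod is never moved between nodes.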
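A toy model of the filter-then-score idea described above, for illustration only; it is not how kube-scheduler is implemented (the real scheduler runs filter and score plugins such as NodeResourcesFit and TaintToleration). Assumptions, all hypothetical: each node is one line `name free_cpu_millicores taint_key` (`-` for no taint), the Pod requests CPU in millicores and tolerates at most one taint key, and scoring simply prefers the node with the most free CPU.

```bash
#!/usr/bin/env bash
# Toy filter-then-score model; NOT the kube-scheduler implementation.
# Node lines on stdin: "<name> <free_cpu_millicores> <taint_key or ->".
# Usage: pick_node <cpu_request_millicores> [tolerated_taint_key]
pick_node() {
  request="$1"
  toleration="${2:--}"
  awk -v req="$request" -v tol="$toleration" '
    # Filter: enough free CPU, and the node is untainted or its taint is tolerated.
    ($2 + 0) >= (req + 0) && ($3 == "-" || $3 == tol) {
      # Score: keep the feasible node with the most free CPU.
      if (best == "" || ($2 + 0) > bestfree) { best = $1; bestfree = $2 + 0 }
    }
    END { if (best == "") print "Unschedulable"; else print best }
  '
}
```

With nodes `node1 500 -`, `node2 2000 dedicated` and `node3 1500 -`, a 1000m request lands on node3, because node2 is filtered out by its taint; adding the toleration `dedicated` makes node2 feasible and, with more free CPU, the winner.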

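Summary of the functions and use cases of pause containers and init containers

pause container

The pause container, also called the Infra container, is the base container of every Pod. Its image is only a few hundred KB, and it is configured in the kubelet (with containerd, through the sandbox_image setting). Its main job is to hold the network namespace through which the containers in a Pod communicate.

Once the Infra container is created, it initializes the network namespace, and the other containers then join it and share its network. So for two containers A and B in the same Pod:

1. A and B can communicate directly over localhost

2. A and B see the same network interfaces, IP addresses and listening ports

3. The Pod has a single IP address, the address of the Pod's network namespace (initialized and created by the Infra container)

4. In a Kubernetes cluster, each Pod gets its own IP address (provided enough addresses are available), which all containers in that Pod share internally

5. When the Pod is deleted, the Infra container is deleted immediately and its IP is reclaimed

Namespaces shared through the pause container:

1. NET namespace: the containers in a Pod share one network namespace, i.e., the same IP and port space

2. IPC namespace: the containers in a Pod can communicate through System V IPC or POSIX message queues

3. UTS namespace: the containers in a Pod share one hostname

The MNT, PID and User namespaces are not shared (PID sharing can be enabled per Pod with shareProcessNamespace: true)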

#Find the host-side network interface that corresponds to the container's eth0

root@k8s-deploy:~# kubectl exec -it -n myserver myserver-myapp-0 bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

#Method 1

[root@myserver-myapp-0 /]# cat /sys/class/net/eth0/iflink

13

Method 2:

[root@myserver-myapp-0 /]# ethtool -S eth0

NIC statistics:

peer_ifindex: 13

rx_queue_0_xdp_packets: 0

rx_queue_0_xdp_bytes: 0

rx_queue_0_drops: 0

rx_queue_0_xdp_redirect: 0

rx_queue_0_xdp_drops: 0

rx_queue_0_xdp_tx: 0

rx_queue_0_xdp_tx_errors: 0

tx_queue_0_xdp_xmit: 0

tx_queue_0_xdp_xmit_errors: 0

#Both methods show that the peer interface index is 13; next, look for the interface with index 13 on the node where the Pod runs

root@k8s-node2:~# ip link show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:e1:bf:7b brd ff:ff:ff:ff:ff:ff

altname enp2s1

altname ens33

3: kube-ipvs0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN mode DEFAULT group default

link/ether ba:51:e1:b7:af:ca brd ff:ff:ff:ff:ff:ff

4: calic4e163e8f39@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netns cni-3aebc722-0070-bce1-4000-549380d6561c

5: cali82c71ec4b91@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netns cni-eb44f3a1-2060-fcf5-f87b-3dd95cc8b6a7

6: tunl0@NONE: <NOARP,UP,LOWER_UP> mtu 1480 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

9: calid6f9f42f877@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netns cni-5643a08a-a223-9a00-a771-a7550a5b75f0

10: cali2b2e7c9e43e@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netns cni-04afea87-6a0e-cd6e-809d-bf95adb0a82e

11: calib06dcaadfa7@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netns cni-2bc40fc2-4dd0-6d72-0eb8-30cccec50fd5

12: calif5e3b104649@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netns cni-65d043a4-5474-324e-0826-e99a5fcffe2e

13: calibc298e8cdb2@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netns cni-6dc3d544-aee5-b3bc-5b01-3e10adbc4c33

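#Interface 13 belongs to link-netns cni-6dc3d544-aee5-b3bc-5b01-3e10adbc4c33; find that namespace under /run/netns/ and look inside it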

root@k8s-node2:~# ll /run/netns/

total 0

drwxr-xr-x 2 root root 180 Aug 27 11:46 ./

drwxr-xr-x 28 root root 900 Aug 27 15:24 ../

-r--r--r-- 1 root root 0 Aug 27 11:46 cni-04afea87-6a0e-cd6e-809d-bf95adb0a82e

-r--r--r-- 1 root root 0 Aug 27 11:46 cni-2bc40fc2-4dd0-6d72-0eb8-30cccec50fd5

-r--r--r-- 1 root root 0 Aug 27 11:46 cni-3aebc722-0070-bce1-4000-549380d6561c

-r--r--r-- 1 root root 0 Aug 27 11:46 cni-5643a08a-a223-9a00-a771-a7550a5b75f0

-r--r--r-- 1 root root 0 Aug 27 11:46 cni-65d043a4-5474-324e-0826-e99a5fcffe2e

-r--r--r-- 1 root root 0 Aug 27 11:46 cni-6dc3d544-aee5-b3bc-5b01-3e10adbc4c33

-r--r--r-- 1 root root 0 Aug 27 11:46 cni-eb44f3a1-2060-fcf5-f87b-3dd95cc8b6a7

root@k8s-node2:~# sudo nsenter --net=/run/netns/cni-6dc3d544-aee5-b3bc-5b01-3e10adbc4c33 ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.20.104.33 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::f004:78ff:fe6f:97eb prefixlen 64 scopeid 0x20<link>

ether f2:04:78:6f:97:eb txqueuelen 0 (Ethernet)

RX packets 3452 bytes 23932145 (23.9 MB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 3312 bytes 186553 (186.5 KB)

TX errors 0 dropped 1 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
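The manual lookup above (read the peer ifindex inside the Pod, then find that index in `ip link show` on the node) can be scripted. A sketch with hypothetical helper names, assuming the `ip link show` text layout shown above: `iface_by_index` prints the host-side interface name, and `netns_by_index` prints the `link-netns` value from the following line, which is the file to pass to `nsenter --net=/run/netns/...`.

```bash
#!/usr/bin/env bash
# Hypothetical helpers; both read `ip link show` output on stdin.

# Print the interface name for ifindex $1, without the "@ifN" peer suffix.
iface_by_index() {
  awk -F': ' -v idx="$1" '$1 == idx { sub(/@.*/, "", $2); print $2; exit }'
}

# Print the link-netns name from the line that follows ifindex $1.
netns_by_index() {
  awk -F': ' -v idx="$1" '
    $1 == idx { found = 1; next }
    found {
      # "link-netns " is 11 characters; print only the namespace name after it.
      if (match($0, /link-netns [^ ]+/)) print substr($0, RSTART + 11, RLENGTH - 11)
      exit
    }
  '
}
```

On the node: `ip link show | iface_by_index 13` and `nsenter --net=/run/netns/$(ip link show | netns_by_index 13) ifconfig`.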

pause容器示例

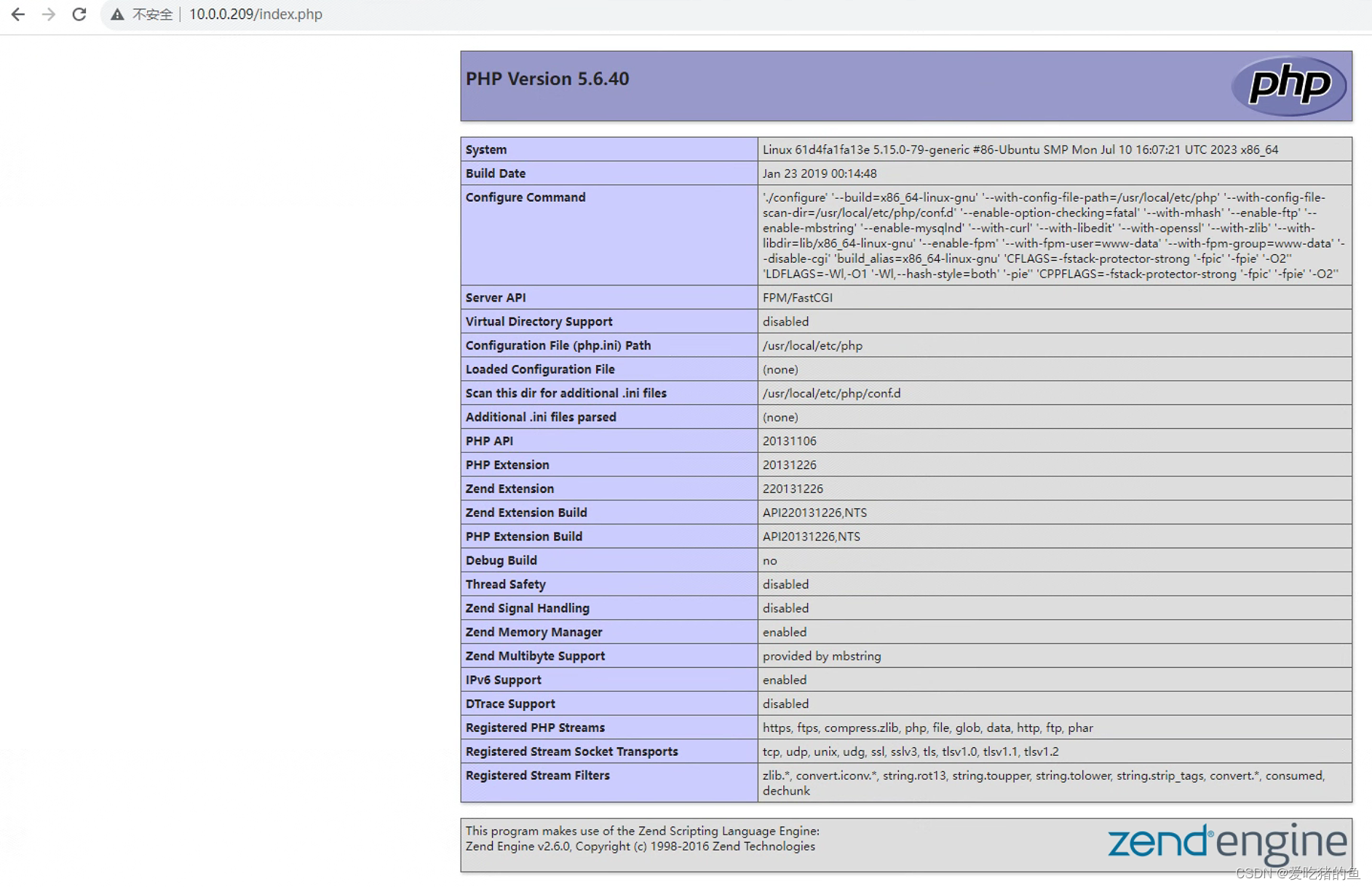

#在node1主机10.0.0.209测试,编写nginx配置文件实现动静分离

root@k8s-node1:~/pause-test-case# cat nginx.conf

error_log stderr;
events { worker_connections 1024; }
http {
    access_log /dev/stdout;
    server {
        listen 80 default_server;
        server_name www.mysite.com;
        location / {
            index index.html index.php;
            root /usr/share/nginx/html;
        }
        location ~ \.php$ {
            root /usr/share/nginx/html;
            fastcgi_pass 127.0.0.1:9000;
            fastcgi_index index.php;
            fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
            include fastcgi_params;
        }
    }
}

#Run the pause container

root@k8s-node1:~/pause-test-case# nerdctl pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.8

root@k8s-node1:~/pause-test-case# nerdctl run -d -p 80:80 --name container-test registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.8

#Test pages

root@k8s-node1:~/pause-test-case# ll html/

total 20

drwxr-xr-x 2 root root 4096 Apr 24 2022 '*'/

drwxr-xr-x 3 root root 4096 Apr 24 2022 ./

drwxr-xr-x 3 root root 4096 Apr 24 2022 ../

-rw-r--r-- 1 root root 34 Apr 24 2022 index.html

-rw-r--r-- 1 root root 25 Apr 24 2022 index.php

#Run the nginx container in the pause container's network namespace

root@k8s-node1:~/pause-test-case# nerdctl run -d --name nginx-container --net=container:container-test -v $(pwd)/nginx.conf:/etc/nginx/nginx.conf -v $(pwd)/html:/usr/share/nginx/html registry.cn-hangzhou.aliyuncs.com/zhangshijie/nginx:1.22.0-alpine

#Run the PHP container, also in the pause container's network namespace

root@k8s-node1:~/pause-test-case# nerdctl run -d --name php-container --net=container:container-test -v $(pwd)/html:/usr/share/nginx/html registry.cn-hangzhou.aliyuncs.com/zhangshijie/php:5.6.40-fpm

Test

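init containers

What init containers are for:

1. Preparing the runtime environment for the application containers in advance, e.g., generating the configuration files they need and placing them at the expected location, or checking data permissions and integrity, software versions and other prerequisites.

2. Preparing business data before the application containers start, e.g., downloading it from OSS or copying it from elsewhere.

3. Checking whether the services they depend on are reachable.

Characteristics of init containers:

1. A Pod can have several application containers and several init containers at the same time, and each init container and application container runs in its own isolated environment (each has its own filesystem, but they share the Pod's network and can share volumes).

2. Init containers start before the application containers.

3. The application containers start only after the init containers have completed successfully.

4. If a Pod has several init containers, they run one at a time from top to bottom and must all succeed before the application containers start.

5. Init containers do not support probes, because an init container exits once initialization is done and never runs again.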
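The ordering rules above can be modeled in a few lines of shell. This is a toy illustration, not kubelet code, and `run_pod` is a hypothetical name: every argument except the last is an init step run in order, and the last is the application, started only if every init step succeeded. Unlike this model, which simply stops, the kubelet retries a failed init container according to the Pod's restartPolicy (with Never, the Pod is marked failed).

```bash
#!/usr/bin/env bash
# Toy model of init-container ordering (hypothetical, not kubelet code).
# Usage: run_pod <init_cmd>... <app_cmd>
run_pod() {
  n=$(($# - 1))   # number of init steps: all arguments but the last
  i=0
  for step in "$@"; do
    i=$((i + 1))
    if [ "$i" -le "$n" ]; then
      echo "init $i: $step"
      # Init steps run one at a time; the first failure blocks the app.
      if ! sh -c "$step"; then
        echo "init $i failed; app not started"
        return 1
      fi
    else
      echo "app: $step"
      sh -c "$step"
    fi
  done
}
```

For example, `run_pod 'true' 'false' 'echo serving'` stops at the second step and never prints `serving`.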

init容器示例:

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

labels:

app: myserver-myapp

name: myserver-myapp-deployment-name

namespace: myserver

spec:

replicas: 1

selector:

matchLabels:

app: myserver-myapp-frontend

template:

metadata:

labels:

app: myserver-myapp-frontend

spec:

containers:

- name: myserver-myapp-container

image: nginx:1.20.0

#imagePullPolicy: Always

volumeMounts:

- mountPath: "/usr/share/nginx/html/myserver"

name: myserver-data

- name: tz-config

mountPath: /etc/localtime

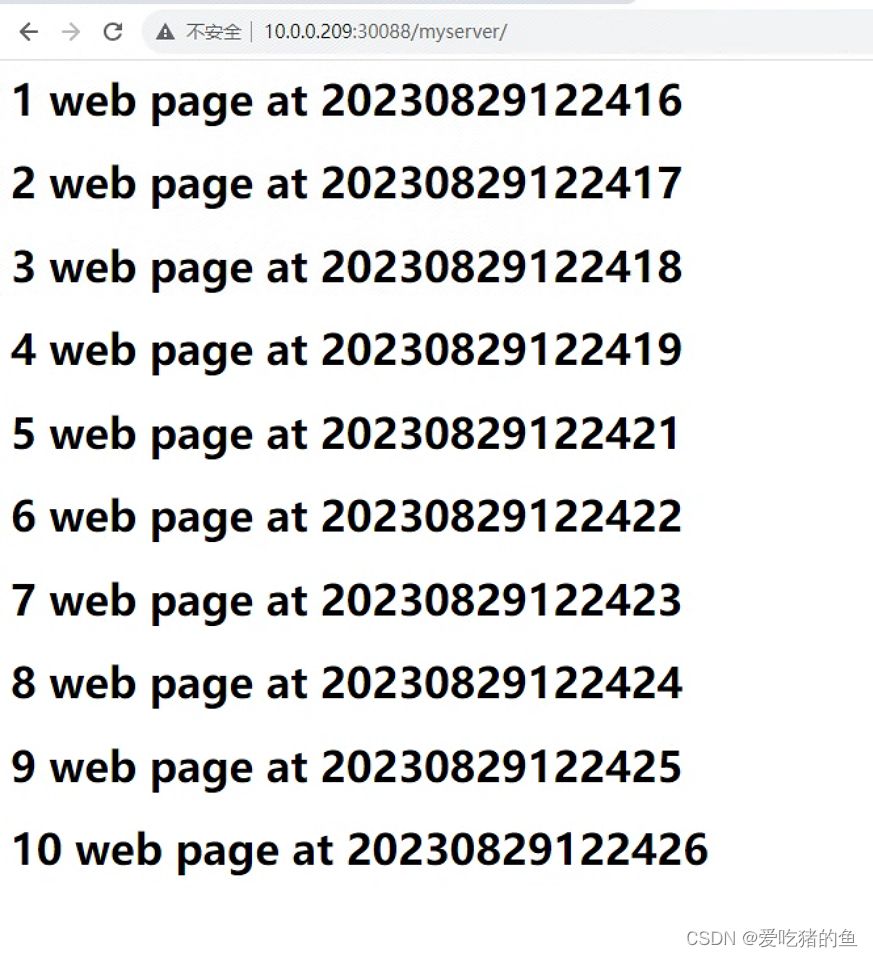

initContainers:

- name: init-web-data

image: centos:7.9.2009

command: ['/bin/bash','-c',"for i in `seq 1 10`;do echo '<h1>'$i web page at $(date +%Y%m%d%H%M%S) '<h1>' >> /data/nginx/html/myserver/index.html;sleep 1;done"]

volumeMounts:

- mountPath: "/data/nginx/html/myserver"

name: myserver-data

- name: tz-config

mountPath: /etc/localtime

- name: change-data-owner

image: busybox:1.28

command: ['/bin/sh','-c',"/bin/chmod 644 /data/nginx/html/myserver/* -R"]

volumeMounts:

- mountPath: "/data/nginx/html/myserver"

name: myserver-data

- name: tz-config

mountPath: /etc/localtime

volumes:

- name: myserver-data

hostPath:

path: /tmp/data/html

- name: tz-config

hostPath:

path: /etc/localtime

---

kind: Service

apiVersion: v1

metadata:

labels:

app: myserver-myapp-service

name: myserver-myapp-service-name

namespace: myserver

spec:

type: NodePort

ports:

- name: http

port: 80

targetPort: 80

nodePort: 30088

selector:

app: myserver-myapp-frontend

Test

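Summary of probe types, their functions and differences, configuration parameters and use cases, with startupProbe, livenessProbe and readinessProbe examples

Probes

Over the Pod lifecycle, a postStart hook can be configured when a container starts, livenessProbe and readinessProbe checks can be configured while it runs, and a preStop action can be configured before it stops.

A probe is a diagnostic that the kubelet performs periodically on a container to make sure it stays healthy. To perform a diagnostic, the kubelet calls a handler implemented by the container (also called a hook). The following handler types are available:

ExecAction #Runs a specified command inside the container; the diagnostic succeeds if the command exits with status code 0.

TCPSocketAction #Performs a TCP check against the container's IP address on a specified port; the diagnostic succeeds if the port is open.

HTTPGetAction: #Performs an HTTP GET request against the container's IP address on a specified port and path; the diagnostic succeeds if the response status code is greater than or equal to 200 and less than 400.

grpc: #A health check for applications that implement the gRPC Health Checking Protocol (the diagnostic succeeds if the response status is "SERVING"). Alpha in 1.23, Beta in 1.24, GA in 1.27; the service or its code must implement the protocol.

https://github.com/grpc-ecosystem/grpc-health-probe/ #the earlier way of doing gRPC health checks

https://kubernetes.io/zh-cn/docs/reference/command-line-tools-reference/feature-gates/ #on 1.23 the feature gate must be enabled separately

Each probe produces one of three results:

Success: the container passed the diagnostic.

Failure: the container failed the diagnostic.

Unknown: the diagnostic itself failed, so no action is taken.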
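The pass/fail rules of the exec and HTTP handlers fit in a tiny classifier. A toy sketch (`probe_verdict` is a hypothetical name, not kubelet code) that returns 0 for success, 1 for failure and 2 for an unrecognized handler kind:

```bash
#!/usr/bin/env bash
# Toy classifier mirroring the handler rules above (not kubelet code).
# Usage: probe_verdict exec <exit_code> | probe_verdict http <status_code>
probe_verdict() {
  kind="$1"
  value="$2"
  case "$kind" in
    exec) [ "$value" -eq 0 ] ;;                            # exit code 0 = success
    http) [ "$value" -ge 200 ] && [ "$value" -lt 400 ] ;;  # 200 <= status < 400
    *) return 2 ;;                                         # unknown handler kind
  esac
}
```

To approximate an HTTP check by hand: `code=$(curl -s -o /dev/null -w '%{http_code}' http://<pod-ip>/index.html); probe_verdict http "$code" && echo Success || echo Failure`. Note that a redirect such as 302 counts as success.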

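Pod restart policy

When a liveness or startup probe fails, the kubelet kills the container, and what happens next is decided by the Pod's restartPolicy

restartPolicy (container restart policy):

Always: restart the container whenever it exits; used by ReplicationController/ReplicaSet/Deployment, and the default

OnFailure: restart the container only when it fails (stops with a non-zero exit code)

Never: never restart the container, whatever its state; Job and CronJob Pods use Never or OnFailure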
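How restartPolicy turns a container exit into a restart decision, as a toy shell function (`should_restart` is hypothetical; the kubelet also applies an exponential back-off between restarts, which this sketch ignores):

```bash
#!/usr/bin/env bash
# Toy model of restartPolicy (not kubelet code).
# Usage: should_restart <Always|OnFailure|Never> <exit_code>; returns 0 = restart
should_restart() {
  policy="$1"
  exit_code="$2"
  case "$policy" in
    Always) return 0 ;;                  # restart on any exit
    OnFailure) [ "$exit_code" -ne 0 ] ;; # restart only on a non-zero exit
    Never) return 1 ;;                   # never restart
    *) return 2 ;;                       # unknown policy
  esac
}
```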

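imagePullPolicy (image pull policy):

IfNotPresent: pull from the configured registry only if the node does not have the image; otherwise use the node's local image (the usual choice)

Always: contact the registry every time a container is started, pulling the image if it has changed

Never: never pull from the registry; only use a local image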

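Probe types

startupProbe: #startup probe, introduced in Kubernetes v1.16

Determines whether the application in the container has finished starting. If a startup probe is configured, all other probes are disabled until the startupProbe succeeds. If the startupProbe fails, the kubelet kills the container, and the container is then handled according to its restart policy. If a container does not provide a startup probe, the default state is Success.

livenessProbe: #liveness probe

Checks whether the container is running. If the liveness probe fails, the kubelet kills the container, and the container is subject to its restart policy. If a container does not provide a liveness probe, the default state is Success. livenessProbe controls whether the container is restarted.

readinessProbe: #readiness probe

If the readiness probe fails, the endpoints controller removes the Pod's IP address from the endpoints of every Service that matches the Pod. Before the initial delay, the readiness state defaults to Failure. If a container does not provide a readiness probe, the default state is Success. readinessProbe controls whether the Pod is added to a Service.

Summary: a failed liveness probe restarts the container (it is recreated from the image; the Pod and its name stay the same). A failed readiness probe does not restart anything; it removes the Pod from the Service so that requests are no longer forwarded to it. Usually both liveness and readiness probes are configured, and they run concurrently and independently.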

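Probe configuration parameters

Probes have many configuration fields that give precise control over liveness and readiness checks:

https://kubernetes.io/zh/docs/tasks/configure-pod-container/configure-liveness-readiness-startup-probes/

initialDelaySeconds: 120 #initial delay: how many seconds the kubelet waits before the first probe; default 0, minimum 0

periodSeconds: 6 #probe interval: how often, in seconds, the kubelet runs the probe; default 10, minimum 1

timeoutSeconds: 5 #timeout of a single probe: seconds after which the probe times out; default 1, minimum 1

successThreshold: 1 #minimum consecutive successes for the probe to count as successful after having failed; default 1, must be 1 for liveness and startup probes, minimum 1

failureThreshold: 3 #consecutive failures after which Kubernetes gives up on the probe. For a liveness probe, giving up means restarting the container; for a readiness probe, the Pod is marked not ready. Default 3, minimum 1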
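A rough estimate of how long a container that never passes its probe survives before the kubelet acts, using the rule of thumb from the Kubernetes probe documentation (failureThreshold × periodSeconds) plus initialDelaySeconds. timeoutSeconds and timing jitter are ignored, and `probe_budget` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: rough seconds from container start until the kubelet
# acts on a probe that never succeeds (rule of thumb; ignores timeoutSeconds).
# Usage: probe_budget <initialDelaySeconds> <periodSeconds> <failureThreshold>
probe_budget() {
  echo $(( $1 + $2 * $3 ))
}
```

For the values listed above (120, 6, 3) this gives 138 seconds; for the later startupProbe example (initialDelaySeconds 5, periodSeconds 3, failureThreshold 3) it gives 14 seconds, which is only enough for a fast-starting process like nginx.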

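HTTP probe configuration parameters

HTTP probes can set additional fields under httpGet:

host: #hostname to connect to; defaults to the Pod IP. You can set "Host" in httpHeaders instead.

scheme: HTTP #scheme used to connect to the host (HTTP or HTTPS, upper case); defaults to HTTP

path: /monitor/index.html #path to access on the HTTP server

httpHeaders: #custom headers to set in the request; HTTP allows repeated headers

port: 80 #name or number of the port to access on the container; a number must be in the range 1 to 65535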
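The httpGet fields combine into the URL that gets requested, which is handy for reproducing a failing probe by hand. A sketch under stated assumptions: `probe_url` is a hypothetical helper, it does not apply the Pod-IP default for host (pass the IP yourself), and a manual curl only approximates the kubelet's request (headers such as User-Agent differ).

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the URL implied by httpGet fields.
# Usage: probe_url <scheme> <host> <port> [path]
probe_url() {
  # The scheme field is HTTP/HTTPS; URLs use it in lower case.
  scheme=$(printf '%s' "${1:-HTTP}" | tr '[:upper:]' '[:lower:]')
  host="$2"
  port="$3"
  path="${4:-/}"
  printf '%s://%s:%s%s\n' "$scheme" "$host" "$port" "$path"
}
```

For example, `curl -s -o /dev/null -w '%{http_code}\n' "$(probe_url HTTP 10.20.104.33 80 /index.html)"` prints the status code that would decide the probe result.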

Examples:

#HTTP probe
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: myserver
  name: http-probe-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: http-probe
  template:
    metadata:
      labels:
        app: http-probe
    spec:
      containers:
      - name: http-probe-nginx-container
        image: nginx:1.20.2
        ports:
        - containerPort: 80
        #readinessProbe:
        livenessProbe:
          httpGet:
            path: /index.html
            #path: /index.php
            port: 80
          initialDelaySeconds: 5 #delay before the first probe
          periodSeconds: 3 #interval between probes
          timeoutSeconds: 5 #timeout of a single probe
          successThreshold: 1 #consecutive successes needed to go from failed to successful
          failureThreshold: 3 #consecutive failures needed to go from successful to failed
---
apiVersion: v1
kind: Service
metadata:
  namespace: myserver
  name: http-probe-svc
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 30080
    protocol: TCP
  selector:
    app: http-probe

#TCP probe
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tcp-probe-deployment
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels: #rs or deployment
      app: tcp-probe-label
    #matchExpressions:
    #  - {key: app, operator: In, values: [myserver-myapp-frontend,ng-rs-81]}
  template:
    metadata:
      labels:
        app: tcp-probe-label
    spec:
      containers:
      - name: tcp-probe-label
        image: nginx:1.20.2
        ports:
        - containerPort: 80
        #livenessProbe:
        readinessProbe:
          tcpSocket:
            port: 80
            #port: 8080
          initialDelaySeconds: 5
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
---
apiVersion: v1
kind: Service
metadata:
  name: tcp-probe-service
  namespace: myserver
spec:
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 30080 #same nodePort as http-probe-svc; delete that Service first or pick another port
    protocol: TCP
  type: NodePort
  selector:
    app: tcp-probe-label

#exec probe
apiVersion: apps/v1
kind: Deployment
metadata:
  name: exec-probe-deployment
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels: #rs or deployment
      app: exec-probe-label
    #matchExpressions:
    #  - {key: app, operator: In, values: [myserver-myapp-redis,ng-rs-81]}
  template:
    metadata:
      labels:
        app: exec-probe-label
    spec:
      containers:
      - name: exec-probe-container
        image: redis
        ports:
        - containerPort: 6379
        livenessProbe:
        #readinessProbe:
          exec:
            command:
            #- /apps/redis/bin/redis-cli
            - /usr/local/bin/redis-cli
            - quit
          initialDelaySeconds: 5
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
---
apiVersion: v1
kind: Service
metadata:
  name: exec-probe-service
  namespace: myserver
spec:
  ports:
  - name: http
    port: 6379
    targetPort: 6379
    nodePort: 40016 #above the default NodePort range (30000-32767); needs a wider --service-node-port-range
    protocol: TCP
  type: NodePort
  selector:
    app: exec-probe-label

#gRPC probe
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myserver-etcd-deployment
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels: #rs or deployment
      app: myserver-etcd-label
    #matchExpressions:
    #  - {key: app, operator: In, values: [myserver-etcd,ng-rs-81]}
  template:
    metadata:
      labels:
        app: myserver-etcd-label
    spec:
      containers:
      - name: myserver-etcd-label
        image: registry.cn-hangzhou.aliyuncs.com/zhangshijie/etcd:3.5.1-0
        command: [ "/usr/local/bin/etcd", "--data-dir", "/var/lib/etcd", "--listen-client-urls", "http://0.0.0.0:2379", "--advertise-client-urls", "http://127.0.0.1:2379", "--log-level", "debug"]
        ports:
        - containerPort: 2379
        livenessProbe:
          grpc:
            port: 2379
          initialDelaySeconds: 10
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
        readinessProbe:
          grpc:
            port: 2379
          initialDelaySeconds: 10
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
---
apiVersion: v1
kind: Service
metadata:
  name: myserver-etcd-service
  namespace: myserver
spec:
  ports:
  - name: http
    port: 2379
    targetPort: 2379
    nodePort: 42379 #above the default NodePort range (30000-32767); needs a wider --service-node-port-range
    protocol: TCP
  type: NodePort
  selector:
    app: myserver-etcd-label

#startupProbe类型

apiVersion: apps/v1

kind: Deployment

metadata:

name: myserver-myapp-frontend-deployment

namespace: myserver

spec:

replicas: 1

selector:

matchLabels: #rs or deployment

app: myserver-myapp-frontend-label

#matchExpressions:

# - {key: app, operator: In, values: [myserver-myapp-frontend,ng-rs-81]}

template:

metadata:

labels:

app: myserver-myapp-frontend-label

spec:

terminationGracePeriodSeconds: 60

containers:

- name: myserver-myapp-frontend-label

image: nginx:1.20.2

ports:

- containerPort: 80

startupProbe:

httpGet:

path: /index.html

port: 80

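initialDelaySeconds: 5 #wait 5s before the first probe

failureThreshold: 3 #number of consecutive failures before the probe is considered failed (the container is then killed and restarted)

periodSeconds: 3 #interval between probes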

readinessProbe:

httpGet:

#path: /monitor/monitor.html

path: /index.html

port: 80

initialDelaySeconds: 5

periodSeconds: 3

timeoutSeconds: 5

successThreshold: 1

failureThreshold: 3

livenessProbe:

httpGet:

#path: /monitor/monitor.html

path: /index.html

port: 80

initialDelaySeconds: 5

periodSeconds: 3

timeoutSeconds: 5

successThreshold: 1

failureThreshold: 3

---

apiVersion: v1

kind: Service

metadata:

name: myserver-myapp-frontend-service

namespace: myserver

spec:

ports:

- name: http

port: 81

targetPort: 80

nodePort: 40012

protocol: TCP

type: NodePort

selector:

app: myserver-myapp-frontend-label
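A quick way to size a startupProbe: according to the Kubernetes documentation, the container gets roughly failureThreshold × periodSeconds (after initialDelaySeconds) to start before the kubelet kills it. Liveness and readiness probes are disabled until the startupProbe succeeds. The helper below has a hypothetical name and is an approximation that ignores timeoutSeconds. It computes that window for the values used above.

```shell
#!/usr/bin/env bash
# Approximate startup window granted by a startupProbe (hypothetical helper):
#   initialDelaySeconds + failureThreshold * periodSeconds
# timeoutSeconds and scheduling jitter are ignored.
startup_window_seconds() {
  local initial_delay=$1 failure_threshold=$2 period=$3
  echo $((initial_delay + failure_threshold * period))
}

startup_window_seconds 5 3 3    # values from the startupProbe above: prints 14
startup_window_seconds 0 30 10  # failureThreshold 30, periodSeconds 10: prints 300
```

With the manifest's values, nginx has only about 14 seconds to serve /index.html. A slower-starting application would need a larger failureThreshold instead of a longer initialDelaySeconds, so that a fast start is still detected early.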

postStart and preStop handlers: overview

https://kubernetes.io/zh/docs/tasks/configure-pod-container/attach-handler-lifecycle-event/

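postStart and preStop handler functions:

postStart: runs the specified action immediately after the container is created:

1. It runs as soon as the container is created and does not wait for the service in the container to start; there is no guarantee that it runs before the container's ENTRYPOINT.

2. If postStart fails, the container is killed and handled according to its restart policy; the container is not marked Running until postStart completes.

preStop:

1. Runs before the container is stopped.

2. If preStop has not finished when the grace period expires, the kubelet grants a one-time 2-second extension and then kills the container forcibly. terminationGracePeriodSeconds: 60 #optional termination grace period (Pod deletion grace period); if set, Kubernetes waits until it expires, otherwise it waits at most 30s (the default).

Example: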

apiVersion: apps/v1

kind: Deployment

metadata:

name: myserver-myapp1-lifecycle

labels:

app: myserver-myapp1-lifecycle

namespace: myserver

spec:

replicas: 1

selector:

matchLabels:

app: myserver-myapp1-lifecycle-label

template:

metadata:

labels:

app: myserver-myapp1-lifecycle-label

spec:

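terminationGracePeriodSeconds: 60 #optional termination grace period (Pod deletion grace period); if set, Kubernetes waits until it expires, otherwise it waits at most 30s (the default).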

containers:

- name: myserver-myapp1-lifecycle-label

image: tomcat:7.0.94-alpine

lifecycle:

postStart:

exec:

#command: register this instance with the service registry

command: ["/bin/sh", "-c", "echo 'Hello from the postStart handler' >> /usr/local/tomcat/webapps/ROOT/index.html"]

#httpGet:

# #path: /monitor/monitor.html

# host: www.magedu.com

# port: 80

# scheme: HTTP

# path: index.html

preStop:

exec:

#command: deregister this instance from the service registry

command:

- /bin/bash

- -c

- 'sleep 10000000'

#command: ["/usr/local/tomcat/bin/catalina.sh","stop"]

#command: ['/bin/sh','-c','/path/preStop.sh']

ports:

- name: http

containerPort: 8080

---

apiVersion: v1

kind: Service

metadata:

name: myserver-myapp1-lifecycle-service

namespace: myserver

spec:

ports:

- name: http

port: 80

targetPort: 8080

nodePort: 30012

protocol: TCP

type: NodePort

selector:

app: myserver-myapp1-lifecycle-label

Pod startup and termination flow

https://cloud.google.com/blog/products/containers-kubernetes/kubernetes-best-practices-terminating-with-grace

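1. Creating a Pod

A create request is submitted to the API server; the API server performs authentication, authorization and admission control, then writes the object to etcd.

kube-scheduler selects a node for the Pod (scheduling and binding).

The kubelet on that node creates and starts the Pod's containers, then runs postStart.

The Pod is Running; if a startupProbe is configured it must succeed first, then livenessProbe runs periodically, and once readinessProbe passes the Pod is added to the Service's Endpoints.

The Pod starts receiving client requests.

2. Deleting a Pod

A delete request is submitted to the API server; the API server performs authentication, authorization and admission control, then records the deletion in etcd.

The Pod is marked "Terminating", removed from the Service's Endpoints list, and no longer receives new client requests.

The preStop hook runs.

After preStop completes, the kubelet sends SIGTERM (the graceful termination signal) to the main process of each container in the Pod; this tells the container it will be shut down soon.

terminationGracePeriodSeconds: 60 #optional termination grace period (Pod deletion grace period). Kubernetes waits for this period, called the termination grace period, which defaults to 30 seconds. Note that the countdown runs in parallel with the preStop hook and the SIGTERM handling: Kubernetes does not wait for preStop to finish beyond the grace period, and if the main process is still running when it expires (after 30 seconds by default), the Pod is forcibly killed.

If containers are still running when the grace period expires, SIGKILL is sent to them and the Pod is deleted.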
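SIGTERM is the application's cue to shut down cleanly within the grace period, so the main process should handle it. A shell script running as PID 1 in a container gets no default signal handling, because the kernel does not apply the default action to PID 1 for signals it has not installed a handler for. Such a script therefore needs an explicit trap. Below is a minimal sketch. It assumes the "work" is a placeholder sleep loop, and the echo statements stand in for real cleanup.

```shell
#!/usr/bin/env bash
# Minimal graceful-shutdown sketch for a container entrypoint.
# The sleep loop stands in for real work (assumption); a real service would stop
# accepting requests and finish in-flight work inside the TERM handler.

STOPPING=0

on_term() {
  echo "SIGTERM received, cleaning up"
  STOPPING=1
}

graceful_main() {
  trap on_term TERM
  echo "service started"
  # bash runs the trap only after the current foreground command (sleep) returns,
  # so keep each sleep short relative to terminationGracePeriodSeconds
  while [ "$STOPPING" -eq 0 ]; do
    sleep 1
  done
  echo "clean shutdown"
}

# In a real entrypoint you would simply call: graceful_main
```

For a local check, run `graceful_main` in the background and send `kill -TERM` to its PID. It should print both messages and exit within about a second.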

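Kubernetes hands-on case: PV/PVC overview, and running a ZooKeeper cluster on Kubernetes with PVC-based data persistence

Implementation with StatefulSet + storageClassName

#Install nfs-server on the storage server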

apt install nfs-server

#Create the directory that backs the StorageClass

mkdir -p /data/storageclassname

#Edit /etc/exports to export /data/storageclassname with read-write access

vim /etc/exports

/data/storageclassname *(rw,no_root_squash)

#Restart the NFS server and enable it at boot

systemctl restart nfs-server.service

systemctl enable nfs-server.service

#Verify

root@k8s-deploy:/opt/case/zookeeper# showmount -e

Export list for k8s-deploy.canghailyt.com:

/data/storageclassname *

#Create the ServiceAccount and RBAC objects the NFS provisioner needs

vim 1-rbac.yaml

apiVersion: v1

kind: Namespace

metadata:

name: nfs

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: nfs

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: nfs-client-provisioner-runner

rules:

- apiGroups: [""]

resources: ["nodes"]

verbs: ["get", "list", "watch"]

- apiGroups: [""]

resources: ["persistentvolumes"]

verbs: ["get", "list", "watch", "create", "delete"]

- apiGroups: [""]

resources: ["persistentvolumeclaims"]

verbs: ["get", "list", "watch", "update"]

- apiGroups: ["storage.k8s.io"]

resources: ["storageclasses"]

verbs: ["get", "list", "watch"]

- apiGroups: [""]

resources: ["events"]

verbs: ["create", "update", "patch"]

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: run-nfs-client-provisioner

subjects:

- kind: ServiceAccount

name: nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: nfs

roleRef:

kind: ClusterRole

name: nfs-client-provisioner-runner

apiGroup: rbac.authorization.k8s.io

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: leader-locking-nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: nfs

rules:

- apiGroups: [""]

resources: ["endpoints"]

verbs: ["get", "list", "watch", "create", "update", "patch"]

---

kind: RoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: leader-locking-nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: nfs

subjects:

- kind: ServiceAccount

name: nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: nfs

roleRef:

kind: Role

name: leader-locking-nfs-client-provisioner

apiGroup: rbac.authorization.k8s.io

#Create the accounts

kubectl apply -f 1-rbac.yaml

#Create the StorageClass

vim 2-storageclass.yaml

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

name: wang-nfs-storage

provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # or choose another name, must match deployment's env PROVISIONER_NAME'

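reclaimPolicy: Retain #PV reclaim policy; the default is Delete, which removes the PV (and, through the provisioner, its data on the NFS server) when the PVC is deleted

mountOptions:

#- vers=4.1 #some options misbehave with containerd

- noresvport #tells the NFS client to use a new TCP source port when it re-establishes a network connection

- noatime #do not update the access timestamp in the file inode on reads; improves performance under high concurrency

parameters:

#mountOptions: "vers=4.1,noresvport,noatime"

archiveOnDelete: "true" #when the PVC is deleted, archive its data directory instead of deleting it; "false" deletes the data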

#Create

kubectl apply -f 2-storageclass.yaml

#Create the NFS provisioner

vim 3-nfs-provisioner.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: nfs-client-provisioner

labels:

app: nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: nfs

spec:

replicas: 1

strategy: #deployment strategy

type: Recreate

selector:

matchLabels:

app: nfs-client-provisioner

template:

metadata:

labels:

app: nfs-client-provisioner

spec:

serviceAccountName: nfs-client-provisioner

containers:

- name: nfs-client-provisioner

#image: k8s.gcr.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2

image: registry.cn-qingdao.aliyuncs.com/zhangshijie/nfs-subdir-external-provisioner:v4.0.2

volumeMounts:

- name: nfs-client-root

mountPath: /persistentvolumes

env:

- name: PROVISIONER_NAME

value: k8s-sigs.io/nfs-subdir-external-provisioner

- name: NFS_SERVER

value: 10.0.0.200

- name: NFS_PATH

value: /data/storageclassname

volumes:

- name: nfs-client-root

nfs:

server: 10.0.0.200

path: /data/storageclassname

#Create

kubectl apply -f 3-nfs-provisioner.yaml

#Persist ZooKeeper cluster data with StatefulSet + storageClassName

vim zookeeper.yaml

apiVersion: v1

kind: Namespace

metadata:

name: zookeeper-ns

---

apiVersion: v1

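kind: Service #headless Service (clusterIP: None): its DNS name resolves directly to the backend Pod addresses (returned in roughly round-robin order), so in-cluster clients connect straight to the Pods instead of going through Service forwarding; this suits workloads that need direct connections to their backends.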

metadata:

namespace: zookeeper-ns

name: zookeeper-clusterip-svc

labels:

app: zookeeper

spec:

clusterIP: None

ports:

- name: server #port used for data synchronization between ZooKeeper servers

port: 2888

- name: leader-election #port used for ZooKeeper leader election

port: 3888

selector:

app: zookeeper

---

apiVersion: v1

kind: Service #regular Service: clients do not connect to the Pods directly; connections are forwarded through the Service

metadata:

namespace: zookeeper-ns

name: zookeeper-nodeip-svc

labels:

app: zookeeper

spec:

type: NodePort

ports:

- name: client #port for ZooKeeper client connections

port: 2181

selector:

app: zookeeper

---

apiVersion: policy/v1

kind: PodDisruptionBudget #limits voluntary disruptions (e.g. node drains during later maintenance or upgrades): at most 1 Pod labeled app=zookeeper may be unavailable at a time (maxUnavailable: 1)

metadata:

namespace: zookeeper-ns

name: zookeeper-pdb

spec:

selector:

matchLabels:

app: zookeeper

maxUnavailable: 1

---

apiVersion: apps/v1

kind: StatefulSet

metadata:

namespace: zookeeper-ns

name: zookeeper

spec:

selector:

matchLabels:

app: zookeeper

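serviceName: zookeeper-clusterip-svc #the headless Service that governs this StatefulSet

updateStrategy: #update strategy: rolling update; by default the StatefulSet updates one Pod at a time

type: RollingUpdate

podManagementPolicy: OrderedReady #Pod management policy. OrderedReady is the StatefulSet default and makes the controller follow the ordering guarantees: Pods are created one at a time in order (0, 1, 2), each waiting until the previous one is Running and Ready, and are terminated in reverse order. Parallel tells the controller to launch or terminate all Pods in parallel, without waiting for other Pods to become Running and Ready or to terminate completely.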

template:

metadata:

labels:

app: zookeeper

spec:

affinity:

podAntiAffinity:

#anti-affinity keyed on the node hostname: places the 3 replicas on different nodes

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchExpressions:

- key: "app"

operator: In

values:

- zookeeper

topologyKey: "kubernetes.io/hostname"

containers:

- name: kubernetes-zookeeper

imagePullPolicy: Always

image: "registry.cn-hangzhou.aliyuncs.com/zhangshijie/zookeeper:v3.4.14-20230818"

resources:

limits:

memory: "512Mi"

cpu: "0.2"

requests:

#production typically uses 2 CPU cores and 4 GiB of memory

memory: "512Mi"

cpu: "0.2"

ports:

- containerPort: 2181

name: client

- containerPort: 2888

name: server

- containerPort: 3888

name: leader-election

command:

- sh

- -c

- "start.sh \

--servers=3 \

--data_dir=/var/lib/zookeeper/data \

--data_log_dir=/var/lib/zookeeper/data/log \

--conf_dir=/opt/zookeeper/conf \

--client_port=2181 \

--election_port=3888 \

--server_port=2888 \

--tick_time=2000 \

--init_limit=10 \

--sync_limit=5 \

--heap=512M \

--max_client_cnxns=60 \

--snap_retain_count=3 \

--purge_interval=12 \

--max_session_timeout=40000 \

--min_session_timeout=4000 \

--log_level=INFO"

readinessProbe:

exec:

command:

- sh

- -c

- "ready_live.sh 2181"

initialDelaySeconds: 10

timeoutSeconds: 5

livenessProbe:

exec:

command:

- sh

- -c

- "ready_live.sh 2181"

initialDelaySeconds: 10

timeoutSeconds: 5

volumeMounts:

- name: datadir

mountPath: /var/lib/zookeeper

securityContext:

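#security context: the user ID the process runs as (fsGroup sets the group that owns mounted volumes); if omitted, the image's default user is used, which is root unless the image sets USER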

runAsUser: 1000

fsGroup: 1000

volumeClaimTemplates:

- metadata:

name: datadir

spec:

accessModes: [ "ReadWriteOnce" ]

storageClassName: wang-nfs-storage

resources:

requests:

storage: 20Gi #a few tens of GB is usually enough for ZooKeeper data

#Create

kubectl apply -f zookeeper.yaml

#Verify (the StatefulSet runs in the zookeeper-ns namespace)

root@k8s-deploy:~/zookeeper-case-n79/1.StatefulSet# kubectl get pod -n zookeeper-ns

NAME READY STATUS RESTARTS AGE

zookeeper-0 1/1 Running 0 8m41s

zookeeper-1 1/1 Running 0 8m38s

zookeeper-2 1/1 Running 0 8m35s

#Exec into a Pod and check the ZooKeeper cluster status

root@k8s-deploy:~/zookeeper-case-n79/1.StatefulSet# kubectl exec -it -n zookeeper-ns zookeeper-0 -- bash

zookeeper@zookeeper-0:/$

zookeeper@zookeeper-0:/$ whereis zkServer.sh

zkServer: /usr/bin/zkServer.cmd /usr/bin/zkServer.sh /opt/zookeeper-3.4.14/bin/zkServer.cmd /opt/zookeeper-3.4.14/bin/zkServer.sh

zookeeper@zookeeper-0:/$ /usr/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/bin/../etc/zookeeper/zoo.cfg

Mode: follower

#ZooKeeper main configuration file

zookeeper@zookeeper-2:/$ ls /opt/zookeeper/conf/zoo.cfg

/opt/zookeeper/conf/zoo.cfg

#In zoo.cfg, server.N maps to the Pod whose myid file contains N; on zookeeper-2 the myid file contains 3

zookeeper@zookeeper-2:/$ cat /opt/zookeeper/conf/zoo.cfg

#This file was autogenerated DO NOT EDIT

clientPort=2181

dataDir=/var/lib/zookeeper/data

dataLogDir=/var/lib/zookeeper/data/log

tickTime=2000

initLimit=10

syncLimit=5

maxClientCnxns=60

minSessionTimeout=4000

maxSessionTimeout=40000

autopurge.snapRetainCount=3

autopurge.purgeInteval=12

server.1=zookeeper-0.zookeeper-headless.default.svc.cluster.local:2888:3888

server.2=zookeeper-1.zookeeper-headless.default.svc.cluster.local:2888:3888

server.3=zookeeper-2.zookeeper-headless.default.svc.cluster.local:2888:3888

zookeeper@zookeeper-2:/$ cat /var/lib/zookeeper/data/myid

3
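The zoo.cfg above follows the StatefulSet naming rules. Pod N is named `<statefulset>-N` and gets the stable DNS name `<pod>.<headless-service>.<namespace>.svc.cluster.local`. It is listed as `server.(N+1)`, and N+1 is also written to its myid file, so zookeeper-2 gets 3. The sketch below reproduces that mapping. It is not the image's start.sh, and it assumes the default cluster domain `cluster.local`. The output above was captured with a headless Service named zookeeper-headless in the default namespace. With this section's manifest, the arguments would be zookeeper-clusterip-svc and zookeeper-ns.

```shell
#!/usr/bin/env bash
# Sketch of the StatefulSet ordinal -> ZooKeeper server ID mapping (hypothetical helpers).
# Assumes the default cluster domain "cluster.local".

# zk_server_lines STATEFULSET HEADLESS_SERVICE NAMESPACE REPLICAS
zk_server_lines() {
  local sts=$1 svc=$2 ns=$3 replicas=$4 ordinal
  for ((ordinal = 0; ordinal < replicas; ordinal++)); do
    printf 'server.%d=%s-%d.%s.%s.svc.cluster.local:2888:3888\n' \
      "$((ordinal + 1))" "$sts" "$ordinal" "$svc" "$ns"
  done
}

# zk_myid_for_pod POD_NAME: the ordinal is the suffix after the last "-"; myid = ordinal + 1
zk_myid_for_pod() {
  local pod=$1
  echo $(( ${pod##*-} + 1 ))
}

zk_server_lines zookeeper zookeeper-clusterip-svc zookeeper-ns 3
zk_myid_for_pod zookeeper-2   # prints 3
```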

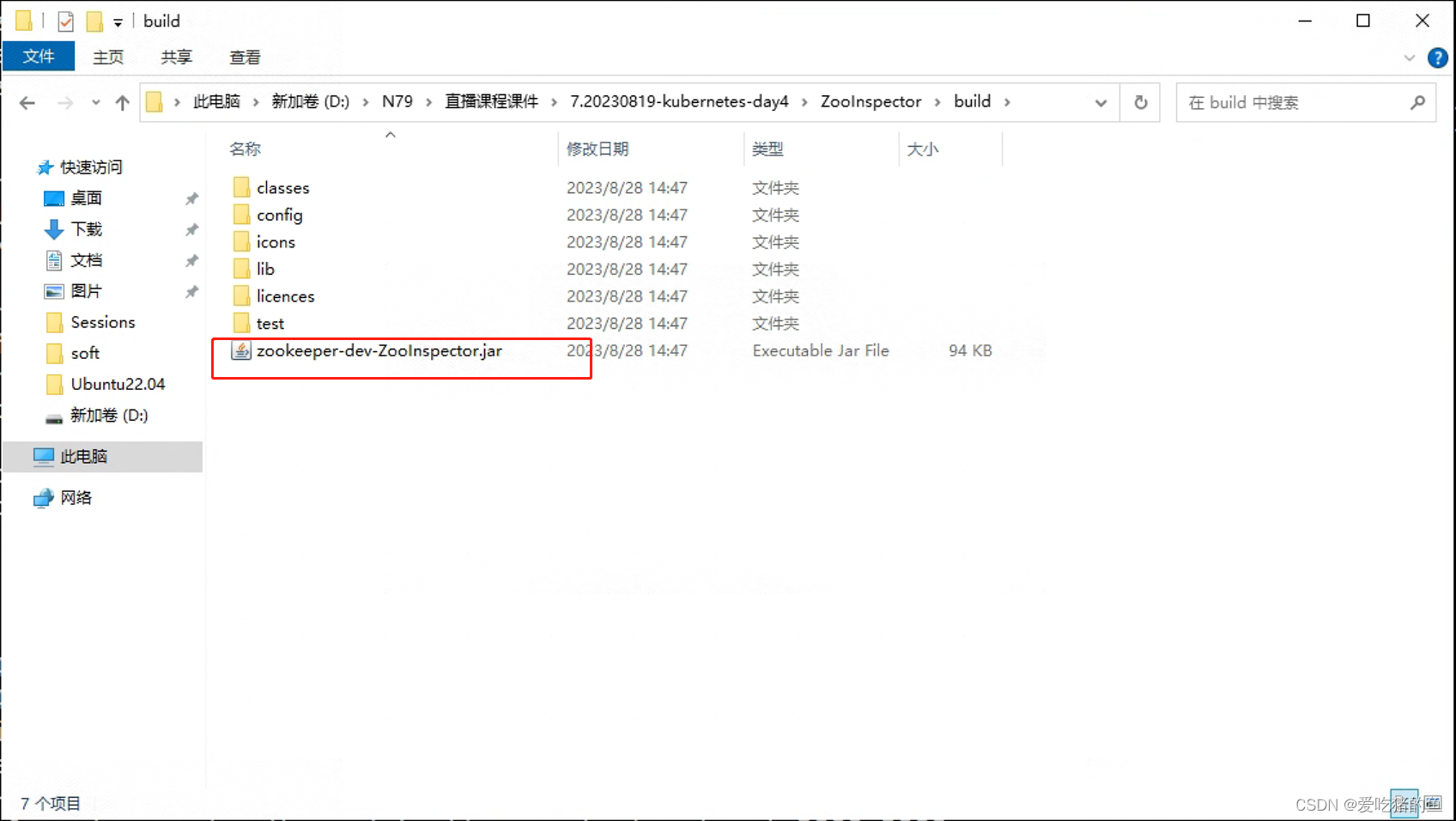

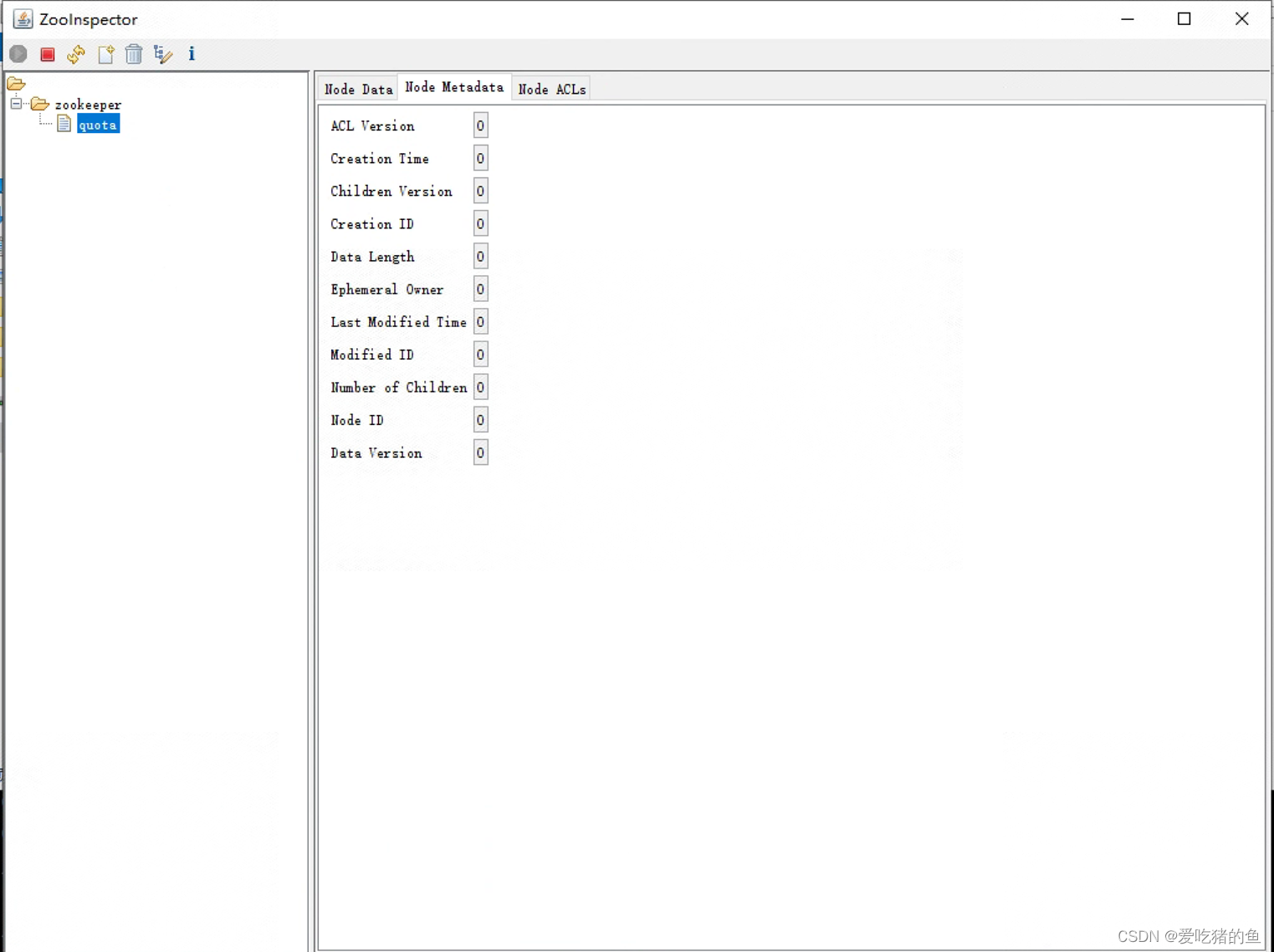

Test ZooKeeper client connections with a client tool:

Implementing a ZooKeeper cluster with Deployments

#Create the ZooKeeper project directories

root@k8s-deploy:/opt# mkdir -p /opt/zookeeper/{yaml,dockerfile}

#In /opt/zookeeper/dockerfile, write the Dockerfile and build the image

root@k8s-deploy:/opt/zookeeper/dockerfile# vim Dockerfile

#FROM elevy/slim_java:8

FROM registry.cn-hangzhou.aliyuncs.com/zhangshijie/slim_java:8

ENV ZK_VERSION 3.4.14

ADD repositories /etc/apk/repositories

# Download Zookeeper

COPY zookeeper-3.4.14.tar.gz /tmp/zk.tgz

COPY zookeeper-3.4.14.tar.gz.asc /tmp/zk.tgz.asc

COPY KEYS /tmp/KEYS

RUN apk add --no-cache --virtual .build-deps \

ca-certificates \

gnupg \

tar \

wget && \

#

# Install dependencies

apk add --no-cache \

bash && \

#

#

# Verify the signature

export GNUPGHOME="$(mktemp -d)" && \

gpg -q --batch --import /tmp/KEYS && \

gpg -q --batch --no-auto-key-retrieve --verify /tmp/zk.tgz.asc /tmp/zk.tgz && \

#

# Set up directories

#

mkdir -p /zookeeper/data /zookeeper/wal /zookeeper/log && \

#

# Install

tar -x -C /zookeeper --strip-components=1 --no-same-owner -f /tmp/zk.tgz && \

#

# Slim down

cd /zookeeper && \

cp dist-maven/zookeeper-${ZK_VERSION}.jar . && \

rm -rf \

*.txt \

*.xml \

bin/README.txt \

bin/*.cmd \

conf/* \

contrib \

dist-maven \

docs \

lib/*.txt \

lib/cobertura \

lib/jdiff \

recipes \

src \

zookeeper-*.asc \

zookeeper-*.md5 \

zookeeper-*.sha1 && \

#

# Clean up

apk del .build-deps && \

rm -rf /tmp/* "$GNUPGHOME"

COPY conf /zookeeper/conf/

COPY bin/zkReady.sh /zookeeper/bin/

COPY entrypoint.sh /

ENV PATH=/zookeeper/bin:${PATH} \

ZOO_LOG_DIR=/zookeeper/log \

ZOO_LOG4J_PROP="INFO, CONSOLE, ROLLINGFILE" \

JMXPORT=9010

ENTRYPOINT [ "/entrypoint.sh" ]

CMD [ "zkServer.sh", "start-foreground" ]

EXPOSE 2181 2888 3888 9010

#Create the Alpine package repository file (repositories) used by the image

root@k8s-deploy:/opt/zookeeper/dockerfile# vim repositories

http://mirrors.aliyun.com/alpine/v3.6/main

http://mirrors.aliyun.com/alpine/v3.6/community

#Download zookeeper-3.4.14.tar.gz, zookeeper-3.4.14.tar.gz.asc and KEYS into the current directory (3.4.14 is an old release, so it is served from the Apache archive)

wget https://archive.apache.org/dist/zookeeper/zookeeper-3.4.14/zookeeper-3.4.14.tar.gz

wget https://archive.apache.org/dist/zookeeper/zookeeper-3.4.14/zookeeper-3.4.14.tar.gz.asc

wget https://dist.apache.org/repos/dist/release/zookeeper/KEYS

#Create the image build-and-push script

root@k8s-deploy:/opt/zookeeper/dockerfile# vim build-command.sh

#!/bin/bash

TAG=$1

docker build -t harbor.canghailyt.com/base/zookeeper:${TAG} .

sleep 10

docker push harbor.canghailyt.com/base/zookeeper:${TAG}

#nerdctl build -t harbor.canghailyt.com/base/zookeeper:${TAG} .

#nerdctl push harbor.canghailyt.com/base/zookeeper:${TAG}

root@k8s-deploy:/opt/zookeeper/dockerfile# chmod a+x build-command.sh

#Create the ZooKeeper entrypoint script

root@k8s-deploy:/opt/zookeeper/dockerfile# vim entrypoint.sh

#!/bin/bash

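echo ${MYID:-1} > /zookeeper/data/myid #write $MYID to the myid file (default 1 if unset or empty); MYID is an environment variable set in the Pod spec

if [ -n "$SERVERS" ]; then #continue only if $SERVERS is non-empty; SERVERS is an environment variable set in the Pod spec

IFS=\, read -a servers <<<"$SERVERS" #IFS is bash's field separator; setting it to a comma for this read splits $SERVERS into the array servers

for i in "${!servers[@]}"; do #${!servers[@]} expands to the array indices; index + 1 becomes this server's ZooKeeper ID

printf "\nserver.%i=%s:2888:3888" "$((1 + $i))" "${servers[$i]}" >> /zookeeper/conf/zoo.cfg #append a server.N line to /zookeeper/conf/zoo.cfg; %i and %s are filled from "$((1 + $i))" and "${servers[$i]}"

done

fi

cd /zookeeper

exec "$@" #"$@" expands to all arguments passed to the script, i.e. CMD [ "zkServer.sh", "start-foreground" ]; exec replaces the shell with that command in the same process (same PID), so ZooKeeper receives the container's signals directly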

root@k8s-deploy:/opt/zookeeper/dockerfile# chmod a+x entrypoint.sh
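You can check what the loop in entrypoint.sh appends to zoo.cfg without building the image, by running the same logic against a temporary file. In this sketch, `render_servers` is a hypothetical wrapper around the script's loop, and the one-line base config stands in for the real conf/zoo.cfg. With SERVERS=zookeeper1,zookeeper2,zookeeper3 it produces the same server.1 to server.3 lines that appear later in the running Pod.

```shell
#!/usr/bin/env bash
# Reproduces the server.N generation loop from entrypoint.sh against a temporary file.

# render_servers SERVERS_CSV CONFIG_FILE (hypothetical wrapper around the entrypoint loop)
render_servers() {
  local servers_csv=$1 cfg=$2 i
  local -a servers
  IFS=, read -r -a servers <<<"$servers_csv"   # split on commas into an array
  for i in "${!servers[@]}"; do                  # iterate over indices 0, 1, 2, ...
    printf '\nserver.%i=%s:2888:3888' "$((1 + i))" "${servers[$i]}" >>"$cfg"
  done
}

cfg=$(mktemp)
printf 'clientPort=2181' >"$cfg"    # stand-in for the base zoo.cfg (assumption)
render_servers "zookeeper1,zookeeper2,zookeeper3" "$cfg"
cat "$cfg"; echo
rm -f "$cfg"
```

Each generated name (zookeeper1 to zookeeper3) must resolve inside the cluster. In the Deployment-based setup, this is done by the per-server Services of the same names.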

#Create the ZooKeeper configuration files

root@k8s-deploy:/opt/zookeeper/dockerfile# mkdir conf

root@k8s-deploy:/opt/zookeeper/dockerfile# cd conf/

root@k8s-deploy:/opt/zookeeper/dockerfile/conf# vim zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/zookeeper/data

dataLogDir=/zookeeper/wal

#snapCount=100000

autopurge.purgeInterval=1

clientPort=2181

quorumListenOnAllIPs=true

root@k8s-deploy:/opt/zookeeper/dockerfile/conf# vim log4j.properties

# Define some default values that can be overridden by system properties

zookeeper.root.logger=INFO, CONSOLE, ROLLINGFILE

zookeeper.console.threshold=INFO

zookeeper.log.dir=/zookeeper/log

zookeeper.log.file=zookeeper.log

zookeeper.log.threshold=INFO

zookeeper.tracelog.dir=/zookeeper/log

zookeeper.tracelog.file=zookeeper_trace.log

#

# ZooKeeper Logging Configuration

#

# Format is "<default threshold> (, <appender>)+

# DEFAULT: console appender only

log4j.rootLogger=${zookeeper.root.logger}

# Example with rolling log file

#log4j.rootLogger=DEBUG, CONSOLE, ROLLINGFILE

# Example with rolling log file and tracing

#log4j.rootLogger=TRACE, CONSOLE, ROLLINGFILE, TRACEFILE

#

# Log INFO level and above messages to the console

#

log4j.appender.CONSOLE=org.apache.log4j.ConsoleAppender

log4j.appender.CONSOLE.Threshold=${zookeeper.console.threshold}

log4j.appender.CONSOLE.layout=org.apache.log4j.PatternLayout

log4j.appender.CONSOLE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L] - %m%n

#

# Add ROLLINGFILE to rootLogger to get log file output

# Log DEBUG level and above messages to a log file

log4j.appender.ROLLINGFILE=org.apache.log4j.RollingFileAppender

log4j.appender.ROLLINGFILE.Threshold=${zookeeper.log.threshold}

log4j.appender.ROLLINGFILE.File=${zookeeper.log.dir}/${zookeeper.log.file}

# Max log file size of 10MB

log4j.appender.ROLLINGFILE.MaxFileSize=10MB

# uncomment the next line to limit number of backup files

log4j.appender.ROLLINGFILE.MaxBackupIndex=5

log4j.appender.ROLLINGFILE.layout=org.apache.log4j.PatternLayout

log4j.appender.ROLLINGFILE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L] - %m%n

#

# Add TRACEFILE to rootLogger to get log file output

# Log DEBUG level and above messages to a log file

log4j.appender.TRACEFILE=org.apache.log4j.FileAppender

log4j.appender.TRACEFILE.Threshold=TRACE

log4j.appender.TRACEFILE.File=${zookeeper.tracelog.dir}/${zookeeper.tracelog.file}

log4j.appender.TRACEFILE.layout=org.apache.log4j.PatternLayout

### Notice we are including log4j's NDC here (%x)

log4j.appender.TRACEFILE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L][%x] - %m%n

#Create the bin script

root@k8s-deploy:/opt/zookeeper/dockerfile/conf# cd /opt/zookeeper/dockerfile/

root@k8s-deploy:/opt/zookeeper/dockerfile# mkdir bin

root@k8s-deploy:/opt/zookeeper/dockerfile# cd bin/

root@k8s-deploy:/opt/zookeeper/dockerfile/bin# vim zkReady.sh

#!/bin/bash

/zookeeper/bin/zkServer.sh status | grep -E 'Mode: (standalone|leader|follower|observer)'

root@k8s-deploy:/opt/zookeeper/dockerfile/bin# chmod a+x zkReady.sh
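zkReady.sh is meant as a readiness check: it succeeds only when `zkServer.sh status` reports a serving mode. ZooKeeper 3.4 prints `Mode: leader`, `Mode: follower`, `Mode: observer` or `Mode: standalone`, as the status outputs in this document show. The pattern must therefore match those exact words. The function below is a hypothetical, testable form of the same check that reads the status text on stdin.

```shell
#!/usr/bin/env bash
# Readiness check on `zkServer.sh status` output (hypothetical helper mirroring zkReady.sh).
# Succeeds only if a serving mode is reported.
is_zk_ready() {
  grep -Eq 'Mode: (standalone|leader|follower|observer)'
}

# Sample outputs taken from the status commands shown in this document
printf 'Using config: /zookeeper/bin/../conf/zoo.cfg\nMode: leader\n' | is_zk_ready && echo "leader: ready"
printf 'Using config: /usr/bin/../etc/zookeeper/zoo.cfg\nMode: follower\n' | is_zk_ready && echo "follower: ready"
```

Inside the container you would pipe `zkServer.sh status` into the check. If the check were wired into a Deployment as an exec readinessProbe running /zookeeper/bin/zkReady.sh, a Pod would stay out of the Service until it has joined the quorum. The Deployments in this section do not configure such a probe.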

#Run the image build script

cd /opt/zookeeper/dockerfile

root@k8s-deploy:/opt/zookeeper/dockerfile# bash build-command.sh 3.4.14

root@k8s-deploy:/opt/zookeeper/dockerfile# docker images | grep zookeeper

harbor.canghailyt.com/base/zookeeper 3.4.14 c31aafcf1e61 11 minutes ago 183MB

#Create the NFS export directories

mkdir -p /data/{zookeeper3,zookeeper2,zookeeper1}

vim /etc/exports

/data/zookeeper1 *(rw,no_root_squash,insecure)

/data/zookeeper2 *(rw,no_root_squash,insecure)

/data/zookeeper3 *(rw,no_root_squash,insecure)

systemctl restart nfs-server.service

root@k8s-deploy:/data/zookeeper1# showmount -e

Export list for k8s-deploy.canghailyt.com:

/data/zookeeper3 *

/data/zookeeper2 *

/data/zookeeper1 *

/data/storageclassname *

#Create the PVs

root@k8s-deploy:/opt/zookeeper/yaml# pwd

/opt/zookeeper/yaml

root@k8s-deploy:/opt/zookeeper/yaml# vim zookeeper-pv.yaml

apiVersion: v1

kind: PersistentVolume

metadata:

name: zookeeper-pv-1

spec:

capacity:

storage: 20Gi

accessModes:

- ReadWriteOnce

nfs:

server: 10.0.0.200

path: /data/zookeeper1

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: zookeeper-pv-2

spec:

capacity:

storage: 20Gi

accessModes:

- ReadWriteOnce

nfs:

server: 10.0.0.200

path: /data/zookeeper2

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: zookeeper-pv-3

spec:

capacity:

storage: 20Gi

accessModes:

- ReadWriteOnce

nfs:

server: 10.0.0.200

path: /data/zookeeper3

#Apply the manifest and check the PVs

kubectl apply -f zookeeper-pv.yaml

root@k8s-deploy:/opt/zookeeper/yaml# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

zookeeper-pv-1 20Gi RWO Retain Available 5s

zookeeper-pv-2 20Gi RWO Retain Available 5s

zookeeper-pv-3 20Gi RWO Retain Available 5s

#Create the PVC manifest

root@k8s-deploy:/opt/zookeeper/yaml# vim zookeeper-pvc.yaml

apiVersion: v1

kind: Namespace

metadata:

name: wang

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: zookeeper-pvc-1

namespace: wang

spec:

accessModes:

- ReadWriteOnce

volumeName: zookeeper-pv-1

resources:

requests:

storage: 10Gi

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: zookeeper-pvc-2

namespace: wang

spec:

accessModes:

- ReadWriteOnce

volumeName: zookeeper-pv-2

resources:

requests:

storage: 10Gi

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: zookeeper-pvc-3

namespace: wang

spec:

accessModes:

- ReadWriteOnce

volumeName: zookeeper-pv-3

resources:

requests:

storage: 10Gi

#Apply the manifest and check the PVCs

kubectl apply -f zookeeper-pvc.yaml

root@k8s-deploy:/opt/zookeeper/yaml# kubectl get pvc -n wang

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

zookeeper-pvc-1 Bound zookeeper-pv-1 20Gi RWO 62s

zookeeper-pvc-2 Bound zookeeper-pv-2 20Gi RWO 62s

zookeeper-pvc-3 Bound zookeeper-pv-3 20Gi RWO 62s

#Create the ZooKeeper manifest

root@k8s-deploy:/opt/zookeeper/yaml# vim zookeeper.yaml

apiVersion: v1

kind: Service

metadata:

name: zookeeper

namespace: wang

spec:

ports:

- name: client

port: 2181

selector:

app: zookeeper

---

apiVersion: v1

kind: Service

metadata:

name: zookeeper1

namespace: wang

spec:

type: NodePort

ports:

- name: client

port: 2181

nodePort: 32181

- name: followers

port: 2888

- name: election

port: 3888

selector:

app: zookeeper

server-id: "1"

---

apiVersion: v1

kind: Service

metadata:

name: zookeeper2

namespace: wang

spec:

type: NodePort

ports:

- name: client

port: 2181

nodePort: 32182

- name: followers

port: 2888

- name: election

port: 3888

selector:

app: zookeeper

server-id: "2"

---

apiVersion: v1

kind: Service

metadata:

name: zookeeper3

namespace: wang

spec:

type: NodePort

ports:

- name: client

port: 2181

nodePort: 32183

- name: followers

port: 2888

- name: election

port: 3888

selector:

app: zookeeper

server-id: "3"

---

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

name: zookeeper1

namespace: wang

spec:

replicas: 1

selector:

matchLabels:

app: zookeeper

template:

metadata:

labels:

app: zookeeper

server-id: "1"

spec:

volumes: #a Pod spec can contain only one volumes list, so the PVC volume is declared here together with the emptyDir volumes

- name: data

emptyDir: {}

- name: wal

emptyDir:

medium: Memory

- name: zookeeper-pvc-1

persistentVolumeClaim:

claimName: zookeeper-pvc-1

containers:

- name: server

image: harbor.canghailyt.com/base/zookeeper:3.4.14

imagePullPolicy: Always

env:

- name: MYID

value: "1"

- name: SERVERS

value: "zookeeper1,zookeeper2,zookeeper3"

- name: JVMFLAGS

value: "-Xmx2G"

ports:

- containerPort: 2181

- containerPort: 2888

- containerPort: 3888

volumeMounts:

- mountPath: "/zookeeper/data"

name: zookeeper-pvc-1

---

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

name: zookeeper2

namespace: wang

spec:

replicas: 1

selector:

matchLabels:

app: zookeeper

template:

metadata:

labels:

app: zookeeper

server-id: "2"

spec:

volumes:

- name: data

emptyDir: {}

- name: wal

emptyDir:

medium: Memory

- name: zookeeper-pvc-2

persistentVolumeClaim:

claimName: zookeeper-pvc-2

containers:

- name: server

image: harbor.canghailyt.com/base/zookeeper:3.4.14

imagePullPolicy: Always

env:

- name: MYID

value: "2"

- name: SERVERS

value: "zookeeper1,zookeeper2,zookeeper3"

- name: JVMFLAGS

value: "-Xmx2G"

ports:

- containerPort: 2181

- containerPort: 2888

- containerPort: 3888

volumeMounts:

- mountPath: "/zookeeper/data"

name: zookeeper-pvc-2

---

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

name: zookeeper3

namespace: wang

spec:

replicas: 1

selector:

matchLabels:

app: zookeeper

template:

metadata:

labels:

app: zookeeper

server-id: "3"

spec:

volumes:

- name: data

emptyDir: {}

- name: wal

emptyDir:

medium: Memory

- name: zookeeper-pvc-3

persistentVolumeClaim:

claimName: zookeeper-pvc-3

containers:

- name: server

image: harbor.canghailyt.com/base/zookeeper:3.4.14

imagePullPolicy: Always

env:

- name: MYID

value: "3"

- name: SERVERS

value: "zookeeper1,zookeeper2,zookeeper3"

- name: JVMFLAGS

value: "-Xmx2G"

ports:

- containerPort: 2181

- containerPort: 2888

- containerPort: 3888

volumeMounts:

- mountPath: "/zookeeper/data"

name: zookeeper-pvc-3

#Check the Pods

root@k8s-deploy:/opt/zookeeper/yaml# kubectl get pod -n wang

NAME READY STATUS RESTARTS AGE

zookeeper1-5dc88768b8-b5m75 1/1 Running 0 39s

zookeeper2-65cc464777-q2559 1/1 Running 0 39s

zookeeper3-6fcf76b8fd-8q8kh 1/1 Running 0 38s

#Exec into a Pod and check its status

root@k8s-deploy:/opt/zookeeper/yaml# kubectl exec -it -n wang zookeeper3-6fcf76b8fd-8q8kh -- sh

/ # /zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

ZooKeeper remote JMX Port set to 9010

ZooKeeper remote JMX authenticate set to false

ZooKeeper remote JMX ssl set to false

ZooKeeper remote JMX log4j set to true

Using config: /zookeeper/bin/../conf/zoo.cfg

Mode: leader

/ # cat /zookeeper/conf/zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/zookeeper/data

dataLogDir=/zookeeper/wal

#snapCount=100000

autopurge.purgeInterval=1

clientPort=2181

quorumListenOnAllIPs=true

server.1=zookeeper1:2888:3888

server.2=zookeeper2:2888:3888

server.3=zookeeper3:2888:3888

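Custom images for a web service with static/dynamic content separation, using NFS for data sharing and persistence

Create the project directories

mkdir -p /root/project/{dockerfile,yaml}

Build the CentOS base image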

cd /root/project/dockerfile/

mkdir -p system/centos-base

cd system/centos-base/

#Write the Dockerfile

vim Dockerfile

#Custom CentOS base image

FROM centos:7.9.2009

MAINTAINER wang wang@qq.com

ADD filebeat-7.12.1-x86_64.rpm /tmp

RUN yum install -y /tmp/filebeat-7.12.1-x86_64.rpm vim wget tree \

lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel \

openssl openssl-devel iproute net-tools iotop && \

rm -rf /etc/localtime /tmp/filebeat-7.12.1-x86_64.rpm && \

ln -snf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && \

useradd nginx -u 2088

#Download filebeat-7.12.1-x86_64.rpm into this directory

#Create the image build-and-push script

vim build-command.sh

#!/bin/bash

#docker build -t harbor.canghailyt.com/base/wang-centos-base:7.9.2009 .

#docker push harbor.canghailyt.com/base/wang-centos-base:7.9.2009

/usr/local/bin/nerdctl build -t harbor.canghailyt.com/base/wang-centos-base:7.9.2009 .

/usr/local/bin/nerdctl push harbor.canghailyt.com/base/wang-centos-base:7.9.2009

#Build and push the base image

bash build-command.sh

Build the JDK image

cd /root/project/dockerfile/

mkdir pub_images

cd pub_images/

mkdir jdk-centos

cd jdk-centos

#Write the Dockerfile

vim Dockerfile

#JDK Base Image

FROM harbor.canghailyt.com/base/wang-centos-base:7.9.2009

#FROM centos:7.9.2009

MAINTAINER wang "wang@dfvw.com"

ADD jdk-8u212-linux-x64.tar.gz /usr/local/src/

RUN ln -sv /usr/local/src/jdk1.8.0_212 /usr/local/jdk

ADD profile /etc/profile

ENV JAVA_HOME /usr/local/jdk

ENV JRE_HOME $JAVA_HOME/jre

ENV CLASSPATH $JAVA_HOME/lib/:$JRE_HOME/lib/

ENV PATH $PATH:$JAVA_HOME/bin

#Create the profile file (copied into the image as /etc/profile)

vim profile

# /etc/profile

# System wide environment and startup programs, for login setup

# Functions and aliases go in /etc/bashrc

# It's NOT a good idea to change this file unless you know what you

# are doing. It's much better to create a custom.sh shell script in

# /etc/profile.d/ to make custom changes to your environment, as this

# will prevent the need for merging in future updates.

pathmunge () {

case ":${PATH}:" in

*:"$1":*)

;;

*)

if [ "$2" = "after" ] ; then

PATH=$PATH:$1

else

PATH=$1:$PATH

fi

esac

}

if [ -x /usr/bin/id ]; then

if [ -z "$EUID" ]; then

# ksh workaround

EUID=`/usr/bin/id -u`

UID=`/usr/bin/id -ru`

fi

USER="`/usr/bin/id -un`"

LOGNAME=$USER

MAIL="/var/spool/mail/$USER"

fi

# Path manipulation

if [ "$EUID" = "0" ]; then

pathmunge /usr/sbin

pathmunge /usr/local/sbin

else

pathmunge /usr/local/sbin after

pathmunge /usr/sbin after

fi

HOSTNAME=`/usr/bin/hostname 2>/dev/null`

HISTSIZE=1000

if [ "$HISTCONTROL" = "ignorespace" ] ; then

export HISTCONTROL=ignoreboth

else

export HISTCONTROL=ignoredups

fi

export PATH USER LOGNAME MAIL HOSTNAME HISTSIZE HISTCONTROL

# By default, we want umask to get set. This sets it for login shell

# Current threshold for system reserved uid/gids is 200

# You could check uidgid reservation validity in

# /usr/share/doc/setup-*/uidgid file

if [ $UID -gt 199 ] && [ "`/usr/bin/id -gn`" = "`/usr/bin/id -un`" ]; then

umask 002

else

umask 022

fi

for i in /etc/profile.d/*.sh /etc/profile.d/sh.local ; do

if [ -r "$i" ]; then

if [ "${-#*i}" != "$-" ]; then

. "$i"

else

. "$i" >/dev/null

fi

fi

done

unset i

unset -f pathmunge

export LANG=en_US.UTF-8

export HISTTIMEFORMAT="%F %T `whoami` "

export JAVA_HOME=/usr/local/jdk

export TOMCAT_HOME=/apps/tomcat

export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$TOMCAT_HOME/bin:$PATH

export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib:$JAVA_HOME/lib/tools.jar

#Create the image build-and-push script

vim build-command.sh

#!/bin/bash

#docker build -t harbor.canghailyt.com/base/jdk-base:v8.212 .

#sleep 1

#docker push harbor.canghailyt.com/base/jdk-base:v8.212

nerdctl build -t harbor.canghailyt.com/base/jdk-base:v8.212 .

nerdctl push harbor.canghailyt.com/base/jdk-base:v8.212

#Download jdk-8u212-linux-x64.tar.gz into this directory

root@k8s-master1:/opt/project/dockerfile/jdk-centos# ll

total 190464

drwxr-xr-x 2 root root 4096 Sep 4 15:39 ./

drwxr-xr-x 4 root root 4096 Sep 4 15:26 ../

-rw-r--r-- 1 root root 399 Sep 4 15:34 Dockerfile

-rw-r--r-- 1 root root 264 Sep 4 15:38 build-command.sh

-rw-r--r-- 1 root root 195013152 Sep 4 15:39 jdk-8u212-linux-x64.tar.gz

-rw-r--r-- 1 root root 2105 Sep 4 15:35 profile

#Build and push the image

root@k8s-master1:/opt/project/dockerfile/jdk-centos# bash build-command.sh

Build the Tomcat base image

cd /root/project/dockerfile/pub_images/

mkdir tomcat-base

cd tomcat-base/

#Write the Dockerfile

vim Dockerfile

#Tomcat 8.5.43 base image

FROM harbor.canghailyt.com/base/jdk-base:v8.212

MAINTAINER wang

RUN mkdir /apps /data/tomcat/webapps /data/tomcat/logs -pv

ADD apache-tomcat-8.5.43.tar.gz /apps

RUN useradd tomcat -u 2050 && ln -sv /apps/apache-tomcat-8.5.43 /apps/tomcat && chown -R tomcat:tomcat /apps /data

#Create the image build script

vim build-command.sh

#!/bin/bash

#docker build -t harbor.canghailyt.com/base/tomcat-base:v8.5.43 .

#sleep 3

#docker push harbor.canghailyt.com/base/tomcat-base:v8.5.43

nerdctl build -t harbor.canghailyt.com/base/tomcat-base:v8.5.43 .

nerdctl push harbor.canghailyt.com/base/tomcat-base:v8.5.43

#Download apache-tomcat-8.5.43.tar.gz into this directory

root@k8s-master1:/opt/project/dockerfile/tomcat-base# ll

total 9508

drwxr-xr-x 2 root root 4096 Sep 4 16:00 ./

drwxr-xr-x 6 root root 4096 Sep 4 15:55 ../

-rw-r--r-- 1 root root 308 Sep 4 15:57 Dockerfile

-rw-r--r-- 1 root root 9717059 Sep 4 16:00 apache-tomcat-8.5.43.tar.gz

-rw-r--r-- 1 root root 280 Sep 4 16:00 build-command.sh

#Build and push

bash build-command.sh

Build the Tomcat application image

mkdir -p /root/project/dockerfile/web/tomcat-app

cd /root/project/dockerfile/web/tomcat-app

#Edit the Dockerfile

vim Dockerfile

#tomcat web1

FROM harbor.canghailyt.com/base/tomcat-base:v8.5.43

ADD catalina.sh /apps/tomcat/bin/catalina.sh

ADD server.xml /apps/tomcat/conf/server.xml

#ADD myapp/* /data/tomcat/webapps/myapp/

ADD app1.tar.gz /data/tomcat/webapps/app1/

ADD run_tomcat.sh /apps/tomcat/bin/run_tomcat.sh

#ADD filebeat.yml /etc/filebeat/filebeat.yml

RUN chown -R nginx:nginx /data/ /apps/

#ADD filebeat-7.5.1-x86_64.rpm /tmp/

#RUN cd /tmp && yum localinstall -y filebeat-7.5.1-x86_64.rpm

EXPOSE 8080 8443

CMD ["/apps/tomcat/bin/run_tomcat.sh"]

#Create the Tomcat startup script run_tomcat.sh

vim run_tomcat.sh

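#!/bin/bash

#Start Tomcat in the background as the nginx user

su - nginx -c "/apps/tomcat/bin/catalina.sh start"

#catalina.sh start returns immediately, so keep a foreground process running to stop the container from exiting

tail -f /etc/hosts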

#Make it executable

chmod a+x run_tomcat.sh

#Create build-command.sh

vim build-command.sh

#!/bin/bash

TAG=$1

#docker build -t harbor.canghailyt.com/base/tomcat-app1:${TAG} .

#sleep 3

#docker push harbor.canghailyt.com/base/tomcat-app1:${TAG}

nerdctl build -t harbor.canghailyt.com/base/tomcat-app1:${TAG} .

nerdctl push harbor.canghailyt.com/base/tomcat-app1:${TAG}
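This script reads the tag from `$1`. If you run it without an argument, `TAG` is empty and nerdctl is asked to build `harbor.canghailyt.com/base/tomcat-app1:`, which is not a valid image reference. Here is a minimal sketch of an up-front check, assuming bash. `image_ref` is a hypothetical helper. The pattern mirrors the tag syntax used by Docker-compatible registries: the first character is a letter, digit, or underscore, followed by up to 127 letters, digits, underscores, periods, or hyphens.

```shell
#!/bin/bash
# Sketch only: image_ref is a hypothetical helper; REPO matches the script above.
set -euo pipefail

REPO="harbor.canghailyt.com/base/tomcat-app1"

# Print the full image reference for a tag, or fail with a usage message.
image_ref() {
  local tag="${1:-}"
  # Tag syntax: one of [A-Za-z0-9_] followed by at most 127 of [A-Za-z0-9_.-]
  local re='^[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}$'
  if [[ ! $tag =~ $re ]]; then
    echo "usage: bash build-command.sh <tag>   (for example: v1)" >&2
    return 1
  fi
  echo "${REPO}:${tag}"
}
```

In the build script, `IMAGE="$(image_ref "${1:-}")"` makes the script exit under `set -e` when the tag is missing or malformed. The `nerdctl build` and `nerdctl push` lines can then use `"$IMAGE"`.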

#Add catalina.sh and make it executable

chmod a+x catalina.sh

#Add the Tomcat configuration file server.xml

root@k8s-master1:~/project/dockerfile/web/tomcat-app# ll

total 23584

drwxr-xr-x 2 root root 4096 Sep 5 12:26 ./

drwxr-xr-x 3 root root 4096 Sep 5 12:22 ../

-rw-r--r-- 1 root root 531 Sep 5 12:24 Dockerfile

-rw-r--r-- 1 root root 144 Sep 5 12:24 app1.tar.gz

-rw-r--r-- 1 root root 281 Sep 5 12:24 build-command.sh

-rwxr-xr-x 1 root root 23611 Sep 5 12:24 catalina.sh*

-rw-r--r-- 1 root root 24086235 Sep 5 12:24 filebeat-7.5.1-x86_64.rpm

-rw-r--r-- 1 root root 667 Sep 5 12:24 filebeat.yml

-rwxr-xr-x 1 root root 373 Sep 5 12:24 run_tomcat.sh*

-rw-r--r-- 1 root root 6462 Sep 5 12:24 server.xml
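The listing includes `app1.tar.gz` (144 bytes) but does not show how it was made. Dockerfile `ADD` unpacks a local tar archive into the destination directory. As a result, `ADD app1.tar.gz /data/tomcat/webapps/app1/` only serves `/app1/index.html` if `index.html` sits at the top level of the archive. Here is a minimal sketch, assuming the archive holds a single `index.html` containing the text the curl test returns. `make_app1_archive` is a hypothetical helper.

```shell
#!/bin/bash
# Sketch only: make_app1_archive is a hypothetical helper; the page text is the
# response expected by the curl test ("tomcat app1 for magedu").
set -euo pipefail

# Create app1.tar.gz (or the path given as $1) with index.html at the top level.
make_app1_archive() {
  local out="${1:-app1.tar.gz}"
  local workdir
  workdir="$(mktemp -d)"
  echo "tomcat app1 for magedu" > "${workdir}/index.html"
  # -C stores member paths relative to workdir, so the archive lists plain
  # "index.html" with no directory prefix.
  tar -czf "$out" -C "$workdir" index.html
  rm -rf "$workdir"
}
```

Run it in the tomcat-app directory before `bash build-command.sh v1`. Afterwards, `tar -tzf app1.tar.gz` should print only `index.html`.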

#Build and push, passing the image tag as the first argument

bash build-command.sh v1

#Run a test container

nerdctl run -it --rm -p 8080:8080 harbor.canghailyt.com/base/tomcat-app1:v1

#Test access from another server:

root@k8s-deploy:~# curl 10.0.0.206:8080/app1/index.html

tomcat app1 for magedu

Run the Tomcat application image in Kubernetes

mkdir -p /root/project/yaml/web/tomcat-app1

cd /root/project/yaml/web/tomcat-app1

#Edit tomcat-app1.yaml

vim tomcat-app1.yaml

kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: tomcat-app1-deployment-label
  name: tomcat-app1-deployment
  namespace: wang
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tomcat-app1-selector
  template:
    metadata:
      labels:
        app: tomcat-app1-selector
    spec:
      containers:
      - name: tomcat-app1-container
        image: harbor.canghailyt.com/base/tomcat-app1:v1
        #command: ["/apps/tomcat/bin/run_tomcat.sh"]
        imagePullPolicy: IfNotPresent
        #imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "18"
        #resources:
        #  limits:
        #    cpu: 1
        #    memory: "512Mi"
        #  requests:
        #    cpu: 500m
        #    memory: "512Mi"
        volumeMounts:
        - name: wang-images
          mountPath: /data/webapp/images
          readOnly: false
        - name: wang-static
          mountPath: /data/webapp/static
          readOnly: false
      volumes:
      - name: wang-images
        nfs:
          server: 10.0.0.200
          path: /data/web/images
      - name: wang-static
        nfs:
          server: 10.0.0.200
          path: /data/web/static
      #nodeSelector:
      #  project: wang
      #  app: tomcat
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: tomcat-app1-service-label
  name: tomcat-app1-service
  namespace: wang
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
    nodePort: 30092
  selector:
    app: tomcat-app1-selector

#Apply the YAML file

kubectl apply -f tomcat-app1.yaml

#Verify

root@k8s-master1:~# kubectl get pod -n wang

NAME READY STATUS RESTARTS AGE

tomcat-app1-deployment-85dcc779d9-8h578 1/1 Running 0 20m

root@k8s-master1:~# curl 10.0.0.209:30092/app1/index.html

tomcat app1 for magedu
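After `kubectl apply`, the pod needs time to pull the image and start Tomcat. The first curl can therefore fail even when the deployment is fine. `kubectl rollout status deployment/tomcat-app1-deployment -n wang` waits for the Deployment rollout to finish. To also confirm that the NodePort answers, a small retry loop can help. This sketch assumes bash and curl. `wait_for_http` is a hypothetical helper, and its attempt count and interval are arbitrary defaults.

```shell
#!/bin/bash
# Sketch only: wait_for_http is a hypothetical helper; defaults are arbitrary.
set -euo pipefail

# Poll a URL until it returns a 2xx/3xx response or the attempts run out.
# Usage: wait_for_http URL [attempts] [interval_seconds]
wait_for_http() {
  local url="$1" attempts="${2:-30}" interval="${3:-2}"
  local i
  for ((i = 1; i <= attempts; i++)); do
    # -f: treat HTTP 4xx/5xx as failure; -s: no progress output;
    # -o /dev/null: discard the body; --max-time: cap each attempt at 5 seconds.
    if curl -fs -o /dev/null --max-time 5 "$url"; then
      echo "ready after ${i} attempt(s): ${url}"
      return 0
    fi
    sleep "$interval"
  done
  echo "not ready after ${attempts} attempts: ${url}" >&2
  return 1
}
```

For example: `wait_for_http http://10.0.0.209:30092/app1/index.html`.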

Build the nginx base image

mkdir -p /root/project/dockerfile/pub_images/nginx-base

cd /root/project/dockerfile/pub_images/nginx-base

#Edit the Dockerfile

vim Dockerfile

#Nginx Base Image

FROM harbor.canghailyt.com/base/wang-centos-base:7.9.2009

MAINTAINER wang

RUN yum install -y vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop

ADD nginx-1.22.0.tar.gz /usr/local/src/

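#ADD unpacks local .tar.gz archives automatically, so only the extracted source directory exists under /usr/local/src; remove it after installing

RUN cd /usr/local/src/nginx-1.22.0 && ./configure && make && make install && ln -sv /usr/local/nginx/sbin/nginx /usr/sbin/nginx && rm -rf /usr/local/src/nginx-1.22.0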