1. BCELoss()

BCELoss and BCEWithLogitsLoss are two loss functions used in generative adversarial networks (GANs).

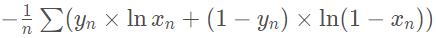

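The formula for BCELoss, averaged over all N elements, is:

$$\ell(x, y) = -\frac{1}{N}\sum_{i=1}^{N}\left[\, y_i \log x_i + (1 - y_i)\log(1 - x_i) \,\right]$$

where y is the target and x is the model output, which must be a probability between 0 and 1.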
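As a minimal sketch of the formula in plain Python (the helper name `bce` is hypothetical, not a PyTorch function), the loss is the negated average of the per-element log-likelihood terms. This sketch assumes every probability lies strictly between 0 and 1, since it does nothing to guard against `log(0)`.

```python
import math

def bce(probs, targets):
    """Mean binary cross-entropy over paired probabilities and 0/1 targets."""
    total = 0.0
    for x, y in zip(probs, targets):
        # Only one of the two terms is non-zero when y is exactly 0 or 1.
        total += y * math.log(x) + (1 - y) * math.log(1 - x)
    return -total / len(probs)

# A confident correct prediction costs little; a confident wrong one costs a lot.
print(bce([0.9], [1]))  # about 0.105
print(bce([0.1], [1]))  # about 2.303
```

The two calls illustrate why the loss pushes predictions toward their targets: the penalty grows quickly as the predicted probability moves away from the true label.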

2. Example

import torch

from torch import nn

import math

# Raw model outputs (logits); requires_grad=True makes autograd track them.

input = torch.tensor([[ 1.9072, 1.1079, 1.4906],

[-0.6584, -0.0512, 0.7608],

[-0.0614, 0.6583, 0.1095]], requires_grad=True)

print(input)

print('-'*100)

Output:

tensor([[ 1.9072, 1.1079, 1.4906],

[-0.6584, -0.0512, 0.7608],

[-0.0614, 0.6583, 0.1095]], requires_grad=True)

----------------------------------------------------------------------------------------------------

First, apply Sigmoid to map all of these values into the range 0 to 1:

m = nn.Sigmoid()

print(m(input))

print('-'*100)

Output:

tensor([[0.8707, 0.7517, 0.8162],

[0.3411, 0.4872, 0.6815],

[0.4847, 0.6589, 0.5273]], grad_fn=<SigmoidBackward>)

----------------------------------------------------------------------------------------------------
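To make the Sigmoid step concrete, here is a small dependency-free sketch that applies the logistic function 1 / (1 + e^(-z)) to the same nine values. The helper name `sigmoid` and the list `logits` are illustrative; the rounded results should agree with the tensor above to about four decimal places.

```python
import math

def sigmoid(z):
    """Logistic function: maps any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

# Same values as the input tensor in the example.
logits = [[ 1.9072,  1.1079, 1.4906],
          [-0.6584, -0.0512, 0.7608],
          [-0.0614,  0.6583, 0.1095]]

for row in logits:
    print([round(sigmoid(z), 4) for z in row])
```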

Suppose the target is:

target = torch.FloatTensor([[0, 1, 1], [1, 1, 1], [0, 0, 0]])

print(target)

print('-'*100)

Output:

tensor([[0., 1., 1.],

[1., 1., 1.],

[0., 0., 0.]])

----------------------------------------------------------------------------------------------------

Compute the BCELoss by hand, using the Sigmoid outputs above:

r11 = 0 * math.log(0.8707) + (1-0) * math.log((1 - 0.8707))

r12 = 1 * math.log(0.7517) + (1-1) * math.log((1 - 0.7517))

r13 = 1 * math.log(0.8162) + (1-1) * math.log((1 - 0.8162))

r21 = 1 * math.log(0.3411) + (1-1) * math.log((1 - 0.3411))

r22 = 1 * math.log(0.4872) + (1-1) * math.log((1 - 0.4872))

r23 = 1 * math.log(0.6815) + (1-1) * math.log((1 - 0.6815))

r31 = 0 * math.log(0.4847) + (1-0) * math.log((1 - 0.4847))

r32 = 0 * math.log(0.6589) + (1-0) * math.log((1 - 0.6589))

r33 = 0 * math.log(0.5273) + (1-0) * math.log((1 - 0.5273))

r1 = -(r11 + r12 + r13) / 3

#0.8447112733378236

r2 = -(r21 + r22 + r23) / 3

#0.7260397266631787

r3 = -(r31 + r32 + r33) / 3

#0.8292933181294807

bceloss = (r1 + r2 + r3) / 3

print(bceloss)

print('-'*100)

Output:

0.8000147727101611

----------------------------------------------------------------------------------------------------
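The nine hand-written terms can also be produced with a loop. The sketch below (all names are illustrative) reuses the rounded Sigmoid outputs and compares two ways of averaging: the mean of the three row means, as in the hand calculation, and the mean over all nine elements at once. The two agree here because every row has the same length; the default `reduction='mean'` of `nn.BCELoss` averages over all elements.

```python
import math

# Sigmoid outputs rounded to four decimals, copied from the tensor printed earlier.
probs = [[0.8707, 0.7517, 0.8162],
         [0.3411, 0.4872, 0.6815],
         [0.4847, 0.6589, 0.5273]]
targets = [[0, 1, 1],
           [1, 1, 1],
           [0, 0, 0]]

def element_loss(x, y):
    """Negative log-likelihood of one prediction x for a 0/1 target y."""
    return -(y * math.log(x) + (1 - y) * math.log(1 - x))

losses = [[element_loss(x, y) for x, y in zip(p_row, t_row)]
          for p_row, t_row in zip(probs, targets)]

# Mean of the three row means, as in the hand calculation.
row_means = [sum(row) / len(row) for row in losses]
mean_of_rows = sum(row_means) / len(row_means)

# Mean over all nine elements at once.
flat = [v for row in losses for v in row]
flat_mean = sum(flat) / len(flat)

print(mean_of_rows, flat_mean)
```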

Now verify the loss with nn.BCELoss:

loss = nn.BCELoss()

print(loss(m(input), target))

print('-'*100)

Output:

tensor(0.8000, grad_fn=<BinaryCrossEntropyBackward>)

This matches the result computed by hand above.

BCEWithLogitsLoss()

BCEWithLogitsLoss combines the Sigmoid and BCELoss steps into a single operation. Let's verify it directly with the same input as before:

loss = nn.BCEWithLogitsLoss()

print(loss(input, target))

Output:

tensor(0.8000, grad_fn=<BinaryCrossEntropyWithLogitsBackward>)
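Merging the two steps is not only a convenience: the PyTorch documentation describes the combined version as more numerically stable than a separate Sigmoid followed by BCELoss. The sketch below illustrates why, using one common stable rewriting of the loss, max(z, 0) - z*y + log(1 + e^(-|z|)). This is an assumption chosen for illustration, not necessarily PyTorch's exact implementation, and both function names are hypothetical.

```python
import math

def naive_bce_with_logits(z, y):
    """Sigmoid followed by BCE; breaks down when the sigmoid saturates."""
    p = 1.0 / (1.0 + math.exp(-z))
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def stable_bce_with_logits(z, y):
    """Algebraically equivalent form that never evaluates log(0)."""
    return max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))

# For moderate logits the two forms agree.
print(naive_bce_with_logits(1.9072, 0.0), stable_bce_with_logits(1.9072, 0.0))

# For a large logit the sigmoid rounds to exactly 1.0, so the naive form hits log(0).
try:
    naive_bce_with_logits(100.0, 0.0)
except ValueError as err:
    print("naive version failed:", err)

print(stable_bce_with_logits(100.0, 0.0))
```

In practice this suggests passing raw logits to BCEWithLogitsLoss rather than applying Sigmoid yourself and then calling BCELoss.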

Complete code

import torch

from torch import nn

import math

# Raw model outputs (logits); requires_grad=True makes autograd track them.

input = torch.tensor([[ 1.9072, 1.1079, 1.4906],

[-0.6584, -0.0512, 0.7608],

[-0.0614, 0.6583, 0.1095]], requires_grad=True)

print(input)

print('-'*100)

# Map the logits into the range 0 to 1 with Sigmoid.

m = nn.Sigmoid()

print(m(input))

print('-'*100)

target = torch.FloatTensor([[0, 1, 1], [1, 1, 1], [0, 0, 0]])

print(target)

print('-'*100)

# Per-element term y*log(x) + (1-y)*log(1-x), using the rounded Sigmoid outputs.

r11 = 0 * math.log(0.8707) + (1-0) * math.log((1 - 0.8707))

r12 = 1 * math.log(0.7517) + (1-1) * math.log((1 - 0.7517))

r13 = 1 * math.log(0.8162) + (1-1) * math.log((1 - 0.8162))

r21 = 1 * math.log(0.3411) + (1-1) * math.log((1 - 0.3411))

r22 = 1 * math.log(0.4872) + (1-1) * math.log((1 - 0.4872))

r23 = 1 * math.log(0.6815) + (1-1) * math.log((1 - 0.6815))

r31 = 0 * math.log(0.4847) + (1-0) * math.log((1 - 0.4847))

r32 = 0 * math.log(0.6589) + (1-0) * math.log((1 - 0.6589))

r33 = 0 * math.log(0.5273) + (1-0) * math.log((1 - 0.5273))

r1 = -(r11 + r12 + r13) / 3

#0.8447112733378236

r2 = -(r21 + r22 + r23) / 3

#0.7260397266631787

r3 = -(r31 + r32 + r33) / 3

#0.8292933181294807

bceloss = (r1 + r2 + r3) / 3

print(bceloss)

print('-'*100)

loss = nn.BCELoss()

print(loss(m(input), target))

print('-'*100)

loss = nn.BCEWithLogitsLoss()

print(loss(input, target))
