NSX-T NAPP Deployment

Note: the previous article covered installing Harbor. The next step is to install Kubernetes (K8s), which requires at least 3 nodes.

Preface

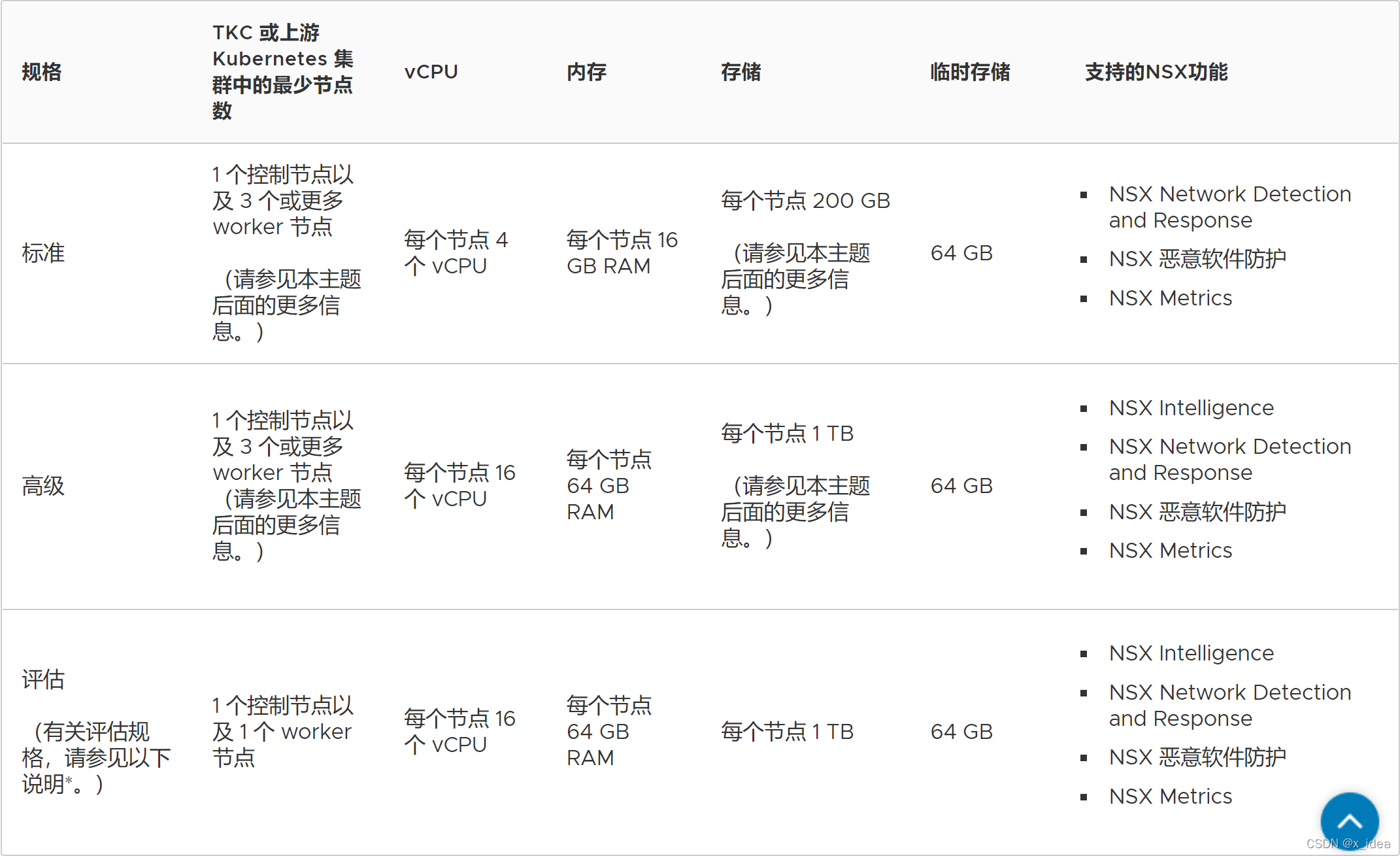

K8s cluster installation: only Kubernetes 1.21.3 and earlier are compatible. Requirements: 1 master node and 3 worker nodes.

I. Install the K8s cluster

1. Configure /etc/hosts

Append the following entries to /etc/hosts on every node (appending with >> keeps the existing localhost entries):

cat <<EOF >>/etc/hosts
172.16.5.30 master
172.16.5.31 node1
172.16.5.32 node2
172.16.5.33 node3
EOF
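Running the append more than once would duplicate the entries. The following is a minimal sketch of an idempotent alternative; the helper name `add_host_entry` is hypothetical, and the demo works on a scratch file rather than the real /etc/hosts.

```shell
#!/bin/bash
# Add "IP NAME" to a hosts file only if NAME is not already mapped there.
# add_host_entry is a hypothetical helper, not part of any standard tool.
add_host_entry() {
  local hosts_file="$1" ip="$2" name="$3"
  if grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$hosts_file"; then
    echo "skip: ${name} already present"
  else
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    echo "added: ${ip} ${name}"
  fi
}

# Demo against a scratch file; pass /etc/hosts instead on a real node.
demo_hosts="$(mktemp)"
printf '127.0.0.1 localhost\n' > "$demo_hosts"
add_host_entry "$demo_hosts" 172.16.5.30 master
add_host_entry "$demo_hosts" 172.16.5.31 node1
add_host_entry "$demo_hosts" 172.16.5.30 master   # second call is a no-op
cat "$demo_hosts"
rm -f "$demo_hosts"
```

On a real node the same function could be called once per entry with /etc/hosts as the first argument.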

2. Set the hostname

Set the hostname by running the matching command on each node:

hostnamectl set-hostname master

hostnamectl set-hostname node1

hostnamectl set-hostname node2

hostnamectl set-hostname node3

3. Disable the firewall and SELinux

systemctl disable firewalld && systemctl stop firewalld

Disable SELinux so that containers can read the host file system without problems:

setenforce 0

sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

To make it easy to switch between hosts, set up passwordless SSH.

On the local host (call it nodeA), generate an RSA key pair non-interactively:

ssh-keygen -t rsa -f /root/.ssh/id_rsa -P ""

Copy the public key to the other nodes (for example node1). After you enter the remote password once, nodeA can log in to node1 without a password:

ssh-copy-id node1

ssh-copy-id master

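4. Disable swap

Swap is virtual memory that the operating system falls back on when physical memory runs low. According to the Kubernetes documentation, swap hurts Kubernetes performance, so it should be turned off: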

swapoff -a
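`swapoff -a` only lasts until the next reboot. One common way to make the change persistent is to comment out the swap entry in /etc/fstab. The sketch below assumes a conventional fstab layout; the helper name `comment_out_swap` and the device names in the demo are made up for illustration.

```shell
#!/bin/bash
# Comment out every active swap line in an fstab-style file.
# comment_out_swap is a hypothetical helper name.
comment_out_swap() {
  local fstab="$1" tmp
  tmp="$(mktemp)"
  # Leave existing comments alone; prefix lines that have a "swap" field with '#'.
  sed -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab" > "$tmp"
  cat "$tmp" > "$fstab"
  rm -f "$tmp"
}

# Demo on a scratch file with made-up device names.
demo_fstab="$(mktemp)"
cat > "$demo_fstab" <<'EOF'
/dev/mapper/centos-root /    xfs  defaults 0 0
/dev/mapper/centos-swap swap swap defaults 0 0
EOF
comment_out_swap "$demo_fstab"
cat "$demo_fstab"
rm -f "$demo_fstab"
```

On a real node, back up /etc/fstab first and then pass it as the argument.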

If swap is left enabled, the kubelet needs the following setting instead. Edit the file with vim /etc/sysconfig/kubelet and set:

KUBELET_EXTRA_ARGS="--fail-swap-on=false"

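When initializing, the Kubernetes version must match the installed packages; run kubeadm version to check the version information.

List the repositories currently enabled on the system: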

yum repolist

Restart chronyd to synchronize the system time (the clocks on all servers must agree):

systemctl restart chronyd

5. Create /etc/sysctl.d/k8s.conf so that bridged IPv4 traffic is passed to the iptables chains

cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness=0
EOF

# Run these commands to apply the changes

modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf

sysctl --system

If initialization fails later, look for any entry that still shows ...ip6tables = 0 and change it to 1.
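To spot a wrong value before running kubeadm, the configuration file can be checked with a small script. This is a sketch only: `check_k8s_sysctl` is a hypothetical helper that reads a sysctl-style file and reports any of the three bridge and forwarding keys not set to 1.

```shell
#!/bin/bash
# Report bridge/forwarding keys in a sysctl-style file that are not set to 1.
# check_k8s_sysctl is a hypothetical helper; it returns non-zero if anything needs fixing.
check_k8s_sysctl() {
  local conf="$1" key value rc=0
  for key in net.bridge.bridge-nf-call-ip6tables \
             net.bridge.bridge-nf-call-iptables \
             net.ipv4.ip_forward; do
    # Strip whitespace around "key = value" and keep the last value seen for this key.
    value="$(awk -F= -v k="$key" '
      { gsub(/[[:space:]]/, "", $1); gsub(/[[:space:]]/, "", $2) }
      $1 == k { v = $2 }
      END { print v }' "$conf")"
    if [ "$value" != "1" ]; then
      echo "needs fixing: $key = ${value:-unset}"
      rc=1
    fi
  done
  return "$rc"
}

# Demo on a scratch file that contains one wrong value.
demo_conf="$(mktemp)"
cat > "$demo_conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 0
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
check_k8s_sysctl "$demo_conf" || echo "fix the entries above, then run: sysctl --system"
rm -f "$demo_conf"
```

On a node, the argument would be /etc/sysctl.d/k8s.conf.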

6. Prerequisites for running kube-proxy in IPVS mode

cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF

Load the modules:

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4
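The grep at the end of that command shows what is loaded, but it does not say what is missing. A small sketch of the reverse check follows; `missing_ipvs_modules` is a hypothetical helper that reads lsmod-style text on standard input, and the sample numbers in the demo are invented.

```shell
#!/bin/bash
# Print each required IPVS module that does not appear in lsmod-style input.
# missing_ipvs_modules is a hypothetical helper name.
missing_ipvs_modules() {
  local loaded module
  loaded="$(cat)"   # lsmod output arrives on stdin
  for module in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4; do
    # Match the module name as the whole first column.
    if ! printf '%s\n' "$loaded" | grep -q "^${module}[[:space:]]"; then
      echo "$module"
    fi
  done
}

# Demo with invented sample text instead of real lsmod output.
missing_ipvs_modules <<'EOF'
Module                  Size  Used by
ip_vs_rr               12600  0
ip_vs                 145497  2 ip_vs_rr
nf_conntrack_ipv4      15053  2
EOF
```

On a node the call would be `lsmod | missing_ipvs_modules`; empty output means all five modules are loaded.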

Install the ipset package and the ipvsadm management tool:

yum install ipset ipvsadm -y

III. Install Docker

Download docker-ce.repo into the /etc/yum.repos.d/ directory:

cd /etc/yum.repos.d

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce docker-ce-cli containerd.io

Optionally configure a registry mirror to speed up image pulls (this step can be skipped):

#cat >> /etc/docker/daemon.json <<-'EOF'
#{
# "registry-mirrors": ["https://5z6d320l.mirror.aliyuncs.com"],
# "exec-opts": ["native.cgroupdriver=systemd"]
#}
#EOF

By default yum installs the latest version. For compatibility you can pin a specific version instead (optional):

yum install -y --setopt=obsoletes=0 docker-ce-18.06.1.ce-3.el7

3. Set Docker's cgroup driver

cat <<EOF >/etc/docker/daemon.json
{
"exec-opts": ["native.cgroupdriver=systemd"]
}
EOF

If daemon.json already has another entry before the exec-opts line, remember to end that preceding line with a comma.
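As an illustration of the comma rule, here is a sketch that writes a daemon.json containing both the optional registry mirror and the cgroup driver. The helper name `write_daemon_json` is hypothetical, and the demo writes to a scratch path rather than /etc/docker/daemon.json.

```shell
#!/bin/bash
# Write a daemon.json holding both the registry mirror and the cgroup driver.
# write_daemon_json is a hypothetical helper; note the comma after the first entry.
write_daemon_json() {
  cat > "$1" <<'EOF'
{
  "registry-mirrors": ["https://5z6d320l.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}

# Demo on a scratch path; on a real node the target would be /etc/docker/daemon.json.
demo_json="$(mktemp)"
write_daemon_json "$demo_json"
cat "$demo_json"
rm -f "$demo_json"
```

The last entry inside the braces must not end with a comma, otherwise Docker will refuse to parse the file.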

4. Enable Docker at boot

systemctl daemon-reload && systemctl enable docker && systemctl restart docker

docker info | grep Cgroup

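IV. Install Kubernetes with kubeadm

Run steps 1-4 on all nodes; run steps 5-6 on the master node only.

1. Configure the yum repository: create a Kubernetes repo file in /etc/yum.repos.d/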

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
EOF

2. Install kubelet, kubeadm, and kubectl

#yum install -y kubelet kubeadm kubectl

yum -y install kubelet-1.21.1 kubeadm-1.21.1 kubectl-1.21.1
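Because only versions up to 1.21.3 are supported here, it can help to check a version string before installing or initializing. The sketch below relies on GNU `sort -V`; `version_le` is a hypothetical helper, and the candidate versions in the loop are examples only.

```shell
#!/bin/bash
# Succeed when version $1 is less than or equal to version $2 (relies on GNU sort -V).
# version_le is a hypothetical helper name.
version_le() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

max_supported="1.21.3"   # upper bound stated in this article
for candidate in 1.21.1 1.21.3 1.22.0; do
  if version_le "$candidate" "$max_supported"; then
    echo "${candidate}: within the supported range"
  else
    echo "${candidate}: too new"
  fi
done
```

On an installed node, the version to test could be read with `rpm -q --qf '%{VERSION}' kubeadm`.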

3. Set the kubelet cgroup driver

cat <<EOF >/etc/sysconfig/kubelet
KUBELET_CGROUP_ARGS="--cgroup-driver=systemd"
KUBE_PROXY_MODE="ipvs"
EOF

Start kubelet and enable it at boot:

systemctl enable kubelet && systemctl start kubelet

4. Pull the required images

[root@master ~]# kubeadm config images list

vim k8s.sh

#!/bin/bash
# Pull the control-plane images from the Alibaba Cloud mirror and retag them as k8s.gcr.io
images=(
kube-apiserver:v1.21.1
kube-controller-manager:v1.21.1
kube-scheduler:v1.21.1
kube-proxy:v1.21.1
pause:3.4.1
# etcd:3.4.13-0
# coredns/coredns:v1.8.0
)

for imageName in "${images[@]}"; do
  docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName
  docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName
  docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName
done

# Retag CoreDNS 1.8.0 from the mirror as v1.8.0
docker pull registry.aliyuncs.com/google_containers/coredns:1.8.0
docker tag registry.aliyuncs.com/google_containers/coredns:1.8.0 registry.aliyuncs.com/google_containers/coredns:v1.8.0
docker rmi registry.aliyuncs.com/google_containers/coredns:1.8.0

[root@master ~]# chmod +x k8s.sh

[root@master ~]# ./k8s.sh
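Before running the script for real, it can be useful to preview the docker commands it will issue. The following is a dry-run sketch: `print_retag_commands` is a hypothetical helper that only prints the pull, tag, and rmi commands, so it needs neither Docker nor network access.

```shell
#!/bin/bash
# Print (do not run) the pull/tag/rmi commands for a list of images.
# print_retag_commands is a hypothetical helper: $1 = mirror, $2 = target registry, rest = images.
print_retag_commands() {
  local mirror="$1" target="$2" image
  shift 2
  for image in "$@"; do
    echo "docker pull ${mirror}/${image}"
    echo "docker tag ${mirror}/${image} ${target}/${image}"
    echo "docker rmi ${mirror}/${image}"
  done
}

# Preview the commands for two of the images used in this article.
print_retag_commands registry.cn-hangzhou.aliyuncs.com/google_containers k8s.gcr.io \
  kube-apiserver:v1.21.1 pause:3.4.1
```

Once the printed commands look right, the output can be piped to `sh` to execute them.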

#[root@master ~]# docker pull registry.aliyuncs.com/google_containers/coredns:1.8.0

#[root@master ~]# docker tag registry.aliyuncs.com/google_containers/coredns:1.8.0 registry.aliyuncs.com/google_containers/coredns:v1.8.0

#[root@master ~]# docker rmi registry.aliyuncs.com/google_containers/coredns:1.8.0

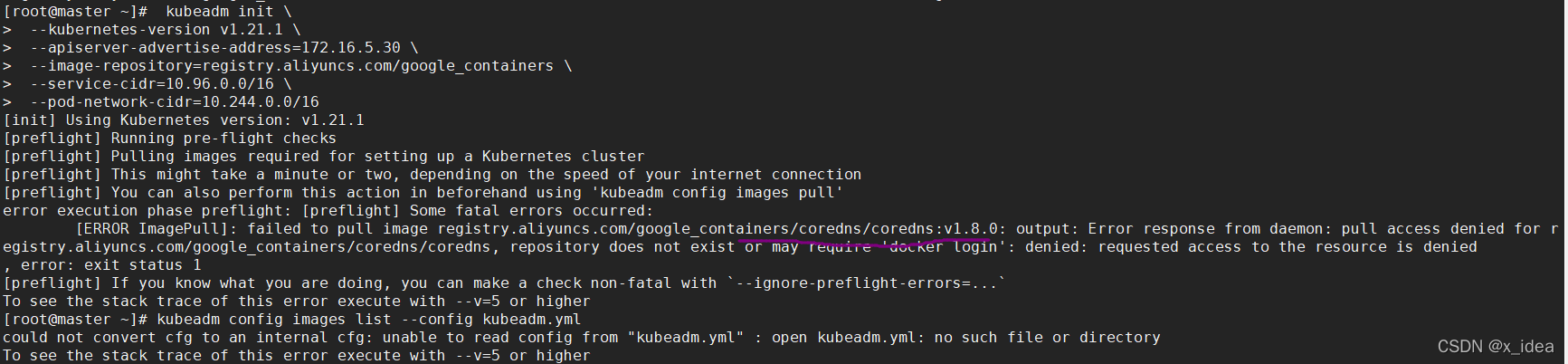

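5. Initialize the cluster using the Alibaba Cloud registry (master node only)

kubeadm init \
--kubernetes-version v1.21.1 \
--image-repository registry.aliyuncs.com/google_containers \
--apiserver-advertise-address=172.16.5.30 \
--service-cidr=10.96.0.0/16 \
--pod-network-cidr=10.244.0.0/16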

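Parameter notes

--apiserver-advertise-address=172.16.5.30: the IP address of the master host; here the master's IP is 172.16.5.30.

--image-repository=registry.aliyuncs.com/google_containers: the image registry. The default overseas registry is not reachable, so the Alibaba Cloud registry registry.aliyuncs.com/google_containers is used.

--kubernetes-version=v1.21.1: the Kubernetes version to install; change it to match the version of the packages you installed.

--service-cidr=10.96.0.0/16: the IP range for Services (kubeadm's default is 10.96.0.0/12). Pick one range and reuse it unchanged for later installs.

--pod-network-cidr=10.244.0.0/16: the IP range available to pods inside the cluster. It must not be the same as service-cidr; if you are unsure what to use, start with 10.244.0.0/16.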
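Since the pod and service ranges must not collide, a quick overlap check can catch a bad pair before `kubeadm init`. This is a sketch in plain bash arithmetic for IPv4 only; `ip_to_int` and `cidr_overlap` are hypothetical helper names, and no input validation is done.

```shell
#!/bin/bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed when two IPv4 CIDR ranges overlap.
# Two ranges overlap exactly when they agree on the bits of the shorter prefix.
cidr_overlap() {
  local ip1="${1%/*}" len1="${1#*/}" ip2="${2%/*}" len2="${2#*/}"
  local min=$(( len1 < len2 ? len1 : len2 ))
  local mask=$(( (0xFFFFFFFF << (32 - min)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$ip1") & mask )) -eq $(( $(ip_to_int "$ip2") & mask )) ]
}

service_cidr="10.96.0.0/16"
pod_cidr="10.244.0.0/16"
if cidr_overlap "$service_cidr" "$pod_cidr"; then
  echo "overlap: choose different ranges"
else
  echo "ok: ${service_cidr} and ${pod_cidr} do not overlap"
fi
```

The same check can be pointed at the node network as well, since a pod range that overlaps the hosts' own subnet also causes routing problems.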

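The following output means initialization succeeded. At the end, the master prints a join command containing a token. Copy it and keep it somewhere safe, because the worker nodes need this token to join the cluster.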

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

Run the following command on each worker node (node1 and node2) to join the cluster:

kubeadm join 172.16.5.30:6443 --token gfo4yn.x3rprvhek0stgyfq \
--discovery-token-ca-cert-hash sha256:f90ac8e05d2b908e49fa6400c9af0ab026b7fec6e5402a6ee858e67100af4c53
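One way to avoid losing the token is to save the `kubeadm init` output to a file (for example with `tee`) and extract the join command from it later. The sketch below assumes the two-line layout shown above; `extract_join_command` is a hypothetical helper, and the demo uses a scratch file with the values from this article.

```shell
#!/bin/bash
# Print the "kubeadm join ..." command from saved init output as a single line.
# extract_join_command is a hypothetical helper; it assumes the command spans two lines.
extract_join_command() {
  local joined
  # Take the matching line plus the next one, drop the trailing backslash,
  # and squeeze all whitespace (including the newline) into single spaces.
  joined="$(grep -A 1 '^[[:space:]]*kubeadm join' "$1" | sed 's/\\$//' | tr -s '[:space:]' ' ')"
  joined="${joined# }"
  joined="${joined% }"
  printf '%s\n' "$joined"
}

# Demo on a scratch file holding the tail of the init output.
demo_log="$(mktemp)"
cat > "$demo_log" <<'EOF'
Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.16.5.30:6443 --token gfo4yn.x3rprvhek0stgyfq \
    --discovery-token-ca-cert-hash sha256:f90ac8e05d2b908e49fa6400c9af0ab026b7fec6e5402a6ee858e67100af4c53
EOF
extract_join_command "$demo_log"
rm -f "$demo_log"
```

If the saved token has expired, `kubeadm token create --print-join-command` on the master prints a fresh join command.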

On the master, set up kubectl access as the init output instructs. As a regular user, run:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

6. Deploy Flannel (run on the master node)

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl apply -f kube-flannel.yml

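Once the node status changes to Ready, the master node is installed successfully.

# To reinstall, first delete the network configuration that was created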

#kubectl delete -f kube-flannel.yml

7. Join the worker nodes to the cluster

Run the following command on node1 and node2 to join the cluster:

kubeadm join 172.16.5.30:6443 --token z9yj17.p2w0tq0q4vkxzv9n \
--discovery-token-ca-cert-hash sha256:22fc9b53245bc4ab95660f11777ac150a43bab43aa97e0bed23b4cf675d3847e

Troubleshooting a failed join (see section III, step 3, on the cgroup driver). A typical failure looks like this:

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR FileAvailable--etc-kubernetes-kubelet.conf]: /etc/kubernetes/kubelet.conf already exists

Change the kubelet cgroup driver to cgroupfs so that it matches Docker's driver:

[root@node2 ~]# vim /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --cgroup-driver=cgroupfs"
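The point of this change is that the kubelet and Docker must use the same cgroup driver. A rough sketch for reading the kubelet side out of the drop-in file follows; `kubelet_cgroup_driver` is a hypothetical helper, and the demo uses a scratch copy of the line above.

```shell
#!/bin/bash
# Print the value of the last --cgroup-driver=... flag found in a kubelet drop-in file.
# kubelet_cgroup_driver is a hypothetical helper name.
kubelet_cgroup_driver() {
  grep -o -- '--cgroup-driver=[a-z]*' "$1" | tail -n 1 | cut -d= -f2
}

# Demo on a scratch copy of the Environment line.
demo_dropin="$(mktemp)"
cat > "$demo_dropin" <<'EOF'
Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --cgroup-driver=cgroupfs"
EOF
echo "kubelet cgroup driver: $(kubelet_cgroup_driver "$demo_dropin")"
rm -f "$demo_dropin"
```

On a node, the result can be compared with the output of `docker info --format '{{.CgroupDriver}}'`; after editing the drop-in, run `systemctl daemon-reload && systemctl restart kubelet`.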

8. Check the status

Run this on the master node. If every node shows Ready, the cluster is up:

kubectl get node

[root@master ~]# kubectl get nodes

NAME     STATUS   ROLES                  AGE   VERSION
master   Ready    control-plane,master   21h   v1.21.1
node1    Ready    <none>                 11m   v1.21.1
node2    Ready    <none>                 16m   v1.21.1
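When scripting the installation, the Ready check can be automated instead of read by eye. The sketch below parses header-less `kubectl get nodes` output from standard input; `not_ready_nodes` is a hypothetical helper, and the demo feeds it invented sample lines.

```shell
#!/bin/bash
# Print the name of every node whose STATUS column is not exactly "Ready".
# not_ready_nodes is a hypothetical helper; it exits non-zero if any node is listed.
not_ready_nodes() {
  awk 'NF && $2 != "Ready" { print $1; bad = 1 } END { exit bad }'
}

# Demo with invented sample lines (same columns as the output above, no header).
if printf '%s\n' \
     'master Ready control-plane,master 21h v1.21.1' \
     'node1 NotReady <none> 11m v1.21.1' \
     'node2 Ready <none> 16m v1.21.1' | not_ready_nodes; then
  echo "all nodes are Ready"
else
  echo "the nodes listed above are not Ready yet"
fi
```

Against a live cluster the input would come from `kubectl get nodes --no-headers`. Note that a cordoned node reports `Ready,SchedulingDisabled` and would also be listed by this strict comparison.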

If initialization on the master fails because of the CoreDNS image, pull and retag it manually:

[root@master ~]# docker pull registry.aliyuncs.com/google_containers/coredns:1.8.0

1.8.0: Pulling from google_containers/coredns

Digest: sha256:cc8fb77bc2a0541949d1d9320a641b82fd392b0d3d8145469ca4709ae769980e

Status: Downloaded newer image for registry.aliyuncs.com/google_containers/coredns:1.8.0

registry.aliyuncs.com/google_containers/coredns:1.8.0

[root@master ~]# docker tag registry.aliyuncs.com/google_containers/coredns:1.8.0 registry.aliyuncs.com/google_containers/coredns:v1.8.0

[root@master ~]# docker rmi registry.aliyuncs.com/google_containers/coredns:1.8.0

Untagged: registry.aliyuncs.com/google_containers/coredns:1.8.0

[root@master ~]# docker images

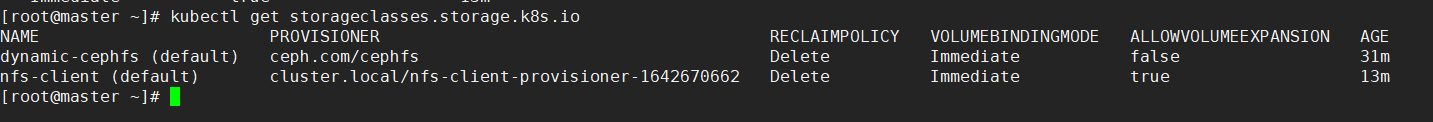

Kubernetes object: StorageClass. Before creating the StorageClass (SC), install the NFS client on every node:

yum -y install nfs-utils

systemctl enable rpcbind && systemctl start rpcbind

Install Helm (the DNS server was switched to 1.1.1.1 for the download):

curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3

chmod 700 get_helm.sh

./get_helm.sh

git clone https://github.com/helm/charts.git

cd charts/

helm install stable/nfs-client-provisioner --set nfs.server=172.16.2.17 --set nfs.path=/nfs/k8s --generate-name

git clone https://github.com/helm/charts.git

cd charts/stable/nfs-client-provisioner

helm install storageclass --set nfs.server=172.16.2.17 --set nfs.path=/nfs/k8s -n storageclass ./

kubectl get storageclasses.storage.k8s.io
